This article was written by Zhou Xiaofan, Wang Huafeng, Xu tong, and Xia Ming from Alibaba Cloud's Middleware Technology Department.

During 2019's Double 11 shopping event, we once again witnessed an astounding technical feat. Alibaba spent an entire year preparing for this Double 11, migrating its core e-commerce business units to Alibaba Cloud. Thanks to this preparation, Alibaba's technical infrastructure withstood a peak of 540,000 transactions per second during the promotion. This success marks the official entrance of our R&D and O&M models into the cloud-native era.

As a team that has been deeply engaged in tracing technology and application performance management (APM) for many years, the engineers of the Alibaba EagleEye team have witnessed several upgrades to Alibaba's infrastructure, each of which has brought new challenges for the observability of the system. So what exactly are the new challenges created by this "cloud-native" upgrade?

The new paradigm advanced by cloud native has had a huge impact on Alibaba's existing R&D and O&M models. Concepts and systems such as microservices and DevOps have increased the overall efficiency of our R&D models. At the same time, however, these new concepts and systems have also led to more difficulties in troubleshooting, particularly in locating faults, given the large number of microservices deployed at Alibaba.

Meanwhile, the growing maturity of containerization and of container orchestration technologies such as Kubernetes makes the delivery of large-scale software much easier. Yet this also creates challenges for accurately evaluating capacity and scheduling resources so as to strike the best balance between cost and system stability. In addition, new technologies explored by Alibaba this year, such as serverless and service mesh, will in the future completely take over the O&M work for the middleware and IaaS layers from users. The extent to which this infrastructure can be automated is yet another major challenge that we need to overcome.

This automation is difficult, but it is also important. Infrastructure automation is a prerequisite for fully unleashing the benefits of cloud native, and observability is the cornerstone of automated decision-making. In this article, we therefore discuss both automation and the concept of observability.

If the execution efficiency, successes, and failures of each interface can be accurately tracked statistically, then the sequence of each user request can be completely traced, and the dependencies between applications, as well as those between applications and their underlying resources, can be sorted out automatically.
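To make this concrete, here is a minimal sketch of how per-interface statistics and application dependencies could be derived from trace spans. The `Span` fields, the sample data, and the function names are assumptions made for illustration, not EagleEye's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None for the entry span of a trace
    app: str
    interface: str
    duration_ms: float
    ok: bool


def interface_stats(spans):
    """Aggregate call count, error count, and mean latency per (app, interface)."""
    acc = defaultdict(lambda: {"calls": 0, "errors": 0, "total_ms": 0.0})
    for s in spans:
        entry = acc[(s.app, s.interface)]
        entry["calls"] += 1
        entry["errors"] += 0 if s.ok else 1
        entry["total_ms"] += s.duration_ms
    return {
        key: {"calls": v["calls"], "errors": v["errors"], "avg_ms": v["total_ms"] / v["calls"]}
        for key, v in acc.items()
    }


def app_dependencies(spans):
    """Derive caller -> callee application edges from parent/child span links."""
    by_id = {(s.trace_id, s.span_id): s for s in spans}
    edges = set()
    for s in spans:
        parent = by_id.get((s.trace_id, s.parent_id))
        if parent is not None and parent.app != s.app:
            edges.add((parent.app, s.app))
    return edges


# Hypothetical spans from a single request.
spans = [
    Span("t1", "0", None, "A", "S1", 30.0, True),
    Span("t1", "0.1", "0", "B", "getType", 10.0, True),
    Span("t1", "0.1.1", "0.1", "C", "getPrice", 5.0, False),
]
print(interface_stats(spans))
print(app_dependencies(spans))
```

The same span records feed both views: the statistics answer "how is each interface doing," and the parent links answer "who depends on whom."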

With such information, we can be led automatically to the root cause of a service exception. We can also answer questions such as whether the underlying resources that affect the business need to be migrated, scaled out, or removed. And we can use the values from the peak periods of Double 11 to automatically calculate whether the resources provisioned for each application are sufficient without being wasteful.
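As a rough illustration of the capacity question, the sketch below compares provisioned instances with what an observed peak would require. The headroom percentage, the "more than twice the requirement" waste threshold, and all numbers are assumptions chosen for the example, not values from Alibaba's capacity planning.

```python
def capacity_report(peak_qps, qps_per_instance, instances, headroom_pct=20):
    """Compare provisioned instances with what the observed peak requires."""
    # Integer ceiling division avoids floating-point rounding surprises.
    needed_qps_x100 = peak_qps * (100 + headroom_pct)
    required = -(-needed_qps_x100 // (100 * qps_per_instance))
    if instances < required:
        verdict = "scale out"
    elif instances > 2 * required:  # assumed threshold for "wasteful"
        verdict = "over-provisioned"
    else:
        verdict = "ok"
    return {"required": required, "provisioned": instances, "verdict": verdict}


# Hypothetical application: 10,000 QPS at peak, 500 QPS per instance.
for provisioned in (20, 30, 60):
    print(capacity_report(10_000, 500, provisioned))
```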

One thing to clear up before we get into the main topic of this article is the difference between observability and monitoring. Often, I come across people who assume that "observability" is simply another word for "monitoring." They'll ask me, don't you mean "monitoring"? The answer is no. In reality, at least in the industry, the two concepts are defined a bit differently.

Observability differs from monitoring in that monitoring focuses more on "problem discovery" and "warning," whereas the ultimate goal of observability is to provide a reasonable explanation for the things that happen in a complex distributed system. From another perspective, monitoring focuses more on the software delivery and post-delivery processes, whereas observability covers the complete lifecycle of the R&D and O&M procedures.

Observability itself is still composed of the familiar tracing, metrics, and logging, which are all very mature technical fields in their own right. However, how are these three integrated with cloud infrastructure? How can they be better associated with one another? And how can they better integrate with online businesses in the cloud era? Our team has been seeking answers to these questions for the last two years.

During this year's Double 11, the engineers of the EagleEye team explored four areas of technical research in the hope of providing powerful support for Alibaba Group's comprehensive cloud migration, for the automation preparations needed for Double 11, and for the global stability of the system.

As Alibaba's e-commerce operations become increasingly complex and diversified, the preparations for big promotions like Double 11 and 6.18 are becoming increasingly refined and scenario-based.

In the past, the owner of each microservice system prepared according to the system itself, as well as according to its upstream and downstream situations. Although this way of dividing the work is efficient, there are inevitably omissions. The root cause is the mismatch between the mid-end application and the actual business scenarios.

Consider a transaction system as an example. A transaction system carries multiple types of businesses, such as Tmall, Hema, and Fliggy, and does so all at the same time. The expected call volume and the downstream dependency path of each business are different. For the owner of this transaction system, it is difficult to sort out the impact of the upstream and downstream logical details of each business on this transaction system itself.

This year, the EagleEye team at Alibaba launched a scenario-based link feature. Combined with the business metadata dictionary, traffic is automatically colored by means of non-invasive automatic tagging. Actual traffic thus represents the business, and the data of the business and of downstream middleware is interconnected, moving from the previous application-centric view to a business scenario-centric view. This brings the view closer to the real model for big promotions.

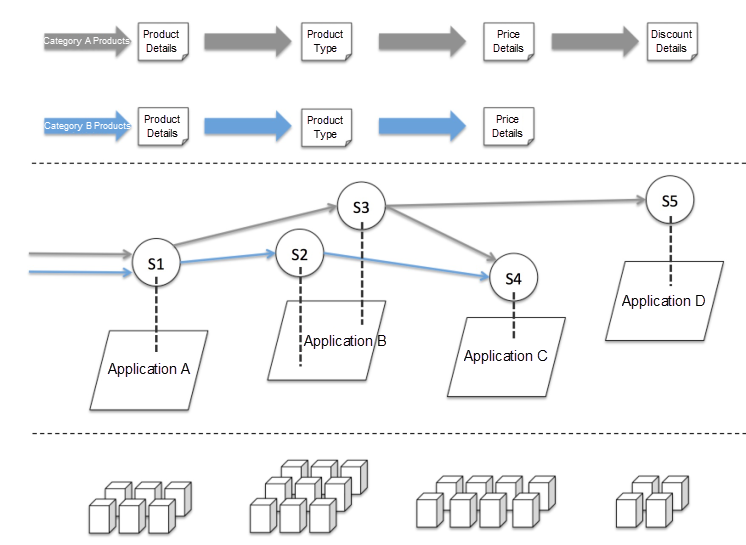

As shown in the graphic above, this is a case of querying commodities. The four systems, A, B, C, and D, provide query capabilities for "product details," "product type," "price details," and "discount details", respectively. Application A provides product query interface S1. Through EagleEye, we can quickly find that the B, C, and D applications are the dependencies of application A and are also the downstream of interface S1. In this case, the link data is sufficient for stable system governance.

However, this view provides no observability of the business, because this dependency structure contains two business scenarios whose links are completely different. The link corresponding to Category A products is A -> B -> C -> D, whereas the link corresponding to Category B products is A -> B -> C. Now assume that the ratio of these two product categories is 1:1 on non-promotional days but 1:9 during major promotions. It is then impossible to obtain a reasonable traffic estimation model by sorting out the links only from the perspective of the system or the business.
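The effect of the ratio shift can be worked through with a few lines of arithmetic. The sketch below uses the two links and the two ratios from the example; the total of 1,000 requests per second at application A is an assumed figure.

```python
# Links per business scenario, taken from the example in the text.
LINKS = {
    "category_a": ["A", "B", "C", "D"],
    "category_b": ["A", "B", "C"],
}


def per_app_load(total_qps, ratio):
    """Split entry traffic by scenario ratio and sum the load each application sees."""
    weight_sum = sum(ratio.values())
    load = {}
    for scenario, weight in ratio.items():
        scenario_qps = total_qps * weight / weight_sum
        for app in LINKS[scenario]:
            load[app] = load.get(app, 0.0) + scenario_qps
    return load


normal = per_app_load(1000, {"category_a": 1, "category_b": 1})
promo = per_app_load(1000, {"category_a": 1, "category_b": 9})
print("normal day:", normal)
print("promotion: ", promo)
```

With the same entry traffic, application D receives half of the requests on a normal day but only a tenth during the promotion. An estimate that scales D in proportion to A would therefore be badly wrong.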

Therefore, if we can color both types of traffic at the system layer, we can easily sort out the links that correspond to the two business scenarios. This more refined perspective is especially important for ensuring business stability, more reasonable dependency sorting, and the configuration of throttling and degradation policies.

This kind of business scenario-based capability played a large role in the preparation for this year's Double 11. Many business systems have sorted out their core business links by using this capability, which makes preparation easier and leaves fewer omissions. At the same time, a series of service governance tools have been comprehensively upgraded with the support of EagleEye, including scenario-based traffic recording and playback, scenario-based fault drill tools, and scenario-based precision tests and regression. In conjunction with these service governance tools, which are better suited to business scenarios, the granularity of observability that supported the entire Double 11 has entered the "high-definition era."

In the cloud-native era, with the introduction of technologies such as microservices, as well as the increasing business scale and increasing number of application instances, core business dependencies have become increasingly complicated. Although development efficiency has increased exponentially, the cost of locating faults is also extremely high. For example, when a business problem occurs, it is very difficult to quickly locate and mitigate the problem. As Alibaba Group's "guardian" of application performance, the EagleEye team is challenged this year by how to help users quickly locate faults.

To locate a fault, we must first answer this question: what is a fault? This requires the O&M personnel to have a deep understanding of the business. Many O&M engineers prefer an overkill approach, enabling every observability indicator and every kind of alert for a "sense of security." With this approach, the occurrence of a fault triggers abnormal indicators everywhere and a growing flood of alert messages on their screens. This type of "observability" seems to be powerful, but the actual result is counterproductive.

The team carefully reviewed the incidents within the group over the years. Setting aside faults in business logic, the faults of core applications within the group usually fall into four categories: resource, traffic, latency, and error.

With these fault categories as the focus, what we need to do next is to "follow the trail of clues." Unfortunately, as the complexity of business operations grows, this "trail" also grows longer. Take a sudden increase in latency as an example. There are many possible root causes behind this sort of situation. It may be a sharp increase in request volume due to an upstream business promotion, or frequent garbage collection in the application itself, which leads to an overall application slowdown. It may also be a slow response caused by the heavy load of a downstream database. There are many other possible reasons as well. EagleEye used to provide only the indicator information. At that time, the O&M personnel would look at the data of a single trace and had to move the cursor several times before they could see the complete tracing data. Needless to say, it is difficult to stay efficient when troubleshooting requires constant switching among multiple systems.

But, at the end of the day, the essence of locating faults is elimination. You try, and you try again. It's about ruling out what the cause is not in order to narrow down what it is. When you think hard about it, troubleshooting faults is simply a matter of listing possibilities and running the same checking process over each of them. Well, don't you think that's what computers do best? Of course it is. This is why intelligent fault location was developed.
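The "list the possibilities and check each one" loop can be sketched in a few lines. The candidate causes below come from the latency example earlier in this article; the metric names and thresholds are assumptions made for illustration and do not describe EagleEye's actual rules.

```python
def diagnose_latency(metrics):
    """Check each candidate cause in turn and keep the ones the data cannot rule out."""
    checks = [
        ("upstream traffic surge", lambda m: m["qps"] > 2 * m["baseline_qps"]),
        ("frequent garbage collection", lambda m: m["gc_pause_ratio"] > 0.10),
        ("slow downstream database", lambda m: m["db_p99_ms"] > 3 * m["baseline_db_p99_ms"]),
    ]
    return [name for name, is_suspect in checks if is_suspect(metrics)]


# Hypothetical snapshot taken while latency is elevated.
snapshot = {
    "qps": 1200, "baseline_qps": 1000,
    "gc_pause_ratio": 0.02,
    "db_p99_ms": 450, "baseline_db_p99_ms": 50,
}
print(diagnose_latency(snapshot))
```

Adding a new hypothesis means adding one entry to `checks`, which is what makes the process cheap for a machine to repeat and tedious for a person.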

When it comes to the topic of going smart, many people's first impression is that some sort of algorithm must be involved, and algorithms can be intimidating. In reality, people who know machine learning understand that data quality is paramount, models are of secondary importance, and algorithms are the least important. The reliability and integrity of data collection and the modeling of the domain are vital. Intelligence can be implemented only when the underlying data is accurate.

The evolution of intelligent fault location followed this line of thought. Before anything else, we must ensure the quality of data. Thanks to the EagleEye team's many years of work in big data processing, high data reliability has already been guaranteed for the most part. Without such reliability, when a fault occurs you first have to check whether the fault is caused by your own metrics. The next step is a data completeness check and diagnostic modeling, which are the basis for intelligent diagnostics and determine the granularity of fault location. These two parts also complement each other. By creating a diagnostic model, you can find omissions and fill in the gaps in the observability metrics. And, by completing the metrics, you can increase the depth of the diagnostic model.

Both parts can be continuously improved through the combination of three aspects. The first aspect is historical failure derivation, which is equivalent to an exam for which you already have the answer sheet. The initial diagnostic model is built based on some historical faults and manual experience, and the remaining historical faults are then used to test it through repeated trial and error. However, models in this step are prone to overfitting. The second aspect is the use of chaos engineering to simulate common exceptions and constantly polish the model. The third aspect is the use of online manual tagging to continue completing observability indicators and polishing diagnostic models.

After these three stages, the foundation of intelligent fault location is more or less set. The next step is to solve the efficiency problem. The model iterated from the preceding steps is far from the most efficient one. We have to remember that human experience and thinking are linear, whereas computers are not. The team has therefore made two improvements to the existing model: edge diagnosis and intelligent pruning. With edge diagnosis, part of the fault location process is delegated to each proxy node, and for situations that may affect the system, key information at the scene of the incident is automatically saved and reported. With intelligent pruning, the diagnostic system automatically adjusts the path based on the event weight.
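One way to picture weight-driven path adjustment is a best-first walk over a diagnostic tree, where heavier branches are explored first and light branches are skipped. This is only a sketch of the general idea under assumed data: the tree, the weights, the pruning threshold, and the `CONFIRMED` set are all hypothetical, and the article does not describe the actual algorithm.

```python
import heapq

# Hypothetical diagnostic tree: node -> list of (child, event_weight).
TREE = {
    "latency spike": [("traffic", 0.2), ("application", 0.3), ("downstream", 0.9)],
    "downstream": [("database", 0.8), ("cache", 0.1)],
    "application": [("gc", 0.2)],
}
CONFIRMED = {"database"}  # nodes whose check would confirm a root cause


def locate(root, min_weight=0.15):
    """Best-first walk: expand heavier branches first, prune branches under min_weight."""
    heap = [(-1.0, root)]  # negated path score, so the heaviest path pops first
    visited = []
    while heap:
        neg_score, node = heapq.heappop(heap)
        visited.append(node)
        if node in CONFIRMED:
            return node, visited
        for child, weight in TREE.get(node, []):
            if weight >= min_weight:
                # Path score is the product of the weights along the path.
                heapq.heappush(heap, (neg_score * weight, child))
    return None, visited


print(locate("latency spike"))
```

In this example the walk reaches the database after visiting three nodes, without ever checking the traffic, application, or cache branches.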

Since its launch, the intelligent root cause location feature has helped locate the root cause of faults for thousands of applications and has achieved high customer satisfaction. Based on the conclusions from root cause location, infrastructure automation has been greatly improved with observability as the cornerstone. During the preparation for this year's Double 11, the rapid fault location function provided more automated measures for the owners of application stability. We also believe that, in the cloud-native era, a dynamic balance among the operation quality, cost, and efficiency of enterprise applications is no longer out of reach.

What is "last-mile" fault location? What is the last mile in the field of distributed problem diagnostics, and what features does it have?

After the preceding analysis, you may now have an idea of what the last mile is about. Next, we will describe in detail how to locate problems in the last mile.

First, we need a method to accurately reach the starting point of the last mile. That is, we need to reach the application, service, or machine node where the root cause of a problem is located. This prevents wasted root cause analysis. So, how do we accurately narrow down the root cause range in a complicated series of links? Here, we need the tracing capabilities commonly used in the APM field. Link tracing can accurately identify and analyze abnormal applications, services, or machines, pointing last-mile fault location in the right direction.

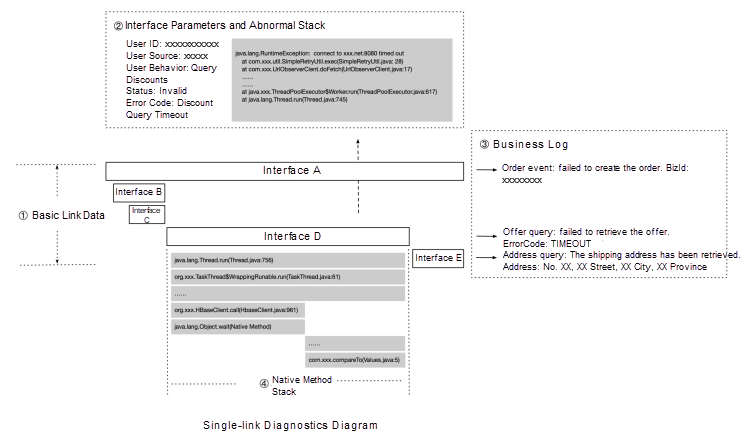

Then, we associate more details with the link data, such as the local method stack, business logs, machine status, and SQL parameters, to perform last-mile problem location, as shown in the following graphic:

Core interface tracking refers to tracking and recording basic link information, which includes the TraceId and RpcId (SpanId), time, status, IP address, and interface name. This information reflects the most basic link form.

Automatic data association refers to automatically recording the associated information, which includes SQL statements, input and output request parameters, and exception stacks, during the call lifecycle. This type of information does not affect the link form but is a necessary condition for precise fault location in some scenarios.

Active data association refers to actively associating the data that needs to be manually recorded during the call lifecycle. The data is generally business data, such as business logs and business identifiers. Business data is personalized and cannot be configured in a unified manner. However, active association with link data can greatly improve the efficiency of business problem diagnostics.
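The three kinds of data described above could be modeled as one record with three layers. The following sketch is an assumed structure for illustration only. The field names mirror the terms in the text, while `LinkRecord` and `attach_business_log` are hypothetical and not part of any EagleEye API.

```python
from dataclasses import dataclass, field


@dataclass
class LinkRecord:
    # Core interface tracking: the basic link form.
    trace_id: str
    rpc_id: str
    timestamp_ms: int
    status: str
    ip: str
    interface: str
    # Automatic data association: captured by the agent during the call.
    auto: dict = field(default_factory=dict)
    # Active data association: attached explicitly by business code.
    business: list = field(default_factory=list)


def attach_business_log(record, message, **identifiers):
    """Actively associate a business log line and its identifiers with the link."""
    record.business.append({"message": message, **identifiers})
    return record


record = LinkRecord("t-1001", "0.1", 1573401600000, "FAILED", "10.0.0.8", "OrderService.create")
record.auto["sql"] = "SELECT stock FROM item WHERE id = ?"
record.auto["exception"] = "TimeoutException"
attach_business_log(record, "order creation failed", order_id="o-77", user_id="u42")
print(record)
```

Keeping the layers separate reflects the point made in the text: the core fields define the shape of the link, while the associated data only enriches it.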

Native method stacks are useful because link tracing cannot be added to all methods due to performance and cost restrictions. In this case, we can use methods such as method sampling or online instrumentation to implement precise native slow-method troubleshooting.
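Method sampling can be illustrated with Python's own runtime, which exposes the current stack of every thread through `sys._current_frames()`. The sketch below takes a single snapshot and counts every function found on the sampled stacks; a real sampler would repeat this at a fixed interval and report the functions that appear most often. The `demo` function and its `slow_method` are hypothetical stand-ins for a slow business method.

```python
import sys
import threading
import traceback
from collections import Counter


def sample_stacks(counter, skip_thread_id=None):
    """Take one snapshot of every thread's stack and count each function on it."""
    for thread_id, frame in sys._current_frames().items():
        if thread_id == skip_thread_id:
            continue  # do not sample the sampler itself
        for entry in traceback.extract_stack(frame):
            counter[entry.name] += 1
    return counter


def demo():
    """Sample a worker thread that is parked inside a hypothetical slow_method."""
    started, release = threading.Event(), threading.Event()

    def slow_method():
        started.set()
        release.wait(5)  # stands in for slow work such as blocking I/O

    worker = threading.Thread(target=slow_method)
    worker.start()
    started.wait(5)
    counts = sample_stacks(Counter(), skip_thread_id=threading.get_ident())
    release.set()
    worker.join()
    return counts


if __name__ == "__main__":
    print(demo().most_common(5))
```

Sampling costs nothing on the hot path of the sampled code, which is why it can cover methods that would be too expensive to instrument one by one.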

Through last-mile fault location, we can thoroughly investigate system risks and quickly locate the root cause in preparation for daily operation and major promotions. Here are two practical use cases:

An application may experience an occasional RPC timeout when the overall traffic peaks. By analyzing the automatically recorded snapshots of native method stacks, we can find that the time is actually all spent on log output statements. The cause is that Logback versions earlier than 1.2.x are prone to lock contention in high-concurrency synchronous call scenarios. This issue can be completely solved by upgrading Logback or by switching Logback to asynchronous log output.
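The fix in this case is specific to Logback, but the principle of asynchronous log output is general: the calling thread only enqueues the record, and a background thread performs the slow write. As an analogous illustration rather than the Logback configuration itself, the sketch below uses `QueueHandler` and `QueueListener` from Python's standard library. `ListHandler` and `build_async_logger` are hypothetical helper names.

```python
import logging
import logging.handlers
import queue


class ListHandler(logging.Handler):
    """Stand-in for a slow appender; collects messages so the result can be inspected."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


def build_async_logger(name, target_handler):
    """Route records through a queue so callers never block on the target handler."""
    log_queue = queue.Queue(-1)  # unbounded queue
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    listener = logging.handlers.QueueListener(log_queue, target_handler)
    listener.start()  # background thread drains the queue
    return logger, listener


def demo():
    handler = ListHandler()
    logger, listener = build_async_logger("demo.async.order", handler)
    logger.info("order %s created", 42)
    listener.stop()  # flushes pending records before returning
    return handler.messages


if __name__ == "__main__":
    print(demo())
```

The trade-off is the usual one for asynchronous output: request threads stop contending on the appender, at the cost of records still in the queue being lost if the process dies abruptly.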

When a user reports an order exception, the business personnel first use the UserId of this user to retrieve the business log for the corresponding order entry. Then, based on the TraceId associated with the log, all the business processes, statuses, and events that the order depends on downstream are arranged in the actual call order, so that the cause of the order exception can be quickly located. The UserId cannot be automatically and transparently passed to all downstream links, but the TraceId can.
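The lookup described here is a two-step join: from the user identifier to the TraceId through the business log, then from the TraceId to every downstream event. A minimal sketch follows, assuming in-memory lists in place of real log and trace stores and assuming dotted RpcIds such as `0.1` whose numeric order approximates the call order. All records and names are hypothetical.

```python
# Hypothetical business logs, actively associated with a trace ID at the order entry.
BUSINESS_LOGS = [
    {"user_id": "u42", "trace_id": "t-1001", "message": "order entry"},
]

# Hypothetical trace events reported by downstream applications, in arrival order.
TRACE_EVENTS = [
    {"trace_id": "t-1001", "rpc_id": "0.2", "app": "inventory", "status": "FAILED"},
    {"trace_id": "t-1001", "rpc_id": "0", "app": "order", "status": "OK"},
    {"trace_id": "t-1001", "rpc_id": "0.1", "app": "payment", "status": "OK"},
    {"trace_id": "t-2002", "rpc_id": "0", "app": "order", "status": "OK"},
]


def rpc_order(rpc_id):
    """Turn a dotted RPC ID into a tuple so that '0.10' sorts after '0.2'."""
    return tuple(int(part) for part in rpc_id.split("."))


def events_for_user(user_id):
    """Find the user's traces via business logs, then list their events in call order."""
    trace_ids = {log["trace_id"] for log in BUSINESS_LOGS if log["user_id"] == user_id}
    events = [e for e in TRACE_EVENTS if e["trace_id"] in trace_ids]
    return sorted(events, key=lambda e: (e["trace_id"], rpc_order(e["rpc_id"])))


for event in events_for_user("u42"):
    print(event["rpc_id"], event["app"], event["status"])
```

Only the entry application needs to know the user identifier. Every downstream event is found through the trace ID alone.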

Monitoring and alerts can only reflect the symptoms of a problem. The real root cause still needs to be found in the source code. This year, EagleEye made a major breakthrough in the "refined sampling" of diagnostic data. This greatly improves the precision and value of the data required for last-mile location, without increasing control costs. During the long preparation for Double 11, sources of system risks were filtered out one after another, ensuring that the day of the promotion went smoothly.

Over the past year, the EagleEye team has integrated the industry's mainstream observability frameworks by embracing open-source technologies. Tracing Analysis, a tracing service, has been released on Alibaba Cloud. It is compatible with mainstream open-source tracing frameworks such as Jaeger (OpenTracing), Zipkin, and SkyWalking. For applications that already use these frameworks, you do not need to modify any code. You only need to change the data reporting address in the configuration file. This gives you much greater tracing capabilities than open-source tracing products, with much lower construction costs.

The EagleEye team also released a fully managed Prometheus service, which solves problems of the open-source version such as poor write performance when there are a large number of monitoring nodes and the excessive resources occupied by its deployment. Slow queries are also optimized for wide-range and multi-dimensional queries. The optimized managed Prometheus cluster fully supports the monitoring of service mesh and of several important Alibaba Cloud customers. We have also contributed many optimizations back to the community. The managed Prometheus version is likewise compatible with the open-source version, which can be migrated to the managed version with one click in Alibaba Cloud Container Service.

Observability and stability are inseparable. This year, EagleEye engineers compiled a series of articles and tools from recent years related to observability and stability construction, and published them on GitHub. We also welcome you to join us in this effort.
