By Gu Rong, Che Yang, Fan Bin

In recent years, more and more AI and big data applications have been deployed and run on cloud-native orchestration frameworks such as Kubernetes. This is because containers bring efficient deployment and agile iteration, and amplify the natural advantages of cloud computing in resource costs and elastic scaling. However, before Fluid appeared, the Cloud Native Computing Foundation (CNCF) landscape lacked a native component to help these data-intensive applications access data efficiently, securely, and conveniently in cloud-native scenarios.

Running big data and AI applications efficiently in cloud-native scenarios is a challenging problem with both theoretical significance and practical value. On the one hand, solving it requires addressing a series of theoretical and technical challenges in complex scenarios, such as collaborative orchestration of applications, scheduling optimization, and data caching. On the other hand, a solution can effectively promote the adoption of big data and AI applications across a wide range of cloud-service scenarios.

A systematic solution requires close cooperation between academia and industry. That is why Dr. Gu Rong, an associate researcher at the Parallel Algorithms, Systems and Applications for Big Data Lab (PASALab) at Nanjing University, Che Yang, a senior technical expert at Alibaba Cloud Container Service, and Fan Bin, a founding member of the Alluxio project, jointly promoted the launch of the open source project Fluid.

Fluid is an open-source cloud-native infrastructure project. Driven by the separation of computing and storage, Fluid aims to provide an efficient and convenient data abstraction for AI and cloud-native applications by abstracting data from storage.

The data layer abstraction that Fluid provides on Kubernetes enables data to be flexibly and efficiently moved, replicated, evicted, converted, and managed between storage sources such as Hadoop Distributed File System (HDFS), Object Storage Service (OSS), and Ceph, and the computing of cloud-native applications. The specific data operations are transparent to users, so users no longer have to worry about the efficiency of accessing remote data, the convenience of managing data sources, or how to help Kubernetes make O&M and scheduling decisions. Users only need to access the abstracted data directly through the most natural Kubernetes mechanism, data volumes. The remaining tasks and underlying details are all handed over to Fluid.
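To make the "access through data volumes" idea concrete, here is a minimal Python sketch that builds an ordinary Kubernetes Pod manifest mounting a PersistentVolumeClaim. Only the core Kubernetes Pod fields are standard; the helper name `pod_with_dataset_volume`, the image, the mount path, and the assumption that the data set is exposed through a claim called `imagenet` are all hypothetical and chosen for illustration.

```python
import json


def pod_with_dataset_volume(pod_name: str, image: str, claim_name: str,
                            mount_path: str = "/data") -> dict:
    """Build a Pod manifest that mounts a PersistentVolumeClaim as a data volume.

    The application only sees a directory at `mount_path`; where the bytes
    actually live (HDFS, OSS, Ceph, a cache) is hidden behind the claim.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "dataset", "mountPath": mount_path}],
            }],
            "volumes": [{
                "name": "dataset",
                # Assumption: the abstracted data set is reachable through a
                # claim with this (hypothetical) name.
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


manifest = pod_with_dataset_volume("trainer", "example.com/trainer:latest", "imagenet")
print(json.dumps(manifest, indent=2))
```

The point of the sketch is that nothing in the Pod specification refers to the remote storage system: the application reads files under `/data` as it would from any other volume.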

Currently, the Fluid project focuses on two important scenarios: data set orchestration and application orchestration. Data set orchestration caches the data of a specified data set on specific Kubernetes nodes. Application orchestration schedules an application to a node that can store, or has already stored, the specified data set. The two can also be combined into a collaborative orchestration scenario, in which node resources are scheduled based on both data set and application requirements.
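The two scenarios can be illustrated with a toy model. The sketch below is not Fluid's scheduler; it is a simplified illustration in which the `Node` class, the "most free cache first" placement rule, and all node names and sizes are assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cache_gib: float
    cached_datasets: set = field(default_factory=set)


def cache_dataset(nodes, dataset, size_gib, replicas):
    """Data set orchestration: cache `dataset` on up to `replicas` nodes.

    Assumed policy: pick the nodes with the most free cache capacity.
    """
    candidates = sorted(
        (n for n in nodes
         if n.free_cache_gib >= size_gib and dataset not in n.cached_datasets),
        key=lambda n: n.free_cache_gib,
        reverse=True,
    )
    chosen = candidates[:replicas]
    for node in chosen:
        node.free_cache_gib -= size_gib
        node.cached_datasets.add(dataset)
    return [node.name for node in chosen]


def schedule_app(nodes, dataset, size_gib):
    """Application orchestration: prefer a node that already stores the
    data set, then fall back to a node that can store it."""
    for node in nodes:
        if dataset in node.cached_datasets:
            return node.name
    for node in nodes:
        if node.free_cache_gib >= size_gib:
            return node.name
    return None


nodes = [Node("node-a", 50), Node("node-b", 200), Node("node-c", 120)]
placed = cache_dataset(nodes, "imagenet", size_gib=100, replicas=2)
warm = schedule_app(nodes, "imagenet", size_gib=100)
cold = schedule_app(nodes, "cifar10", size_gib=10)
print(placed, warm, cold)
```

Running the two functions together corresponds to the collaborative scenario: the cache placement decides where data lives, and the application placement then follows the data.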

The cloud-native environment and big data processing frameworks differ fundamentally in their design concepts and mechanisms. The Hadoop big data ecosystem, which was deeply influenced by Google's three papers on GFS, MapReduce, and BigTable, has followed the principle of "move computing instead of data" from the very beginning. As a result, data-intensive computing frameworks such as Spark, Hive, and MapReduce, and the applications built on them, are designed around data locality to reduce data transmission. Over time, however, the architecture that separates computing from storage has become widely used in the emerging cloud-native environment, because it makes resource scaling more flexible and lowers usage costs. A component like Fluid is therefore needed in the cloud-native environment to make up for the data locality that big data frameworks lose when they embrace cloud-native technology.

In addition, applications in the cloud-native environment are usually deployed as stateless microservices and are not centered on data processing. Data-intensive frameworks and applications, by contrast, are usually centered on a data abstraction, around which they run computing jobs and assign and execute tasks. Once data-intensive frameworks are integrated into the cloud-native environment, a scheduling framework centered on data abstraction, such as Fluid, is also needed.

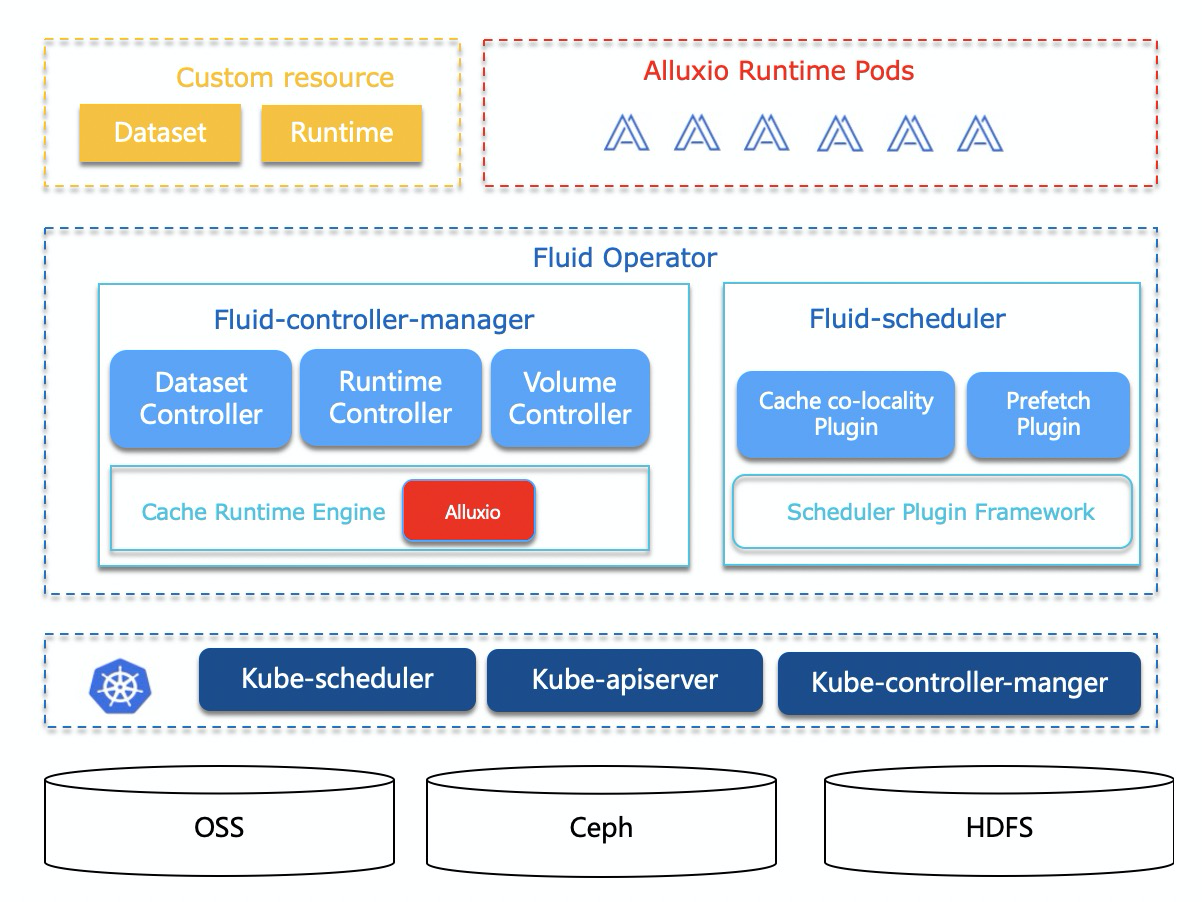

Kubernetes lacks intelligent awareness of, and scheduling optimization for, application data, while data orchestration engines such as Alluxio have difficulty directly controlling the cloud-native infrastructure layer. To address both limitations, Fluid proposes a series of innovative methods, such as collaborative orchestration of data and applications, intelligent awareness, and joint optimization. Together they form an efficient support platform for data-intensive applications in cloud-native scenarios.

The following figure shows the specific architecture:

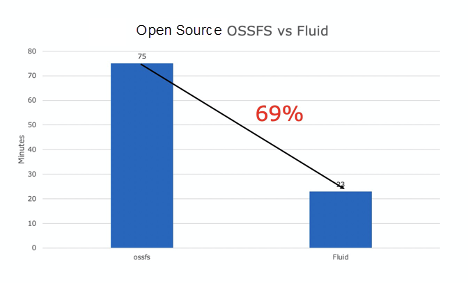

We have provided a demo video that shows how to use Fluid to speed up AI model training on the cloud. In this demo, running the same ResNet50 test code, Fluid acceleration shows a clear advantage over direct access through native ossfs in both per-second training speed and total training duration, shortening the training time by 69%.

The Demo video
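As a quick piece of arithmetic, the 69% reduction in training time quoted for the demo can be converted into a speedup factor. The helper below is only an illustrative calculation; the function name is made up for this example, and the only input taken from the article is the 69% figure.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Convert a fractional reduction in run time into a speedup factor.

    If the new run takes (1 - reduction) of the original time, the
    speedup is original_time / new_time = 1 / (1 - reduction).
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


speedup = speedup_from_time_reduction(0.69)
print(f"{speedup:.2f}x")  # prints "3.23x"
```

In other words, a training time shortened by 69% means the accelerated run takes 31% of the original duration, which is roughly a 3.2x end-to-end speedup.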

Fluid must run on Kubernetes v1.14 or later with Container Storage Interface (CSI) support. You can deploy and manage the Fluid Operator on Kubernetes by using Helm v3. Before you run Fluid, make sure that Helm is correctly installed in the Kubernetes cluster. To install and use Fluid, refer to the Getting Started Guide.
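The version requirements above can be checked mechanically before installation. The following Python sketch compares version strings against the stated minimums (Kubernetes v1.14, Helm v3). The function names are hypothetical, the version strings are assumed to have been obtained separately (for example, from the output of `kubectl version` and `helm version`), and CSI support cannot be inferred from a version number, so it still has to be verified on the cluster.

```python
import re


def parse_version(text: str) -> tuple:
    """Extract (major, minor) from strings such as 'v1.14.8' or 'v3.2.4+g0ad800e'."""
    match = re.search(r"v?(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"cannot parse a version from {text!r}")
    return int(match.group(1)), int(match.group(2))


def meets_fluid_prerequisites(kubernetes_version: str, helm_version: str) -> bool:
    """Check the minimums stated in the article: Kubernetes >= 1.14, Helm >= 3."""
    return (parse_version(kubernetes_version) >= (1, 14)
            and parse_version(helm_version) >= (3, 0))


print(meets_fluid_prerequisites("v1.18.3", "v3.2.4"))
print(meets_fluid_prerequisites("v1.13.12", "v3.2.4"))
```

Comparing `(major, minor)` tuples avoids the classic string-comparison pitfall in which `"1.9"` would sort after `"1.14"`.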

Fluid gives Kubernetes the basic capabilities of distributed data caching. Open source is only a starting point, and everyone is welcome to participate. If you find a bug or need a feature while using Fluid, you can submit an issue or a pull request (PR) on GitHub and join the discussion.

Gu Rong is an associate researcher in the Department of Computer Science and Technology at Nanjing University. His research focuses on big data processing systems, and he has published more than 20 papers in leading journals and conferences, such as IEEE Transactions on Parallel and Distributed Systems (TPDS), IEEE International Conference on Data Engineering (ICDE), Parallel Computing, Journal of Parallel and Distributed Computing (JPDC), International Parallel and Distributed Processing Symposium (IPDPS), and International Conference on Parallel Processing (ICPP). His achievements have been applied at Sinopec, Baidu, Bytedance, and other companies, as well as in Apache Spark. He won the first prize of Jiangsu Province Science and Technology in 2018 and the Youth Science and Technology Award of Jiangsu Computer Society in 2019. He was elected a member of the Technical Committee of Systems Software and the Task Force of Big Data, China Computer Federation, and secretary general of the Big Data Committee of Jiangsu Computer Society.

Che Yang, a senior technical expert at Alibaba Cloud, specializes in the development of Kubernetes and container-related products. He focuses on how to use cloud-native technology to build machine learning platforms and systems and is the primary creator and maintainer of the GPU share scheduling.

Fan Bin is a member of the Project Management Committee (PMC) of the open source project Alluxio and a maintainer of its source code. Before joining Alluxio, Fan Bin worked at Google, where he was engaged in the research and development of the next-generation large-scale distributed storage system. He received his doctorate from the Department of Computer Science at Carnegie Mellon University in 2013. During his doctorate, he was engaged in the design and implementation of distributed systems and was the creator of Cuckoo Filter.
