Watch the replay of the Apsara Conference 2024 at this link!

By Yu Zhuang and Bingchang Tang

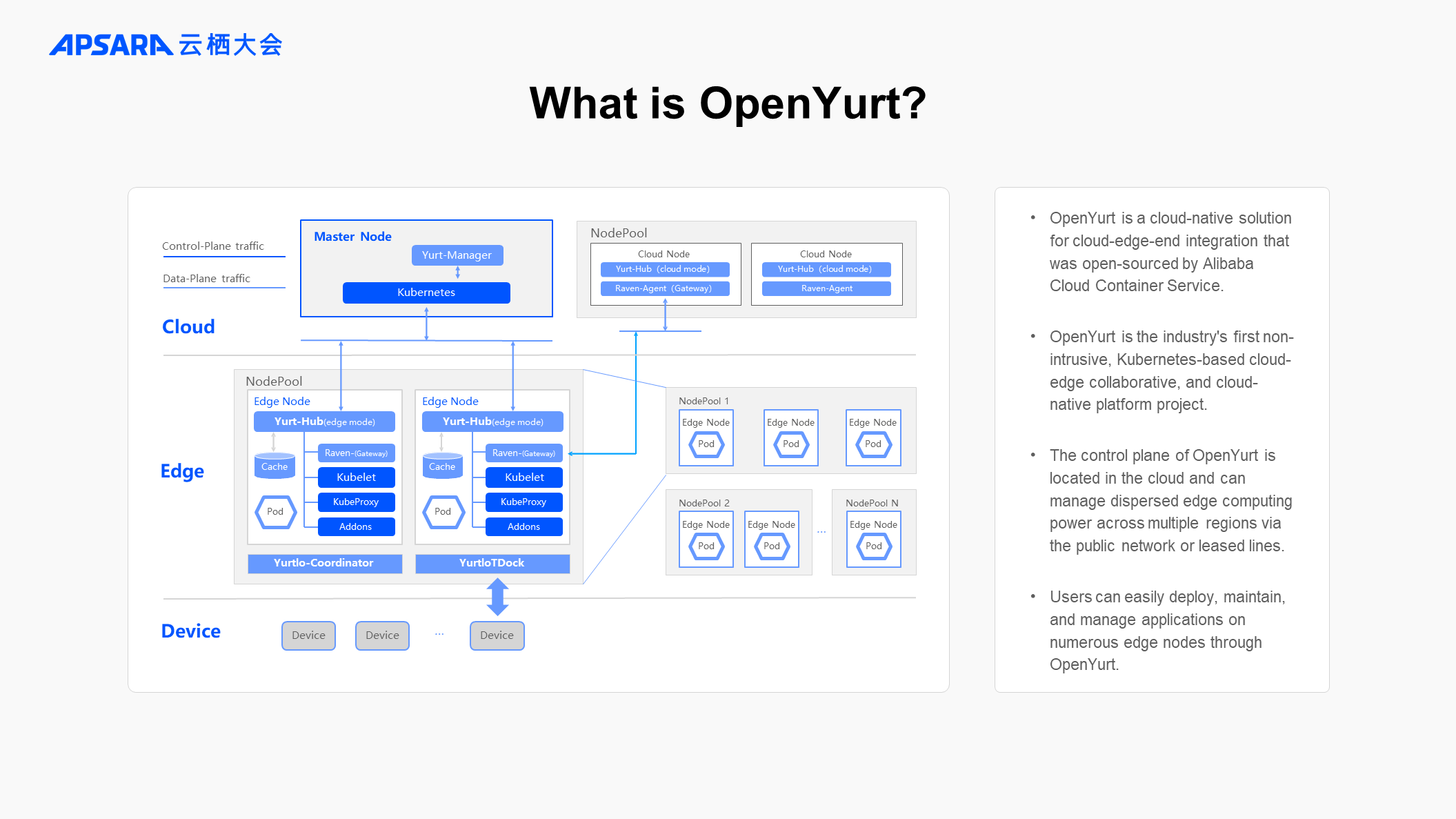

OpenYurt is a cloud-native solution for cloud-edge-end integration, open-sourced by the Alibaba Cloud Container Service team in May 2020. It became a CNCF sandbox project in September of the same year. As the industry's first non-intrusive edge computing platform based on cloud-native technology, OpenYurt supports unified management of dispersed, heterogeneous resources from the cloud, enabling users to easily deploy, operate, and manage large-scale applications across these resources.

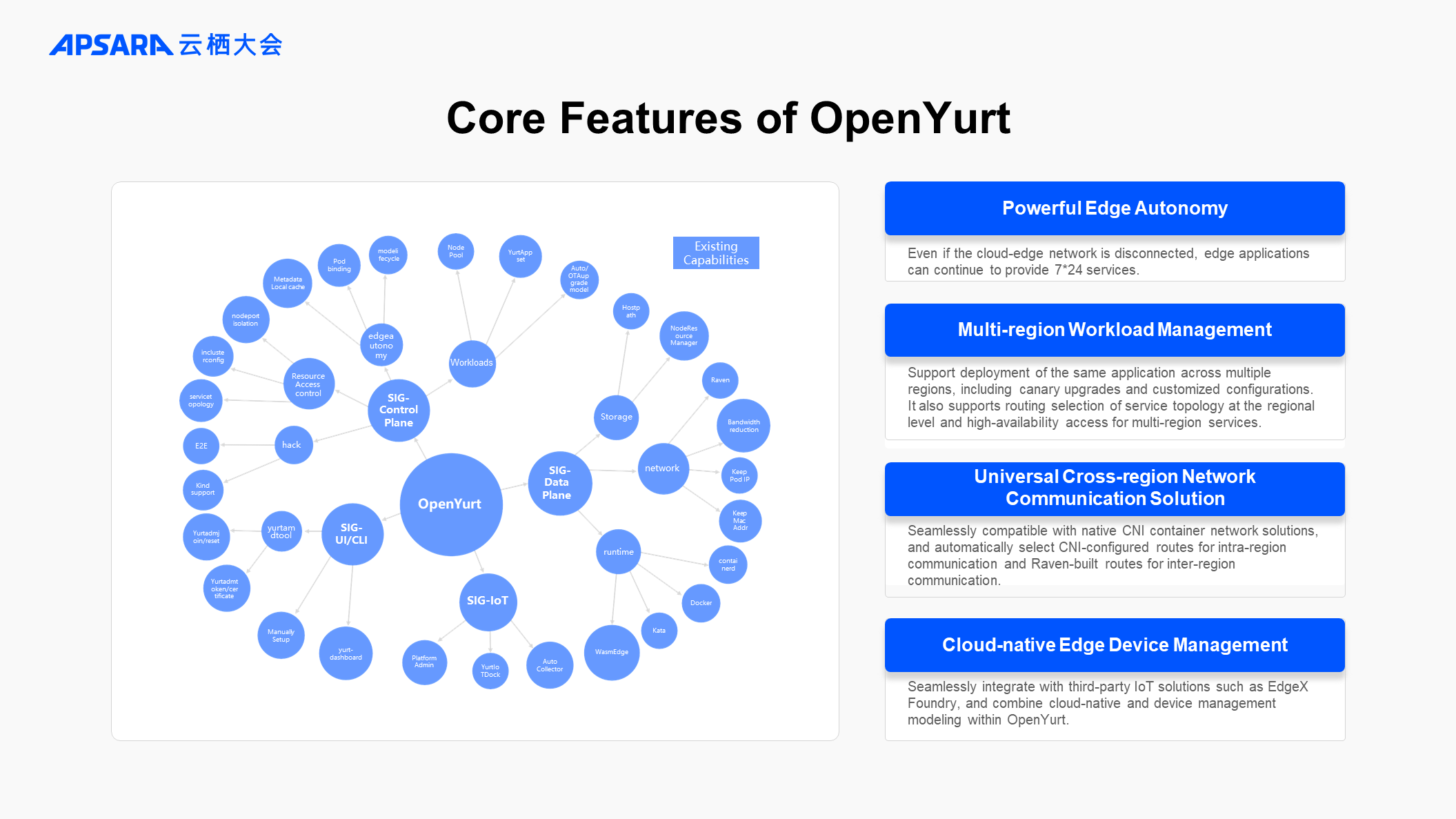

Based on the Kubernetes ecosystem, OpenYurt implements a cloud-edge integrated architecture in a non-intrusive manner. For edge computing scenarios, OpenYurt focuses on providing core capabilities such as edge autonomy, multi-region workload management, cross-region network communication, and cloud-native edge device management.

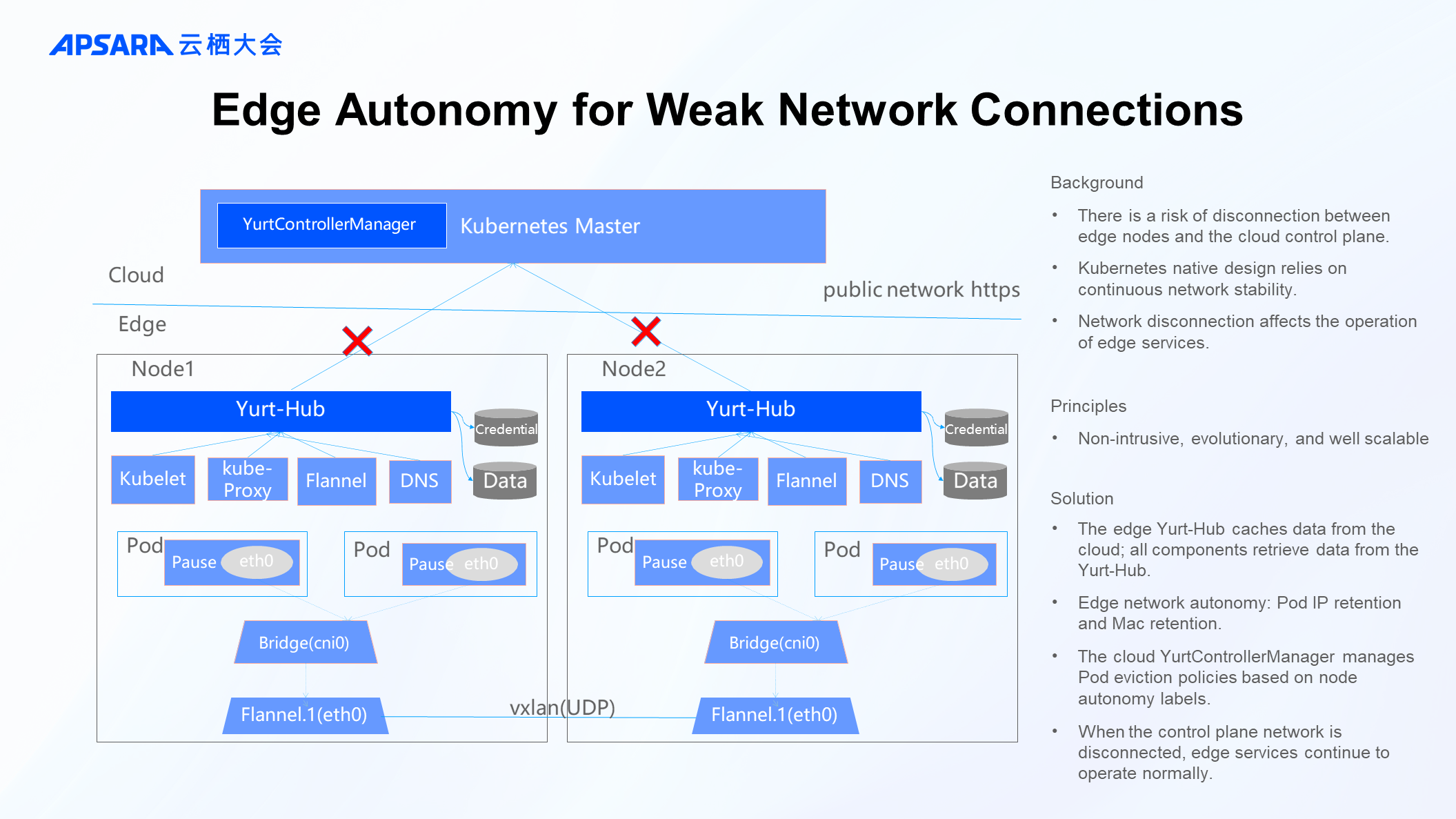

In cloud-edge management scenarios, edge computing resources typically connect to the cloud control plane over the public network. Because the public network is unstable and edge network environments are complex, the connection between edge resources and the cloud can be interrupted. In native Kubernetes, if a node loses its connection, the Pods running on it may be evicted, which threatens service stability. To address this, OpenYurt provides autonomy during network disconnection through mechanisms on edge nodes such as proxy caching, Pod IP retention, and MAC address retention, keeping edge services stable while the network is down.
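To make the proxy-caching idea concrete, here is a minimal Python sketch under simplifying assumptions; it is not OpenYurt's actual implementation, and `CachingEdgeProxy`, `CloudUnreachable`, and the `fetch_from_cloud` callback are hypothetical names. The proxy stores every successful response from the cloud and, when the cloud is unreachable, serves the last cached copy so that local components keep working.

```python
from typing import Callable, Dict


class CloudUnreachable(Exception):
    """Raised by the upstream fetcher when the cloud-edge link is down."""


class CachingEdgeProxy:
    """Hypothetical edge-side proxy that caches cloud responses for autonomy."""

    def __init__(self, fetch_from_cloud: Callable[[str], dict]) -> None:
        self._fetch = fetch_from_cloud
        self._cache: Dict[str, dict] = {}  # request path -> last good response

    def get(self, path: str) -> dict:
        try:
            resp = self._fetch(path)
        except CloudUnreachable:
            # Link is down: fall back to the last cached response, if any,
            # so local components (kubelet, kube-proxy, ...) keep working.
            if path in self._cache:
                return self._cache[path]
            raise
        self._cache[path] = resp  # refresh the cache on every success
        return resp


if __name__ == "__main__":
    link = {"up": True}  # toggled to simulate a cloud-edge disconnection

    def fetch(path: str) -> dict:
        if not link["up"]:
            raise CloudUnreachable(path)
        return {"path": path, "items": ["pod-a"]}

    proxy = CachingEdgeProxy(fetch)
    proxy.get("/api/v1/pods")         # online: response is cached
    link["up"] = False
    print(proxy.get("/api/v1/pods"))  # offline: served from the cache
```

A request that was never cached still fails while offline, which is why a real edge proxy would need to have cached the resources its local components depend on before the link goes down.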

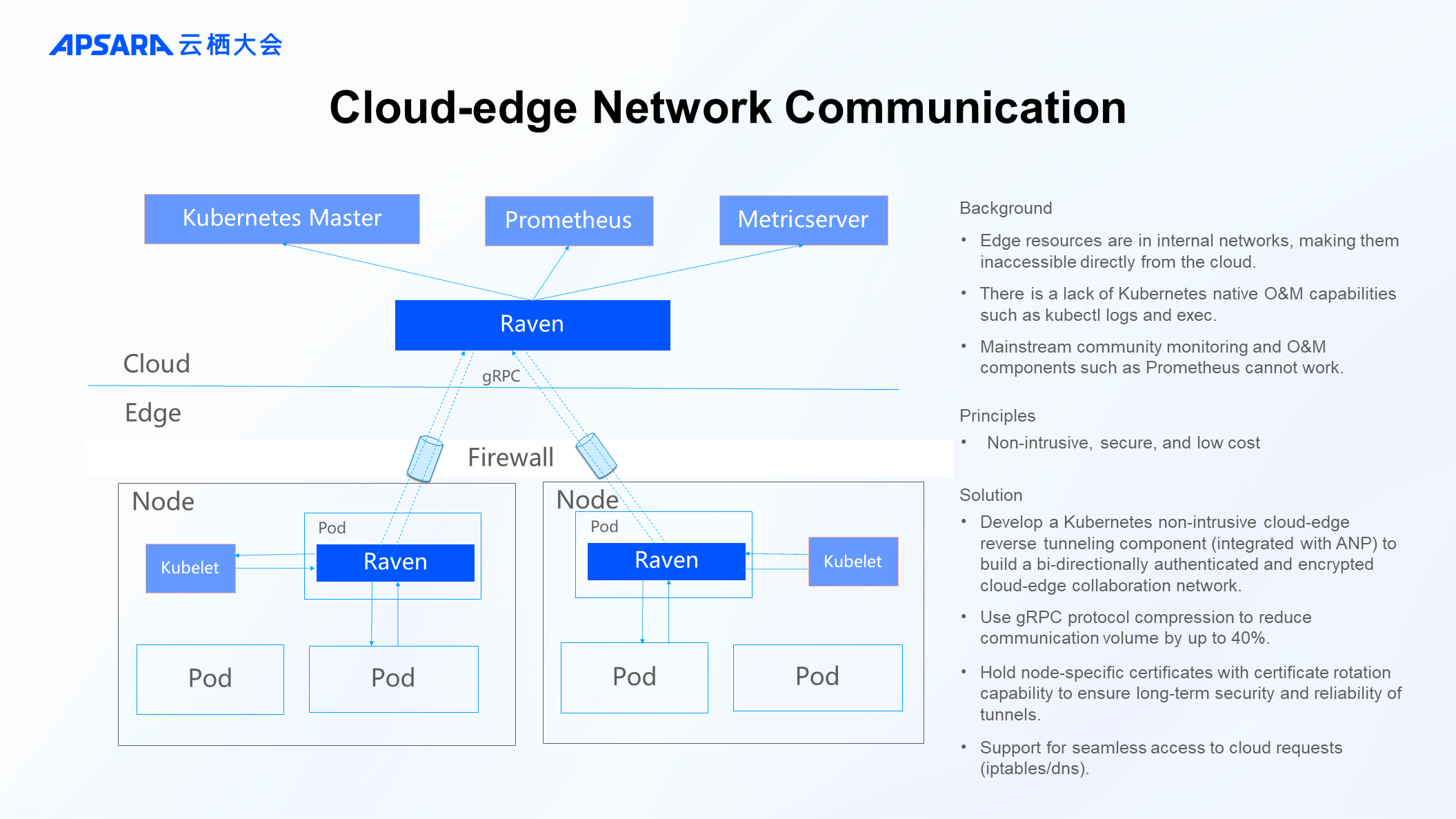

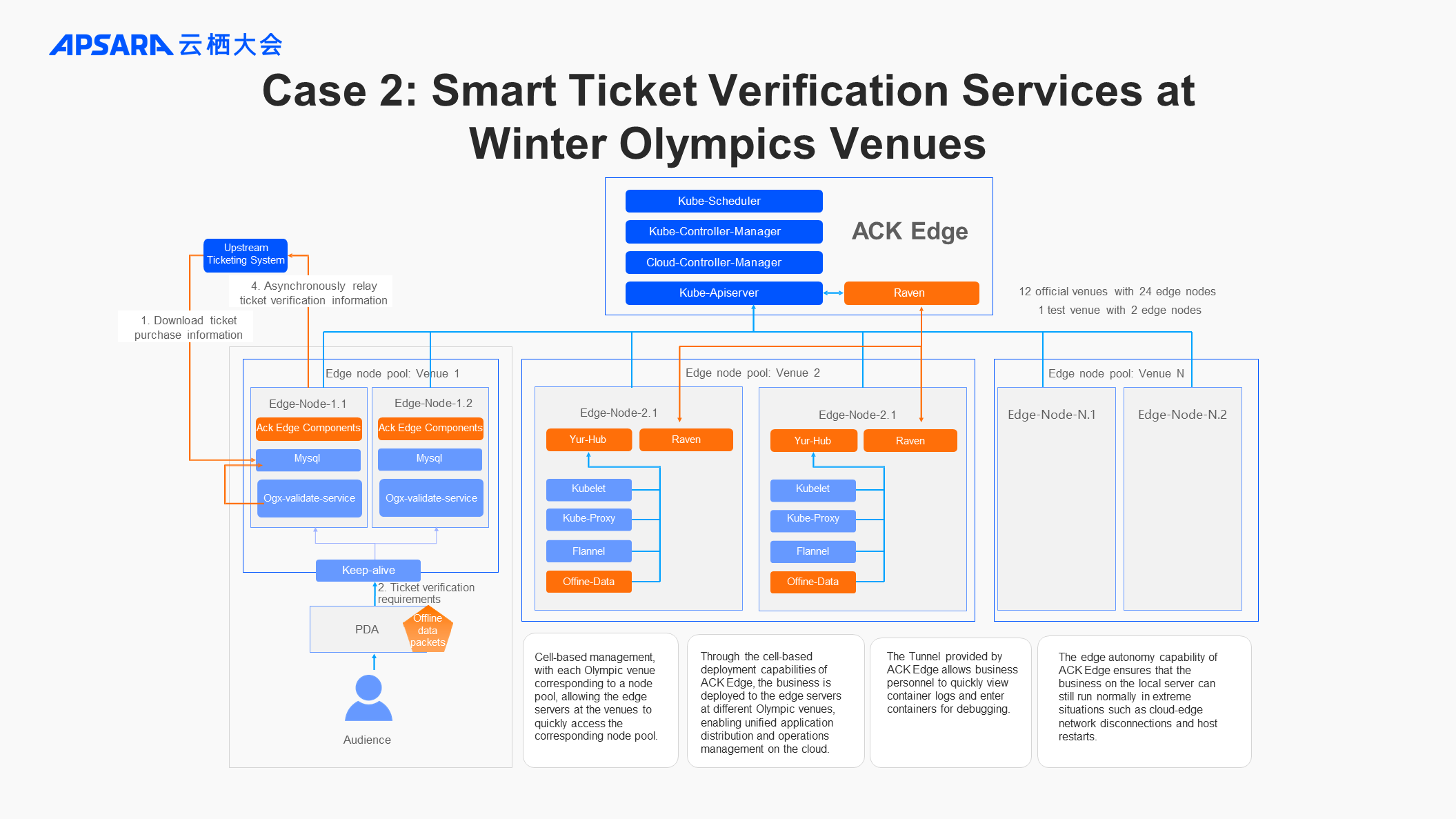

Edge nodes are usually located in private networks, so the cloud cannot access them directly for operations such as viewing logs, remote login, or collecting monitoring data. To solve this issue, the OpenYurt community introduced Raven, a component for secure cross-region network communication. Raven establishes a reverse O&M tunnel between the cloud and the edge, through which O&M requests from the cloud reach the edge side.
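The reverse-tunnel idea can be sketched as a simplified in-process model. This is not Raven's code: `TunnelBroker` and `edge_agent` are hypothetical, and a "tunnel" is modeled as a plain callable instead of a real network connection. What the sketch shows is the direction of connection: the edge node registers itself from the inside, and the cloud forwards O&M requests over that registration instead of dialing the edge node.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]  # takes a request, returns a response


class TunnelBroker:
    """Cloud-side registry of tunnels that edge nodes opened outbound."""

    def __init__(self) -> None:
        self._tunnels: Dict[str, Handler] = {}

    def register(self, node_name: str, handler: Handler) -> None:
        # Called when an edge agent connects outbound from its private network.
        self._tunnels[node_name] = handler

    def forward(self, node_name: str, request: str) -> str:
        # The cloud never dials the edge node; it reuses the edge-initiated tunnel.
        handler = self._tunnels.get(node_name)
        if handler is None:
            raise LookupError(f"no tunnel registered for node {node_name!r}")
        return handler(request)


def edge_agent(node_name: str) -> Handler:
    """Hypothetical edge-side handler serving O&M requests such as log reads."""
    def handle(request: str) -> str:
        return f"{node_name}: handled {request}"
    return handle


if __name__ == "__main__":
    broker = TunnelBroker()
    broker.register("edge-node-1", edge_agent("edge-node-1"))
    print(broker.forward("edge-node-1", "GET /containerLogs/default/app"))
```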

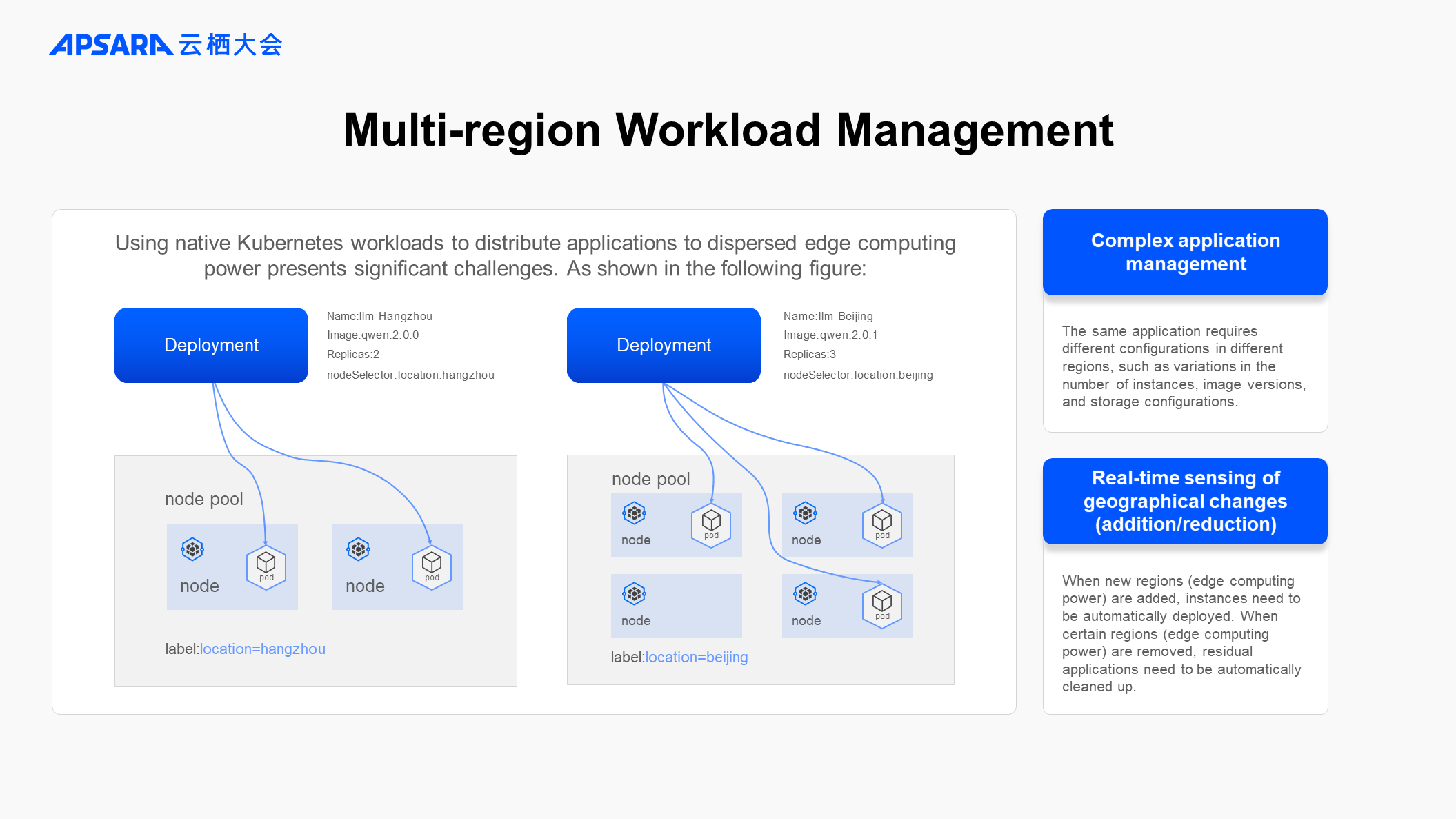

While managing edge resources, we observed that they tend to be geographically dispersed, and the same application may need different configurations in different regions, including different numbers of instances, image versions, and storage configurations. With native Kubernetes workloads, a separate Deployment would have to be created for each region, with labels and configuration managed for each one.

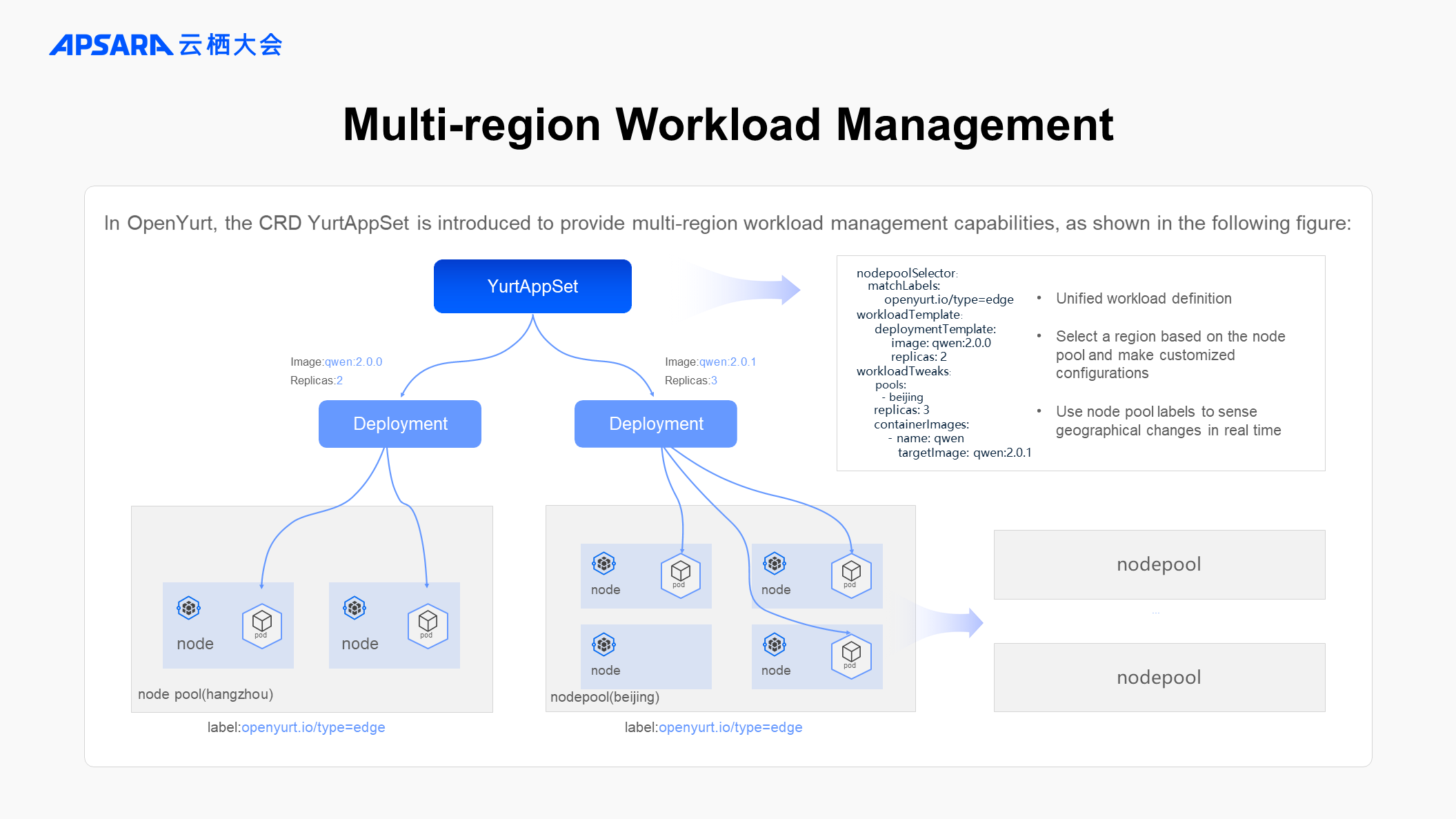

To simplify multi-region business management, OpenYurt designed and implemented a multi-region workload management model. This model introduces the concept of node pools: nodes in different regions are grouped into separate node pools, and workloads are then distributed across these node pools. When node pools change, the system automatically adjusts how workloads are deployed. The model also supports differentiated configurations at the node pool level to meet the varied needs of applications in different regions.
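A minimal sketch of pool-level rendering, assuming a simplified model: `WorkloadTemplate`, `PoolOverride`, and `render_per_pool` are hypothetical names, not OpenYurt's API. One shared template is rendered into one workload per node pool, with per-pool overrides for image and replica count; re-rendering after a pool is added yields a workload for the new pool without touching the others.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


@dataclass(frozen=True)
class WorkloadTemplate:
    name: str
    image: str
    replicas: int


@dataclass(frozen=True)
class PoolOverride:
    image: Optional[str] = None     # None means "inherit from the template"
    replicas: Optional[int] = None


def render_per_pool(
    template: WorkloadTemplate,
    pools: List[str],
    overrides: Dict[str, PoolOverride],
) -> Dict[str, WorkloadTemplate]:
    """Render one workload per node pool, applying pool-level overrides."""
    rendered: Dict[str, WorkloadTemplate] = {}
    for pool in pools:
        ov = overrides.get(pool, PoolOverride())
        rendered[pool] = replace(
            template,
            name=f"{template.name}-{pool}",
            image=ov.image if ov.image is not None else template.image,
            replicas=ov.replicas if ov.replicas is not None else template.replicas,
        )
    return rendered


if __name__ == "__main__":
    base = WorkloadTemplate(name="web", image="web:v1", replicas=3)
    overrides = {"beijing": PoolOverride(image="web:v2", replicas=1)}
    # Re-running with an extra pool ("shenzhen") yields a workload for it too.
    for pools in (["hangzhou", "beijing"], ["hangzhou", "beijing", "shenzhen"]):
        print(render_per_pool(base, pools, overrides))
```

Keeping one template plus small per-pool overrides is what avoids maintaining a separate, fully duplicated Deployment per region.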

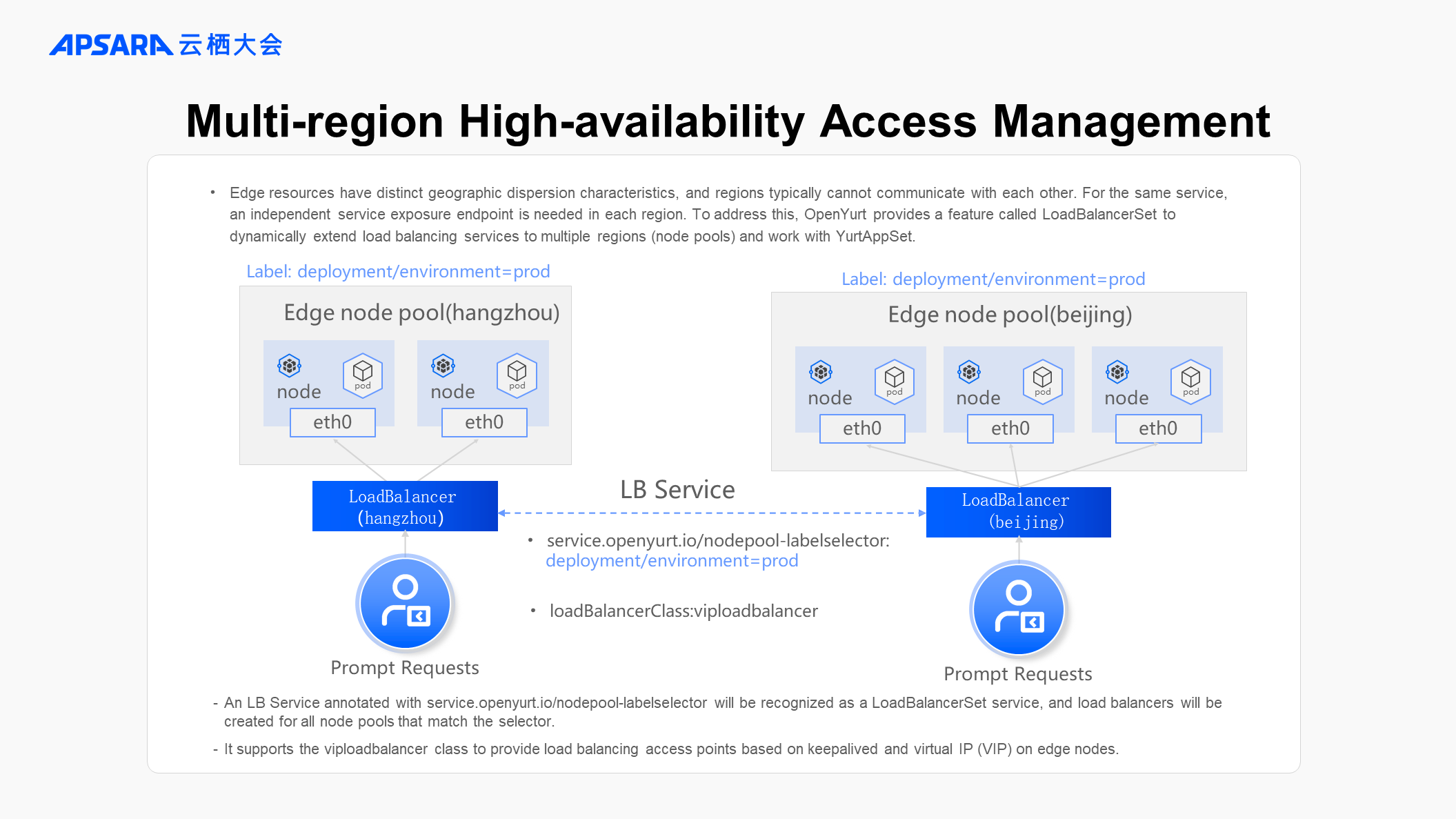

A multi-region deployment model in turn requires multi-region service exposure. In edge scenarios, networks in different regions cannot reach one another, and native Kubernetes service exposure may forward requests to endpoints anywhere in the cluster, including unreachable regions, which makes the service unavailable. Each region therefore needs its own service exposure endpoint that forwards traffic only to local services within that region. To keep the experience consistent with native Services, OpenYurt lets users specify the regions in which a Service is exposed through annotations on the Service, automatically creates region-level service exposure endpoints, and keeps traffic in a closed loop within each region.
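A sketch of region-local endpoint selection, under stated assumptions: the annotation key `example.io/pool-scoped` is hypothetical (it is not OpenYurt's real annotation), and node pools are given as a plain node-to-pool mapping. When a Service opts in, only endpoints on nodes in the client's node pool are kept; otherwise all endpoints in the cluster are returned.

```python
from typing import Dict, List, NamedTuple

# Hypothetical opt-in annotation; not OpenYurt's actual annotation key.
POOL_SCOPED = "example.io/pool-scoped"


class Endpoint(NamedTuple):
    ip: str
    node: str


def local_endpoints(
    service_annotations: Dict[str, str],
    endpoints: List[Endpoint],
    node_pool: Dict[str, str],
    client_node: str,
) -> List[Endpoint]:
    """Return the endpoints a client on `client_node` should be routed to."""
    if service_annotations.get(POOL_SCOPED) != "true":
        return list(endpoints)  # not opted in: cluster-wide endpoints
    pool = node_pool[client_node]  # assumes every client node is in a pool
    # Keep traffic in a closed loop: only endpoints in the client's pool.
    return [ep for ep in endpoints if node_pool.get(ep.node) == pool]


if __name__ == "__main__":
    node_pool = {"hz-1": "hangzhou", "hz-2": "hangzhou", "bj-1": "beijing"}
    eps = [Endpoint("10.0.0.1", "hz-1"), Endpoint("10.0.1.1", "bj-1")]
    svc = {POOL_SCOPED: "true"}
    print(local_endpoints(svc, eps, node_pool, client_node="hz-2"))
```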

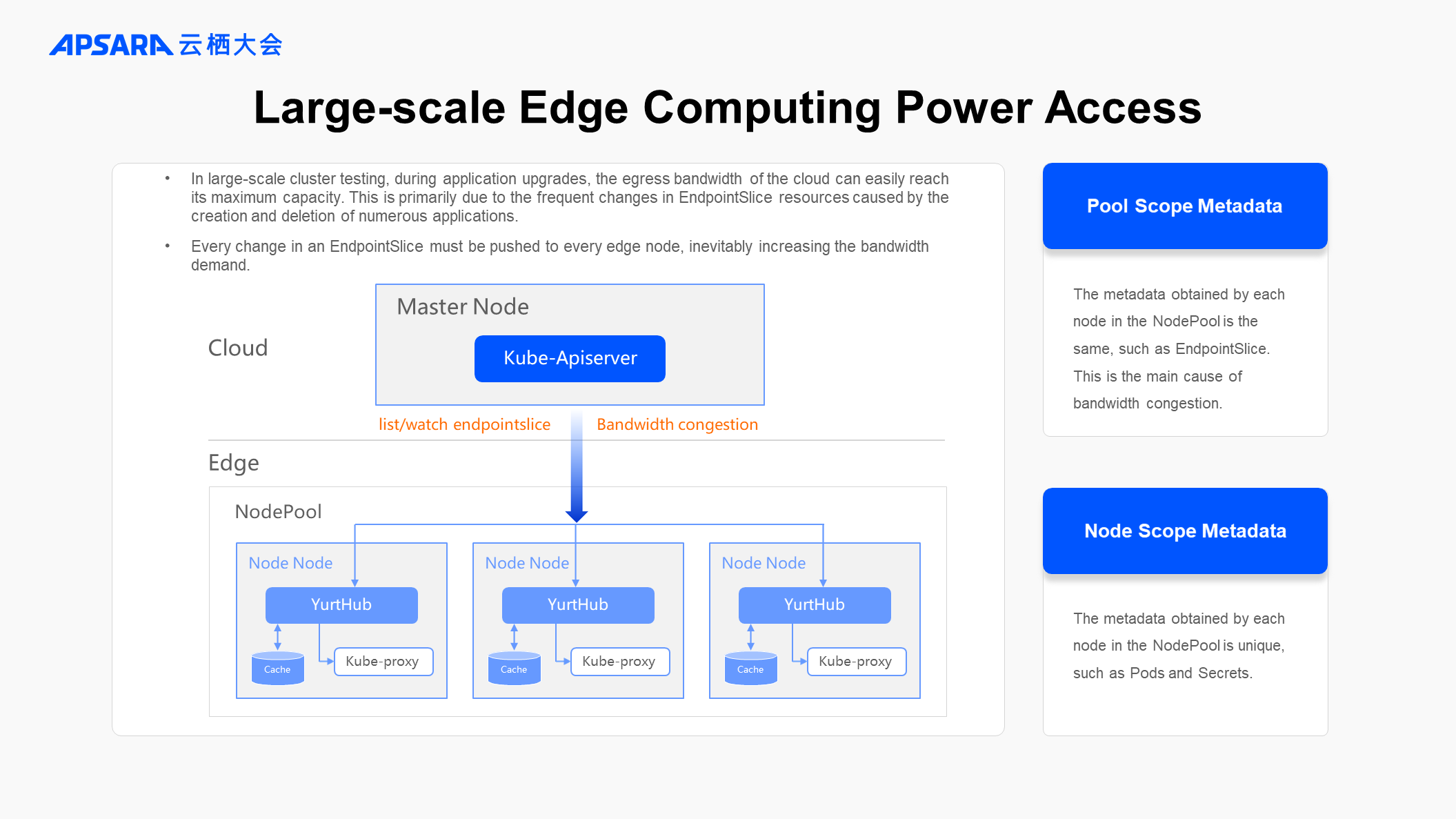

As OpenYurt is adopted in more and more edge scenarios, its performance at large scale has drawn attention. During large-scale testing, we found that in a cloud-edge integrated architecture, large-scale application releases or updates can cause sharp surges in cloud-edge traffic that easily hit bandwidth limits. This is particularly problematic when communication goes over the public network, creating considerable traffic pressure and cost. Most of this traffic comes from system components deployed at the edge, such as kubelet, CoreDNS, and kube-proxy, which list-watch cluster-wide resources such as EndpointSlices and Services. To address this, the OpenYurt community offers node-level traffic reuse, which consolidates full requests for the same resource from different components on a node into a single request, significantly reducing cloud-edge traffic.
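Traffic reuse resembles request multiplexing, sketched below under assumptions: `SharedWatchMux` and the `open_upstream` callback are hypothetical, and this is not OpenYurt's implementation. The first local component to watch a resource opens the only upstream request; later components attach to it, and every event received from the cloud is fanned out locally. A real implementation would also need to replay the current full list to components that join late, which this sketch omits.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Event = dict
Subscriber = Callable[[Event], None]


class SharedWatchMux:
    """Hypothetical node-local multiplexer: one upstream watch per resource."""

    def __init__(self, open_upstream: Callable[[str], None]) -> None:
        self._open_upstream = open_upstream  # starts one list-watch to the cloud
        self._subs: DefaultDict[str, List[Subscriber]] = defaultdict(list)

    def watch(self, resource: str, subscriber: Subscriber) -> None:
        if not self._subs[resource]:
            # First local component for this resource: open the only
            # upstream request. Later components reuse it.
            self._open_upstream(resource)
        self._subs[resource].append(subscriber)

    def dispatch(self, resource: str, event: Event) -> None:
        # One event received from the cloud is fanned out to every local watcher.
        for subscriber in self._subs[resource]:
            subscriber(event)


if __name__ == "__main__":
    upstream: List[str] = []  # records each upstream watch actually opened
    mux = SharedWatchMux(upstream.append)
    for component in ("kubelet", "kube-proxy", "coredns"):
        mux.watch("services", lambda ev, c=component: print(c, "got", ev["type"]))
    mux.dispatch("services", {"type": "ADDED", "name": "web"})
    print("upstream watches opened:", upstream)
```

With three components watching Services, the cloud sees one request instead of three, which is the saving the paragraph above describes, scaled across every node and resource type.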

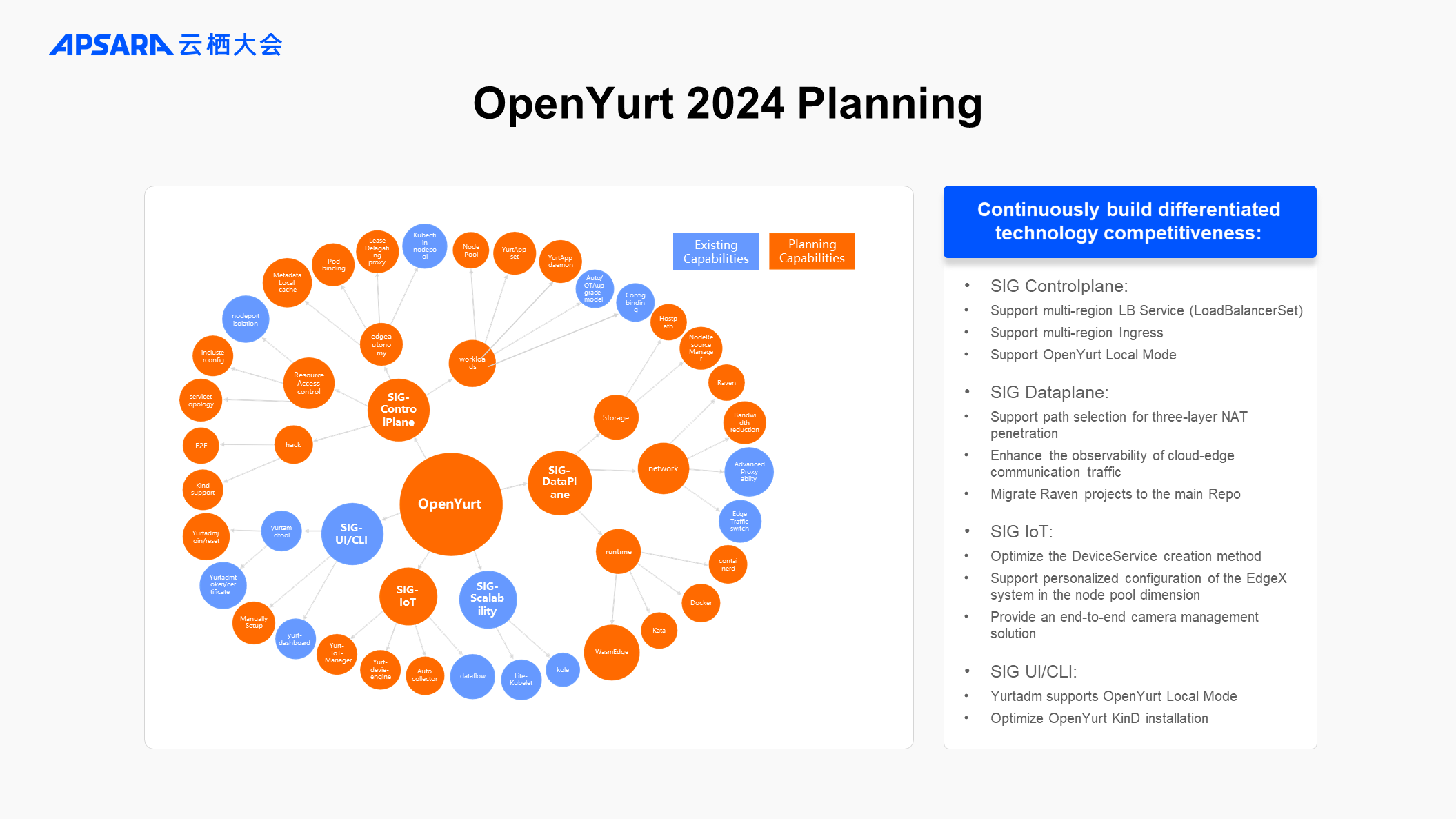

In the future, OpenYurt will continue to focus on cloud-edge integrated scenarios, aiming to solve the pain points of deploying cloud-native technologies in edge computing. It will prioritize new features such as OpenYurt Local Mode, node pool-level traffic reuse, and multi-region Ingress, while improving existing capabilities in autonomy, networking, device management, and ease of use, to address a wider range of edge computing scenarios.

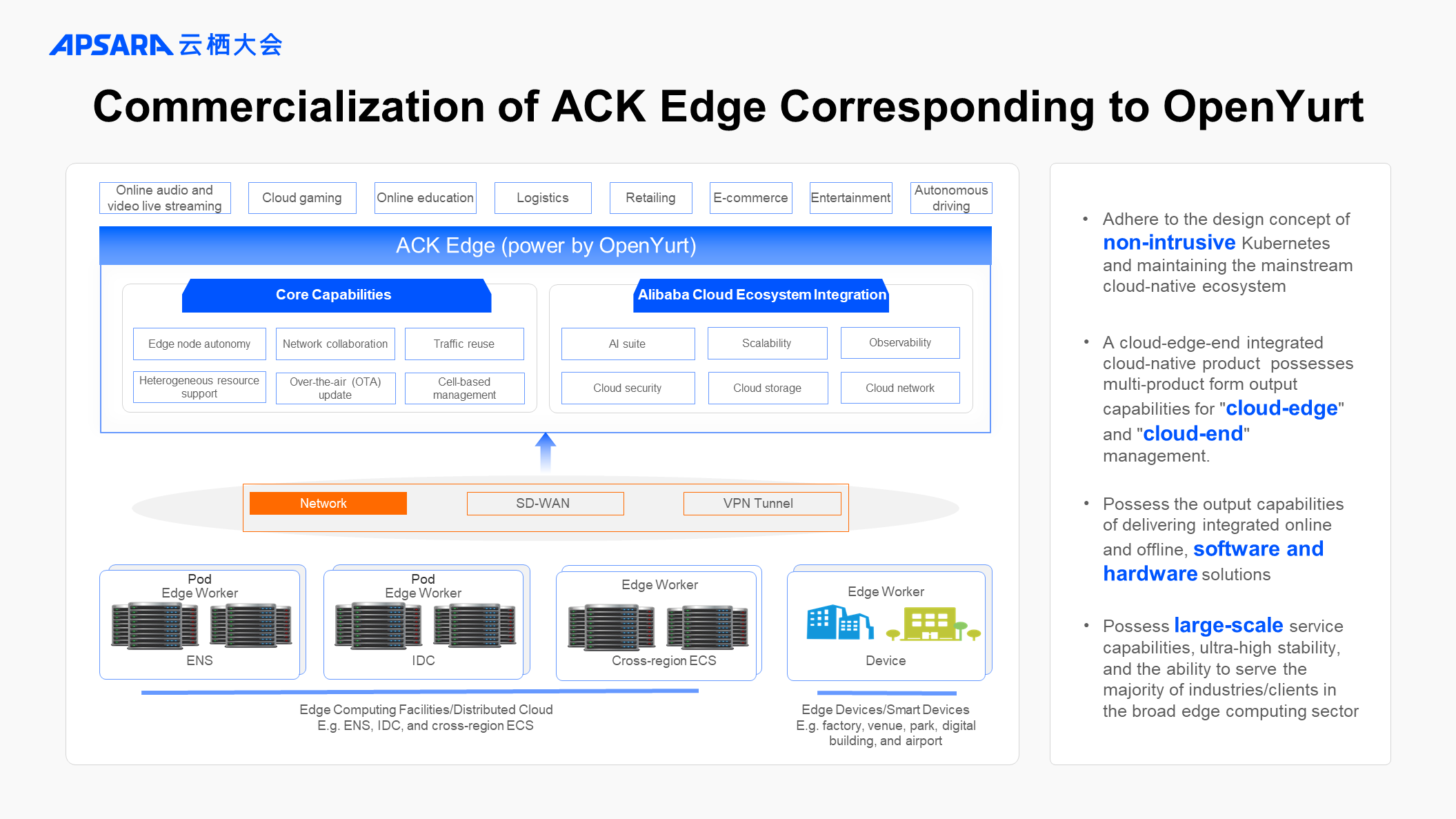

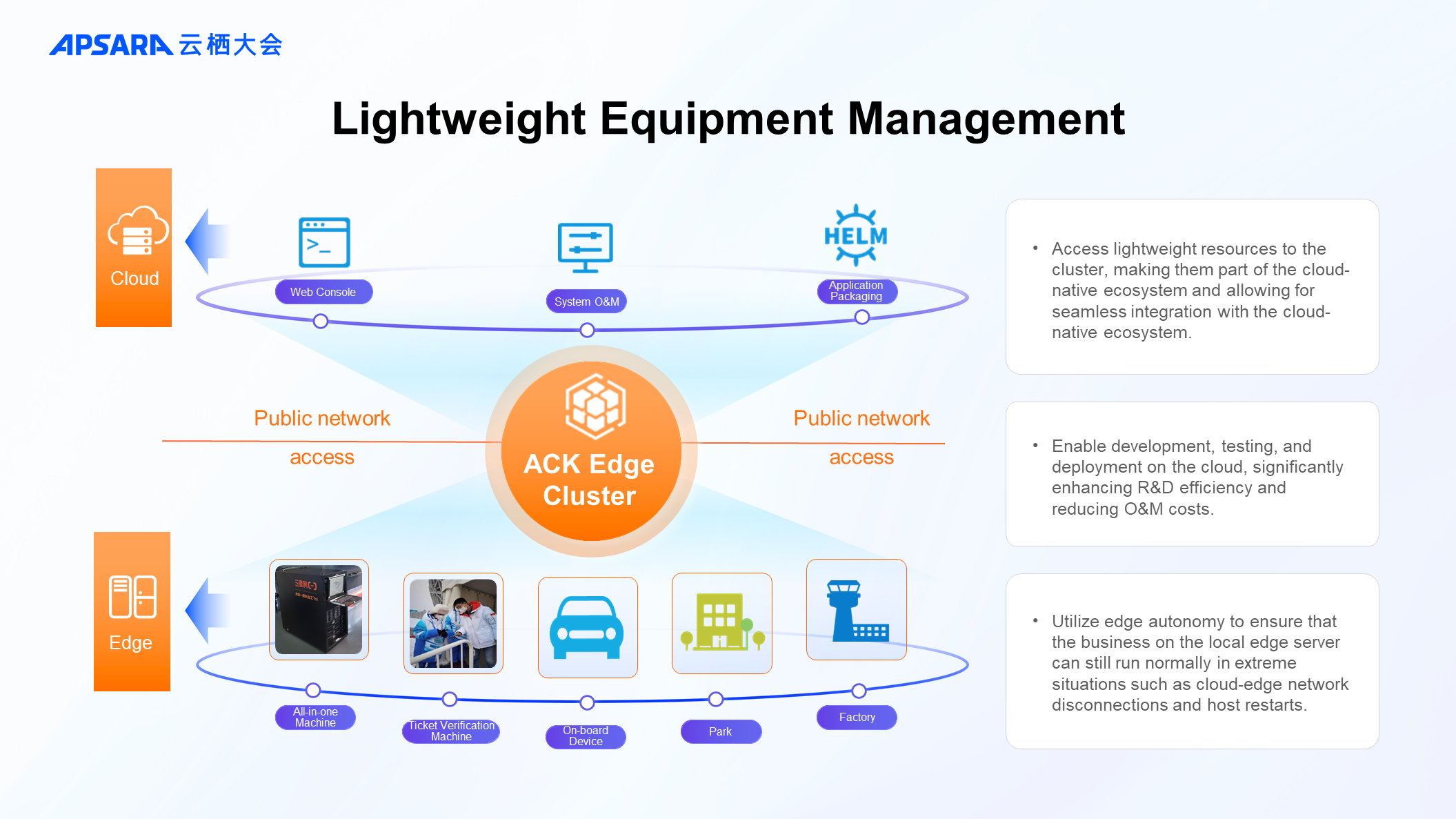

Within Alibaba Cloud, the commercial product corresponding to OpenYurt is ACK Edge. Its architecture has three layers: at the top are the product capabilities built up on the cloud over the years; in the middle is the network layer; and at the bottom are the resources and services on the edge side. By leveraging the network connectivity between the cloud and the edge, ACK Edge manages cloud and edge resources in an integrated way.

The cloud capabilities serve two purposes. First, the cloud-edge tunnel extends existing cloud product capabilities such as AI suites, logging, and monitoring to the edge, reducing the cost of building these common services locally. Second, cloud-edge integration brings cloud-native features such as containerization and container orchestration and scheduling to the edge, making edge resources and services part of the cloud-native ecosystem.

In the middle network layer, a cloud-edge architecture needs a stable network solution to carry O&M traffic, and in some cases business traffic, between the cloud and the edge. This can be an SD-WAN service provided by a cloud vendor or the Raven solution built into the product.

Finally, the edge side is also a focus of our development, because managing it differs from managing a cloud data center: edge resources run in complex network environments, are highly heterogeneous, and are geographically dispersed. To address these differences, we provide key capabilities such as edge network autonomy, heterogeneous resource access, and cell-based management.

After implementing the cloud-edge integrated architecture, many issues in edge scenarios can be resolved. Let’s look at several typical application scenarios.

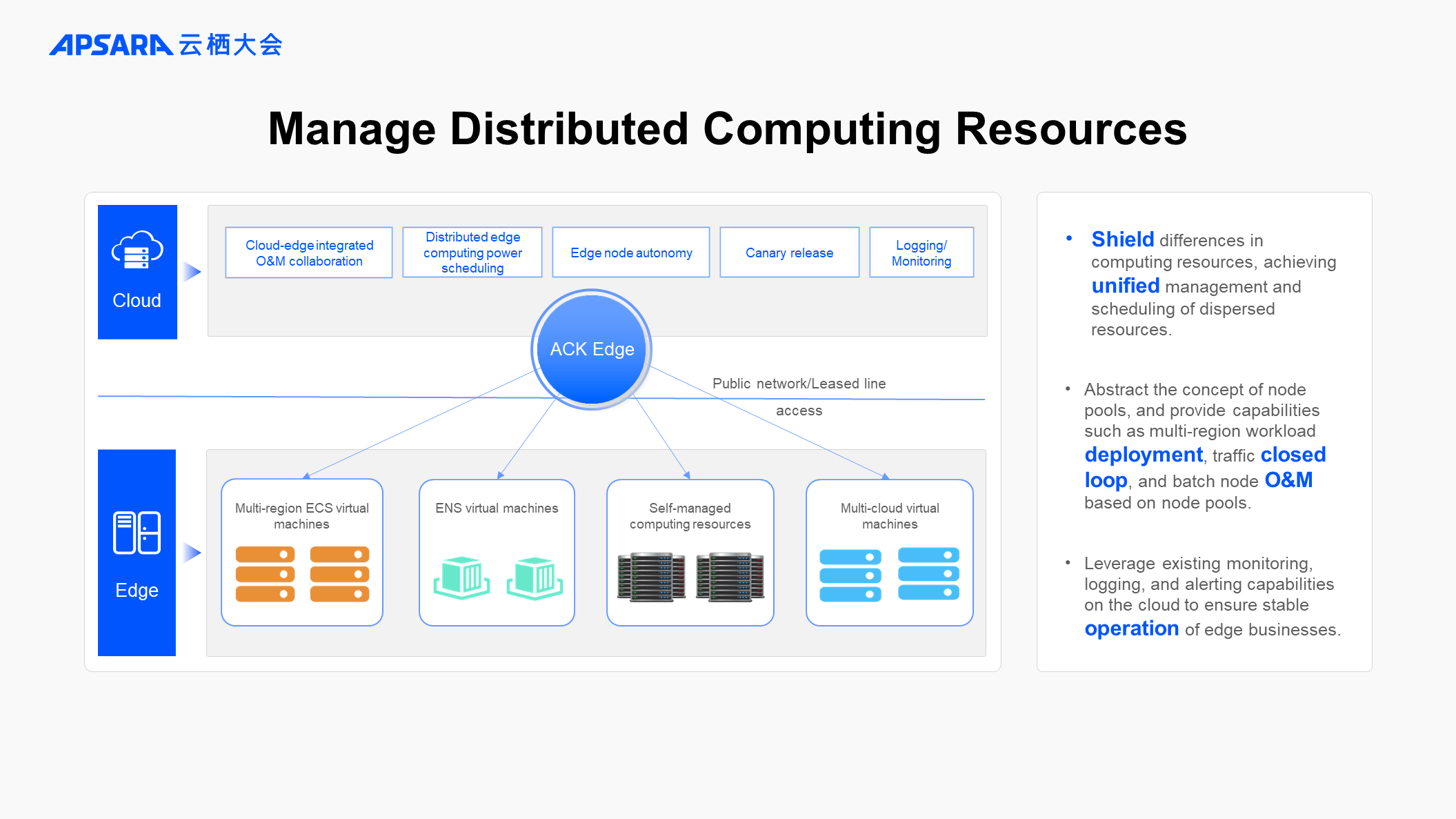

Managing dispersed computing resources: unified management and task scheduling, from the cloud center, for the many types of computing resources spread across multiple regions. In this scenario, resources have clear regional characteristics: each region has limited scale and no centralized management and control, which makes deployment and O&M difficult. Bringing these dispersed resources into ACK Edge for unified management, and using the cloud-native O&M system, solves the problems of managing and operating business at scale. It also extends cloud product capabilities such as logging, monitoring, and alerting to the edge, helping keep business distributed across multiple regions stable.

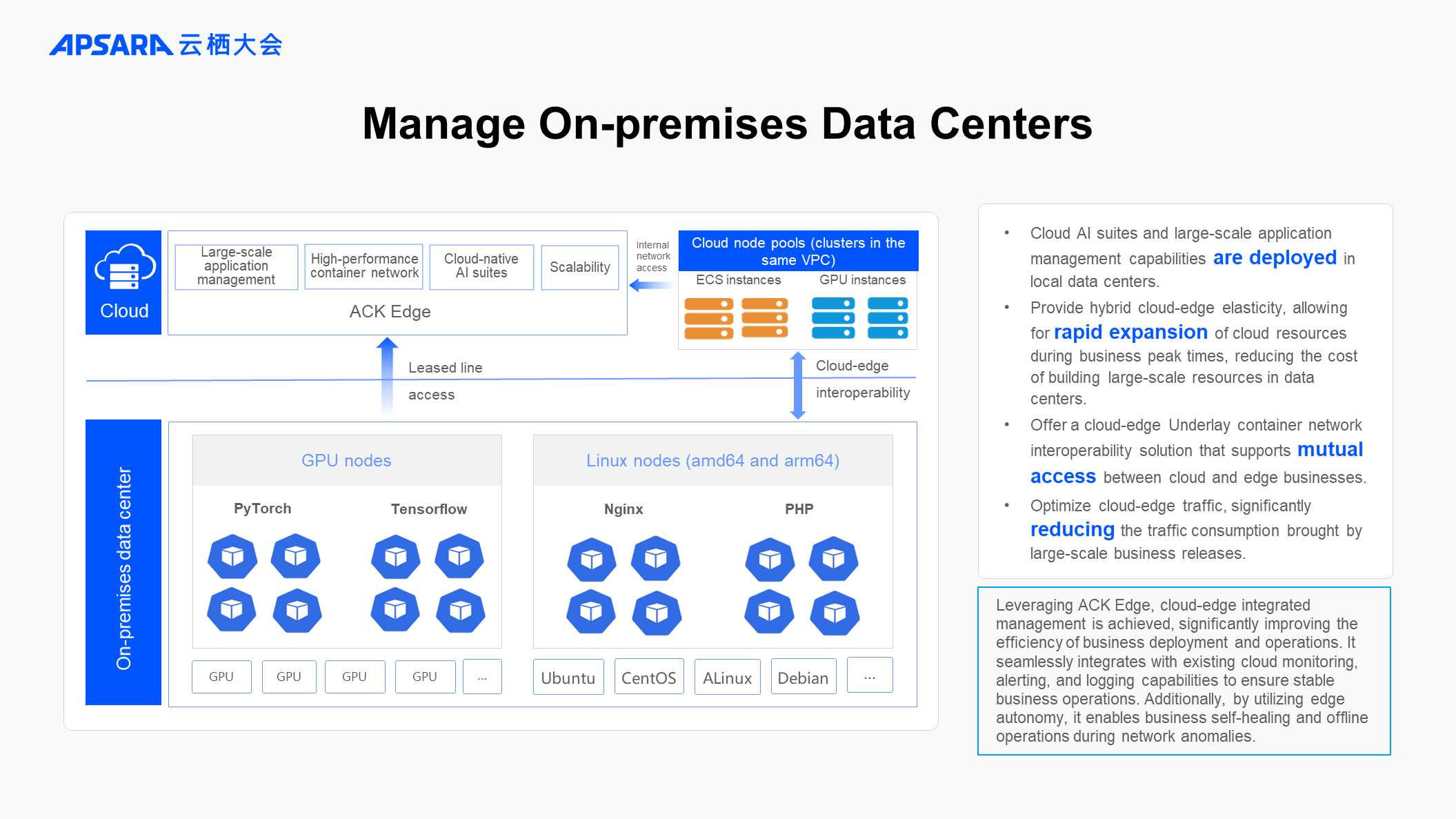

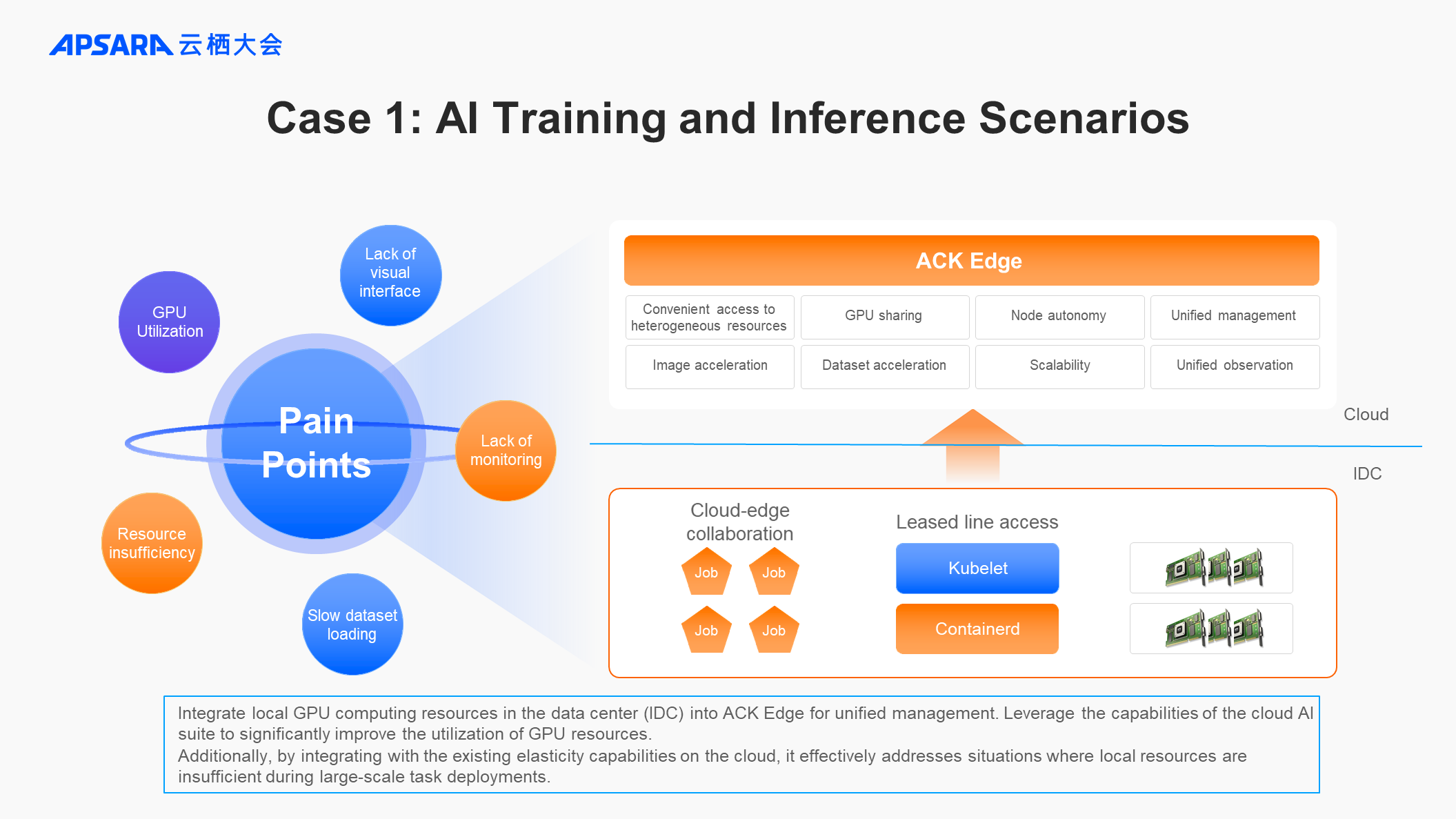

Managing local data centers: cloud-edge leased lines integrate cloud and on-premises capabilities seamlessly. In this scenario, edge resources sit in local data centers, are relatively large in scale, and connect directly to the cloud through leased lines. Workloads may include online services, batch processing jobs, or AI training and inference, all of which demand high resource utilization and elasticity. With the cloud-edge integration provided by ACK Edge, mature cloud resource management capabilities can significantly improve data center resource utilization and integrate seamlessly with cloud elasticity, meeting demand during business peaks.

Supporting lightweight device management: the cloud-edge tunnel enables cloud-based development, testing, release, and O&M of business, improving O&M efficiency on end devices. Resources in this scenario are usually small devices running in complex network environments with no means of remote O&M, such as integrated transportation machines, venue ticket verification machines, parking lot barriers, or even on-board devices. The cloud-edge O&M tunnel and the edge network autonomy capabilities of ACK Edge keep the business stable even in these extreme conditions.

Since its commercial launch, ACK Edge has supported many customers in edge scenarios. Here are some typical customer cases to share with you.

Building a large-scale AI training and inference platform on ACK Edge. When customers use local data center resources for AI training and inference, they face low resource utilization and a lack of monitoring tools during training. In addition, during inference peaks, local data center resources cannot meet business demand. Through ACK Edge, cloud AI suite capabilities such as GPU sharing and dataset acceleration improve the utilization of local resources, and cloud elasticity meets the scaling requirements of inference tasks at peak times.

Smart ticket verification at Winter Olympics venues. To speed up audience entry, the ticket verification service had to be deployed inside the venues. For security reasons, resources within the venues had no public network access, which made developing, testing, and maintaining the ticketing system difficult. The cloud-edge capabilities of ACK Edge were used to connect the in-venue resources to ACK Edge for unified management, so the business could be developed, deployed, and maintained on the cloud. Ultimately, this system supported all ticket verification services for the Olympic venues and ceremonies during the Beijing Winter Olympics.

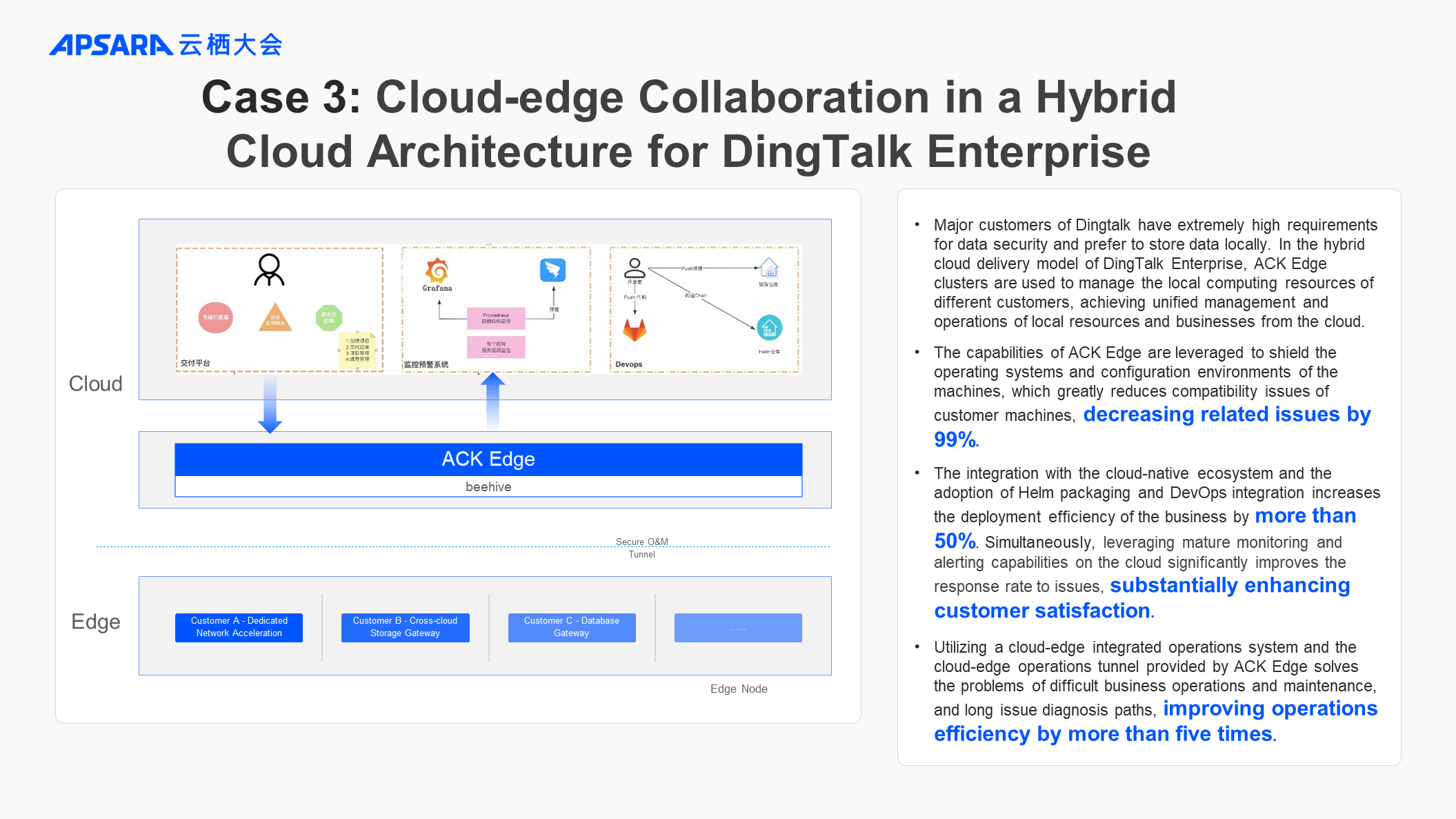

Hybrid cloud-edge collaboration for DingTalk Enterprise. DingTalk Enterprise customers have extremely high requirements for data security and privacy, so DingTalk proposed a hybrid cloud architecture in which data is stored locally and applications are deployed on the cloud. To keep this architecture stable, DingTalk needs to deploy foundational services, including traffic acceleration, service proxies, and database gateways, on the customer side. As the number of customers grew, customer-side environments proved highly heterogeneous and the foundational services became difficult to manage. ACK Edge is used to manage the foundational services and resources on the customer side, shielding the heterogeneity of the underlying resources and reducing compatibility issues by 99%. Its cloud-edge integrated O&M capabilities also improved problem diagnosis efficiency more than fivefold.

That concludes our presentation. Thank you.
