By Zhihao Xu and Yang Che

Cost, performance, and efficiency are becoming the three core factors influencing the production and application of large models, presenting new challenges for enterprise infrastructure in the creation and use of these models. The swift advancement of AI demands not only algorithmic breakthroughs but also engineering innovation.

Firstly, in the age of AI commercialization, the training and inference of large models are more widespread. Viewing large models pragmatically, there are only two possible outcomes after training a large model. The first is that the model proves to be useless, bringing the process to a halt. The other outcome is that the model is valuable and thus employed globally, with the primary usage stemming from inference. Whether it's OpenAI or Midjourney, users pay for each instance of inference. Over time, the ratio of model training to inference usage will shift towards thirty-seventy, or even twenty-eighty. Model inference will become the main battlefield of the future.

The challenge of large model inference lies in its cost, performance, and efficiency, with cost being the most significant. As larger models demand more resources, and due to the scarcity and therefore expense of GPUs, the cost of model inference continues to rise. Ultimately, users are willing to pay for the value of inference, not the cost. Reducing the cost per inference is thus the top priority for infrastructure teams.

On this foundation, performance becomes the core competitive advantage, especially for large models in the consumer market. Quicker inference and better performance are key to enhancing user engagement.

While the commercialization of large models is fraught with uncertainty, low cost and high performance are essential to staying in the game, and efficiency is what ensures you win at the table.

Efficiency is the third challenge. Models need continuous updates, and efficiency here refers to how often and how quickly those updates can be delivered. The greater the engineering efficiency, the better the chance of iterating toward more valuable models through rapid upgrades.

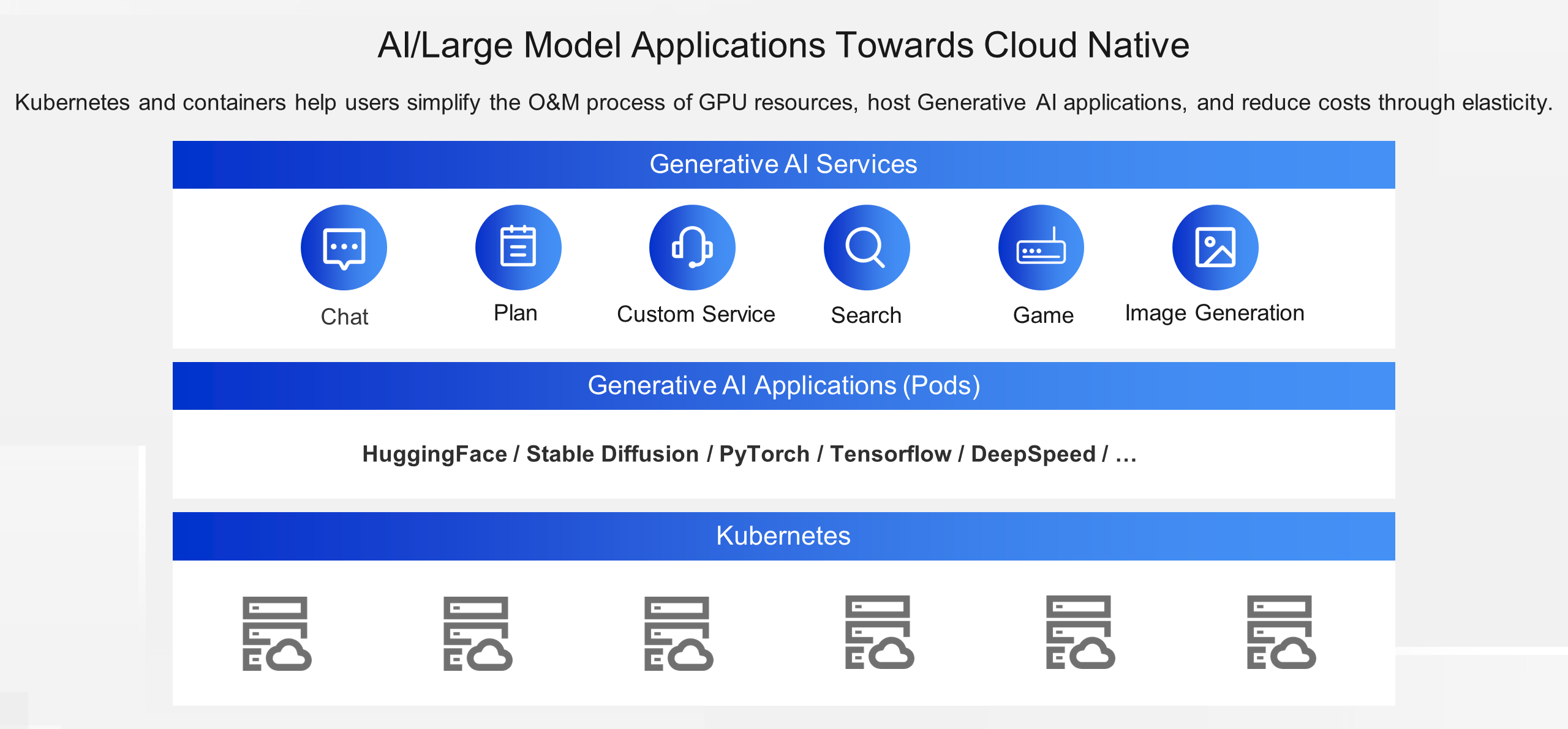

In recent years, containers and Kubernetes have emerged as the preferred runtime environments and platforms for an increasing number of AI applications. On one hand, Kubernetes helps users to standardize heterogeneous resources and simplify the operation and maintenance (O&M) processes. On the other hand, AI applications, which largely depend on GPUs, can benefit from the elasticity provided by Kubernetes to save on resource costs. Amidst the surge of AIGC and large models, running AI applications on Kubernetes is becoming the de facto standard.

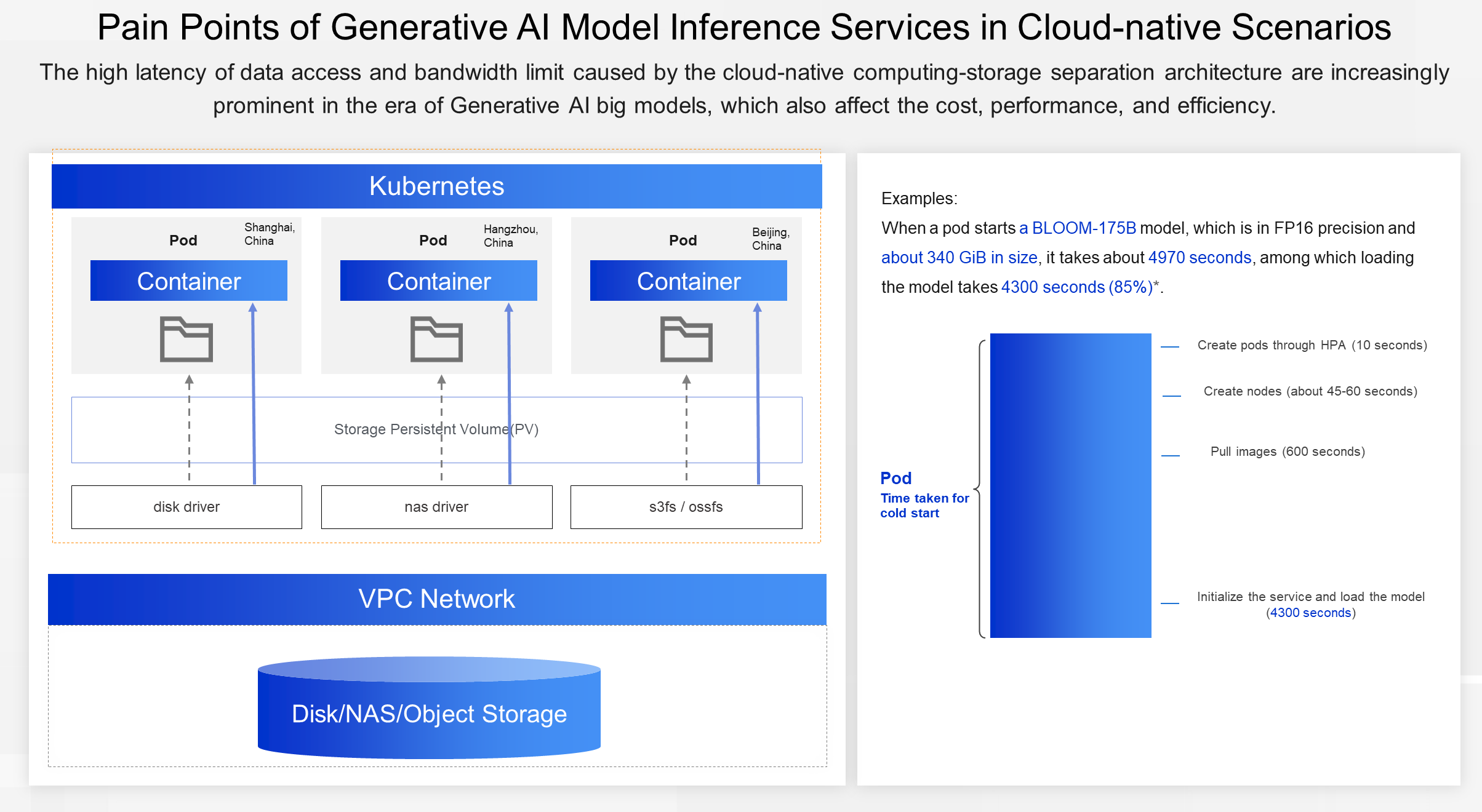

A key contradiction in AIGC inference scenarios lies in the growing size of large models versus the high latency in data access and bandwidth limitations caused by the compute-storage separation architecture. This contradiction affects cost, performance, and efficiency.

Elastic scaling and on-demand use are useful tools for controlling the cost of large models. However, the contradiction remains unsolved. Take the BLOOM-175B model as an example, as shown in the right part of the preceding figure. The model is in FP16 precision and about 340 GiB in size, but scaling out takes 82 minutes, close to one and a half hours. To find the root cause, we broke down the startup time of the model. The time is mainly spent on HPA scaling, creating computing resources, pulling container images, and loading the model. Loading this model from Object Storage Service (OSS) takes about 71 minutes, which is about 85% of the total time. During this process, the I/O throughput only reaches a few hundred MB per second.

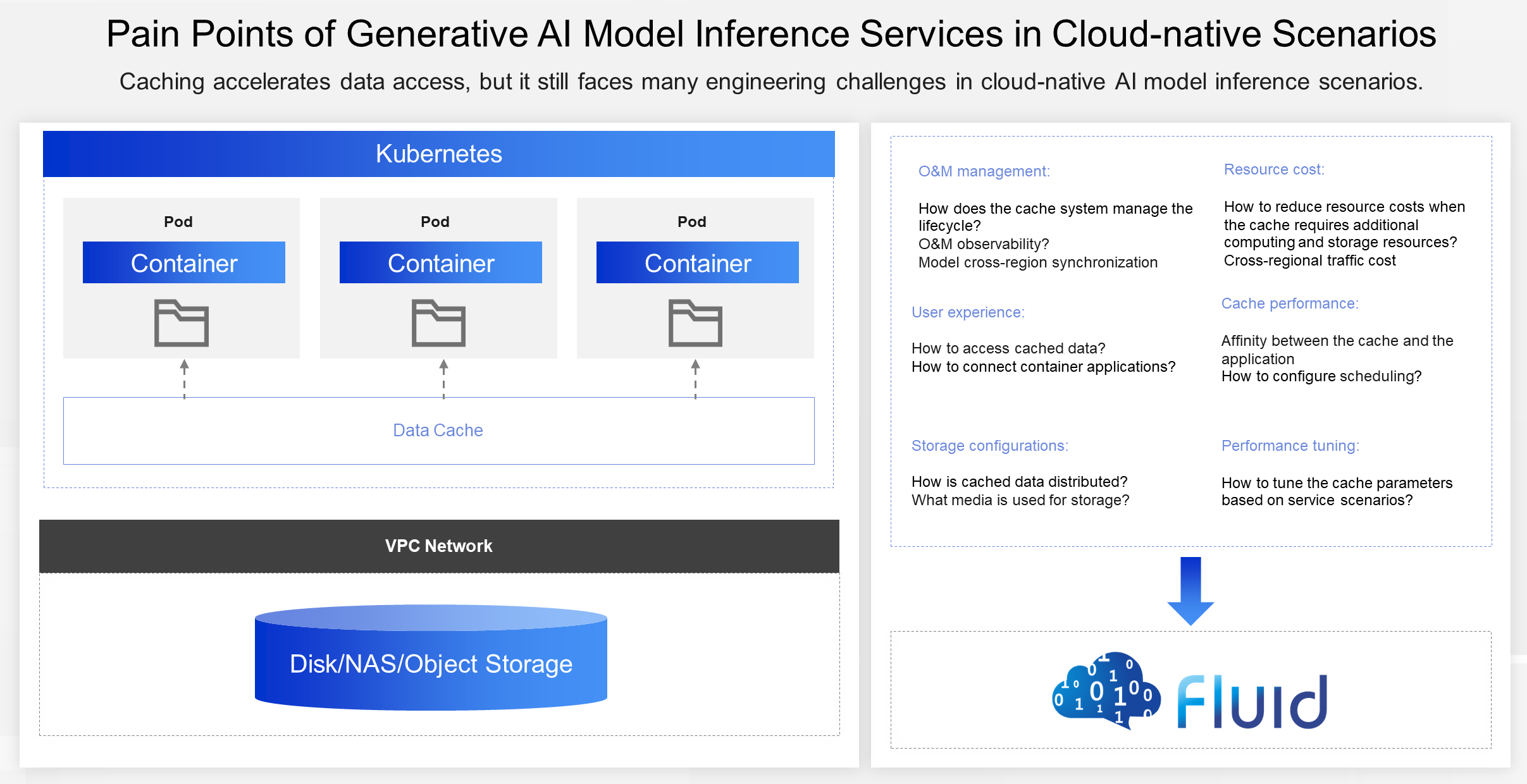

Then, do we have any solution to this contradiction? A common idea might be to add a caching layer, but is that sufficient? In practice, it's not that simple, and several issues may arise. The first issue is speed. Can the cache be effectively utilized? If caching doesn't improve speed, what's the culprit? Is it cache planning, hardware configuration, software configuration, network issues, or scheduling?

The second issue is cost. Caching machines are typically high-bandwidth, with large memory and local disks — configurations that are not cheap. Balancing maximum performance with cost control is a challenging problem.

The third issue is usability. How user-friendly is it? Does it require changes to user code? Does it increase the workload for the O&M team? With continuous model updates and synchronization, how can we reduce the O&M costs of the cache cluster and ease the burden on the O&M team?

The Fluid project was conceived to address these engineering challenges in implementing caching solutions.

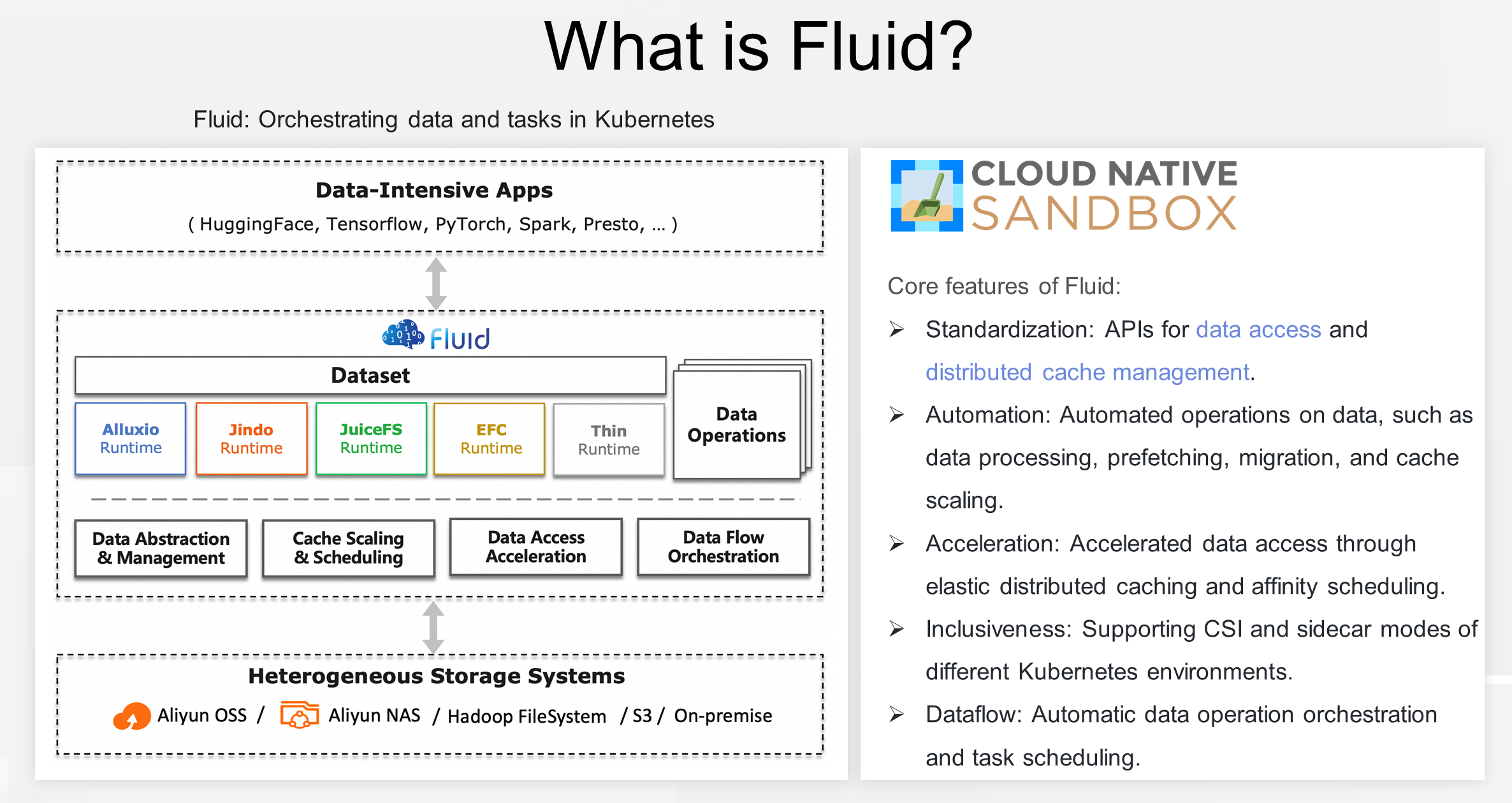

First, let's learn about the concept of Fluid. Fluid is responsible for orchestrating data and the computing tasks that use data in Kubernetes. This includes not only spatial orchestration but also temporal orchestration. Spatial orchestration means that computing tasks are preferentially scheduled to nodes that have cached the data or are about to cache it, improving the performance of data-intensive applications. Temporal orchestration means that data operations and tasks can be submitted at the same time, while Fluid makes sure that data migration and prefetching finish before the task is executed. The task then runs smoothly without human intervention, which improves engineering efficiency.

From the architecture diagram, Fluid connects various AI and big data applications upward and various heterogeneous storage systems downward. Fluid currently supports multiple cache systems, including Alluxio, JuiceFS, and in-house JindoFS and EFC.

Specifically, Fluid provides five core capabilities:

1. Fluid standardizes the patterns of data access and abstracts optimized data access methods for different scenarios, such as large language models, autonomous driving simulation data, and small files used for image recognition.

2. Fluid also standardizes cache orchestration. Various distributed cache systems have emerged, such as JuiceFS, Alluxio, JindoFS, and EFC, which accelerate different storage solutions, but they are not inherently designed for Kubernetes. If used on Kubernetes, they require standardized APIs. Fluid transforms these distributed cache systems into cache services that are manageable, elastic, observable, and self-healing, and exposes them through Kubernetes APIs.

3. Fluid automates data operations such as processing, prefetching, migration, and cache scaling using Custom Resource Definitions (CRDs), making it easier to integrate these operations into automated O&M systems.

4. Fluid speeds up data processing through optimized distributed caching and cache affinity scheduling for specific scenarios.

5. Fluid supports various runtime environments, including native, edge, serverless, and multi-cluster Kubernetes. Depending on the environment, you can choose different modes of Container Storage Interface (CSI) plugins and sidecars to run storage clients.

Ultimately, Fluid features data and task orchestration. You can define dataset-centered automated operation workflows and set the order of dependencies for data migration, prefetching, and task execution sequence.

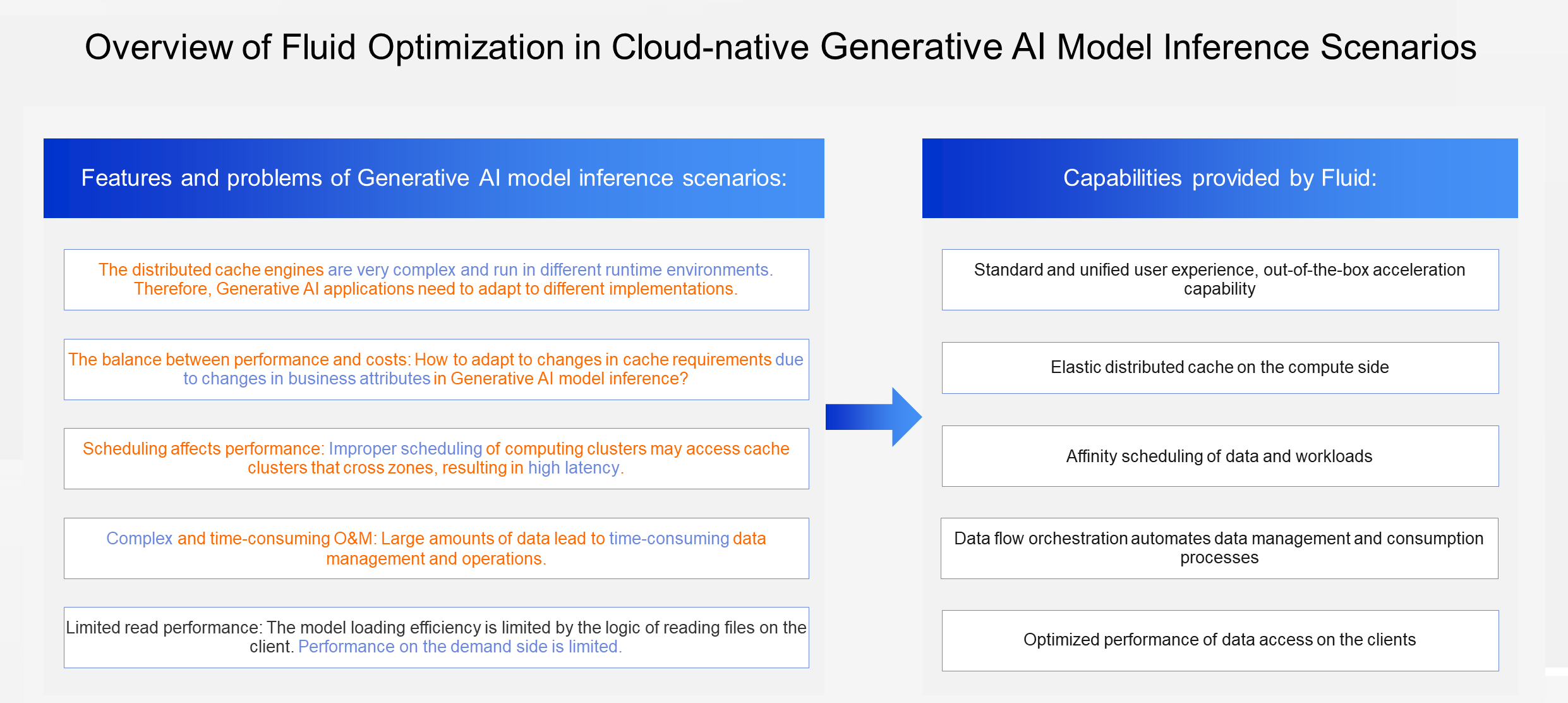

Returning to Generative AI model inference scenarios, Fluid offers several optimizations.

First, Fluid provides one-click deployments and seamless connections. Distributed caches are complex and run in diverse environments. Without Fluid, Generative AI applications would need to adapt to different cache runtimes, such as Alluxio, JuiceFS, and JindoFS, and to different runtime environments, including public, private, edge, and serverless clouds.

Second, Fluid provides elastic caches. The load on Generative AI model inference services changes with the business, and elastic caches let you scale the cache to match your business requirements, balancing cost and performance.

Third, Fluid provides data-aware scheduling. It allocates compute tasks as close as possible to the data they use.

Fourth, Fluid provides data flow orchestration. It reduces complexity by automating the data consumption process around model inference.

Finally, in terms of performance, Fluid also provides an optimized reading method suitable for cloud-native caching, thus making full use of node resources.

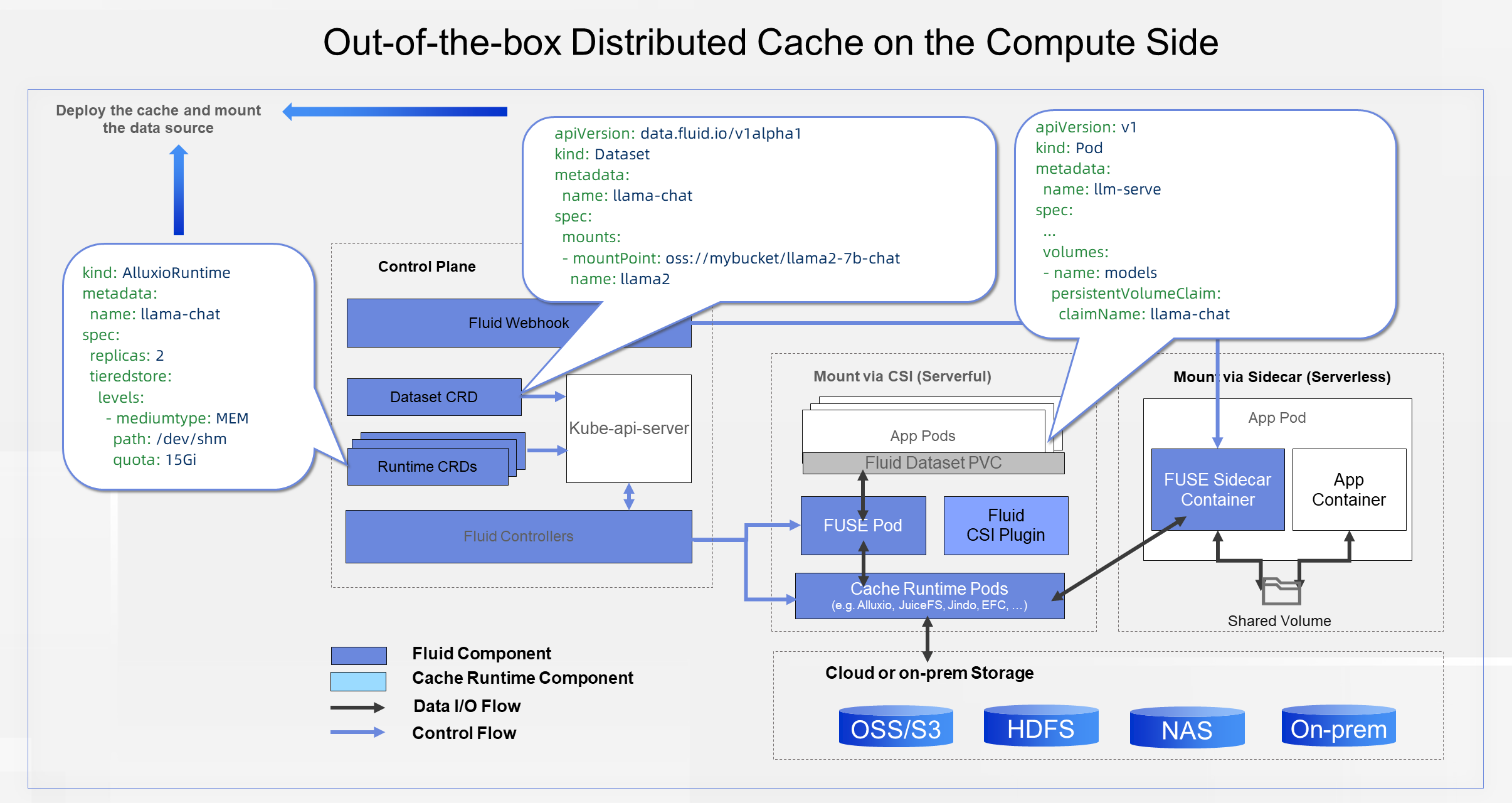

The preceding figure illustrates the technical architecture of Fluid. As shown in the figure, Fluid provides two CRDs: Dataset and Runtime, which represent the data source to be accessed and the corresponding cache system respectively. Here, we use the Alluxio cache system as an example, and the corresponding CRD is AlluxioRuntime.

The dataset contains the path to the data of the model that you need to access, such as a subdirectory in an OSS bucket. When the dataset and the corresponding runtime are created, Fluid automatically configures the cache, pulls the cache components, and creates a PVC. Inference applications only need to mount this PVC to read the model data from the cache, which is consistent with the Kubernetes standard storage method.
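To make the Dataset, Runtime, and PVC relationship concrete, here is a minimal sketch that builds the two resources as Python dictionaries and renders them as JSON, which `kubectl apply -f` also accepts. The dataset name, bucket path, replica count, and cache quota are hypothetical placeholders, the credentials that OSS access needs are omitted, and the field names follow the `data.fluid.io/v1alpha1` API as we understand it, so check them against the Fluid version you run.

```python
import json

# Hypothetical values for illustration only; they do not come from the article.
DATASET_NAME = "llm-model"
MODEL_PATH = "oss://example-bucket/models/my-llm/"  # placeholder bucket subdirectory

# The Dataset describes where the model data lives.
dataset = {
    "apiVersion": "data.fluid.io/v1alpha1",
    "kind": "Dataset",
    "metadata": {"name": DATASET_NAME},
    "spec": {"mounts": [{"name": "model", "mountPoint": MODEL_PATH}]},
}

# The AlluxioRuntime describes the cache that serves the Dataset.
# It uses the same name as the Dataset so that the two are bound together.
runtime = {
    "apiVersion": "data.fluid.io/v1alpha1",
    "kind": "AlluxioRuntime",
    "metadata": {"name": DATASET_NAME},
    "spec": {
        "replicas": 2,  # number of cache workers (assumed value)
        "tieredstore": {
            "levels": [{"mediumtype": "MEM", "path": "/dev/shm", "quota": "20Gi"}]
        },
    },
}


def pod_volume(claim_name):
    """Volume entry for an inference pod that mounts the PVC created for the Dataset."""
    return {"name": "model-vol", "persistentVolumeClaim": {"claimName": claim_name}}


def render():
    """Render both resources as a JSON list document."""
    doc = {"apiVersion": "v1", "kind": "List", "items": [dataset, runtime]}
    return json.dumps(doc, indent=2)


if __name__ == "__main__":
    print(render())
```

The inference deployment itself needs no Fluid-specific code: it only references the PVC, here assumed to carry the same name as the Dataset, through `pod_volume(DATASET_NAME)`.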

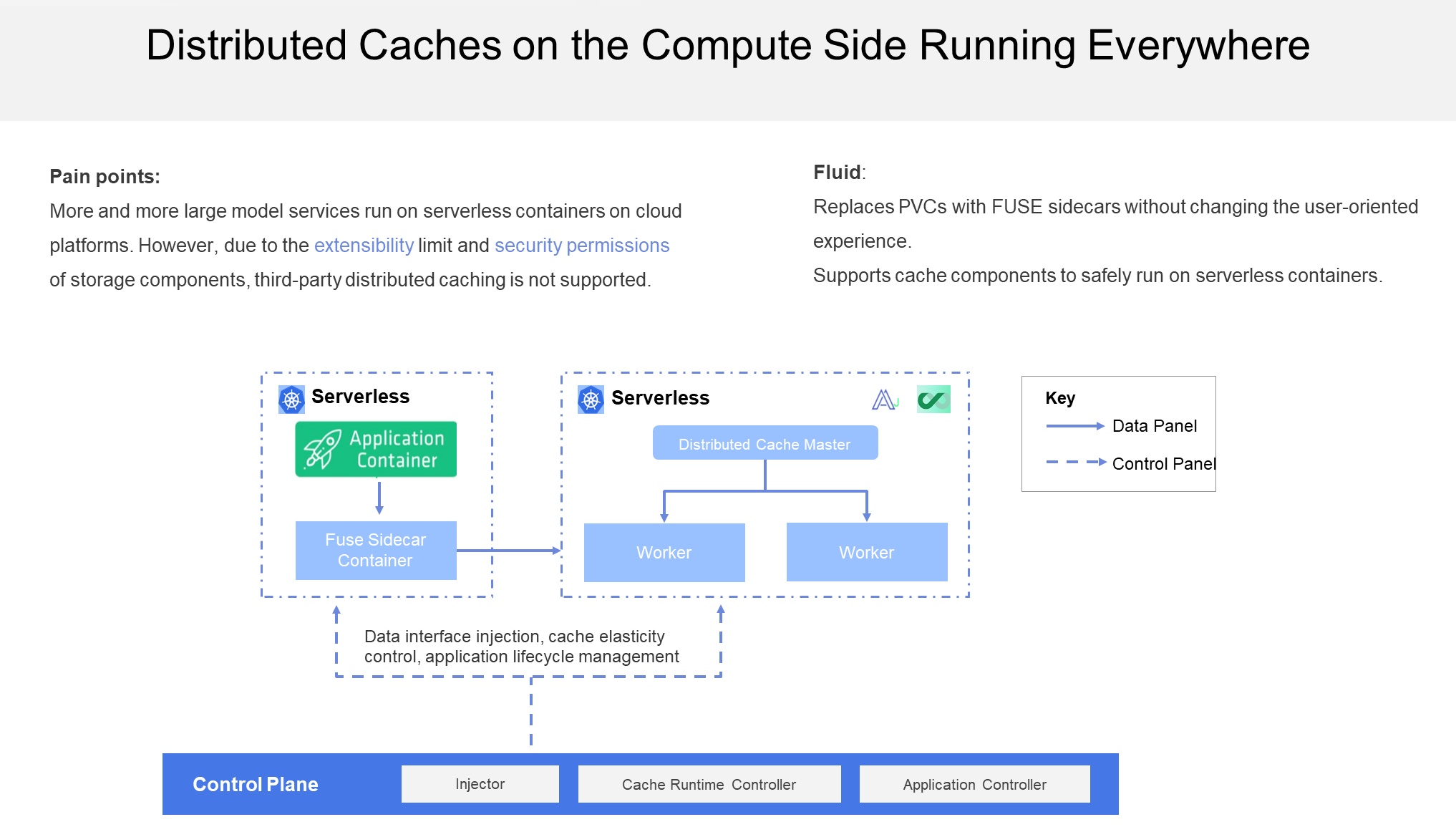

Generative AI inference runs on a variety of platforms, including Kubernetes for cloud services, self-managed Kubernetes, edge Kubernetes, and serverless Kubernetes. Due to its ease of use and low burden, serverless Kubernetes has increasingly become the choice of users. However, for security reasons, serverless Kubernetes does not open third-party storage interfaces, so only internal storage is supported.

On the serverless container platform, Fluid automatically converts a PVC into a sidecar that adapts to the underlying platform. It also exposes a third-party configuration interface for controlling the lifecycle of this sidecar container, ensuring that it is running before the application container starts and exits automatically after the application container ends. In this way, Fluid provides rich scalability and can run a variety of distributed cache engines.

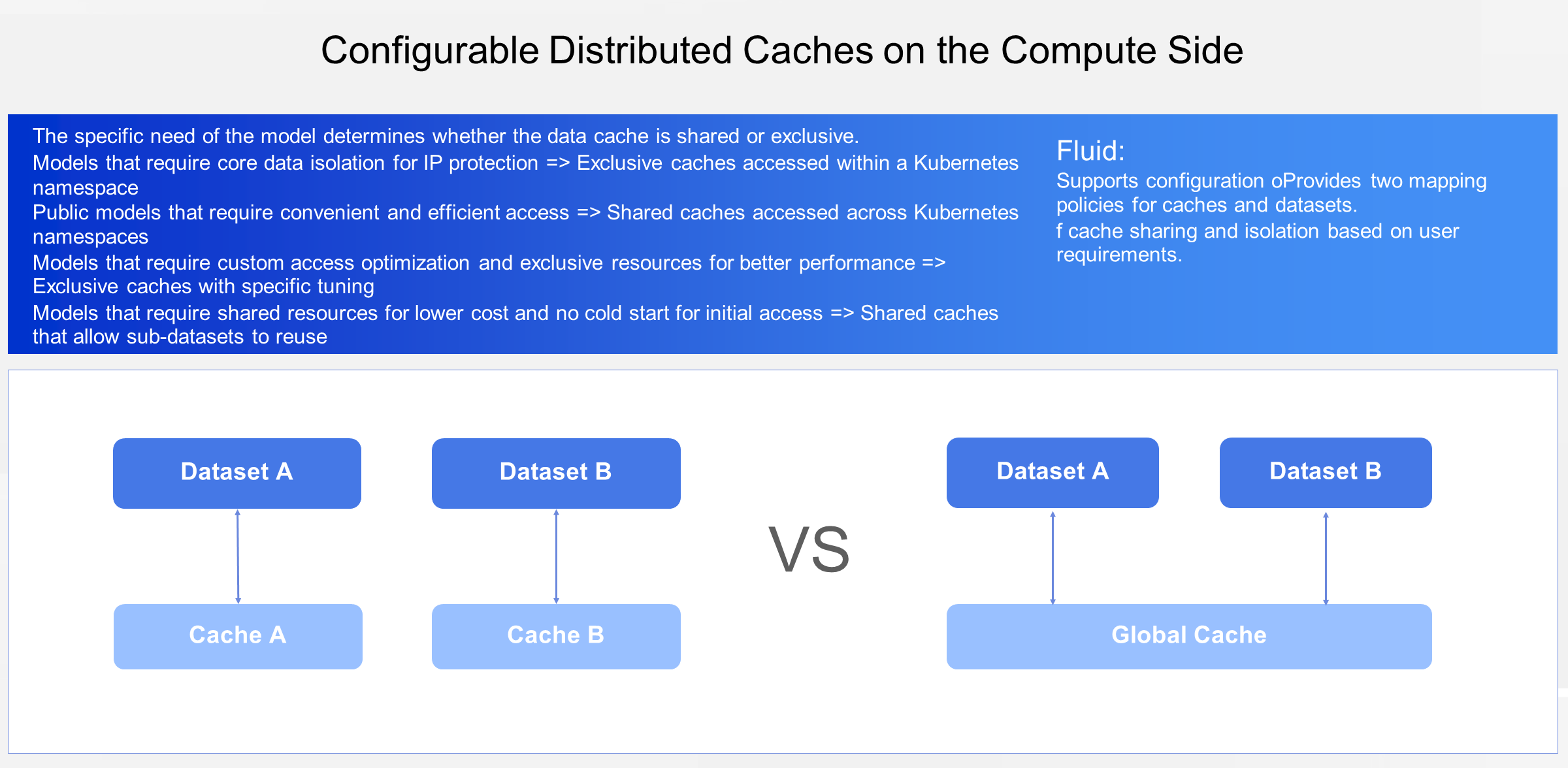

Whether the cache for Generative AI models should be shared or exclusive depends on the real business scenario. Some models require IP protection, so access isolation of core data is necessary, while open-source models have no such concern. Some models are highly sensitive to performance. In some of the most popular image generation scenarios, where an image is created from a text description within 20 seconds, loading an 8-10 GB model should take less than 5 seconds. This requires exclusively allocated I/O resources on the cache to avoid contention, combined with scenario-specific tuning. For some novel image generation scenarios, users have to consider the resource cost. Fluid fully supports both exclusive and shared caches, and both can be configured flexibly.
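The loading target for image generation models implies a minimum sustained read throughput per instance. The short calculation below is only a back-of-the-envelope check, assuming decimal gigabytes and a constant transfer rate.

```python
def required_throughput_gb_per_s(model_size_gb, deadline_s):
    """Sustained read throughput needed to load a model within the deadline."""
    return model_size_gb / deadline_s


if __name__ == "__main__":
    for size_gb in (8, 10):
        rate = required_throughput_gb_per_s(size_gb, 5)
        print(f"{size_gb} GB in 5 s needs {rate:.1f} GB/s")
```

A single instance therefore needs roughly 1.6 to 2 GB/s from the cache, which is why sharing the cache's I/O with other workloads can break the target.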

The second optimization provided by Fluid is the elastic distributed cache on the compute side. Here, we are talking about how to provide high performance.

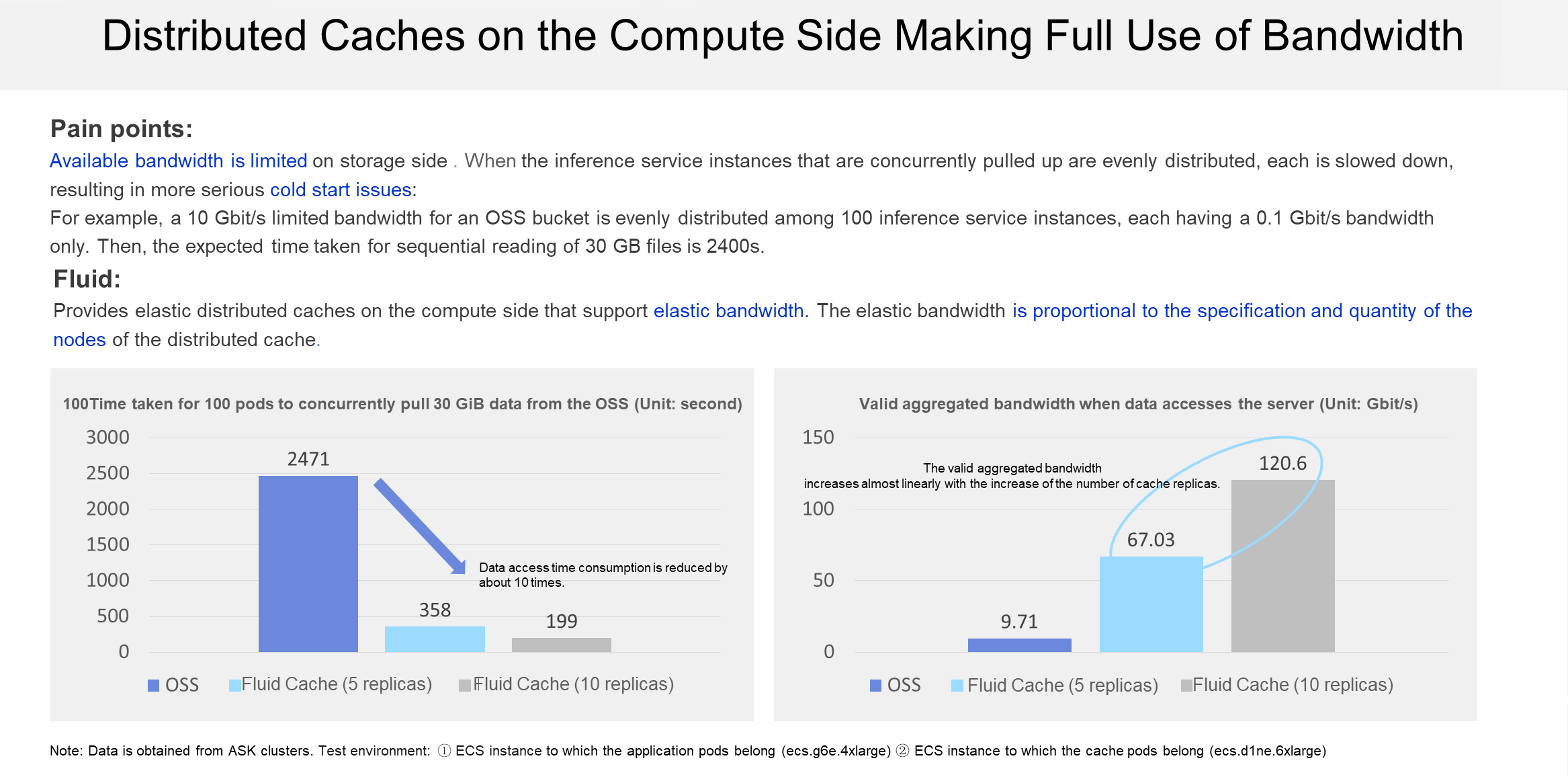

Why do we require an elastic distributed cache on the compute side? Isn't a simple distributed cache enough? We can understand this problem from a technical point of view. In actual production scenarios, AI model inference service instances are often started concurrently. For example, if you need to start 100 inference service instances at a time and each instance needs to pull data from OSS, the available bandwidth for each instance is only 1% of the total. With the default 10 Gbit/s bandwidth of an OSS bucket and a 30 GB model, the expected loading time is 2,400 seconds for each instance.
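The 2,400-second figure follows directly from dividing the bucket bandwidth evenly among the instances. The sketch below reproduces the arithmetic, assuming an even split, decimal units, and no protocol overhead.

```python
def per_instance_load_time_s(bucket_gbit_s, instances, model_gb):
    """Time for each instance to load the model when the bucket bandwidth is shared evenly.

    model_gb * 8 converts gigabytes to gigabits; each instance gets
    bucket_gbit_s / instances of the bandwidth.
    """
    return model_gb * 8 * instances / bucket_gbit_s


if __name__ == "__main__":
    seconds = per_instance_load_time_s(bucket_gbit_s=10, instances=100, model_gb=30)
    print(f"{seconds:.0f} s per instance ({seconds / 60:.0f} minutes)")
```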

The elastic distributed cache on the compute side turns the limited bandwidth of the underlying storage system into elastic bandwidth inside the Kubernetes cluster. The size of this bandwidth depends on the number of nodes in the distributed cache. From this perspective, the cache becomes an elastic cluster that can scale at any time with the I/O requirements of the business scenario. The test data also shows that when 100 pods are started concurrently, using a cache accelerates the process, and the more cache worker nodes are used, the better the performance. The main reason is that the aggregated bandwidth is larger, so each pod gets more bandwidth. As the graph on the right part of the preceding figure shows, the aggregated bandwidth also increases almost linearly with the number of distributed cache nodes.
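A simple model shows why adding cache workers helps. The sketch below assumes that the cache is already warm, that aggregated bandwidth scales linearly with the number of workers, and that each pod is capped by its own network interface. The per-worker and per-pod bandwidth values are hypothetical and are not the measured data from the figure.

```python
def load_time_with_cache_s(model_gb, pods, workers, worker_gbit_s, pod_nic_gbit_s):
    """Estimated model load time per pod when reading from a warm distributed cache."""
    aggregate_gbit_s = workers * worker_gbit_s        # assumed linear scaling
    per_pod_gbit_s = min(aggregate_gbit_s / pods,     # fair share of the cache bandwidth
                         pod_nic_gbit_s)              # capped by the pod's own NIC
    return model_gb * 8 / per_pod_gbit_s


if __name__ == "__main__":
    for workers in (1, 10, 50):
        t = load_time_with_cache_s(30, pods=100, workers=workers,
                                   worker_gbit_s=10, pod_nic_gbit_s=25)
        print(f"{workers:>3} workers: {t:.0f} s per pod")
```

With one worker the model degenerates to the shared-bucket case; beyond the point where each pod saturates its own NIC, extra workers no longer shorten the load time.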

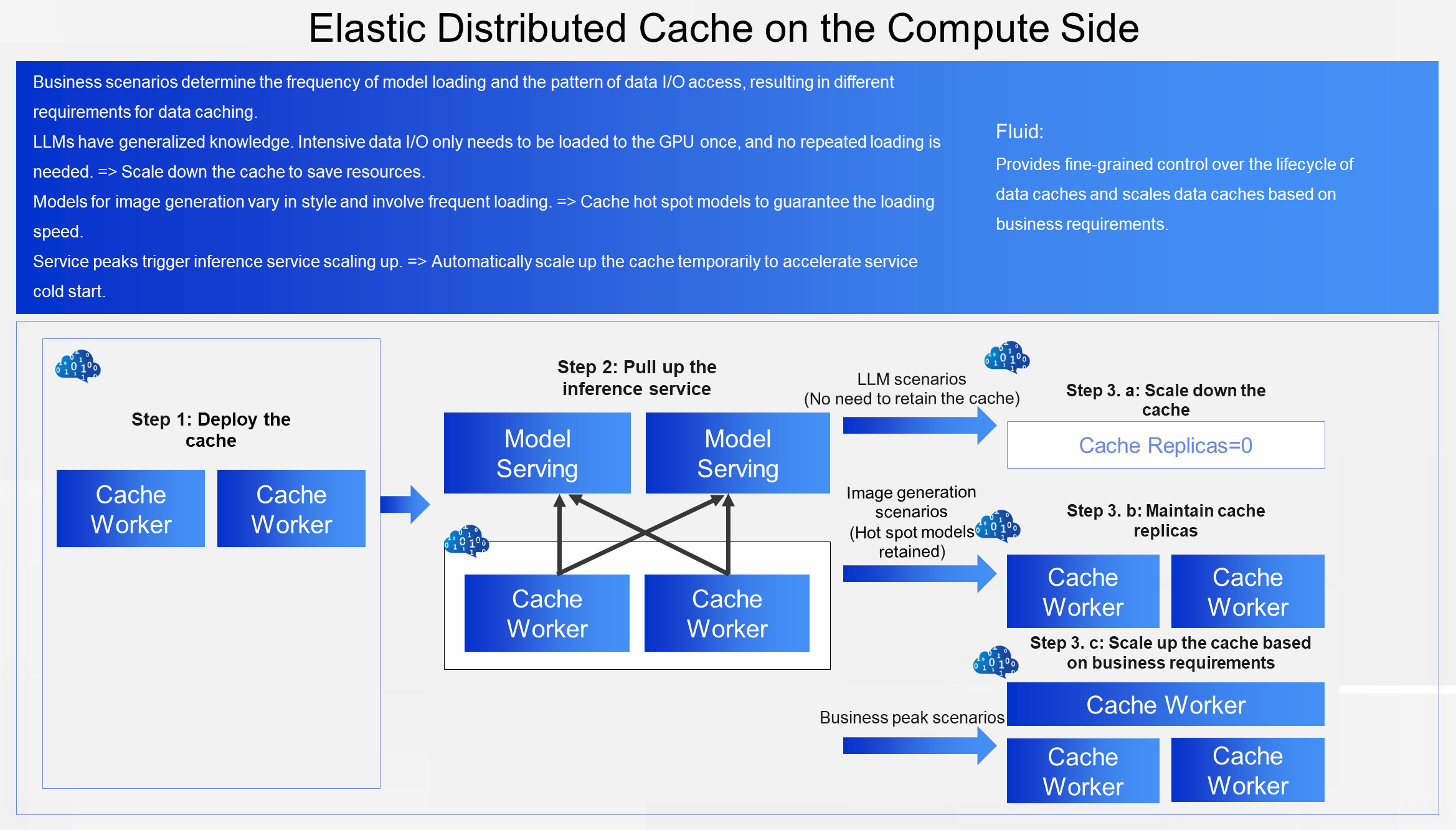

After introducing how to improve performance, the next question to consider is how to maximize the performance of the cache while decreasing the cost as much as possible. How to strike a balance between cost and performance is essentially related to the I/O access mode of the business scenario. The observability of Fluid on the cache, combined with manual scaling or automatic scaling in Kubernetes such as HPA and CronHPA, allows you to scale the data cache based on your business requirements. The following are several specific examples.

In large language model (LLM) scenarios, the models have strong generalized knowledge, so once an LLM is loaded into GPU memory, it can serve a wide range of requests. Such a service therefore needs very high I/O throughput for a short time only. Specifically, the cache scales up before the service starts and scales down to 0 as soon as the inference service is ready.

In image generation scenarios, an SD (Stable Diffusion) model market contains a large number of SD models of different styles. Continuous I/O throughput is therefore required, especially for hot spot models. In this case, keeping a certain number of cache replicas running is a better choice.

In either scenario, if the server needs to scale out due to business peaks, the data cache will follow it for a temporary scale-up to alleviate the cold start problem during the process.
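When the cache is scaled by HPA, the replica count follows the formula documented for the Kubernetes Horizontal Pod Autoscaler: the current replica count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below applies that formula to a hypothetical cache metric, the percentage of cache capacity in use. It ignores HPA's tolerance and stabilization window, and the replica limits are assumed values.

```python
def desired_replicas(current, metric_pct, target_pct, min_replicas=1, max_replicas=10):
    """HPA-style scaling: ceil(current * metric / target), clamped to [min, max].

    Percentages are integers so that the ceiling division is exact.
    """
    desired = -(-(current * metric_pct) // target_pct)  # integer ceiling division
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Cache is 90% full with a 60% target: grow from 2 to 3 workers.
    print(desired_replicas(current=2, metric_pct=90, target_pct=60))
    # Cache is only 20% full: shrink from 4 to 2 workers.
    print(desired_replicas(current=4, metric_pct=20, target_pct=60))
```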

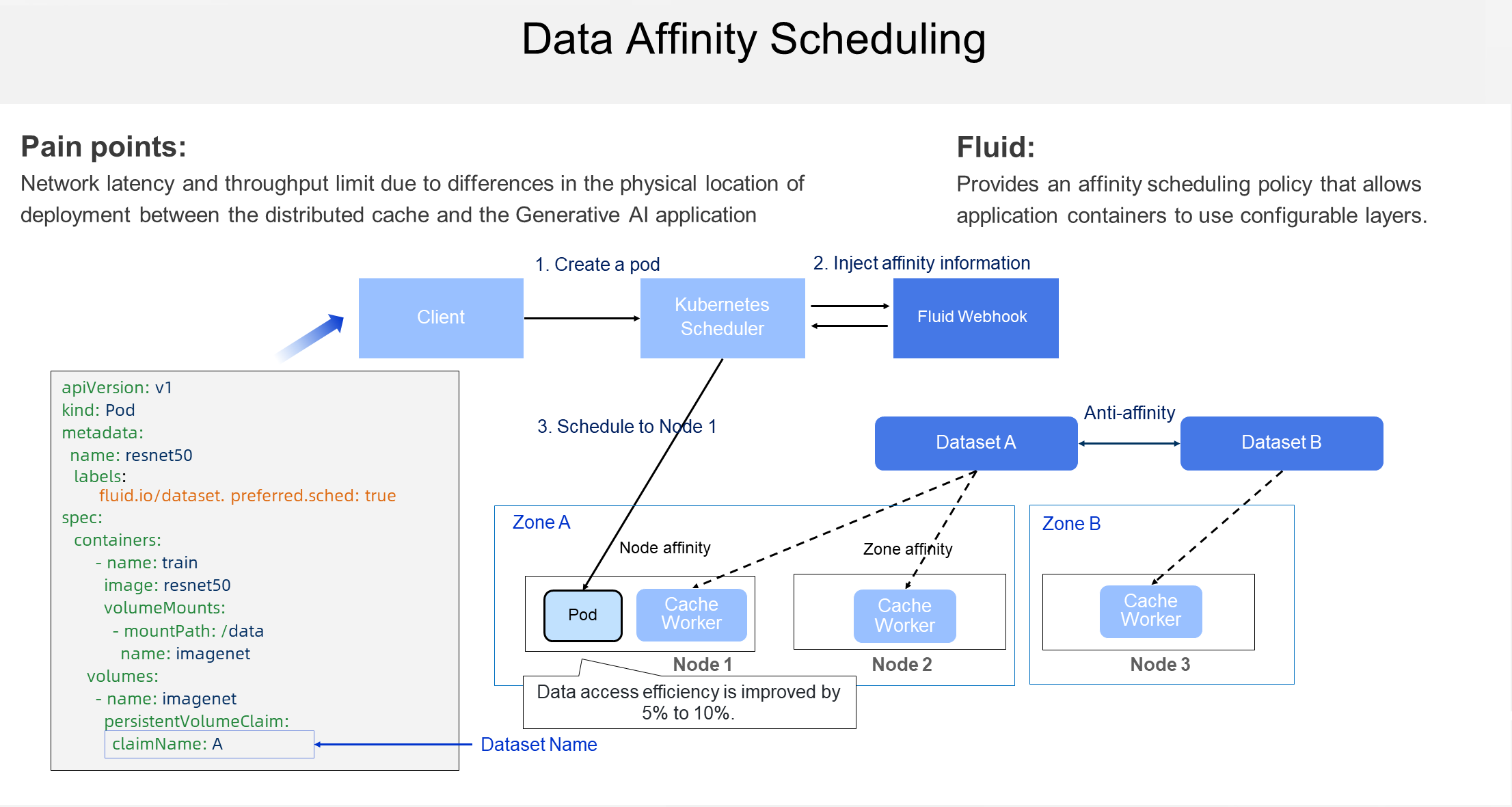

The public cloud provides flexible elasticity and high availability through its underlying multi-zone architecture. Multi-zone deployment is well suited to Internet applications because it trades a little performance for application stability. However, in Generative AI large model scenarios, our verification indicates that cross-zone latency is still a problem: model files are large, so they are transmitted in a large number of packets, which amplifies the latency. Therefore, the affinity between the cache and the application that uses the cache is crucial. Fluid provides non-intrusive affinity scheduling. It schedules applications based on the location of the cache and preferentially places them in the same zone as the cache. It also lets users choose between weak affinity and strong affinity.
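The difference between weak and strong affinity can be illustrated with a toy placement function. This is not Fluid's scheduler, only a sketch of the two policies under the assumption that each node is labeled with its zone: weak affinity prefers a node in a cache zone but falls back to any node, while strong affinity refuses to place the pod elsewhere.

```python
def pick_node(nodes, cache_zones, strong=False):
    """Choose a node for an application pod.

    nodes: list of (node_name, zone) tuples that have free capacity.
    cache_zones: set of zones that host cache workers.
    Returns the chosen node name, or None if the pod must stay pending.
    """
    same_zone = [name for name, zone in nodes if zone in cache_zones]
    if same_zone:
        return same_zone[0]          # both policies prefer the cache's zone
    if strong:
        return None                  # strong affinity: wait rather than cross zones
    return nodes[0][0] if nodes else None  # weak affinity: accept another zone


if __name__ == "__main__":
    nodes = [("node-a", "zone-1"), ("node-b", "zone-2")]
    print(pick_node(nodes, {"zone-2"}))               # node-b
    print(pick_node(nodes, {"zone-3"}))               # node-a (weak fallback)
    print(pick_node(nodes, {"zone-3"}, strong=True))  # None
```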

Now we understand that a flexible caching architecture is necessary and advantageous, but problems still exist in practice.

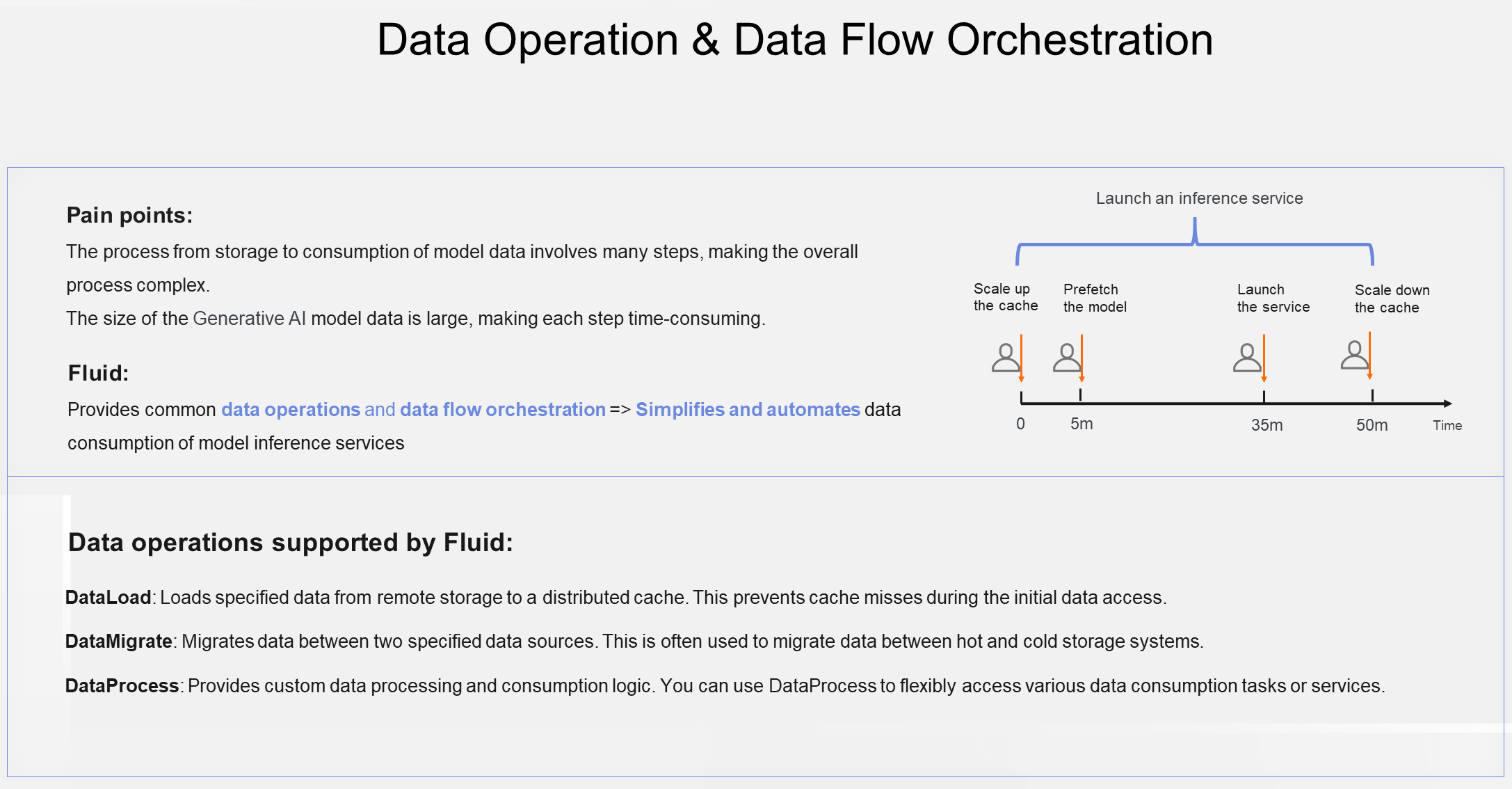

Consider the following process. A new AI model inference service is to be published today. First, you deploy a distributed cache and scale it up to a certain number of replicas to avoid a cold start of the service. Next, you prefetch the model data and write it to the distributed cache to avoid cache misses, which may take 30 minutes. Then, you start 100 service instances, which may take another 10-20 minutes. Finally, after confirming that the service has been published without problems, you scale down the cache to reduce the cost.

In this process, you need to check the status of each step and manually trigger the next one. O&M of data access and consumption processes is therefore complex and laborious.

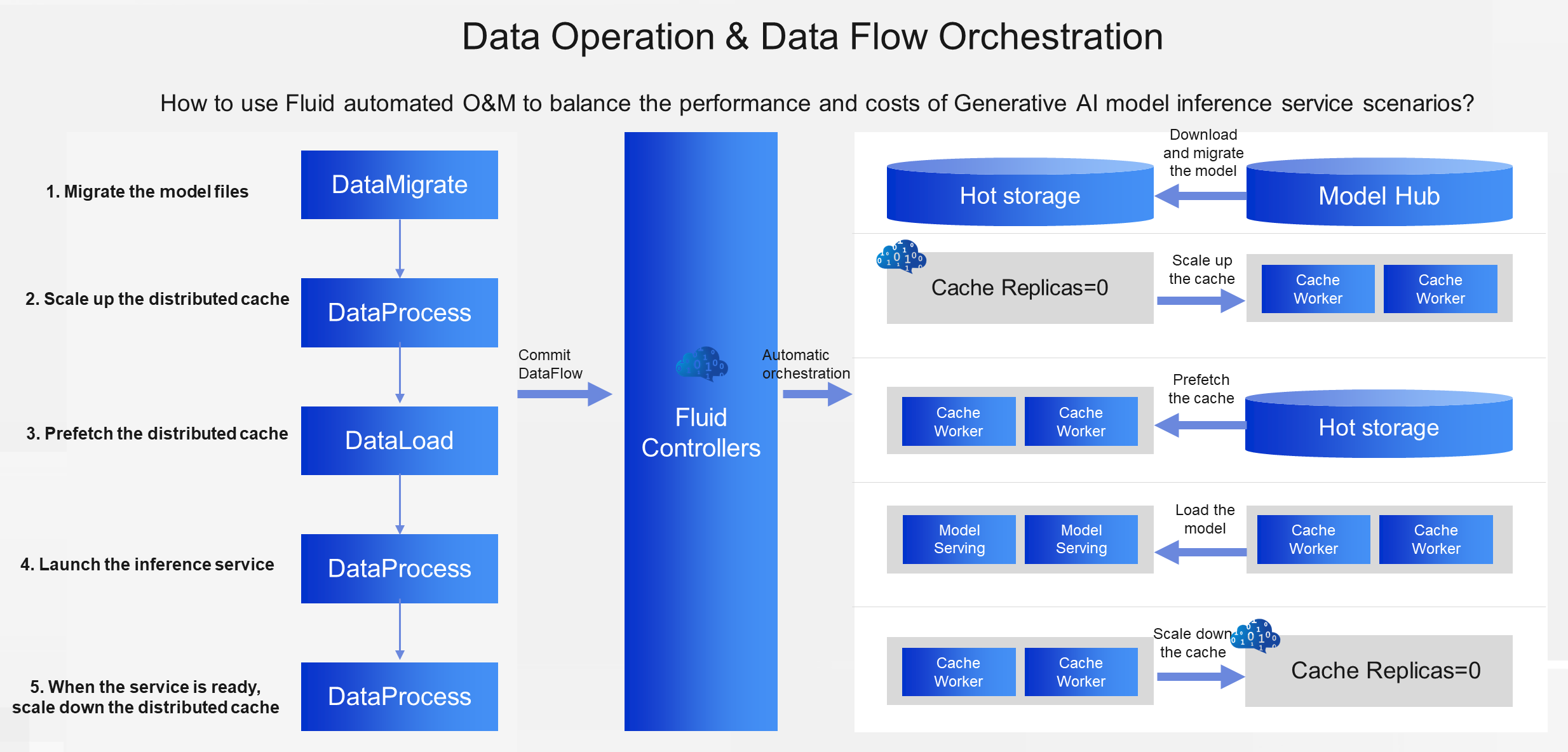

To solve this problem, Fluid defines the data consumption process as two parts: the service using the data cache, and the system preparing the data cache. It automates these processes through data operation abstraction and data flow orchestration. For example, Fluid provides Kubernetes-level abstractions to describe the most common operations related to data caching, such as data migration, data prefetching, and service-related data processing.

These data operations can be chained into a data flow, so the preceding example process can be defined as 5 data operation steps. The O&M personnel only need to submit the data flow once, and Fluid automatically completes the entire process of publishing the AI model inference service. This increases the degree of automation in using the cache.
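The idea of a data flow can be sketched as a small dependency graph in which each operation declares which operation it runs after. The code below is not Fluid's API. The step names are illustrative, since the article does not enumerate the five steps, and the actions are stubs standing in for real operations.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it starts.
FLOW = {
    "scale-up-cache": set(),
    "prefetch-model": {"scale-up-cache"},
    "start-inference-service": {"prefetch-model"},
    "verify-service": {"start-inference-service"},
    "scale-down-cache": {"verify-service"},
}


def run_flow(flow, actions):
    """Run every step once, in dependency order, and return the execution order."""
    executed = []
    for step in TopologicalSorter(flow).static_order():
        actions[step]()          # a real system would wait for completion here
        executed.append(step)
    return executed


if __name__ == "__main__":
    stub_actions = {name: (lambda n=name: print(f"running {n}")) for name in FLOW}
    run_flow(FLOW, stub_actions)
```

Submitting the whole graph once replaces the manual check-and-trigger loop: the operator no longer needs to watch each step before starting the next.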

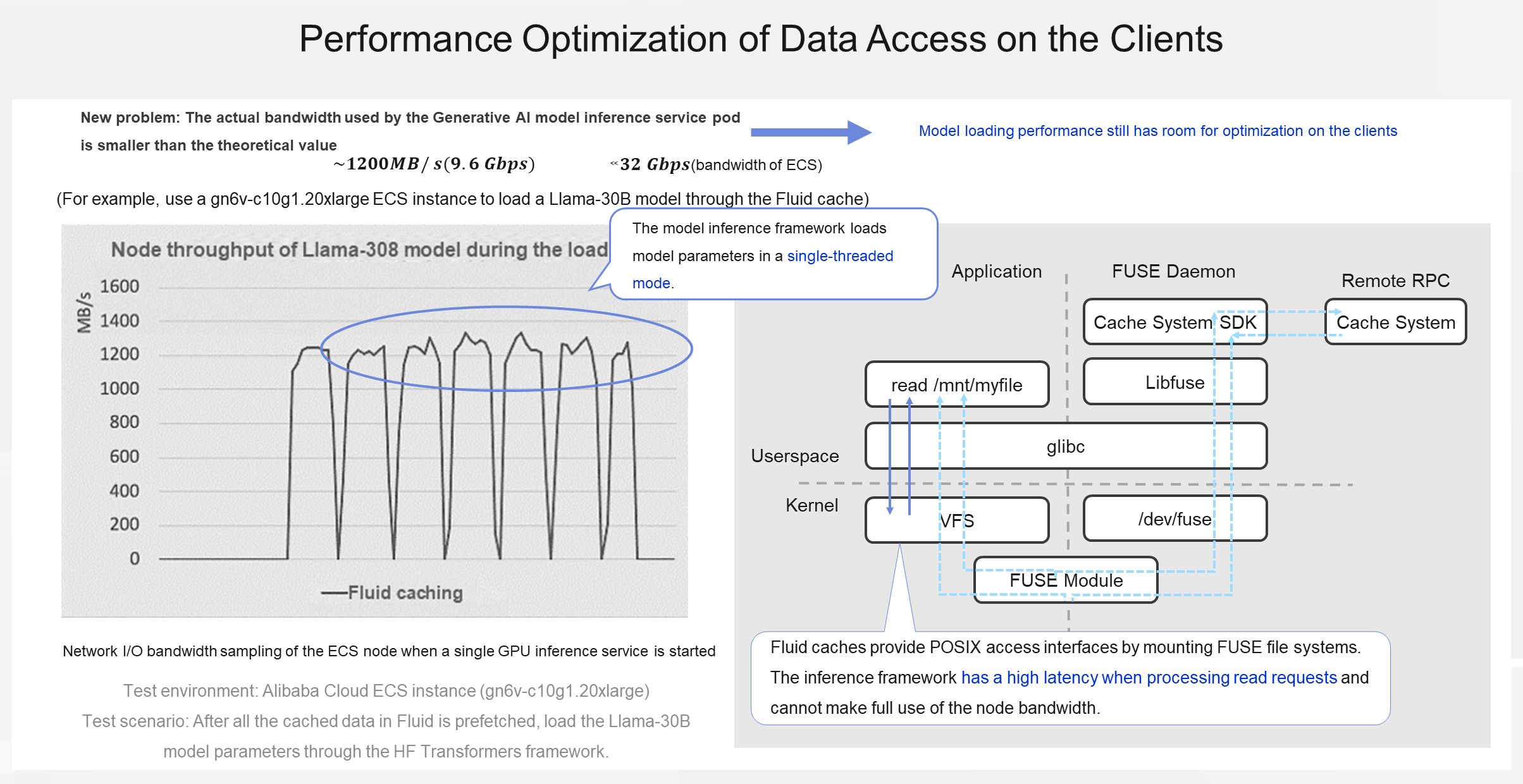

So far, our answer to the question "How to use the cache well" has addressed resource cost and O&M efficiency. However, during the test, we found that the bandwidth used during service startup was much lower than the bandwidth available to these GPU computing instances. This means that the loading efficiency of the model on the client still has room for optimization.

Judging from the node throughput, the AI inference runtime framework reads model parameters with a single thread. This works well in non-container environments: if local SSDs are used to store model parameters, the load throughput can easily reach 3 to 4 GB/s. However, in a compute-storage separation architecture, even if we use a cache, Filesystem in Userspace (FUSE) is required to mount the cache into the container. The FUSE overhead, combined with additional RPC calls, increases the latency of read requests and thus lowers the maximum bandwidth that a single thread can reach.

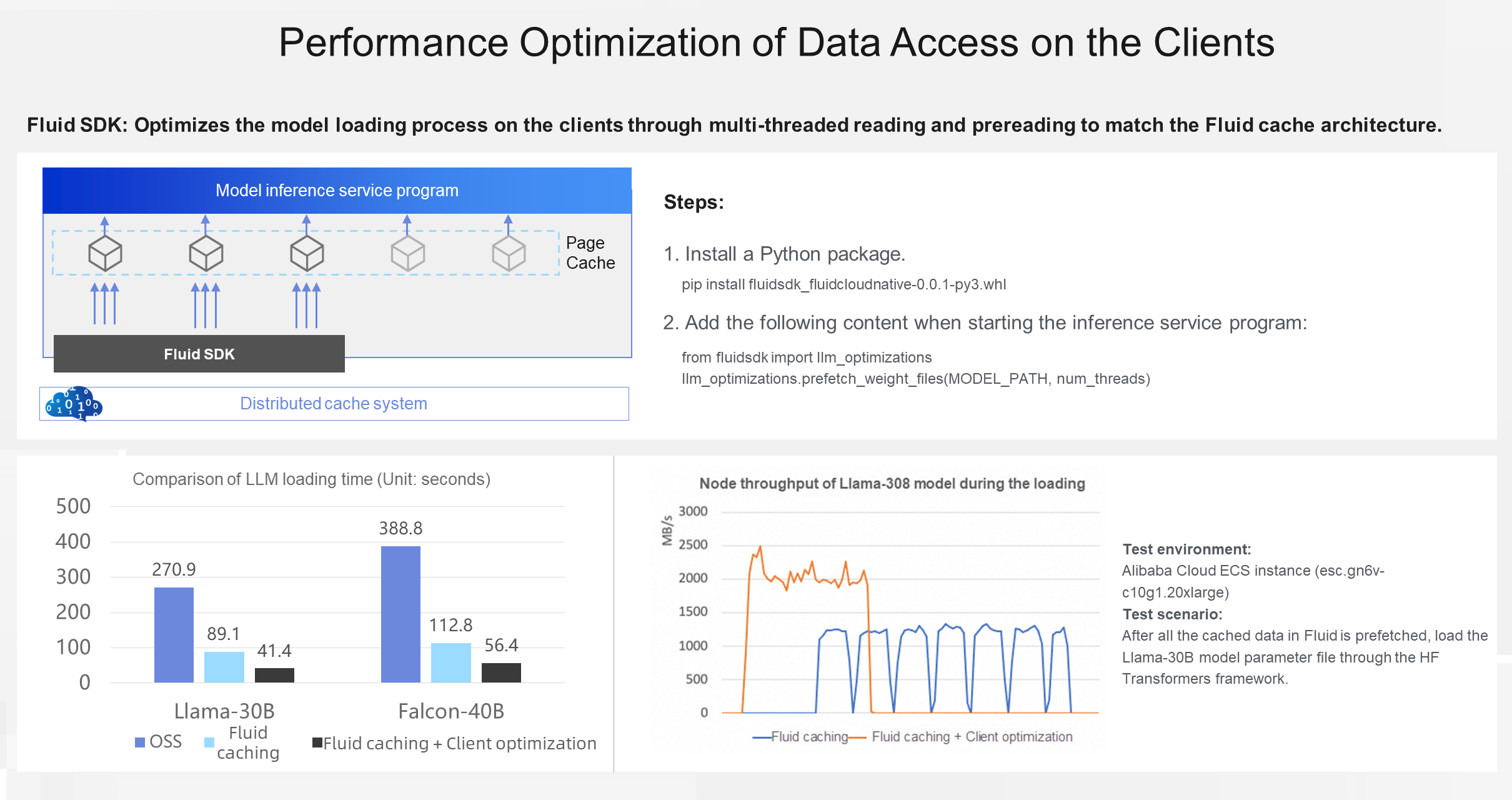

To make full use of the high I/O throughput provided by the distributed cache, Fluid provides an SDK for Python that reads and prereads with parallel threads, thus accelerating the model loading process. Our test results show that, on top of the distributed cache on the compute side, this client-side optimization cut the cold start time in half and made it possible to start a large model of close to 100 GB in 1 minute. The I/O throughput in the lower-right corner of the preceding figure also shows that Fluid fully utilized the bandwidth of the GPU computing nodes.
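The general technique of reading a file with parallel threads can be sketched with the Python standard library alone. This is not the Fluid SDK and does not use its API. It only shows the idea of splitting a file into fixed-size ranges and reading them concurrently so that several requests are in flight at once; the chunk size and worker count are assumed values to tune for your environment.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def read_chunk(path, offset, length):
    """Read one byte range using a file handle private to the calling thread."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def parallel_read(path, chunk_size=64 * 1024 * 1024, workers=8):
    """Read a whole file by fetching fixed-size chunks in parallel threads."""
    size = os.path.getsize(path)
    offsets = range(0, size, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in offset order, so the chunks join correctly.
        chunks = pool.map(lambda off: read_chunk(path, off, chunk_size), offsets)
        return b"".join(chunks)
```

Because file reads release Python's global interpreter lock while waiting on I/O, threads are enough to overlap the per-request latency added by FUSE and RPC calls. Holding the entire file in memory, as this sketch does, is only suitable for illustration.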

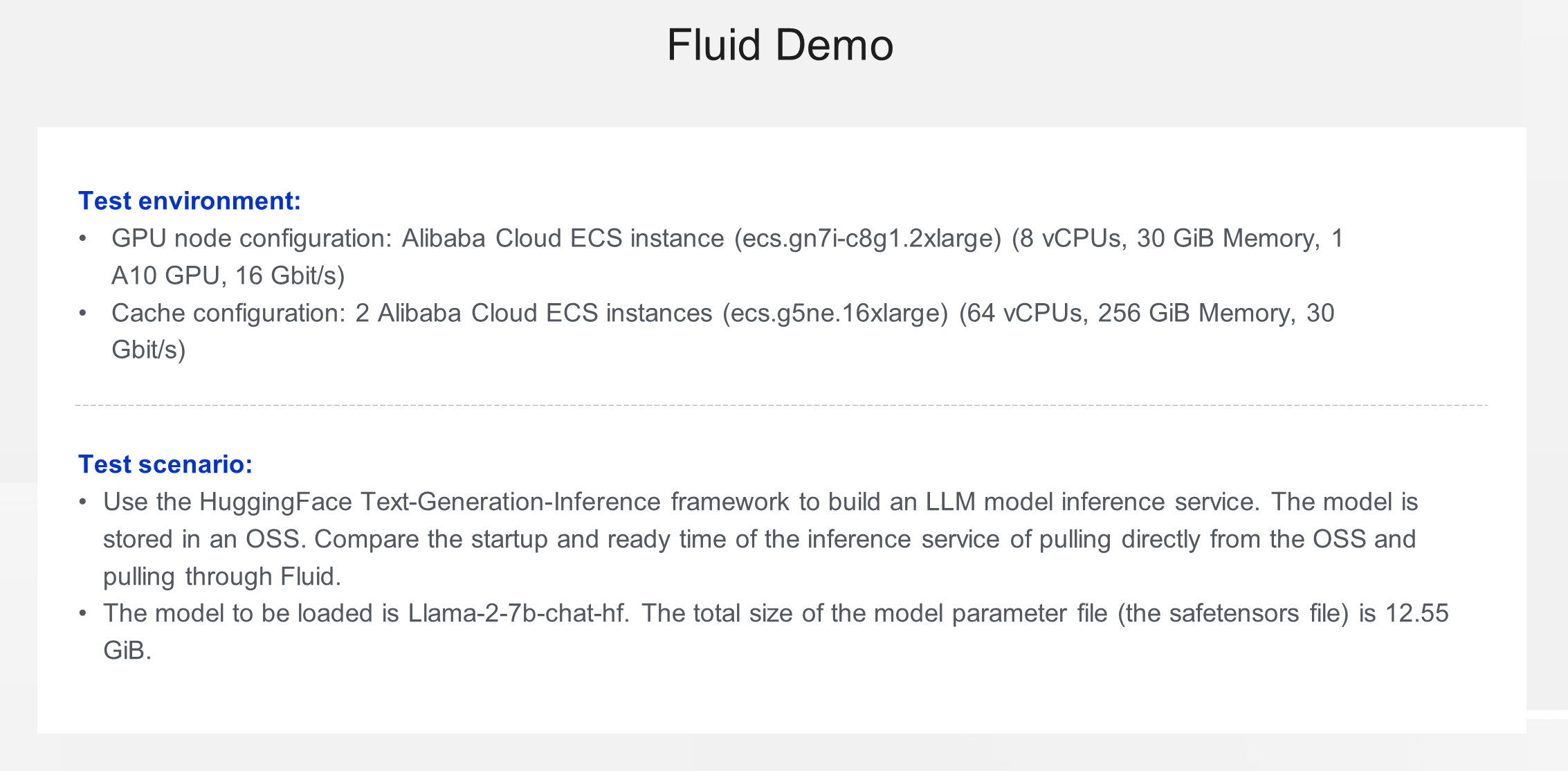

To evaluate the performance of Fluid, we used the Hugging Face Text Generation Inference (TGI) framework to build a large language model (LLM) inference service. We stored the model in OSS and compared the performance of pulling data directly from OSS with that of starting the inference service through Fluid.

Let's first look at the performance of direct access to OSS. Create the PV and PVC for OSS. Next, define a deployment that mounts the PVC into its pod, uses TGI as the container image, and declares a GPU card for model inference. Apply the deployment, and then check how long the service takes to become ready. (In the demo, playback is sped up 5 times.) The service becomes ready after 101 seconds in total. Considering that the model is only 12.55 GB in size, this is rather long.

Now, let's look at the optimization effect of Fluid. First, define the Dataset and Runtime resources of Fluid and deploy the distributed cache to the cluster. These resources specify the data source, the number of cache nodes, and the storage medium and size of the cached data. Because this is the initial deployment of the elastic distributed cache, the process may take about 40 seconds. When the cache is ready, you can view the cache monitoring information. The PVC and PV are also created automatically. Then, make a few modifications to the preceding deployment to define a new one:

• Add an annotation to trigger automatic data prefetching in Fluid.

• Change the PVC of OSS to the PVC automatically created by the Fluid dataset.

• Replace TGI with an image that has client optimization.

Based on the analysis of the service's readiness time, the deployment only took 22 seconds. Additionally, we can attempt to scale up the existing deployment and observe the startup time of the second service instance. Since the required model data was fully cached, the second service instance was ready in just 10 seconds. This example demonstrates the optimization effect of Fluid, successfully increasing the service startup speed by about 10 times.
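The speedup figures can be checked from the three readiness times reported above. The short calculation below uses only those numbers and makes explicit that the roughly 10 times figure corresponds to an instance that starts with the model fully cached.

```python
BASELINE_S = 101      # direct access to OSS
FIRST_FLUID_S = 22    # first instance with Fluid and prefetching
SECOND_FLUID_S = 10   # second instance, model data fully cached


def speedup(baseline_s, optimized_s):
    """How many times faster the optimized startup is than the baseline."""
    return baseline_s / optimized_s


if __name__ == "__main__":
    print(f"first instance:  {speedup(BASELINE_S, FIRST_FLUID_S):.1f}x")
    print(f"second instance: {speedup(BASELINE_S, SECOND_FLUID_S):.1f}x")
```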

In summary, Fluid offers a ready-to-use and optimized built-in solution for the elastic acceleration of Generative AI models. It provides improved performance at a reduced cost and supports end-to-end automation. Furthermore, with the Fluid SDK, it is possible to further leverage the bandwidth capabilities of GPU instances to achieve an excellent acceleration effect.
