By Yu Zhuang

In the previous article, Enhancing Self-created Kubernetes with Cloud Elasticity to Cope with Traffic Bursts, we discussed a method for adding cloud nodes to Kubernetes clusters in IDCs to handle business traffic growth. This method allows for flexible utilization of cloud resources through multi-level elastic scheduling and utilizes auto scaling to improve efficiency and reduce cloud costs.

However, this approach requires managing the node pool on the cloud yourself, which may not be suitable for everyone. As an alternative, you can consider using Elastic Container Instances (ECI) in serverless mode to run business pods to improve the efficiency of using CPU and GPU resources on the cloud.

The use of CPU and GPU resources in serverless mode on the cloud helps address the insufficient elasticity of IDC Kubernetes clusters, which may struggle to meet the requirements of rapid business growth, periodic business growth, and traffic bursts.

In serverless mode, you submit business pods directly to the Kubernetes cluster. The pods run on ECIs, which start quickly and live exactly as long as the pods they host. Billing is pay-as-you-go, so there is no need to create cloud nodes for the Kubernetes cluster in the IDC, plan cloud resource capacity, or wait for ECS instances to be created. This approach provides high elasticity and reduces node operation and maintenance costs.

Using CPU and GPU resources in serverless mode on IDC Kubernetes clusters is suitable for the following business scenarios:

• Online businesses that require auto scaling to handle traffic fluctuations, such as online education and e-commerce. By using Serverless ECIs, you can significantly reduce the maintenance of fixed resource pools and computing costs.

• Data computing: When Serverless ECI hosts Spark, Presto, and Argo Workflows workloads, you are charged based on the uptime of the pods, which reduces computing costs.

• Continuous integration and continuous delivery (CI/CD) pipelines, such as Jenkins and GitLab Runner.

• Jobs: Jobs in AI computing scenarios and CronJobs.

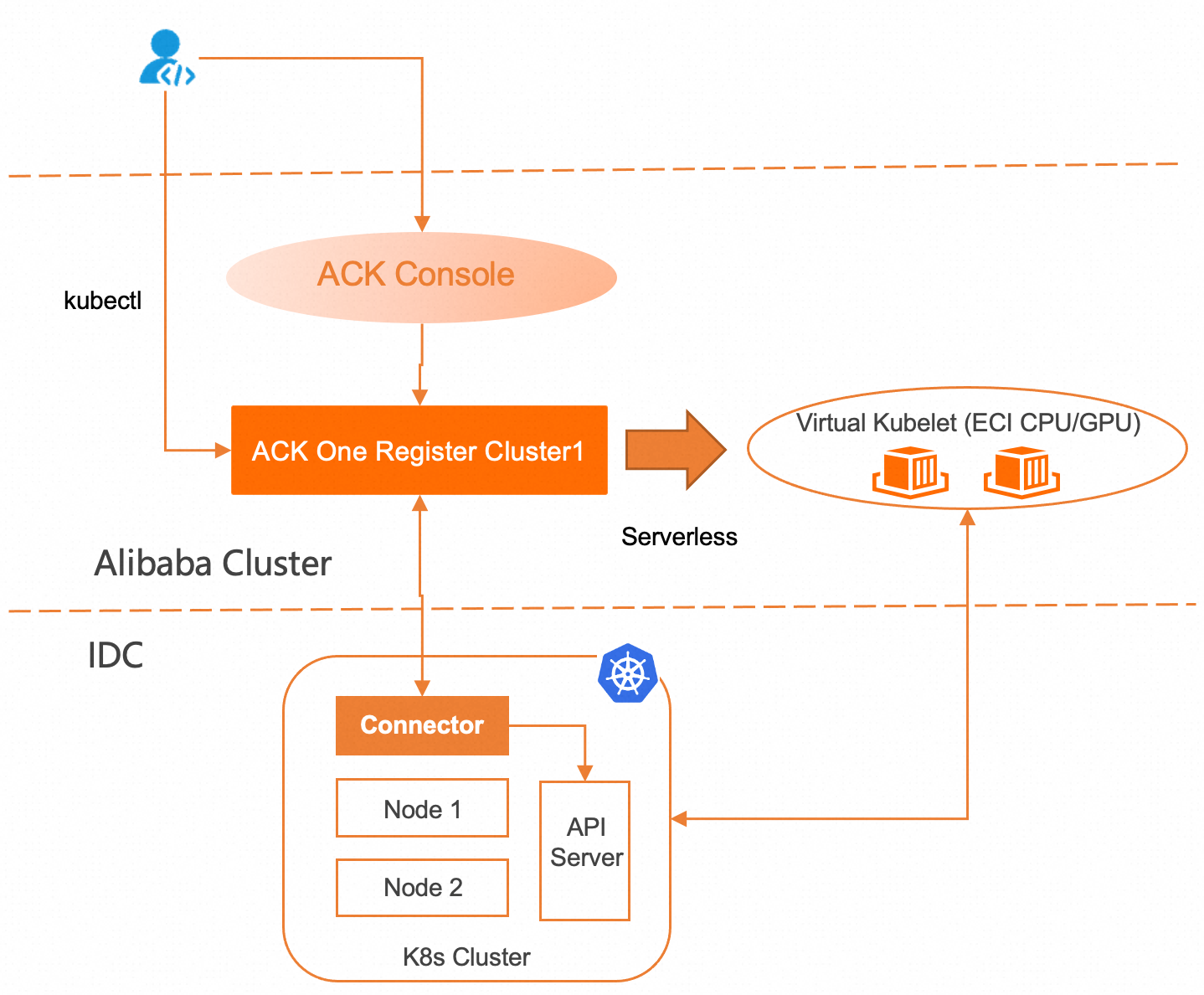

The Kubernetes cluster has been connected to the ACK One console through the ACK One registered cluster. For more information, see Simplifying Kubernetes Multi-cluster Management with the Right Approach.

Install the ack-virtual-node component in the ACK One registered cluster console. After the component is installed, view the cluster node pool through the registered cluster kubeconfig. virtual-kubelet is a virtual node that is connected to Alibaba Cloud Serverless ECIs.

    kubectl get node
    NAME                               STATUS   ROLES    AGE    VERSION
    iz8vb1xtnuu0ne6b58hvx0z            Ready    master   4d3h   v1.20.9
    virtual-kubelet-cn-zhangjiakou-a   Ready    agent    99s    v1.20.9

In this output, iz8vb1xtnuu0ne6b58hvx0z is the IDC cluster node. In this example, there is only one master node, which is also a worker node and can run business containers. virtual-kubelet-cn-zhangjiakou-a is the virtual node created by installing the ack-virtual-node component.

Method 1: Add a label to the pods

Add the label alibabacloud.com/eci=true to the pods. The pods will then run in Serverless ECI mode. In this example, a GPU-accelerated ECI is used to run a CUDA task. You do not need to install or configure the NVIDIA driver and runtime.

a) Submit the pods and use Serverless ECIs to run them.

    > cat <<EOF | kubectl apply -f -
    apiVersion: v1
    kind: Pod
    metadata:
      name: gpu-pod
      labels:
        alibabacloud.com/eci: "true"  # Run this pod on Serverless ECI.
      annotations:
        k8s.aliyun.com/eci-use-specs: ecs.gn5-c4g1.xlarge  # A supported GPU instance type with one NVIDIA P100 GPU.
    spec:
      restartPolicy: Never
      containers:
      - name: cuda-container
        image: acr-multiple-clusters-registry.cn-hangzhou.cr.aliyuncs.com/ack-multiple-clusters/cuda10.2-vectoradd
        resources:
          limits:
            nvidia.com/gpu: 1  # Request one GPU.
    EOF

b) View the pod. It is scheduled to the virtual node virtual-kubelet-cn-zhangjiakou-a, and a Serverless ECI actually runs it in the backend.

    > kubectl get pod -o wide
    NAME      READY   STATUS      RESTARTS   AGE     IP              NODE                               NOMINATED NODE   READINESS GATES
    gpu-pod   0/1     Completed   0          5m30s   172.16.217.90   virtual-kubelet-cn-zhangjiakou-a   <none>           <none>
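
When a namespace contains many pods, it can help to list only those placed on a virtual node. The following is a minimal sketch and not part of the original walkthrough: the helper name pods_on_virtual_nodes is hypothetical, and it assumes that virtual node names start with virtual-kubelet, as they do in the example output.

```shell
# Hypothetical helper: print the names of pods whose node name starts
# with "virtual-kubelet". Expects two whitespace-separated columns on
# stdin: pod name, then node name.
pods_on_virtual_nodes() {
  awk '$2 ~ /^virtual-kubelet/ { print $1 }'
}

# Example usage against a live cluster (requires kubectl and a kubeconfig):
#   kubectl get pod --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName \
#     | pods_on_virtual_nodes
```

Reading the node name through custom-columns rather than parsing the wide output keeps the filter independent of how many columns kubectl prints.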

    > kubectl logs gpu-pod
    Using CUDA Device [0]: Tesla P100-PCIE-16GB
    GPU Device has SM 6.0 compute capability
    [Vector addition of 50000 elements]
    Copy input data from the host memory to the CUDA device
    CUDA kernel launch with 196 blocks of 256 threads
    Copy output data from the CUDA device to the host memory
    Test PASSED
    Done

Method 2: Set a label for a namespace

Set the label alibabacloud.com/eci=true on a namespace. All new pods in the namespace will run in Serverless ECI mode.

    kubectl label namespace <namespace-name> alibabacloud.com/eci=true

In the previous demo, we ran pods on Serverless ECIs by setting labels on pods or namespaces. Alternatively, you can have an application use the resources of nodes in the IDC first and fall back to Serverless ECIs only when the IDC resources are insufficient. To achieve this, use the multi-level elastic scheduling feature of ACK One registered clusters. After installing the ack-co-scheduler component, you can define ResourcePolicy CR objects to enable multi-level elastic scheduling.

ResourcePolicy CR is a namespaced resource with the following important parameters:

units: the schedulable units. During scale-out, pods are scheduled to the units in the order in which they are listed under units, from highest priority to lowest. During scale-in, pods are deleted in the reverse order, from lowest priority to highest.
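
The scale-out ordering can be illustrated with a toy calculation. This sketch is not how ack-co-scheduler is implemented. It only models the ordering described above for two units, idc first and then eci, and it treats eci capacity as unlimited. The function name split_replicas and the slot counts passed to it are assumptions chosen for illustration.

```shell
# Toy model of scale-out ordering across two units (idc first, then eci).
# Usage: split_replicas REPLICAS IDC_SLOTS
#   REPLICAS  - desired number of pods
#   IDC_SLOTS - how many of those pods fit on IDC nodes (assumed known)
# Prints "idc=<n> eci=<m>".
split_replicas() {
  replicas=$1
  idc_slots=$2
  if [ "$replicas" -le "$idc_slots" ]; then
    on_idc=$replicas          # everything fits in the IDC
  else
    on_idc=$idc_slots         # IDC is full, the rest overflows to ECI
  fi
  echo "idc=${on_idc} eci=$((replicas - on_idc))"
}

split_replicas 4 2   # prints: idc=2 eci=2
split_replicas 1 2   # prints: idc=1 eci=0
```

In the same toy model, scaling from four replicas down to three gives idc=2 eci=1, which matches the reverse-order rule: the pod on the lower-priority eci unit is removed first.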

The steps are as follows:

1) Define ResourcePolicy CR to preferentially use cluster resources in the IDC before using Serverless ECI resources on the cloud.

    > cat << EOF | kubectl apply -f -
    apiVersion: scheduling.alibabacloud.com/v1alpha1
    kind: ResourcePolicy
    metadata:
      name: cost-balance-policy
    spec:
      selector:
        app: nginx  # Select the application pods.
      strategy: prefer
      units:
      - resource: idc  # Prioritize the node resources in the IDC.
      - resource: eci  # Use Serverless ECI resources when the IDC node resources are insufficient.
    EOF

2) Create an application deployment and start two replicas. Each replica requires two CPUs.

    > cat << EOF | kubectl apply -f -
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          name: nginx
          annotations:
            addannotion: "true"
          labels:
            app: nginx  # The pod label must match the selector in the ResourcePolicy.
        spec:
          schedulerName: ack-co-scheduler
          containers:
          - name: nginx
            image: acr-multiple-clusters-registry.cn-hangzhou.cr.aliyuncs.com/ack-multiple-clusters/nginx
            resources:
              requests:
                cpu: 2
              limits:
                cpu: 2
    EOF

3) Run the following command to scale the application to four replicas. The Kubernetes cluster in the IDC has only one 6-CPU node. Because some CPU is reserved for system components, the node can run at most two nginx pods, not three. The remaining two replicas do not fit on the IDC node, so Serverless ECI is automatically used to run them.

    kubectl scale deployment nginx --replicas 4

4) View the running status of pods. Two pods run on nodes in the IDC, and two pods run on Alibaba Cloud Serverless ECI using virtual nodes.

    > kubectl get pod -o wide
    NAME                     READY   STATUS    RESTARTS   AGE   IP             NODE
    nginx-79cd98b4b5-97s47   1/1     Running   0          84s   10.100.75.22   iz8vb1xtnuu0ne6b58hvx0z
    nginx-79cd98b4b5-gxd8z   1/1     Running   0          84s   10.100.75.23   iz8vb1xtnuu0ne6b58hvx0z
    nginx-79cd98b4b5-k55rb   1/1     Running   0          58s   10.100.75.24   virtual-kubelet-cn-zhangjiakou-a
    nginx-79cd98b4b5-m9jxm   1/1     Running   0          58s   10.100.75.25   virtual-kubelet-cn-zhangjiakou-a

This article described how Kubernetes clusters in IDCs can use Alibaba Cloud CPU and GPU computing resources in Serverless ECI mode through ACK One registered clusters to handle business traffic growth. The approach is fully serverless, so no additional cloud nodes need to be operated or maintained.

