By Hang Yin

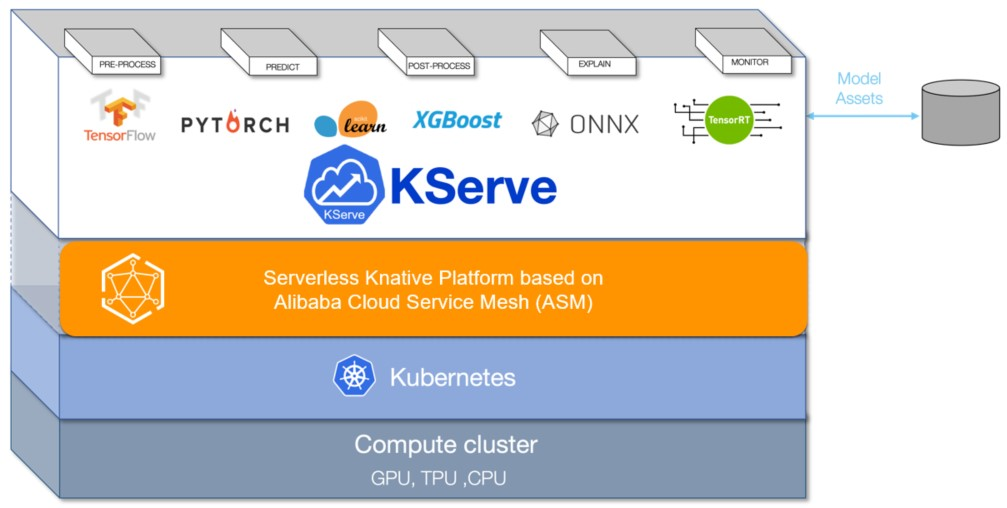

KServe (formerly KFServing) is a model server and inference engine for cloud-native environments. It supports autoscaling, scale-to-zero, and canary deployments, laying the foundation for serving machine learning and deep learning models at scale in cloud-native environments. By integrating with multiple model-serving runtimes, such as MLServer, TensorFlow Serving, Triton, and TorchServe, KServe can run models built with frameworks such as PyTorch, TensorFlow, and XGBoost and expose them as inference services through a unified API.

Building on its integration with Knative Serving, Alibaba Cloud Service Mesh (ASM) lets you enable KServe on ASM with one click, which provides serverless deployment of AI model inference services. With KServe integrated through ASM, developers can quickly deploy and manage model inference services in cloud-native applications, which reduces manual configuration and maintenance work and improves development efficiency.

TensorRT-LLM, open-sourced by NVIDIA, is an easy-to-use Python API for defining large language models (LLMs) and building TensorRT engines that contain state-of-the-art optimizations for efficient inference on NVIDIA GPUs. TensorRT-LLM also provides a backend for the Triton inference server, which allows optimized LLM inference services to run on KServe. This article uses KServe on ASM as an example to show how to build a TensorRT-LLM-optimized LLM inference service based on KServe.

Prepare the environment as follows.

(1) In this example, the AI model inference service runs in serverless form on an ACK Serverless cluster, so you must first create an ACK Serverless cluster. For more information, see Create an ACK Serverless cluster.

(2) Log on to the ASM console and create a service mesh instance. When you create the instance, select the cluster created in step (1) for the Kubernetes cluster parameter so that the cluster is automatically added to the ASM instance after the instance is created.

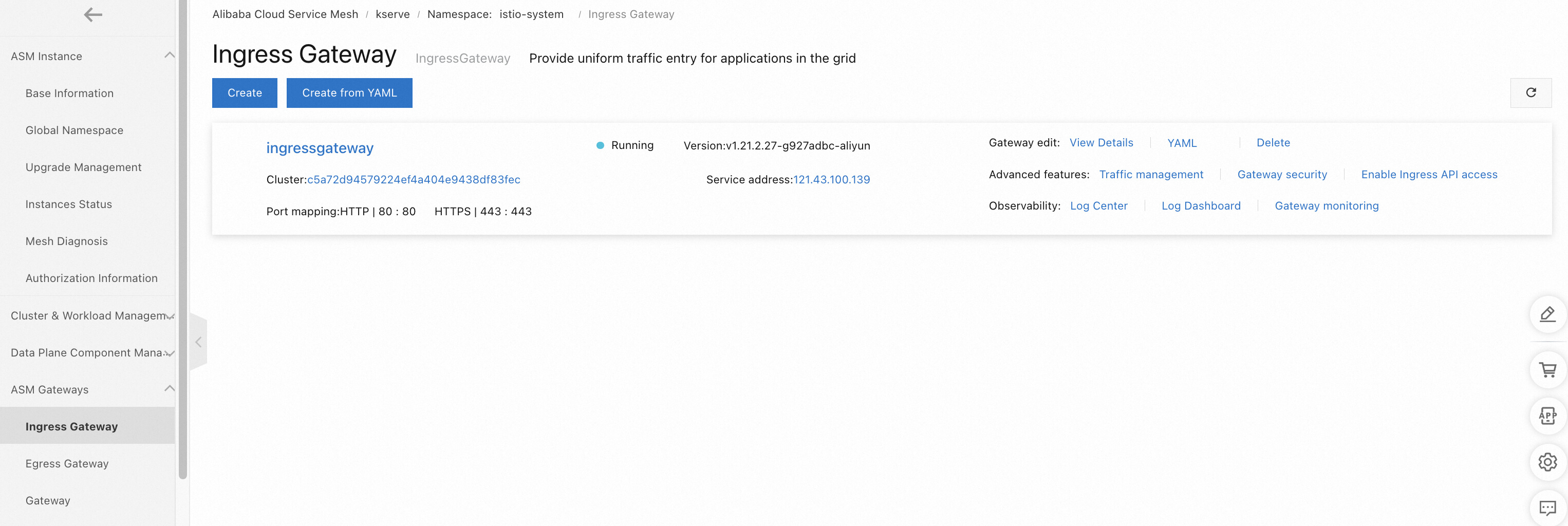

(3) On the instance detail page of the ASM console, create an ASM ingress gateway with the default name and configuration.

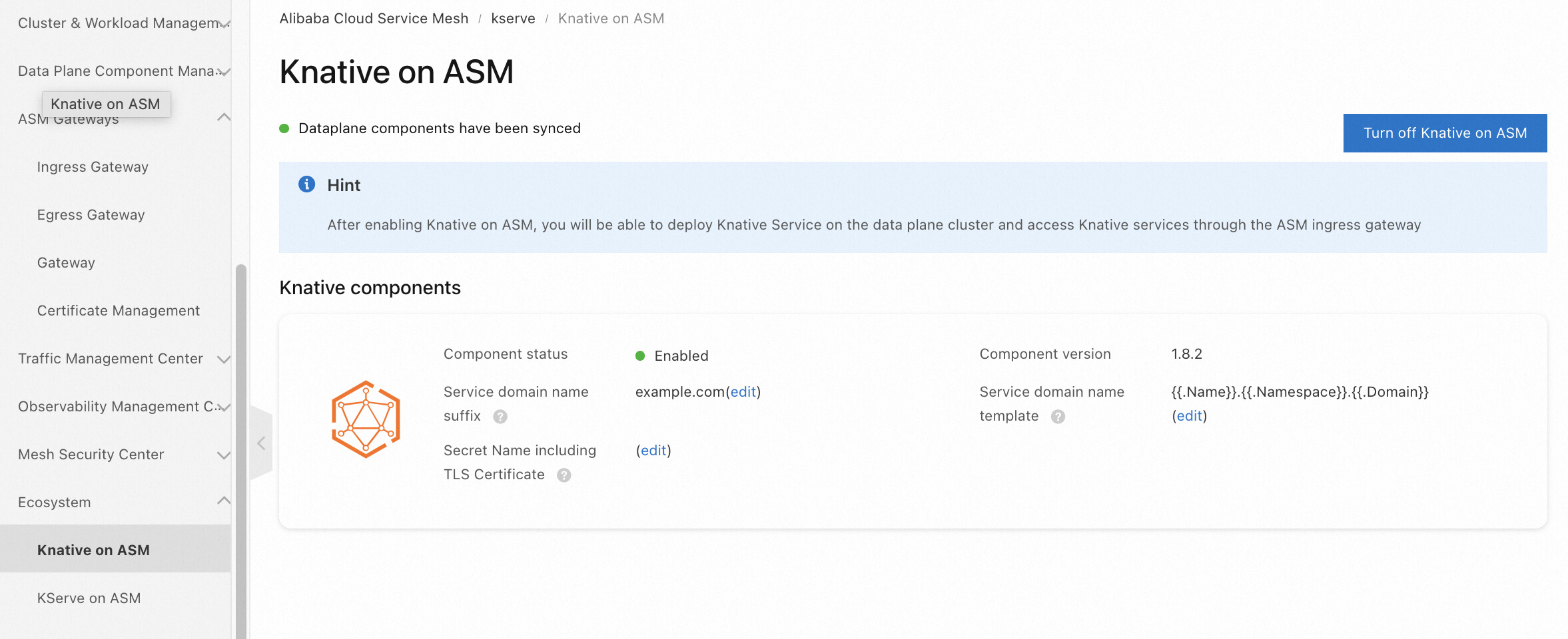

(4) On the instance detail page of the ASM console, choose Ecosystem > Knative on ASM in the left-side navigation panel to enable Knative components.

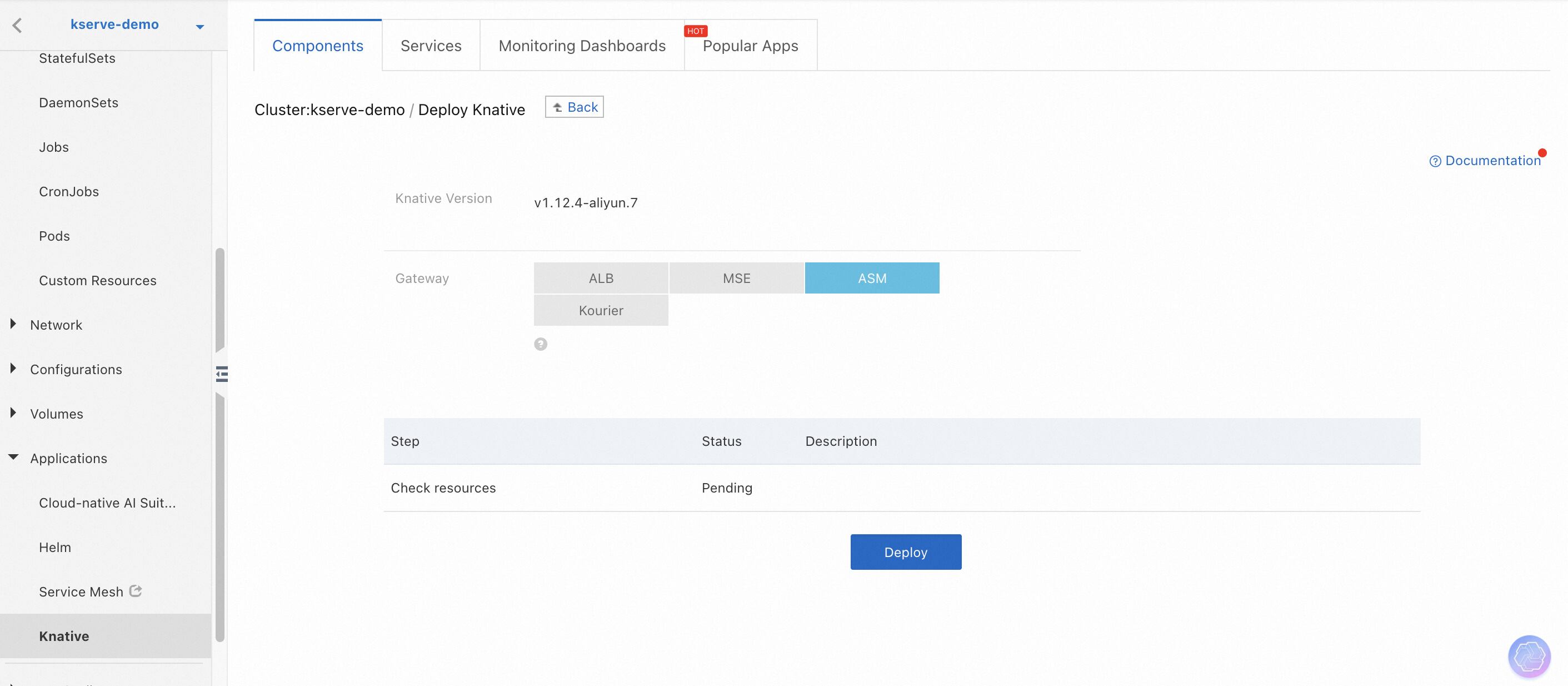

(5) On the Knative page of the ACK cluster console, select ASM as the gateway and deploy Knative with one click.

(6) On the instance detail page of the ASM console, choose Ecosystem > KServe on ASM to enable KServe on ASM, and configure the parameters as shown in the following figure. If cert-manager is already installed in the cluster, you do not need to select the last option, which installs cert-manager. Because this example does not use ModelMesh, you also do not need to turn on Install Model Service Mesh.
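If you are not sure whether cert-manager is already installed, you can check for its CRD before deciding on that option. The following is a minimal sketch, assuming kubectl is configured to access the ACK Serverless cluster; `has_cert_manager` and the `KUBECTL` override variable are hypothetical names introduced here, not part of ASM or KServe.

```shell
#!/bin/sh
# Sketch: detect an existing cert-manager installation by looking for the
# certificates.cert-manager.io CRD that cert-manager registers.
# KUBECTL is a hypothetical override (handy for testing); it defaults to kubectl.
# A non-zero result can also mean that kubectl could not reach the cluster.
has_cert_manager() {
  ${KUBECTL:-kubectl} get crd certificates.cert-manager.io >/dev/null 2>&1
}
```

For example, `has_cert_manager && echo "cert-manager found"` prints the message only when the CRD exists, in which case the cert-manager option can stay unselected.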

For more information about these operations, see Integrate the cloud-native inference service KServe with ASM. After you complete the preceding steps, KServe and the environment it depends on are deployed, and you can start using KServe's capabilities in the Kubernetes cluster.
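To confirm that the preceding steps took effect, you can check that the Knative Serving and KServe CRDs this walkthrough relies on are registered in the cluster. This is a sketch, assuming kubectl targets the ACK Serverless cluster; `check_crds`, `REQUIRED_CRDS`, and the `KUBECTL` override are hypothetical names.

```shell
#!/bin/sh
# Sketch: verify that the CRDs used by KServe's serverless mode exist.
# KUBECTL is a hypothetical override (handy for testing); it defaults to kubectl.
REQUIRED_CRDS="services.serving.knative.dev inferenceservices.serving.kserve.io clusterservingruntimes.serving.kserve.io"

check_crds() {
  missing=""
  for crd in $REQUIRED_CRDS; do
    # Collect every CRD that kubectl cannot find instead of stopping at the first.
    if ! ${KUBECTL:-kubectl} get crd "$crd" >/dev/null 2>&1; then
      missing="$missing $crd"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing CRDs:$missing"
    return 1
  fi
  echo "all required CRDs found"
}
```

Running `check_crds` prints either a confirmation or the list of missing CRDs and returns a non-zero status in the latter case, so it can also gate a larger setup script.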

After the environment is ready, prepare the model and its compilation script.

(1) Download the Llama-2-7b-hf model from ModelScope.

```shell
# Check whether the git-lfs plug-in is installed.
# If it is not, install it with yum install git-lfs or apt install git-lfs.
git lfs install

# Clone the Llama-2-7b-hf repository without downloading the large files yet.
GIT_LFS_SKIP_SMUDGE=1 git clone https://www.modelscope.cn/shakechen/Llama-2-7b-hf.git

# Download the model files.
cd Llama-2-7b-hf/
git lfs pull
```

(2) Prepare the model compilation script.

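Create a file named trtllm-llama-2-7b.sh with the following content. The inference service container runs this script at startup: it clones tensorrtllm_backend, converts the Hugging Face checkpoint, builds the TensorRT-LLM engine, fills in the Triton model configuration templates, and starts the Triton inference server.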

```shell
#!/bin/sh
set -e

MODEL_MOUNT_PATH=/mnt/models
OUTPUT_DIR=/root/trt-llm
TRT_BACKEND_DIR=/root/tensorrtllm_backend

# Clone tensorrtllm_backend (skipped if it is already present).
echo "clone tensorrtllm_backend..."
if [ -d "$TRT_BACKEND_DIR" ]; then
  echo "directory $TRT_BACKEND_DIR exists, skip clone tensorrtllm_backend"
else
  cd /root
  git clone -b v0.9.0 https://github.com/triton-inference-server/tensorrtllm_backend.git
  cd "$TRT_BACKEND_DIR"
  git submodule update --init --recursive
  git lfs install
  git lfs pull
fi

# Convert the Hugging Face checkpoint to the TensorRT-LLM checkpoint format.
if [ -d "$OUTPUT_DIR/llama-2-7b-ckpt" ]; then
  echo "directory $OUTPUT_DIR/llama-2-7b-ckpt exists, skip convert checkpoint"
else
  echo "convert checkpoint..."
  python3 "$TRT_BACKEND_DIR/tensorrt_llm/examples/llama/convert_checkpoint.py" \
    --model_dir "$MODEL_MOUNT_PATH/Llama-2-7b-hf" \
    --output_dir "$OUTPUT_DIR/llama-2-7b-ckpt" \
    --dtype float16
fi

# Build the TensorRT-LLM engine.
if [ -d "$OUTPUT_DIR/llama-2-7b-engine" ]; then
  echo "directory $OUTPUT_DIR/llama-2-7b-engine exists, skip build trtllm engine"
else
  echo "build trtllm engine..."
  trtllm-build --checkpoint_dir "$OUTPUT_DIR/llama-2-7b-ckpt" \
    --remove_input_padding enable \
    --gpt_attention_plugin float16 \
    --context_fmha enable \
    --gemm_plugin float16 \
    --output_dir "$OUTPUT_DIR/llama-2-7b-engine" \
    --paged_kv_cache enable \
    --max_batch_size 8
fi

# Create the Triton model repository from the inflight batcher templates.
echo "config model..."
cd "$TRT_BACKEND_DIR"
cp -r all_models/inflight_batcher_llm/ llama_ifb
export HF_LLAMA_MODEL=$MODEL_MOUNT_PATH/Llama-2-7b-hf
export ENGINE_PATH=$OUTPUT_DIR/llama-2-7b-engine
python3 tools/fill_template.py -i llama_ifb/preprocessing/config.pbtxt tokenizer_dir:${HF_LLAMA_MODEL},triton_max_batch_size:8,preprocessing_instance_count:1
python3 tools/fill_template.py -i llama_ifb/postprocessing/config.pbtxt tokenizer_dir:${HF_LLAMA_MODEL},triton_max_batch_size:8,postprocessing_instance_count:1
python3 tools/fill_template.py -i llama_ifb/tensorrt_llm_bls/config.pbtxt triton_max_batch_size:8,decoupled_mode:False,bls_instance_count:1,accumulate_tokens:False
python3 tools/fill_template.py -i llama_ifb/ensemble/config.pbtxt triton_max_batch_size:8
python3 tools/fill_template.py -i llama_ifb/tensorrt_llm/config.pbtxt triton_backend:tensorrtllm,triton_max_batch_size:8,decoupled_mode:False,max_beam_width:1,engine_dir:${ENGINE_PATH},max_tokens_in_paged_kv_cache:1280,max_attention_window_size:1280,kv_cache_free_gpu_mem_fraction:0.5,exclude_input_in_output:True,enable_kv_cache_reuse:False,batching_strategy:inflight_fused_batching,max_queue_delay_microseconds:0

# Start the Triton inference server; the HTTP, gRPC, and metrics ports
# match those configured in the ClusterServingRuntime.
echo "run server..."
pip install SentencePiece
tritonserver --model-repository=$TRT_BACKEND_DIR/llama_ifb --http-port=8080 --grpc-port=9000 --metrics-port=8002 --disable-auto-complete-config --backend-config=python,shm-region-prefix-name=prefix0_
```

(3) Upload the model and the script to OSS.

```shell
# Run these commands from the directory that contains Llama-2-7b-hf.
# Create a directory.
ossutil mkdir oss://<your-bucket-name>/Llama-2-7b-hf

# Upload the model files.
ossutil cp -r ./Llama-2-7b-hf oss://<your-bucket-name>/Llama-2-7b-hf

# Make the compilation script executable and upload it.
chmod +x trtllm-llama-2-7b.sh
ossutil cp -r ./trtllm-llama-2-7b.sh oss://<your-bucket-name>/trtllm-llama-2-7b.sh
```

(4) Create a PV and a PVC.

First, replace the ${your-accesskey-id}, ${your-accesskey-secret}, ${your-bucket-name}, and ${your-bucket-endpoint} placeholders below as described in their comments. Then, run the following commands to create a Secret, a PV, and a PVC through which the container accesses OSS.

```shell
kubectl apply -f- << EOF
apiVersion: v1
kind: Secret
metadata:
  name: oss-secret
stringData:
  akId: ${your-accesskey-id} # The AccessKey ID used to access OSS
  akSecret: ${your-accesskey-secret} # The AccessKey secret used to access OSS
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: llm-model
  labels:
    alicloud-pvname: llm-model
spec:
  capacity:
    storage: 30Gi
  accessModes:
    - ReadOnlyMany
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: ossplugin.csi.alibabacloud.com
    volumeHandle: model-oss
    nodePublishSecretRef:
      name: oss-secret
      namespace: default
    volumeAttributes:
      bucket: ${your-bucket-name} # OSS bucket name
      url: ${your-bucket-endpoint} # OSS bucket endpoint
      otherOpts: "-o umask=022 -o max_stat_cache_size=0 -o allow_other"
      path: "/"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: llm-model
spec:
  accessModes:
    - ReadOnlyMany
  resources:
    requests:
      storage: 30Gi
  selector:
    matchLabels:
      alicloud-pvname: llm-model
EOF
```

Run the following commands to create a ClusterServingRuntime.

```shell
kubectl apply -f- <<EOF
apiVersion: serving.kserve.io/v1alpha1
kind: ClusterServingRuntime
metadata:
  name: triton-trtllm
spec:
  annotations:
    prometheus.kserve.io/path: /metrics
    prometheus.kserve.io/port: "8002"
    k8s.aliyun.com/eci-auto-imc: 'true'
    k8s.aliyun.com/eci-use-specs: "ecs.gn7i-c8g1.2xlarge,ecs.gn7i-c16g1.4xlarge,ecs.gn7i-c32g1.8xlarge,ecs.gn7i-c48g1.12xlarge"
    k8s.aliyun.com/eci-extra-ephemeral-storage: 100Gi
  containers:
    - args:
        - tritonserver
        - --model-store=/mnt/models
        - --grpc-port=9000
        - --http-port=8080
        - --allow-grpc=true
        - --allow-http=true
      image: nvcr.io/nvidia/tritonserver:24.04-trtllm-python-py3
      name: kserve-container
      resources:
        requests:
          cpu: "4"
          memory: 12Gi
  protocolVersions:
    - v2
    - grpc-v2
  supportedModelFormats:
    - name: triton
      version: "2"
EOF
```

In KServe, a ClusterServingRuntime is a cluster-scoped resource that defines how model servers run in a Kubernetes cluster. It lets you declare the parameters and configurations used to run model inference services, such as the container image and startup arguments, resource requests (CPU and memory), the model formats and protocol versions the runtime supports, and pod-level annotations. This article uses a ClusterServingRuntime to configure a dedicated runtime environment for LLM inference services based on TensorRT-LLM.

The ECI-related annotations in the ClusterServingRuntime ensure that the inference service runs on a GPU-accelerated elastic container instance (ECI) with sufficient resources: k8s.aliyun.com/eci-use-specs specifies the ECI instance types on which the inference service may run, k8s.aliyun.com/eci-auto-imc enables ImageCache, and k8s.aliyun.com/eci-extra-ephemeral-storage adds extra temporary storage to the instance.

Run the following commands to deploy the LLM inference service.

```shell
kubectl apply -f- << EOF
apiVersion: serving.kserve.io/v1beta1
kind: InferenceService
metadata:
  name: llama-2-7b
spec:
  predictor:
    model:
      modelFormat:
        name: triton
        version: "2"
      runtime: triton-trtllm
      storageUri: pvc://llm-model/
      name: kserve-container
      resources:
        limits:
          nvidia.com/gpu: "1"
        requests:
          cpu: "4"
          memory: 12Gi
          nvidia.com/gpu: "1"
      command:
        - sh
        - -c
        - /mnt/models/trtllm-llama-2-7b.sh
EOF
```

InferenceService is the core CRD in KServe for defining and managing AI inference services in a cloud-native environment. In the preceding InferenceService, the runtime field binds the service to the triton-trtllm ClusterServingRuntime, and storageUri declares the PVC that holds the model and the model compilation script. KServe makes the contents of that PVC available to the container under /mnt/models, which is why the command runs /mnt/models/trtllm-llama-2-7b.sh.
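Because the container runs the compilation script at startup (cloning tensorrtllm_backend, converting the checkpoint, and building the engine before Triton starts), the service can take a long time to become ready. The engine is also written to /root/trt-llm inside the container rather than to the PVC, so a newly started pod rebuilds it. The following sketch polls the InferenceService's Ready condition and prints progress while you wait; `wait_isvc_ready` and the `KUBECTL` override are hypothetical names, and the default timeout and interval are arbitrary.

```shell
#!/bin/sh
# Sketch: poll an InferenceService until its Ready condition reports True.
# Arguments: name, total timeout in seconds (default 3600), poll interval in
# seconds (default 30). The defaults are arbitrary, not tuned values.
# KUBECTL is a hypothetical override (handy for testing); it defaults to kubectl.
wait_isvc_ready() {
  name=$1
  timeout_s=${2:-3600}
  interval_s=${3:-30}
  elapsed=0
  while [ "$elapsed" -lt "$timeout_s" ]; do
    # Read only the status of the condition whose type is Ready.
    ready=$(${KUBECTL:-kubectl} get isvc "$name" \
      -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}' 2>/dev/null)
    if [ "$ready" = "True" ]; then
      echo "$name is ready"
      return 0
    fi
    # Progress goes to stderr so that stdout carries only the final result.
    echo "waiting for $name (Ready=${ready:-unknown}, ${elapsed}s elapsed)" >&2
    sleep "$interval_s"
    elapsed=$((elapsed + interval_s))
  done
  echo "timed out after ${timeout_s}s waiting for $name" >&2
  return 1
}
```

For example, `wait_isvc_ready llama-2-7b 3600 30` waits up to an hour. If you do not need the progress messages, the built-in `kubectl wait --for=condition=Ready isvc/llama-2-7b --timeout=60m` achieves the same result.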

Run the following command to check whether the inference service is ready.

```shell
kubectl get isvc llama-2-7b
```

Expected output:

```text
NAME         URL                                     READY   PREV   LATEST   PREVROLLEDOUTREVISION   LATESTREADYREVISION          AGE
llama-2-7b   http://llama-2-7b.default.example.com   True           100                              llama-2-7b-predictor-00001   13h
```

After the LLM inference service is ready, you can obtain the IP address of the ASM ingress gateway and access the LLM service through the gateway.

```shell
# Obtain the IP address of the ASM ingress gateway.
ASM_GATEWAY_IP=$(kubectl -n istio-system get svc istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

# Send a generate request to the Triton ensemble model through the gateway.
curl -H "Host: llama-2-7b.default.example.com" -H "Content-Type: application/json" \
  "http://$ASM_GATEWAY_IP:80/v2/models/ensemble/generate" \
  -d '{"text_input": "What is machine learning?", "max_tokens": 20, "bad_words": "", "stop_words": "", "pad_id": 2, "end_id": 2}'
```

Expected output:

```json
{"context_logits":0.0,"cum_log_probs":0.0,"generation_logits":0.0,"model_name":"ensemble","model_version":"1","output_log_probs":[0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0,0.0],"sequence_end":false,"sequence_id":0,"sequence_start":false,"text_output":"\nMachine learning is a type of artificial intelligence (AI) that allows software applications to become more accurate"}
```

Building on its integration with Knative Serving in Container Service, ASM lets you enable KServe on ASM with one click to deploy AI model inference services in serverless form. This article used the TensorRT-LLM-optimized Llama-2-7b-hf model as an example to walk through deploying a serverless, optimized LLM inference service in a cloud-native environment.
