By Xining Wang

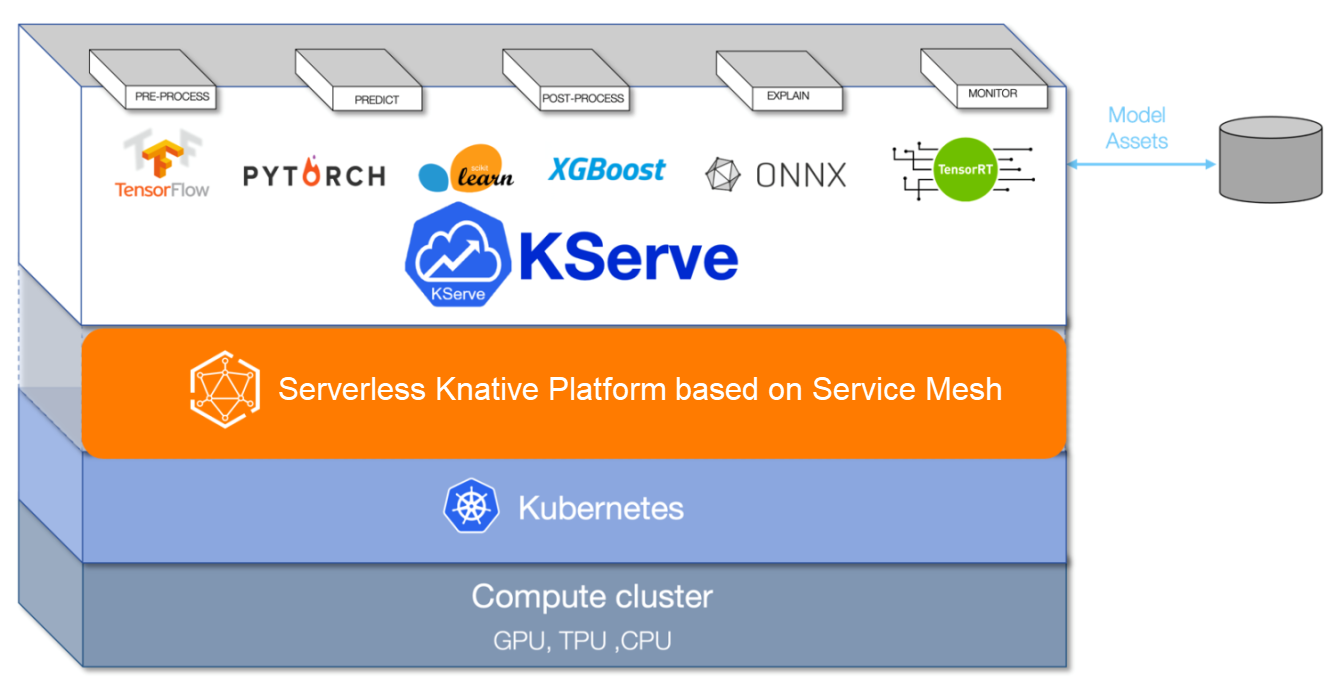

KServe (originally called KFServing) is a model server and inference engine for cloud-native environments. It supports automatic scaling, scale-to-zero, and canary deployment. As a model server, KServe provides the foundation for serving machine learning and deep learning models at scale. KServe can be deployed as a traditional Kubernetes Deployment or as a Serverless deployment that can scale to zero. The Serverless mode uses Istio and Knative Serving and provides traffic-based automatic scaling as well as blue/green and canary deployment of models.

This article describes how to deploy KServe with Alibaba Cloud Service Mesh (ASM) and Alibaba Cloud Container Service for Kubernetes (ACK).

KServe depends on the cert-manager component. Version v1.8.0 or later is recommended; this article uses v1.8.0 as an example. Run the following command to install it:

```shell
kubectl apply -f https://alibabacloudservicemesh.oss-cn-beijing.aliyuncs.com/certmanager/v1.8.0/cert-manager.yaml
```

Before applying kserve.yaml, make sure that the apiVersion of the following resources is changed from cert-manager.io/v1alpha2 to cert-manager.io/v1, because cert-manager v1.8.0 no longer serves the v1alpha2 API:

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: serving-cert
  namespace: kserve
spec:
  commonName: kserve-webhook-server-service.kserve.svc
  dnsNames:
    - kserve-webhook-server-service.kserve.svc
  issuerRef:
    kind: Issuer
    name: selfsigned-issuer
  secretName: kserve-webhook-server-cert
---
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: selfsigned-issuer
  namespace: kserve
spec:
  selfSigned: {}
---
```

The kserve.yaml file hosted by ASM, which the next command applies, already uses the corrected apiVersion, so it can be installed directly.
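If you install from a local copy of an upstream kserve.yaml that still declares cert-manager.io/v1alpha2, the edit can be scripted. The following is a minimal sketch with two hypothetical helper functions (they are not part of KServe or ASM): `fix_cert_manager_api` rewrites the apiVersion in a local file with sed, and `wait_for_cert_manager` waits until the Deployments in the cert-manager namespace (assumed to be the default namespace created by the cert-manager manifest) report Available, so that the cert-manager webhook can accept the Certificate and Issuer objects. The 300-second timeout is an arbitrary choice.

```shell
# Hypothetical helpers for a local, upstream kserve.yaml (not part of KServe or ASM).

# Replace the deprecated cert-manager API version in a manifest file.
# sed writes to a temporary file that then replaces the original, which
# avoids the differences between GNU and BSD "sed -i".
fix_cert_manager_api() {
  manifest="$1"
  sed 's#cert-manager\.io/v1alpha2#cert-manager.io/v1#g' "$manifest" > "$manifest.tmp" &&
    mv "$manifest.tmp" "$manifest"
}

# Wait until every Deployment in the (assumed default) cert-manager namespace
# reports the Available condition, or give up after 300 seconds.
wait_for_cert_manager() {
  kubectl wait --for=condition=Available deployment --all \
    -n cert-manager --timeout=300s
}

# Usage:
#   fix_cert_manager_api ./kserve.yaml
#   wait_for_cert_manager && kubectl apply -f ./kserve.yaml
```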

```shell
kubectl apply -f https://alibabacloudservicemesh.oss-cn-beijing.aliyuncs.com/kserve/v0.7/kserve.yaml
```

The output is similar to the following:

```
namespace/kserve created
customresourcedefinition.apiextensions.k8s.io/inferenceservices.serving.kserve.io created
customresourcedefinition.apiextensions.k8s.io/trainedmodels.serving.kserve.io created
role.rbac.authorization.k8s.io/leader-election-role created
clusterrole.rbac.authorization.k8s.io/kserve-manager-role created
clusterrole.rbac.authorization.k8s.io/kserve-proxy-role created
rolebinding.rbac.authorization.k8s.io/leader-election-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/kserve-manager-rolebinding created
clusterrolebinding.rbac.authorization.k8s.io/kserve-proxy-rolebinding created
configmap/inferenceservice-config created
configmap/kserve-config created
secret/kserve-webhook-server-secret created
service/kserve-controller-manager-metrics-service created
service/kserve-controller-manager-service created
service/kserve-webhook-server-service created
statefulset.apps/kserve-controller-manager created
certificate.cert-manager.io/serving-cert created
issuer.cert-manager.io/selfsigned-issuer created
mutatingwebhookconfiguration.admissionregistration.k8s.io/inferenceservice.serving.kserve.io created
validatingwebhookconfiguration.admissionregistration.k8s.io/inferenceservice.serving.kserve.io created
validatingwebhookconfiguration.admissionregistration.k8s.io/trainedmodel.serving.kserve.io created
```

If you have already created an ASM gateway, skip this step.

In the ASM console, click Create to create an ingress gateway, and reserve port 80 for the applications deployed later in this article. For more information, see the ASM documentation on ingress gateways.
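The later test request needs the gateway's external IP address in the variable ASM_GATEWAY. Besides reading it from the service listing that the next command prints, it can be captured directly with a jsonpath query. This is a minimal sketch, assuming the gateway Service keeps the default name istio-ingressgateway in the istio-system namespace (as in the next command) and that its load balancer reports an IP address rather than a hostname; `get_gateway_ip` is a hypothetical name.

```shell
# Hypothetical helper: print the external IP of the ASM ingress gateway.
# Assumes the default Service name and namespace used in this article and a
# load balancer that reports an IP address (not a hostname).
get_gateway_ip() {
  kubectl --namespace istio-system get service istio-ingressgateway \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage (ASM_GATEWAY is the variable used in the curl request later):
#   ASM_GATEWAY="$(get_gateway_ip)"
#   [ -n "$ASM_GATEWAY" ] || echo "gateway has no external IP yet" >&2
```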

Obtain the external IP address by running the following command:

```shell
kubectl --namespace istio-system get service istio-ingressgateway
```

Test with a trained scikit-learn model

First, create a namespace for deploying KServe resources:

```shell
kubectl create namespace kserve-test
```

Then create an InferenceService named sklearn-iris in that namespace:

```shell
kubectl apply -n kserve-test -f - <<EOF
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "sklearn-iris"
spec:
  predictor:
    sklearn:
      storageUri: "https://alibabacloudservicemesh.oss-cn-beijing.aliyuncs.com/kserve/v0.7/model.joblib"
EOF
```

Check the creation status
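In scripts, instead of re-running the status query below by hand, you can block until the InferenceService reports its Ready condition. This is a minimal sketch, assuming kubectl's generic `wait` command works against the InferenceService custom resource (whose status carries a Ready condition) and that kubectl uses the same data-plane kubeconfig; `wait_for_isvc` is a hypothetical name.

```shell
# Hypothetical helper: block until an InferenceService reports Ready.
# Arguments: name, namespace, optional timeout (default 300s).
wait_for_isvc() {
  kubectl wait --for=condition=Ready "inferenceservice/$1" \
    -n "$2" --timeout="${3:-300s}"
}

# Usage:
#   wait_for_isvc sklearn-iris kserve-test
```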

Use the kubeconfig of the data-plane cluster and run the following command to query the status of the sklearn-iris InferenceService:

```shell
kubectl get inferenceservices sklearn-iris -n kserve-test
```

The output is similar to the following:

```
NAME           URL                                           READY   PREV   LATEST   PREVROLLEDOUTREVISION   LATESTREADYREVISION                    AGE
sklearn-iris   http://sklearn-iris.kserve-test.example.com   True           100                              sklearn-iris-predictor-default-00001   7m8s
```

When the installation completes, the VirtualService corresponding to the model is created automatically. You can view it in the ASM console.

In addition, the console shows the Gateway rule that corresponds to Knative, which is in the knative-serving namespace.
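To inspect the same objects from the command line instead of the console, a sketch such as the following lists them. It assumes kubectl is pointed at a kubeconfig that can read Istio resources; with ASM this is typically the kubeconfig of the ASM instance rather than that of the ACK cluster, which you should confirm in the ASM documentation. The fully qualified resource names avoid ambiguity with other custom resources named Gateway, and `show_isvc_routing` is a hypothetical name.

```shell
# Hypothetical helper: list the Istio routing objects behind an InferenceService.
# Assumes the current kubeconfig can read Istio resources (with ASM, typically
# the kubeconfig of the ASM instance).
show_isvc_routing() {
  ns="${1:-kserve-test}"
  # VirtualServices created for the model in its own namespace
  kubectl get virtualservices.networking.istio.io -n "$ns"
  # Gateways used by Knative, in the knative-serving namespace
  kubectl get gateways.networking.istio.io -n knative-serving
}

# Usage:
#   show_isvc_routing kserve-test
```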

Create an input file for the inference request:

```shell
cat <<EOF > "./iris-input.json"
{
  "instances": [
    [6.8, 2.8, 4.8, 1.4],
    [6.0, 3.4, 4.5, 1.6]
  ]
}
EOF
```

Obtain the service hostname (SERVICE_HOSTNAME):

```shell
SERVICE_HOSTNAME=$(kubectl get inferenceservice sklearn-iris -n kserve-test -o jsonpath='{.status.url}' | cut -d "/" -f 3)
echo "$SERVICE_HOSTNAME"
```

The output is similar to the following:

```
sklearn-iris.kserve-test.example.com
```

Send a request to the sample model service through the ASM gateway created earlier. Set ASM_GATEWAY to the gateway's external IP address and run the following command:

```shell
ASM_GATEWAY="XXXX"   # replace XXXX with the external IP address of the ASM gateway
curl -H "Host: ${SERVICE_HOSTNAME}" "http://${ASM_GATEWAY}:80/v1/models/sklearn-iris:predict" -d @./iris-input.json
```

The output is similar to the following:

```
{"predictions": [1, 1]}
```

Run the following command to test the performance of the model service deployed above:

```shell
kubectl create -f https://alibabacloudservicemesh.oss-cn-beijing.aliyuncs.com/kserve/v0.7/loadtest.yaml -n kserve-test
```
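The pod name in the log command below (load-testchzwx--1-kf29t) is generated, so it differs on every run. The following sketch avoids copying it by hand, assuming loadtest.yaml creates a single Job through metadata.generateName, as the generated name suggests: `kubectl create -o name` prints the new object's name, `kubectl wait` blocks until the Job completes, and `kubectl logs` accepts the Job's name. The report format matches the text report of the vegeta load-testing tool, and the hypothetical `report_field` helper extracts the values of one report line so that a script can check, for example, the success ratio. Both function names and the 300-second timeout are assumptions.

```shell
# Assumption: loadtest.yaml creates a single Job through metadata.generateName.
LOADTEST_URL=https://alibabacloudservicemesh.oss-cn-beijing.aliyuncs.com/kserve/v0.7/loadtest.yaml

# Hypothetical helper: create the load-test Job, wait for it, print its report.
run_load_test() {
  # "-o name" prints the generated object name, e.g. job.batch/load-test...
  job=$(kubectl create -f "$LOADTEST_URL" -n kserve-test -o name) || return 1
  kubectl wait --for=condition=complete "$job" -n kserve-test --timeout=300s &&
    kubectl logs "$job" -n kserve-test
}

# Hypothetical helper: print the values of one report line, read from stdin.
# The label must match the start of the line, e.g. "Success" or "Bytes In".
report_field() {
  awk -v label="$1" 'index($0, label) == 1 { sub(/^.*] */, ""); print }'
}

# Usage:
#   run_load_test > report.txt
#   [ "$(report_field Success < report.txt)" = "100.00%" ] || echo "errors seen" >&2
```

As a consistency check on the report below: 30000 requests over the 59.998 s attack phase gives the reported rate of about 500.02 requests per second, and 690000 bytes in over 30000 responses is 23 bytes per response, exactly the length of the `{"predictions": [1, 1]}` body returned earlier.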

View the logs of the load-test pod. The output is similar to the following:

```shell
kubectl logs -n kserve-test load-testchzwx--1-kf29t
```

```
Requests      [total, rate, throughput]         30000, 500.02, 500.01
Duration      [total, attack, wait]             59.999s, 59.998s, 1.352ms
Latencies     [min, mean, 50, 90, 95, 99, max]  1.196ms, 1.463ms, 1.378ms, 1.588ms, 1.746ms, 2.99ms, 18.873ms
Bytes In      [total, mean]                     690000, 23.00
Bytes Out     [total, mean]                     2460000, 82.00
Success       [ratio]                           100.00%
Status Codes  [code:count]                      200:30000
Error Set:
```

This capability was developed in response to customer demand: customers want to run KServe on top of a service mesh to deliver AI services. Running on a service mesh, KServe supports blue/green and canary deployment of model services and traffic splitting between revisions. It also supports Serverless deployment of inference workloads with automatic scaling, high scalability, and concurrency-based intelligent load routing.
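The canary deployment described above can be expressed in the InferenceService itself. The following is a minimal sketch, assuming the KServe v0.7 v1beta1 predictor accepts the `canaryTrafficPercent` and `minReplicas` fields; verify both against the KServe API reference for your version. Re-applying sklearn-iris with a changed storageUri creates a new revision, and canaryTrafficPercent routes that percentage of requests to it while the rest stay on the previously rolled-out revision, which is the split that the PREV and LATEST columns of the status output report. Setting minReplicas to 0 lets the Serverless deployment scale the predictor to zero when idle. The helper names and the model URI in the usage line are hypothetical placeholders.

```shell
# Hypothetical helper: print an InferenceService manifest that sends a share
# of traffic to a new model version. Arguments: model URI, canary percent.
render_canary_isvc() {
  cat <<EOF
apiVersion: "serving.kserve.io/v1beta1"
kind: "InferenceService"
metadata:
  name: "sklearn-iris"
spec:
  predictor:
    # 0 allows the Serverless deployment to scale the predictor to zero
    minReplicas: 0
    # share of traffic (percent) routed to the newest revision
    canaryTrafficPercent: $2
    sklearn:
      storageUri: "$1"
EOF
}

# Hypothetical helper: apply the canary manifest in the kserve-test namespace.
canary_rollout() {
  render_canary_isvc "$1" "$2" | kubectl apply -n kserve-test -f -
}

# Usage (placeholder URI):
#   canary_rollout "https://example.com/model-v2.joblib" 10
```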

As the industry's first fully managed Istio-compatible service mesh product, Alibaba Cloud Service Mesh (ASM) has kept its architecture consistent with the community and industry trends from the very beginning. The control-plane components are hosted on the Alibaba Cloud side and are independent of the user clusters on the data plane. ASM is customized and implemented based on community Istio, and it provides refined traffic management and security management capabilities on the managed control plane. The managed mode decouples the lifecycle management of Istio components from the managed Kubernetes clusters, making the architecture more flexible and the system more scalable.
