By Jing Cai

Argo Workflows [1] is an open-source, cloud-native workflow engine that orchestrates jobs on Kubernetes. It simplifies the automation and management of complex workflows on Kubernetes, making it suitable for various scenarios, including scheduled tasks, machine learning, ETL and data analysis, model training, data flow pipelines, and CI/CD.

While Kubernetes Jobs can execute basic tasks, they lack the ability to define step dependencies and sequences. They also lack workflow templates, visual interfaces, and workflow-level error handling. As a result, Kubernetes Jobs are not suitable for business scenarios such as batch processing, data processing, scientific computing, and continuous integration.
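To make the difference concrete, the following is a minimal sketch of how step dependencies can be declared in an Argo Workflows DAG. The workflow name, task names, and the `echo` template are hypothetical and chosen only for illustration; they are not part of the CI pipeline described later.

```yaml
# Illustrative sketch only: names and images are assumptions.
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dag-example-
spec:
  entrypoint: main
  templates:
  - name: main
    dag:
      tasks:
      - name: build
        template: echo
        arguments:
          parameters:
          - name: message
            value: "build"
      # "test" starts only after "build" has finished successfully.
      - name: test
        depends: build
        template: echo
        arguments:
          parameters:
          - name: message
            value: "test"
      # "publish" starts only after "test" has finished successfully.
      - name: publish
        depends: test
        template: echo
        arguments:
          parameters:
          - name: message
            value: "publish"
  # Reusable step definition shared by all three tasks.
  - name: echo
    inputs:
      parameters:
      - name: message
    container:
      image: alpine:3.19
      command: [echo, "{{inputs.parameters.message}}"]
```

A plain Kubernetes Job has no equivalent of the `depends` field, so this kind of ordering would have to be scripted outside the Job itself.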

As a CNCF graduated project, Argo Workflows is widely used in many scenarios, and continuous integration (CI) is one of its key application areas.

Continuous integration and continuous deployment (CI/CD) play a crucial role in the software development lifecycle, enabling teams to develop applications through an agile process and improve the quality of the applications they build. Continuous integration is an automated process in which code changes go through testing, building, and other steps, so that developers can merge them into the master branch more frequently and reliably.

As the most popular solution in the CI/CD field, Jenkins boasts several advantages, including being free and open-source, offering a rich set of plugins, and having a mature community. However, it still faces some challenges, particularly in today's cloud-native landscape.

• It is not Kubernetes-native;

• As the number of pipelines and plug-ins grows, Jenkins runs into performance bottlenecks;

• Auto-scaling and concurrency are limited, pipelines run for a long time, and idle compute resources increase costs;

• The rich plug-in ecosystem raises maintenance costs: plug-in versions can be incompatible, updates can lag behind, and security vulnerabilities can appear, so managing plug-in updates and permissions is an ongoing challenge;

• Project isolation and permission allocation are weak.

Compared to Jenkins, Argo Workflows offers several advantages. Built on Kubernetes, Argo Workflows inherits its benefits, including auto-scaling and concurrency. This enables it to handle large-scale pipelines with faster execution and lower costs, allowing developers to focus on business functionality and deliver value to customers. Additionally, Argo Workflows integrates seamlessly with Argo CD, Argo Rollouts, and Argo Events, providing more powerful capabilities for scenarios like CI. With Argo Workflows, you can build a more cloud-native, scalable, efficient, and cost-effective CI pipeline.

The comparison is as follows:

| | Argo Workflows | Jenkins |
| --- | --- | --- |
| Kubernetes-native | It is Kubernetes-native, so it also has the container management advantages of Kubernetes, such as:<br>• Automatic recovery after container failure<br>• Support for auto-scaling<br>• Support for RBAC and the integrated SSO capability of Argo, which makes multi-tenancy isolation for enterprises easy to implement | Not Kubernetes-native |
| Auto-scaling, concurrency, and performance | • Argo handles large-scale pipelines and supports auto-scaling.<br>• Concurrency makes pipelines run faster and more efficiently. | • Jenkins is more suitable for small-scale scenarios. Its performance decreases when it must process a large number of pipelines, and its auto-scaling capability is poor.<br>• Concurrency is insufficient and running times are long. |
| Cost | • The auto-scaling capability helps minimize costs.<br>• Its native support for spot nodes helps reduce costs. | • Idle compute resources increase costs. |
| Community and ecosystem | The Argo community continues to grow, and Argo Workflows integrates seamlessly with Argo CD, Argo Rollouts, and Argo Events in the same ecosystem. This enables it to provide more powerful capabilities for scenarios such as CI. | The Jenkins community is mature and has abundant resources. A large number of plug-ins lowers the barrier to entry. However, over time, plug-in updates and permission management greatly increase O&M costs, so developers spend more effort maintaining plug-ins than on business functions and customer value. |

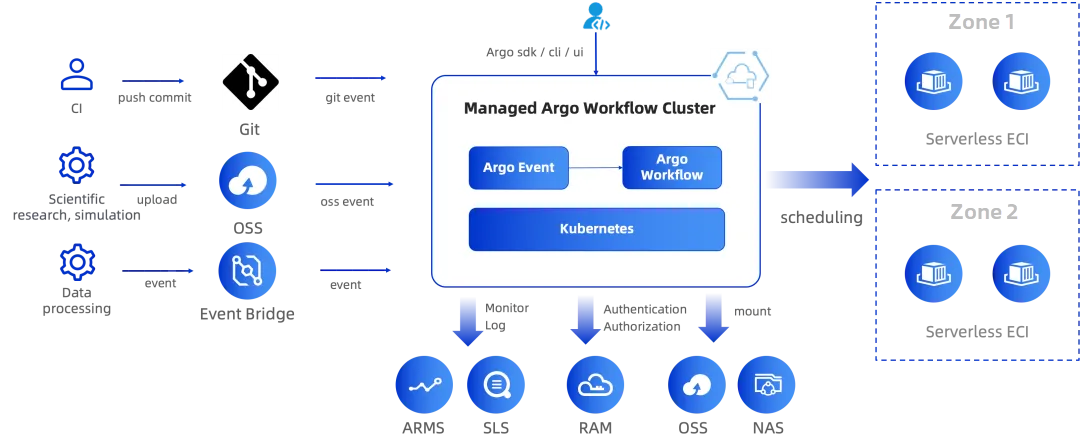

As a fully managed Argo Workflows service that complies with community specifications, ACK One Serverless Argo Workflows [2] is designed to handle large-scale, compute-intensive jobs. It integrates with Alibaba Cloud Elastic Container Instance (ECI) to provide highly elastic, on-demand auto-scaling that minimizes costs, and preemptible (spot) ECI instances [3] can reduce costs by as much as 80%.

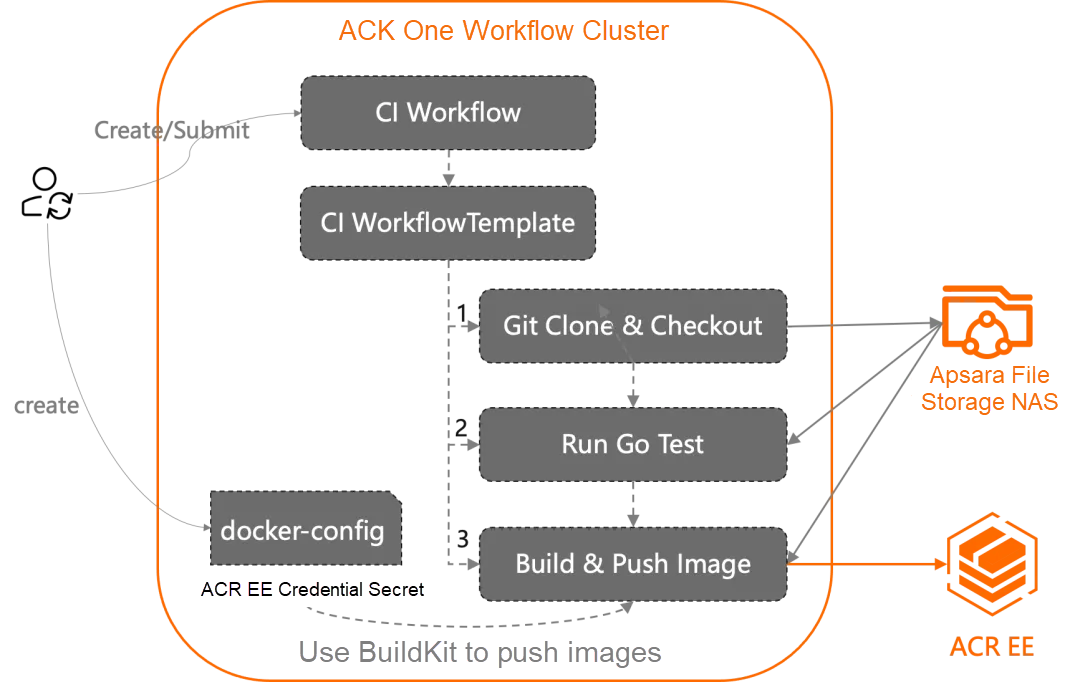

To build a CI pipeline on an ACK One Serverless Argo workflow cluster, BuildKit [4] is used to build and push container images. In addition, BuildKit Cache [5] is used to accelerate image building, and NAS is used to store the Go module cache to accelerate go test and go build. Together, these greatly speed up the CI pipeline.

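The CI pipeline is implemented as a ClusterWorkflowTemplate named ci-go-v1 that is predefined in the workflow cluster. It mainly consists of three steps: git-checkout-pr (clone the repository and check out the target branch), run-test (run go test), and build-push-image (build and push the container image with BuildKit).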

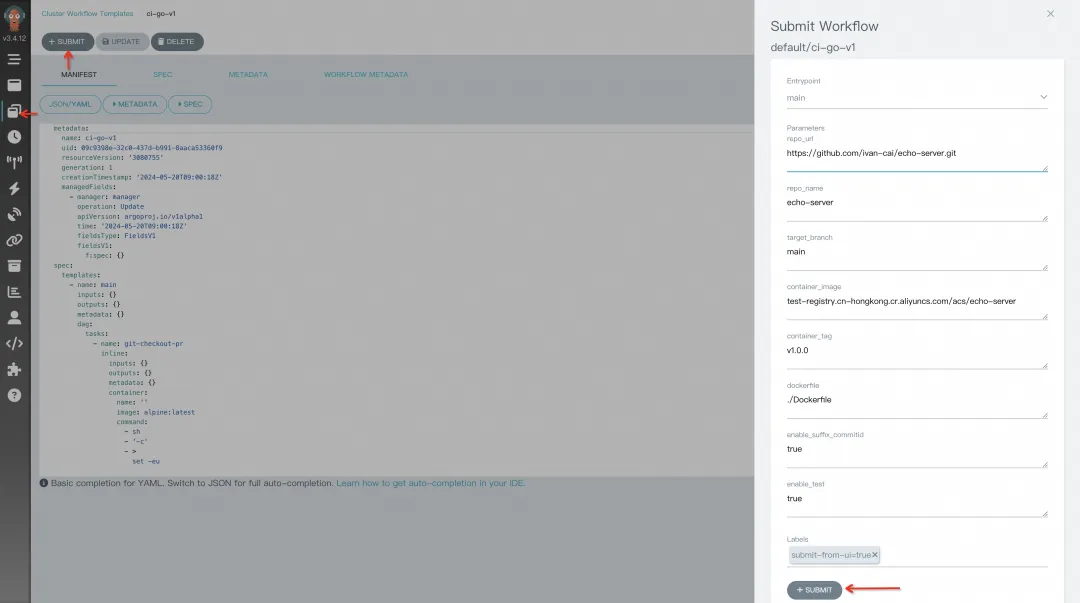

You can perform the following steps to run the CI pipeline. For more information, see the best practice [6].

By default, workflow clusters provide a predefined ClusterWorkflowTemplate named ci-go-v1, and the YAML is as follows. For more information about the parameters, see the best practice [6]:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ClusterWorkflowTemplate
metadata:
  name: ci-go-v1
spec:
  entrypoint: main
  volumes:
  - name: run-test
    emptyDir: {}
  - name: workdir
    persistentVolumeClaim:
      claimName: pvc-nas
  - name: docker-config
    secret:
      secretName: docker-config
  arguments:
    parameters:
    - name: repo_url
      value: ""
    - name: repo_name
      value: ""
    - name: target_branch
      value: "main"
    - name: container_image
      value: ""
    - name: container_tag
      value: "v1.0.0"
    - name: dockerfile
      value: "./Dockerfile"
    - name: enable_suffix_commitid
      value: "true"
    - name: enable_test
      value: "true"
  templates:
    - name: main
      dag:
        tasks:
          - name: git-checkout-pr
            inline:
              container:
                image: alpine:latest
                command:
                  - sh
                  - -c
                  - |
                    set -eu

                    apk --update add git

                    cd /workdir
                    echo "Start to Clone "{{workflow.parameters.repo_url}}
                    git -C "{{workflow.parameters.repo_name}}" pull || git clone {{workflow.parameters.repo_url}}
                    cd {{workflow.parameters.repo_name}}

                    echo "Start to Checkout target branch" {{workflow.parameters.target_branch}}
                    git checkout {{workflow.parameters.target_branch}}

                    echo "Get commit id"
                    git rev-parse --short origin/{{workflow.parameters.target_branch}} > /workdir/{{workflow.parameters.repo_name}}-commitid.txt
                    commitId=$(cat /workdir/{{workflow.parameters.repo_name}}-commitid.txt)
                    echo "Commit id is got: "$commitId

                    echo "Git Clone and Checkout Complete."
                volumeMounts:
                - name: "workdir"
                  mountPath: /workdir
                resources:
                  requests:
                    memory: 1Gi
                    cpu: 1
              activeDeadlineSeconds: 1200
          - name: run-test
            when: "{{workflow.parameters.enable_test}} == true"
            inline:
              container:
                image: golang:1.22-alpine
                command:
                  - sh
                  - -c
                  - |
                    set -eu

                    if [ ! -d "/workdir/pkg/mod" ]; then
                      mkdir -p /workdir/pkg/mod
                      echo "GOMODCACHE Directory /pkg/mod is created"
                    fi

                    export GOMODCACHE=/workdir/pkg/mod

                    cp -R /workdir/{{workflow.parameters.repo_name}} /test/{{workflow.parameters.repo_name}}
                    echo "Start Go Test..."

                    cd /test/{{workflow.parameters.repo_name}}
                    go test -v ./...

                    echo "Go Test Complete."
                volumeMounts:
                - name: "workdir"
                  mountPath: /workdir
                - name: run-test
                  mountPath: /test
                resources:
                  requests:
                    memory: 4Gi
                    cpu: 2
              activeDeadlineSeconds: 1200
            depends: git-checkout-pr
          - name: build-push-image
            inline:
              container:
                image: moby/buildkit:v0.13.0-rootless
                command:
                  - sh
                  - -c
                  - |
                    set -eu

                    tag={{workflow.parameters.container_tag}}
                    if [ {{workflow.parameters.enable_suffix_commitid}} == "true" ]
                    then
                      commitId=$(cat /workdir/{{workflow.parameters.repo_name}}-commitid.txt)
                      tag={{workflow.parameters.container_tag}}-$commitId
                    fi

                    echo "Image Tag is: "$tag
                    echo "Start to Build And Push Container Image"

                    cd /workdir/{{workflow.parameters.repo_name}}

                    buildctl-daemonless.sh build \
                    --frontend \
                    dockerfile.v0 \
                    --local \
                    context=. \
                    --local \
                    dockerfile=. \
                    --opt filename={{workflow.parameters.dockerfile}} \
                    build-arg:GOPROXY=http://goproxy.cn,direct \
                    --output \
                    type=image,\"name={{workflow.parameters.container_image}}:${tag},{{workflow.parameters.container_image}}:latest\",push=true,registry.insecure=true \
                    --export-cache mode=max,type=registry,ref={{workflow.parameters.container_image}}:buildcache \
                    --import-cache type=registry,ref={{workflow.parameters.container_image}}:buildcache

                    echo "Build And Push Container Image {{workflow.parameters.container_image}}:${tag} and {{workflow.parameters.container_image}}:latest Complete."
                env:
                  - name: BUILDKITD_FLAGS
                    value: --oci-worker-no-process-sandbox
                  - name: DOCKER_CONFIG
                    value: /.docker
                volumeMounts:
                  - name: workdir
                    mountPath: /workdir
                  - name: docker-config
                    mountPath: /.docker
                securityContext:
                  seccompProfile:
                    type: Unconfined
                  runAsUser: 1000
                  runAsGroup: 1000
                resources:
                  requests:
                    memory: 4Gi
                    cpu: 2
              activeDeadlineSeconds: 1200
            depends: run-test
```
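The template mounts a Secret named docker-config at /.docker and sets DOCKER_CONFIG to that path, so BuildKit looks for registry credentials in /.docker/config.json. As a rough sketch of what such a Secret might look like, assuming the standard Docker config.json layout: the registry host is taken from the parameter table in this article, and the credential value is a placeholder that you must replace. The PVC named pvc-nas must also exist beforehand; see the best practice [6] for how to create it.

```yaml
# Illustrative sketch only: the credential value below is a placeholder.
apiVersion: v1
kind: Secret
metadata:
  # Must match the secretName referenced by the docker-config volume.
  name: docker-config
type: Opaque
stringData:
  # The file name must be config.json so that it resolves to
  # $DOCKER_CONFIG/config.json inside the build container.
  config.json: |
    {
      "auths": {
        "test-registry.cn-hongkong.cr.aliyuncs.com": {
          "auth": "<base64 of username:password>"
        }
      }
    }
```

The Secret has to live in the namespace in which the workflow runs, because the volume reference in the template is resolved there.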

Parameter descriptions:

| Parameter | Description | Example or default value |
| --- | --- | --- |
| repo_url | The URL of the Git repository. | https://github.com/ivan-cai/echo-server.git |
| repo_name | The name of the repository. | echo-server |
| target_branch | The destination branch of the repository. | Default: main. |
| container_image | The image to be built. | test-registry.cn-hongkong.cr.aliyuncs.com/acs/echo-server |
| container_tag | The image tag. | Default: v1.0.0. |
| dockerfile | The directory and name of the Dockerfile, specified as a path relative to the repository root. | Default: ./Dockerfile. |
| enable_suffix_commitid | Specifies whether to append the commit ID to the image tag. | true/false (default: true). |
| enable_test | Specifies whether to run the Go Test step. | true/false (default: true). |
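Besides submitting from the console, a run of the pipeline can also be described as a Workflow that references the cluster-scoped template. The following is a minimal sketch, assuming the example values from the table above. The `generateName` prefix is hypothetical, and parameters that are not listed fall back to the template defaults.

```yaml
# Illustrative sketch only: parameter values are the examples from the table.
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: ci-go-v1-
spec:
  # Reference the predefined ClusterWorkflowTemplate instead of
  # redefining its steps.
  workflowTemplateRef:
    name: ci-go-v1
    clusterScope: true
  arguments:
    parameters:
    - name: repo_url
      value: https://github.com/ivan-cai/echo-server.git
    - name: repo_name
      value: echo-server
    - name: target_branch
      value: main
    - name: container_image
      value: test-registry.cn-hongkong.cr.aliyuncs.com/acs/echo-server
    - name: container_tag
      value: v1.0.0
    - name: enable_suffix_commitid
      value: "true"
    - name: enable_test
      value: "true"
```

If this is saved to a file (for example, a hypothetical `ci-run.yaml`), it can be submitted with `argo submit ci-run.yaml`. Alternatively, `argo submit --from clusterworkflowtemplate/ci-go-v1 -p repo_url=... -p repo_name=...` submits directly from the template without a separate file.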

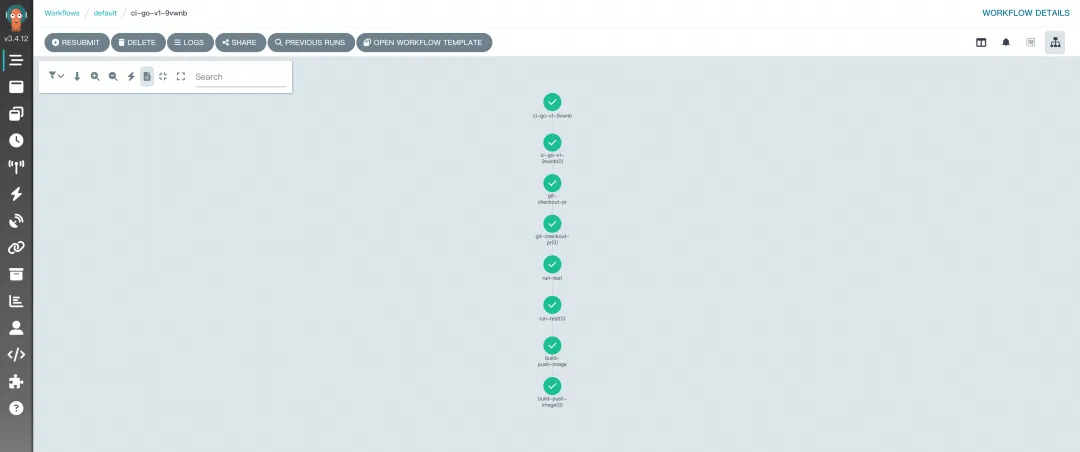

After you complete the configuration, you can view the status of the pipeline on the Workflows details page of the Argo UI.

As a fully managed Argo Workflows service, ACK One Serverless Argo Workflows can help you implement larger-scale, faster, and lower-cost CI pipelines. Combined with ACK One GitOps [8] and an event-driven framework such as Argo Events, you can build complete automated CI/CD pipelines.

[1] Argo Workflows: https://argoproj.github.io/argo-workflows/

[2] ACK One Serverless Argo Workflows: https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/user-guide/overview-12

[3] Preemptible ECI: https://www.alibabacloud.com/help/en/eci/use-cases/run-jobs-on-a-preemptible-instance

[4] BuildKit: https://github.com/moby/buildkit

[5] BuildKit Cache: https://github.com/moby/buildkit?tab=readme-ov-file#cache

[6] Best Practices: https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/use-cases/building-a-ci-pipeline-of-golang-project-based-on-workflow-cluster

[7] ACK One Workflow Cluster Console: https://account.aliyun.com/login/login.htm?oauth_callback=https%3A%2F%2Fcs.console.aliyun.com%2Fone%3Fspm%3Da2c4g.11186623.0.0.555018e1SiD2lC#/argowf/cluster/detail

[8] ACK One GitOps: https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/user-guide/gitops-overview
