By Hang Yin

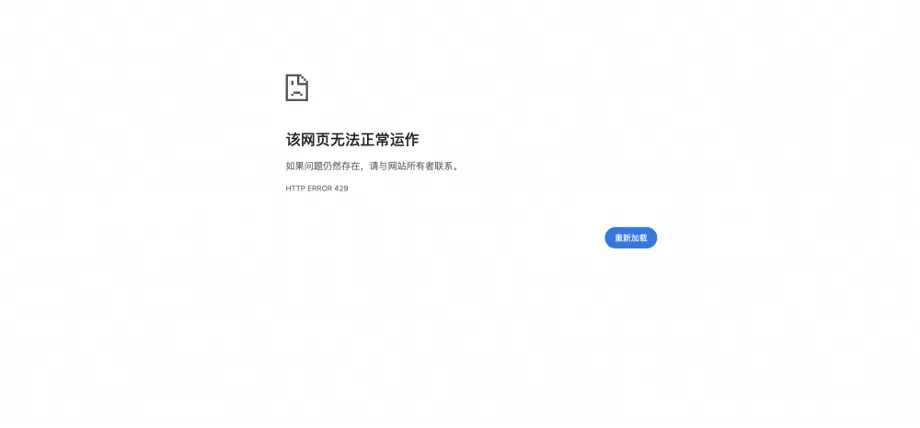

Throttling is a mechanism that limits the number of requests sent to a service. It specifies the maximum number of requests that clients can send to a server in a given period of time, such as 300 requests per minute or 10 requests per second. The aim of throttling is to prevent a service from being overloaded by excessive requests, whether they come from a specific client IP address or from all clients combined. For example, if you limit a service to 300 requests per minute, the 301st request within that minute is denied and the HTTP 429 Too Many Requests status code is returned.

Envoy proxies implement throttling in two modes: local throttling and global throttling. Local throttling limits the request rate of each service instance. Global throttling uses a shared gRPC rate limit service to provide throttling for the entire Alibaba Cloud Service Mesh (ASM) instance. The two modes can be used together to provide different levels of throttling.

ASM uses the token bucket algorithm to implement throttling. The algorithm limits the number of requests sent to a service based on the tokens available in a bucket. Tokens are added to the bucket at a constant rate, and each request sent to the service removes one token. When the bucket is empty, requests are denied.
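The token bucket behavior described above can be sketched in a few lines of Python. This is only an illustrative model, not the Envoy or ASM implementation: the class name `TokenBucket`, its parameters, and the continuous refill are assumptions made for the example (a real proxy may refill in discrete steps per fill interval).

```python
import time
from typing import Callable


class TokenBucket:
    """Toy token bucket (hypothetical helper, not an ASM or Envoy API)."""

    def __init__(self, capacity: int, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity          # maximum number of tokens in the bucket
        self.refill_rate = refill_rate    # tokens added per second
        self.clock = clock                # injectable clock, handy for testing
        self.tokens = float(capacity)     # assumption: the bucket starts full
        self.last_refill = clock()

    def allow(self) -> bool:
        """Consume one token and return True, or return False if the bucket is empty."""
        now = self.clock()
        elapsed = now - self.last_refill
        self.last_refill = now
        # Refill at a constant rate, never exceeding the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A caller would answer a request normally when `allow()` returns `True` and respond with HTTP 429 otherwise.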

This article uses the Boutique application as an example to describe how to configure global throttling and local throttling for different applications in ASM.

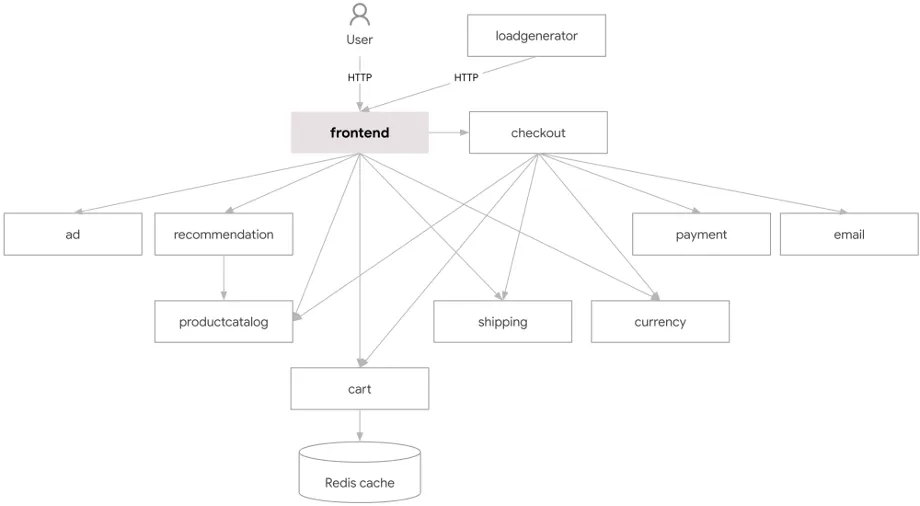

Boutique is a sample application built on a cloud-native architecture. It consists of 11 services.

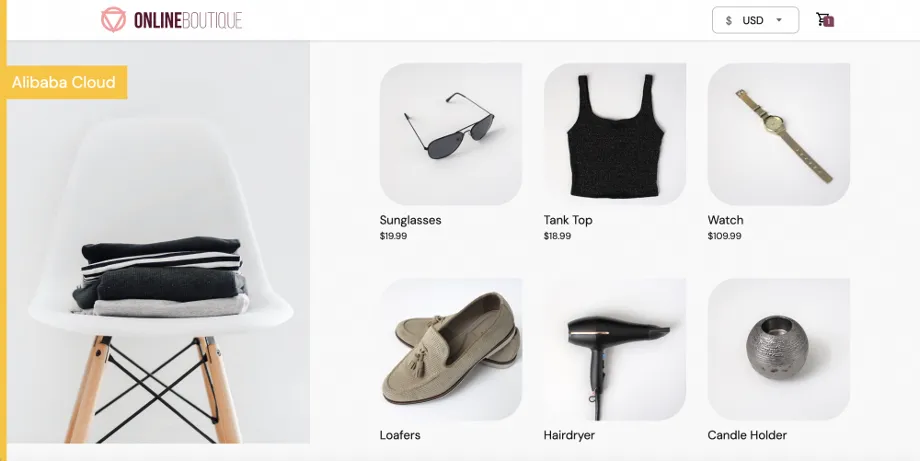

After deploying Boutique, you can access a simulated e-commerce application that provides functions such as viewing product lists, adding items to the cart, and placing orders. This article will use this application as an example to demonstrate the effect of throttling for ASM in practical application scenarios.

First, create a namespace called demo to deploy the application. Then, synchronize it with the ASM global namespace, and enable automatic sidecar proxy injection. For more information about how to synchronize data with an ASM global namespace and how to enable automatic sidecar proxy injection for the namespace, see Manage global namespaces.

kubectl create namespace demo

Create a boutique.yaml file that contains the following content:

apiVersion: apps/v1

kind: Deployment

metadata:

name: emailservice

spec:

selector:

matchLabels:

app: emailservice

template:

metadata:

labels:

app: emailservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/emailservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 8080

env:

- name: PORT

value: "8080"

- name: DISABLE_PROFILER

value: "1"

readinessProbe:

periodSeconds: 5

grpc:

port: 8080

livenessProbe:

periodSeconds: 5

grpc:

port: 8080

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: emailservice

spec:

type: ClusterIP

selector:

app: emailservice

ports:

- name: grpc

port: 5000

targetPort: 8080

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: checkoutservice

spec:

selector:

matchLabels:

app: checkoutservice

template:

metadata:

labels:

app: checkoutservice

spec:

serviceAccountName: default

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/checkoutservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 5050

readinessProbe:

grpc:

port: 5050

livenessProbe:

grpc:

port: 5050

env:

- name: PORT

value: "5050"

- name: PRODUCT_CATALOG_SERVICE_ADDR

value: "productcatalogservice:3550"

- name: SHIPPING_SERVICE_ADDR

value: "shippingservice:50051"

- name: PAYMENT_SERVICE_ADDR

value: "paymentservice:50051"

- name: EMAIL_SERVICE_ADDR

value: "emailservice:5000"

- name: CURRENCY_SERVICE_ADDR

value: "currencyservice:7000"

- name: CART_SERVICE_ADDR

value: "cartservice:7070"

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: checkoutservice

spec:

type: ClusterIP

selector:

app: checkoutservice

ports:

- name: grpc

port: 5050

targetPort: 5050

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: recommendationservice

spec:

selector:

matchLabels:

app: recommendationservice

template:

metadata:

labels:

app: recommendationservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/recommendationservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 8080

readinessProbe:

periodSeconds: 5

grpc:

port: 8080

livenessProbe:

periodSeconds: 5

grpc:

port: 8080

env:

- name: PORT

value: "8080"

- name: PRODUCT_CATALOG_SERVICE_ADDR

value: "productcatalogservice:3550"

- name: DISABLE_PROFILER

value: "1"

resources:

requests:

cpu: 100m

memory: 220Mi

limits:

cpu: 200m

memory: 450Mi

---

apiVersion: v1

kind: Service

metadata:

name: recommendationservice

spec:

type: ClusterIP

selector:

app: recommendationservice

ports:

- name: grpc

port: 8080

targetPort: 8080

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: frontend

spec:

selector:

matchLabels:

app: frontend

template:

metadata:

labels:

app: frontend

annotations:

sidecar.istio.io/rewriteAppHTTPProbers: "true"

spec:

serviceAccountName: default

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/frontend:v0.9.0-1-aliyun

imagePullPolicy: Always

ports:

- containerPort: 8080

readinessProbe:

initialDelaySeconds: 10

httpGet:

path: "/_healthz"

port: 8080

httpHeaders:

- name: "Cookie"

value: "shop_session-id=x-readiness-probe"

livenessProbe:

initialDelaySeconds: 10

httpGet:

path: "/_healthz"

port: 8080

httpHeaders:

- name: "Cookie"

value: "shop_session-id=x-liveness-probe"

env:

- name: PORT

value: "8080"

- name: PRODUCT_CATALOG_SERVICE_ADDR

value: "productcatalogservice:3550"

- name: CURRENCY_SERVICE_ADDR

value: "currencyservice:7000"

- name: CART_SERVICE_ADDR

value: "cartservice:7070"

- name: RECOMMENDATION_SERVICE_ADDR

value: "recommendationservice:8080"

- name: SHIPPING_SERVICE_ADDR

value: "shippingservice:50051"

- name: CHECKOUT_SERVICE_ADDR

value: "checkoutservice:5050"

- name: AD_SERVICE_ADDR

value: "adservice:9555"

# # ENV_PLATFORM: One of: local, gcp, aws, azure, onprem, alibaba

# # When not set, defaults to "local" unless running in GKE, otherwise auto-sets to gcp

- name: ENV_PLATFORM

value: "alibaba"

- name: ENABLE_PROFILER

value: "0"

# - name: CYMBAL_BRANDING

# value: "true"

# - name: FRONTEND_MESSAGE

# value: "Replace this with a message you want to display on all pages."

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: frontend

spec:

type: ClusterIP

selector:

app: frontend

ports:

- name: http

port: 80

targetPort: 8080

---

apiVersion: v1

kind: Service

metadata:

name: frontend-external

spec:

type: LoadBalancer

selector:

app: frontend

ports:

- name: http

port: 80

targetPort: 8080

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: paymentservice

spec:

selector:

matchLabels:

app: paymentservice

template:

metadata:

labels:

app: paymentservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/paymentservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 50051

env:

- name: PORT

value: "50051"

- name: DISABLE_PROFILER

value: "1"

readinessProbe:

grpc:

port: 50051

livenessProbe:

grpc:

port: 50051

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: paymentservice

spec:

type: ClusterIP

selector:

app: paymentservice

ports:

- name: grpc

port: 50051

targetPort: 50051

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: productcatalogservice

spec:

selector:

matchLabels:

app: productcatalogservice

template:

metadata:

labels:

app: productcatalogservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/productcatalogservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 3550

env:

- name: PORT

value: "3550"

- name: DISABLE_PROFILER

value: "1"

readinessProbe:

grpc:

port: 3550

livenessProbe:

grpc:

port: 3550

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: productcatalogservice

spec:

type: ClusterIP

selector:

app: productcatalogservice

ports:

- name: grpc

port: 3550

targetPort: 3550

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: cartservice

spec:

selector:

matchLabels:

app: cartservice

template:

metadata:

labels:

app: cartservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/cartservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 7070

env:

- name: REDIS_ADDR

value: "redis-cart:6379"

resources:

requests:

cpu: 200m

memory: 64Mi

limits:

cpu: 300m

memory: 128Mi

readinessProbe:

initialDelaySeconds: 15

grpc:

port: 7070

livenessProbe:

initialDelaySeconds: 15

periodSeconds: 10

grpc:

port: 7070

---

apiVersion: v1

kind: Service

metadata:

name: cartservice

spec:

type: ClusterIP

selector:

app: cartservice

ports:

- name: grpc

port: 7070

targetPort: 7070

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: loadgenerator

spec:

selector:

matchLabels:

app: loadgenerator

replicas: 0

template:

metadata:

labels:

app: loadgenerator

annotations:

sidecar.istio.io/rewriteAppHTTPProbers: "true"

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

restartPolicy: Always

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

initContainers:

- command:

- /bin/sh

- -exc

- |

echo "Init container pinging frontend: ${FRONTEND_ADDR}..."

STATUSCODE=$(wget --server-response http://${FRONTEND_ADDR} 2>&1 | awk '/^ HTTP/{print $2}')

if test $STATUSCODE -ne 200; then

echo "Error: Could not reach frontend - Status code: ${STATUSCODE}"

exit 1

fi

name: frontend-check

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry-cn-hangzhou.ack.aliyuncs.com/dev/busybox:latest

env:

- name: FRONTEND_ADDR

value: "frontend:80"

containers:

- name: main

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/loadgenerator:v0.9.0-aliyun

imagePullPolicy: Always

env:

- name: FRONTEND_ADDR

value: "frontend:80"

- name: USERS

value: "10"

resources:

requests:

cpu: 300m

memory: 256Mi

limits:

cpu: 500m

memory: 512Mi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: currencyservice

spec:

selector:

matchLabels:

app: currencyservice

template:

metadata:

labels:

app: currencyservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/currencyservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- name: grpc

containerPort: 7000

env:

- name: PORT

value: "7000"

- name: DISABLE_PROFILER

value: "1"

readinessProbe:

grpc:

port: 7000

livenessProbe:

grpc:

port: 7000

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: currencyservice

spec:

type: ClusterIP

selector:

app: currencyservice

ports:

- name: grpc

port: 7000

targetPort: 7000

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: shippingservice

spec:

selector:

matchLabels:

app: shippingservice

template:

metadata:

labels:

app: shippingservice

spec:

serviceAccountName: default

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/shippingservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 50051

env:

- name: PORT

value: "50051"

- name: DISABLE_PROFILER

value: "1"

readinessProbe:

periodSeconds: 5

grpc:

port: 50051

livenessProbe:

grpc:

port: 50051

resources:

requests:

cpu: 100m

memory: 64Mi

limits:

cpu: 200m

memory: 128Mi

---

apiVersion: v1

kind: Service

metadata:

name: shippingservice

spec:

type: ClusterIP

selector:

app: shippingservice

ports:

- name: grpc

port: 50051

targetPort: 50051

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: redis-cart

spec:

selector:

matchLabels:

app: redis-cart

template:

metadata:

labels:

app: redis-cart

spec:

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: redis

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry-cn-hangzhou.ack.aliyuncs.com/dev/redis:alpine

ports:

- containerPort: 6379

readinessProbe:

periodSeconds: 5

tcpSocket:

port: 6379

livenessProbe:

periodSeconds: 5

tcpSocket:

port: 6379

volumeMounts:

- mountPath: /data

name: redis-data

resources:

limits:

memory: 256Mi

cpu: 125m

requests:

cpu: 70m

memory: 200Mi

volumes:

- name: redis-data

emptyDir: {}

---

apiVersion: v1

kind: Service

metadata:

name: redis-cart

spec:

type: ClusterIP

selector:

app: redis-cart

ports:

- name: tcp-redis

port: 6379

targetPort: 6379

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: adservice

spec:

selector:

matchLabels:

app: adservice

template:

metadata:

labels:

app: adservice

spec:

serviceAccountName: default

terminationGracePeriodSeconds: 5

securityContext:

fsGroup: 1000

runAsGroup: 1000

runAsNonRoot: true

runAsUser: 1000

containers:

- name: server

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

privileged: false

readOnlyRootFilesystem: true

image: registry.cn-shanghai.aliyuncs.com/asm-samples/adservice:v0.9.0-aliyun

imagePullPolicy: Always

ports:

- containerPort: 9555

env:

- name: PORT

value: "9555"

resources:

requests:

cpu: 200m

memory: 180Mi

limits:

cpu: 300m

memory: 300Mi

readinessProbe:

initialDelaySeconds: 20

periodSeconds: 15

grpc:

port: 9555

livenessProbe:

initialDelaySeconds: 20

periodSeconds: 15

grpc:

port: 9555

---

apiVersion: v1

kind: Service

metadata:

name: adservice

spec:

type: ClusterIP

selector:

app: adservice

ports:

- name: grpc

port: 9555

targetPort: 9555

Run the following command to create the application:

kubectl apply -f boutique.yaml -n demo

Create a boutique-gateway.yaml file that contains the following content:

apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: boutique-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
    - hosts:
        - '*'
      port:
        name: http
        number: 8000
        protocol: HTTP
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: boutique
spec:
  gateways:
    - boutique-gateway
  hosts:
    - '*'
  http:
    - name: boutique-route
      route:
        - destination:
            host: frontend

Run the following command to create a routing rule for the application:

kubectl apply -f boutique-gateway.yaml -n demo

(2) Configure the Demo Global Throttling Service

Create a ratelimitsvc.yaml file that contains the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: redis
---
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    app: redis
spec:
  ports:
    - name: redis
      port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
        sidecar.istio.io/inject: "false"
    spec:
      containers:
        - image: registry-cn-hangzhou.ack.aliyuncs.com/dev/redis:alpine
          imagePullPolicy: Always
          name: redis
          ports:
            - name: redis
              containerPort: 6379
      restartPolicy: Always
      serviceAccountName: redis
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ratelimit-config
data:
  config.yaml: |
    {}
---
apiVersion: v1
kind: Service
metadata:
  name: ratelimit
  labels:
    app: ratelimit
spec:
  ports:
    - name: http-port
      port: 8080
      targetPort: 8080
      protocol: TCP
    - name: grpc-port
      port: 8081
      targetPort: 8081
      protocol: TCP
    - name: http-debug
      port: 6070
      targetPort: 6070
      protocol: TCP
  selector:
    app: ratelimit
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ratelimit
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ratelimit
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ratelimit
        sidecar.istio.io/inject: "false"
    spec:
      containers:
        # Latest image from https://hub.docker.com/r/envoyproxy/ratelimit/tags
        - image: registry-cn-hangzhou.ack.aliyuncs.com/dev/ratelimit:e059638d
          imagePullPolicy: Always
          name: ratelimit
          command: ["/bin/ratelimit"]
          env:
            - name: LOG_LEVEL
              value: debug
            - name: REDIS_SOCKET_TYPE
              value: tcp
            - name: REDIS_URL
              value: redis.default.svc.cluster.local:6379
            - name: USE_STATSD
              value: "false"
            - name: RUNTIME_ROOT
              value: /data
            - name: RUNTIME_SUBDIRECTORY
              value: ratelimit
            - name: RUNTIME_WATCH_ROOT
              value: "false"
            - name: RUNTIME_IGNOREDOTFILES
              value: "true"
          ports:
            - containerPort: 8080
            - containerPort: 8081
            - containerPort: 6070
          volumeMounts:
            - name: config-volume
              # $RUNTIME_ROOT/$RUNTIME_SUBDIRECTORY/$RUNTIME_APPDIRECTORY/config.yaml
              mountPath: /data/ratelimit/config
      volumes:
        - name: config-volume
          configMap:
            name: ratelimit-config

Run the following command to configure the throttling service:

kubectl apply -f ratelimitsvc.yaml

Global throttling limits the number of requests sent to multiple services. In this mode, all the services in a cluster share the throttling configuration. Therefore, the best practice is to configure global throttling at the traffic entry point of the entire system, typically an ingress gateway. This setup controls the total incoming traffic and ensures that the system's overall load remains manageable.

This article will demonstrate the deployment of global throttling on an ingress gateway.

Use kubeconfig to connect to the ASM instance, and then run the following command to configure the global throttling service:

kubectl apply -f- <<EOF
apiVersion: istio.alibabacloud.com/v1beta1
kind: ASMGlobalRateLimiter
metadata:
  name: global-limit
  namespace: istio-system
spec:
  workloadSelector:
    labels:
      app: istio-ingressgateway
  rateLimitService:
    host: ratelimit.default.svc.cluster.local
    port: 8081
    timeout:
      seconds: 5
  isGateway: true
  configs:
    - name: boutique
      limit:
        unit: SECOND
        quota: 100000
      match:
        vhost:
          name: '*'
          port: 8000
          route:
            name_match: boutique-route # The name must be the same as the route name in the virtualservice.
      limit_overrides:
        - request_match:
            header_match:
              - name: :path
                prefix_match: /product
          limit:
            unit: MINUTE
            quota: 5
EOF

For the Boutique application, the entry point is the frontend service. Each page view generates many requests for static JPG, CSS, and JS files, but these requests put little stress on the system. What needs to be limited are the requests that actually put pressure on backend services. Therefore, the overall limit on the ingress gateway is set to 100,000 requests per second, which is effectively no limit. For requests whose path starts with /product, which trigger east-west traffic within the cluster, limit_overrides sets a separate, smaller limit (5 requests per minute here, for demonstration).
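To make the relationship between the default limit and limit_overrides concrete, here is a simplified Python model of how a limit could be chosen for a request path. It is a sketch under assumptions, not how the rate limit service is implemented: the names `Limit`, `Override`, and `select_limit` are hypothetical, and "first matching override wins" is an assumed rule for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Limit:
    quota: int
    unit: str


@dataclass(frozen=True)
class Override:
    path_prefix: str   # mirrors prefix_match on the :path header
    limit: Limit


def select_limit(path: str, default: Limit, overrides: List[Override]) -> Limit:
    """Return the override limit for the first matching prefix, else the default."""
    for override in overrides:
        if path.startswith(override.path_prefix):
            return override.limit
    return default


# Values taken from the configuration in this article.
DEFAULT_LIMIT = Limit(quota=100000, unit="SECOND")
OVERRIDES = [Override(path_prefix="/product", limit=Limit(quota=5, unit="MINUTE"))]
```

With these values, a request for a static asset falls under the 100,000 per second limit, while any path beginning with /product is subject to the 5 per minute limit.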

Next, run the following command to retrieve the YAML of the global throttling rule:

kubectl get asmglobalratelimiter -n istio-system global-limit -oyaml

Expected output:

apiVersion: istio.alibabacloud.com/v1
kind: ASMGlobalRateLimiter
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"istio.alibabacloud.com/v1beta1","kind":"ASMGlobalRateLimiter","metadata":{"annotations":{},"name":"global-limit","namespace":"istio-system"},"spec":{"configs":[{"limit":{"quota":100000,"unit":"SECOND"},"limit_overrides":[{"limit":{"quota":5,"unit":"MINUTE"},"request_match":{"header_match":[{"name":":path","prefix_match":"/product"}]}}],"match":{"vhost":{"name":"*","port":8000,"route":{"name_match":"boutique-route"}}},"name":"boutique"}],"isGateway":true,"rateLimitService":{"host":"ratelimit.default.svc.cluster.local","port":8081,"timeout":{"seconds":5}},"workloadSelector":{"labels":{"app":"istio-ingressgateway"}}}}
  creationTimestamp: "2024-06-11T12:19:11Z"
  generation: 1
  name: global-limit
  namespace: istio-system
  resourceVersion: "1620810225"
  uid: e7400112-20bb-4751-b0ca-f611e6da0197
spec:
  configs:
    - limit:
        quota: 100000
        unit: SECOND
      limit_overrides:
        - limit:
            quota: 5
            unit: MINUTE
          request_match:
            header_match:
              - name: :path
                prefix_match: /product
      match:
        vhost:
          name: '*'
          port: 8000
          route:
            name_match: boutique-route
      name: boutique
  isGateway: true
  rateLimitService:
    host: ratelimit.default.svc.cluster.local
    port: 8081
    timeout:
      seconds: 5
  workloadSelector:
    labels:
      app: istio-ingressgateway
status:
  config.yaml: |
    descriptors:
    - descriptors:
      - key: header_match
        rate_limit:
          requests_per_unit: 5
          unit: MINUTE
        value: RateLimit[global-limit.istio-system]-Id[238116753]
      key: generic_key
      rate_limit:
        requests_per_unit: 100000
        unit: SECOND
      value: RateLimit[global-limit.istio-system]-Id[828717099]
    domain: ratelimit.default.svc.cluster.local
  message: ok
  status: successful

Next, copy the config.yaml field in the status and paste it into the configuration of the throttling service. This configuration is the ConfigMap named ratelimit-config (you can find it in the deployment manifest of the global throttling service). It is the throttling service that actually decides whether to limit a request.
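The copy step above could also be scripted. The following Python sketch assumes the rule was fetched as JSON (for example with `-o json` instead of `-oyaml`) and builds a ConfigMap manifest from the generated `status["config.yaml"]` field. The function names `build_ratelimit_configmap` and `convert` are hypothetical helpers for this article, not part of any ASM tooling.

```python
import json
from typing import Any, Dict


def build_ratelimit_configmap(limiter: Dict[str, Any],
                              name: str = "ratelimit-config",
                              namespace: str = "default") -> Dict[str, Any]:
    """Build a ConfigMap manifest from the status of an ASMGlobalRateLimiter object."""
    status = limiter.get("status", {})
    # The expected output in this article reports `status: successful` on success.
    if status.get("status") != "successful":
        raise ValueError("rate limiter status is not 'successful': %r" % status.get("message"))
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": namespace},
        "data": {"config.yaml": status["config.yaml"]},
    }


def convert(limiter_json: str) -> str:
    """Take the JSON text of the rule and return the JSON text of the ConfigMap."""
    return json.dumps(build_ratelimit_configmap(json.loads(limiter_json)), indent=2)
```

The resulting JSON can be saved to a file and applied with kubectl, which accepts JSON manifests as well as YAML. Note that the rule is read from the ASM instance while the ConfigMap is applied to the ACK cluster, so the two steps use different kubeconfig files.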

Connect kubectl to the ACK cluster and then run the following command:

kubectl apply -f- <<EOF
apiVersion: v1
data:
  config.yaml: |
    descriptors:
    - descriptors:
      - key: header_match
        rate_limit:
          requests_per_unit: 5
          unit: MINUTE
        value: RateLimit[global-limit.istio-system]-Id[238116753]
      key: generic_key
      rate_limit:
        requests_per_unit: 100000
        unit: SECOND
      value: RateLimit[global-limit.istio-system]-Id[828717099]
    domain: ratelimit.default.svc.cluster.local
kind: ConfigMap
metadata:
  name: ratelimit-config
  namespace: default
EOF

You can configure local throttling for services that are sensitive to load or that are relatively critical in the cluster. Local throttling is configured per Envoy process, that is, per pod into which an Envoy proxy is injected, so the throttling policy applies to each replica of the service independently. This mode cannot precisely control the overall request rate that the service receives, but it suits scenarios where each workload needs a limit that matches its own request capacity.
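A small Python calculation illustrates why per-replica limits do not give precise control over the total rate. It is a simplified model: the function `accepted_requests` is a hypothetical helper, and it assumes every replica enforces the same quota over the same interval.

```python
from typing import Sequence


def accepted_requests(requests_per_replica: Sequence[int], quota: int) -> int:
    """Count requests accepted in one interval when each replica enforces `quota` on its own."""
    return sum(min(received, quota) for received in requests_per_replica)


# Three replicas, quota of 1 request per interval on each replica.
evenly_spread = accepted_requests([1, 1, 1], quota=1)   # every replica accepts its request
skewed = accepted_requests([3, 0, 0], quota=1)          # one replica receives all three
```

The same three requests lead to a different number of accepted requests depending on how they are distributed across replicas, so the service-wide rate varies between the quota of a single replica and the quota multiplied by the number of replicas.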

This article uses the recommendation service in the Boutique application as an example. This service plays its role in recommending related products in the application without impacting the core business. However, due to the complex recommendation process, its workload may be more sensitive to the number of requests than other services.

Run the following command to configure a local throttling rule:

kubectl apply -f- <<EOF
apiVersion: istio.alibabacloud.com/v1
kind: ASMLocalRateLimiter
metadata:
  name: recommend-limit
  namespace: demo
spec:
  configs:
    - limit:
        fill_interval:
          seconds: 60
        quota: 1
      match:
        vhost:
          name: '*'
          port: 8080
          route:
            header_match:
              - invert_match: false
                name: ':path'
                prefix_match: /hipstershop.RecommendationService/ListRecommendations
  isGateway: false
  workloadSelector:
    labels:
      app: recommendationservice
EOF

This configures a local throttling rule for the workload of the recommendation service. Only requests whose path starts with /hipstershop.RecommendationService/ListRecommendations are limited. This request lists all recommended products and is identified as the primary source of workload stress. For demonstration, the limit is set to one request per 60 seconds.

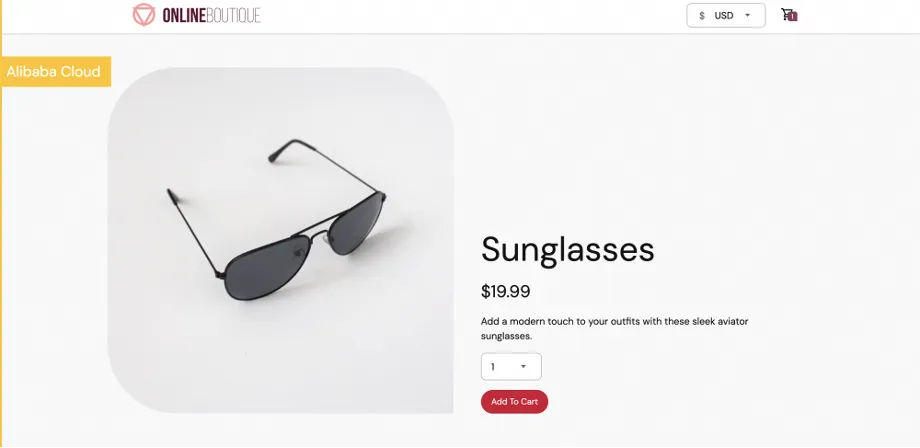

You can use a browser to access port 8000 of the ASM gateway to access the Demo Boutique application. For more information about how to obtain the IP address of the ASM gateway, see Obtain the IP address of the ingress gateway. Click any product on the home page to enter the product details page where you can see the details of the product and related products recommended.

When you access the page a second time, the list of recommendations at the bottom disappears, which indicates that the recommendation service is throttled. In other words, local throttling has taken effect.

If you refresh the product page more than 5 times within a minute, the browser reports an HTTP 429 error, which indicates that the entire system is throttled. That is, global throttling has taken effect.
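Instead of refreshing the browser by hand, the same check can be done with a short script. The sketch below uses only the Python standard library; `http_status` and `count_statuses` are hypothetical helper names, and the gateway address and product path must be replaced with values from your own environment.

```python
import urllib.error
import urllib.request
from collections import Counter
from typing import Callable


def http_status(url: str) -> int:
    """Return the HTTP status code of a GET request, including error codes such as 429."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def count_statuses(url: str, attempts: int,
                   fetch: Callable[[str], int] = http_status) -> Counter:
    """Send `attempts` sequential requests and count the status codes that come back."""
    return Counter(fetch(url) for _ in range(attempts))


# Example call (placeholders, not real values):
# count_statuses("http://<gateway-ip>:8000/product/<product-id>", 8)
```

With the limits configured in this article, one would expect a handful of 200 responses followed by 429 responses once more than 5 requests to a /product path arrive within a minute, though the exact split depends on when the counting window starts.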

After you configure local throttling or global throttling for a gateway or a service in the cluster, the gateway or the sidecar proxy will generate throttling-related metrics. You can use the Prometheus agent to collect these metrics and configure alert rules to help observe throttling events.

Envoy, serving as the dataplane proxy for Istio, offers various monitoring metrics while implementing request proxy and orchestration. However, for efficiency reasons, Istio does not expose all relevant metrics by default, including throttling-related metrics.

To expose these metrics, you need to use proxyStatsMatcher.

In Envoy, local throttling and global throttling expose different sets of metrics:

For local throttling, you can use the following metrics:

| Metric | Description |
|---|---|
| envoy_http_local_rate_limiter_http_local_rate_limit_enabled | Total number of requests for which throttling is triggered |
| envoy_http_local_rate_limiter_http_local_rate_limit_ok | Total number of responses to requests that have tokens in the token bucket |
| envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited | Total number of requests that have no tokens available (throttling is not necessarily enforced) |
| envoy_http_local_rate_limiter_http_local_rate_limit_enforced | Total number of requests to which throttling is applied (for example, the HTTP 429 status code is returned) |

For global throttling, you can use the following metrics:

| Metric | Description |
|---|---|
| envoy_cluster_ratelimit_ok | Total number of requests allowed by global throttling |
| envoy_cluster_ratelimit_over_limit | Total number of requests that are determined to trigger throttling by global throttling |
| envoy_cluster_ratelimit_error | Total number of requests that fail to call global throttling |

All of these metrics are of the Counter type. You can use the regular expression .*http_local_rate_limit.* to match the local throttling metrics and .*ratelimit.* to match the global throttling metrics.
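As a quick sanity check, the two regular expressions can be tried against the metric names from the tables above. This Python sketch is only an illustration: the `classify` helper is hypothetical, and it tests the Prometheus-style names listed in this article, whereas Envoy applies the matcher to its own internal stat names, which may use different separators.

```python
import re

LOCAL_PATTERN = re.compile(r".*http_local_rate_limit.*")
GLOBAL_PATTERN = re.compile(r".*ratelimit.*")


def classify(metric_name: str) -> str:
    """Report which of the two patterns, if any, fully matches the metric name."""
    if LOCAL_PATTERN.fullmatch(metric_name):
        return "local"
    if GLOBAL_PATTERN.fullmatch(metric_name):
        return "global"
    return "unmatched"


LOCAL_METRICS = [
    "envoy_http_local_rate_limiter_http_local_rate_limit_enabled",
    "envoy_http_local_rate_limiter_http_local_rate_limit_ok",
    "envoy_http_local_rate_limiter_http_local_rate_limit_rate_limited",
    "envoy_http_local_rate_limiter_http_local_rate_limit_enforced",
]
GLOBAL_METRICS = [
    "envoy_cluster_ratelimit_ok",
    "envoy_cluster_ratelimit_over_limit",
    "envoy_cluster_ratelimit_error",
]
```

Because the local metric names contain `rate_limit` with an underscore rather than `ratelimit`, the two patterns do not overlap on these names.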

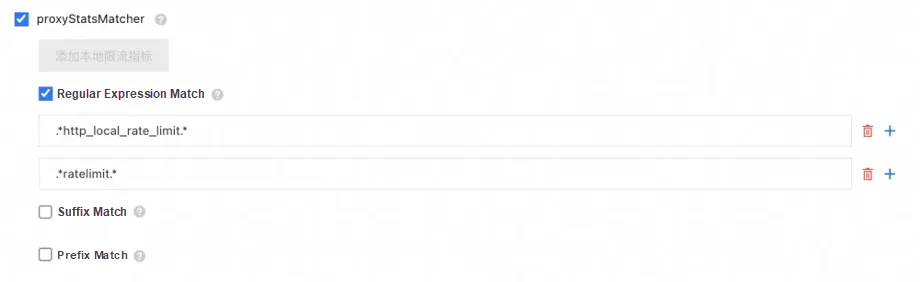

In ASM, you can use the sidecar proxy configuration feature to add a sidecar proxy to the proxyStatsMatcher. For more information, see proxyStatsMatcher to perform operations in the ASM console. In the proxyStatsMatcher, we can select Regular Expression Match and add a regular expression to match the throttling-related metric. The following is a screenshot of a sample configuration.

After configuring the proxyStatsMatcher in the sidecar proxy configuration, you need to redeploy the pod to take effect. Then, the sidecar proxy exposes throttling-related metrics.

For an ASM gateway, you need to use a pod annotation to add a proxyStatsMatcher to the gateway:

podAnnotations:
  proxy.istio.io/config: |
    proxyStatsMatcher:
      inclusionRegexps:
        - ".*http_local_rate_limit.*"
        - ".*ratelimit.*"

Note: The preceding operations can cause the gateway to restart.

In the Prometheus instance that corresponds to the ACK cluster on the data plane, you can configure scrape_configs to collect these exposed metrics. This article uses an ACK cluster on the data plane that is integrated with Alibaba Cloud Managed Service for Prometheus as an example.

Currently, we can add custom service discovery rules to collect metrics exposed by the Envoy. For more information, see Manage custom service discovery. In the custom service discovery configuration, you can enter the following sample configurations:

- job_name: envoy-stats
  honor_timestamps: true
  scrape_interval: 30s
  scrape_timeout: 30s
  metrics_path: /stats/prometheus
  scheme: http
  follow_redirects: true
  relabel_configs:
    - source_labels: [__meta_kubernetes_pod_container_port_name]
      separator: ;
      regex: .*-envoy-prom
      replacement: $1
      action: keep
    - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
      separator: ;
      regex: ([^:]+)(?::\d+)?;(\d+)
      target_label: __address__
      replacement: $1:15090
      action: replace
    - {separator: ;, regex: __meta_kubernetes_pod_label_(.+), replacement: $1, action: labelmap}
    - source_labels: [__meta_kubernetes_namespace]
      separator: ;
      regex: (.*)
      target_label: namespace
      replacement: $1
      action: replace
    - source_labels: [__meta_kubernetes_pod_name]
      separator: ;
      regex: (.*)
      target_label: pod_name
      replacement: $1
      action: replace
  metric_relabel_configs:
    - source_labels: [__name__]
      separator: ;
      regex: istio_.*
      replacement: $1
      action: drop
  kubernetes_sd_configs:
    - {role: pod, follow_redirects: true}

The preceding configuration collects metrics through Envoy's http-envoy-prom port (15090) and drops metrics whose names start with istio_, because ASM metric monitoring already collects these metrics by default.
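The address rewrite in the second relabel rule can be hard to read at a glance. The Python sketch below mimics what that rule does, under the usual Prometheus relabeling semantics: source label values are joined with the separator, the regex must match the whole joined string, and the target label is left unchanged when there is no match. The function name `relabel_address` is a hypothetical helper for this illustration.

```python
import re

# Same pattern as the `regex` field of the second relabel rule.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_address(address: str, prometheus_port_annotation: str) -> str:
    """Rewrite `host[:port]` to `host:15090` when the joined labels match the rule."""
    joined = f"{address};{prometheus_port_annotation}"   # separator is ';'
    match = ADDRESS_RULE.fullmatch(joined)               # Prometheus anchors the regex
    if match is None:
        return address                                   # no match: label is left as-is
    return f"{match.group(1)}:15090"
```

For example, a discovered address of `10.0.0.5:8080` on a pod whose prometheus.io/port annotation is `15020` becomes `10.0.0.5:15090`, while a pod without that annotation keeps its original address.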

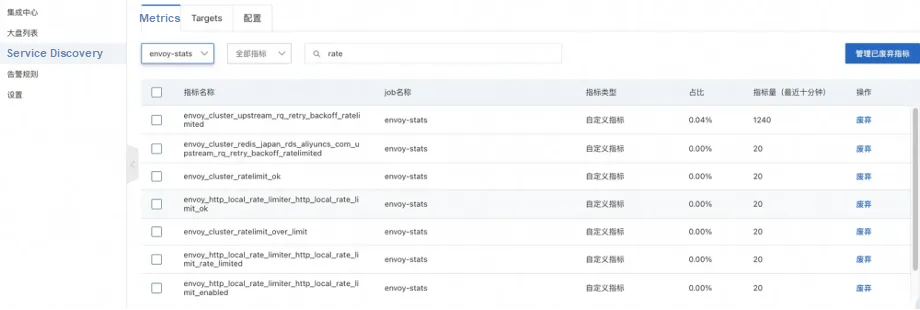

After a period of time, you can find the throttling-related metrics collected by the job named envoy-stats in the service discovery of the ARMS console:

After the Prometheus instance collects related metrics, you can configure alert rules based on these metrics. For Alibaba Cloud Managed Service for Prometheus, you can refer to Use a custom PromQL statement to create an alert rule.

When configuring the alert rule, write the PromQL statement based on your business requirements. All throttling-related metrics are of the Counter type, so you can use the increase function to measure the growth of key metrics over a time window.

For example, for local throttling, envoy_http_local_rate_limiter_http_local_rate_limit_enforced represents the total number of requests to which throttling is applied and is usually the metric of most concern. You can configure the following custom PromQL statement as an alert rule:

increase(envoy_http_local_rate_limiter_http_local_rate_limit_enforced[5m]) > 10

This expression triggers an alert when more than 10 requests are throttled within 5 minutes. Adjust the threshold based on your business metrics.

For global throttling, the metric with similar semantics is envoy_cluster_ratelimit_over_limit. You can trigger alerts on it with a similar PromQL statement.
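The idea behind an increase-based alert can be sketched in Python. This is a deliberately simplified model: `counter_increase` and `should_alert` are hypothetical helpers, and the real PromQL increase function additionally extrapolates to the window boundaries, so its results can differ slightly from this calculation.

```python
from typing import Sequence


def counter_increase(samples: Sequence[float]) -> float:
    """Approximate the growth of a counter across the samples in a time window."""
    total = 0.0
    for previous, current in zip(samples, samples[1:]):
        if current >= previous:
            total += current - previous
        else:
            # The counter was reset (for example, the proxy restarted): count from zero.
            total += current
    return total


def should_alert(samples: Sequence[float], threshold: float = 10) -> bool:
    """Mirror the `increase(...[5m]) > 10` rule for samples taken within the window."""
    return counter_increase(samples) > threshold
```

Given samples of the enforced counter scraped during a 5-minute window, `should_alert` returns `True` only when the counter grew by more than the threshold, regardless of its absolute value.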
