By Hang Yin

In the rapidly evolving world of cloud-native microservices with increasingly complex application architectures, managing agile release iteration processes for all services while building development and testing environments for applications poses significant challenges.

From the perspective of building development and testing environments, the most commonly adopted deployment methods are primarily the following two:

• Full-scale Single Testing Environment: The simplest deployment mode, where all developers share a single testing environment. For a given application, only one test version can exist in the environment at a time. While one developer has their version deployed for testing, other developers can only wait. Moreover, if one developer deploys a non-functional version, the tests of every other developer whose trace passes through that service will be affected.

• Full-scale Multiple Testing Environments: Each developer deploys a separate testing environment. For larger applications, this deployment method can be cost-prohibitive (consider the resource waste of deploying a standalone environment for an application with thousands of services).

Similar problems arise during application releases. When publishing a new version of an application, the simplest and most manageable approach is often to package all services together as a new version and then run a release process between the two versions, such as a canary release by traffic ratio. However, as the number of services grows, the resource consumption of this method becomes significant, especially when only a few services are updated in an iteration. If these services include AI services that depend on GPU resources, each release consumes even more.

All these difficulties point to a common demand: to build efficient, on-demand, resource-saving, and highly reliable isolated environments for cloud-native microservice applications, streamlining the entire development, testing, deployment, and release process to reduce costs and increase efficiency.

Based on its product capabilities, Alibaba Cloud Service Mesh (ASM) proposes a multi-cluster deployment solution that addresses these issues through a traffic swimlane scenario. Its main features include:

• Permission and Deployment Isolation: By separating the development and testing environments from the production and deployment environments, dual-cluster deployment provides separate permission control and deployment machines, preventing development and testing services from interfering with production and enhancing overall application reliability.

• Efficient On-demand Deployment: With ASM traffic swimlanes in loose mode, a development, testing, or canary release environment only needs to deploy the new versions of the services that are being updated. When the requested target service does not exist in the current environment, the request falls back to the stable version of that service, known as the “baseline version”, so that the entire trace can still complete. This enables developers and O&M personnel to iterate on applications faster and at lower cost, achieving truly agile development.

• Unified Traffic Control: By using a service mesh instance to manage two clusters, service mesh administrators can control the development, testing, and release pace uniformly, ensuring that the upstream and downstream dependencies of services under development remain consistent with the production environment to the greatest extent, avoiding online issues caused by inconsistencies between development and production environments.

• Non-intrusiveness/Low-intrusiveness: Traffic swimlane capabilities of ASM are based on baggage pass-through, implemented through OpenTelemetry auto-instrumentation and service mesh Sidecar injection. All these capabilities can be realized without intrusion into the business code, allowing developers to focus solely on the business logic.
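To make the on-demand deployment saving described above concrete, here is a rough back-of-the-envelope sketch. The numbers (1000 services, 10 developer environments, 2 changed services each) are purely hypothetical, and the model assumes one workload per service version; it is not a sizing formula from ASM.

```python
from __future__ import annotations


def workloads_full_copy(num_services: int, num_environments: int) -> int:
    """Every environment deploys its own copy of every service."""
    return num_services * num_environments


def workloads_on_demand(num_services: int, changed_per_environment: list[int]) -> int:
    """One shared baseline plus only the changed services of each environment."""
    return num_services + sum(changed_per_environment)


# Hypothetical numbers: 1000 services, 10 developer environments,
# each developer changes 2 services.
full = workloads_full_copy(1000, 10 + 1)        # 10 dev environments + 1 baseline
on_demand = workloads_on_demand(1000, [2] * 10)  # baseline + 20 changed services
print(full, on_demand)
```

Under these assumptions, the number of workloads outside the baseline grows with the number of changed services rather than with the size of the whole application.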

This article introduces a multi-cluster and multi-environment deployment solution based on traffic swimlanes and demonstrates the entire process of a sample cloud-native microservice application, from development and testing to canary release.

Part 1 of this article series: Alibaba Cloud Service Mesh Multi-cluster Practice (Part 1): Multi-cluster Management Overview.

Let's get started! To achieve everything mentioned earlier, we first need two ACK clusters and add them to the same service mesh instance.

Specifically, the following prerequisites need to be prepared:

• An ASM Enterprise or Ultimate edition instance is created, with a version of 1.21.6.54 or later. For specific operations, please refer to Create an ASM Instance or Update an ASM Instance.

• Two ACK clusters are created and added to the ASM instance. For specific operations, please refer to Add a Cluster to an ASM Instance. The two clusters will be used as the production cluster and the development cluster respectively.

• Create gateways named ingressgateway and ingressgateway-dev in the production and development clusters respectively. For specific operations, please refer to Create an Ingress Gateway.

• Create gateway rules named ingressgateway and ingressgateway-dev in the istio-system namespace. For specific operations, please refer to Manage Gateway Rules.

apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: ingressgateway
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - '*'
---
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: ingressgateway-dev
  namespace: istio-system
spec:
  selector:
    istio: ingressgateway-dev
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - '*'

When adding multiple clusters to ASM, you may need some network planning and configuration to ensure mutual access between the two clusters. You can refer to Multi-cluster Management Overview prepared by ASM for guidance.

After the clusters and service mesh environment are set up, this article will use OpenTelemetry auto-instrumentation to add baggage pass-through capabilities to the services that will be deployed in the two clusters.

In both clusters, you need to perform the following steps:

Connect to the Kubernetes cluster added to the ASM instance using kubectl. Run the following command to create the opentelemetry-operator-system namespace.

kubectl create namespace opentelemetry-operator-system

Run the following command to use Helm to install the OpenTelemetry Operator in the opentelemetry-operator-system namespace. (For Helm installation steps, please refer to Install Helm.)

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts
helm install \
    --namespace=opentelemetry-operator-system \
    --version=0.46.0 \
    --set admissionWebhooks.certManager.enabled=false \
    --set admissionWebhooks.certManager.autoGenerateCert=true \
    --set manager.image.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/opentelemetry-operator" \
    --set manager.image.tag="0.92.1" \
    --set kubeRBACProxy.image.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/kube-rbac-proxy" \
    --set kubeRBACProxy.image.tag="v0.13.1" \
    --set manager.collectorImage.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/opentelemetry-collector" \
    --set manager.collectorImage.tag="0.97.0" \
    --set manager.opampBridgeImage.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/operator-opamp-bridge" \
    --set manager.opampBridgeImage.tag="0.97.0" \
    --set manager.targetAllocatorImage.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/target-allocator" \
    --set manager.targetAllocatorImage.tag="0.97.0" \
    --set manager.autoInstrumentationImage.java.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/autoinstrumentation-java" \
    --set manager.autoInstrumentationImage.java.tag="1.32.1" \
    --set manager.autoInstrumentationImage.nodejs.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/autoinstrumentation-nodejs" \
    --set manager.autoInstrumentationImage.nodejs.tag="0.49.1" \
    --set manager.autoInstrumentationImage.python.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/autoinstrumentation-python" \
    --set manager.autoInstrumentationImage.python.tag="0.44b0" \
    --set manager.autoInstrumentationImage.dotnet.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/autoinstrumentation-dotnet" \
    --set manager.autoInstrumentationImage.dotnet.tag="1.2.0" \
    --set manager.autoInstrumentationImage.go.repository="registry-cn-hangzhou.ack.aliyuncs.com/acs/opentelemetry-go-instrumentation" \
    --set manager.autoInstrumentationImage.go.tag="v0.10.1.alpha-2-aliyun" \
    opentelemetry-operator open-telemetry/opentelemetry-operator

Run the following command to check whether the opentelemetry-operator works:

kubectl get pod -n opentelemetry-operator-system

Expected Output:

NAME                                      READY   STATUS    RESTARTS   AGE
opentelemetry-operator-854fb558b5-pvllj   2/2     Running   0          1m

Create an instrumentation.yaml file with the following content.

apiVersion: opentelemetry.io/v1alpha1
kind: Instrumentation
metadata:
  name: demo-instrumentation
spec:
  propagators:
    - baggage
  sampler:
    type: parentbased_traceidratio
    argument: "1"

Run the following command to declare auto-instrumentation in the default namespace.

kubectl apply -f instrumentation.yaml

At this point, you might be wondering what all of this is about. So, let's take a brief detour to explain what baggage pass-through, auto-instrumentation, and the traffic swimlane in loose mode of ASM are. This will give you a foundation for understanding what happens next. Alternatively, you can skip ahead to Step 1.

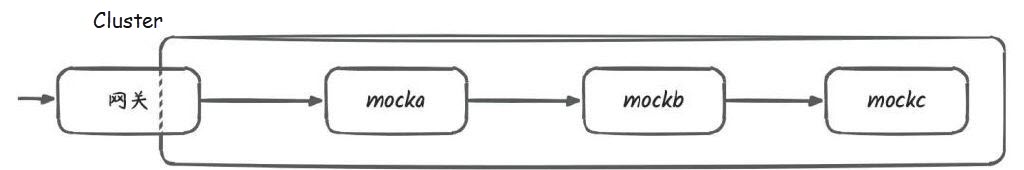

For distributed systems such as cloud-native microservice applications, the system as a whole is typically exposed to external access through a gateway, while the multiple microservices that make up the application are deployed in a cluster and call each other through cluster-local service domain names.

When a request arrives at the gateway, to respond to the request, the cluster often initiates multiple calls because services typically make remote procedure calls (RPCs) to other services they depend on during business logic processing. Each of these calls corresponds to an internal request within the cluster. Upon receiving a request, other services may continue to send requests to the services they depend on. All these requests will form a trace.

For example, the following figure shows a distributed system consisting of three services with the dependency relationship being mocka -> mockb -> mockc. When an external request arrives at mocka, it initiates additional requests from mocka to mockb and from mockb to mockc. These three requests together form a trace.

Finally, let's look at a broader explanation:

Broadly speaking, a trace represents the execution process of a transaction or process within a (distributed) system. In the OpenTracing standard, the trace is a Directed Acyclic Graph (DAG) composed of multiple spans. Each span represents a named and timed segment of continuous execution within the trace.

A trace therefore corresponds to multiple independent requests, and the only connection between them is that they were all initiated in response to the same external request. However, when a large number of requests continuously arrive at the gateway, nothing in an individual internal request indicates which trace it belongs to, unless some context information is passed along with every request in the trace.

Baggage is a standardized mechanism developed by OpenTelemetry to transfer context information across processes in traces of a distributed system.

So first of all, what is OpenTelemetry? Here is an introduction: https://opentelemetry.io/docs/what-is-opentelemetry/

OpenTelemetry is an observability framework and toolkit designed to create and manage telemetry data such as traces, metrics, and logs.

As referenced, OpenTelemetry is an observability framework and toolkit designed to manage telemetry data. So how can such an observability tool help us? This brings us to the concept of instrumentation. The following sentence is quoted from the official OpenTelemetry documentation:

In order to make a system observable, it must be instrumented: That is, code from the system's components must emit traces, metrics, and logs.

An important part of OpenTelemetry's work is the instrumentation of the application system, that is, making business code "observable", which means that the system needs to have these capabilities:

• Generate logs to connect to the log collection system

• Pass through trace information (including trace IDs) in the trace to connect to tracing systems

• Generate metrics to connect to metric collection systems such as Prometheus

The OpenTelemetry project was proposed by the CNCF community in 2019, backed by CNCF and several major cloud vendors. It has become a top-level project in CNCF and a de facto standard in the cloud-native observability domain.

Back to Baggage, it is essentially an HTTP request header named "Baggage" that has strict content conventions and can carry context data for the trace, such as tenant ID, trace ID, and security credentials, in key-value pairs. For example:

baggage: userId=alice,serverNode=DF%2028,isProduction=false

Alongside proposing the Baggage standard, the OpenTelemetry community offers various methods to pass through Baggage request headers across the same trace, allowing access to the trace context information at any request.

Typically, you can integrate OpenTelemetry SDK into the service code to pass through Baggage. For cloud-native applications deployed in Kubernetes clusters, you can use the OpenTelemetry Operator for auto-instrumentation, which does not require modifying the business code. In this article, we will adopt this approach.
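As a minimal sketch of what the header content looks like to a program, the following parses and re-serializes the example header shown above. The function names parse_baggage and format_baggage are hypothetical helpers written for this illustration, not part of any OpenTelemetry SDK, and the sketch ignores the optional ";property" metadata that a list member may carry.

```python
from __future__ import annotations

from urllib.parse import quote, unquote


def parse_baggage(header: str) -> dict[str, str]:
    """Parse a baggage header value into a dict, dropping optional ;properties."""
    entries: dict[str, str] = {}
    for member in header.split(","):
        key_value = member.strip().split(";", 1)[0]  # drop ";property" metadata
        if "=" not in key_value:
            continue  # skip empty or malformed members
        key, value = key_value.split("=", 1)
        entries[key.strip()] = unquote(value.strip())  # values are percent-encoded
    return entries


def format_baggage(entries: dict[str, str]) -> str:
    """Serialize key-value pairs back into a baggage header value."""
    return ",".join(f"{key}={quote(value, safe='')}" for key, value in entries.items())


header = "userId=alice,serverNode=DF%2028,isProduction=false"
baggage = parse_baggage(header)
print(baggage["serverNode"])    # the percent-encoded space is decoded
print(format_baggage(baggage))  # round-trips to the original header value
```

With auto-instrumentation, the application never runs code like this itself; the injected agent reads the header from each inbound request and attaches it to outbound requests in the same trace.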

We have covered a lot about traces and trace context. How does this relate to the scenario in this article? The scenario in this article is implemented based on multi-cluster management and traffic swimlanes (loose mode) of ASM.

Multi-cluster management is described in the preceding article. Let's look at traffic swimlanes (loose mode), which primarily provide the ability to create isolated environments for applications on demand (for development and testing or canary releases).

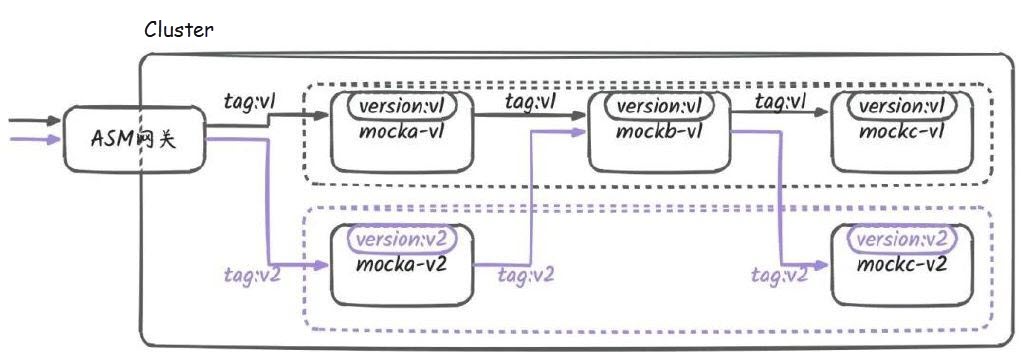

As shown in the following figure, when building an environment for development or a canary release version, you can distinguish pod versions using labels on service pods (for example, using the version label of the pod). Traffic swimlanes can isolate related versions (or other characteristics) of an application into an independent running environment (that is, a swimlane), and control the flow of requests within the entire trace, ensuring that the request targets are always the same version.

Additionally, traffic swimlanes (loose mode) offer the ability to deploy services on demand in the environment. For example, in the scenario below, the mockb service does not undergo any changes in the v2 version. In this case, there is no need to deploy the v2 version of mockb; instead, you just need to designate the v1 version of mockb as the baseline. Requests will automatically switch to the v1 version, and in subsequent requests along the same trace, they will continue to call the v2 version of the mockc service.

Traffic swimlanes (loose mode) achieve the above scenario through the following process:

1) Traffic Labeling:

After a request passes through an ASM gateway, you can define routing rules based on the virtual services of the service mesh to route the request to different versions of the system ingress service. At this point, a label can be added to the request through virtual services to identify the target version. Labeling refers to adding a specific request header to a request. For example, a tag request header is added in the preceding figure. You can distinguish the target version of a request by tag: v1 and tag: v2.

2) Traffic Label Pass-through:

ASM will maintain the pass-through of traffic labels in a trace (that is, adding the same label request header to all requests in the trace).

Since trace context pass-through is closely tied to the business code, a service mesh that is non-intrusive to the business cannot complete it on its own. ASM leverages several mature trace pass-through mechanisms from the cloud-native observability industry to accomplish this step:

• Baggage Pass-through: This is the Baggage standard mentioned earlier. Baggage is the primary standard for trace context pass-through promoted by the OpenTelemetry community, so ASM recommends using this method to pass through labels. Moreover, with the help of OpenTelemetry Operator auto-instrumentation, this may be achieved in a non-intrusive manner.

• Trace ID Pass-through: Trace ID is used in various distributed tracing standards, such as W3C TraceContext, b3, and datadog. A Trace ID is often a unique random ID used to independently identify each trace, and different requests in the same trace will have the same Trace ID.

• Custom Request Header Pass-through: In the service code of applications, some request headers with business significance may already be passed through.

When using traffic swimlanes (loose mode), you only need to specify which of these pass-through methods your application supports, and ASM will automatically configure the preservation and restoration of traffic label information to ensure that the request header for traffic labels is always present in a request trace.

3) Routing Based on Traffic Labels:

When a request is initiated in a trace, the service mesh will first restore the label information of the trace on the request (that is, add the label request header), and then route the request to the corresponding version of the service based on the label request header. Routing itself is based on virtual services.

4) Traffic Fallback:

When routing requests, the service mesh will check whether the target version exists. If the target version does not exist, the request target will fall back to a predefined baseline version. Traffic fallback is crucial for on-demand deployment, significantly improving the efficiency and flexibility of development, testing, and release processes while reducing resource consumption.
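The four steps above can be sketched as a small simulation. This is only a conceptual model under stated assumptions, not how ASM is implemented: the names pick_version and simulate_trace are hypothetical, the label header is assumed to be tag as in the figure, and the deployed versions mirror the example in which mockb has no v2 version.

```python
from __future__ import annotations

ROUTE_HEADER = "tag"  # assumed name of the traffic label request header


def pick_version(service: str, label: str,
                 deployed: dict[str, set[str]], baseline: dict[str, str]) -> str:
    """Steps 3 and 4: route by label, or fall back to the baseline version."""
    if label in deployed.get(service, set()):
        return label
    return baseline[service]


def simulate_trace(chain: list[str], label: str,
                   deployed: dict[str, set[str]],
                   baseline: dict[str, str]) -> list[tuple[str, str]]:
    """Follow one request through the call chain and record the version hit at each hop."""
    headers = {ROUTE_HEADER: label}  # step 1: the gateway labels the request
    hops = []
    for service in chain:
        # Step 2: the same label header is present on every request in the trace.
        version = pick_version(service, headers[ROUTE_HEADER], deployed, baseline)
        hops.append((service, version))
    return hops


# mocka and mockc have a v2 version; mockb only exists as v1 (the baseline).
deployed = {"mocka": {"v1", "v2"}, "mockb": {"v1"}, "mockc": {"v1", "v2"}}
baseline = {"mocka": "v1", "mockb": "v1", "mockc": "v1"}
hops = simulate_trace(["mocka", "mockb", "mockc"], "v2", deployed, baseline)
print(hops)
```

Note that the fallback at mockb does not change the label: the request to mockc still carries the v2 label, which is why the trace returns to the v2 version after passing through the baseline.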

In this example, we will use an application named mock to simulate the testing and release processes of a microservice application. The application consists of three services: mocka, mockb, and mockc. Each service declares its upstream dependency and its version information in environment variables, and together they form a mocka -> mockb -> mockc trace.

When accessing mocka, the response body will record the version information and IP addresses of the services in the trace to facilitate observation. For example:

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

The example uses the kubeconfig files of the two clusters to connect to each cluster and deploy workloads. In the following content, we assume that the kubeconfig files of the two clusters are saved in the ~/.kube/config and ~/.kube/config2 paths respectively. In this way, you can operate services in the production cluster using kubectl and services in the development and testing cluster using kubectl --kubeconfig ~/.kube/config2.

1) Enable automatic Sidecar mesh proxy injection for the default namespace. For specific operations, please refer to Manage Global Namespaces.

2) Create a mock.yaml file with the following content.

apiVersion: v1
kind: Service
metadata:
  name: mocka
  labels:
    app: mocka
    service: mocka
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mocka
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mocka-v1
  labels:
    app: mocka
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mocka
      version: v1
      ASM_TRAFFIC_TAG: v1
  template:
    metadata:
      labels:
        app: mocka
        version: v1
        ASM_TRAFFIC_TAG: v1
      annotations:
        instrumentation.opentelemetry.io/inject-java: "true"
        instrumentation.opentelemetry.io/container-names: "default"
    spec:
      containers:
      - name: default
        image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: v1
        - name: app
          value: mocka
        - name: upstream_url
          value: "http://mockb:8000/"
        ports:
        - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: mockb
  labels:
    app: mockb
    service: mockb
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mockb
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mockb-v1
  labels:
    app: mockb
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mockb
      version: v1
      ASM_TRAFFIC_TAG: v1
  template:
    metadata:
      labels:
        app: mockb
        version: v1
        ASM_TRAFFIC_TAG: v1
      annotations:
        instrumentation.opentelemetry.io/inject-java: "true"
        instrumentation.opentelemetry.io/container-names: "default"
    spec:
      containers:
      - name: default
        image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: v1
        - name: app
          value: mockb
        - name: upstream_url
          value: "http://mockc:8000/"
        ports:
        - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: mockc
  labels:
    app: mockc
    service: mockc
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mockc
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mockc-v1
  labels:
    app: mockc
    version: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mockc
      version: v1
      ASM_TRAFFIC_TAG: v1
  template:
    metadata:
      labels:
        app: mockc
        version: v1
        ASM_TRAFFIC_TAG: v1
      annotations:
        instrumentation.opentelemetry.io/inject-java: "true"
        instrumentation.opentelemetry.io/container-names: "default"
    spec:
      containers:
      - name: default
        image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: v1
        - name: app
          value: mockc
        ports:
        - containerPort: 8000

For each sample service Pod, the annotations instrumentation.opentelemetry.io/inject-java: "true" and instrumentation.opentelemetry.io/container-names: "default" are added. These annotations declare that the sample service is implemented in Java and request that the OpenTelemetry Operator perform auto-instrumentation on the container named default.

At the same time, each Pod has a version: v1 label to indicate that it belongs to the original stable version v1.

3) Run the following command to deploy the sample services.

kubectl apply -f mock.yaml

Based on the OpenTelemetry auto-instrumentation mechanism, the deployed service Pods will automatically have the capability to pass through Baggage in the trace.

ASM manages all services in distributed applications through swimlane groups and swimlanes. ASMSwimLaneGroup uniformly declares the services included in the application, the swimlane mode, the dependent trace pass-through method, and the baseline version of each service. ASMSwimLane declares the information required for an isolated environment of the application, primarily including which label is used to identify service versions and allows for the addition of selected services to the swimlane group on demand.

1) Create a swimlane-v1.yaml file with the following content.

# Declarative Configuration of the Swimlane Group
apiVersion: istio.alibabacloud.com/v1
kind: ASMSwimLaneGroup
metadata:
  name: mock
spec:
  ingress:
    gateway:
      name: ingressgateway
      namespace: istio-system
      type: ASM
  isPermissive: true # Declare that all swimlanes in the swimlane group are in loose mode
  permissiveModeConfiguration:
    routeHeader: version # The traffic label request header is version
    serviceLevelFallback: # Declare the baseline version of the service. The current cluster only has the v1 version, so the baseline version is v1.
      default/mocka: v1
      default/mockb: v1
      default/mockc: v1
    traceHeader: baggage # Complete traffic label pass-through based on baggage pass-through
  services: # Declare which services are included in the application
  - cluster:
      id: ce6ed3969d62c4baf89fb3b7f60be7f73 # The ID of the production cluster
    name: mocka
    namespace: default
  - cluster:
      id: ce6ed3969d62c4baf89fb3b7f60be7f73
    name: mockb
    namespace: default
  - cluster:
      id: ce6ed3969d62c4baf89fb3b7f60be7f73
    name: mockc
    namespace: default
---
# Declarative Configuration of Swimlanes
apiVersion: istio.alibabacloud.com/v1
kind: ASMSwimLane
metadata:
  labels:
    swimlane-group: mock # Specify the subordinate swimlane group
  name: v1
spec:
  labelSelector:
    version: v1
  services: # Specify which services in the application are included in the v1 version. For the first stable version, this swimlane necessarily includes all services within the swimlane group.
  - name: mocka
    namespace: default
  - name: mockb
    namespace: default
  - name: mockc
    namespace: default

2) Run the following command to deploy the swimlane group and the v1 lane definition for the mock application.

kubectl apply -f swimlane-v1.yaml

After the preceding two steps, the application and the version isolation environment in the cluster are ready. Finally, to launch the application, you just need to expose the application to external access through the gateway.

1) Create an ingress-vs.yaml file with the following content.

apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: swimlane-ingress-vs
  namespace: istio-system
spec:
  gateways:
  - istio-system/ingressgateway
  hosts:
  - '*'
  http:
  - route:
    - destination:
        host: mocka.default.svc.cluster.local
        subset: v1 # Enter the swimlane name v1
      headers: # Label traffic
        request:
          set:
            version: v1

• For the virtual service to take effect on the gateway, it relies on the previously created gateway rule (Gateway CR). In the prerequisites, we created an ASM gateway named ingressgateway and a gateway rule with the same name. It is this gateway rule that is referenced in the preceding virtual service.

• In the virtual service, we add a headers section to the route configuration. This section is mainly used for traffic labeling. When the routing destination is determined to be the v1 version, we set the version: v1 request header to attach the v1 version context information to the trace.

• In actual production practice, you would also configure domain names, HTTPS, throttling, and similar settings on the gateway. This article omits these topics and focuses on the development, testing, and release processes.
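To illustrate the labeling behaviour described in these notes, here is a simplified model of what a route with a headers.request.set block does to an incoming request. The apply_routes function and the dictionary layout are hypothetical simplifications for this sketch (exact, case-sensitive header matching only) and are not the Istio API.

```python
from __future__ import annotations


def apply_routes(request_headers: dict[str, str],
                 routes: list[dict]) -> tuple[str | None, dict[str, str]]:
    """Return (subset, labeled headers) for the first route whose match headers all apply."""
    for route in routes:
        match = route.get("match", {})  # no match block means the route always applies
        if all(request_headers.get(name) == value for name, value in match.items()):
            labeled = {**request_headers, **route["set_headers"]}
            return route["subset"], labeled
    return None, dict(request_headers)


# Mirrors the production rule above: every request goes to subset v1
# and gets the version: v1 label header.
production_routes = [{"subset": "v1", "set_headers": {"version": "v1"}}]
subset, headers = apply_routes({"user-agent": "curl"}, production_routes)
print(subset, headers)
```

A route that also carries a match condition on a request header works the same way, except that it only applies to requests that present that header.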

2) Run the following command to create routing rules exposed to external access for the first version of the mock application.

kubectl apply -f ingress-vs.yaml

3) Test access to the application: You can access the mock application by running curl against the gateway IP address.

curl {Gateway IP Address}

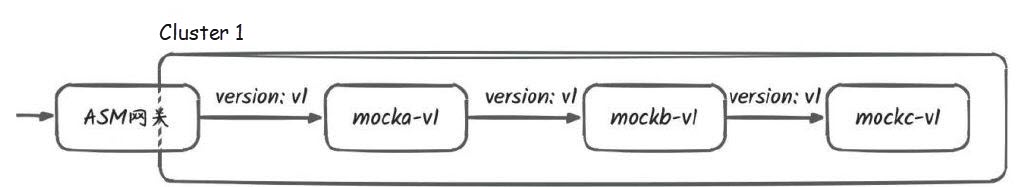

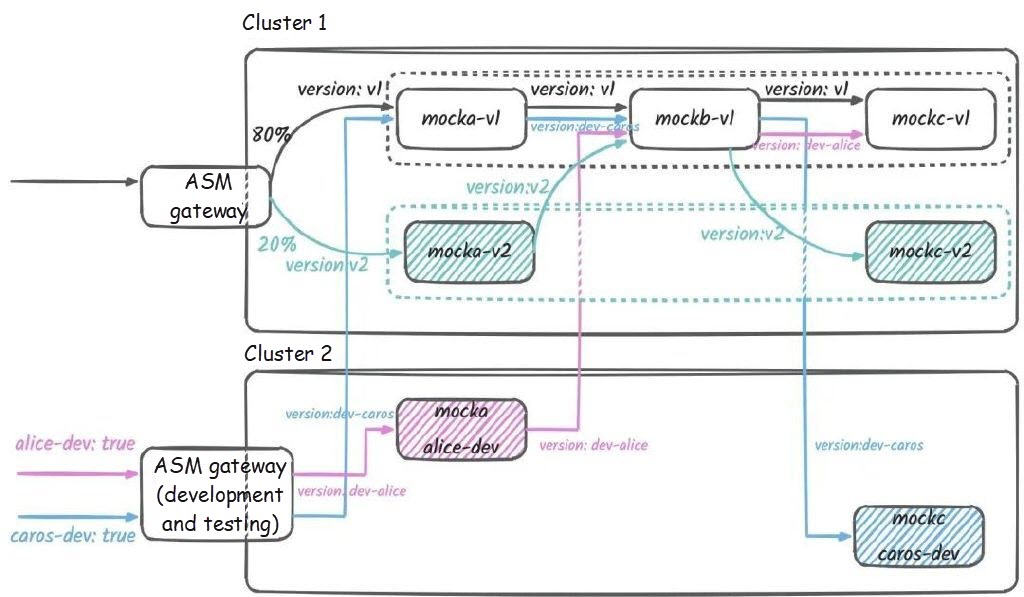

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

For information on how to obtain the ASM gateway address, please refer to View Gateway Information. From the output, you can see that the whole trace consistently passes through the v1 version of each service, indicating that the application has been launched as expected. At this point, the traffic topology in the cluster is as follows:

After the v1 version of the application is successfully launched, developers and testers will iterate on the application based on the v1 version to develop the v2 version. First, create the same mock Service in the development and testing cluster.

Run the following command:

kubectl --kubeconfig ~/.kube/config2 apply -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: mocka
  labels:
    app: mocka
    service: mocka
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mocka
---
apiVersion: v1
kind: Service
metadata:
  name: mockb
  labels:
    app: mockb
    service: mockb
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mockb
---
apiVersion: v1
kind: Service
metadata:
  name: mockc
  labels:
    app: mockc
    service: mockc
spec:
  ports:
  - port: 8000
    name: http
  selector:
    app: mockc
EOF

Now assume that the v2 version of the application needs to update the mocka and mockc services. There are two developers, Alice and Caros, who will develop mocka and mockc respectively. They can create swimlanes to build their own development environments.

For Alice, she can create an alice-dev.yaml file with the following content.

# Workload of the Development Version
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mocka-dev-alice
  labels:
    app: mocka
    version: dev-alice
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mocka
      version: dev-alice
  template:
    metadata:
      labels:
        app: mocka
        version: dev-alice
      annotations:
        instrumentation.opentelemetry.io/inject-java: "true"
        instrumentation.opentelemetry.io/container-names: "default"
    spec:
      containers:
      - name: default
        image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: dev-alice
        - name: app
          value: mocka
        - name: upstream_url
          value: "http://mockb:8000/"
        ports:
        - containerPort: 8000
---
# Declarative Configuration of Swimlanes
apiVersion: istio.alibabacloud.com/v1
kind: ASMSwimLane
metadata:
  labels:
    swimlane-group: mock # Specify the subordinate swimlane group
  name: dev-alice
spec:
  labelSelector:
    version: dev-alice
  services: # Specify which services are included in Alice's development environment (other services use the baseline version)
  - name: mocka
    namespace: default
---
# Traffic Redirection Configuration of the Development Environment
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: swimlane-ingress-vs-alice
  namespace: istio-system
spec:
  gateways:
  - istio-system/ingressgateway-dev
  hosts:
  - '*'
  http:
  - match:
    - headers:
        alice-dev:
          exact: "true"
    name: route-alice
    route:
    - destination:
        host: mocka.default.svc.cluster.local
        subset: dev-alice # The mocka service that is sent to the development version
      headers:
        request:
          set:
            version: dev-alice # Traffic labeling

Next, run the following command to create Alice's development environment:

kubectl --kubeconfig ~/.kube/config2 apply -f alice-dev.yaml

This creates a workload for the mocka service that Alice is developing and specifies the isolated environment that Alice uses through declarative definitions of the swimlane and traffic redirection rules. By adding alice-dev: true to the request header, you can access this environment. When accessing, use the IP address of the ASM gateway in the development cluster. The method to obtain the IP address is the same as mentioned above.

curl -H "alice-dev: true" { IP address of the ASM gateway in the development and testing environment}

-> mocka(version: dev-alice, ip: 192.168.0.23)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

For Caros, the process is similar. He can create a caros-dev.yaml file with the following content.

# Workload of the Development Version
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mockc-dev-caros
  labels:
    app: mockc
    version: dev-caros
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mockc
      version: dev-caros
  template:
    metadata:
      labels:
        app: mockc
        version: dev-caros
      annotations:
        instrumentation.opentelemetry.io/inject-java: "true"
        instrumentation.opentelemetry.io/container-names: "default"
    spec:
      containers:
      - name: default
        image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
        imagePullPolicy: IfNotPresent
        env:
        - name: version
          value: dev-caros
        - name: app
          value: mockc
        ports:
        - containerPort: 8000
---
# Declarative Configuration of Swimlanes
apiVersion: istio.alibabacloud.com/v1
kind: ASMSwimLane
metadata:
  labels:
    swimlane-group: mock # Specify the subordinate swimlane group
  name: dev-caros
spec:
  labelSelector:
    version: dev-caros
  services: # Specify which services are included in Caros's development environment (other services use the baseline version)
  - name: mockc
    namespace: default
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: swimlane-ingress-vs-caros
  namespace: istio-system
spec:
  gateways:
  - istio-system/ingressgateway-dev
  hosts:
  - '*'
  http:
  - match:
    - headers:
        caros-dev:
          exact: "true"
    name: route-caros
    route:
    - destination:
        host: mocka.default.svc.cluster.local
        subset: v1 # Caros's environment still uses the v1 version of mocka, but it labels the traffic with dev-caros, which will be directed to the dev-caros version subsequently.
      headers:
        request:
          set:
            version: dev-caros # Traffic labeling

Run the command to create the environment:

kubectl --kubeconfig ~/.kube/config2 apply -f caros-dev.yaml

Now, you can use different request headers to access the gateway of the development cluster. The following results can be observed:

curl -H "alice-dev: true" { IP address of the ASM gateway in the development cluster}

-> mocka(version: dev-alice, ip: 192.168.0.23)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

curl -H "caros-dev: true" { IP address of the ASM gateway in the development cluster}

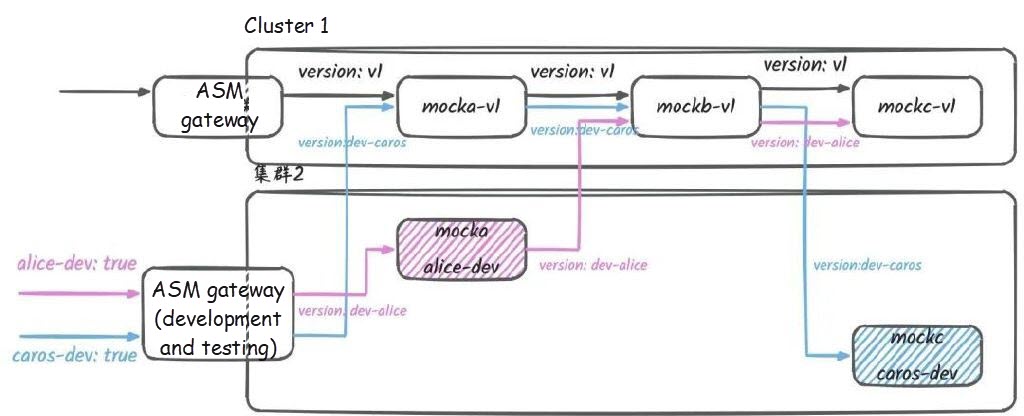

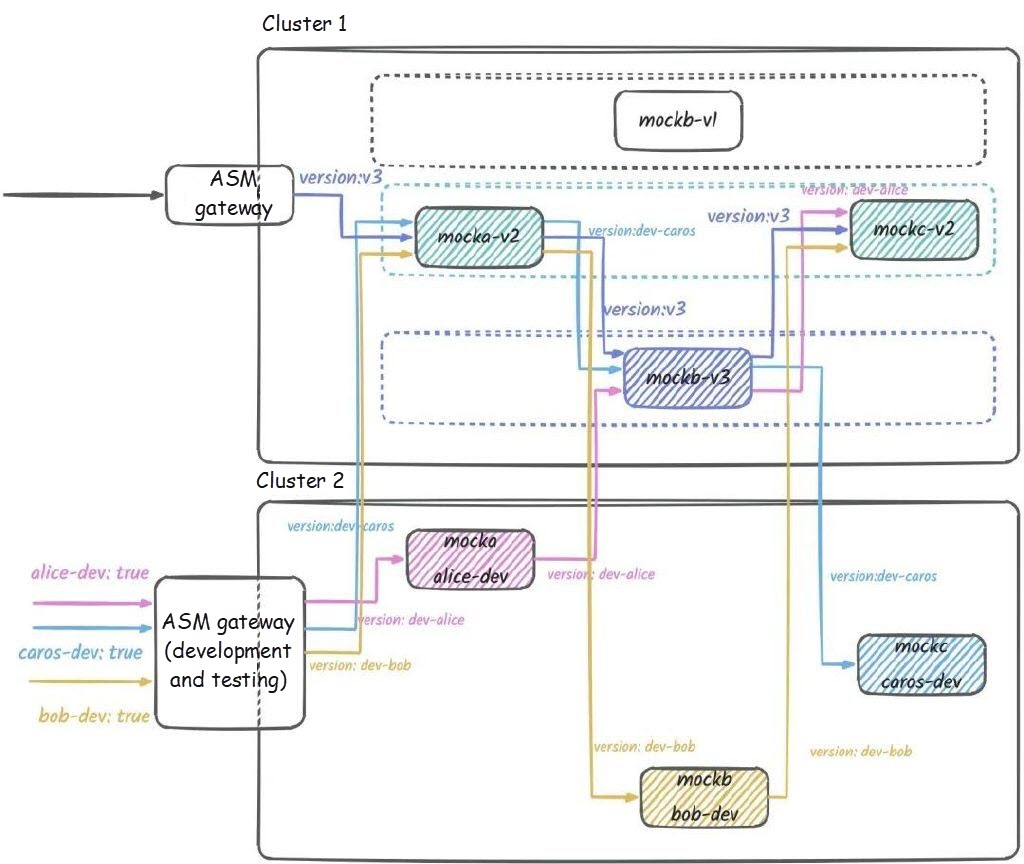

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: dev-caros, ip: 192.168.0.24)At this point, the traffic topology is as follows:

The production and development environments use their respective ASM gateways for traffic redirection, avoiding mutual interference between traffic redirection rules in the production and development/testing environments. At the same time, developers can create their own development and testing environments on demand and efficiently. In the development and testing environment, they only need to deploy the services they are developing, while other services in the application can directly use the baseline stable version.
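The per-developer files follow one pattern: only the developer name, the service under development, and the mocka subset that receives ingress traffic change. As a minimal sketch of that pattern, the hypothetical helper below (render_dev_env is an illustrative name, not part of ASM or kubectl) prints the swimlane and ingress route for one developer. The field names are copied from the manifests in this article, and the Deployment is deliberately left out because its image and environment variables differ per service.

```shell
# Hypothetical helper: prints the ASMSwimLane and VirtualService of one
# developer environment, following the pattern of the dev manifests above.
# Usage: render_dev_env <developer> <service> <entry-subset-of-mocka>
render_dev_env() {
  dev="$1"; svc="$2"; entry="$3"
  cat <<EOF
apiVersion: istio.alibabacloud.com/v1
kind: ASMSwimLane
metadata:
  labels:
    swimlane-group: mock
  name: dev-${dev}
spec:
  labelSelector:
    version: dev-${dev}
  services:
  - name: ${svc}
    namespace: default
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: swimlane-ingress-vs-${dev}
  namespace: istio-system
spec:
  gateways:
  - istio-system/ingressgateway-dev
  hosts:
  - '*'
  http:
  - match:
    - headers:
        ${dev}-dev:
          exact: "true"
    name: route-${dev}
    route:
    - destination:
        host: mocka.default.svc.cluster.local
        subset: ${entry}
      headers:
        request:
          set:
            version: dev-${dev}
EOF
}
```

For example, `render_dev_env caros mockc v1` reproduces the swimlane and route portion of caros-dev.yaml. The entry subset is dev-<name> only when the developer is working on mocka itself; otherwise it is the current baseline version of mocka.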

After development is complete, you can optionally destroy a development environment by using the same YAML file that created it.

kubectl --kubeconfig ~/.kube/config2 delete -f alice-dev.yaml

kubectl --kubeconfig ~/.kube/config2 delete -f caros-dev.yaml

Alternatively, you can keep these two development environments. In this article, we keep them for continued use by developers and testers.

After the development and testing are completed, O&M personnel can publish the new mocka and mockc services to the production cluster, create a new v2 version for the application, and enable the canary release process for the v2 version. The entire process is similar to that of deploying the v1 version.

1) Create a mock-v2.yaml file with the following content.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mocka-v2
      labels:
        app: mocka
        version: v2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mocka
          version: v2
          ASM_TRAFFIC_TAG: v2
      template:
        metadata:
          labels:
            app: mocka
            version: v2
            ASM_TRAFFIC_TAG: v2
          annotations:
            instrumentation.opentelemetry.io/inject-java: "true"
            instrumentation.opentelemetry.io/container-names: "default"
        spec:
          containers:
          - name: default
            image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
            imagePullPolicy: IfNotPresent
            env:
            - name: version
              value: v2
            - name: app
              value: mocka
            - name: upstream_url
              value: "http://mockb:8000/"
            ports:
            - containerPort: 8000
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mockc-v2
      labels:
        app: mockc
        version: v2
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mockc
          version: v2
          ASM_TRAFFIC_TAG: v2
      template:
        metadata:
          labels:
            app: mockc
            version: v2
            ASM_TRAFFIC_TAG: v2
          annotations:
            instrumentation.opentelemetry.io/inject-java: "true"
            instrumentation.opentelemetry.io/container-names: "default"
        spec:
          containers:
          - name: default
            image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
            imagePullPolicy: IfNotPresent
            env:
            - name: version
              value: v2
            - name: app
              value: mockc
            ports:
            - containerPort: 8000

2) Run the following command to deploy the instance service.

kubectl apply -f mock-v2.yaml

Compared with the v1 version of the application, the v2 iteration does not add any new services and only iterates the mocka and mockc services. Therefore, the deployment content only includes the deployment of these two services with the version: v2 label.

In the same way, when iterating a new version of the application, we only need to create a traffic swimlane in a declarative way based on its corresponding workload label and the services included in the iteration.

1) Create a swimlane-v2.yaml file with the following content.

    # Declarative Configuration of the V2 Swimlane
    apiVersion: istio.alibabacloud.com/v1
    kind: ASMSwimLane
    metadata:
      labels:
        swimlane-group: mock # Specify the subordinate swimlane group
      name: v2
    spec:
      labelSelector:
        version: v2
      services: # Specify which services (mocka and mockc) in the application are included in the v2 version.
      - name: mocka
        namespace: default
      - name: mockc
        namespace: default

2) Run the following command to deploy the v2 swimlane definition for the mock application.

kubectl apply -f swimlane-v2.yaml

Compared with the v1 swimlane, the v2 swimlane contains only mocka and mockc, and uses version: v2 to match the workloads of the corresponding version.

After the v2 environment is deployed, you can modify the virtual service that is already active on the gateway to route traffic to v2 in a certain proportion, thus completing a canary release process.

Modify the ingress-vs.yaml file created above to the following content:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: swimlane-ingress-vs
      namespace: istio-system
    spec:
      gateways:
      - istio-system/ingressgateway
      hosts:
      - '*'
      http:
      - route:
        - destination:
            host: mocka.default.svc.cluster.local
            subset: v1 # Enter the swimlane name v1
          weight: 80
          headers: # Label traffic
            request:
              set:
                version: v1
        - destination:
            host: mocka.default.svc.cluster.local
            subset: v2 # Enter the swimlane name v2
          weight: 20
          headers: # Label traffic
            request:
              set:
                version: v2

Compared with the original version, this virtual service adds a routing destination (mocka of the v2 version), and adds version: v2 to the request (request labeling), to indicate that subsequent requests in the trace should remain within the v2 swimlane. The weight field sets an 80:20 traffic ratio between v1 and v2, directing a small portion of traffic to v2 for online testing of its stability.
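Because only the two weight values change between canary stages, the virtual service can be generated from a single number. The following is a minimal sketch, assuming a hypothetical helper named render_canary_vs (not an ASM or Istio tool) that takes the share of traffic for v2 and derives the v1 weight so that the two always add up to 100. All resource names are the ones used in this article.

```shell
# Hypothetical helper: prints ingress-vs.yaml for a given share of v2 traffic.
# Usage: render_canary_vs <v2-weight, integer 0..100>
render_canary_vs() {
  v2_weight="$1"
  # Reject empty input, non-digits, and leading zeros.
  case "$v2_weight" in
    ''|*[!0-9]*|0?*) echo "weight must be an integer from 0 to 100" >&2; return 1 ;;
  esac
  if [ "$v2_weight" -gt 100 ]; then
    echo "weight must be an integer from 0 to 100" >&2; return 1
  fi
  v1_weight=$((100 - v2_weight))
  cat <<EOF
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: swimlane-ingress-vs
  namespace: istio-system
spec:
  gateways:
  - istio-system/ingressgateway
  hosts:
  - '*'
  http:
  - route:
    - destination:
        host: mocka.default.svc.cluster.local
        subset: v1
      weight: ${v1_weight}
      headers:
        request:
          set:
            version: v1
    - destination:
        host: mocka.default.svc.cluster.local
        subset: v2
      weight: ${v2_weight}
      headers:
        request:
          set:
            version: v2
EOF
}
```

A stage could then be applied with `render_canary_vs 20 | kubectl apply -f -`. Deriving the v1 weight instead of passing both values avoids stages where the two weights drift apart.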

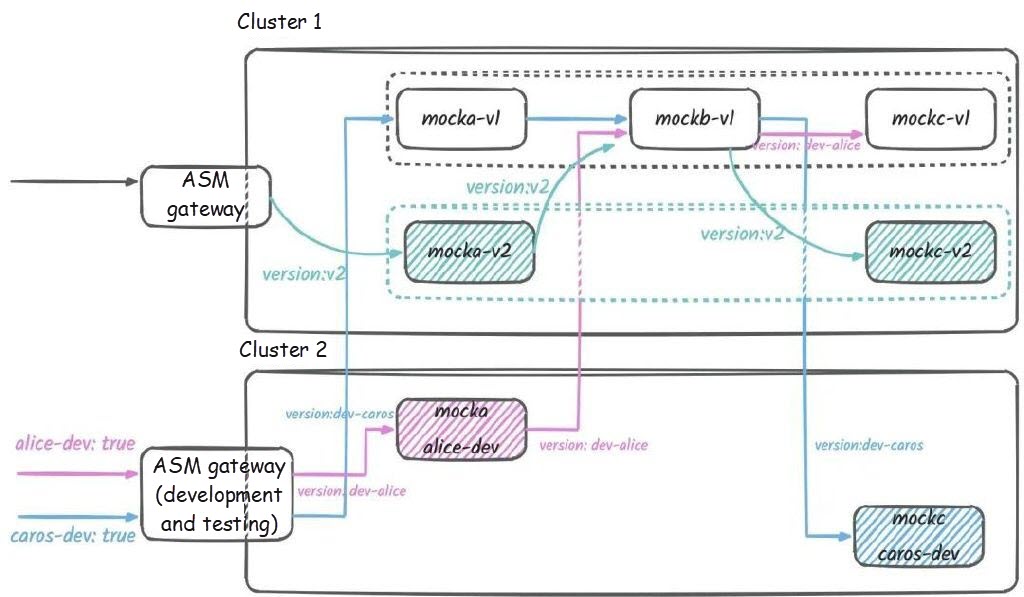

At this point, continuously access the gateway of the production cluster, and you will see that traffic is sent to the two versions at an approximate 80:20 ratio. The trace for the v1 application is v1 -> v1 -> v1, while for the v2 application, it is v2 -> v1 -> v2.

for i in {1..100}; do curl http://{IP address of the ASM gateway }/ ; echo ''; sleep 1; done;

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v2, ip: 192.168.0.30)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v2, ip: 192.168.0.31)

-> mocka(version: v1, ip: 192.168.0.26)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v1, ip: 192.168.0.32)

-> mocka(version: v2, ip: 192.168.0.30)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v2, ip: 192.168.0.31)

...

At this point, the traffic topology in the cluster is as follows:

After the v2 version enters canary release, you can shift traffic from v1 to v2 incrementally by adjusting the weights of the two route destinations in the ingress-vs.yaml file. Once the weight for v2 reaches 100, no more requests are sent to the v1 swimlane.
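Before raising the v2 weight, it helps to confirm that the observed split roughly matches the configured one. The sketch below assumes the response format of the mock services shown in the sample output (each line starts with `-> mocka(version: ...`); tally_entry_versions is a hypothetical name chosen for illustration.

```shell
# Reads response lines on stdin and counts which mocka version served each
# request. Lines that do not start with the mocka hop (for example, the blank
# lines printed by echo '') are ignored.
tally_entry_versions() {
  sed -n 's/^-> mocka(version: \([^,]*\),.*/\1/p' |
    sort |
    uniq -c |
    awk '{ printf "%s %d\n", $2, $1 }'
}
```

Piping the output of the curl loop above into `tally_entry_versions` prints one line per version with its request count. With a small sample the counts only approximate the configured weights, so a longer run gives a more reliable picture.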

When all traffic has been switched to v2, the ingress-vs.yaml file should be as follows:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: swimlane-ingress-vs
      namespace: istio-system
    spec:
      gateways:
      - istio-system/ingressgateway
      hosts:
      - '*'
      http:
      - route:
        - destination:
            host: mocka.default.svc.cluster.local
            subset: v2
          headers:
            request:
              set:
                version: v2

At this point, the traffic topology in the cluster is as follows:

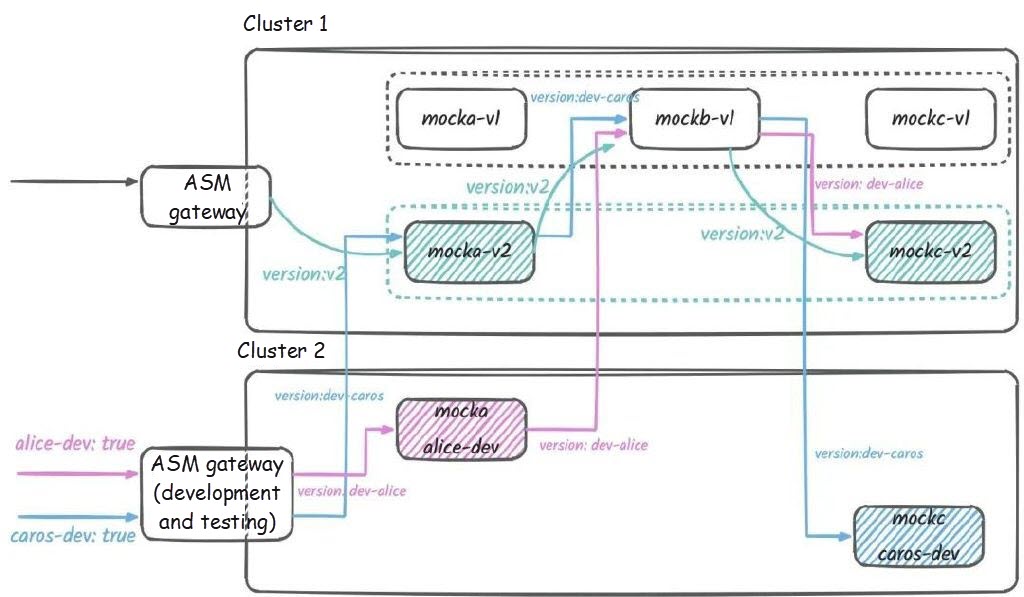

If you access the gateway of the production cluster, you can see that all the requests are returned as v2 -> v1 -> v2 (the request output is not shown here). The v2 version is officially launched.
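The arrow notation used in this article (for example, v2 -> v1 -> v2) can be derived mechanically from a response line. As a minimal sketch, assuming the response format shown in the earlier sample output, the hypothetical helper trace_path keeps only the version of each hop:

```shell
# Reduces one response line to the "a -> b -> c" version notation.
# Usage: trace_path '<response line>'
trace_path() {
  printf '%s\n' "$1" |
    grep -o 'version: [^,)]*' |   # one "version: X" per hop
    sed 's/^version: //' |        # keep only X
    paste -sd'>' - |              # join hops on one line
    sed 's/>/ -> /g'
}
```

Given a response produced after the switch, such as the v2 lines in the canary output above, the function prints `v2 -> v1 -> v2`.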

At the end of the release, since the online mocka and mockc services are updated, we need to switch the baseline versions of these two services and clean up the v1 versions of mocka and mockc that are no longer used.

Modify the swimlane-v1.yaml file created above (this file contains the swimlane group and the definition of the v1 swimlane) and replace it with the following content:

    # Declarative Configuration of the Swimlane Group
    apiVersion: istio.alibabacloud.com/v1
    kind: ASMSwimLaneGroup
    metadata:
      name: mock
    spec:
      ingress:
        gateway:
          name: ingressgateway
          namespace: istio-system
          type: ASM
      isPermissive: true # Declare that all swimlanes in the swimlane group are in loose mode
      permissiveModeConfiguration:
        routeHeader: version # The traffic label request header is version
        serviceLevelFallback: # Declare the baseline version of the service and update the baseline version of mocka and mockc to v2
          default/mocka: v2
          default/mockb: v1
          default/mockc: v2
        traceHeader: baggage # Complete traffic label pass-through based on baggage pass-through
      services: # Declare which services are included in the application
      - cluster:
          id: ce6ed3969d62c4baf89fb3b7f60be7f73 # The ID of the production cluster
        name: mocka
        namespace: default
      - cluster:
          id: ce6ed3969d62c4baf89fb3b7f60be7f73
        name: mockb
        namespace: default
      - cluster:
          id: ce6ed3969d62c4baf89fb3b7f60be7f73
        name: mockc
        namespace: default
    ---
    # Declarative Configuration of Swimlanes
    apiVersion: istio.alibabacloud.com/v1
    kind: ASMSwimLane
    metadata:
      labels:
        swimlane-group: mock # Specify the subordinate swimlane group
      name: v1
    spec:
      labelSelector:
        version: v1
      services: # Specify which services in the application are included in the v1 version. After the baseline is updated, the mocka and mockc services can be removed from the v1 swimlane.
      - name: mockb
        namespace: default

The preceding declarative configuration update adjusts the baseline version of mocka and mockc to v2 and removes mocka and mockc from the v1 swimlane. This indicates that the v1 versions of these two services are discarded.
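The effect of a baseline change on every swimlane can be reasoned about with a small model of the rule described in this article: a labeled request goes to the labeled version of a service if the swimlane contains that service, and to the baseline version otherwise. The sketch below is a simplified illustration only, not how ASM implements routing. The function names are hypothetical, and the two tables mirror the state after the update above, with the swimlanes v1, v2, dev-alice, and dev-caros in place.

```shell
# Baseline versions, mirroring serviceLevelFallback after the update.
baseline_of() {
  case "$1" in
    mocka|mockc) echo v2 ;;
    mockb)       echo v1 ;;
  esac
}

# lane_has <swimlane> <service>: mirrors the services list of each swimlane.
lane_has() {
  case "$1/$2" in
    v1/mockb)          return 0 ;;
    v2/mocka|v2/mockc) return 0 ;;
    dev-alice/mocka)   return 0 ;;
    dev-caros/mockc)   return 0 ;;
    *)                 return 1 ;;
  esac
}

# resolve_version <label> <service>: version serving a request with <label>.
resolve_version() {
  if lane_has "$1" "$2"; then echo "$1"; else baseline_of "$2"; fi
}

# trace_for <label>: path of a labeled request through mocka, mockb, mockc.
trace_for() {
  echo "$(resolve_version "$1" mocka) -> $(resolve_version "$1" mockb) -> $(resolve_version "$1" mockc)"
}
```

Under these assumptions, `trace_for dev-alice` prints `dev-alice -> v1 -> v2`: Alice's environment picks up the new mockc baseline without any change to her own files.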

At this point, all traffic no longer passes through the v1 versions of mocka and mockc. The traffic topology in the cluster is as follows:

By accessing Alice's development environment, you can observe the switch of the baseline version:

curl -H "alice-dev: true" { IP address of the ASM gateway in the development cluster}

-> mocka(version: dev-alice, ip: 192.168.0.23)-> mockb(version: v1, ip: 192.168.0.20)-> mockc(version: v2, ip: 192.168.0.31)

Finally, you can delete the v1 deployments of these two services to take them offline:

kubectl delete deployment mocka-v1 mockc-v1

After the v2 version is officially launched, the application enters the development iteration of the v3 version. We assume that v3 updates only the mockb service. A developer named Bob is responsible for mockb and is now working on a new round of development for it. He can create his own development environment in the same way as Alice and Caros did:

Create a bob-dev.yaml file with the following content:

    # Workload of the Development Version
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mockb-dev-bob
      labels:
        app: mockb
        version: dev-bob
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mockb
          version: dev-bob
      template:
        metadata:
          labels:
            app: mockb
            version: dev-bob
          annotations:
            instrumentation.opentelemetry.io/inject-java: "true"
            instrumentation.opentelemetry.io/container-names: "default"
        spec:
          containers:
          - name: default
            image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
            imagePullPolicy: IfNotPresent
            env:
            - name: version
              value: dev-bob
            - name: app
              value: mockb
            - name: upstream_url
              value: "http://mockc:8000/"
            ports:
            - containerPort: 8000
    ---
    # Declarative Configuration of Swimlanes
    apiVersion: istio.alibabacloud.com/v1
    kind: ASMSwimLane
    metadata:
      labels:
        swimlane-group: mock # Specify the subordinate swimlane group
      name: dev-bob
    spec:
      labelSelector:
        version: dev-bob
      services: # Specify which services are included in Bob's development environment (other services use the baseline version)
      - name: mockb
        namespace: default
    ---
    # Traffic Redirection Configuration of the Development Environment
    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: swimlane-ingress-vs-bob
      namespace: istio-system
    spec:
      gateways:
      - istio-system/ingressgateway-dev
      hosts:
      - '*'
      http:
      - match:
        - headers:
            bob-dev:
              exact: "true"
        name: route-bob
        route:
        - destination:
            host: mocka.default.svc.cluster.local
            subset: v2 # The mocka service that is sent to the baseline version
          headers:
            request:
              set:
                version: dev-bob # Traffic labeling, specifying that subsequent traffic is directed to Bob's development environment

Next, run the following command to create his development environment:

kubectl --kubeconfig ~/.kube/config2 apply -f bob-dev.yaml

This creates a workload for the mockb service that Bob is developing and defines his isolated environment declaratively through the swimlane and the traffic redirection rule. Requests that carry the header bob-dev: true reach this environment. Send them to the IP address of the ASM gateway in the development cluster, which you can obtain in the same way as described above.

curl -H "bob-dev: true" { IP address of the ASM gateway in the development and testing environment}

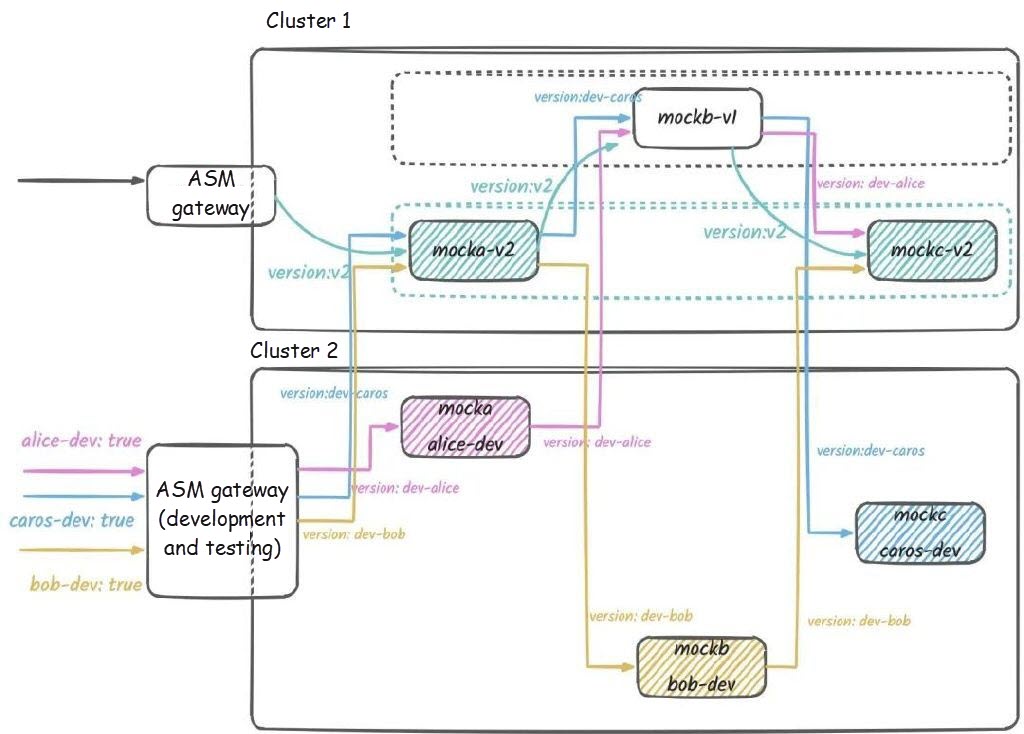

-> mocka(version: v2, ip: 192.168.0.30)-> mockb(version: dev-bob, ip: 192.168.0.80)-> mockc(version: v2, ip: 192.168.0.31)After Bob's development and testing environment is added, the traffic topology in the cluster is as follows:

Finally, we launch the v3 version of the application, and O&M personnel will perform similar operations as when releasing the v2 version.

1) Create a mock-v3.yaml file with the following content.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mockb-v3
      labels:
        app: mockb
        version: v3
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mockb
          version: v3
          ASM_TRAFFIC_TAG: v3
      template:
        metadata:
          labels:
            app: mockb
            version: v3
            ASM_TRAFFIC_TAG: v3
          annotations:
            instrumentation.opentelemetry.io/inject-java: "true"
            instrumentation.opentelemetry.io/container-names: "default"
        spec:
          containers:
          - name: default
            image: registry-cn-hangzhou.ack.aliyuncs.com/acs/asm-mock:v0.1-java
            imagePullPolicy: IfNotPresent
            env:
            - name: version
              value: v3
            - name: app
              value: mockb
            - name: upstream_url
              value: "http://mockc:8000/"
            ports:
            - containerPort: 8000

2) Run the following command to deploy the instance service.

kubectl apply -f mock-v3.yaml

In v3, only mockb is iterated. Therefore, the deployment content only includes the deployment of mockb with the version: v3 label.

1) Create a swimlane-v3.yaml file with the following content.

    # Declarative Configuration of the V3 Swimlane
    apiVersion: istio.alibabacloud.com/v1
    kind: ASMSwimLane
    metadata:
      labels:
        swimlane-group: mock # Specify the subordinate swimlane group
      name: v3
    spec:
      labelSelector:
        version: v3
      services: # Specify which service iterations are included in the v3 version (only mockb has been iterated)
      - name: mockb
        namespace: default

2) Run the following command to deploy the v3 swimlane definition for the mock application.

kubectl apply -f swimlane-v3.yaml

Compared with the v2 swimlane, the v3 swimlane contains only mockb, and uses version: v3 to match the workload of the corresponding version.

After the v3 environment is deployed, you can modify the virtual service that is already active on the gateway to route traffic to the v3 version of the application.

Modify the ingress-vs.yaml file created above to the following content:

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: swimlane-ingress-vs
      namespace: istio-system
    spec:
      gateways:
      - istio-system/ingressgateway
      hosts:
      - '*'
      http:
      - route:
        - destination:
            host: mocka.default.svc.cluster.local
            subset: v2 # Direct traffic to the latest version of mocka (currently v2)
          headers: # Label traffic
            request:
              set:
                version: v3

Here, the process of canary release (which would normally involve switching the traffic ratio between v2 and v3) is omitted. Instead, the v3 version of the application is directly launched.

After launching, similar to when v2 was launched, the baseline versions of the services recorded in the swimlane group are modified. Modify the swimlane-v1.yaml file created above to the following content:

    # Declarative Configuration of the Swimlane Group
    apiVersion: istio.alibabacloud.com/v1
    kind: ASMSwimLaneGroup
    metadata:
      name: mock
    spec:
      ingress:
        gateway:
          name: ingressgateway
          namespace: istio-system
          type: ASM
      isPermissive: true # Declare that all swimlanes in the swimlane group are in loose mode
      permissiveModeConfiguration:
        routeHeader: version # The traffic label request header is version
        serviceLevelFallback: # Declare the baseline version of services and update the baseline version of mockb to v3
          default/mocka: v2
          default/mockb: v3
          default/mockc: v2
        traceHeader: baggage # Complete traffic label pass-through based on baggage pass-through
      services: # Declare which services are included in the application
      - cluster:
          id: ce6ed3969d62c4baf89fb3b7f60be7f73 # The ID of the production cluster
        name: mocka
        namespace: default
      - cluster:
          id: ce6ed3969d62c4baf89fb3b7f60be7f73
        name: mockb
        namespace: default
      - cluster:
          id: ce6ed3969d62c4baf89fb3b7f60be7f73
        name: mockc
        namespace: default

Run the following command to update the declaration of the service baseline version in the swimlane group:

kubectl apply -f swimlane-v1.yaml

When you access the ASM gateway in the production cluster again, you will find that the trace has changed to v2 -> v3 -> v2.

curl {IP Address of the ASM gateway}

-> mocka(version: v2, ip: 192.168.0.30)-> mockb(version: v3, ip: 192.168.0.19)-> mockc(version: v2, ip: 192.168.0.31)

At this point, the traffic topology in the cluster is as follows:

At this point, no v1 version of any service still accepts traffic. The initial v1 version of the application can therefore be taken completely offline, which involves deleting the remaining v1 deployment of mockb and the v1 swimlane declaration.

kubectl delete deployment mockb-v1

kubectl delete asmswimlane v1

For future iterations of this application, you can repeat the process above. All operations in this article are declarative YAML definitions applied through the Kubernetes API, which makes them easy to integrate with existing CI/CD systems such as Argo CD and Apsara DevOps to automate the release process.
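In an automated pipeline, the question of which deployments no longer serve any traffic can be made explicit instead of being checked by eye. The following is a minimal sketch under stated assumptions: the caller supplies the service/version pairs that are still reachable (the current baselines plus the members of any swimlane that still receives labeled traffic), and a list of deployments as "name service version" lines. The helper name unused_deployments and both input formats are hypothetical.

```shell
# Prints the deployments whose service/version pair is not in the in-use set.
# Usage: unused_deployments "<svc>/<ver> <svc>/<ver> ..." < deployments.txt
# Each stdin line has the form: <deployment-name> <service> <version>
unused_deployments() {
  in_use=" $1 "
  while read -r name svc ver; do
    case "$in_use" in
      *" $svc/$ver "*) ;;        # still reachable, keep it
      *) echo "$name" ;;         # candidate for deletion
    esac
  done
}
```

With the production state after the v3 launch, the in-use set is `mocka/v2 mockb/v3 mockc/v2`, and feeding in the four production deployments prints only `mockb-v1`, matching the manual cleanup above. The deployment list could be produced with something like `kubectl get deployments --no-headers -o custom-columns=NAME:.metadata.name,APP:.metadata.labels.app,VERSION:.metadata.labels.version`; review the result before deleting anything.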

This article introduces the traffic swimlanes and multi-cluster management capabilities of ASM, which allow continuous application iteration while also enabling individual developers and testers to build isolated application environments in separate development and testing clusters.

Furthermore, isolated environments can be deployed on demand, and all operations can be performed using declarative configuration YAML, significantly enhancing the efficiency of application development, testing, and deployment processes while notably reducing resource consumption when setting up deployment environments. When implementing traffic swimlanes (loose mode) based on the OpenTelemetry Baggage pass-through solution, the whole solution can achieve a non-intrusive effect on the business code, reducing the burden of development and O&M.

The prerequisite for this solution is to establish network connectivity between the two clusters, allowing services in both clusters to access each other. For this best practice, you can choose the method of "connecting cross-region networks through ASM east-west gateways" to configure network interconnection. Since the traffic between the development/testing cluster and the production cluster is not particularly high, this solution can significantly reduce costs.

For more information, please refer to Alibaba Cloud Service Mesh (ASM) Multi-cluster Practice (Part 1): Multi-cluster Management Overview.
