By KubeVela – Project Maintainer

As an implementation of the Open Application Model (OAM) on Kubernetes, KubeVela evolved from oam-kubernetes-runtime in just over half a year and is gaining significant momentum. It has topped GitHub's Go trending list, reached the front page of Hacker News, and gained end users worldwide from a range of industries, including MasterCard, Springer Nature, 4Paradigm, SILOT, and Upbound. Moreover, commercial products based on KubeVela have emerged, such as Oracle Cloud and Napptive. At the end of March 2021, the KubeVela community announced the release of version 1.0 with all APIs stable, officially marking its move to enterprise-grade production readiness.

If you don't follow the cloud-native field closely or don't know much about KubeVela yet, don't worry. This article uses the release as an opportunity to give a detailed overview of KubeVela's development, explain its core ideas and vision, and reveal the principles behind this rising cloud-native application management platform.

First of all, what is KubeVela? In simple terms, KubeVela is a "programmable" cloud-native application management and delivery platform.

What is programmable? What is the relationship between Kubernetes and KubeVela? What problems can it help solve?

Platform-as-a-Service (PaaS) systems, such as Cloud Foundry and Heroku, have won over many users with their simple and efficient application deployment experience since their launch. Today, however, it is Kubernetes that dominates the cloud-native world. What problems did earlier PaaS systems, and even Docker, run into?

Anyone who has used a PaaS will be keenly aware of one of its fundamental flaws: the "capability dilemma" of PaaS systems.

Figure 1 - The Capability Dilemma of PaaS System

As shown in figure 1, when you start using a PaaS system, the experience is often very good, and problems can be solved appropriately. Over time, however, a very frustrating situation arises: the application's demands begin to exceed what the PaaS can provide. Once this happens, users' satisfaction with the PaaS system collapses. Either redeveloping the platform to add features or modifying the application to fit the platform requires a huge investment for very little return. Worse, at this point every user begins to lose confidence in the platform and thinks, "Who knows whether the next system will be any better, or whether we'll have to overhaul our applications again soon?"

This problem is the main reason PaaS never became mainstream, even though it had all the elements necessary for cloud-native.

Kubernetes stands out in a different way. Although it is often criticized as "complex," its advantages become more apparent as applications grow more complex. Once user requirements call for custom resource definition (CRD) controllers, you'll be glad you have Kubernetes.

Kubernetes is a powerful and robust platform for accessing infrastructure, often described as a "platform for building platforms." Its APIs and ways of working are naturally unsuited to direct interaction with end users. However, Kubernetes can access any infrastructure in a consistent way and gives platform engineers many ways to build upper-layer systems, such as a PaaS, on top of it. This overwhelmingly powerful way of supplying infrastructure makes even the most sophisticated PaaS systems look inconvenient by comparison. For the many large enterprises struggling to build internal application platforms, this is a welcome relief, and these enterprises are exactly the users that PaaS vendors want to attract.

When a large enterprise has to choose between a PaaS system and Kubernetes, the platform team often makes the decision. The platform team's opinion matters, but that doesn't mean end users' views can be ignored. In any organization, the business teams that directly create value have the loudest voice in the end, even if they speak up a little later in the process.

Therefore, in most cases, a platform team that adopts Kubernetes will not ask business teams to learn Kubernetes directly. Instead, it will build a "cloud-native" PaaS on top of Kubernetes and use it to serve the business side.

The resulting cloud-native PaaS is almost unchanged from its predecessors. The only difference is that today's cloud-native PaaS is built on Kubernetes, which makes it much easier to implement.

What about the actual practice?

A PaaS built on Kubernetes sounds good, but in practice the process is far from smooth. At best, it is still PaaS development: about 80% of the work goes into designing and developing the UI, and the rest goes into installing and maintaining Kubernetes plug-ins. Even more regrettably, a PaaS built this way is not fundamentally different from earlier PaaS systems. Every time user requirements change, a great deal of time is spent on redesign, frontend changes, and release scheduling. As a result, the ever-changing ecosystem and unlimited extensibility of Kubernetes are sealed off behind the PaaS we have built. So what value does Kubernetes actually bring to such a PaaS?

In trying to escape the inherent limitations of PaaS, we end up introducing a new PaaS with new limitations. This is not only a dilemma but also a core issue many enterprises face when adopting cloud-native technologies: users are once again locked into a fixed layer of abstractions and a fixed set of capabilities. The benefit of cloud-native technology shows up only as easier platform development, which, unfortunately, means little to business users.

What's more, the introduction of cloud-native technology and Kubernetes puts operations and maintenance (O&M) engineers in an awkward position. Their mastery of operational best practices for the business used to be one of the enterprise's most important assets. After cloud-native adoption, however, much of that work is taken over by Kubernetes. That is why many people say Kubernetes will replace O&M. This is an exaggeration, but it does reflect the anxiety the trend has created. Looked at another way: how should application O&M experience and best practices be implemented in a cloud-native environment? Take a simple workload as an example. Which fields of a Kubernetes Deployment object are exposed to users shows up in the PaaS UI, but that decision should not be left to the frontend developer.

Alibaba is one of the industry's pioneers in cloud-native technologies, so the cloud-native application platform challenges described above surfaced there long ago. At the end of 2019, the Alibaba Foundational Technologies Team worked with the R&D Efficiency Team to explore and address this issue. They proposed the concept of a "programmable" application platform, embodied in the open-source projects OAM and KubeVela. This approach has become the mainstream way for Alibaba to build application platforms quickly.

"Programmable" means that when building the upper-layer platform, you do not overlay abstractions directly on Kubernetes, not even a UI. Instead, the capabilities provided by the infrastructure are abstracted and managed as code through the CUE templating language.

For example, suppose a PaaS at Alibaba wants to offer users a capability called web service. It covers any externally accessible service that is deployed as a Kubernetes Deployment plus a Service, with configuration items such as the image and port exposed to users.
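To make this concrete, here is a minimal sketch of what such a capability template does, written in Python rather than CUE purely for illustration. It exposes only `image` and `port` (plus a component name) to users and renders a Deployment and a Service from them, while everything else (labels, replica count, Service wiring) is fixed by the template author. The function name `render_webservice`, the `app` label, and the default of one replica are assumptions, not part of KubeVela's API.

```python
from typing import Any


def render_webservice(name: str, image: str, port: int = 80) -> list[dict[str, Any]]:
    """Render a Deployment and a Service from the user-facing parameters.

    Besides the component name, only `image` and `port` are exposed; labels,
    replica count, and the Service wiring are fixed by the template author.
    """
    labels = {"app": name}  # hypothetical label scheme chosen by the template author
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": 1,  # assumed default, not exposed to users in this sketch
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": dict(labels),  # routes traffic to the Deployment's pods
            "ports": [{"port": port, "targetPort": port}],
        },
    }
    return [deployment, service]


resources = render_webservice("frontend", image="nginx:1.21", port=8080)
print([r["kind"] for r in resources])  # ['Deployment', 'Service']
```

The point of the design is that a user only ever sees two or three knobs, while the platform team can change how those knobs map onto Kubernetes objects without touching the UI.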

In the traditional approach, you might implement a custom resource definition (CRD) called web service and have its controller encapsulate the Deployment and the Service. However, this inevitably leads back to the capability dilemma of the PaaS system:

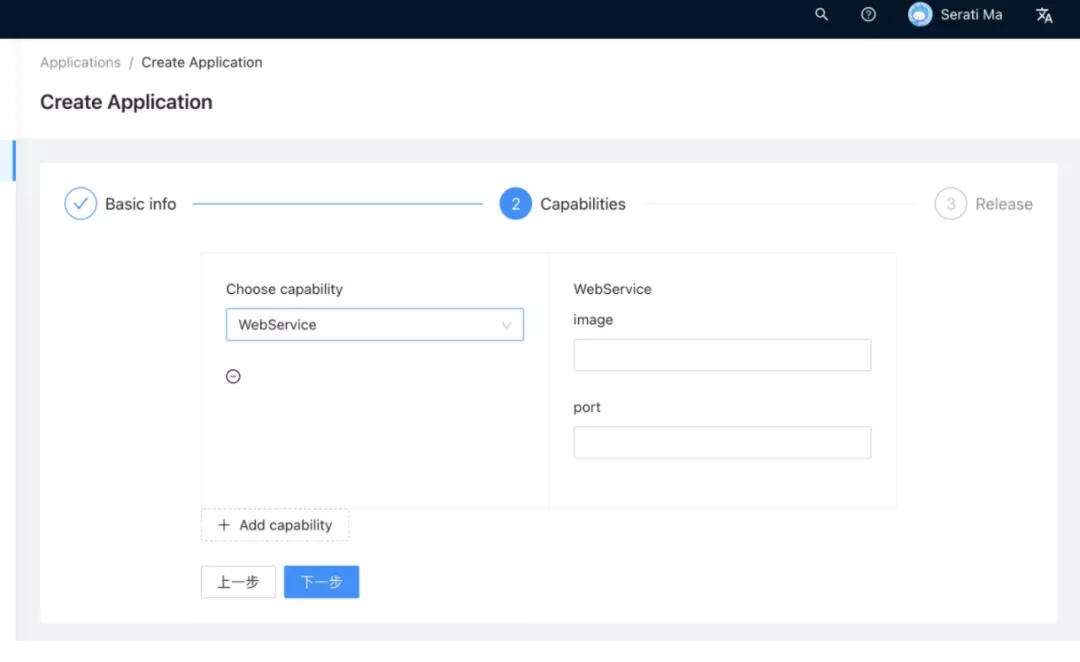

$ kubectl apply -f web-service.yaml

More importantly, once this command is executed, KubeVela automatically generates the help documentation and frontend form for this capability from the content of the CUE template. Users can therefore immediately see what the web service capability in the PaaS does, including its parameters and field types, and use it directly, as shown in figure 2.

Figure 2 - Automatic Form Generation Diagram by KubeVela
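The idea behind this automatic generation can be sketched in a few lines. In the sketch below, a Python dataclass stands in for the parameter section of the CUE template, and `form_schema` reads each declared parameter's name, type, and default to describe one form field. Both names are hypothetical; KubeVela performs this step on the CUE template itself.

```python
from dataclasses import MISSING, dataclass, fields


@dataclass
class WebServiceParams:
    """Hypothetical stand-in for the parameter section of the web service template."""

    image: str
    port: int = 80


def form_schema(params_cls: type) -> list[dict]:
    """Describe one form field per declared parameter: name, type, required, default."""
    schema = []
    for f in fields(params_cls):
        if f.default is not MISSING:
            default = f.default
        elif f.default_factory is not MISSING:
            default = f.default_factory()
        else:
            default = None
        schema.append(
            {
                "name": f.name,
                "type": f.type.__name__,  # annotations are real classes here, not strings
                "required": f.default is MISSING and f.default_factory is MISSING,
                "default": default,
            }
        )
    return schema


for spec in form_schema(WebServiceParams):
    print(spec)
```

Running it prints one field description per parameter: `image` is a required string, and `port` is an optional integer defaulting to 80. Because the form is derived from the template, adding a parameter to the template is enough to make it appear in the UI.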

In KubeVela, all platform capabilities, such as Canary Release, Ingress, and Autoscaler, are defined, maintained, and delivered to users this way. This end-to-end integration between the user experience layer and the Kubernetes capability layer lets platform teams build a PaaS or any other upper-layer platform, including AI PaaS and big data PaaS, quickly and at very low cost. At the same time, they can respond efficiently to users' continuously evolving requirements.

Most importantly, at the implementation level, KubeVela does not simply render CUE templates on the client side. Instead, a Kubernetes controller renders the templates and maintains the generated API objects. There are three reasons for this design:

Therefore, the design of KubeVela described above fundamentally solves the long-standing production problems of traditional Infrastructure-as-Code (IaC) systems while preserving a good user experience. What's more, in most cases the time needed to respond to user requirements drops from weeks to hours, breaking down the barriers between cloud-native technology and the end-user experience. Because KubeVela is built natively on Kubernetes, the platform inherits Kubernetes' robustness. Any CI/CD or GitOps tool that supports Kubernetes also works with KubeVela, with no integration cost. This approach is aptly called Platform-as-Code.
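Controller-side rendering follows the usual Kubernetes reconcile pattern: compute the desired objects, compare them with what actually exists, and converge. The toy sketch below models one reconcile pass in memory, with a plain dict standing in for the cluster. The `reconcile` function and the (kind, name) keying are illustrative assumptions, not KubeVela's controller code.

```python
from typing import Any

Key = tuple[str, str]  # (kind, name) identifies an object in this toy model


def reconcile(desired: list[dict[str, Any]], cluster: dict[Key, dict[str, Any]]) -> dict[str, list[Key]]:
    """Run one reconcile pass and report what changed.

    `cluster` is a plain dict standing in for the API server and is assumed
    to hold only objects owned by this application.
    """
    actions: dict[str, list[Key]] = {"created": [], "updated": [], "deleted": []}
    wanted = {(obj["kind"], obj["metadata"]["name"]): obj for obj in desired}
    for key, obj in wanted.items():
        if key not in cluster:
            cluster[key] = obj
            actions["created"].append(key)
        elif cluster[key] != obj:
            cluster[key] = obj  # live object drifted from the template: restore it
            actions["updated"].append(key)
    for key in list(cluster):
        if key not in wanted:
            del cluster[key]  # no longer rendered by the template: garbage-collect it
            actions["deleted"].append(key)
    return actions


cluster = {
    ("Deployment", "frontend"): {"kind": "Deployment", "metadata": {"name": "frontend"}, "spec": {"replicas": 5}},
    ("ConfigMap", "stale"): {"kind": "ConfigMap", "metadata": {"name": "stale"}},
}
desired = [
    {"kind": "Deployment", "metadata": {"name": "frontend"}, "spec": {"replicas": 1}},
    {"kind": "Service", "metadata": {"name": "frontend"}, "spec": {}},
]
print(reconcile(desired, cluster))
# {'created': [('Service', 'frontend')], 'updated': [('Deployment', 'frontend')], 'deleted': [('ConfigMap', 'stale')]}
```

Because the loop compares against live state on every pass, a manual edit to a managed object is reverted on the next reconcile instead of silently persisting, which is one practical difference from rendering once on the client.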

When they learn about KubeVela and CUE templates, many users ask how KubeVela relates to Helm.

Like CUE, Helm is a tool for encapsulating and abstracting Kubernetes API resources. Helm uses the Go template language, so it also fits naturally into KubeVela's Platform-as-Code design.

Therefore, in KubeVela 1.0, any Helm package can be deployed as an application component. More importantly, all of KubeVela's capabilities apply equally to Helm components and CUE components. As a result, delivering Helm packages through KubeVela gives users some important capabilities that existing tools struggle to provide.

For example, many Helm packages come from third parties; a Kafka chart, for instance, may be published by the company behind Kafka. Such packages can generally be used as-is, but the templates inside them can't be changed. Otherwise, users have to maintain the modified chart themselves.

KubeVela solves this problem easily. Specifically, it provides an operational capability called Patch, which lets you declaratively patch the resources encapsulated in a component, such as a Helm package. There is no need to check whether the field you want to change is exposed by the chart template. The patch is applied after Helm renders the resources and before they are committed to the Kubernetes cluster, so it does not require restarting the component instance.
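KubeVela expresses patches in CUE. As a language-neutral stand-in, the sketch below applies an RFC 7386 JSON Merge Patch to a resource after it has been rendered (for example, by Helm) and before it would be submitted to the cluster. The merge semantics are RFC 7386's, which may differ from KubeVela's own patch strategies, and the StatefulSet contents are made up.

```python
import copy
from typing import Any


def merge_patch(target: Any, patch: Any) -> Any:
    """Apply an RFC 7386 JSON Merge Patch and return a new object.

    Dicts are merged recursively, a None value deletes the key, and any
    non-dict patch value (including a list) replaces the target outright.
    The original `target` is never modified.
    """
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


# Hypothetical output of a third-party chart that we don't want to fork.
rendered = {
    "kind": "StatefulSet",
    "metadata": {"name": "kafka", "labels": {"app": "kafka", "tier": "legacy"}},
    "spec": {"replicas": 3},
}
patch = {
    "metadata": {"labels": {"tier": None}, "annotations": {"team": "data"}},
    "spec": {"replicas": 5},
}
patched = merge_patch(rendered, patch)
print(patched["metadata"])
# {'name': 'kafka', 'labels': {'app': 'kafka'}, 'annotations': {'team': 'data'}}
```

The patch only names the fields it changes, so it keeps working when the chart is upgraded, as long as the patched fields still exist.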

As another example, KubeVela has a built-in canary release mechanism called AppRollout. With AppRollout, users can roll out a Helm package gradually as a whole, regardless of the workload types it contains, instead of releasing a single Deployment workload as controllers like Flagger do. In other words, canary releases work in KubeVela even if the chart contains an operator. Furthermore, by integrating KubeVela with Argo Workflows, you can easily specify more complex behavior, such as the release order and topology of Helm packages.
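The AppRollout API itself isn't shown in this article, so the sketch below only illustrates the batch arithmetic behind a gradual release: given the total number of replicas and the share of the rollout assigned to each batch, it computes how many replicas should be upgraded after each batch. The `rollout_plan` function and the percentage-based batch format are assumptions.

```python
def rollout_plan(total_replicas: int, batch_percents: list[int]) -> list[int]:
    """Return the cumulative number of upgraded replicas after each batch.

    `batch_percents` gives each batch's share of the rollout and must sum to 100.
    Rounding up guarantees the first batch upgrades at least one replica of a
    non-empty fleet, and the final batch always reaches `total_replicas`.
    """
    if any(p <= 0 for p in batch_percents) or sum(batch_percents) != 100:
        raise ValueError("batch percentages must be positive and sum to 100")
    plan, cumulative = [], 0
    for pct in batch_percents:
        cumulative += pct
        # integer ceiling of total_replicas * cumulative / 100, avoiding float rounding
        plan.append((total_replicas * cumulative + 99) // 100)
    return plan


print(rollout_plan(10, [10, 40, 50]))  # [1, 5, 10]
```

A real rollout would pause between batches and check the health of the upgraded instances before continuing; this sketch only computes the targets.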

Beyond simply supporting Helm, KubeVela 1.0 aims to become the most powerful platform for delivering, releasing, and operating Helm charts.

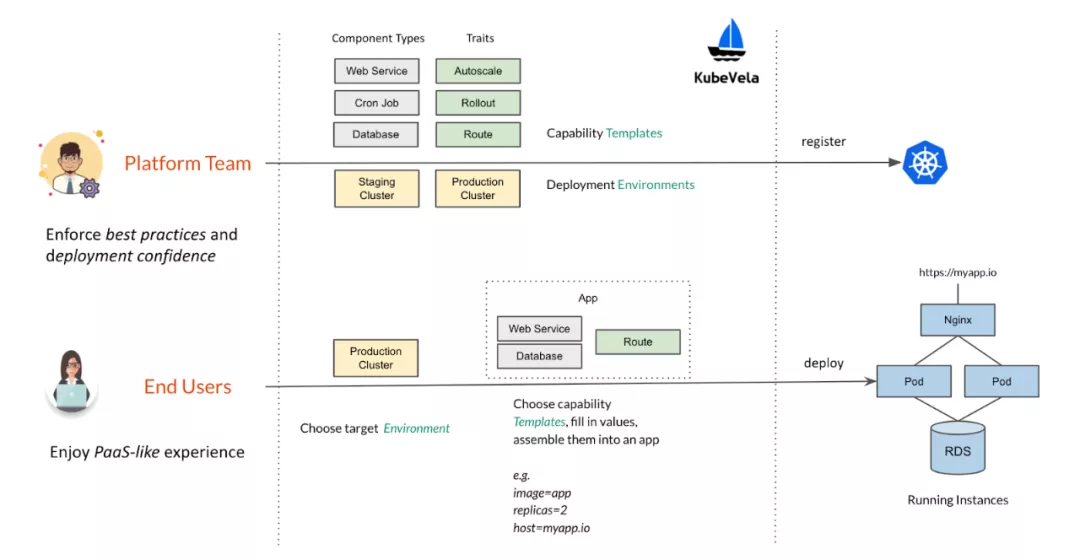

Thanks to the Platform-as-Code design, a KubeVela-based application platform naturally supports self-service for users, as shown in figure 3.

Figure 3 - KubeVela Self-Delivery Flowchart

Specifically, a platform team can maintain a large number of code-based "capability templates" in the system with minimal labor. Business teams, as the platform's end users, only need to select a few capability templates in the PaaS UI according to their deployment requirements and fill in the parameters to complete delivery themselves. However complex the application, business users face a low learning curve, and their deployments follow the specifications defined in the templates by default. Once the application is deployed, Kubernetes handles its operation and maintenance automatically, reducing the burden on users.
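The self-service step can be pictured as a catalog lookup plus validation. In the hypothetical sketch below, each capability template declares which parameters it accepts, and `deliver` renders the user's selections only if they stay within those declarations, which is how users end up following the templates' specifications by default. `CapabilityTemplate`, `TEMPLATES`, and the toy render functions are illustrative, not KubeVela types.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class CapabilityTemplate:
    """A hypothetical code-based capability maintained by the platform team."""

    required: frozenset[str]
    optional: frozenset[str]
    render: Callable[[dict[str, Any]], dict[str, Any]]


# Hypothetical catalog; the render functions are toy stand-ins for real templates.
TEMPLATES = {
    "webservice": CapabilityTemplate(
        required=frozenset({"image"}),
        optional=frozenset({"port"}),
        render=lambda p: {"kind": "Deployment", "image": p["image"], "port": p.get("port", 80)},
    ),
    "autoscaler": CapabilityTemplate(
        required=frozenset({"max"}),
        optional=frozenset({"min"}),
        render=lambda p: {"kind": "HorizontalPodAutoscaler", "min": p.get("min", 1), "max": p["max"]},
    ),
}


def deliver(selections: dict[str, dict[str, Any]]) -> list[dict[str, Any]]:
    """Render each selected capability, rejecting parameters its template does not declare."""
    rendered = []
    for name, params in selections.items():
        if name not in TEMPLATES:
            raise KeyError(f"unknown capability: {name}")
        tpl = TEMPLATES[name]
        given = set(params)
        missing = tpl.required - given
        unknown = given - tpl.required - tpl.optional
        if missing or unknown:
            raise ValueError(f"{name}: missing={sorted(missing)} unknown={sorted(unknown)}")
        rendered.append(tpl.render(params))
    return rendered


print(deliver({"webservice": {"image": "nginx:1.21", "port": 8080}, "autoscaler": {"max": 10}}))
```

Passing, say, `replicas` to `webservice` raises a `ValueError`, because that template does not expose it; the platform team decides what is configurable, and users cannot step outside it by accident.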

More importantly, this mechanism puts O&M engineers back at the core of the platform team. Specifically, they design and write capability templates with CUE or Helm and then install the templates into KubeVela for business teams to use. In this process, O&M engineers codify business requirements, together with platform-wide best practices, into reusable and customizable capability modules. Moreover, they only need to understand the core concepts of Kubernetes, without having to customize or extend Kubernetes itself. These code-based capability modules are also highly reusable and, in most cases, can be changed and released easily without additional R&D costs. This agile, "cloud-native" approach to O&M lets the business experience the core value of cloud-native: efficient integration of R&D, delivery, and O&M.

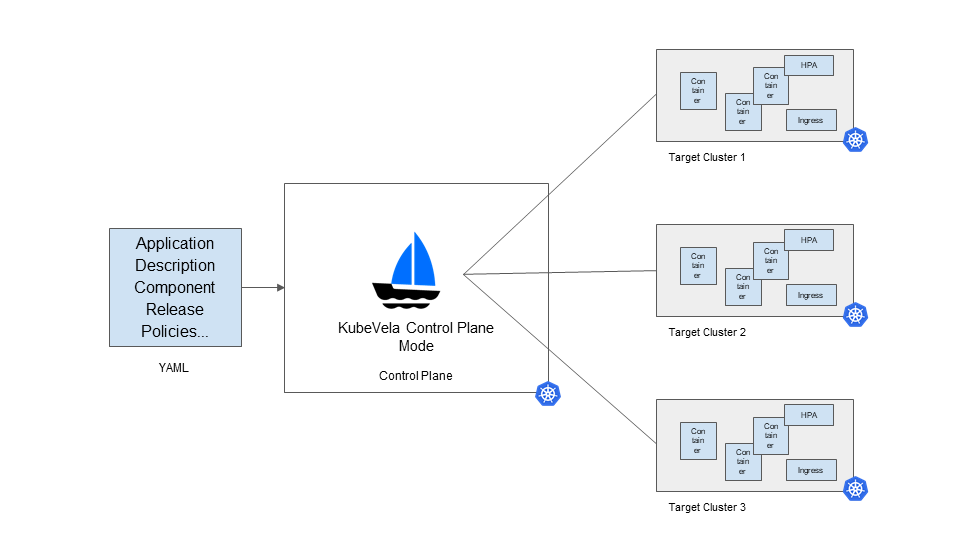

Another major update in KubeVela 1.0 is an improved deployment architecture that introduces a control plane mode, which can deliver versioned applications to multiple environments and clusters. A typical production deployment of KubeVela is shown in figure 4.

Figure 4 - Control Plane of KubeVela Deployment Diagram

In this scenario, KubeVela supports describing multi-environment applications and configuring placement policies for them. It also supports running multiple versions of an application online at the same time, with canary releases implemented through Istio. For more information, see the KubeVela documentation.
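This article does not show KubeVela's placement policy syntax. As a rough illustration of the general idea, the sketch below maps each environment to the clusters whose labels match that environment's selector. The environment names, cluster names, labels, and the `place` function are all hypothetical.

```python
def place(envs: dict[str, dict[str, str]], clusters: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Map each environment to the clusters whose labels contain every key/value in its selector."""
    return {
        env: sorted(
            name
            for name, labels in clusters.items()
            if all(labels.get(k) == v for k, v in selector.items())
        )
        for env, selector in envs.items()
    }


# Hypothetical clusters registered with the control plane, with their labels.
clusters = {
    "hz-prod-1": {"env": "prod", "region": "cn-hangzhou"},
    "fra-prod-1": {"env": "prod", "region": "eu-central"},
    "hz-test-1": {"env": "test", "region": "cn-hangzhou"},
}
# Hypothetical environments, each described by a label selector.
envs = {
    "staging": {"env": "test"},
    "production-eu": {"env": "prod", "region": "eu-central"},
}
print(place(envs, clusters))
# {'staging': ['hz-test-1'], 'production-eu': ['fra-prod-1']}
```

Expressing placement as selectors rather than fixed cluster lists means a newly registered cluster with matching labels is picked up without editing the application.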

After the release of version 1.0, KubeVela will continue to evolve on top of the architecture above. One of the main tasks is migrating the KubeVela Dashboard, CLI, and Appfile so that they interact with the KubeVela control plane via gRPC instead of talking to the target cluster directly, as previous versions did. This work is still in progress, and anyone with experience building the next generation of "programmable" platforms is welcome to participate. Springer Nature, a well-known European scientific publisher, is also participating, with the goal of migrating smoothly from Cloud Foundry to KubeVela.

Given KubeVela's current design and capabilities, some may say it represents the inevitable path of evolution for cloud-native application platforms.

More importantly, based on the Platform-as-Code concept, KubeVela offers a more sensible way to organize future cloud-native application platform teams.

Building on this approach, a KubeVela application platform can also support powerful "no-difference" application delivery, in which applications are delivered the same way to any environment, achieving a completely environment-independent cloud application delivery experience.

KubeVela 1.0 is built on the OAM model and has been extensively validated in cloud-native application delivery scenarios. It represents stable APIs and a mature usage paradigm. However, this is not an end but a brand-new beginning. KubeVela 1.0 opens the way to a "programmable" application platform, an effective path to unlocking the potential of cloud-native, so that end users and software delivery partners can enjoy the benefits of cloud-native technology from the very beginning. In this way, the project aims to achieve its vision of making shipping applications more enjoyable!

You can find more details about KubeVela and OAM in the following materials:

Contributions to the Chinese translation of the KubeVela documentation, organized by the cloud-native community, are welcome!
