By Yuanyuan Ma

The field of artificial intelligence is developing rapidly due to the emergence of Large Language Models (LLMs). These advanced AI models can communicate with humans in natural language and possess reasoning capabilities, fundamentally transforming the way humans access information and process text data.

HTTP has always been a first-class citizen in service meshes, and existing LLM providers typically offer their services over HTTP. Building on this, Alibaba Cloud Service Mesh (ASM) has enhanced its support for LLM request protocols. It now supports the protocol standards of common LLM providers, giving users a simple and efficient integration experience. With ASM, users can implement canary access, proportional routing, and various observability features for LLMs. Once applications integrate with LLMs through ASM, they are further decoupled from the LLM providers, which improves the robustness and maintainability of the entire call chain.

ASM supports managing Large Language Model (LLM) traffic through LLMProvider and LLMRoute resources. LLMProvider registers LLM services, while LLMRoute sets traffic rules, allowing applications to flexibly switch models to meet diverse scenario requirements.

This series of documents describes how to manage LLM traffic in ASM from the perspectives of traffic routing, observability, and security. This is the first article in the series, focusing on traffic routing capabilities.

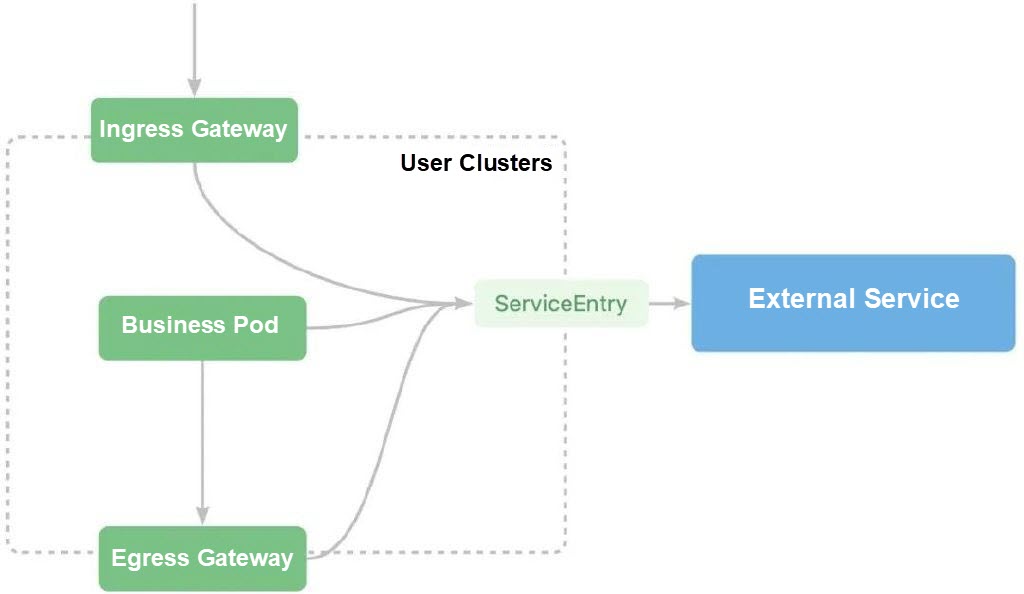

In a service mesh, to register a typical external HTTP service within the cluster, you first configure a ServiceEntry and then configure routing rules with a VirtualService. Afterward, you can call this external service through a gateway or a business Pod. If you call the external service directly without registering it, you cannot use the traffic management, observability, and security capabilities provided by the service mesh.

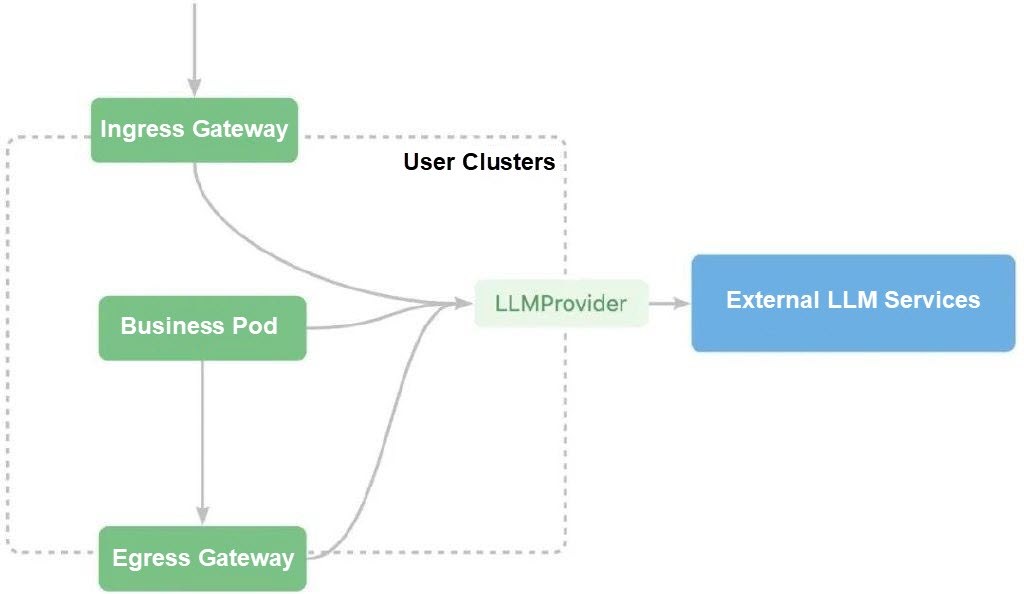

However, the native ServiceEntry can only handle ordinary TCP and HTTP traffic. LLM requests have some specific advanced parameters based on the HTTP protocol that ordinary ServiceEntry cannot directly support. To address this, ASM introduces two new resources:

• LLMProvider: It corresponds to ServiceEntry for the HTTP protocol. Users can use LLMProvider to register external LLM service providers to the cluster and configure the Host, APIKey, and other model parameters of the LLM providers.

• LLMRoute: It corresponds to VirtualService for the HTTP protocol. Users can use LLMRoute to configure traffic rules, distributing traffic according to ratios or specific matching conditions to a particular LLMProvider.

This article uses two examples to demonstrate how to manage LLM traffic in the cluster using LLMProvider and LLMRoute.

Before you begin, make sure that the following prerequisites are met:

• A cluster is added to an ASM instance whose version is v1.21.6.88 or later.

• Sidecar injection is enabled. For details, see Configuring a Sidecar Injection Policy.

• DashScope is activated and an available API_KEY is obtained. For details, see How to Activate DashScope and Create an API-KEY in the Alibaba Cloud Help Center.

• For the second example in this article, the Moonshot API service is activated and an available API_KEY is obtained. For details, see Moonshot AI Open Platform.

Use the kubeconfig file of the ACK cluster and apply the following YAML template to create a sleep application.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: sleep
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: sleep
      labels:
        app: sleep
        service: sleep
    spec:
      ports:
      - port: 80
        name: http
      selector:
        app: sleep
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sleep
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: sleep
      template:
        metadata:
          labels:
            app: sleep
        spec:
          terminationGracePeriodSeconds: 0
          serviceAccountName: sleep
          containers:
          - name: sleep
            image: registry-cn-hangzhou.ack.aliyuncs.com/ack-demo/curl:asm-sleep
            command: ["/bin/sleep", "infinity"]
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /etc/sleep/tls
              name: secret-volume
          volumes:
          - name: secret-volume
            secret:
              secretName: sleep-secret
              optional: true

Use the kubeconfig file of the ASM cluster to create the following LLMProvider:

    apiVersion: istio.alibabacloud.com/v1beta1
    kind: LLMProvider
    metadata:
      name: dashscope-qwen
    spec:
      host: dashscope.aliyuncs.com # Different providers cannot have the same host.
      path: /compatible-mode/v1/chat/completions
      configs:
        defaultConfig:
          openAIConfig:
            model: qwen-1.8b-chat # Open-source large models are used by default.
            stream: false
            apiKey: ${API_KEY of dashscope}

After the LLMProvider is created, you can access dashscope.aliyuncs.com over plain HTTP from the sleep pod. The ASM sidecar automatically converts the request into a format that conforms to the OpenAI LLM protocol (DashScope is compatible with the OpenAI protocol), adds the API key to the request, upgrades the connection from HTTP to HTTPS, and finally sends the request to the LLM provider's server outside the cluster.
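The transformation described above can be pictured with a small Python sketch. It is only an illustration of the described behavior, not ASM's implementation: the `PROVIDER` dictionary, the `build_upstream_request` helper, and the placeholder key are hypothetical, and it assumes that provider settings fill in fields the application left out and that the key is sent as a bearer token, as OpenAI-compatible endpoints generally expect.

```python
import json

# Hypothetical, simplified mirror of the LLMProvider fields used in this article.
PROVIDER = {
    "host": "dashscope.aliyuncs.com",
    "path": "/compatible-mode/v1/chat/completions",
    "openAIConfig": {
        "model": "qwen-1.8b-chat",
        "stream": False,
        "apiKey": "sk-placeholder",  # placeholder, not a real key
    },
}


def build_upstream_request(provider, app_body):
    """Return (url, headers, body) for the request that leaves the cluster."""
    cfg = provider["openAIConfig"]
    body = dict(app_body)
    # Assumption: provider-level settings fill in fields the application omitted.
    body.setdefault("model", cfg["model"])
    body.setdefault("stream", cfg["stream"])
    headers = {
        "Content-Type": "application/json",
        # Assumption: the key travels as a bearer token (OpenAI-compatible style).
        "Authorization": "Bearer " + cfg["apiKey"],
    }
    # The application called plain HTTP; the upstream call uses HTTPS and the configured path.
    url = "https://" + provider["host"] + provider["path"]
    return url, headers, body


url, headers, body = build_upstream_request(
    PROVIDER,
    {"messages": [{"role": "user", "content": "Please introduce yourself"}]},
)
print(url)
print(json.dumps(body, ensure_ascii=False))
```

The point of the sketch is that the application only supplies `messages`; the model name, streaming flag, credentials, and upstream URL all come from configuration.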

Use the kubeconfig file of ACK to run the following command for testing:

    kubectl exec deployment/sleep -it -- curl --location 'http://dashscope.aliyuncs.com' \
      --header 'Content-Type: application/json' \
      --data '{
        "messages": [
          {"role": "user", "content": "Please introduce yourself"}
        ]
      }'

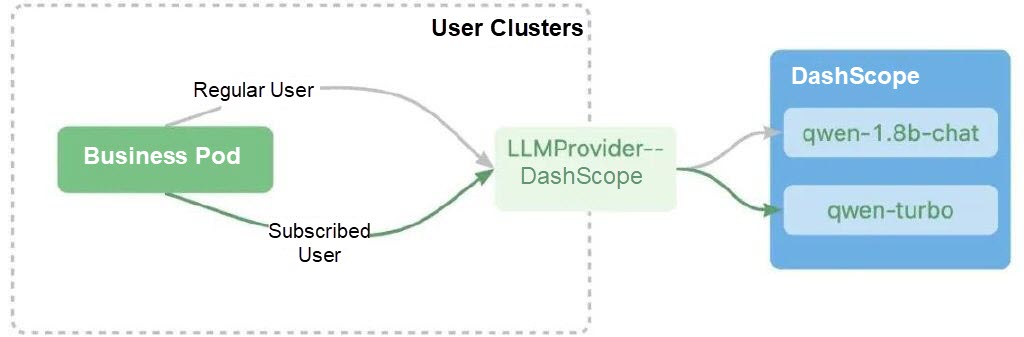

Sample output:

    {"choices":[{"message":{"role":"assistant","content":"I am a large-scale language model from Alibaba Cloud. My name is Tongyi Qianwen. My main function is to answer user questions, provide information, and engage in conversations. I can understand user questions and generate an answer or suggestion based on natural language. I can also learn new knowledge and apply it to various scenarios. If you have any questions or need help, please feel free to ask me and I will do my best to support you."},"finish_reason":"stop","index":0,"logprobs":null}],"object":"chat.completion","usage":{"prompt_tokens":3,"completion_tokens":72,"total_tokens":75},"created":1720680044,"system_fingerprint":null,"model":"qwen-1.8b-chat","id":"chatcmpl-1c33b950-3220-9bfe-9066-xxxxxxxxxxxx"}

The model used in the preceding step is qwen-1.8b-chat provided by DashScope, one of the smaller models in the open-source Tongyi Qianwen series. This section implements the following behavior: by default, calls from regular users use the qwen-1.8b-chat model, while calls from subscribed users use the more powerful qwen-turbo model. Requests from subscribed users carry a special header that identifies them.

Use the kubeconfig file of the ASM cluster to create the following LLMRoute:

    apiVersion: istio.alibabacloud.com/v1beta1
    kind: LLMRoute
    metadata:
      name: dashscope-route
    spec:
      host: dashscope.aliyuncs.com # Different providers cannot have the same host.
      rules:
      - name: vip-route
        matches:
        - headers:
            user-type:
              exact: subscriber # This is a dedicated route for subscribed users, which will be provided with specialized configuration in the provider later.
        backendRefs:
        - providerHost: dashscope.aliyuncs.com
      - backendRefs:
        - providerHost: dashscope.aliyuncs.com

With this configuration, requests that carry the header user-type: subscriber follow the vip-route routing rule.
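As a rough mental model of how such rules are evaluated, the following Python sketch walks the rule list and returns the first rule that accepts the request. The `RULES` structure and the `headers_match` and `select_rule` helpers are hypothetical, and first-match-wins ordering is an assumption made for illustration rather than documented ASM behavior.

```python
# Hypothetical in-memory copy of the two rules in the LLMRoute above.
RULES = [
    {
        "name": "vip-route",
        "matches": [{"headers": {"user-type": {"exact": "subscriber"}}}],
        "backendRefs": [{"providerHost": "dashscope.aliyuncs.com"}],
    },
    {
        # No name and no matches: behaves as the catch-all rule.
        "backendRefs": [{"providerHost": "dashscope.aliyuncs.com"}],
    },
]


def headers_match(match, request_headers):
    """Return True if every exact-header condition in one match entry holds."""
    for name, condition in match.get("headers", {}).items():
        # Header names are assumed to be lowercased already.
        if request_headers.get(name) != condition.get("exact"):
            return False
    return True


def select_rule(rules, request_headers):
    """Return the first rule that accepts the request (assumed first-match-wins)."""
    for rule in rules:
        matches = rule.get("matches")
        if not matches or any(headers_match(m, request_headers) for m in matches):
            return rule
    return None


print(select_rule(RULES, {"user-type": "subscriber"}).get("name"))
print(select_rule(RULES, {}).get("name"))
```

Under these assumptions, a request with `user-type: subscriber` lands on `vip-route`, and any other request falls through to the unnamed rule.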

Use the kubeconfig file of the ASM cluster to modify LLMProvider and add route-level configurations:

    apiVersion: istio.alibabacloud.com/v1beta1
    kind: LLMProvider
    metadata:
      name: dashscope-qwen
    spec:
      host: dashscope.aliyuncs.com
      path: /compatible-mode/v1/chat/completions
      configs:
        defaultConfig:
          openAIConfig:
            model: qwen-1.8b-chat # Open-source models are used by default.
            stream: false
            apiKey: ${API_KEY of dashscope}
        routeSpecificConfigs:
          vip-route: # A dedicated route for subscribed users
            openAIConfig:
              model: qwen-turbo # The qwen-turbo model for subscribed users
              stream: false
              apiKey: ${API_KEY of dashscope}

Use the kubeconfig file of the ACK cluster to run the following tests. First, send a request as a regular user:

    kubectl exec deployment/sleep -it -- curl --location 'http://dashscope.aliyuncs.com' \
      --header 'Content-Type: application/json' \
      --data '{
        "messages": [
          {"role": "user", "content": "Please introduce yourself"}
        ]
      }'

Sample output:

    {"choices":[{"message":{"role":"assistant","content":"I am a large-scale language model from Alibaba Cloud. My name is Tongyi Qianwen. My main function is to answer user questions, provide information, and engage in conversations. I can understand user questions and generate an answer or suggestion based on natural language. I can also learn new knowledge and apply it to various scenarios. If you have any questions or need help, please feel free to ask me and I will do my best to support you."},"finish_reason":"stop","index":0,"logprobs":null}],"object":"chat.completion","usage":{"prompt_tokens":3,"completion_tokens":72,"total_tokens":75},"created":1720682745,"system_fingerprint":null,"model":"qwen-1.8b-chat","id":"chatcmpl-3d117bd7-9bfb-9121-9fc2-xxxxxxxxxxxx"}

Then send the same request as a subscribed user by adding the user-type header:

    kubectl exec deployment/sleep -it -- curl --location 'http://dashscope.aliyuncs.com' \
      --header 'Content-Type: application/json' \
      --header 'user-type: subscriber' \
      --data '{
        "messages": [
          {"role": "user", "content": "Please introduce yourself"}
        ]
      }'

Sample output:

    {"choices":[{"message":{"role":"assistant","content":"Hello, I am a large-scale language model from Alibaba Cloud. My name is Tongyi Qianwen. As an AI assistant, I aim to help users get accurate and useful information and solve their problems and puzzles. I can provide knowledge across various domains, engage in conversations, and even create text. However, please note that all the content I provide is based on the data I am trained on, and may not include the latest events or personal information. If you have any questions, feel free to ask me anytime!"},"finish_reason":"stop","index":0,"logprobs":null}],"object":"chat.completion","usage":{"prompt_tokens":11,"completion_tokens":85,"total_tokens":96},"created":1720683416,"system_fingerprint":null,"model":"qwen-turbo","id":"chatcmpl-9cbc7c56-06e9-9639-a50d-xxxxxxxxxxxx"}

The model field in the second response shows that the qwen-turbo model is used for subscribed users.
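The relationship between the default configuration and the route-level configuration can be sketched in a few lines of Python. The `CONFIGS` dictionary and the `resolve_config` helper are hypothetical names, and the fallback behavior (use the route-specific entry when one exists, otherwise the default) is inferred from the test results in this article rather than taken from ASM's implementation.

```python
# Hypothetical in-memory view of the configs section of an LLMProvider
# (API keys omitted for brevity).
CONFIGS = {
    "defaultConfig": {
        "openAIConfig": {"model": "qwen-1.8b-chat", "stream": False},
    },
    "routeSpecificConfigs": {
        "vip-route": {
            "openAIConfig": {"model": "qwen-turbo", "stream": False},
        },
    },
}


def resolve_config(configs, route_name=None):
    """Use the route-specific config when one exists, otherwise the default."""
    specific = configs.get("routeSpecificConfigs", {})
    chosen = specific.get(route_name, configs["defaultConfig"])
    return chosen["openAIConfig"]


print(resolve_config(CONFIGS)["model"])               # qwen-1.8b-chat
print(resolve_config(CONFIGS, "vip-route")["model"])  # qwen-turbo
```

Keeping the override keyed by route name means the application never has to know which model it is talking to; only the header it sends changes.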

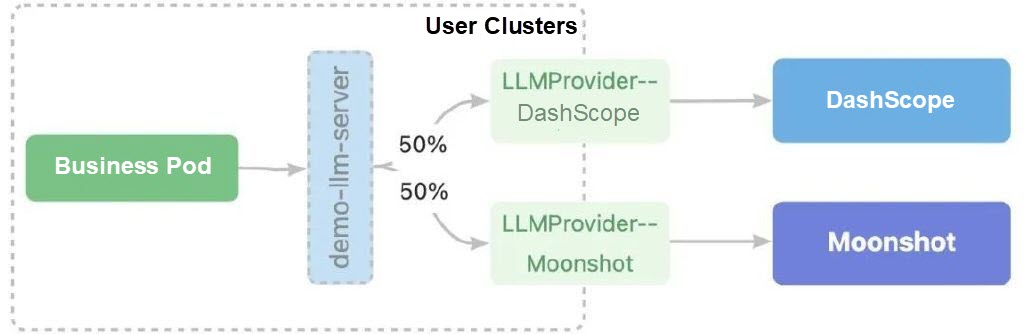

In the production environment, you may need to switch between different LLMProviders due to business changes. ASM also provides the capability to route traffic in proportion between different LLMProviders. Here, we will demonstrate distributing 50% of the traffic to Moonshot and 50% to DashScope.
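To get a feel for what a 50/50 split means in practice, the following Python sketch simulates weighted backend selection. It is a simulation under stated assumptions, not ASM's load-balancing code: the `BACKENDS` list, the `pick_backend` and `simulate` helpers, and the per-request independent random choice are all hypothetical.

```python
import random
from collections import Counter

# Hypothetical backend list; the weights mirror the 50/50 split described above.
BACKENDS = [("dashscope.aliyuncs.com", 50), ("api.moonshot.cn", 50)]


def pick_backend(backends, rng):
    """Choose one backend host with probability proportional to its weight."""
    hosts = [host for host, _ in backends]
    weights = [weight for _, weight in backends]
    return rng.choices(hosts, weights=weights, k=1)[0]


def simulate(backends, requests, seed=0):
    """Count how many simulated requests each backend receives."""
    rng = random.Random(seed)
    return Counter(pick_backend(backends, rng) for _ in range(requests))


counts = simulate(BACKENDS, 10_000)
for host, count in sorted(counts.items()):
    print(f"{host}: {count / 10_000:.1%}")
```

With only a handful of requests the observed split can deviate noticeably from 50/50; the shares converge toward the configured weights as the number of requests grows.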

In this example, demo-llm-server is an ordinary Service in the cluster that has no endpoints behind it.

First, use the kubeconfig file of the ASM cluster to create an LLMProvider for Moonshot:

    apiVersion: istio.alibabacloud.com/v1beta1
    kind: LLMProvider
    metadata:
      name: moonshot
    spec:
      host: api.moonshot.cn # Different providers cannot have the same host.
      path: /v1/chat/completions
      configs:
        defaultConfig:
          openAIConfig:
            model: moonshot-v1-8k
            stream: false
            apiKey: ${API_KEY of Moonshot}

Next, use the kubeconfig file of the ACK cluster to create a Service. When clients access this Service, traffic is distributed to different LLMProviders in proportion according to the configured routing rules.

    apiVersion: v1
    kind: Service
    metadata:
      name: demo-llm-server
      namespace: default
    spec:
      ports:
      - name: http
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        app: none
      type: ClusterIP

Configure an LLMRoute to route requests that access demo-llm-server to DashScope and Moonshot in proportion. Use the kubeconfig file of the ASM cluster to apply the following configuration:

    apiVersion: istio.alibabacloud.com/v1beta1
    kind: LLMRoute
    metadata:
      name: demo-llm-server
      namespace: default
    spec:
      host: demo-llm-server
      rules:
      - backendRefs:
        - providerHost: dashscope.aliyuncs.com
          weight: 50
        - providerHost: api.moonshot.cn
          weight: 50
        name: migrate-rule

Use the kubeconfig file of the ACK cluster to run the following test multiple times. About 50% of the requests are sent to Moonshot and about 50% to DashScope.

    kubectl exec deployment/sleep -it -- curl --location 'http://demo-llm-server' \
      --header 'Content-Type: application/json' \
      --data '{
        "messages": [
          {"role": "user", "content": "Please introduce yourself"}
        ]
      }'

Sample output:

    {"id":"cmpl-cafd47b181204cdbb4a4xxxxxxxxxxxx","object":"chat.completion","created":1720687132,"model":"moonshot-v1-8k","choices":[{"index":0,"message":{"role":"assistant","content":"Hello! I am Mina, an AI language model. My main function is to help people generate human-like text. I can write articles, answer questions, give advice, and so on. I am trained on a lot of text data, so I can generate all kinds of text. My goal is to help people communicate and solve problems more effectively."},"finish_reason":"stop"}],"usage":{"prompt_tokens":11,"completion_tokens":59,"total_tokens":70}}

    {"choices":[{"message":{"role":"assistant","content":"I am a large-scale language model from Alibaba Cloud. My name is Tongyi Qianwen. My main function is to answer user questions, provide information, and engage in conversations. I can understand user questions and generate an answer or suggestion based on natural language. I can also learn new knowledge and apply it to various scenarios. If you have any questions or need help, please feel free to ask me and I will do my best to support you."},"finish_reason":"stop","index":0,"logprobs":null}],"object":"chat.completion","usage":{"prompt_tokens":3,"completion_tokens":72,"total_tokens":75},"created":1720687164,"system_fingerprint":null,"model":"qwen-1.8b-chat","id":"chatcmpl-2443772b-4e41-9ea8-9bed-xxxxxxxxxxxx"}

This article introduces how to manage Large Language Model traffic in ASM by using LLMProvider and LLMRoute. With these configurations, applications are further decoupled from large models. Applications only need to provide business-relevant information in their requests. ASM dynamically selects the routing destination based on the LLMRoute and LLMProvider configurations, adds the pre-configured request parameters, and then sends the request to the corresponding provider.

Based on this, you can quickly change provider configurations, select different models based on request characteristics, and perform canary traffic distribution among providers, which significantly reduces the cost of integrating large models into clusters. In addition, all the LLM-related capabilities described in this article take effect not only on sidecars but also on ASM egress and ingress gateways.
