By Han Tao from Bixin Technology

Once an application is containerized, it inevitably runs into a capacity problem: if the nodes in the Kubernetes cluster have insufficient resources, pods cannot start in time, but purchasing too many nodes leaves resources idle and wastes money.

How do we take advantage of the container orchestration capability of Kubernetes and the flexibility and scale of cloud resources to ensure high elasticity and low cost of business?

This article mainly discusses how the Bixin platform uses Alibaba Cloud Container Service for Kubernetes (ACK) to build an application elastic architecture and further optimize computing costs.

Auto scaling is a service that dynamically scales computing resources to meet your business requirements, which provides a more cost-effective way to manage resources. In Kubernetes, auto scaling can be divided into two dimensions: scaling at the scheduling layer, which changes pods, and scaling at the resource layer, which changes nodes.

The scaling components and capabilities of the two layers can be used separately or in combination. Both layers can be decoupled through the capacity state in the scheduling layer.

There are three auto scaling strategies in Kubernetes: Horizontal Pod Autoscaling (HPA), Vertical Pod Autoscaling (VPA), and Cluster Autoscaler (CA). The scaling objects of HPA and VPA are pods, while the objects of CA are nodes.

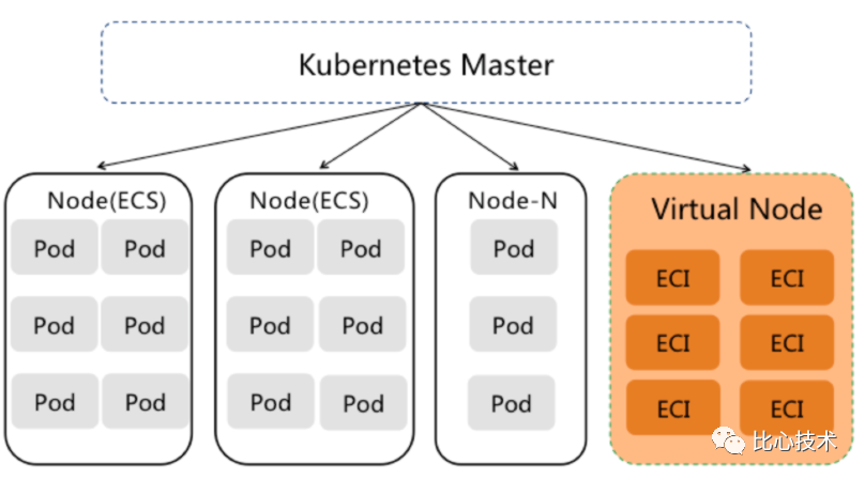

In addition, major cloud vendors (such as Alibaba Cloud) provide virtual node components that offer a serverless runtime environment. You do not need to manage node resources and pay only for the pods you run. Virtual nodes suit scenarios such as online traffic spikes, CI/CD, and big data tasks. This article uses Alibaba Cloud as the example when introducing virtual nodes.

Horizontal Pod Autoscaler (HPA) is a built-in component of Kubernetes and is also the most commonly used scaling solution for pods. You can use HPA to automatically adjust the number of replicas for workloads. The auto scaling feature of HPA enables Kubernetes to have flexible and adaptive capabilities. It can quickly scale out multiple pod replicas within user settings to cope with the surge of service load. When the service load becomes smaller, it can also be appropriately scaled in according to the actual situation to save computing resources for other services. The entire process is automated without human intervention. It is suitable for business scenarios with large service fluctuations, a large number of services, and requirements for frequent scale-in and scale-out.

HPA applies to objects that support the scale interface, such as Deployments and StatefulSets. It does not apply to objects that cannot be scaled, such as DaemonSets. Kubernetes has a built-in HorizontalPodAutoscaler resource. Usually, you create one HorizontalPodAutoscaler resource for each workload that needs horizontal auto scaling, so workloads and HorizontalPodAutoscaler resources correspond one to one.

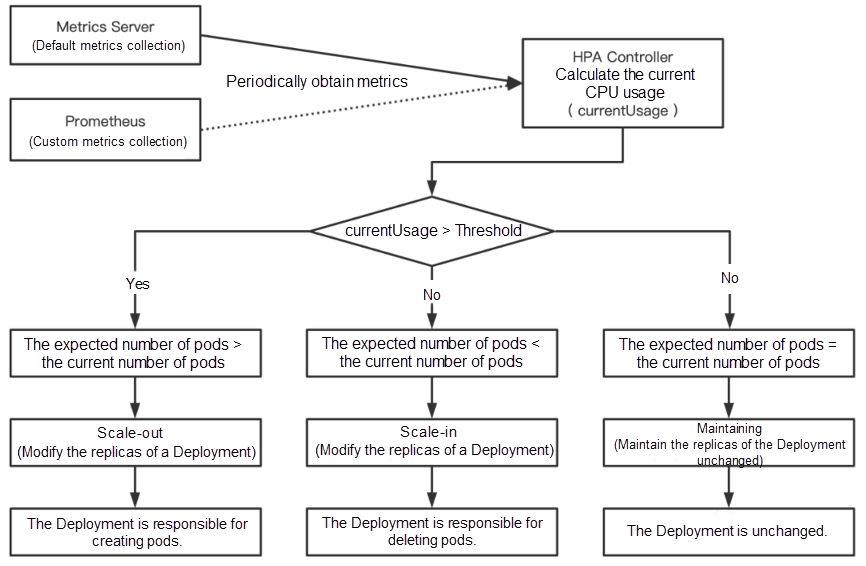

This feature of HPA is implemented by Kubernetes API resources and controllers. Resource utilization metrics determine the behavior of a controller. The controller periodically adjusts the number of replicas of the service pods according to the resource utilization of pods, so the measurement level of the workload matches the target value set by users. Let's take Deployment and CPU usage as examples. The following figure shows the scaling process:

By default, HPA supports auto scaling based only on CPU and memory. For example, the number of application instances is automatically increased when CPU usage exceeds the threshold and decreased when CPU usage falls below it. However, this single dimension of elasticity cannot meet daily O&M requirements. You can combine HPA with the open-source KEDA project, which drives scaling from additional dimensions such as events, schedules, and custom metrics.
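The replica calculation behind this behavior can be sketched in a few lines. This is a minimal illustration of the ratio formula documented for HPA (desired replicas = ceil(current replicas × current metric / target metric)), with the default tolerance of 0.1. The function name is made up for this example, and the clamping to the minimum and maximum replica counts that a real HorizontalPodAutoscaler applies is left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the documented HPA ratio formula."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# Four pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# The load drops to 30% average CPU: scale in.
print(desired_replicas(4, 30, 60))
```

Because the result depends only on the ratio between the observed and target values, the same calculation applies to memory or to custom metrics.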

VerticalPodAutoscaler (VPA) is an open-source component in the Kubernetes community. It needs to be manually deployed and installed on a Kubernetes cluster. VPA provides the feature of vertical pod scaling.

VPA automatically sets resource requests for pods based on their actual resource usage, so the cluster can schedule pods to nodes that have sufficient resources. VPA also maintains the ratio between the resource request and limit that you specify in the initial container configuration. In addition, VPA can recommend more reasonable requests to users, which improves the resource utilization of containers while ensuring that they have sufficient resources.
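As a small worked example of keeping the request-to-limit ratio, the sketch below scales a CPU limit in proportion to a new recommended request. The function name and the millicore values are illustrative assumptions, not VPA code.

```python
def scale_limit(request_m: int, limit_m: int, recommended_request_m: int) -> int:
    """Scale the CPU limit so that limit/request keeps its original ratio."""
    ratio = limit_m / request_m
    return round(recommended_request_m * ratio)


# Original container: request 500m, limit 1000m (ratio 1:2).
# A recommended request of 300m therefore implies a limit of 600m.
new_limit = scale_limit(request_m=500, limit_m=1000, recommended_request_m=300)
print(new_limit)
```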

Compared with HPA, VPA has the following advantages:

Here are the limits and precautions of VPA:

HPA and VPA provide elasticity at the scheduling layer by scaling pods. If the overall resource capacity of the cluster cannot satisfy the pods to be scheduled, the pods created by HPA and VPA remain in the Pending state. At this point, auto scaling at the resource layer is required. In Kubernetes, horizontal node auto scaling is implemented by Cluster Autoscaler (CA), an open-source community component. CA supports multiple scaling groups as well as scale-in and scale-out policies. On top of the community CA, major cloud vendors add their own features for different node scaling scenarios, such as support for multiple zones, multiple instance specifications, and multiple scaling modes. The working principle of node auto scaling in Kubernetes differs from the traditional model based on usage thresholds.

The traditional auto scaling model is based on usage. For example, if a cluster has three nodes, a new node is added when the CPU and memory usage of the nodes exceeds a specific threshold. However, a closer look at this model reveals the following issues:

In a cluster, hot nodes have a higher resource usage than other nodes. If an average resource usage is specified as the threshold, auto scaling may not be triggered in a timely manner. If the lowest node resource usage is set as the threshold, it may cause a waste of resources.

In Kubernetes, the pod is the smallest unit of an application. When a pod has high resource usage, even if the node or cluster where the pod is located triggers a scale-out, the number of pods of the application and the resource limits of these pods do not change. As a result, the load cannot be balanced onto the newly added nodes.

If scale-in activities are triggered based on resource usage, pods that request large amounts of resources but have low resource usage may be evicted. If the number of these pods is large within a Kubernetes cluster, resources of the cluster may be exhausted, and some pods may fail to be scheduled.

How does Kubernetes node scaling solve these issues? Kubernetes uses a two-layer scaling model in which scheduling is decoupled from resources. Based on resource usage, Kubernetes changes the number of replicas, that is, the number of scheduling units (pods). When the scheduling utilization of the cluster reaches 100%, auto scaling of the resource layer is triggered. After new nodes are added, the pods that could not be scheduled are automatically scheduled to them, which reduces the load on the entire application.

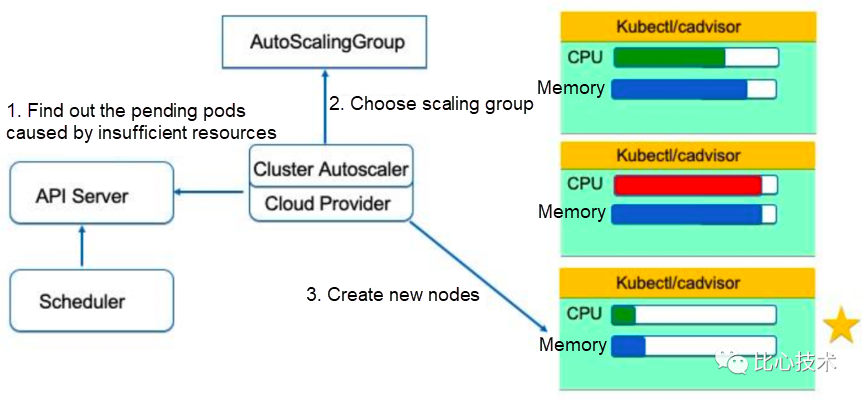

CA is triggered by watching for pods in the Pending state. If pods are pending because of insufficient scheduling resources, CA runs a simulated scheduling pass. During the simulation, the system calculates which of the configured scaling groups could schedule these pending pods once nodes are added. If a scaling group meets the requirement, nodes are added to it accordingly.

A scaling group is treated as an abstract node during the simulation. The instance specifications of the scaling group determine the CPU and memory capacities of the node, and the labels and taints of the scaling group are also applied to the node. The simulation scheduler uses this abstract node to simulate scheduling. If the pending pods can be scheduled to the abstract node, the system calculates the number of required nodes and has the scaling group add them.
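The simulation described above can be sketched as a simple bin-packing pass. This is an illustration only, not the Cluster Autoscaler implementation: the `Pod` and `ScalingGroup` classes and the first-fit strategy are assumptions made for the example, and labels, taints, and system-reserved resources are ignored.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    cpu: float      # requested CPU cores
    mem_gib: float  # requested memory in GiB


@dataclass
class ScalingGroup:
    name: str
    cpu: float      # CPU cores of one node in this group
    mem_gib: float  # memory of one node in this group


def nodes_needed(pending, group):
    """First-fit packing of pending pods onto copies of the abstract node.

    Returns the number of nodes to add, or None if some pod can never fit.
    """
    free = []  # remaining [cpu, mem] on each simulated node
    for pod in sorted(pending, key=lambda p: (p.cpu, p.mem_gib), reverse=True):
        if pod.cpu > group.cpu or pod.mem_gib > group.mem_gib:
            return None  # this scaling group cannot host the pod at all
        for node in free:
            if node[0] >= pod.cpu and node[1] >= pod.mem_gib:
                node[0] -= pod.cpu
                node[1] -= pod.mem_gib
                break
        else:
            # No simulated node has room, so simulate adding one more.
            free.append([group.cpu - pod.cpu, group.mem_gib - pod.mem_gib])
    return len(free)


pending = [Pod(cpu=2, mem_gib=4) for _ in range(5)]
print(nodes_needed(pending, ScalingGroup("general", cpu=4, mem_gib=16)))
print(nodes_needed(pending, ScalingGroup("tiny", cpu=1, mem_gib=2)))
```

Two of these pods fit on each 4-core node, so five pending pods need three nodes, while the `tiny` group is rejected because a single pod already exceeds its node size.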

First, only nodes added by auto scaling can be scaled in; static nodes are not managed by CA. Scale-in is evaluated separately for each node. When the scheduling utilization of a node falls below the specified threshold, CA evaluates that node for scale-in. CA simulates evicting the pods on the node to determine whether the node can be completely drained. If the node runs special pods, such as non-DaemonSet pods in the kube-system namespace, pods protected by a PodDisruptionBudget (PDB), or pods not created by a controller, the node is skipped and other candidate nodes are considered. When a node is scaled in, it is drained first: its pods are evicted to other nodes, and then the node is removed.
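The scale-in checks listed above can be expressed as a small predicate. This is a simplified sketch under stated assumptions: the `Pod` and `Node` classes and the 0.5 threshold are made up for the example, only CPU requests are considered, and the real CA additionally verifies that the evicted pods fit on other nodes.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    name: str
    cpu_request: float
    namespace: str = "default"
    daemonset: bool = False
    has_controller: bool = True
    pdb_blocked: bool = False  # True if a PodDisruptionBudget forbids eviction


@dataclass
class Node:
    name: str
    cpu_allocatable: float
    autoscaled: bool  # False for static nodes that CA does not manage
    pods: list = field(default_factory=list)


def can_scale_in(node: Node, threshold: float = 0.5) -> bool:
    """Return True if the node is a scale-in candidate under the rules above."""
    if not node.autoscaled:
        return False
    requested = sum(p.cpu_request for p in node.pods)
    if requested / node.cpu_allocatable >= threshold:
        return False  # scheduling utilization is still above the threshold
    for p in node.pods:
        if p.daemonset:
            continue  # DaemonSet pods do not block draining
        if p.namespace == "kube-system" or p.pdb_blocked or not p.has_controller:
            return False
    return True


idle = Node("auto-1", 8, True, [Pod("web-1", 1.0)])
static = Node("static-1", 8, False, [Pod("web-2", 1.0)])
bare = Node("auto-2", 8, True, [Pod("debug", 0.5, has_controller=False)])
print(can_scale_in(idle), can_scale_in(static), can_scale_in(bare))
```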

Choosing between scaling groups is equivalent to choosing between the abstract nodes that represent them. Similar to scheduling policies, there is also a scoring mechanism. Candidates are first filtered by the scheduling policy and then chosen according to policies such as affinity settings. If none of these policies is configured, CA uses the least-waste policy by default. The core of the least-waste policy is to minimize the resources left unused after the nodes are added. In addition, if both a GPU scaling group and a CPU scaling group meet the requirements, the CPU scaling group takes precedence by default.
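To make the least-waste idea concrete, the following sketch scores each candidate scaling group by the fraction of CPU and memory that would be left unused after adding enough nodes, and prefers CPU groups over GPU groups. The scoring function, the dictionary layout, and the group names are assumptions for illustration; the actual expander logic in CA is more involved.

```python
import math


def waste_score(group: dict, need_cpu: float, need_mem: float) -> float:
    """Fraction of CPU plus fraction of memory left idle after scale-out."""
    nodes = math.ceil(max(need_cpu / group["cpu"], need_mem / group["mem_gib"]))
    total_cpu = nodes * group["cpu"]
    total_mem = nodes * group["mem_gib"]
    return (total_cpu - need_cpu) / total_cpu + (total_mem - need_mem) / total_mem


def choose_group(groups: list, need_cpu: float, need_mem: float) -> str:
    """Pick the group with the least waste; CPU groups sort before GPU groups."""
    best = min(groups, key=lambda g: (g["gpu"], waste_score(g, need_cpu, need_mem)))
    return best["name"]


groups = [
    {"name": "general", "cpu": 4, "mem_gib": 8, "gpu": False},
    {"name": "large", "cpu": 16, "mem_gib": 64, "gpu": False},
    {"name": "gpu", "cpu": 6, "mem_gib": 12, "gpu": True},
]
# Pending pods request 6 cores and 12 GiB in total.
print(choose_group(groups, need_cpu=6, need_mem=12))
```

Here the GPU group would fit the pending pods with no waste at all, but the CPU group `general` is still chosen because CPU groups take precedence.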

Here are the limits and precautions when using CA:

The virtual node is a plug-in developed by major cloud vendors based on the community open-source project Virtual Kubelet. Virtual Kubelet connects Kubernetes clusters with the APIs of other platforms and is mainly used to extend the Kubernetes API to serverless container platforms.

Thanks to virtual nodes, Kubernetes clusters are empowered with high elasticity and are no longer limited by the computing capacity of cluster nodes. You can flexibly and dynamically create pods as needed to avoid the hassle of planning the cluster capacity.

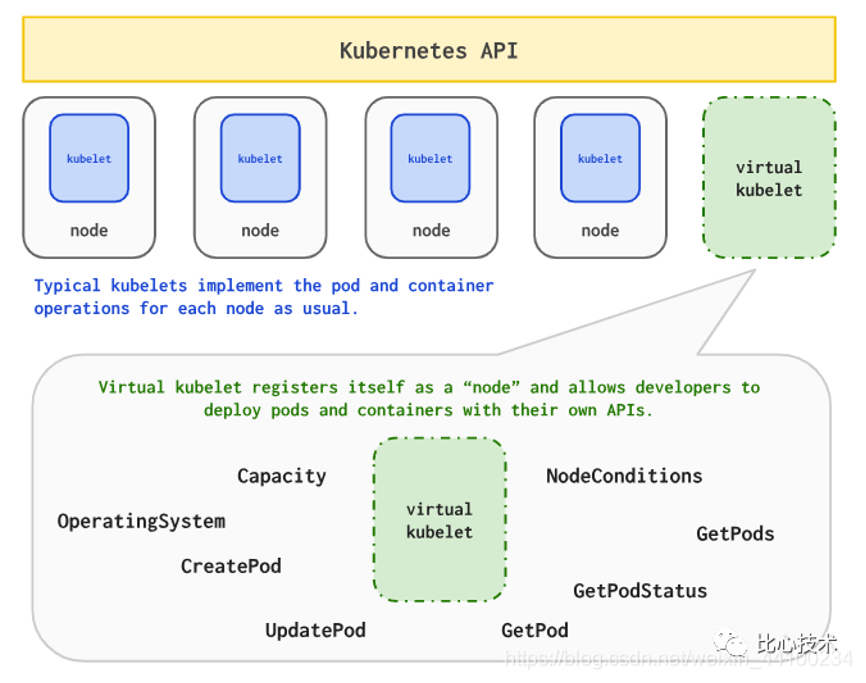

Each node in a Kubernetes cluster starts a Kubelet process. You can understand Kubelet as an agent in the Server-Agent architecture.

Virtual Kubelet is implemented based on the typical features of Kubelet. Upward, it masquerades as a Kubelet, simulating node objects and interacting with native Kubernetes resource objects. Downward, it provides an API for connecting to providers on other resource management platforms. A platform offers serverless capacity by implementing the methods that Virtual Kubelet defines, so that the node is backed by the platform's provider. Providers can also be used to manage other Kubernetes clusters.

Virtual Kubelet simulates node resource objects and manages the lifecycle of pods after the pods are scheduled to virtual nodes disguised by Virtual Kubelet.

Virtual Kubelet looks like a normal Kubelet from the perspective of the Kubernetes API Server. However, its key difference is that Virtual Kubelet schedules pods elsewhere by using a cloud serverless API rather than on a real node. The following figure shows the architecture of Virtual Kubelet:

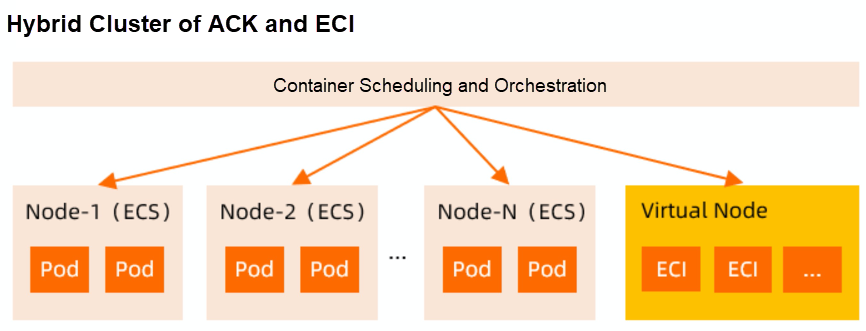

Major cloud vendors provide serverless container services and Kubernetes Virtual Node. This article uses Alibaba Cloud as an example to introduce its elastic scheduling based on Elastic Container Instance (ECI) and virtual nodes.

Alibaba Cloud ECI is a container running service that combines container and serverless technology. Using ECI through Alibaba Cloud Container Service for Kubernetes (ACK) makes full use of its advantages: when you deploy containers on Alibaba Cloud, you can run pods and containers directly without purchasing and managing Elastic Compute Service (ECS) instances. Moving from purchasing and configuring ECS instances and then deploying containers (ECS mode) to deploying containers directly (ECI mode) eliminates the O&M and management of the underlying servers. You pay only for the resources configured for the containers (pay-as-you-go, billed per second), which can save costs.

The Kubernetes Virtual Node of Alibaba Cloud is implemented through ack-virtual-node components based on the community open-source project Virtual Kubelet. The components extend the support for Aliyun Provider and make a lot of optimizations to realize seamless connections between Kubernetes and ECI.

After you have a virtual node, when node resources in a Kubernetes cluster are insufficient, you do not need to plan the computing capacity of nodes. Instead, you can directly create pods under virtual nodes as needed. Each pod corresponds to an ECI. The ECI and pods on the real nodes in the cluster communicate with each other in the network.

Virtual nodes are ideal for the following scenarios, where they reduce computing costs and improve elasticity:

ECI and virtual nodes are like a magic pocket for a Kubernetes cluster: they remove the worry of insufficient node computing power and avoid the waste of idle nodes. You can create pods as needed with practically unlimited computing power and easily cope with peaks and troughs in demand.

When you use ECI together with regular nodes, you can use the following methods to schedule pods to ECI:

(1) Configure Pod Labels

To run a specific pod on ECI, add the label alibabacloud.com/eci=true to the pod. The pod then runs on an ECI through the virtual node.

(2) Configure Namespace Labels

To run a class of pods on ECI, create a namespace and add the label alibabacloud.com/eci=true to the namespace. All pods in the namespace then run on ECI through the virtual node.
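The two labeling methods can be illustrated with manifests built as plain Python dictionaries and printed as JSON, which `kubectl apply -f` also accepts. Only the label key and value come from the text above; the resource names and the container image are placeholders.

```python
import json

# Label from the text above; everything else in the manifests is a placeholder.
ECI_LABEL = {"alibabacloud.com/eci": "true"}

# Method (1): label a single pod.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo-pod", "labels": dict(ECI_LABEL)},
    "spec": {"containers": [{"name": "app", "image": "nginx"}]},
}

# Method (2): label a namespace so that every pod created in it runs on ECI.
namespace = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {"name": "eci-workloads", "labels": dict(ECI_LABEL)},
}

print(json.dumps(pod, indent=2))
print(json.dumps(namespace, indent=2))
```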

(3) Configure ECI Elastic Scheduling

ECI elastic scheduling is an elastic scheduling policy provided by Alibaba Cloud. When you deploy services, you can add an annotation to the pod template to declare one of three behaviors: use only the resources of regular nodes, use only the ECI resources of virtual nodes, or automatically use ECI resources when the resources of regular nodes are insufficient. This policy meets the different requirements for elastic resources in different scenarios.

The corresponding annotation is alibabacloud.com/burst-resource. Its values are:

These methods are intrusive because they require modifying existing resources. To address this, ECI supports ECI Profile.

In the ECI Profile, you can declare the namespaces or labels of pods that need to be matched. Pods that can be matched with labels will be automatically scheduled to ECI.

You can also declare annotations and labels to be appended to pods in the ECI Profile. For pods that can be matched with labels, the configured annotations and labels will also be automatically appended to the pods.

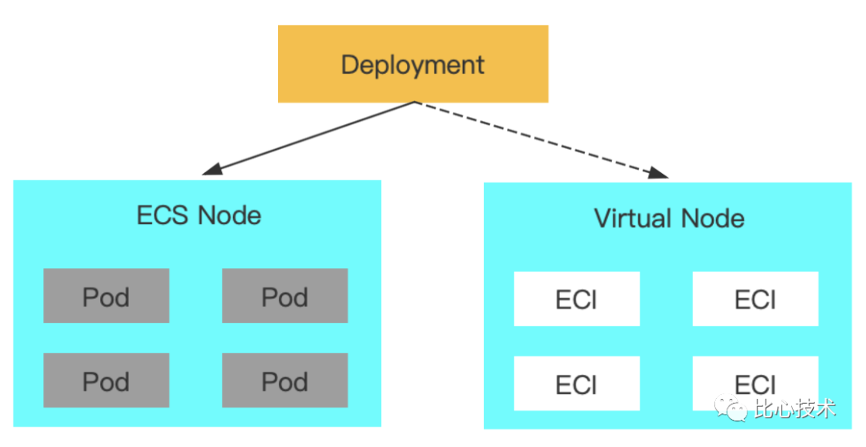

Let's take Alibaba Cloud as an example. Kubernetes clusters on Alibaba Cloud are deployed with virtual nodes and mix ECI and regular nodes.

Imagine an application (a Deployment) configured with both HPA and ECI elastic scheduling. When regular node resources are insufficient and HPA triggers a scale-out, some pods are scheduled to ECI. However, when HPA triggers a scale-in, the ECI pods are not necessarily the ones deleted; pods on regular nodes may be deleted while the ECI pods are retained. ECI uses pay-as-you-go billing, so if it is used for a long time, it costs more than subscription-billed ECS (Alibaba Cloud servers).

This leads to two issues that need to be solved:

Native Kubernetes controllers and workloads cannot handle these issues well. Two components provide good solutions: Elastic Workload, a non-open-source component of Alibaba Cloud Kubernetes, and OpenKruise, which is open-sourced by Alibaba Cloud.

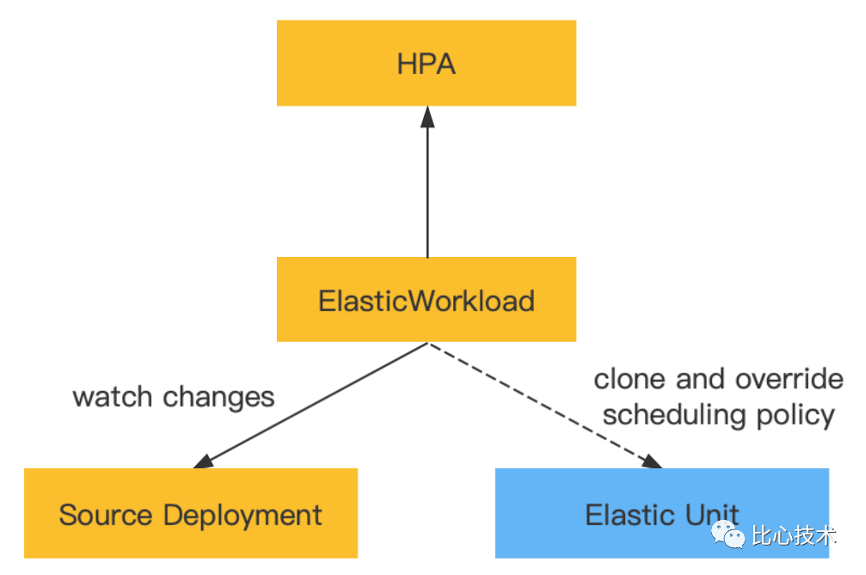

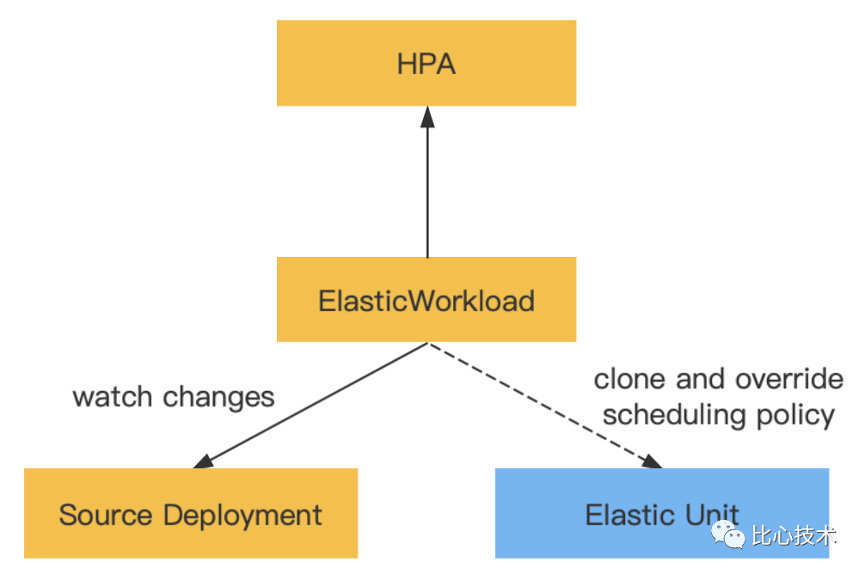

Elastic Workload is a component unique to Alibaba Cloud Kubernetes. After the component is installed, a new resource type named Elastic Workload is added. Elastic Workload is used in a way similar to HPA: it is attached externally and is non-intrusive to the original workload.

A typical Elastic Workload is divided into two main parts:

The elastic workload controller listens to the original workload and clones and generates the workloads of elastic units based on the scheduling policies set by the elastic units. The number of replicas of the original workload and elastic units is dynamically allocated according to changes in the total replicas in the Elastic Workload.

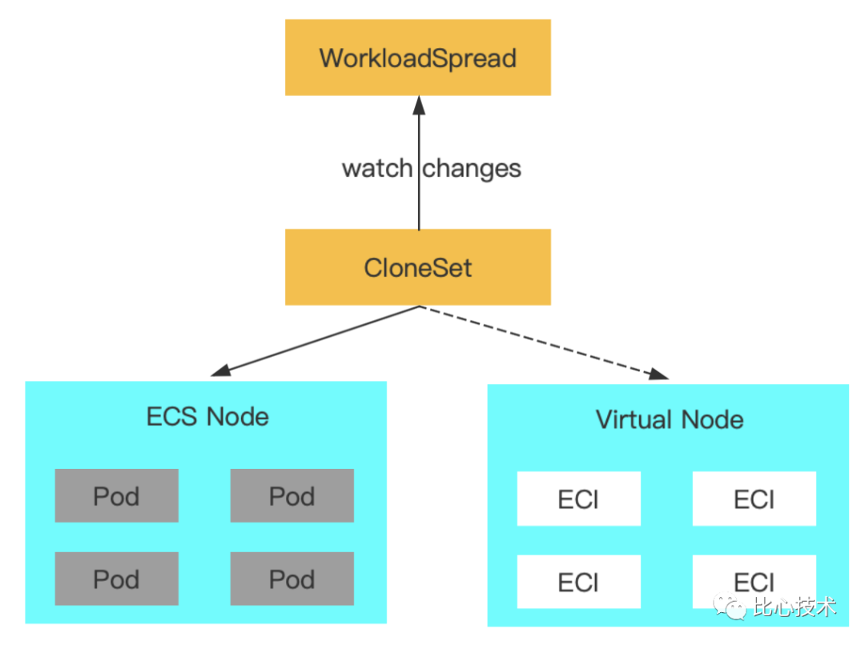

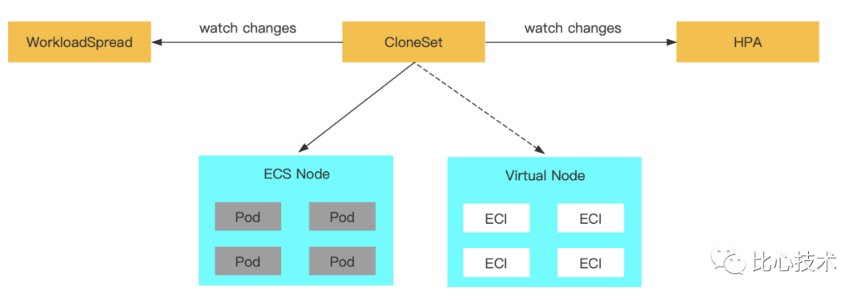

Elastic Workload also supports working with HPA. HPA can be used on Elastic Workload, as shown in the following figure:

Elastic Workload dynamically adjusts the distribution of replicas for each unit based on the status of HPA. For example, if the number of replicas is scaled in from six to four, Elastic Workload will first scale in the replicas of elastic units.

On the one hand, Elastic Workload generates multiple workloads by cloning and overriding scheduling policies to manage scheduling policies. On the other hand, Elastic Workload adjusts the replica allocation of original workloads and elastic units through upper-layer replica calculation to process certain pods first.
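The replica allocation described above can be sketched as follows. The function is an illustration of the idea, not the Elastic Workload controller: it assumes the original workload has an upper bound on replicas (four here) and that any overflow goes to the elastic units in order, where `None` means no upper bound.

```python
def distribute(total: int, source_max: int, unit_maxes: list):
    """Split total replicas between the original workload and elastic units.

    The original workload is filled first; elastic units take the overflow
    in order. A unit maximum of None means the unit has no upper bound.
    """
    source = min(total, source_max)
    remaining = total - source
    units = []
    for unit_max in unit_maxes:
        take = remaining if unit_max is None else min(remaining, unit_max)
        units.append(take)
        remaining -= take
    return source, units


# Six replicas: four stay on the original workload, two go to the elastic unit.
print(distribute(6, source_max=4, unit_maxes=[None]))
# HPA scales in to four replicas: the elastic unit is emptied first.
print(distribute(4, source_max=4, unit_maxes=[None]))
```

Recomputing the split from the total on every change is what makes scale-in remove elastic-unit replicas before touching the original workload.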

Currently, Elastic Workload supports only Deployments.

OpenKruise is a suite of enhanced capabilities for Kubernetes, which has been made open-source by the Alibaba Cloud Container Service Team. It focuses on the deployment, upgrade, O&M, and stability protection of cloud-native applications. All features are extended by standard methods (such as CRD) and can be applied to any Kubernetes cluster of 1.16 or later versions.

OpenKruise has enhanced workloads, such as CloneSet, Advanced StatefulSet, Advanced DaemonSet, and BroadcastJob. They support basic features similar to Kubernetes native workloads and provide capabilities, such as in-place upgrades, configurable scale-in or scale-out and release policies, and concurrent operations.

OpenKruise provides a variety of bypass methods to manage sidecar containers and multi-region deployments of applications. Bypass means that users can apply these capabilities without modifying their workloads.

For example, UnitedDeployment can offer a template to define applications and manage pods in multiple regions by managing multiple workloads. WorkloadSpread can constrain the regional distribution of pods from stateless workloads, enabling a single workload to elastically deploy in multiple regions.

OpenKruise uses WorkloadSpread to solve the problem of mixing virtual nodes and regular nodes mentioned above.

OpenKruise has also made a lot of efforts to protect the high availability of applications. Currently, it can protect Kubernetes resources from the cascade deletion mechanism, including CRDs, namespaces, and almost all workload-based resources. Compared with native Kubernetes PDB (which only protects pod eviction), PodUnavailableBudget can protect pod deletion, eviction, and update.

After OpenKruise is installed in a Kubernetes cluster, an additional WorkloadSpread resource is created. WorkloadSpread can distribute pods of workloads to different types of nodes according to certain rules. It can give a single workload the capabilities for multi-regional deployment, elastic deployment, and refined management in a non-intrusive manner.

Common rules include:

1) Preferentially deploy pods to ECS. Otherwise, deploy pods to ECI when resources are insufficient

2) Preferentially deploy the fixed number of pods to ECS and the rest to ECI

1) Control workloads to deploy different numbers of pods to different CPU architectures

2) Ensure pods on different CPU architectures have different resource quotas

Each WorkloadSpread defines multiple regions as subsets, and each subset can specify a maxReplicas value. WorkloadSpread uses webhooks to inject the domain information defined by the subsets into pods, and it also controls the order in which pods are scaled.

Unlike ElasticWorkload (which manages multiple workloads), a WorkloadSpread acts on only one workload. Workloads and WorkloadSpreads correspond one to one.

Workloads currently supported by WorkloadSpread include CloneSet and Deployment.

Elastic Workload is unique to Alibaba Cloud Kubernetes, so it tends to lock you in to the cloud vendor and has a high cost of use. In addition, it supports only the native Deployment workload.

WorkloadSpread is open-source and can be used in any Kubernetes cluster of version 1.16 or later. It supports the native Deployment workload and the CloneSet workload provided by OpenKruise.

However, the priority deletion rule of WorkloadSpread relies on the deletion-cost feature of Kubernetes. CloneSet already supports deletion cost. For the native Deployment workload, the Kubernetes version must be 1.21 or later: in version 1.21, the PodDeletionCost feature gate must be enabled explicitly, and it is enabled by default from version 1.22.
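The effect of deletion cost on scale-in can be sketched as a sort. The annotation key `controller.kubernetes.io/pod-deletion-cost` is the one Kubernetes uses for this feature, and pods with a lower cost are preferred for deletion. The cost values and pod names below are made up for illustration, and the sketch ignores the other criteria (such as pod readiness) that the controller considers before deletion cost.

```python
DELETION_COST = "controller.kubernetes.io/pod-deletion-cost"


def pods_to_delete(pods: list, count: int) -> list:
    """Return the names of the pods removed first: lowest deletion cost first."""
    def cost(pod: dict) -> int:
        annotations = pod["metadata"].get("annotations", {})
        return int(annotations.get(DELETION_COST, "0"))  # missing means cost 0
    return [p["metadata"]["name"] for p in sorted(pods, key=cost)[:count]]


def make_pod(name: str, cost: int) -> dict:
    return {"metadata": {"name": name, "annotations": {DELETION_COST: str(cost)}}}


# Illustrative values: ECS pods get a high cost, ECI pods a low one.
pods = [
    make_pod("app-ecs-1", 100),
    make_pod("app-eci-1", -100),
    make_pod("app-ecs-2", 100),
    make_pod("app-eci-2", -100),
]
print(pods_to_delete(pods, 2))
```

With costs assigned this way, scaling in by two replicas removes the two ECI pods and keeps the pods on ECS.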

Therefore, if you use Alibaba Cloud Kubernetes, you can refer to the following options:

The preceding section introduces the commonly used Auto Scaling components of Kubernetes and takes Alibaba Cloud as an example to introduce virtual nodes, ECI, Elastic Workload of Alibaba Cloud, and open-source OpenKruise. This section discusses how to use these components properly and how Bixin uses them in a low-cost and high-elasticity manner based on Alibaba Cloud ECI.

Scenarios where Bixin can use Auto Scaling:

You can combine the elastic components of Kubernetes to provide high-elasticity and low-cost business for these scenarios.

Since the delivery time of node horizontal scale-out is relatively long, we do not consider using horizontal auto scaling of nodes.

The overall idea of horizontal pod scaling is to use Kubernetes HPA, Alibaba Cloud ECI, and virtual nodes to mix ECS and ECI on Alibaba Cloud. Regular workloads run on subscription-billed ECS instances to save costs, while elastic workloads run on ECI, so no capacity planning is needed for elastic resources. In addition, horizontal pod scaling is combined with Alibaba Cloud Elastic Workload or the open-source OpenKruise component so that ECIs are deleted first when applications scale in.

The following sections briefly describe horizontal scaling for Jobs, Deployments, and CloneSets, the resource types commonly used by Bixin. As for vertical pod scaling, VPA is not yet mature and has many usage limits, so its auto scaling capability is not considered. However, the ability of VPA to recommend reasonable requests can be used to improve container resource utilization and avoid unreasonable request settings while ensuring that containers have sufficient resources.

For Jobs, you can add the label alibabacloud.com/eci=true to the pods so that all Jobs run on ECI. When a Job completes, its ECI is released. You do not need to reserve computing resources for Jobs, which avoids both insufficient computing power and cluster expansion.

You can add the annotation alibabacloud.com/burst-resource: eci to the pod template of every Deployment to enable ECI elastic scheduling. When ECS resources (regular nodes) in the cluster are insufficient, ECI elastic resources are used. Our Kubernetes clusters all run versions earlier than 1.21. Therefore, to delete ECI pods first when Deployments scale in, the only option is the Elastic Workload component of Alibaba Cloud.
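Adding the annotation to existing Deployments is easy to script. The sketch below patches a Deployment manifest held as a Python dictionary; only the annotation key and value come from the text above, while the helper name and the sample Deployment are hypothetical.

```python
import copy


def with_eci_burst(deployment: dict) -> dict:
    """Return a copy of the Deployment with ECI elastic scheduling enabled."""
    patched = copy.deepcopy(deployment)
    metadata = patched["spec"]["template"].setdefault("metadata", {})
    # The annotation goes on the pod template, not on the Deployment itself.
    metadata.setdefault("annotations", {})["alibabacloud.com/burst-resource"] = "eci"
    return patched


deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "demo-app"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "demo-app"}},
        "template": {
            "metadata": {"labels": {"app": "demo-app"}},
            "spec": {"containers": [{"name": "app", "image": "nginx"}]},
        },
    },
}

patched = with_eci_burst(deployment)
print(patched["spec"]["template"]["metadata"]["annotations"])
```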

Only ECI elastic scheduling is used for applications without HPA. The expected results are listed below:

You can add Elastic Workload resources to applications configured with HPA. One application corresponds to one Elastic Workload. HPA acts on Elastic Workload.

The expected results are listed below:

Create a WorkloadSpread resource before creating the CloneSet. One WorkloadSpread acts on only one CloneSet.

For applications without HPA, neither the ECS subset nor the ECI subset of WorkloadSpread sets a maximum number of replicas. The expected results are listed below:

For applications with HPA, HPA still acts on the CloneSet. The maximum number of replicas of the ECS subset of WorkloadSpread is set equal to the minimum number of replicas of HPA, and the maximum number of replicas of the ECI subset is not set. When you modify the minimum number of replicas of HPA, you must also update the maximum number of replicas of the ECS subset.
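One way to keep the two values in sync is to generate the WorkloadSpread manifest from the HPA setting. The sketch below is a hedged illustration: the field names follow the WorkloadSpread resource (apps.kruise.io/v1alpha1) as commonly documented and should be checked against your installed OpenKruise version, and the node label `type=virtual-kubelet` and the taint key `virtual-kubelet.io/provider` are assumptions about how virtual nodes are marked in the cluster.

```python
def workload_spread(cloneset_name: str, hpa_min_replicas: int) -> dict:
    """Build a WorkloadSpread whose ECS subset is capped at the HPA minimum."""
    virtual_node = {"key": "type", "values": ["virtual-kubelet"]}  # assumed label
    return {
        "apiVersion": "apps.kruise.io/v1alpha1",
        "kind": "WorkloadSpread",
        "metadata": {"name": f"{cloneset_name}-spread"},
        "spec": {
            "targetRef": {
                "apiVersion": "apps.kruise.io/v1alpha1",
                "kind": "CloneSet",
                "name": cloneset_name,
            },
            "subsets": [
                {   # Regular ECS nodes: filled first, capped at the HPA minimum.
                    "name": "ecs",
                    "maxReplicas": hpa_min_replicas,
                    "requiredNodeSelectorTerm": {
                        "matchExpressions": [dict(virtual_node, operator="NotIn")]
                    },
                },
                {   # ECI through the virtual node: no maxReplicas, takes the rest.
                    "name": "eci",
                    "requiredNodeSelectorTerm": {
                        "matchExpressions": [dict(virtual_node, operator="In")]
                    },
                    "tolerations": [
                        {"key": "virtual-kubelet.io/provider", "operator": "Exists"}
                    ],
                },
            ],
        },
    }


spread = workload_spread("demo-app", hpa_min_replicas=3)
print([(s["name"], s.get("maxReplicas")) for s in spread["spec"]["subsets"]])
```

Generating both the HPA and the WorkloadSpread from the same value avoids the manual synchronization step described above.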

The expected results are listed below:

According to the horizontal Auto Scaling methods of Deployment and CloneSet mentioned above, ECI cannot be automatically and completely deleted in time.

ECI uses pay-as-you-go billing. If an ECI runs for too long, it becomes more expensive than subscription-billed ECS. Therefore, monitoring is needed so that regular node resources are scaled out in time when they run short. ECI itself also needs monitoring and alerting: if ECIs have been running for a long time (for example, three days), notify the application owners of these instances and ask them to restart the ECIs so that the new pods are scheduled to ECS.
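The long-running check can be sketched as a filter over pod records. The record layout (`name`, `owner`, `on_eci`, `started`) is a simplification invented for this example; in practice the data would come from the Kubernetes API or a monitoring system.

```python
from datetime import datetime, timedelta, timezone


def long_running_eci_pods(pods: list, now: datetime,
                          max_age: timedelta = timedelta(days=3)) -> list:
    """Return the ECI pods that have been running longer than max_age."""
    return [p for p in pods if p["on_eci"] and now - p["started"] > max_age]


now = datetime(2022, 6, 10, 12, 0, tzinfo=timezone.utc)
pods = [
    {"name": "app-a-1", "owner": "team-a", "on_eci": True,
     "started": now - timedelta(days=5)},
    {"name": "app-a-2", "owner": "team-a", "on_eci": False,
     "started": now - timedelta(days=30)},
    {"name": "app-b-1", "owner": "team-b", "on_eci": True,
     "started": now - timedelta(hours=6)},
]

for pod in long_running_eci_pods(pods, now):
    # In a real setup this line would send an alert to the application owner.
    print(f"notify {pod['owner']}: {pod['name']} has run on ECI for over 3 days")
```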

The requests of some applications are set too high, so resource utilization remains very low even after scaling in to one pod. In this case, VPA could be used for vertical scaling to improve resource utilization. However, automatic scaling by VPA is still experimental and is not recommended, so VPA is used only to obtain recommended request values.

After the VPA components are deployed in Kubernetes, a VerticalPodAutoscaler resource type is added. You can create a VerticalPodAutoscaler object whose updateMode is Off for each Deployment. VPA periodically obtains the resource usage metrics of all containers under a Deployment from Metrics Server, calculates a reasonable recommended request value, and records it in the VerticalPodAutoscaler object corresponding to the Deployment.

You can write your own code to extract the recommended values from the VerticalPodAutoscaler objects and aggregate them by application. The results are then displayed on a page, where application owners can see directly whether their request settings are reasonable, and O&M personnel can use the data to push for reducing application resource configurations.
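Such code might look like the following sketch. It reads the `status.recommendation.containerRecommendations` field of a VerticalPodAutoscaler object (passed in as a dictionary) and compares the recommended CPU target with the currently configured request. The sample object, the helper names, and the report layout are illustrative assumptions, and only CPU quantities in cores or millicores are parsed.

```python
def parse_cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '1.5' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


def cpu_targets(vpa: dict) -> dict:
    """Map container name to the recommended CPU target in millicores."""
    recs = (vpa.get("status", {})
               .get("recommendation", {})
               .get("containerRecommendations", []))
    return {r["containerName"]: parse_cpu_millicores(r["target"]["cpu"]) for r in recs}


def request_report(app: str, vpa: dict, current_requests_m: dict) -> list:
    """Rows of (app, container, current request, target, request/target ratio)."""
    rows = []
    for container, target in cpu_targets(vpa).items():
        current = current_requests_m[container]
        rows.append((app, container, current, target, round(current / target, 2)))
    return rows


# Illustrative VPA object as it might be returned by the Kubernetes API.
vpa = {
    "metadata": {"name": "demo-app"},
    "spec": {"updatePolicy": {"updateMode": "Off"}},
    "status": {"recommendation": {"containerRecommendations": [
        {"containerName": "app", "target": {"cpu": "250m", "memory": "256Mi"}},
    ]}},
}
print(request_report("demo-app", vpa, {"app": 1000}))
```

A ratio well above 1, such as 4.0 here, indicates that the container requests far more CPU than VPA recommends.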

This article introduces auto scaling components (such as HPA, VPA, CA, Virtual Kubelet, ACK, Alibaba Cloud ECI, Alibaba Cloud ElasticWorkload, and OpenKruise WorkloadSpread) and discusses how Bixin uses them to achieve low cost and high elasticity on Kubernetes. Bixin is actively rolling out some of these components and using auto scaling to reduce costs effectively. Bixin will continue to follow industry developments and keep improving its elastic solutions.

1) Document of Alibaba Cloud ACK + ECI: https://www.alibabacloud.com/help/en/elastic-container-instance/latest/use-elastic-container-instance-in-ack-clusters

2) Official Website of CNCF OpenKruise: https://openkruise.io/
