Reporter: Hello again, Alibaba Cloud-Native readers. This is the final interview in which our old friend, the Distribution of Alibaba Cloud Container Service for Kubernetes (ACK Distro), shares its experiences. In the previous interviews, it gave us wonderful explanations and interpretations. If you missed them, do not forget to go back and read them. Since its launch in December 2021, ACK Distro has received much attention and support and has reached a satisfying number of downloads. Do you have any comments on this?

ACK Distro: Yes, I was fortunate to receive more than 400 downloads in the first three months after launch (December 2021 to March 2022). I have also exchanged technical ideas with many of you through different channels. Thank you for your attention, and I hope you have an even better experience with container services.

Reporter: OK, let's start this interview. I learned before that Sealer can help you achieve rapid building and deployment, and Hybridnet can help build a hybrid cloud unified network plane, so which versatile partner will you introduce to us today?

ACK Distro: As we all know, stateful applications in a cloud-native context need a storage solution for persistent data. Compared with distributed storage, local storage is better in cost, usability, maintainability, and IO performance. Today, I will introduce Open-Local, Alibaba's open-source local storage management system, and explain how I use it to provide local storage for containers. First of all, let me explain why Open-Local was created. Despite the advantages of local storage just mentioned, local storage still has many problems when it comes to the low-cost delivery of Kubernetes clusters:

However, Open-Local avoids those problems as far as possible and gives everyone a better experience: using local storage on Kubernetes becomes as simple as using centralized storage.

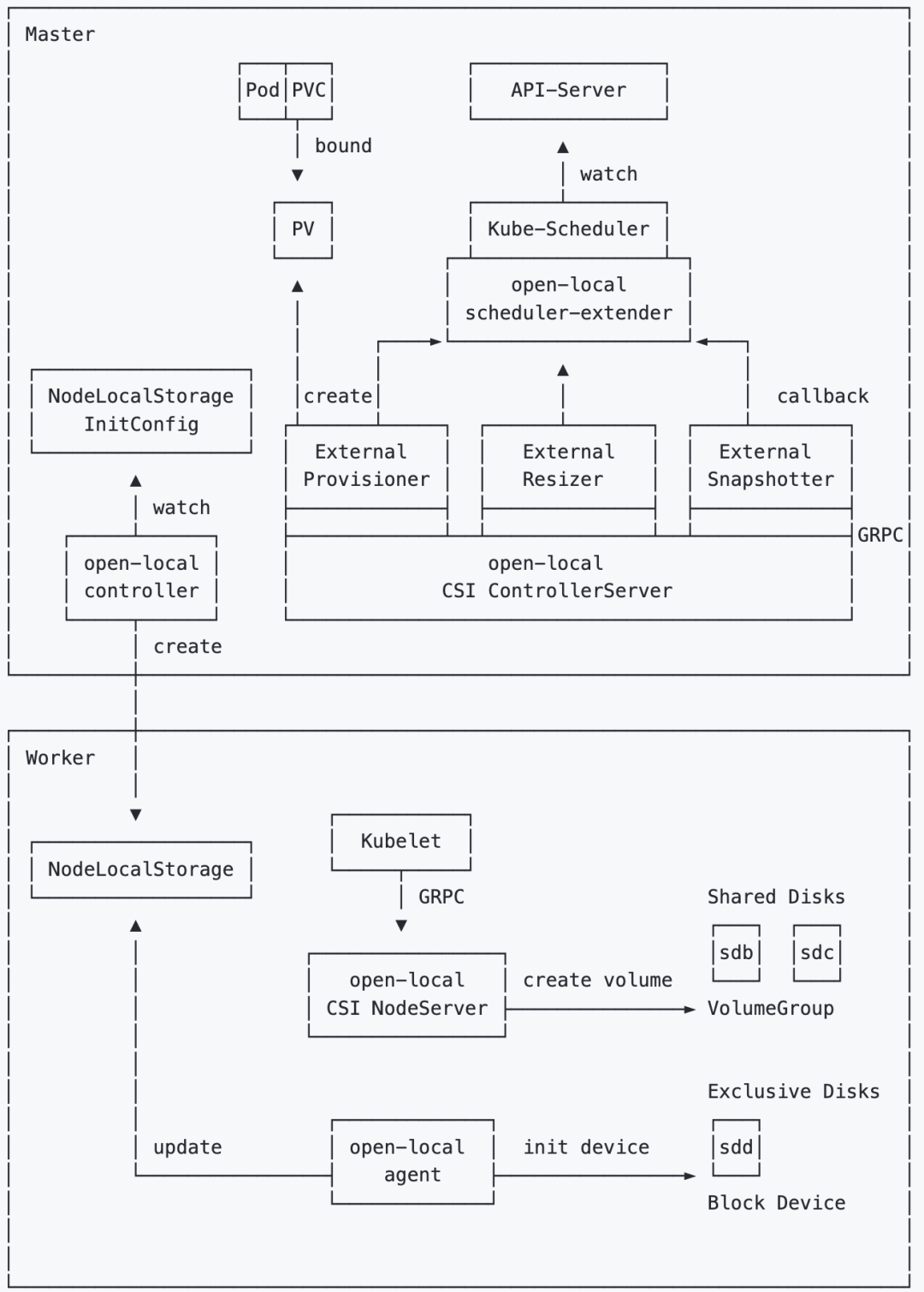

Reporter: Can you explain the architectural components of Open-Local?

ACK Distro: Yes, Open-Local contains four components:

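At the same time, Open-Local contains two CRDs, both of which appear in the steps later in this interview: NodeLocalStorage and NodeLocalStorageInitConfig.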

Please refer to the following figure for the architecture diagram of Open-Local:

Reporter: In which scenarios would developers use Open-Local?

ACK Distro: I have summarized the following cases. You can choose which one matches your situation.

Reporter: How does Open-Local bring its advantages into play? How do you use Open-Local to achieve best practices?

ACK Distro: Let me explain it by category.

First, make sure that the LVM tools are installed in the environment. I install Open-Local by default during the installation and deployment step. After that, edit the NodeLocalStorageInitConfig resource to initialize the storage configuration.

# kubectl edit nlsc open-local

Using Open-Local requires a VolumeGroup (VG) in the environment. If a VG already exists in your environment and has free space, you can add it to the Open-Local whitelist. If no VG exists in your environment, you need to provide a block device name so that Open-Local can create a VG.

apiVersion: csi.aliyun.com/v1alpha1
kind: NodeLocalStorageInitConfig
metadata:
  name: open-local
spec:
  globalConfig: # The global default node configuration, which is populated into its Spec when the NodeLocalStorage is created in the initialization stage
    listConfig:
      vgs:
        include: # The VolumeGroup whitelist, which supports regular expressions.
        - open-local-pool-[0-9]+
        - your-vg-name # If there is a VG in the environment, it can be written to the whitelist and managed by open-local
    resourceToBeInited:
      vgs:
      - devices:
        - /dev/vdc # If there is no VG in the environment, the user needs to provide a block device
        name: open-local-pool-0 # Initialize the block device /dev/vdc to a VG named open-local-pool-0

After the NodeLocalStorageInitConfig resource is edited, the controller and agent update the NodeLocalStorage resources of all nodes.
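To get a feel for how the `include` whitelist behaves, the patterns can be tried against candidate VG names locally. The sketch below is only an approximation: it assumes each pattern must match the whole VG name and uses `grep -E` rather than Open-Local's own matcher. The function name `vg_is_whitelisted` and the sample name `data-vg` are hypothetical.

```shell
# Approximate the VG whitelist from NodeLocalStorageInitConfig with grep -E.
# Assumption: a pattern has to match the entire VG name (-x); the matching
# rules that Open-Local really applies may differ.
vg_is_whitelisted() {
  printf '%s\n' "$1" | grep -Eqx -e 'open-local-pool-[0-9]+' -e 'your-vg-name'
}

# data-vg is a made-up name that none of the patterns cover.
for vg in open-local-pool-0 open-local-pool-12 your-vg-name data-vg; do
  if vg_is_whitelisted "$vg"; then
    echo "$vg: matches the whitelist"
  else
    echo "$vg: not matched"
  fi
done
```

Under these assumptions, only `data-vg` is reported as not matched.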

Open-Local deploys some storage class templates in the cluster by default. Let's take open-local-lvm, open-local-lvm-xfs, and open-local-lvm-io-throttling as examples:

# kubectl get sc
NAME                           PROVISIONER            RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
open-local-lvm                 local.csi.aliyun.com   Delete          WaitForFirstConsumer   true                   8d
open-local-lvm-xfs             local.csi.aliyun.com   Delete          WaitForFirstConsumer   true                   6h56m
open-local-lvm-io-throttling   local.csi.aliyun.com   Delete          WaitForFirstConsumer   true

Create a StatefulSet that uses the open-local-lvm storage class template. In this case, the file system of the created PV is ext4. If you specify the open-local-lvm-xfs storage class template instead, the PV file system is XFS.

# kubectl apply -f https://raw.githubusercontent.com/alibaba/open-local/main/example/lvm/sts-nginx.yaml

Check the status of the pod, Persistent Volume Claim (PVC), and PV to confirm that the PV was created successfully:

# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
nginx-lvm-0   1/1     Running   0          3m5s

# kubectl get pvc
NAME               STATUS   VOLUME                                       CAPACITY   ACCESS MODES   STORAGECLASS     AGE
html-nginx-lvm-0   Bound    local-52f1bab4-d39b-4cde-abad-6c5963b47761   5Gi        RWO            open-local-lvm   104s

# kubectl get pv
NAME                                         CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                      STORAGECLASS     AGE
local-52f1bab4-d39b-4cde-abad-6c5963b47761   5Gi        RWO            Delete           Bound    default/html-nginx-lvm-0   open-local-lvm   2m4s

# kubectl describe pvc html-nginx-lvm-0

To expand the volume, edit the spec.resources.requests.storage field of the PVC so that its declared storage grows from 5Gi to 20Gi.
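The expansion boils down to one field in the PVC spec, and it is possible here because the storage classes listed earlier have ALLOWVOLUMEEXPANSION set to true. As a small sketch, the patch body can be generated for any target size; `pvc_resize_patch` is a hypothetical helper, not part of kubectl or Open-Local.

```shell
# Build the JSON patch that sets spec.resources.requests.storage.
# pvc_resize_patch is a hypothetical helper name used only in this sketch.
pvc_resize_patch() {
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$1"
}

# Print the patch for the 20Gi target used in this walkthrough.
pvc_resize_patch 20Gi

# With cluster access, the same string can be passed to kubectl:
#   kubectl patch pvc html-nginx-lvm-0 -p "$(pvc_resize_patch 20Gi)"
```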

# kubectl patch pvc html-nginx-lvm-0 -p '{"spec":{"resources":{"requests":{"storage":"20Gi"}}}}'

Check the status of the PVC and PV:

# kubectl get pvc
NAME               STATUS   VOLUME                                       CAPACITY   ACCESS MODES   STORAGECLASS     AGE
html-nginx-lvm-0   Bound    local-52f1bab4-d39b-4cde-abad-6c5963b47761   20Gi       RWO            open-local-lvm   7h4m

# kubectl get pv
NAME                                         CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                      STORAGECLASS     REASON   AGE
local-52f1bab4-d39b-4cde-abad-6c5963b47761   20Gi       RWO            Delete           Bound    default/html-nginx-lvm-0   open-local-lvm            7h4m

Open-Local has the following snapshot classes:

# kubectl get volumesnapshotclass
NAME             DRIVER                 DELETIONPOLICY   AGE
open-local-lvm   local.csi.aliyun.com   Delete           20m

Create a VolumeSnapshot resource by applying the example manifest.
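The example manifest itself is not reproduced in this interview. As a rough sketch, a VolumeSnapshot for this step might look like the following. The snapshot name, snapshot class, and source PVC are taken from the command output of this walkthrough. The `apiVersion` is an assumption (older clusters may serve `v1beta1` instead), and `volume_snapshot_manifest` is a hypothetical helper name.

```shell
# Print a VolumeSnapshot manifest. The names come from the command output in
# this walkthrough; the apiVersion is an assumption and may differ per cluster.
volume_snapshot_manifest() {
  cat <<'EOF'
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: new-snapshot-test
spec:
  volumeSnapshotClassName: open-local-lvm
  source:
    persistentVolumeClaimName: html-nginx-lvm-0
EOF
}

volume_snapshot_manifest

# With cluster access: volume_snapshot_manifest | kubectl apply -f -
```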

# kubectl apply -f https://raw.githubusercontent.com/alibaba/open-local/main/example/lvm/snapshot.yaml
volumesnapshot.snapshot.storage.k8s.io/new-snapshot-test created

# kubectl get volumesnapshot
NAME                READYTOUSE   SOURCEPVC          SOURCESNAPSHOTCONTENT   RESTORESIZE   SNAPSHOTCLASS    SNAPSHOTCONTENT                                    CREATIONTIME   AGE
new-snapshot-test   true         html-nginx-lvm-0                           1863          open-local-lvm   snapcontent-815def28-8979-408e-86de-1e408033de65   19s            19s

# kubectl get volumesnapshotcontent
NAME                                               READYTOUSE   RESTORESIZE   DELETIONPOLICY   DRIVER                 VOLUMESNAPSHOTCLASS   VOLUMESNAPSHOT      AGE
snapcontent-815def28-8979-408e-86de-1e408033de65   true         1863          Delete           local.csi.aliyun.com   open-local-lvm        new-snapshot-test   48s

Create a new pod. The data on the PV of this pod is the same as the data of the previous application at the snapshot point.
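Restoring from a snapshot is normally expressed by pointing a PVC at the VolumeSnapshot through `dataSource`. The sketch below shows a standalone PVC under that assumption. The real example uses a StatefulSet whose manifest is not shown here, the claim name `restored-html` is hypothetical, and the 4Gi size simply mirrors the capacity reported for this step.

```shell
# Print a PVC that is populated from the snapshot new-snapshot-test.
# restored-html is a hypothetical claim name; the size mirrors the 4Gi
# capacity reported for the restored volume in this walkthrough.
restore_pvc_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: restored-html
spec:
  storageClassName: open-local-lvm
  dataSource:
    apiGroup: snapshot.storage.k8s.io
    kind: VolumeSnapshot
    name: new-snapshot-test
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4Gi
EOF
}

restore_pvc_manifest
```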

# kubectl apply -f https://raw.githubusercontent.com/alibaba/open-local/main/example/lvm/sts-nginx-snap.yaml
service/nginx-lvm-snap created
statefulset.apps/nginx-lvm-snap created

# kubectl get po -l app=nginx-lvm-snap
NAME               READY   STATUS    RESTARTS   AGE
nginx-lvm-snap-0   1/1     Running   0          46s

# kubectl get pvc -l app=nginx-lvm-snap
NAME                    STATUS   VOLUME                                       CAPACITY   ACCESS MODES   STORAGECLASS     AGE
html-nginx-lvm-snap-0   Bound    local-1c69455d-c50b-422d-a5c0-2eb5c7d0d21b   4Gi        RWO            open-local-lvm   2m11s

Open-Local also supports mounting a created PV into a container as a raw block device. In this example, the block device appears at the /dev/sdd path inside the container.
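In Kubernetes terms, a raw block volume is requested with `volumeMode: Block` on the claim and attached with `volumeDevices` instead of `volumeMounts`. The sketch below illustrates that pairing with a standalone PVC and pod. The names `block-pvc` and `block-demo` and the `nginx` image are hypothetical, and the real example uses a StatefulSet whose manifest is not shown here.

```shell
# Print a PVC in Block mode and a pod that attaches it at /dev/sdd.
# block-pvc, block-demo and the nginx image are hypothetical placeholders.
block_volume_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: block-pvc
spec:
  storageClassName: open-local-lvm
  volumeMode: Block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: block-demo
spec:
  containers:
    - name: app
      image: nginx
      volumeDevices:
        - name: data
          devicePath: /dev/sdd
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: block-pvc
EOF
}

block_volume_manifest
```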

# kubectl apply -f https://raw.githubusercontent.com/alibaba/open-local/main/example/lvm/sts-block.yaml

Check the status of the pod, PVC, and PV:

# kubectl get pod
NAME                READY   STATUS    RESTARTS   AGE
nginx-lvm-block-0   1/1     Running   0          25s

# kubectl get pvc
NAME                     STATUS   VOLUME                                       CAPACITY   ACCESS MODES   STORAGECLASS     AGE
html-nginx-lvm-block-0   Bound    local-b048c19a-fe0b-455d-9f25-b23fdef03d8c   5Gi        RWO            open-local-lvm   36s

# kubectl describe pvc html-nginx-lvm-block-0
Name:          html-nginx-lvm-block-0
Namespace:     default
StorageClass:  open-local-lvm
...
Access Modes:  RWO
VolumeMode:    Block # Mount to the container as a block device
Mounted By:    nginx-lvm-block-0
...

Open-Local supports setting IO throttling for PVs. The following storage class template supports IO throttling:

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: open-local-lvm-io-throttling
provisioner: local.csi.aliyun.com
parameters:
  csi.storage.k8s.io/fstype: ext4
  volumeType: "LVM"
  bps: "1048576" # The read/write throughput is limited to 1024KiB/s or so
  iops: "1024" # The IOPS is limited to 1024 or so
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true

Create a StatefulSet that uses the open-local-lvm-io-throttling storage class template.
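Before running a workload, it can help to translate the throttling parameters of the storage class above into more familiar units. The sketch below assumes that `bps` is expressed in bytes per second, which is what the comment in the storage class (1048576 for about 1024KiB/s) suggests. Both helper names are hypothetical.

```shell
# Hypothetical helpers for reading the throttling parameters.
# Assumption: bps is a byte-per-second value, as the storage class comment
# (1048576 -> roughly 1024KiB/s) suggests.
bps_to_kib_per_sec() {
  echo $(( $1 / 1024 ))
}

# Requests per second that fit under the bps cap.
# $1: bps value, $2: request size in KiB.
requests_per_sec_at_cap() {
  echo $(( $1 / 1024 / $2 ))
}

echo "throughput cap: $(bps_to_kib_per_sec 1048576) KiB/s"
echo "16 KiB requests per second under that cap: $(requests_per_sec_at_cap 1048576 16)"
```

With 16 KiB requests (the block size of the fio run later in this walkthrough), the throughput cap allows about 64 requests per second, far below the configured 1024 IOPS. In that test, the bps limit is therefore the one expected to take effect first.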

# kubectl apply -f https://raw.githubusercontent.com/alibaba/open-local/main/example/lvm/sts-io-throttling.yaml

After the pod is in the Running state, enter the pod container:

# kubectl exec -it test-io-throttling-0 -- sh

The PV is now mounted at /dev/sdd as a native block device. Run the fio command against it:

# fio -name=test -filename=/dev/sdd -ioengine=psync -direct=1 -iodepth=1 -thread -bs=16k -rw=readwrite -numjobs=32 -size=1G -runtime=60 -time_based -group_reporting

The result is listed below. The read/write throughput is limited to roughly 1024KiB/s:

......
Run status group 0 (all jobs):
   READ: bw=1024KiB/s (1049kB/s), 1024KiB/s-1024KiB/s (1049kB/s-1049kB/s), io=60.4MiB (63.3MB), run=60406-60406msec
  WRITE: bw=993KiB/s (1017kB/s), 993KiB/s-993KiB/s (1017kB/s-1017kB/s), io=58.6MiB (61.4MB), run=60406-60406msec

Disk stats (read/write):
    dm-1: ios=3869/3749, merge=0/0, ticks=4848/17833, in_queue=22681, util=6.68%, aggrios=3112/3221, aggrmerge=774/631, aggrticks=3921/13598, aggrin_queue=17396, aggrutil=6.75%
  vdb: ios=3112/3221, merge=774/631, ticks=3921/13598, in_queue=17396, util=6.75%

Open-Local supports creating temporary volumes for pods. The lifecycle of a temporary volume is the same as that of its pod, so the volume is deleted when the pod is deleted. You can think of it as the Open-Local version of emptyDir.
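The example manifest is not reproduced in this interview. Based on the fields visible in the `kubectl describe` output for this step (driver, volume attributes, volume name, and mount path), a pod with an inline CSI volume might look roughly like the sketch below. The `nginx` image and the helper name `ephemeral_pod_manifest` are hypothetical.

```shell
# Print a pod with an inline (ephemeral) CSI volume served by Open-Local.
# Driver, attributes, volume name and mount path mirror the describe output
# of this walkthrough; the container image is a hypothetical placeholder.
ephemeral_pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: file-server
spec:
  containers:
    - name: file-server
      image: nginx
      volumeMounts:
        - name: webroot
          mountPath: /srv
  volumes:
    - name: webroot
      csi:
        driver: local.csi.aliyun.com
        volumeAttributes:
          vgName: open-local-pool-0
          size: 2Gi
EOF
}

ephemeral_pod_manifest
```

Because the volume is declared inside the pod spec rather than through a PVC, nothing is left to clean up once the pod is gone.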

# kubectl apply -f ./example/lvm/ephemeral.yamlThe result is listed below:

# kubectl describe po file-server

Name: file-server

Namespace: default

......

Containers:

file-server:

......

Mounts:

/srv from webroot (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-dns4c (ro)

Volumes:

webroot: # This is a CSI temporary volume

Type: CSI (a Container Storage Interface (CSI) volume source)

Driver: local.csi.aliyun.com

FSType:

ReadOnly: false

VolumeAttributes: size=2Gi

vgName=open-local-pool-0

default-token-dns4c:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-dns4c

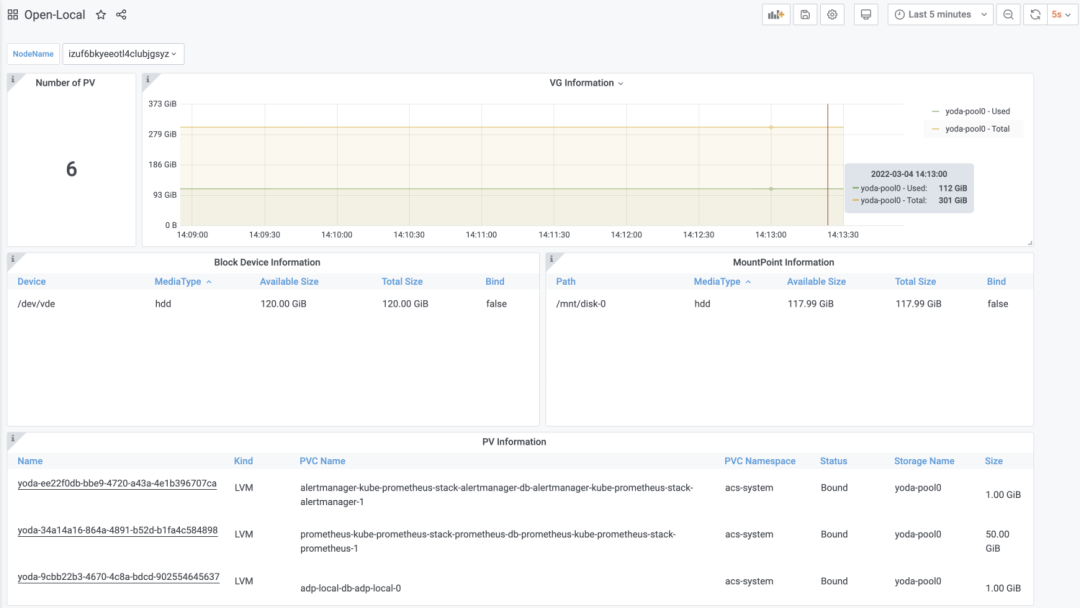

Optional: falseOpen-Local is equipped with a monitoring dashboard. You can use Grafana to view the information of the local storage in the cluster, including the information about storage devices and PVs. The dashboard is shown in the following figure:

ACK Distro: In short, you can reduce the labor costs of O&M and improve the stability of cluster runtime with the help of Open-Local. In terms of features, it maximizes the advantages of local storage to provide users with the high performance of local disks and enrich application scenarios with various advanced storage features. It allows developers to experience the benefits of cloud-native. It is a crucial step for applications to move to the cloud, especially for stateful applications to realize cloud-native deployment.

Reporter: Thank you for your wonderful explanation, ACK Distro. These three interviews have given us a deeper understanding of ACK Distro and its partners. I also hope that this interview can help anyone reading the article.

ACK Distro: Yes, the project team and I look forward to hearing from all of you in the GitHub community!

[1] The Open-Source Repository Link of Open-Local:

https://github.com/alibaba/Open-Local

[2] The Official Website of Alibaba Cloud Container Service for Kubernetes (ACK)

https://www.alibabacloud.com/product/kubernetes

[3] The Official GitHub of ACK Distro:

https://github.com/AliyunContainerService/ackdistro

[5] An In-Depth Interview with ACK Distro– Part 1:

https://www.alibabacloud.com/blog/an-in-depth-interview-with-ack-distro-part-1-how-to-use-sealer-to-achieve-rapid-building-and-deployment_598923

[6] An In-Depth Interview with ACK Distro – Part 2:

https://www.alibabacloud.com/blog/an-in-depth-interview-with-ack-distro-part-2-how-to-build-a-hybrid-cloud-unified-network-plane-with-hybridnet_598925
