By Haoran Wang, Sr. Big Data Solution Architect of Alibaba Cloud

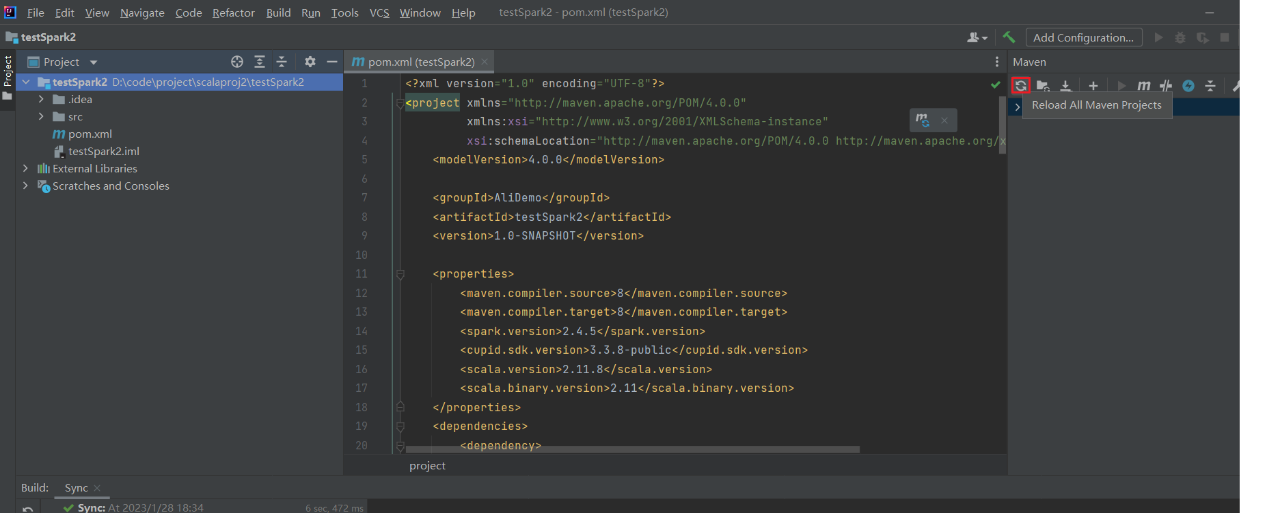

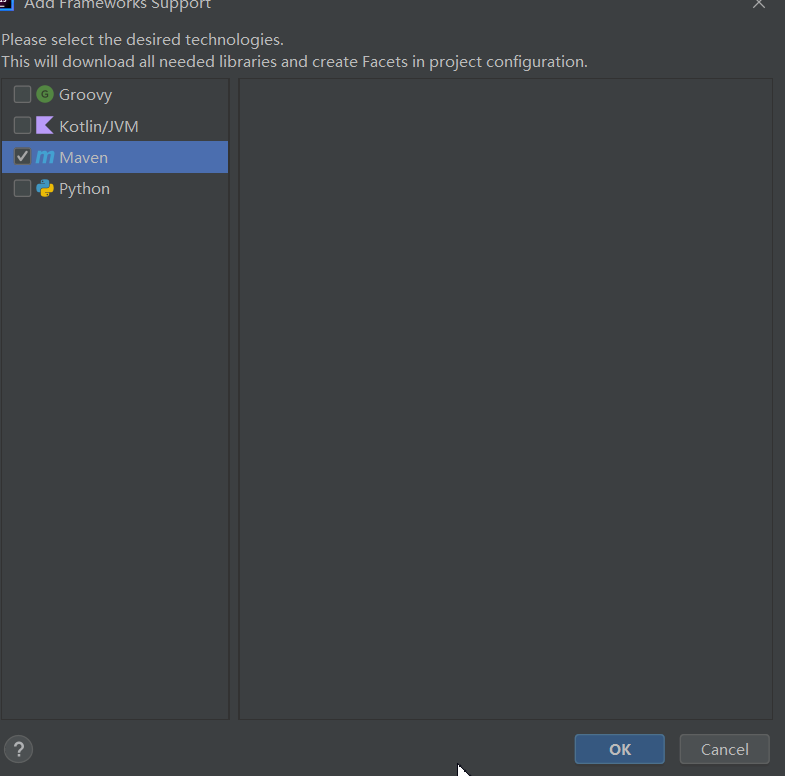

Add the following information, then click reload.

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>AliDemo</groupId>

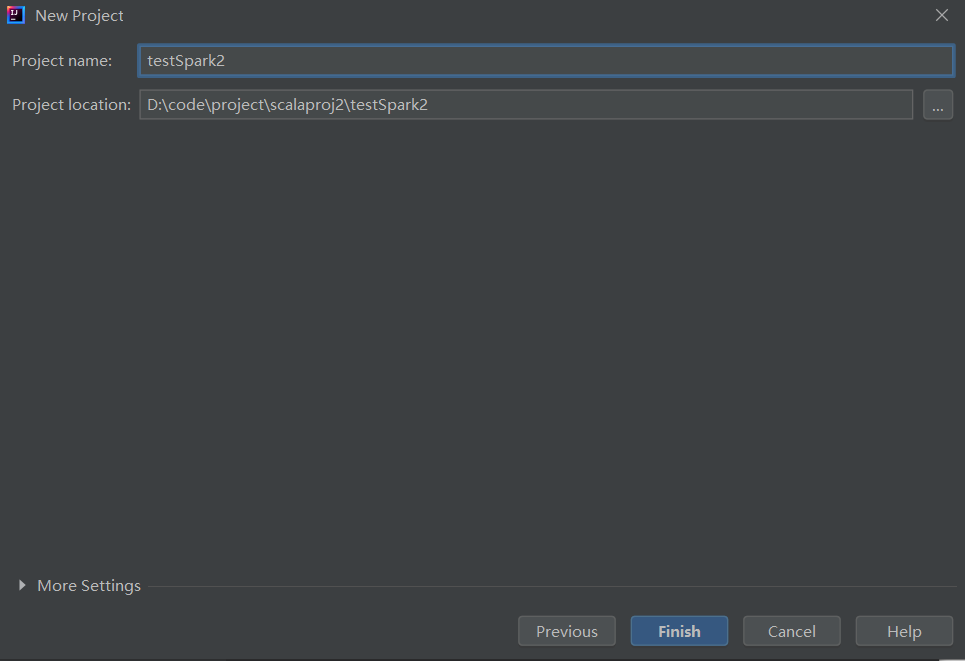

<artifactId>testSpark2</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<spark.version>2.4.5</spark.version>

<cupid.sdk.version>3.3.8-public</cupid.sdk.version>

<scala.version>2.11.8</scala.version>

<scala.binary.version>2.11</scala.binary.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

<scope>provided</scope>

<exclusions>

<exclusion>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

</exclusion>

<exclusion>

<groupId>org.scala-lang</groupId>

<artifactId>scalap</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.aliyun.odps</groupId>

<artifactId>cupid-sdk</artifactId>

<version>${cupid.sdk.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>com.aliyun.odps</groupId>

<artifactId>hadoop-fs-oss</artifactId>

<version>${cupid.sdk.version}</version>

</dependency>

<dependency>

<groupId>com.aliyun.odps</groupId>

<artifactId>odps-spark-datasource_${scala.binary.version}</artifactId>

<version>${cupid.sdk.version}</version>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-actors</artifactId>

<version>${scala.version}</version>

</dependency>

<!-- datahub streaming依赖 -->

<dependency>

<groupId>com.aliyun.emr</groupId>

<artifactId>emr-datahub_${scala.binary.version}</artifactId>

<version>1.6.0</version>

</dependency>

<dependency>

<groupId>com.aliyun.odps</groupId>

<artifactId>streaming-lib</artifactId>

<version>3.3.8-public</version>

<exclusions>

<exclusion>

<groupId>net.jpountz.lz4</groupId>

<artifactId>lz4</artifactId>

</exclusion>

<exclusion>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

</exclusion>

<exclusion>

<groupId>org.scala-lang</groupId>

<artifactId>scalap</artifactId>

</exclusion>

</exclusions>

</dependency>

</dependencies>

</project>

//package com.aliyun.odps.spark.examples.sparksql;

import com.aliyun.odps.Odps;

import com.aliyun.odps.cupid.CupidSession;

import org.apache.spark.sql.SparkSession;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.RowFactory;

import org.apache.spark.sql.types.*;

import java.util.ArrayList;

import java.util.List;

import org.apache.spark.sql.types.StructField;

public class SparkDemo2 {

public static void main(String[] args) {

SparkSession spark = SparkSession

.builder()

.appName("SparkSQL-on-MaxCompute")

.config("spark.sql.broadcastTimeout", 20 * 60)

.config("spark.sql.crossJoin.enabled", true)

.config("odps.exec.dynamic.partition.mode", "nonstrict")

.getOrCreate();

JavaSparkContext sparkContext = new JavaSparkContext(spark.sparkContext());

String tableName = "mc_test_table";

String tableNameCopy = "mc_test_table_copy";

String ptTableName = "mc_test_pt_table";

spark.sql("DROP TABLE IF EXISTS " + tableName);

spark.sql("DROP TABLE IF EXISTS " + tableNameCopy);

spark.sql("DROP TABLE IF EXISTS " + ptTableName);

spark.sql("CREATE TABLE " + tableName + " (name STRING, num BIGINT)");

spark.sql("CREATE TABLE " + ptTableName + " (name STRING, num BIGINT) PARTITIONED BY (pt1 STRING, pt2 STRING)");

List<Integer> data = new ArrayList<Integer>();

for (int i = 0; i < 100; i++) {

data.add(i);

}

JavaRDD<Row> dfRDD = sparkContext.parallelize(data, 2).map(new Function<Integer, Row>() {

public Row call(Integer i) {

return RowFactory.create(

"name-" + i.toString(),

Long.valueOf(i));

}

});

JavaRDD<Row> ptDfRDD = sparkContext.parallelize(data, 2).map(new Function<Integer, Row>() {

public Row call(Integer i) {

return RowFactory.create(

"name-" + i.toString(),

Long.valueOf(i),

"2018",

"0601");

}

});

List<StructField> structFilelds = new ArrayList<StructField>();

structFilelds.add(DataTypes.createStructField("name", DataTypes.StringType, true));

structFilelds.add(DataTypes.createStructField("num", DataTypes.LongType, true));

Dataset<Row> df = spark.createDataFrame(dfRDD, DataTypes.createStructType(structFilelds));

structFilelds.add(DataTypes.createStructField("pt1", DataTypes.StringType, true));

structFilelds.add(DataTypes.createStructField("pt2", DataTypes.StringType, true));

Dataset<Row> ptDf = spark.createDataFrame(ptDfRDD, DataTypes.createStructType(structFilelds));

// write regular table

df.write().insertInto(tableName); // insertInto语义

df.write().mode("overwrite").insertInto(tableName);// insertOverwrite语义

// read regular table

Dataset<Row> rdf = spark.sql("select name, num from " + tableName);

System.out.println("rdf count: " + rdf.count());

rdf.printSchema();

//create table as select

spark.sql("CREATE TABLE " + tableNameCopy + " AS SELECT name, num FROM " + tableName);

spark.sql("SELECT * FROM " + tableNameCopy).show();

// write partitioned table

// DataFrameWriter cannot specify partition writing, need to use temporary table and then write to a specific partition with SQL

df.registerTempTable(ptTableName + "_tmp_view");

spark.sql("insert into table " + ptTableName + " partition (pt1='2018', pt2='0601') select * from " + ptTableName + "_tmp_view");

spark.sql("insert overwrite table " + ptTableName + " partition (pt1='2018', pt2='0601') select * from " + ptTableName + "_tmp_view");

ptDf.write().insertInto(ptTableName);// dynamic partition insertInto semantics

ptDf.write().mode("overwrite").insertInto(ptTableName); // dynamic partition insertOverwrite semantics

// read partitioned table

Dataset<Row> rptdf = spark.sql("select name, num, pt1, pt2 from " + ptTableName + " where pt1 = '2018' and pt2 = '0601'");

System.out.println("rptdf count: " + rptdf.count());

rptdf.printSchema();

Odps odps = CupidSession.get().odps();

System.out.println(odps.tables().get(ptTableName).getPartitions().size());

System.out.println(odps.tables().get(ptTableName).getPartitions().get(0).getPartitionSpec());

}

}

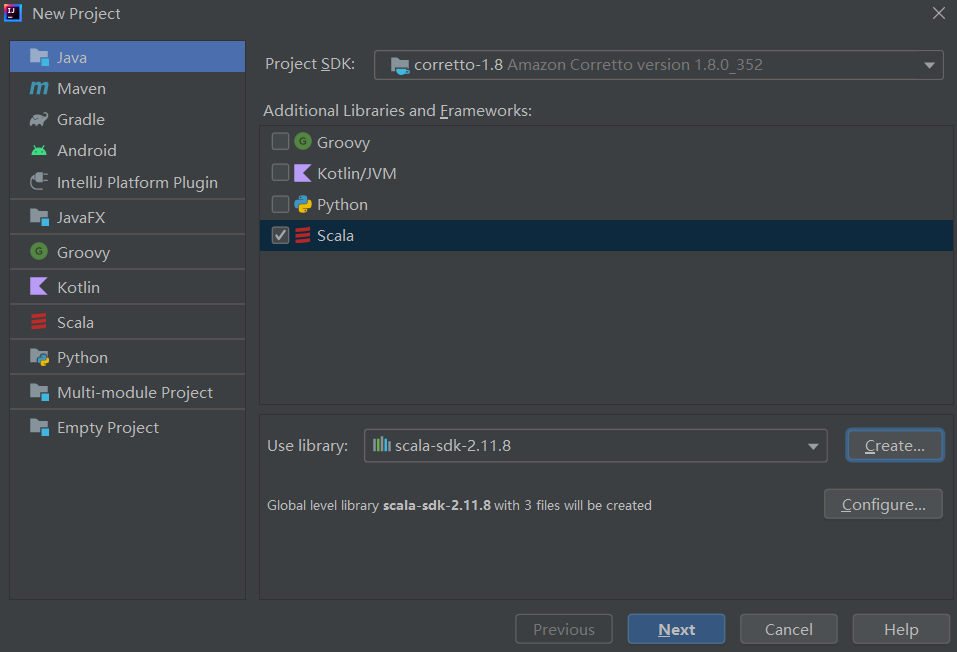

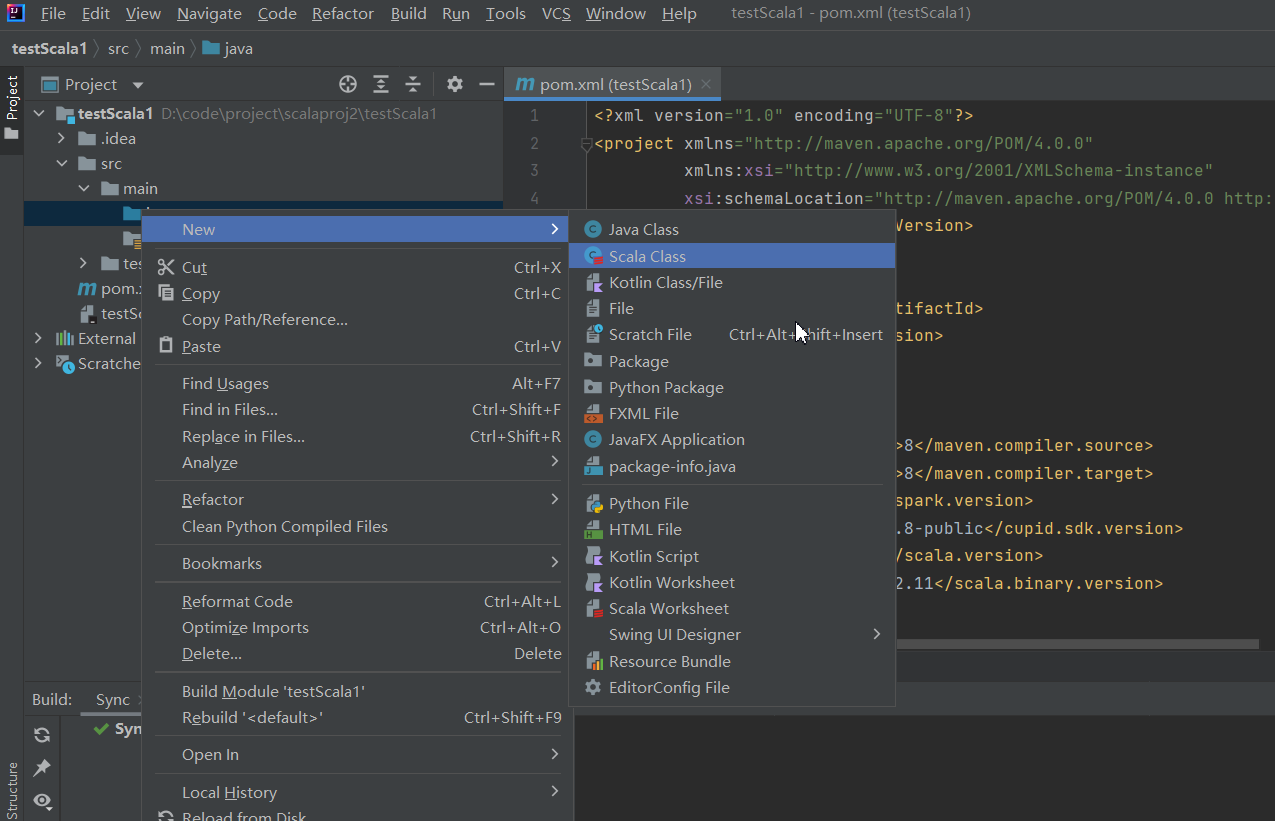

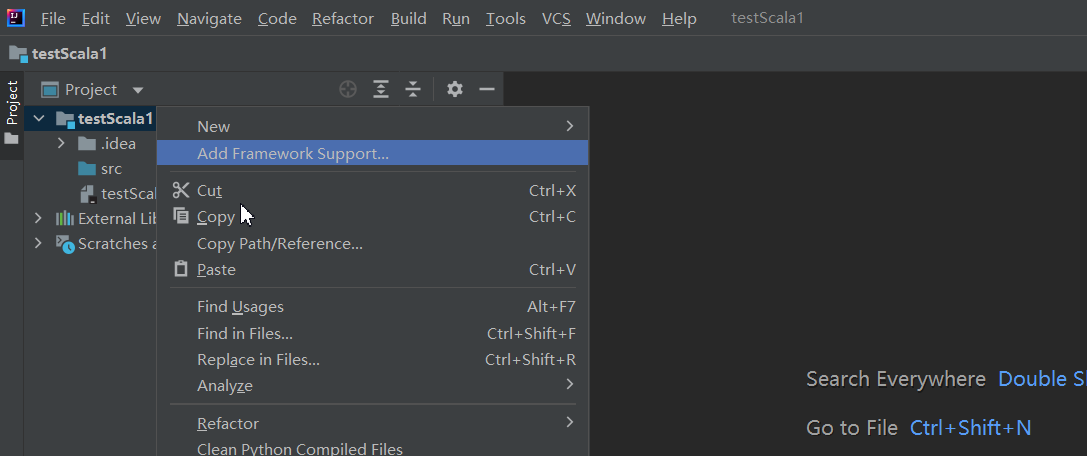

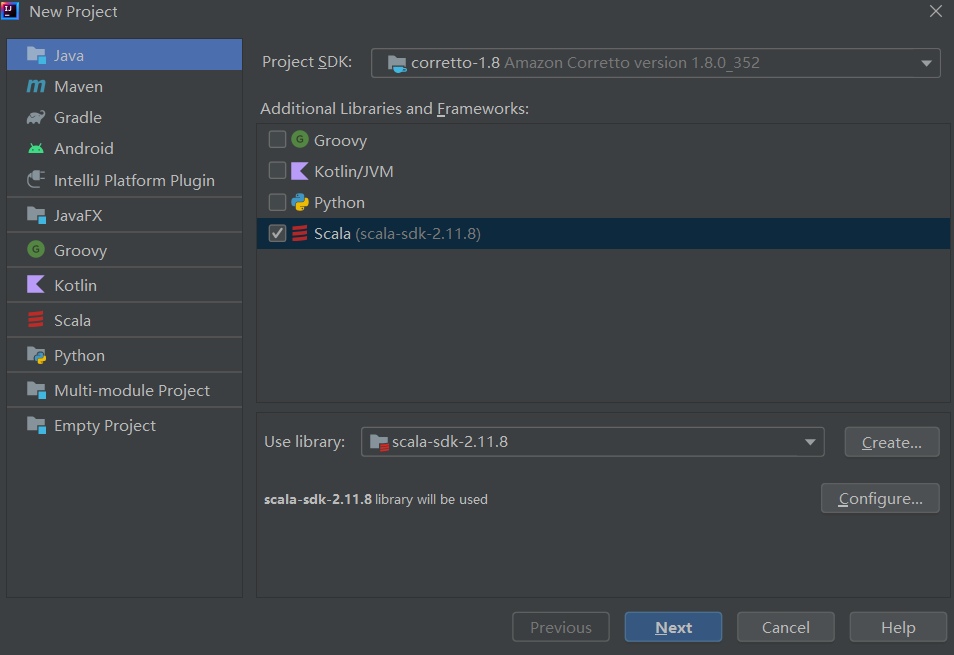

| Scala | 2.11.8 |

| JDK | 1.8 |

| Spark | 2.x |

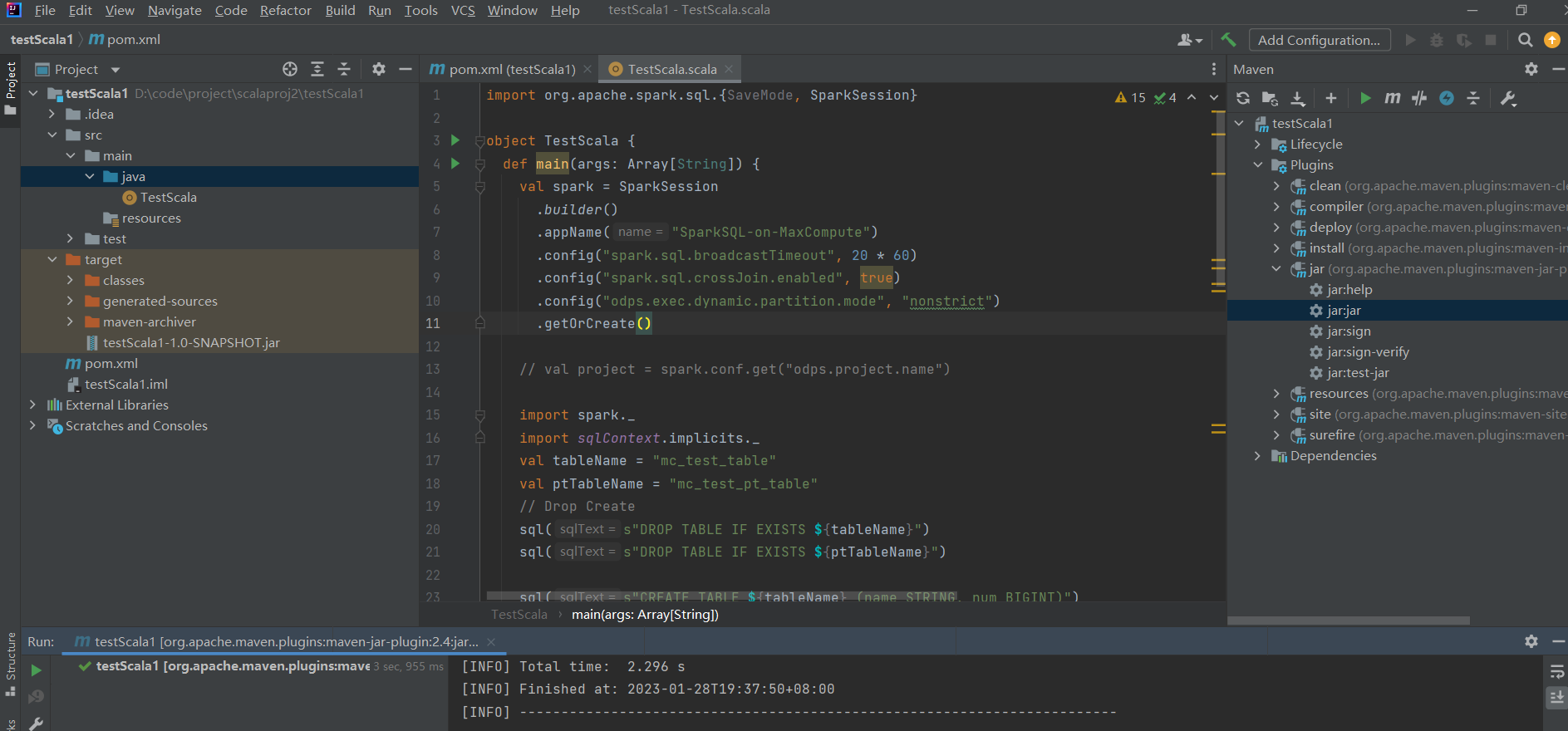

import org.apache.spark.sql.{SaveMode, SparkSession}

object TestScala {

def main(args: Array[String]) {

val spark = SparkSession

.builder()

.appName("SparkSQL-on-MaxCompute")

.config("spark.sql.broadcastTimeout", 20 * 60)

.config("spark.sql.crossJoin.enabled", true)

.config("odps.exec.dynamic.partition.mode", "nonstrict")

.getOrCreate()

// val project = spark.conf.get("odps.project.name")

import spark._

import sqlContext.implicits._

val tableName = "mc_test_table"

val ptTableName = "mc_test_pt_table"

// Drop Create

sql(s"DROP TABLE IF EXISTS ${tableName}")

sql(s"DROP TABLE IF EXISTS ${ptTableName}")

sql(s"CREATE TABLE ${tableName} (name STRING, num BIGINT)")

sql(s"CREATE TABLE ${ptTableName} (name STRING, num BIGINT) PARTITIONED BY (pt1 STRING, pt2 STRING)")

val df = spark.sparkContext.parallelize(0 to 99, 2).map(f => {

(s"name-$f", f)

}).toDF("name", "num")

val ptDf = spark.sparkContext.parallelize(0 to 99, 2).map(f => {

(s"name-$f", f, "2018", "0601")

}).toDF("name", "num", "pt1", "pt2")

// write regular table

df.write.insertInto(tableName) // insertInto semantics

df.write.mode("overwrite").insertInto(tableName) // insertOverwrite semantics

// write partitioned table

// DataFrameWriter cannot specify partition writing, need to use temporary table and then write to a specific partition with SQL

df.createOrReplaceTempView(s"${ptTableName}_tmp_view")

sql(s"insert into table ${ptTableName} partition (pt1='2018', pt2='0601') select * from ${ptTableName}_tmp_view")

sql(s"insert overwrite table ${ptTableName} partition (pt1='2018', pt2='0601') select * from ${ptTableName}_tmp_view")

ptDf.write.insertInto(ptTableName) // dynamic partition insertInto semantics

ptDf.write.mode("overwrite").insertInto(ptTableName) // dynamic partition insertOverwrite semantics

// read regular table

val rdf = sql(s"select name, num from $tableName")

println(s"rdf count, ${rdf.count()}")

rdf.printSchema()

// read partitioned table

val rptdf = sql(s"select name, num, pt1, pt2 from $ptTableName where pt1 = '2018' and pt2 = '0601'")

println(s"rptdf count, ${rptdf.count()}")

rptdf.printSchema()

}

}

| JDK | 1.8 |

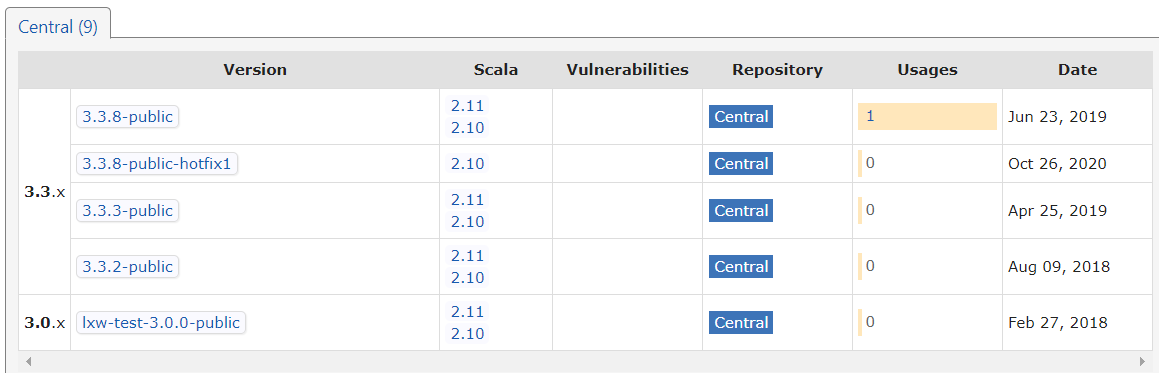

| Scala | 2.11 or 2.10 |

| Spark | 3.3.x or 3.0.x |

POM.xml

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>AliDemo</groupId>

<artifactId>testSpark3</artifactId>

<version>1.0-SNAPSHOT</version>

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<spark.version>3.1.1</spark.version>

<cupid.sdk.version>3.3.8-public</cupid.sdk.version>

<scala.version>2.12.12</scala.version>

<scala.binary.version>2.12</scala.binary.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-mllib_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_${scala.binary.version}</artifactId>

<version>${spark.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/com.aliyun.odps/odps-spark-datasource -->

<dependency>

<groupId>com.aliyun.odps</groupId>

<artifactId>cupid-sdk</artifactId>

<version>${cupid.sdk.version}</version>

</dependency>

<dependency>

<groupId>com.aliyun.odps</groupId>

<artifactId>hadoop-fs-oss</artifactId>

<version>${cupid.sdk.version}</version>

</dependency>

</dependencies>

</project>SparkDemo3.java

import com.aliyun.odps.Odps;

import com.aliyun.odps.cupid.CupidSession;

import org.apache.spark.sql.SparkSession;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.RowFactory;

import org.apache.spark.sql.types.*;

import java.util.ArrayList;

import java.util.List;

import org.apache.spark.sql.types.StructField;

public class SparkDemo3 {

public static void main(String[] args) throws Exception {

SparkSession spark = SparkSession

.builder()

.appName("SparkSQL-on-MaxCompute")

.config("spark.sql.defaultCatalog","odps")

.config("spark.sql.catalog.odps", "org.apache.spark.sql.execution.datasources.v2.odps.OdpsTableCatalog")

.config("spark.sql.sources.partitionOverwriteMode", "dynamic")

.config("spark.sql.extensions", "org.apache.spark.sql.execution.datasources.v2.odps.extension.OdpsExtensions")

.config("spark.sql.catalogImplementation","hive")

.getOrCreate();

JavaSparkContext sparkContext = new JavaSparkContext(spark.sparkContext());

String tableName = "mc_test_table";

String tableNameCopy = "mc_test_table_copy";

String ptTableName = "mc_test_pt_table";

spark.sql("DROP TABLE IF EXISTS " + tableName);

spark.sql("DROP TABLE IF EXISTS " + tableNameCopy);

spark.sql("DROP TABLE IF EXISTS " + ptTableName);

spark.sql("CREATE TABLE " + tableName + " (name STRING, num BIGINT)");

spark.sql("CREATE TABLE " + ptTableName + " (name STRING, num BIGINT) PARTITIONED BY (pt1 STRING, pt2 STRING)");

List<Integer> data = new ArrayList<Integer>();

for (int i = 0; i < 100; i++) {

data.add(i);

}

JavaRDD<Row> dfRDD = sparkContext.parallelize(data, 2).map(new Function<Integer, Row>() {

public Row call(Integer i) {

return RowFactory.create(

"name-" + i.toString(),

Long.valueOf(i));

}

});

JavaRDD<Row> ptDfRDD = sparkContext.parallelize(data, 2).map(new Function<Integer, Row>() {

public Row call(Integer i) {

return RowFactory.create(

"name-" + i.toString(),

Long.valueOf(i),

"2018",

"0601");

}

});

List<StructField> structFilelds = new ArrayList<StructField>();

structFilelds.add(DataTypes.createStructField("name", DataTypes.StringType, true));

structFilelds.add(DataTypes.createStructField("num", DataTypes.LongType, true));

Dataset<Row> df = spark.createDataFrame(dfRDD, DataTypes.createStructType(structFilelds));

structFilelds.add(DataTypes.createStructField("pt1", DataTypes.StringType, true));

structFilelds.add(DataTypes.createStructField("pt2", DataTypes.StringType, true));

Dataset<Row> ptDf = spark.createDataFrame(ptDfRDD, DataTypes.createStructType(structFilelds));

// write regular table

df.write().insertInto(tableName); // insertInto semantic

df.writeTo(tableName).overwritePartitions(); // insertOverwrite use datasourcev2

// read regular table

Dataset<Row> rdf = spark.sql("select name, num from " + tableName);

System.out.println("rdf count: " + rdf.count());

rdf.printSchema();

//create table as select

spark.sql("CREATE TABLE " + tableNameCopy + " AS SELECT name, num FROM " + tableName);

spark.sql("SELECT * FROM " + tableNameCopy).show();

// write partitioned table

// DataFrameWriter cannot specify partition writing, need to use temporary table and then write to a specific partition with SQL

df.registerTempTable(ptTableName + "_tmp_view");

spark.sql("insert into table " + ptTableName + " partition (pt1='2018', pt2='0601') select * from " + ptTableName + "_tmp_view");

spark.sql("insert overwrite table " + ptTableName + " partition (pt1='2018', pt2='0601') select * from " + ptTableName + "_tmp_view");

ptDf.write().insertInto(ptTableName);// dynamic partition insertInto semantics

ptDf.write().mode("overwrite").insertInto(ptTableName); // dynamic partition insertOverwrite semantics

// read partitioned table

Dataset<Row> rptdf = spark.sql("select name, num, pt1, pt2 from " + ptTableName + " where pt1 = '2018' and pt2 = '0601'");

System.out.println("rptdf count: " + rptdf.count());

rptdf.printSchema();

// example for use odps

Odps odps = CupidSession.get().odps();

System.out.println(odps.tables().get(ptTableName).getPartitions().size());

System.out.println(odps.tables().get(ptTableName).getPartitions().get(0).getPartitionSpec());

}

}

| Scala | 2.11.8 |

| JDK | 1.8 |

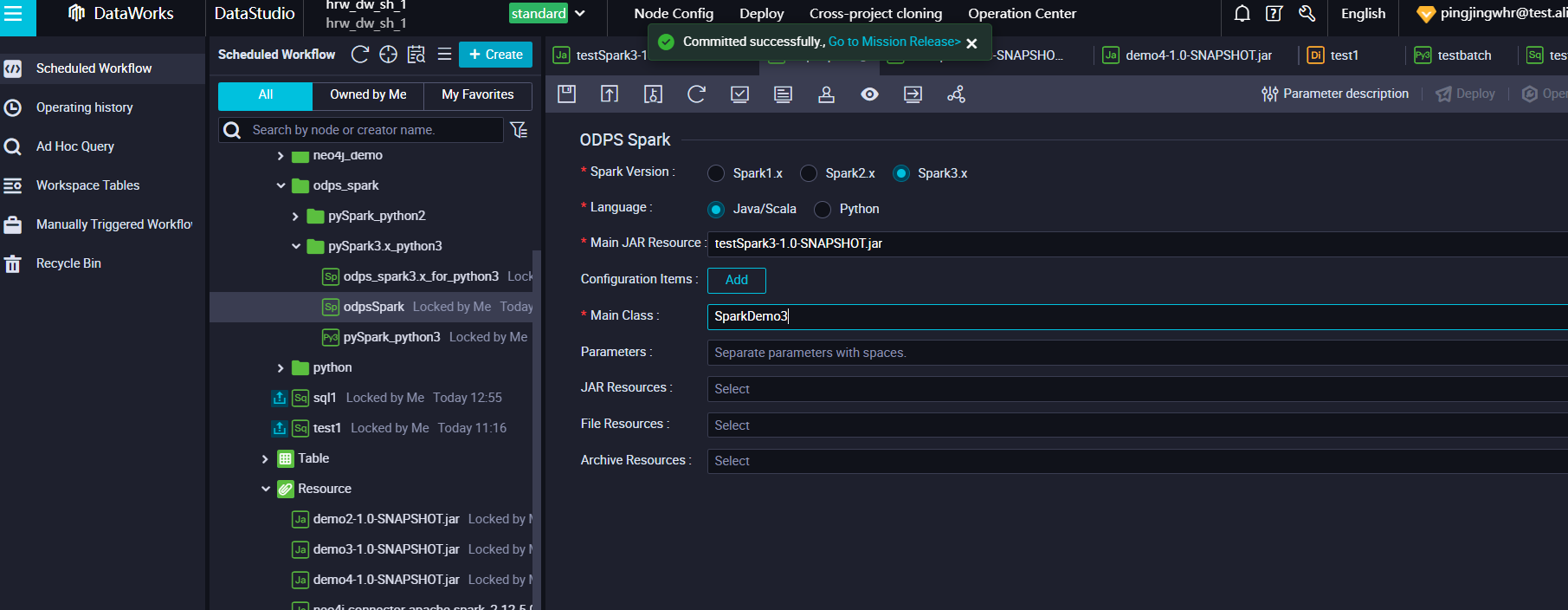

| Spark | 3.x |

import org.apache.spark.sql.{SaveMode, SparkSession}

object ScalaDemo {

def main(args: Array[String]) {

val spark = SparkSession

.builder()

.appName("SparkSQL-on-MaxCompute")

.config("spark.sql.broadcastTimeout", 20 * 60)

.config("spark.sql.crossJoin.enabled", true)

.config("spark.sql.defaultCatalog","odps")

.config("spark.sql.catalog.odps", "org.apache.spark.sql.execution.datasources.v2.odps.OdpsTableCatalog")

.config("spark.sql.sources.partitionOverwriteMode", "dynamic")

.config("spark.sql.extensions", "org.apache.spark.sql.execution.datasources.v2.odps.extension.OdpsExtensions")

.config("spark.sql.catalogImplementation","hive")

.getOrCreate()

import spark._

import sqlContext.implicits._

val tableName = "mc_test_table"

val ptTableName = "mc_test_pt_table"

// Drop Create

sql(s"DROP TABLE IF EXISTS ${tableName}")

sql(s"DROP TABLE IF EXISTS ${ptTableName}")

sql(s"CREATE TABLE ${tableName} (name STRING, num BIGINT)")

sql(s"CREATE TABLE ${ptTableName} (name STRING, num BIGINT) PARTITIONED BY (pt1 STRING, pt2 STRING)")

val df = spark.sparkContext.parallelize(0 to 99, 2).map(f => {

(s"name-$f", f)

}).toDF("name", "num")

val ptDf = spark.sparkContext.parallelize(0 to 99, 2).map(f => {

(s"name-$f", f, "2018", "0601")

}).toDF("name", "num", "pt1", "pt2")

// write regular table

df.write.insertInto(tableName) // insertInto语义

df.writeTo(tableName).overwritePartitions() // insertOverwrite use datasourceV2

// write partitioned table

// DataFrameWriter cannot specify partition writing, need to use temporary table and then write to a specific partition with SQL

df.createOrReplaceTempView(s"${ptTableName}_tmp_view")

sql(s"insert into table ${ptTableName} partition (pt1='2018', pt2='0601') select * from ${ptTableName}_tmp_view")

sql(s"insert overwrite table ${ptTableName} partition (pt1='2018', pt2='0601') select * from ${ptTableName}_tmp_view")

ptDf.write.insertInto(ptTableName) // dynamic partition insertInto semantics

ptDf.write.mode("overwrite").insertInto(ptTableName) // dynamic partition insertOverwrite semantics

// read regular table

val rdf = sql(s"select name, num from $tableName")

println(s"rdf show, ${rdf.count()}")

rdf.show()

rdf.printSchema()

// read partitioned table

val rptdf = sql(s"select name, num, pt1, pt2 from $ptTableName where pt1 = '2018' and pt2 = '0601'")

println(s"rptdf show, ${rptdf.count()}")

rptdf.show()

rptdf.printSchema()

}

}| Spark | 2.3 (Hardcoded) |

| Python | Python 2 or python 3 |

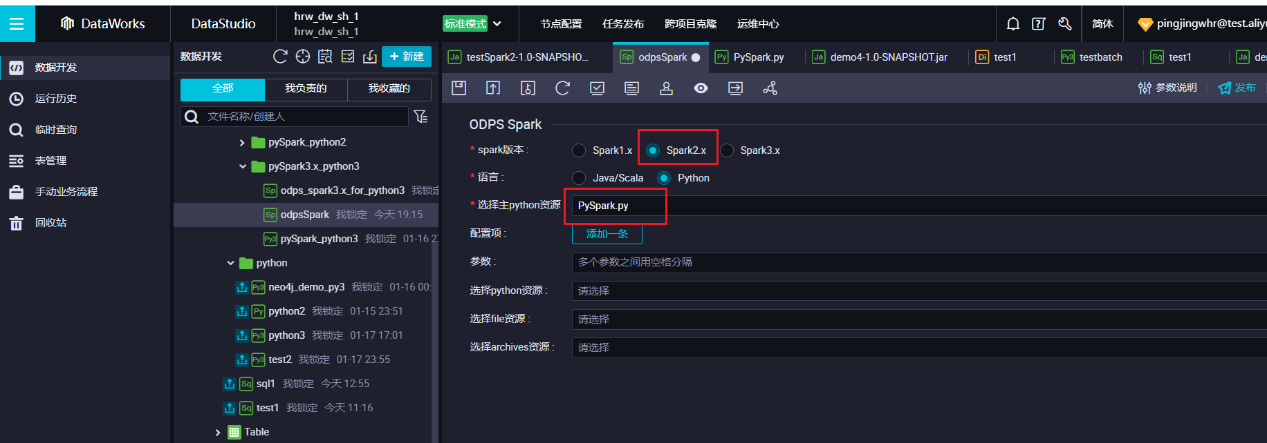

Only Spark 2.x is supported. The Python version does not matter.

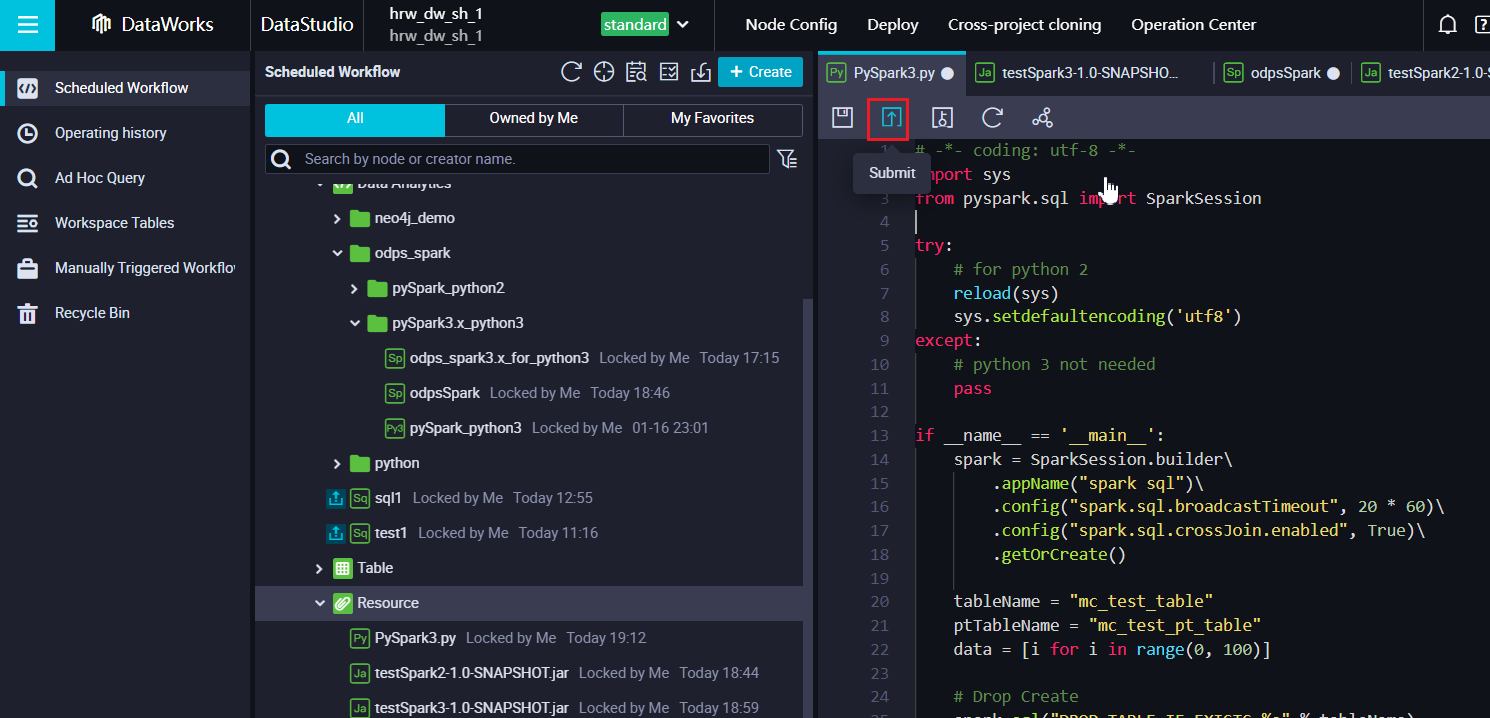

# -*- coding: utf-8 -*-

import sys

from pyspark.sql import SparkSession

try:

# for python 2

reload(sys)

sys.setdefaultencoding('utf8')

except:

# python 3 not needed

pass

if __name__ == '__main__':

spark = SparkSession.builder\

.appName("spark sql")\

.config("spark.sql.broadcastTimeout", 20 * 60)\

.config("spark.sql.crossJoin.enabled", True)\

.getOrCreate()

tableName = "mc_test_table"

ptTableName = "mc_test_pt_table"

data = [i for i in range(0, 100)]

# Drop Create

spark.sql("DROP TABLE IF EXISTS %s" % tableName)

spark.sql("DROP TABLE IF EXISTS %s" % ptTableName)

spark.sql("CREATE TABLE %s (name STRING, num BIGINT)" % tableName)

spark.sql("CREATE TABLE %s (name STRING, num BIGINT) PARTITIONED BY (pt1 STRING, pt2 STRING)" % ptTableName)

df = spark.sparkContext.parallelize(data, 2).map(lambda s: ("name-%s" % s, s)).toDF("name: string, num: int")

pt_df = spark.sparkContext.parallelize(data, 2).map(lambda s: ("name-%s" % s, s, "2018", "0601")).toDF("name: string, num: int, pt1: string, pt2: string")

# write regular table

df.write.insertInto(tableName) # insertInto semantics

df.writeTo(tableName).overwritePartitions() # insertOverwrite use datasourcev2

# write partitioned table

# DataFrameWriter cannot specify partition writing, need to use temporary table and then write to a specific partition with SQL

df.createOrReplaceTempView("%s_tmp_view" % ptTableName)

spark.sql("insert into table %s partition (pt1='2018', pt2='0601') select * from %s_tmp_view" % (ptTableName, ptTableName))

spark.sql("insert overwrite table %s partition (pt1='2018', pt2='0601') select * from %s_tmp_view" % (ptTableName, ptTableName))

pt_df.write.insertInto(ptTableName) # dynamic partition insertInto semantics

pt_df.write.insertInto(ptTableName, True) # dynamic partition insertOverwrite semantics

# read regular table

rdf = spark.sql("select name, num from %s" % tableName)

print("rdf count, %s\n" % rdf.count())

rdf.printSchema()

# read partitioned table

rptdf = spark.sql("select name, num, pt1, pt2 from %s where pt1 = '2018' and pt2 = '0601'" % ptTableName)

print("rptdf count, %s" % (rptdf.count()))

rptdf.printSchema()

How to Load OSS gz Data into MaxCompute with DataWorks Scheduled Job

Use Dataworks with Python3 to Import Data from MaxCompute to Neo4j

Alibaba Cloud Indonesia - February 15, 2024

Alibaba Cloud Indonesia - February 19, 2024

Alibaba Cloud MaxCompute - June 2, 2021

Alibaba Cloud MaxCompute - March 3, 2020

- November 23, 2017

Alibaba Cloud MaxCompute - August 15, 2022

Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More DataWorks

DataWorks

A secure environment for offline data development, with powerful Open APIs, to create an ecosystem for redevelopment.

Learn MoreMore Posts by Farruh