By Qu Ning (Shengyuan)

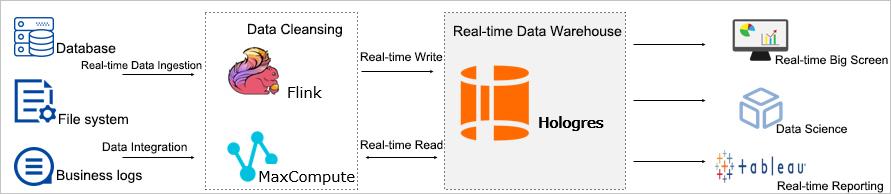

MaxCompute, a big data computing service, is a fully managed cloud data warehouse service that supports various analysis scenarios. Hologres is a real-time interactive analysis product that supports high-concurrency data writing and querying in real time. It also supports high-performance MaxCompute data analysis acceleration — without data migration — together with federated analysis of Hologres real-time data and MaxCompute offline data. Through this, you can implement solutions for offline and real-time unified data warehouse products. Many customers use the integrated solutions of MaxCompute and Hologres in many business scenarios at the same time, such as large-scale offline analysis, real-time operation analysis, interactive querying, and online serving.

The typical application scenarios are included in the Hologres official product documentation. You can refer Federated Analysis for Real-time and Offline Data, as shown in the following figure.

The data interactive process includes two parts between MaxCompute and Hologres:

This article mainly introduces the development practices of directly reading Hologres data source in MaxCompute under the combination solution of MaxCompute and Hologres.

There are two ways for MaxCompute to access the Hologres data source:

For details, you can refer to Hologres external table of MaxCompute product documentation. The following is only a brief introduction.

First, create a Hologres external table in MaxCompute.

When creating a Hologres external table in MaxCompute, you must specify StorageHandler in the DDL statement together with JDBC driver parameters configured to access the MaxCompute-Hologres data source. DDL statement for creating tables is defined as follows:

create external table <table_name>(

<col1_name> <data_type>,

<col2_name> <data_type>,

......

)

stored by '<com.aliyun.odps.jdbc.JdbcStorageHandler>'

location '<jdbc:postgresql://<accessid>:<accesskey>@<endpoint>:<port>/<database>?currentSchema=<schema>&preferQueryMode=simple&table=<holo_table_name>/>'

tblproperties (

'mcfed.mapreduce.jdbc.driver.class'='org.postgresql.Driver',

'odps.federation.jdbc.target.db.type'='holo',

['odps.federation.jdbc.colmapping'='<col1:column1,col2:column2,col3:column3,...>']

);For example:

create external table if not exists my_table_holo_jdbc

(

id bigint,

name string

)

stored by 'com.aliyun.odps.jdbc.JdbcStorageHandler'

LOCATION 'jdbc:postgresql://LTAI4FzxmCwzb4BJqFce****:hKZMEFjdLe8ngRT5qp75UYufvT****@hgprecn-cn-oew210utf003-cn-hangzhou-internal.MC-Hologres.aliyuncs.com:80/mc_test?currentSchema=public&preferQueryMode=simple&useSSL=false&table=holo/'

TBLPROPERTIES (

'mcfed.mapreduce.jdbc.driver.class'='org.postgresql.Driver',

'odps.federation.jdbc.target.db.type'='holo',

'odps.federation.jdbc.colmapping'='id:id,name:name'

);The Hologres external table will be mapped to a database table of the specified Hologres instance.

Then, use MaxCompute SQL to query the external table to obtain the Hologres table data.

Sample statement as follows:

--Add the following attributes to access MaxCompute external tables

set odps.sql.split.hive.bridge=true;

set odps.sql.hive.compatible=true;

--Query MaxCompute-Hologres external table data。

select * from my_table_holo_jdbc limit 10;MaxCompute SQL can write data to Hologres external tables to directly import the processed consumption data to Hologres. As such, you can obtain the best analysis experience with Hologres's high-performance storage analysis engine.

-- Add the following attributes to access MaxCompute-Hologres external tables

set odps.sql.split.hive.bridge=true;

set odps.sql.hive.compatible=true;

--Insert data into MaxCompute-Hologres external tables.

insert into my_table_holo_jdbc values (12,'alice');

--Query MaxCompute-Hologres external tables

select * from my_table_holo_jdbc;Native MaxCompute integrates Apache Spark into its analysis engine. In addition to directly analyzing MaxCompute data using Spark, MaxCompute Spark can also connect to Hologres data sources through JDBC. If you are familiar with Spark, you can implement more flexible business logic in Spark code.

Here, we use the following three submitting modes to verify how Spark accesses Hologres. For more information about the modes supported by MaxCompute Spark, you can refer to MaxCompute documentation. We don't discuss more details here.

Users who are familiar with MaxCompute Spark often use this mode for local tests to verify whether the code logic is correct. Introduced in this article, this mode is mainly used to verify the normal access to the Hologres data source through Spark JDBC.

Sample Code (PySpark):

spark = SparkSession \

.builder \

.appName("MC spark") \

.getOrCreate()

jdbcDF = spark.read.format("jdbc"). \

options(

url='jdbc:postgresql://hgpostcn-cn-xxx-cn-shanghai.hologres.aliyuncs.com:80/test_holo',

dbtable='test',

user='xxx',# e.g.Access_id

password='xxx', # e.g.Secret_key

driver='org.postgresql.Driver'). \

load()

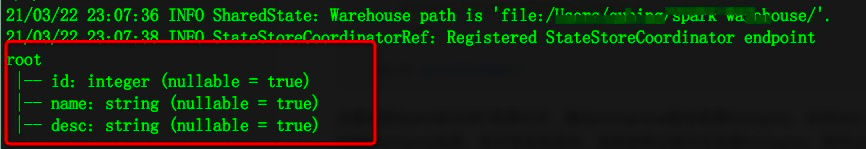

jdbcDF.printSchema()Here, Spark JDBC is used to connect to Hologres through PostgreSQL driver to access the test table in the test_holo database and print the schema information of this table. Public network is used to connect to Hologres as the test is performed locally. The URL is the access domain name of the public network in the Hologres instance.

Use spark-submit to submit a job:

#Allow local Spark to access Holo

spark-submit --master local --driver-class-path /drivers/postgresql-42.2.16.jar --jars /path/postgresql-42.2.16.jar /path/read_holo.pyThe PostgreSQL JDBC driver is accessible on the official repository of PG drivers.

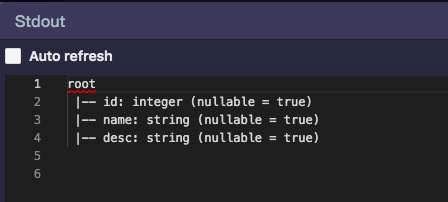

View the spark print log after submitting:

The access is successful if the printed schema information of the test table is consistent with that of the test table created in Hologres. For more information about the data processing of JDBC data source tables, you can refer to the Apache Spark documentation. This article describes how to interconnect with JDBC data sources with no details of the other processing logic.

Sample Code (PySpark):

spark = SparkSession \

.builder \

.appName("MC spark") \

.getOrCreate()

jdbcDF = spark.read.format("jdbc"). \

options(

url='jdbc:postgresql://hgpostcn-cn-xxx-cn-shanghai-internal.hologres.aliyuncs.com:80/test_holo',

dbtable='test',

user='xxx',# e.g.Access_id

password='xxx', # e.g.Secret_key

driver='org.postgresql.Driver'). \

load()

jdbcDF.printSchema()Due to the yarn-cluster submitting mode, you must upload the code to the MaxCompute cluster in the cloud for running. In the cluster, MaxCompute is accessed through Hologres's classic network domain name on the intranet instead of using public addresses.

Configure the MaxCompute Spark client in spark-defaults.conf and add the parameter item of spark.hadoop.odps.cupid.trusted.services.access.list and the classic network domain name addresses of the Hologres instance. With this, it aims to enable the network policy to the corresponding Hologres instance in the MaxCompute secure sandbox environment. Otherwise, the MaxCompute yarn cluster cannot access the external services by default. Here, you should notice that the MaxCompute Spark client is customized for MaxCompute. For more information, you can refer to the product documentation.

# OdpsAccount Info Setting

spark.hadoop.odps.project.name = your_maxc_project

spark.hadoop.odps.access.id = xxx

spark.hadoop.odps.access.key = xxx

# endpoint

spark.hadoop.odps.end.point = http://service.cn.maxcompute.aliyun.com/api

spark.hadoop.odps.runtime.end.point = http://service.cn.maxcompute.aliyun-inc.com/api

#Access holo instance

spark.hadoop.odps.cupid.trusted.services.access.list = hgprecn-cn-xxx-cn-shanghai-internal.hologres.aliyuncs.com:80Use the spark-submit method to submit a job:

#Allow the local Spark to access Holo

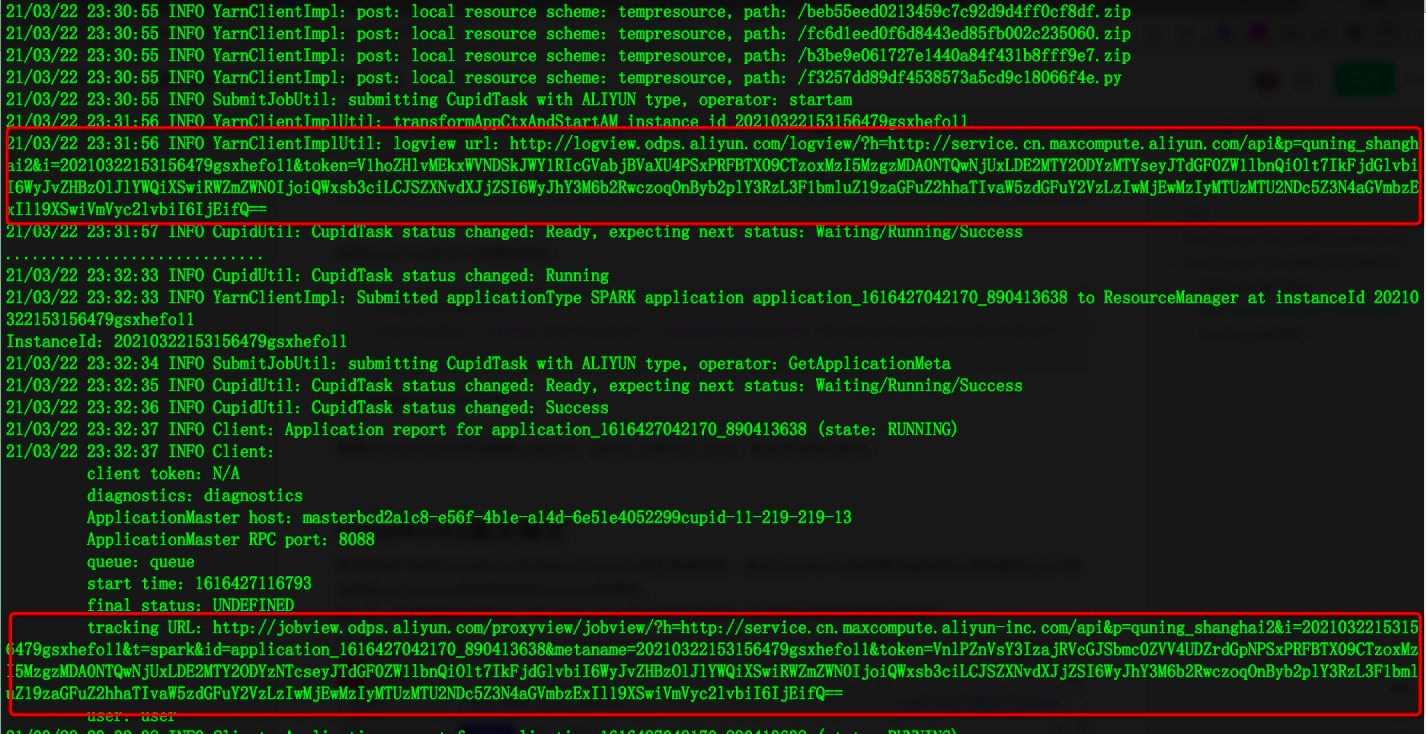

spark-submit --master yarn-cluster --driver-class-path /drivers/postgresql-42.2.16.jar --jars /path/postgresql-42.2.16.jar /path/read_holo.pyView the Spark and print logs after submitting. When the job is completed normally, the LogView and the job view link of Spark-UI are printed to help diagnose the job further.

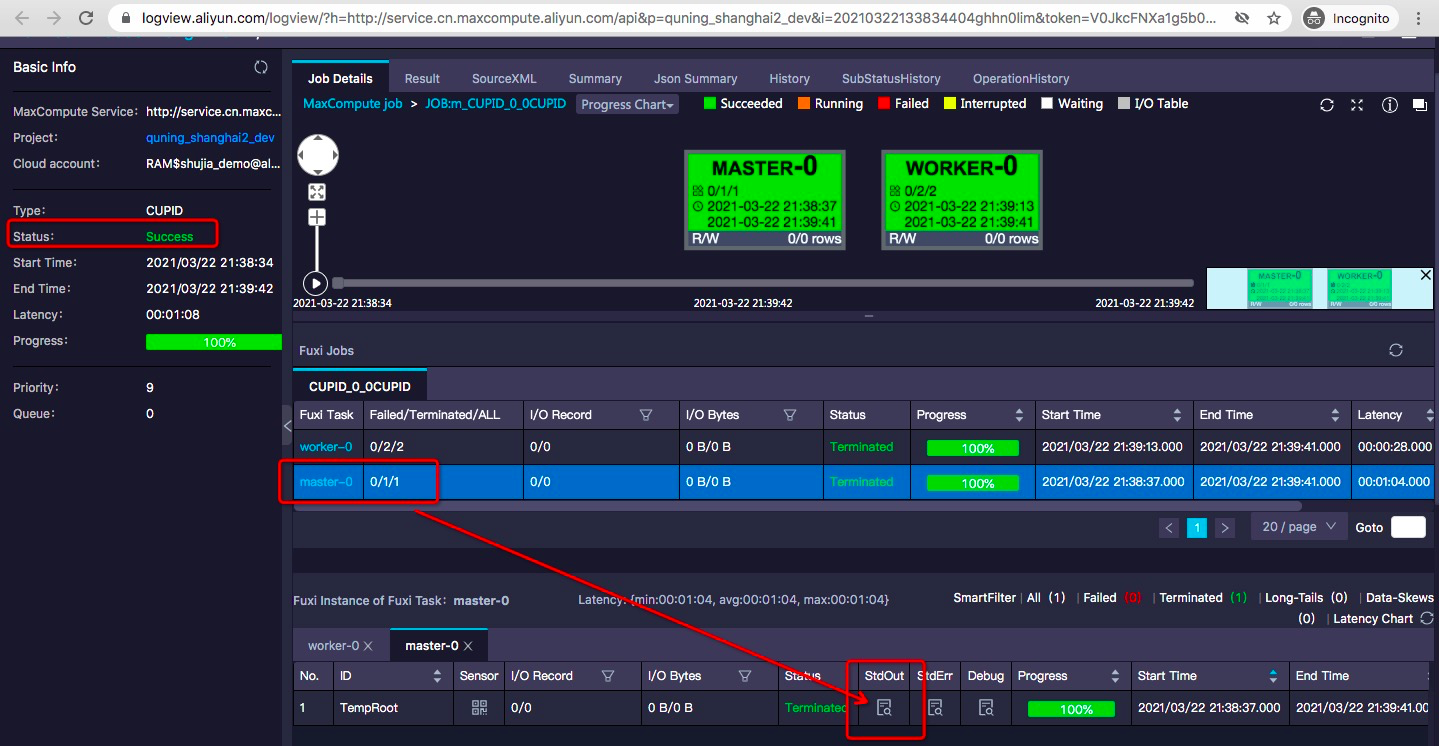

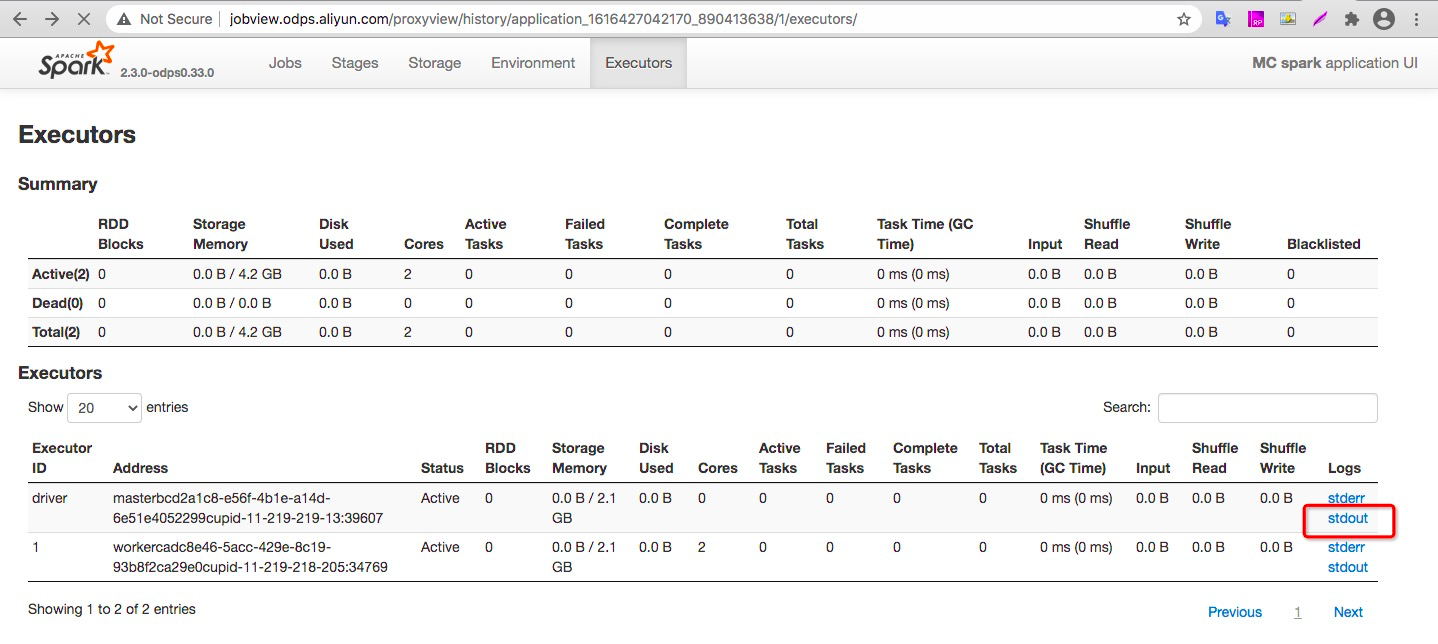

View the job view of LogView and Spark-UI in MaxCompute to verify whether the job is successfully executed.

Check for errors in the logs, and open the LogView link to see the job execution status.

When the job status is a success, click the StdOut button of the worker under the master-0 tag.

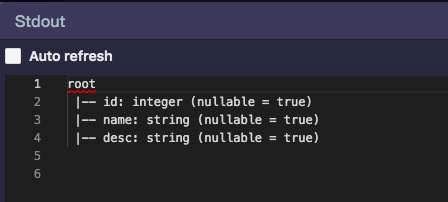

Here are the returned results of jdbcDF.printSchema() in the spark code, which is consistent with expectations. It means the verification is completed.

MaxCompute Spark also provides the Spark Web UI to diagnose. To obtain the information, open the job view link in the logs to check.

Click StdOut of the driver to check whether the application printout meets service expectations:

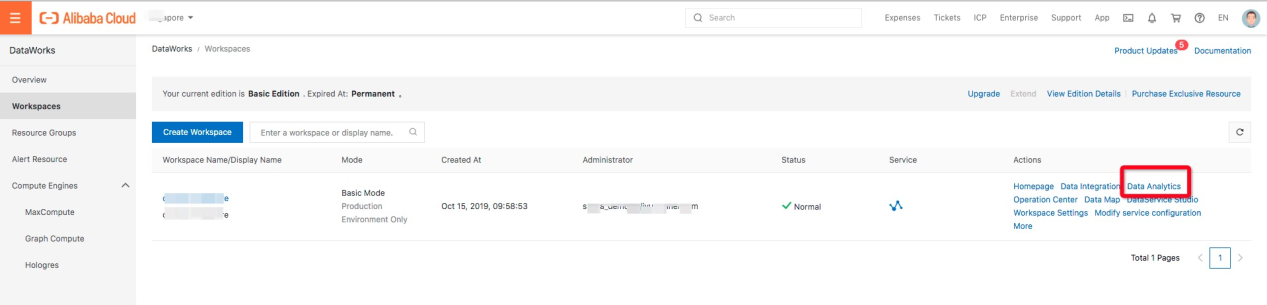

More users use DataWorks as the job scheduling system in MaxCompute. You can easily submit Spark jobs to MaxCompute through DataWorks to access the Hologres logic.

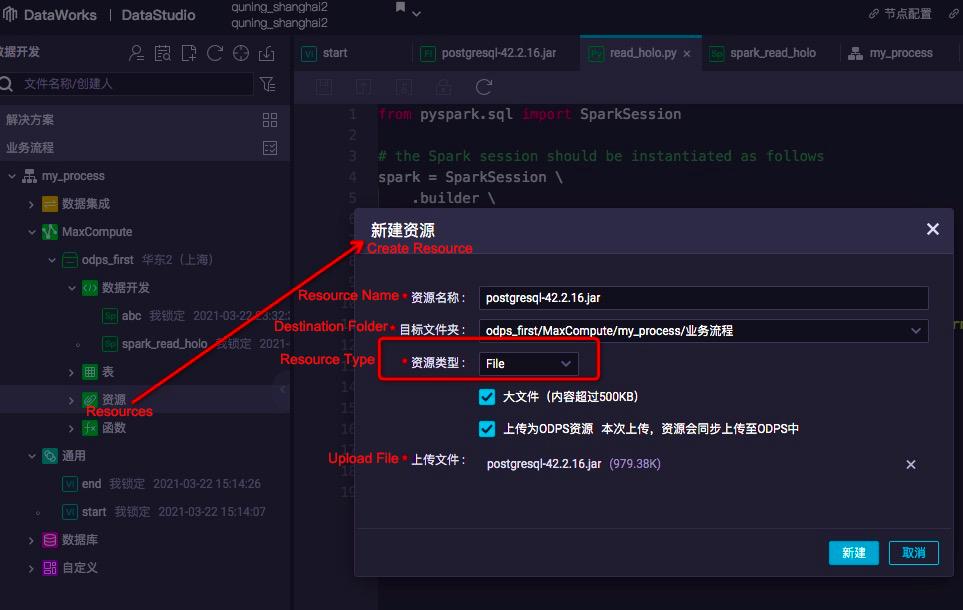

Step 1: Log on to the Alibaba Cloud console, access the specified DataWorks workspace, and enter the corresponding data development module.

Step 2: Create or adjust business processes.

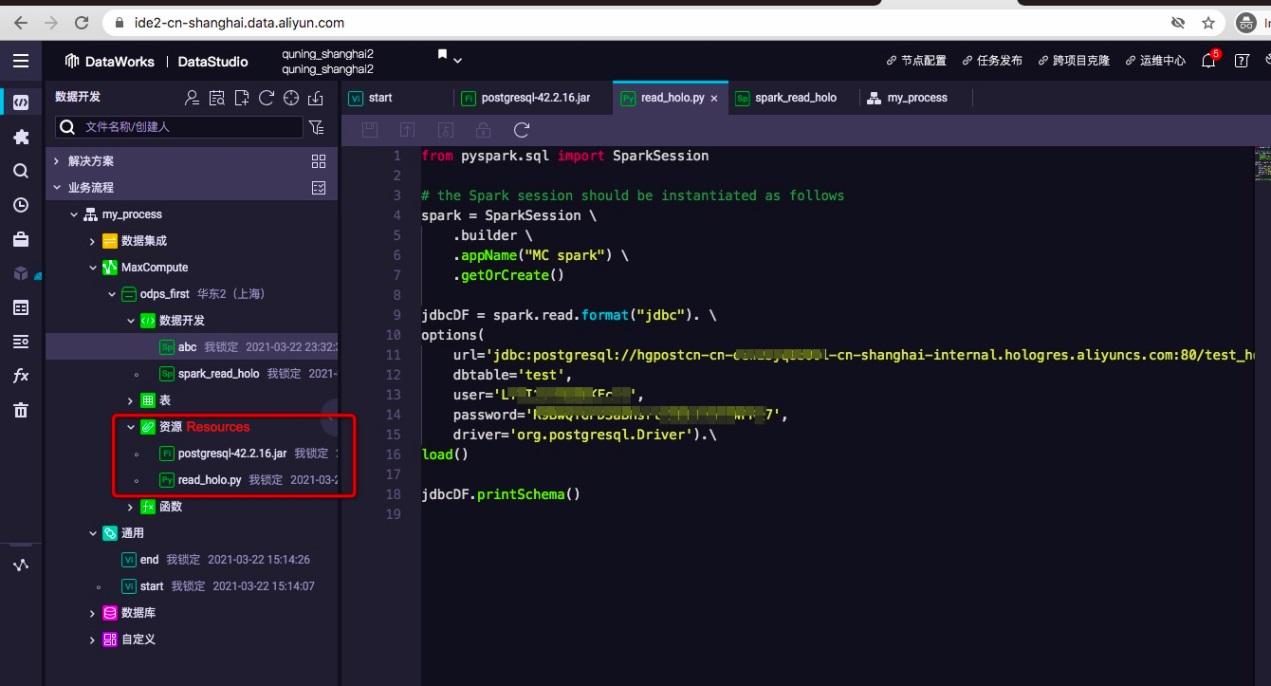

1) In the MaxCompute nodes of the business process, upload the PostgreSQL JDBC driver jar file for spark programs to drive. Note that, you must select the file resource type here.

2) Under the resource nodes, upload the python code. This article submits PySpark code for testing.

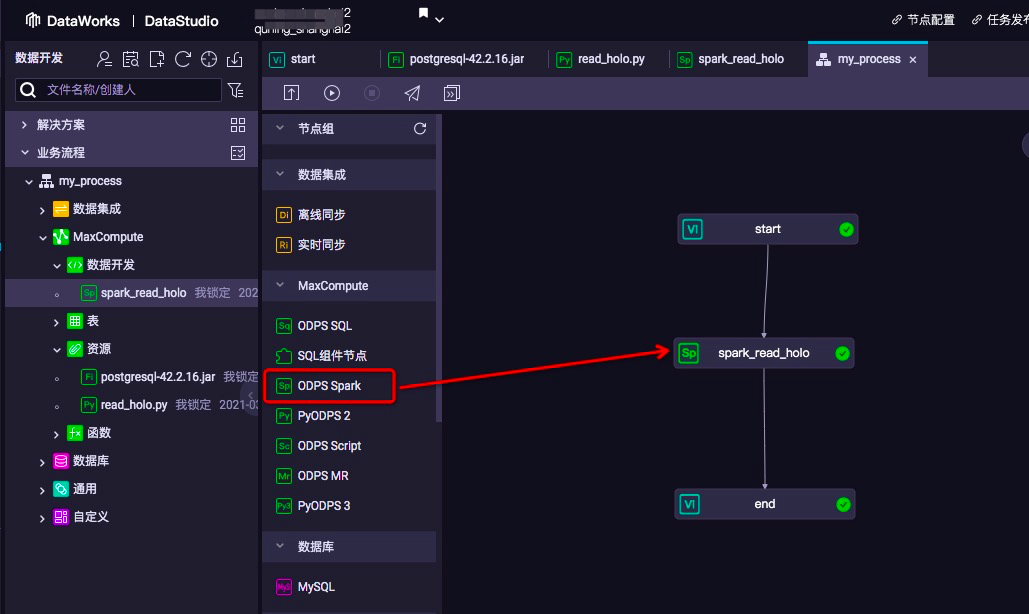

3) On the business flow canvas, select the ODPS Spark node and fill in the parameter information of the Spark job.

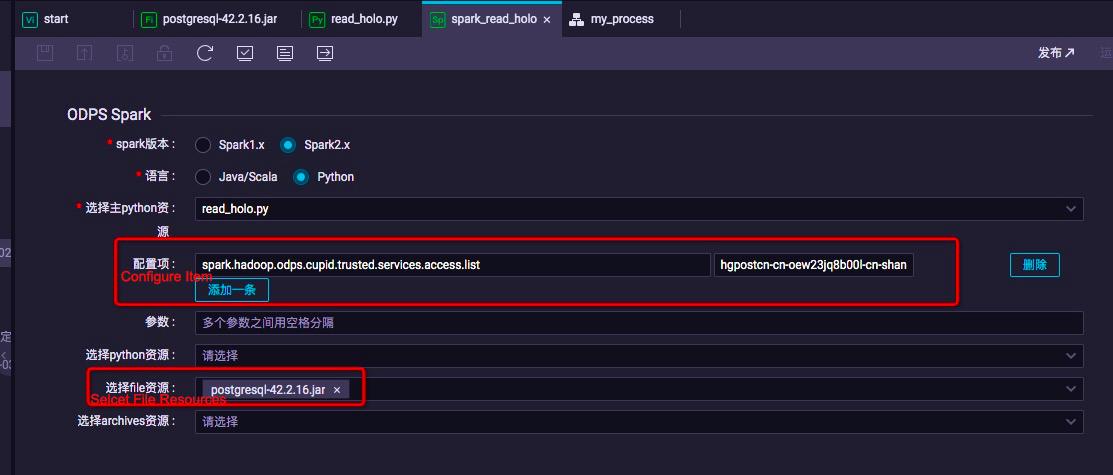

Python is used since PySpark is used as the sample code in this article. Select the uploaded python file resources from the "select main python resource" box.

At the same time, add a whitelist of Hologres destination addresses for this task in the configuration items. The classic network domain name of the Hologres instance is still used, as the following figure shows:

Configuration items: spark.hadoop.odps.cupid.trusted.services.access.list

Configuration item value: hgpostcn-cn-xxx-cn-shanghai-internal.hologres.aliyuncs.com:80

In the "select file resource" box, select the jar file driven by Postgres that was uploaded to the resource just now.

Click to save and submit.

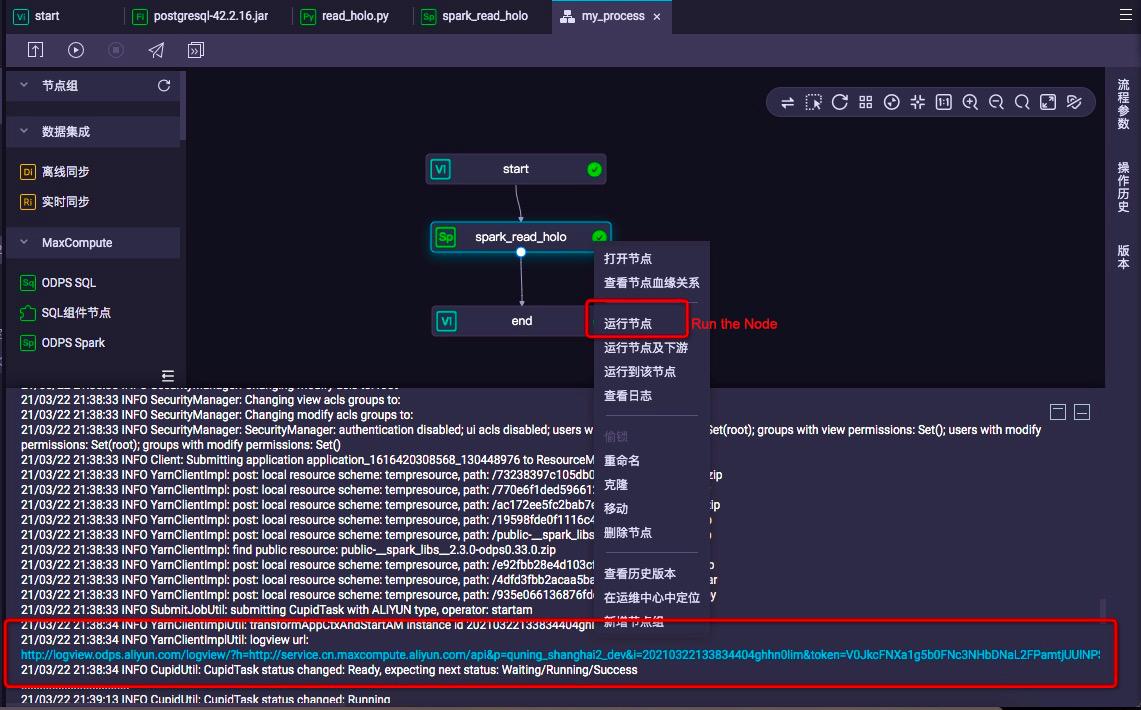

4) Run tasks on the ODPS Spark node on DataWorks for verification

Click "run the node". The logs will be printed under the DataWorks page, including the LogView links of the MaxCompute job diagnosis information.

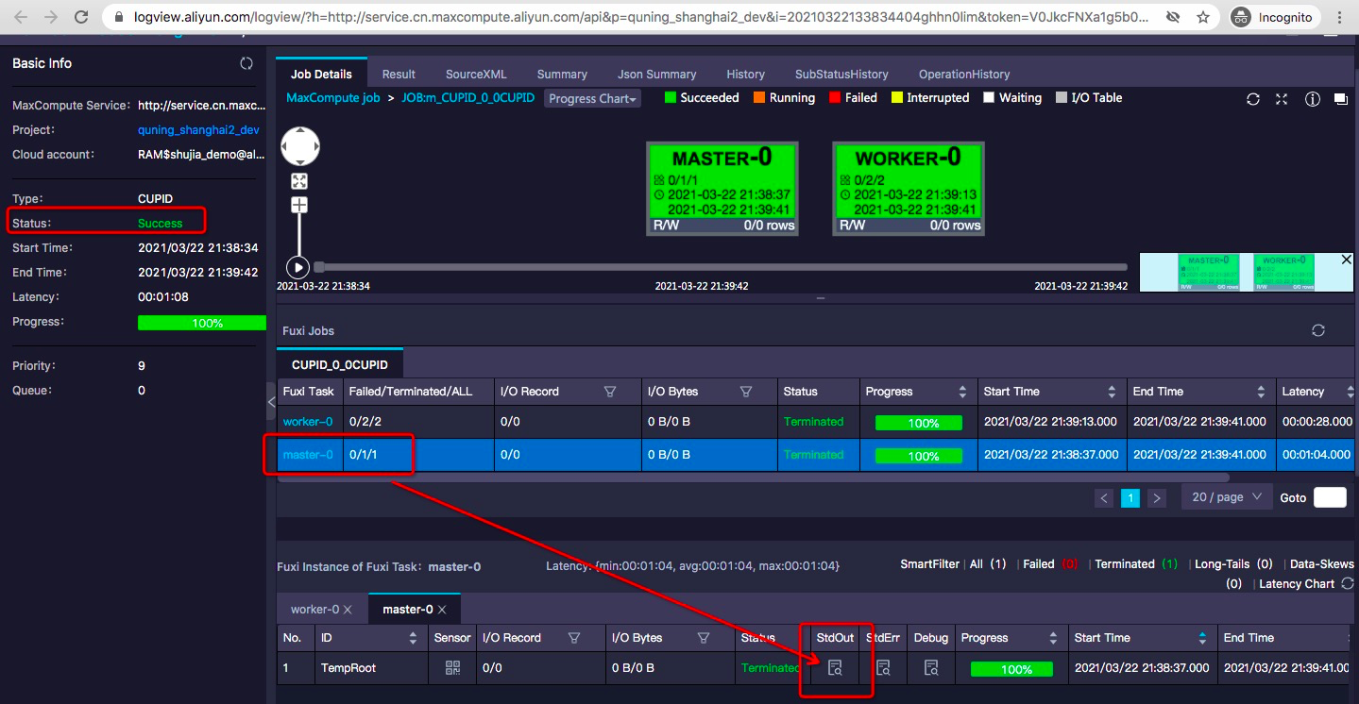

Check for errors in the log, and open the LogView link to view the job execution status.

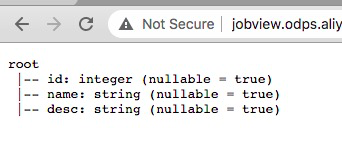

When the job status is a success, click the StdOut button of the worker node under the master-0 tag.

Here are the returned results of jdbcDF.printSchema() in the Spark code, which is consistent with expectations. It indicates that the verification is completed.

Continuous Evolution and Development of Data Warehouse Architecture

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - August 15, 2022

Alibaba Cloud MaxCompute - December 6, 2021

Alibaba Cloud MaxCompute - January 22, 2021

Apache Flink Community - August 14, 2025

Alibaba Cloud New Products - January 19, 2021

Hologres - June 30, 2021

137 posts | 21 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn MoreMore Posts by Alibaba Cloud MaxCompute