By Haoran Wang, Sr. Big Data Solution Architect of Alibaba Cloud

Check Windows Installation documents

https://neo4j.com/docs/operations-manual/current/installation/windows/

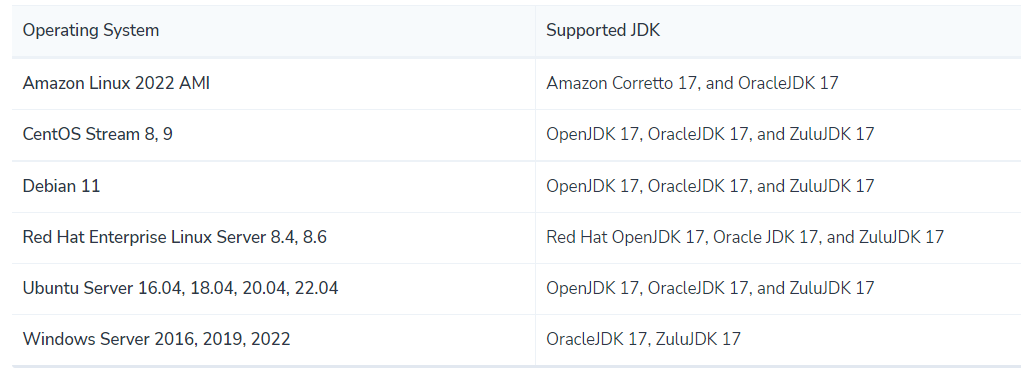

Check System Requirement

Install Oracle JDK 17

https://www.oracle.com/java/technologies/downloads/#jdk17-windows

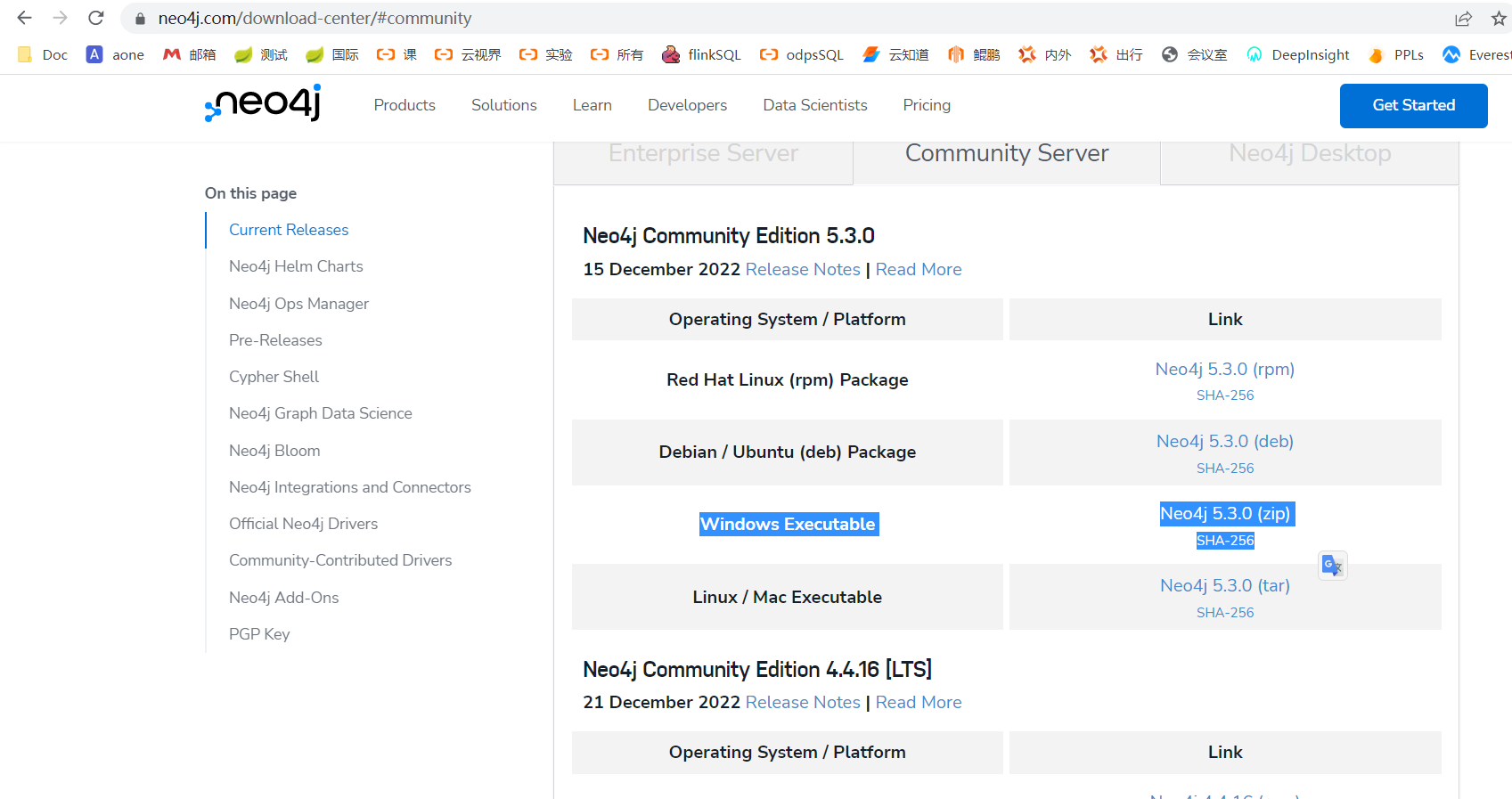

Get Download link of neo4j community

https://neo4j.com/download-center/#community

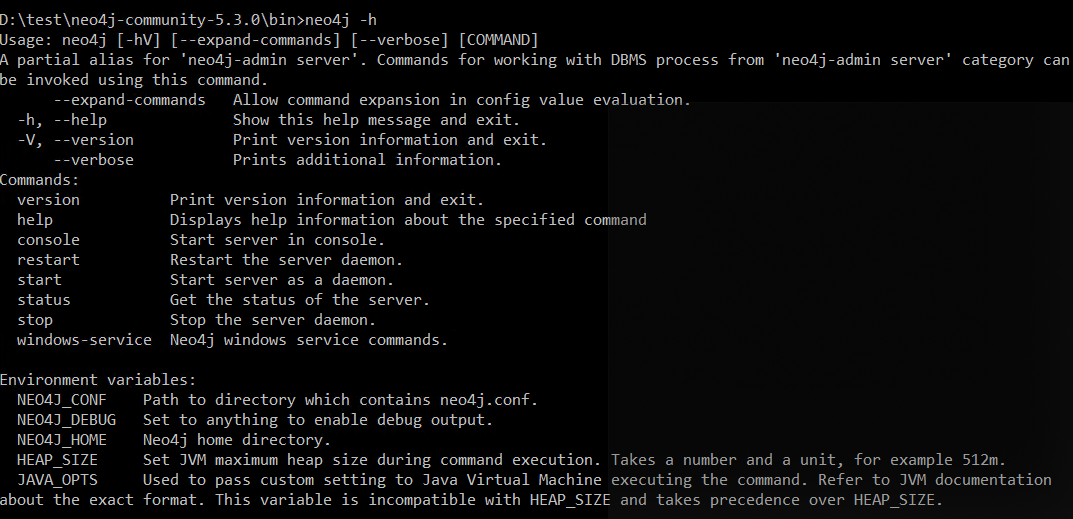

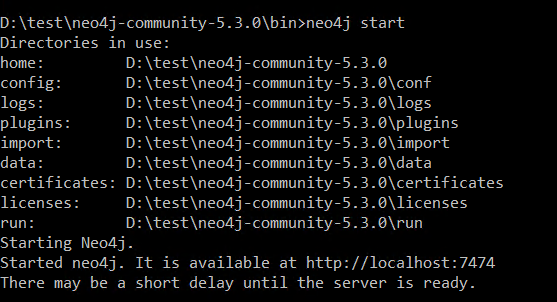

Check the neo4j command parameters

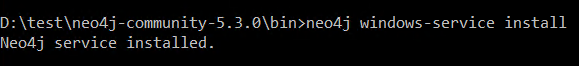

Install as Windows-service

Start the Service

Open a web explorer to access http://localhost:7474

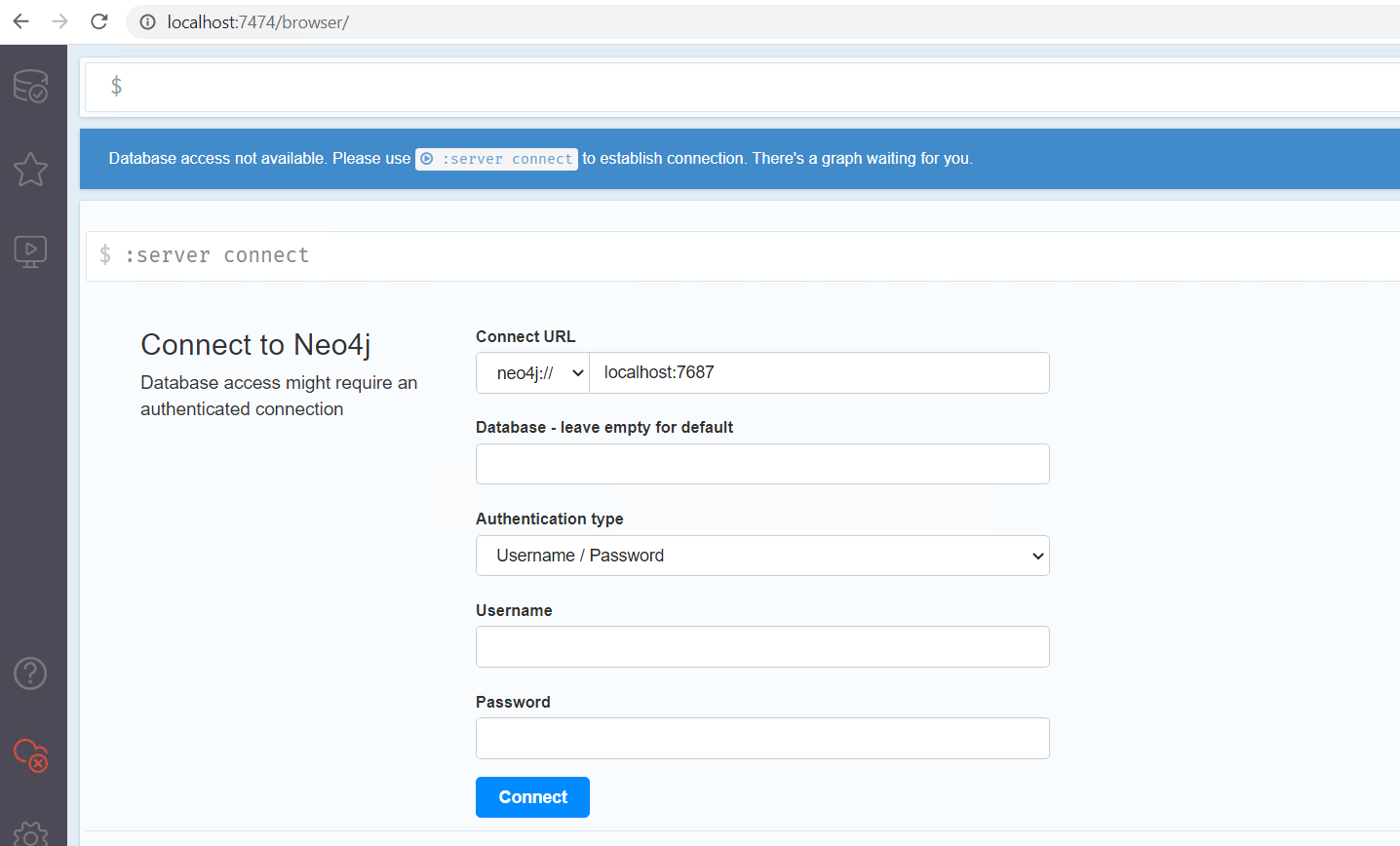

Connect database

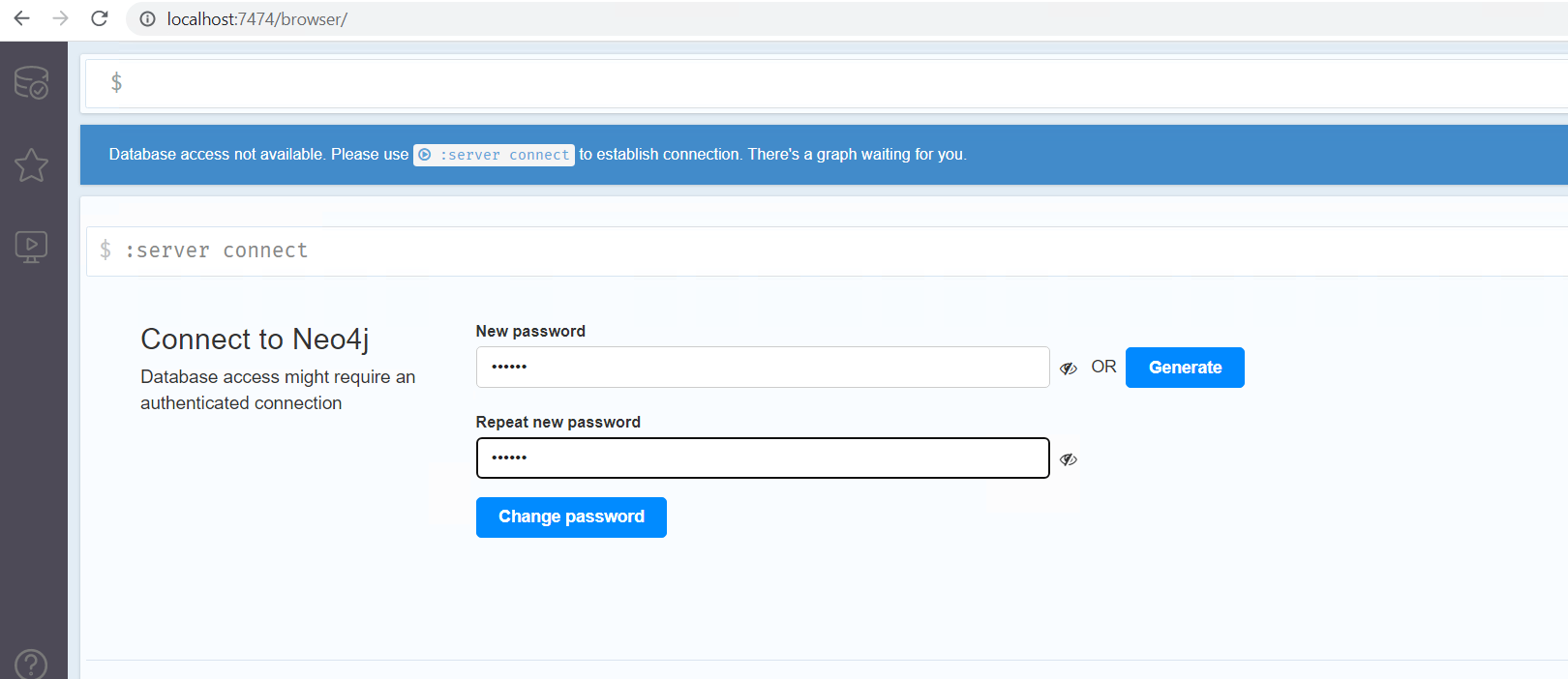

Input neo4j for both Username and Password, keep empty for database, then click connect. And change your password at the first time.

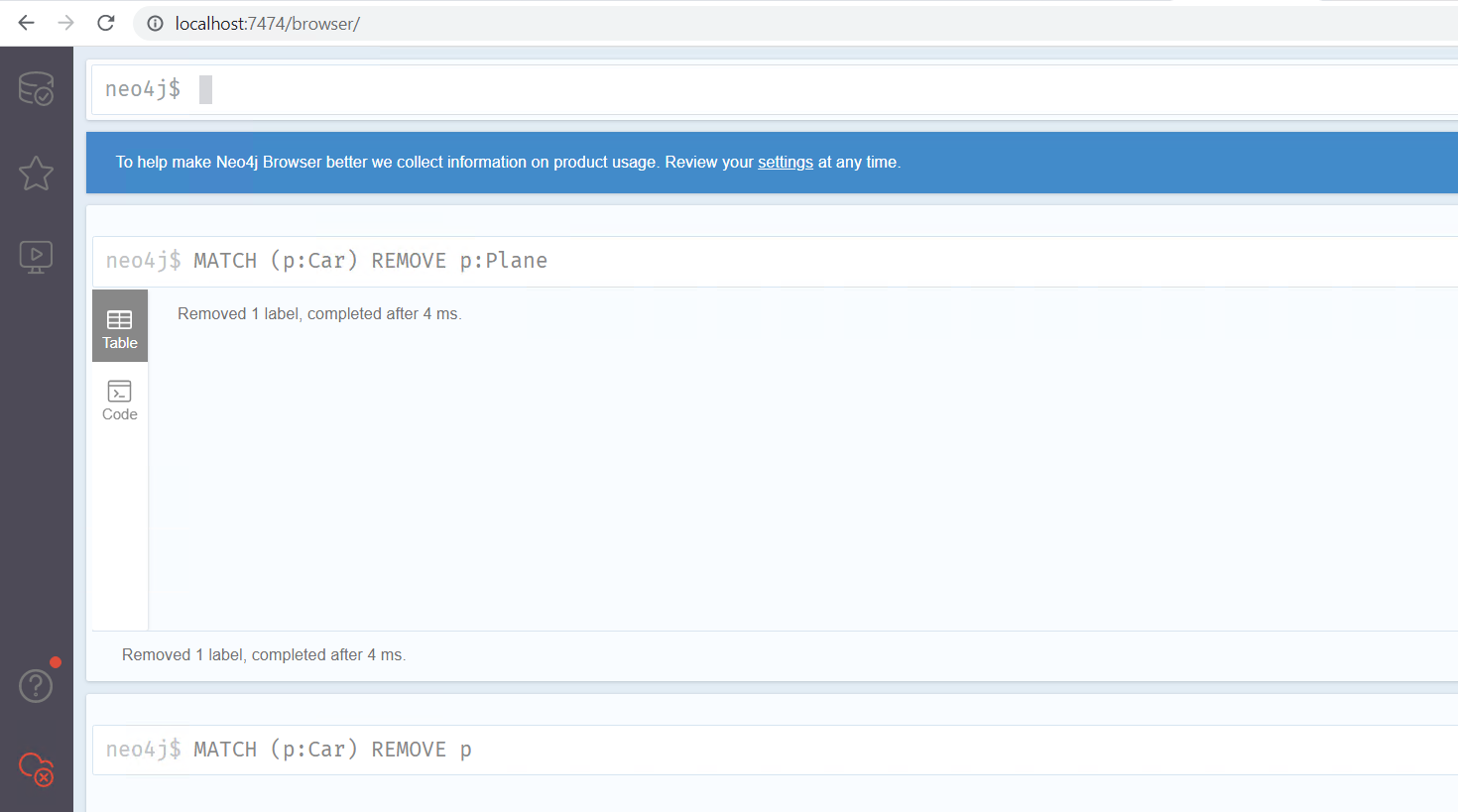

Test Cypher query command

After login successfully, you can see the following screenshot. Please note, for community version, you have only two database, one is sytem, one is neo4j. so it will use neo4j by default, and all the objects are in this database.

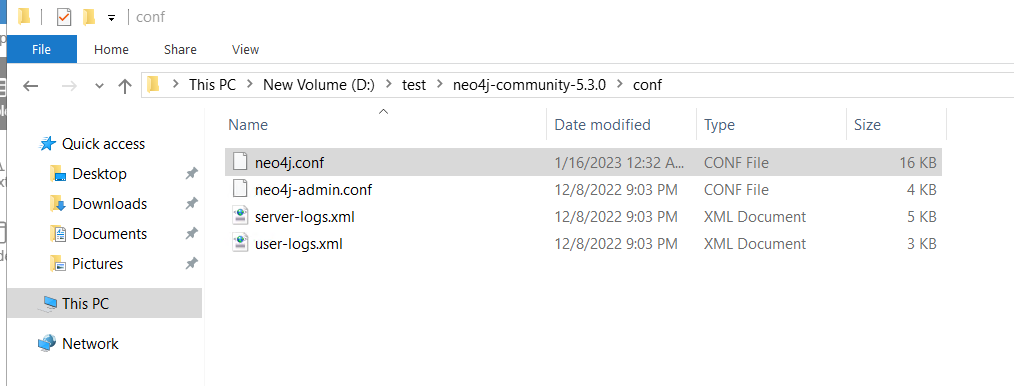

Find out the .conf file

Find out the .conf file

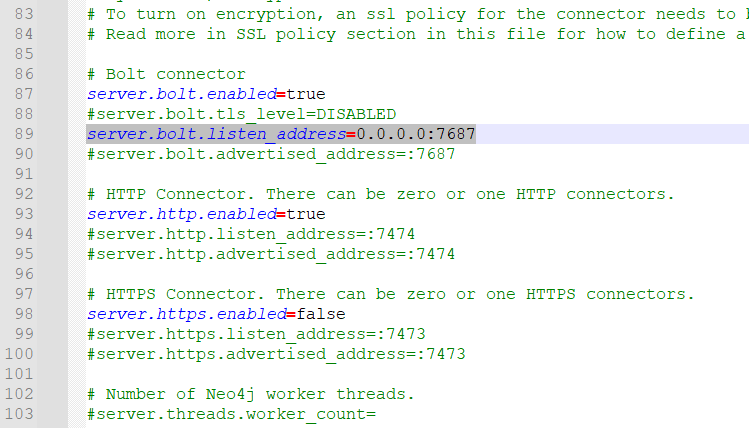

Uncomment the configuration and add 0.0.0.0 for remote access. And bolt is used for spark scala.

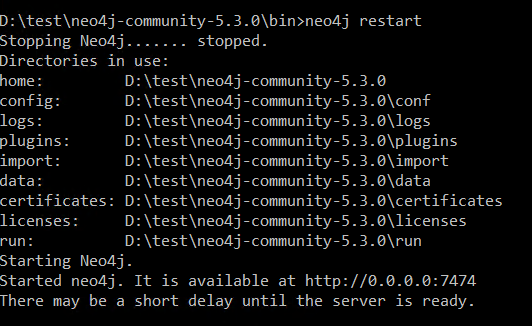

Restart the service to take effect.

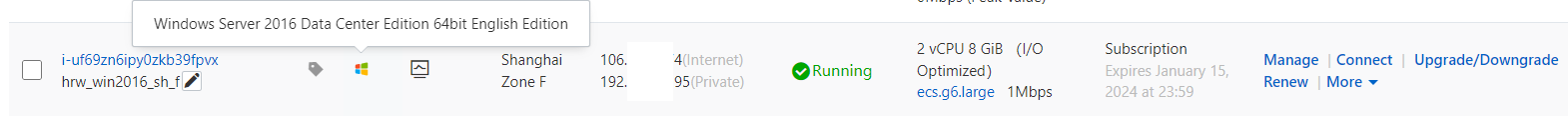

Check LAN IP for ECS, eg. 192.169.0.200

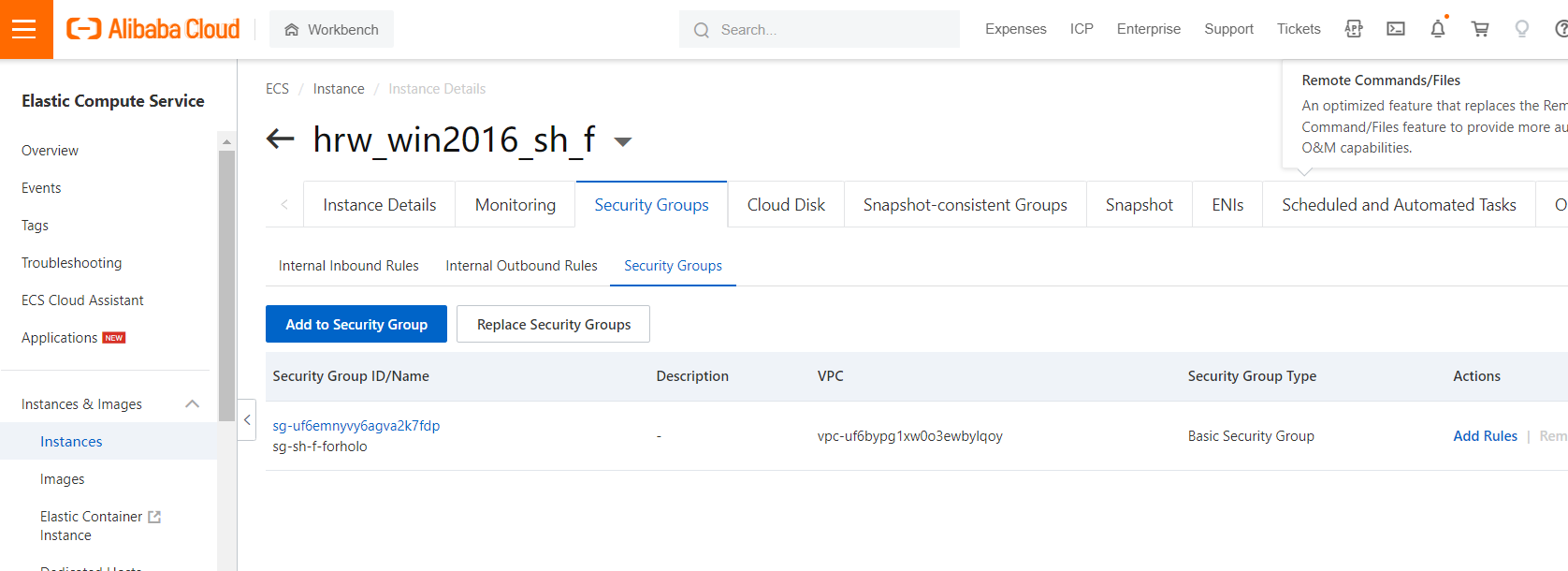

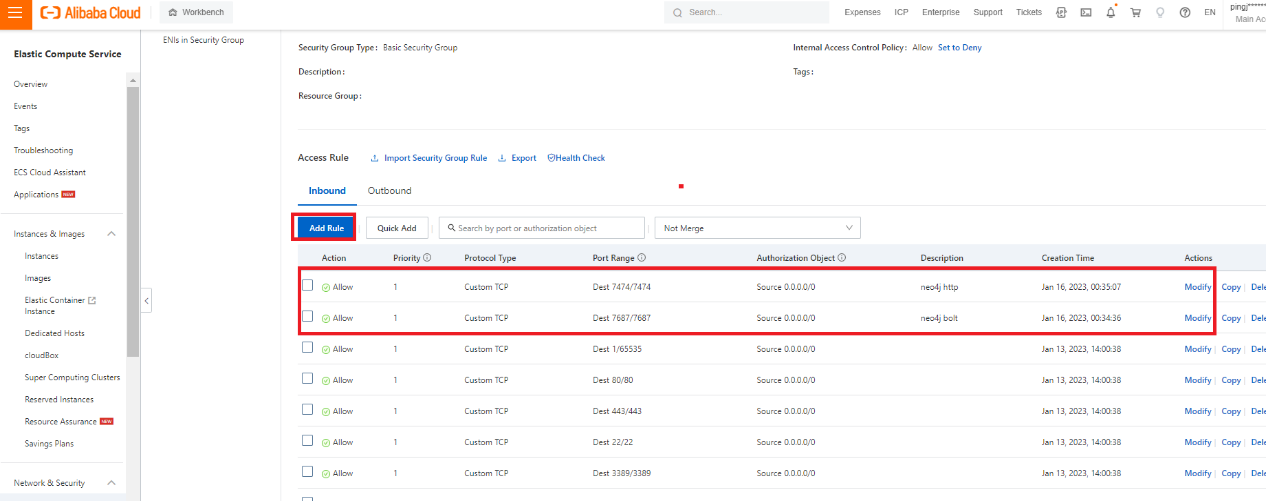

Enable 7474 and 7687 from security group

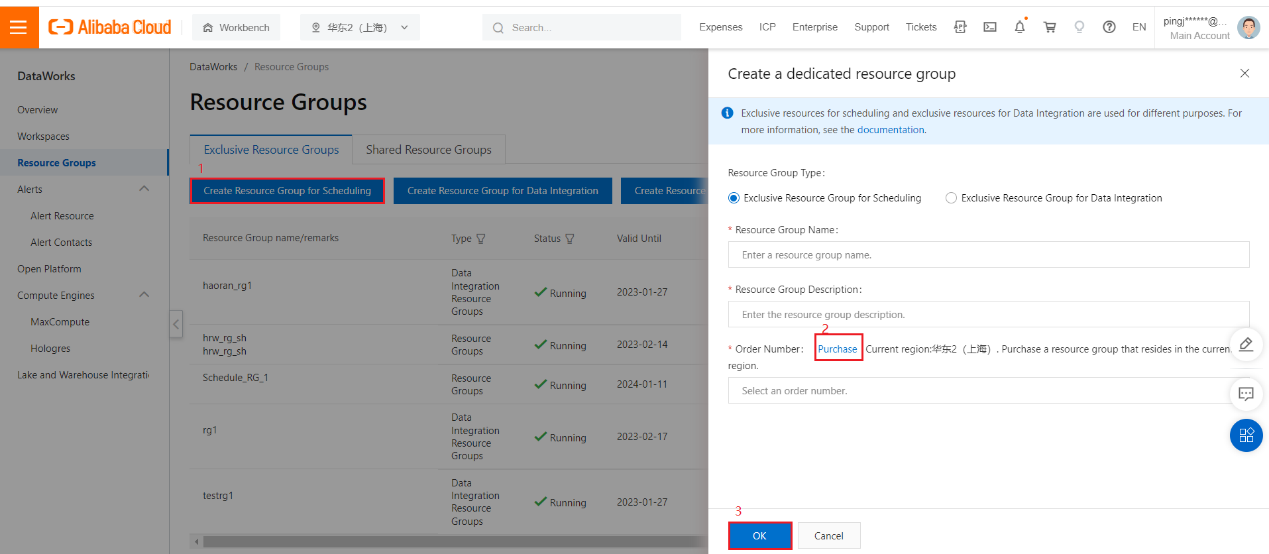

Purpose Exclusive Resource Group for Scheduling

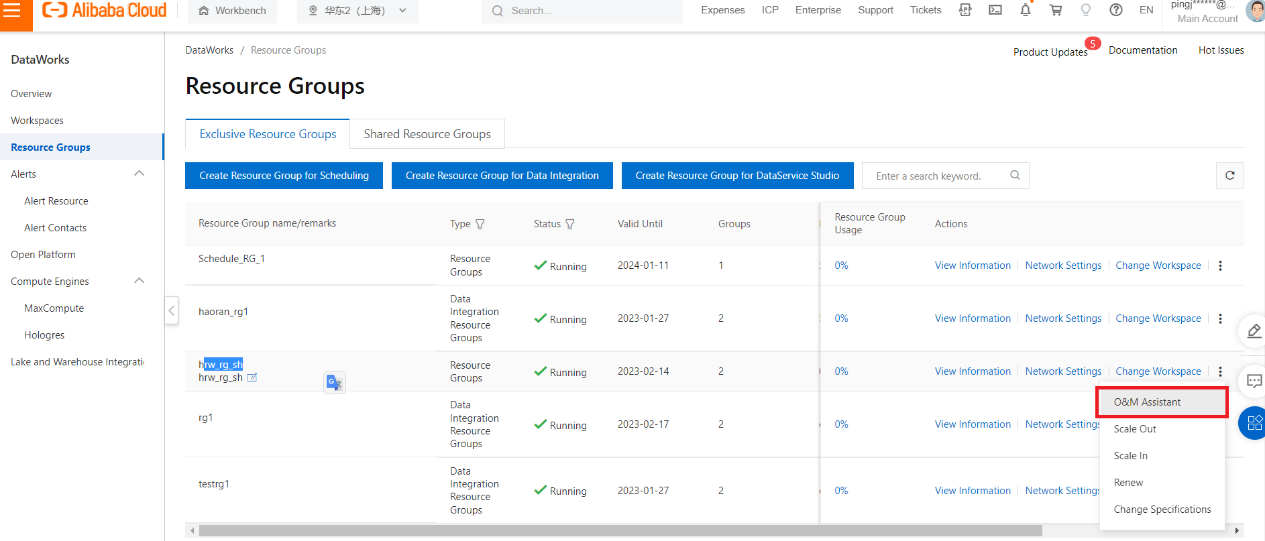

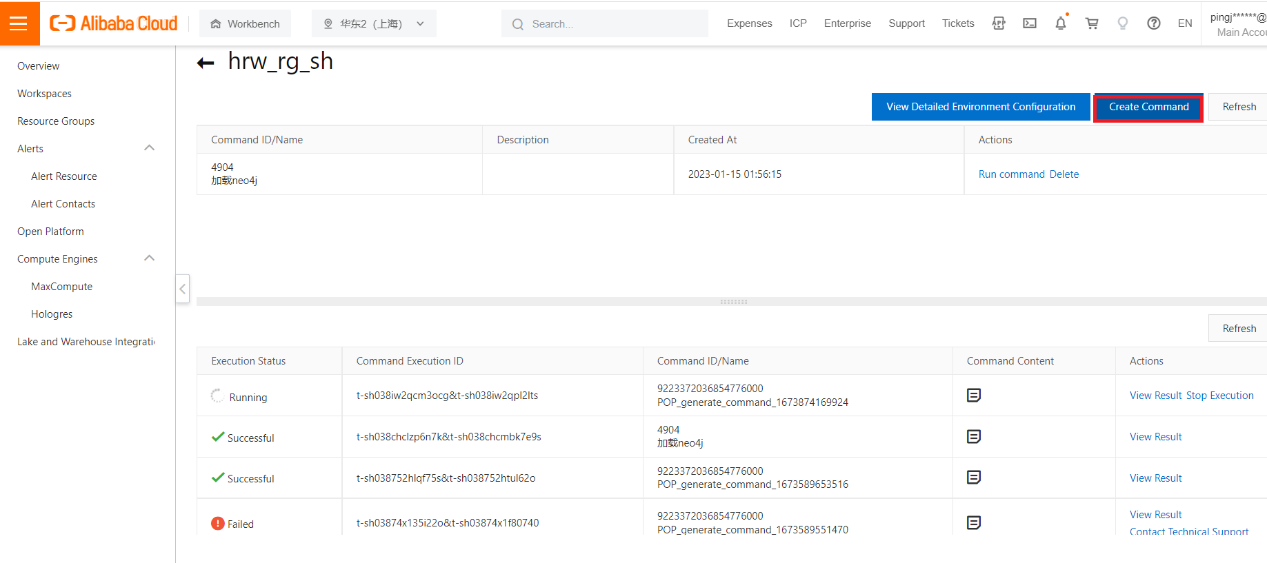

Find out the Resource Group and click O&M Assistant

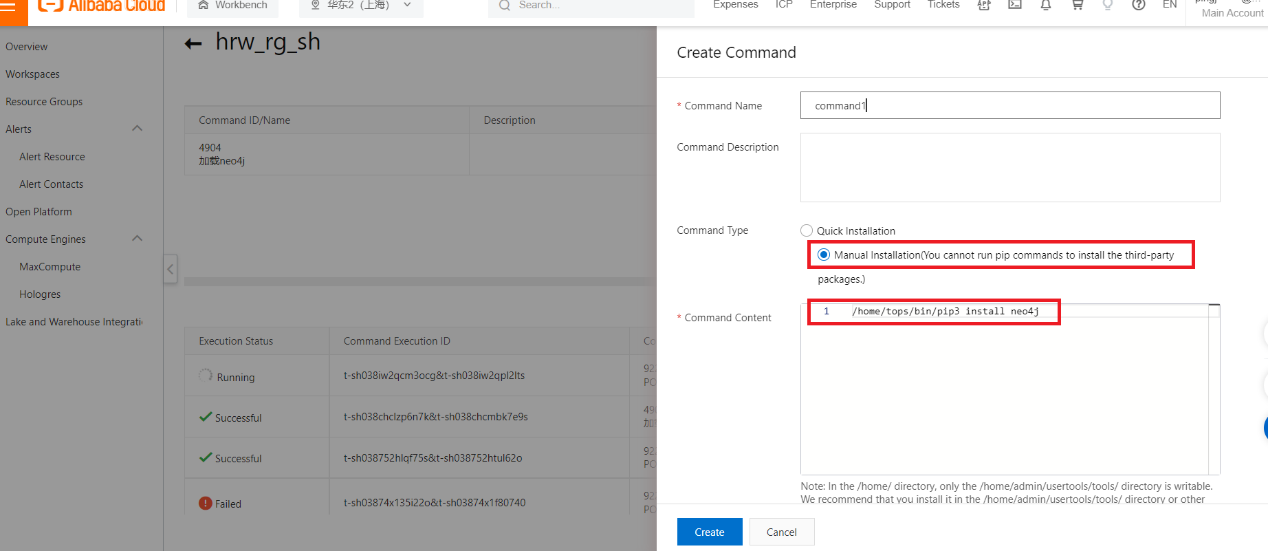

Click Create Command

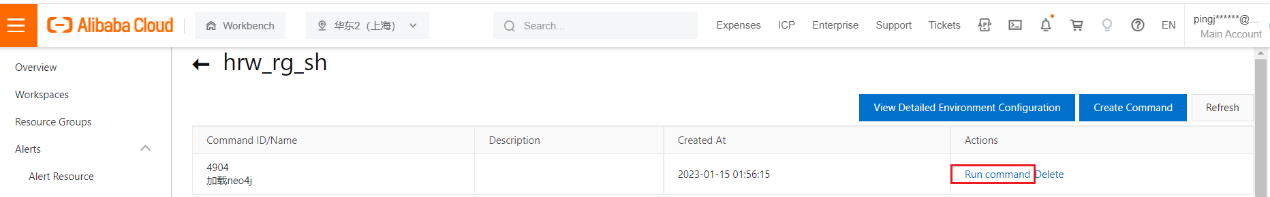

/home/tops/bin/pip3 install neo4jThen Create and then Run

After it is running successfully, the driver is imported.

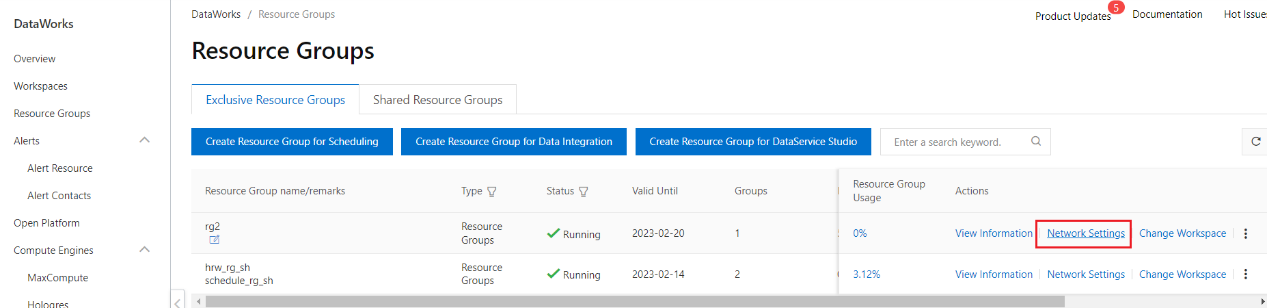

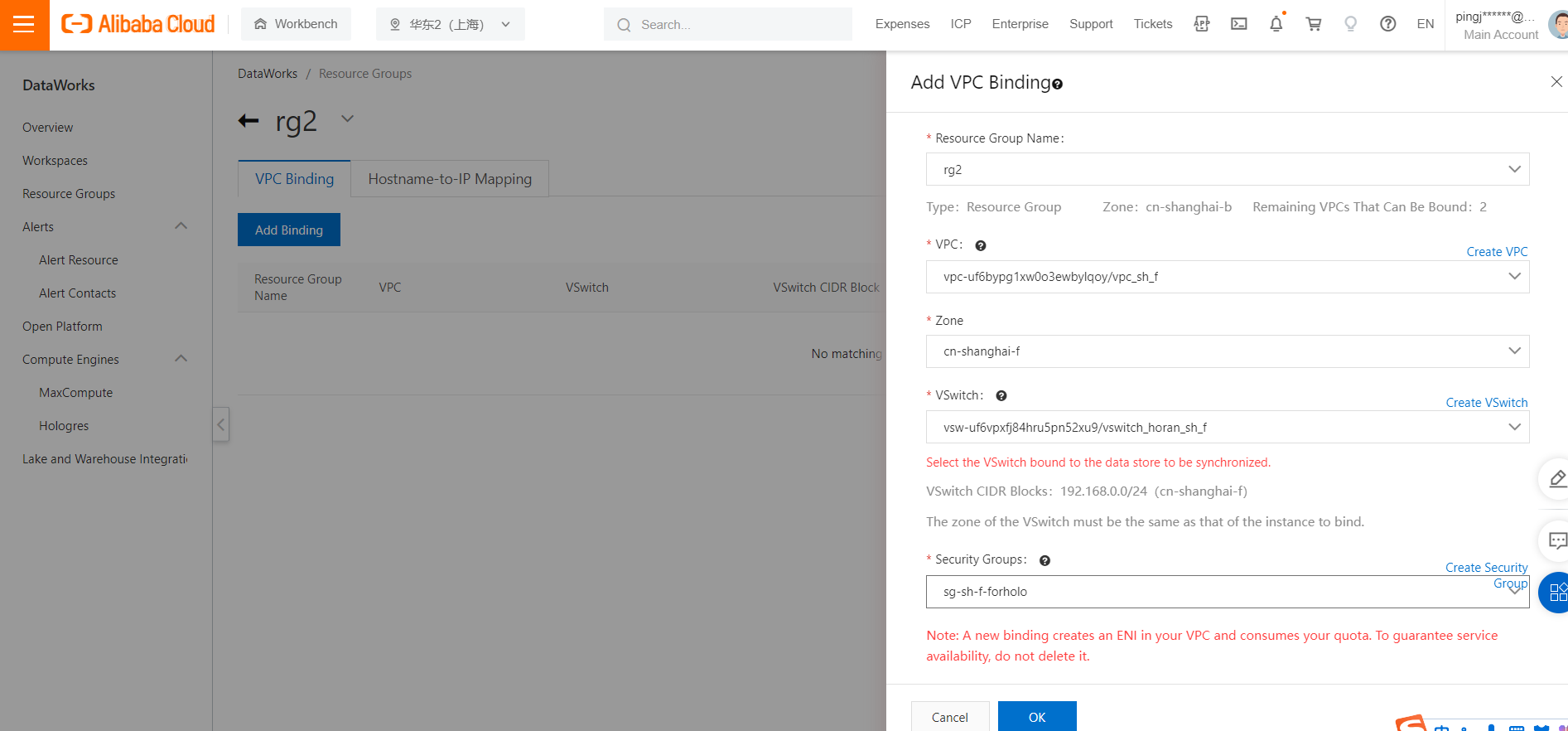

Find out the Network Settings of your new resource group.

Choose the correct VPC, VSwitch, Security group, which is in the same VPC settings with your ECS.

Run following sql in DW to create tables to initialize the data

--odps sql

--********************************************************************--

--author:pingjingwhr@test.aliyunid.com

--create time:2023-01-17 16:57:12

--********************************************************************--

CREATE TABLE ods_user

(

user_id STRING,

is_ekyc INT,

balance DOUBLE

);

INSERT INTO TABLE ods_user VALUES ('user1',1,30.7);

INSERT INTO TABLE ods_user VALUES ('user2',1,0);

INSERT INTO TABLE ods_user VALUES ('user3',0,222.23);

INSERT INTO TABLE ods_user VALUES ('user4',1,433.7);

CREATE TABLE ods_credit_card

(

user_card_id STRING

);

INSERT INTO TABLE ods_credit_card VALUES ('cc1');

INSERT INTO TABLE ods_credit_card VALUES ('cc2');

INSERT INTO TABLE ods_credit_card VALUES ('cc3');

INSERT INTO TABLE ods_credit_card VALUES ('cc4');

CREATE TABLE ods_p2p

(

p2p_id STRING,

amount DOUBLE

);

INSERT INTO TABLE ods_p2p VALUES ('p2p1',42);

INSERT INTO TABLE ods_p2p VALUES ('p2p2',572);

INSERT INTO TABLE ods_p2p VALUES ('p2p3',887);

drop table ods_user_cc_rel;

CREATE TABLE ods_user_cc_rel

(

user_id STRING,

user_card_id STRING

);

INSERT INTO TABLE ods_user_cc_rel VALUES ('user1','cc1');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user2','cc2');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user3','cc3');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user1','cc4');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user1','cc2');

CREATE TABLE ods_user_p2p_receive_rel

(

user_id STRING,

p2p_id STRING

);

INSERT INTO TABLE ods_user_p2p_receive_rel VALUES ('user2','p2p1');

INSERT INTO TABLE ods_user_p2p_receive_rel VALUES ('user2','p2p2');

INSERT INTO TABLE ods_user_p2p_receive_rel VALUES ('user3','p2p3');

CREATE TABLE ods_user_p2p_transfer_rel

(

user_id STRING,

p2p_id STRING

);

INSERT INTO TABLE ods_user_p2p_transfer_rel VALUES ('user1','p2p1');

INSERT INTO TABLE ods_user_p2p_transfer_rel VALUES ('user3','p2p2');

INSERT INTO TABLE ods_user_p2p_transfer_rel VALUES ('user4','p2p3');

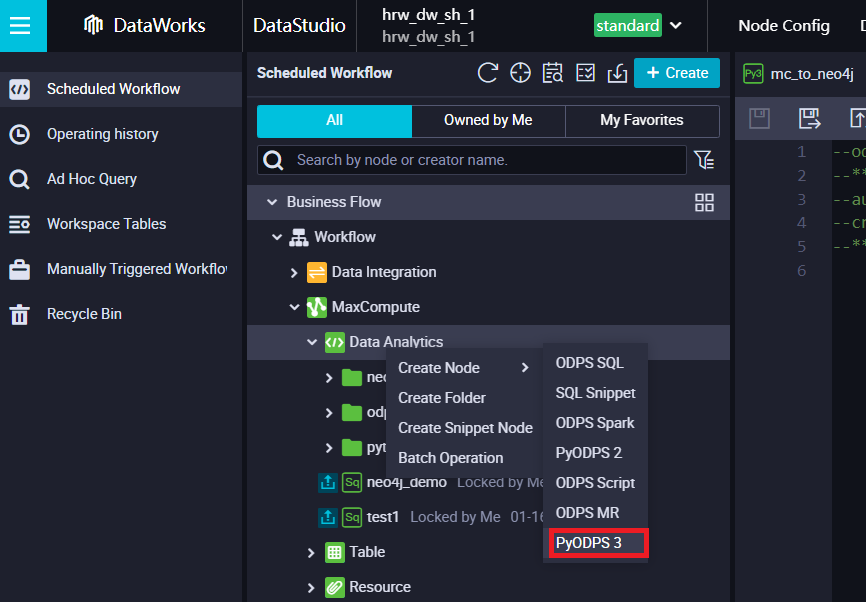

Create PyODPS3 node

Copy the following query

You need to change the URI and Auth based on the above method to get the public IP and your new password

#import Aliyun ODPS library

from odps.df import DataFrame

from odps.df import output

#import neo4j library

from neo4j import GraphDatabase

#unlimit 10,000 rows limitation

options.tunnel.use_instance_tunnel = True

options.tunnel.limit_instance_tunnel = False # Remove the limit restriction and read all the data.

#start

print('demo1: start')

URI = "bolt://192.168.0.200:7687"

AUTH = ("neo4j", "Password01!")

#define functions for Cypher command

def create_user(tx, user_id, is_ekyc, balance):

tx.run("CREATE (u:User {user_id: $user_id, is_ekyc: $is_ekyc, balance: $balance})",

user_id = user_id, is_ekyc = is_ekyc, balance = balance)

def create_cc(tx, user_card_id):

tx.run("CREATE (u:CC {user_card_id: $user_card_id})",

user_card_id = user_card_id)

def create_p2p(tx, p2p_id, amount):

tx.run("CREATE (p:p2p {p2p_id: $p2p_id, amount: $amount})",

p2p_id = p2p_id, amount = amount)

def create_rel_transfer(tx, user_id, p2p_id):

tx.run(

"""

MATCH (a:User), (b:p2p)

WHERE a.user_id = $user_id AND b.p2p_id = $p2p_id

CREATE (a)-[r:P2P_TRANSFER]->(b)

""",

user_id = user_id, p2p_id = p2p_id

)

def create_rel_receive(tx, user_id, p2p_id):

tx.run(

"""

MATCH (a:User), (b:p2p)

WHERE a.user_id = $user_id AND b.p2p_id = $p2p_id

CREATE (b)-[r:P2P_RECEIVE]->(a)

""",

user_id = user_id, p2p_id = p2p_id

)

def create_rel_user_cc(tx, user_id, user_card_id):

tx.run(

"""

MATCH (a:User), (b:CC)

WHERE a.user_id = $user_id AND b.user_card_id = $user_card_id

CREATE (a)-[r:HAS_CC]->(b)

""",

user_id = user_id, user_card_id = user_card_id

)

with GraphDatabase.driver(URI, auth=AUTH) as driver:

with driver.session(database="neo4j") as session:

#start to import data from ods_user

with o.execute_sql('select * from ods_user').open_reader() as reader:

for record in reader:

session.execute_write(create_user, user_id = record[0], is_ekyc = record[1], balance = record[2])

print('Node User completes')

#start to import data from ods_credit_card

with o.execute_sql('select * from ods_credit_card').open_reader() as reader:

for record in reader:

session.execute_write(create_cc, user_card_id = record[0])

print('Node CC completes')

#start to import data from ods_user

with o.execute_sql('select * from ods_p2p').open_reader() as reader:

for record in reader:

session.execute_write(create_p2p, p2p_id = record[0], amount = record[1])

print('Node p2p completes')

#start to import relationship from ods_user_p2p_transfer_rel

with o.execute_sql('select * from ods_user_p2p_transfer_rel').open_reader() as reader:

for record in reader:

session.execute_write(create_rel_transfer, user_id = record[0], p2p_id = record[1])

print('Relationship TRANSFER completes')

#start to import relationship from ods_user_p2p_receive_rel

with o.execute_sql('select * from ods_user_p2p_receive_rel').open_reader() as reader:

for record in reader:

session.execute_write(create_rel_receive, user_id = record[0], p2p_id = record[1])

print('Relationship RECEIVE completes')

#start to import relationship from ods_user_p2p_receive_rel

with o.execute_sql('select * from ods_user_cc_rel').open_reader() as reader:

for record in reader:

session.execute_write(create_rel_user_cc, user_id = record[0], user_card_id = record[1])

print('Relationship HAS_CC completes')

print('end of demo')

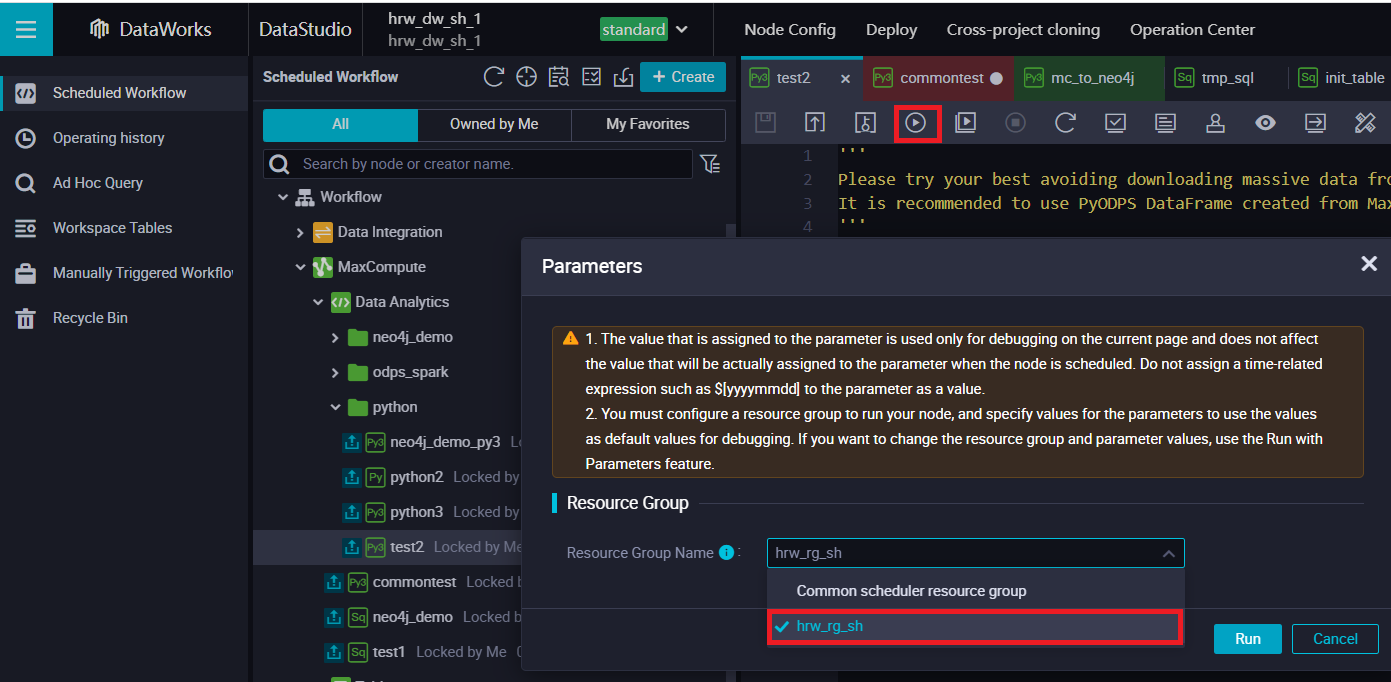

Make sure you choose the right resource group to run it. You must use the exclusive resource group which you use PIP installing the driver library.

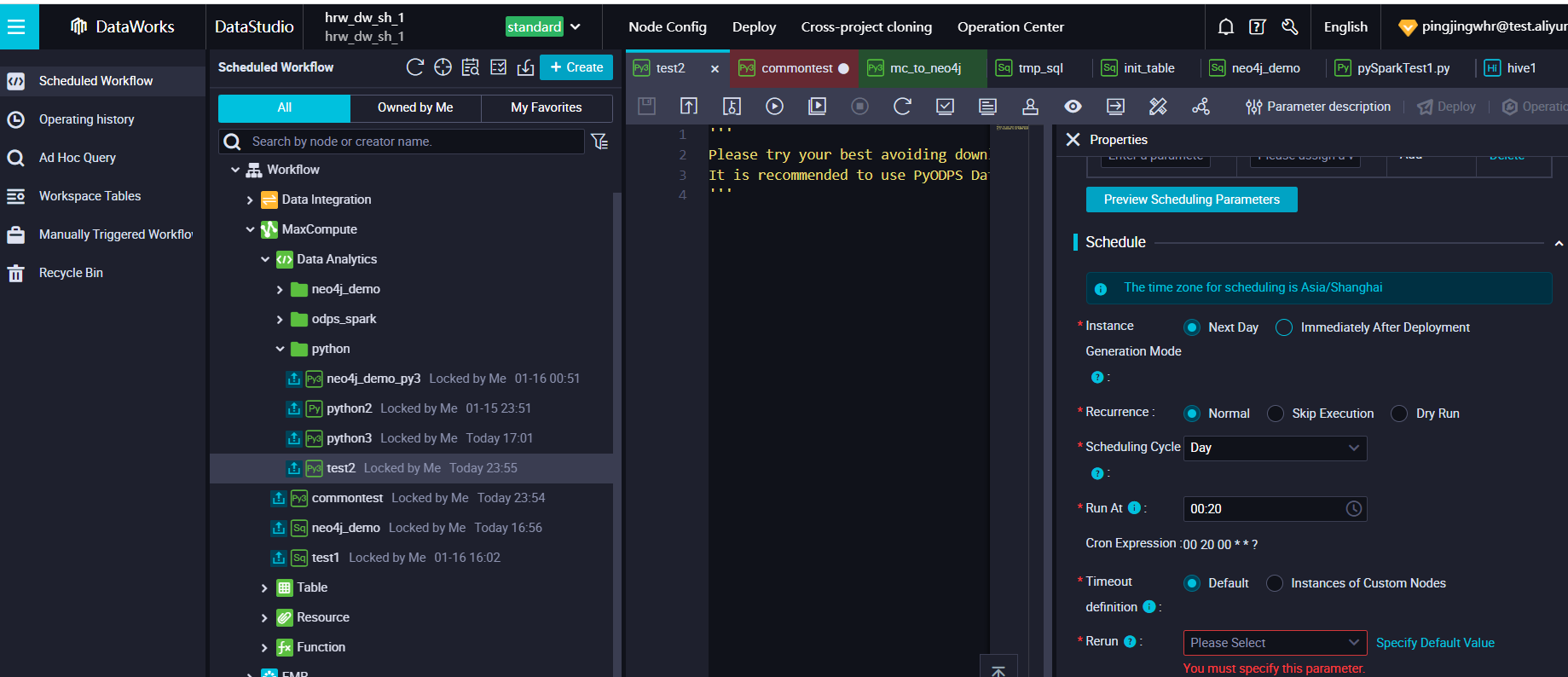

You can also have a schedule based on the configuration of dataworks

1) Run following sql in DW to create tables to initialize the data

--odps sql

--********************************************************************--

--author:pingjingwhr@test.aliyunid.com

--create time:2023-01-17 16:57:12

--********************************************************************--

CREATE TABLE ods_user

(

user_id STRING,

is_ekyc INT,

balance DOUBLE

);

INSERT INTO TABLE ods_user VALUES ('user1',1,30.7);

INSERT INTO TABLE ods_user VALUES ('user2',1,0);

INSERT INTO TABLE ods_user VALUES ('user3',0,222.23);

INSERT INTO TABLE ods_user VALUES ('user4',1,433.7);

CREATE TABLE ods_credit_card

(

user_card_id STRING

);

INSERT INTO TABLE ods_credit_card VALUES ('cc1');

INSERT INTO TABLE ods_credit_card VALUES ('cc2');

INSERT INTO TABLE ods_credit_card VALUES ('cc3');

INSERT INTO TABLE ods_credit_card VALUES ('cc4');

CREATE TABLE ods_p2p

(

p2p_id STRING,

amount DOUBLE

);

INSERT INTO TABLE ods_p2p VALUES ('p2p1',42);

INSERT INTO TABLE ods_p2p VALUES ('p2p2',572);

INSERT INTO TABLE ods_p2p VALUES ('p2p3',887);

drop table ods_user_cc_rel;

CREATE TABLE ods_user_cc_rel

(

user_id STRING,

user_card_id STRING

);

INSERT INTO TABLE ods_user_cc_rel VALUES ('user1','cc1');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user2','cc2');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user3','cc3');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user1','cc4');

INSERT INTO TABLE ods_user_cc_rel VALUES ('user1','cc2');

CREATE TABLE ods_user_p2p_receive_rel

(

user_id STRING,

p2p_id STRING

);

INSERT INTO TABLE ods_user_p2p_receive_rel VALUES ('user2','p2p1');

INSERT INTO TABLE ods_user_p2p_receive_rel VALUES ('user2','p2p2');

INSERT INTO TABLE ods_user_p2p_receive_rel VALUES ('user3','p2p3');

CREATE TABLE ods_user_p2p_transfer_rel

(

user_id STRING,

p2p_id STRING

);

INSERT INTO TABLE ods_user_p2p_transfer_rel VALUES ('user1','p2p1');

INSERT INTO TABLE ods_user_p2p_transfer_rel VALUES ('user3','p2p2');

INSERT INTO TABLE ods_user_p2p_transfer_rel VALUES ('user4','p2p3');

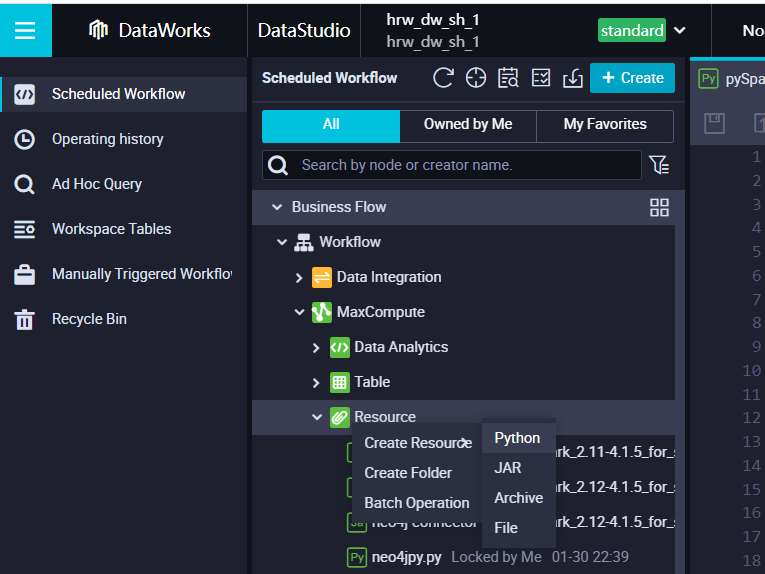

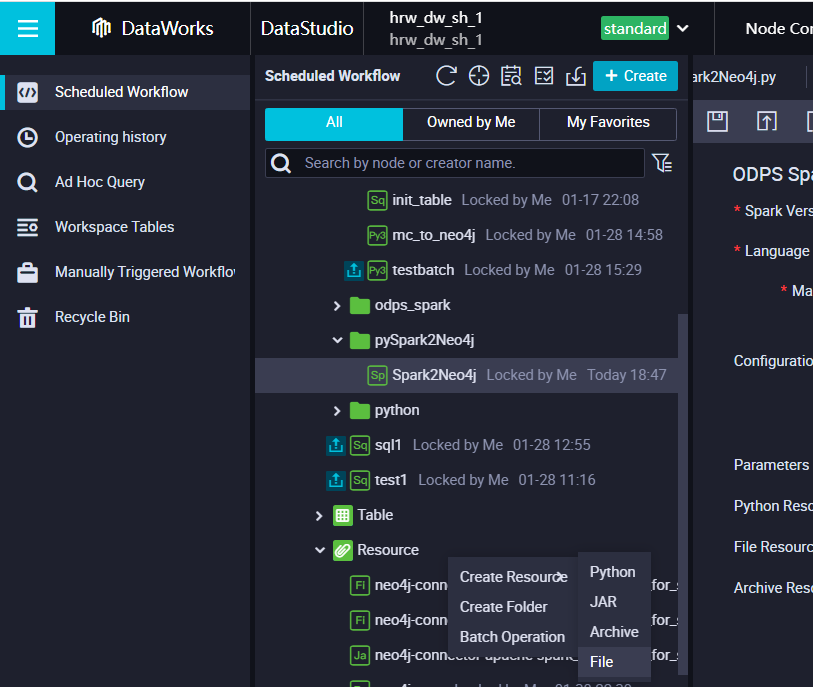

2) Create Python as a Resource

3) Copy the following query

You need to change the URI and Auth based on the above method to get the internal IP and your new password

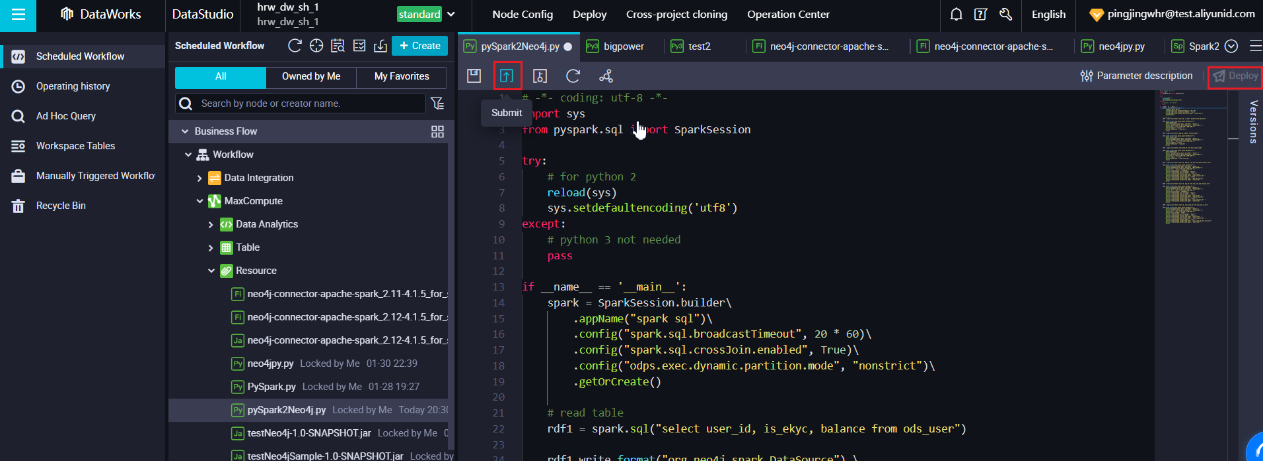

# -*- coding: utf-8 -*-

import sys

from pyspark.sql import SparkSession

try:

# for python 2

reload(sys)

sys.setdefaultencoding('utf8')

except:

# python 3 not needed

pass

if __name__ == '__main__':

spark = SparkSession.builder\

.appName("spark sql")\

.config("spark.sql.broadcastTimeout", 20 * 60)\

.config("spark.sql.crossJoin.enabled", True)\

.config("odps.exec.dynamic.partition.mode", "nonstrict")\

.getOrCreate()

# read table

rdf1 = spark.sql("select user_id, is_ekyc, balance from ods_user")

rdf1.write.format("org.neo4j.spark.DataSource") \

.mode("OverWrite") \

.option("authentication.basic.username", "neo4j") \

.option("authentication.basic.password", "Password01!") \

.option("url", "bolt://192.168.0.200:7687") \

.option("labels", ":User") \

.option("node.keys", "user_id") \

.save()

rdf2 = spark.sql("select p2p_id, amount from ods_p2p")

rdf2.write.format("org.neo4j.spark.DataSource") \

.mode("OverWrite") \

.option("authentication.basic.username", "neo4j") \

.option("authentication.basic.password", "Password01!") \

.option("url", "bolt://192.168.0.200:7687") \

.option("labels", ":P2P") \

.option("node.keys", "p2p_id") \

.save()

rdf3 = spark.sql("select user_card_id from ods_credit_card")

rdf3.write.format("org.neo4j.spark.DataSource") \

.mode("OverWrite") \

.option("authentication.basic.username", "neo4j") \

.option("authentication.basic.password", "Password01!") \

.option("url", "bolt://192.168.0.200:7687") \

.option("labels", ":CC") \

.option("node.keys", "user_card_id") \

.save()

rdf4 = spark.sql("select user_id, p2p_id from ods_user_p2p_transfer_rel")

rdf4.write.format("org.neo4j.spark.DataSource") \

.option("authentication.basic.username", "neo4j") \

.option("authentication.basic.password", "Password01!") \

.option("url", "bolt://192.168.0.200:7687") \

.option("relationship", "TRANSFER") \

.option("relationship.save.strategy", "keys") \

.option("relationship.source.labels", ":User") \

.option("relationship.source.save.mode", "overwrite") \

.option("relationship.source.node.keys", "user_id:user_id") \

.option("relationship.target.labels", ":P2P") \

.option("relationship.target.node.keys", "p2p_id:p2p_id") \

.option("relationship.target.save.mode", "overwrite") \

.save()

rdf5 = spark.sql("select user_id, p2p_id from ods_user_p2p_receive_rel")

rdf5.write.format("org.neo4j.spark.DataSource") \

.option("authentication.basic.username", "neo4j") \

.option("authentication.basic.password", "Password01!") \

.option("url", "bolt://192.168.0.200:7687") \

.option("relationship", "RECEIVE") \

.option("relationship.save.strategy", "keys") \

.option("relationship.source.labels", ":P2P") \

.option("relationship.source.save.mode", "overwrite") \

.option("relationship.source.node.keys", "p2p_id:p2p_id") \

.option("relationship.target.labels", ":User") \

.option("relationship.target.node.keys", "user_id:user_id") \

.option("relationship.target.save.mode", "overwrite") \

.save()

rdf6 = spark.sql("select user_id, user_card_id from ods_user_cc_rel")

rdf6.write.format("org.neo4j.spark.DataSource") \

.option("authentication.basic.username", "neo4j") \

.option("authentication.basic.password", "Password01!") \

.option("url", "bolt://192.168.0.200:7687") \

.option("relationship", "HAS") \

.option("relationship.save.strategy", "keys") \

.option("relationship.source.labels", ":User") \

.option("relationship.source.save.mode", "overwrite") \

.option("relationship.source.node.keys", "user_id:user_id") \

.option("relationship.target.labels", ":CC") \

.option("relationship.target.node.keys", "user_card_id:user_card_id") \

.option("relationship.target.save.mode", "overwrite") \

.save()

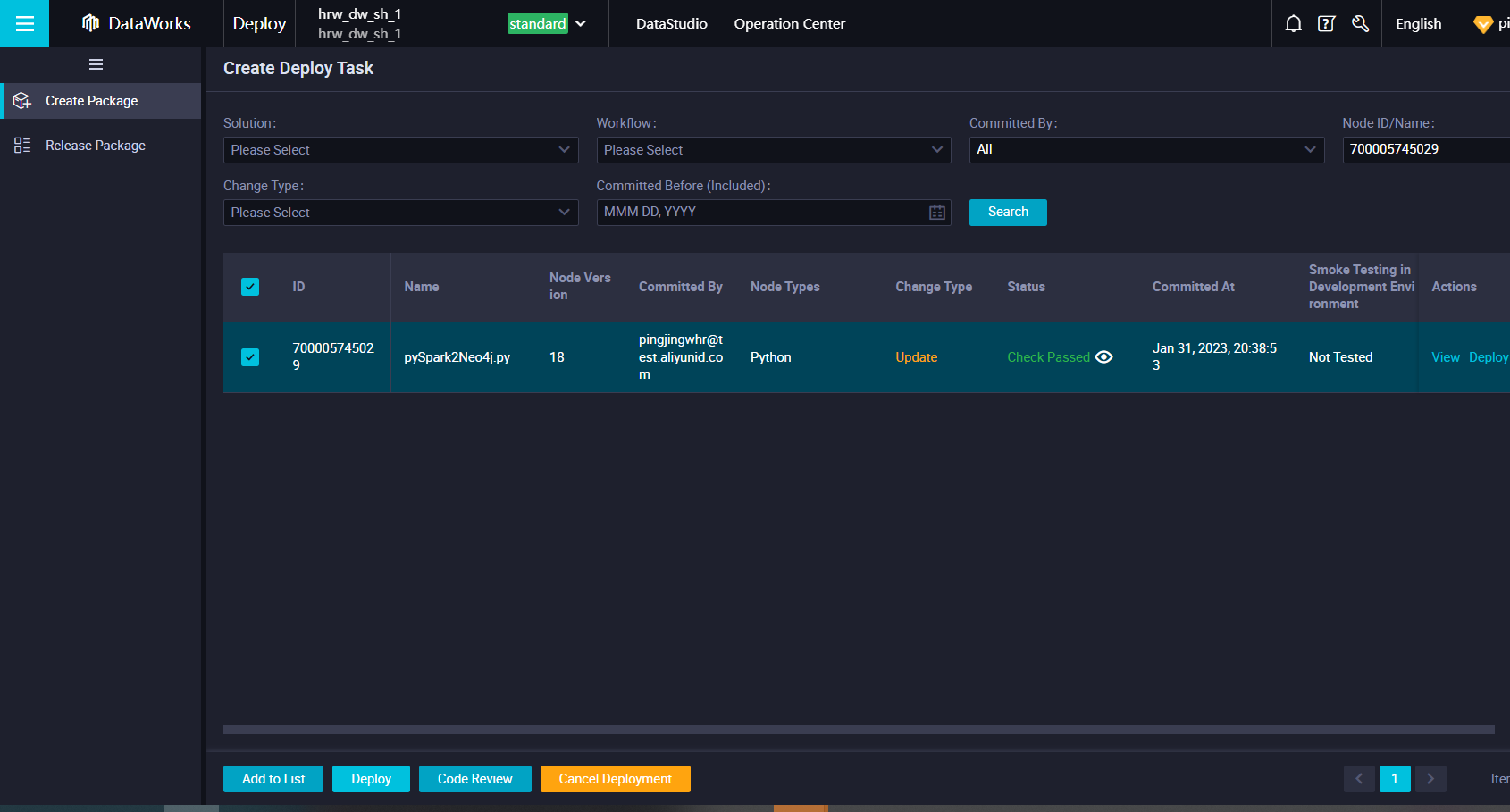

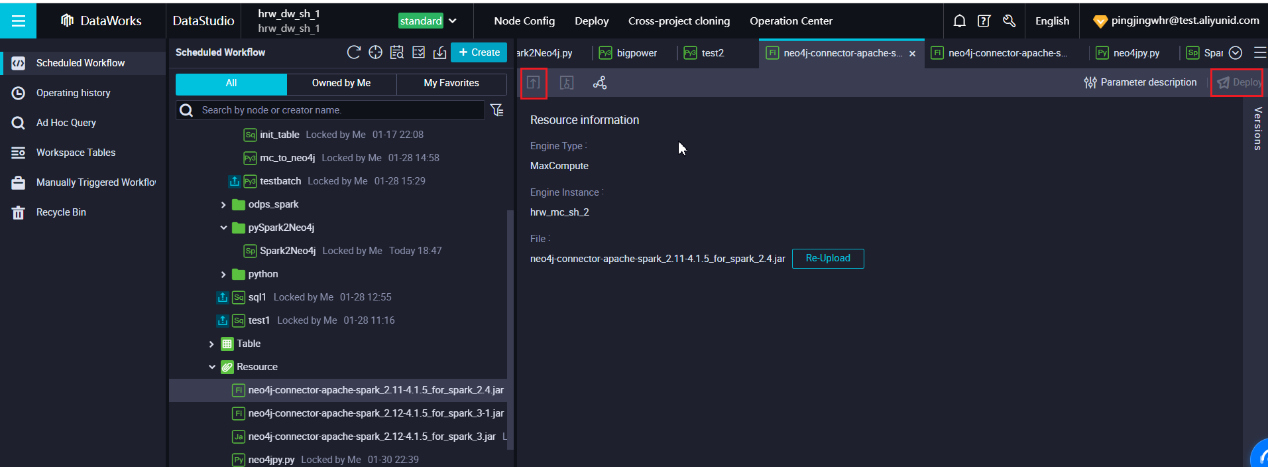

4) Submit & Deploy the py as a resource.

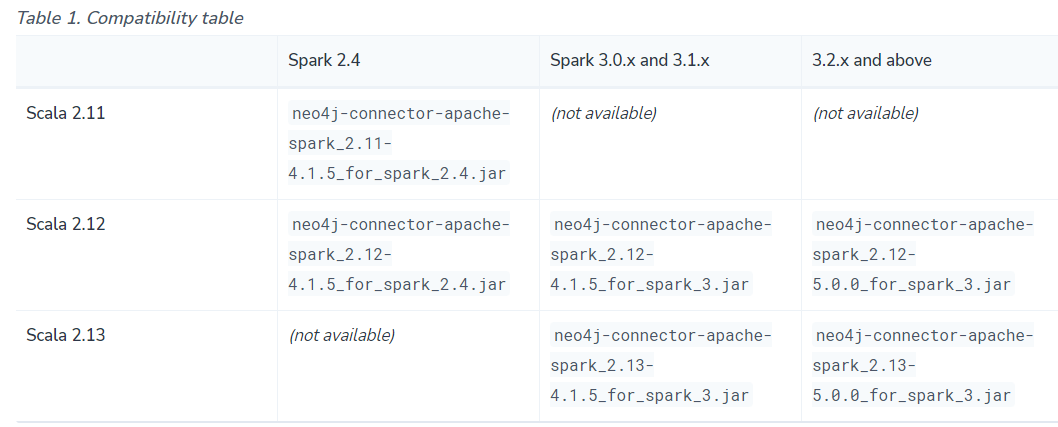

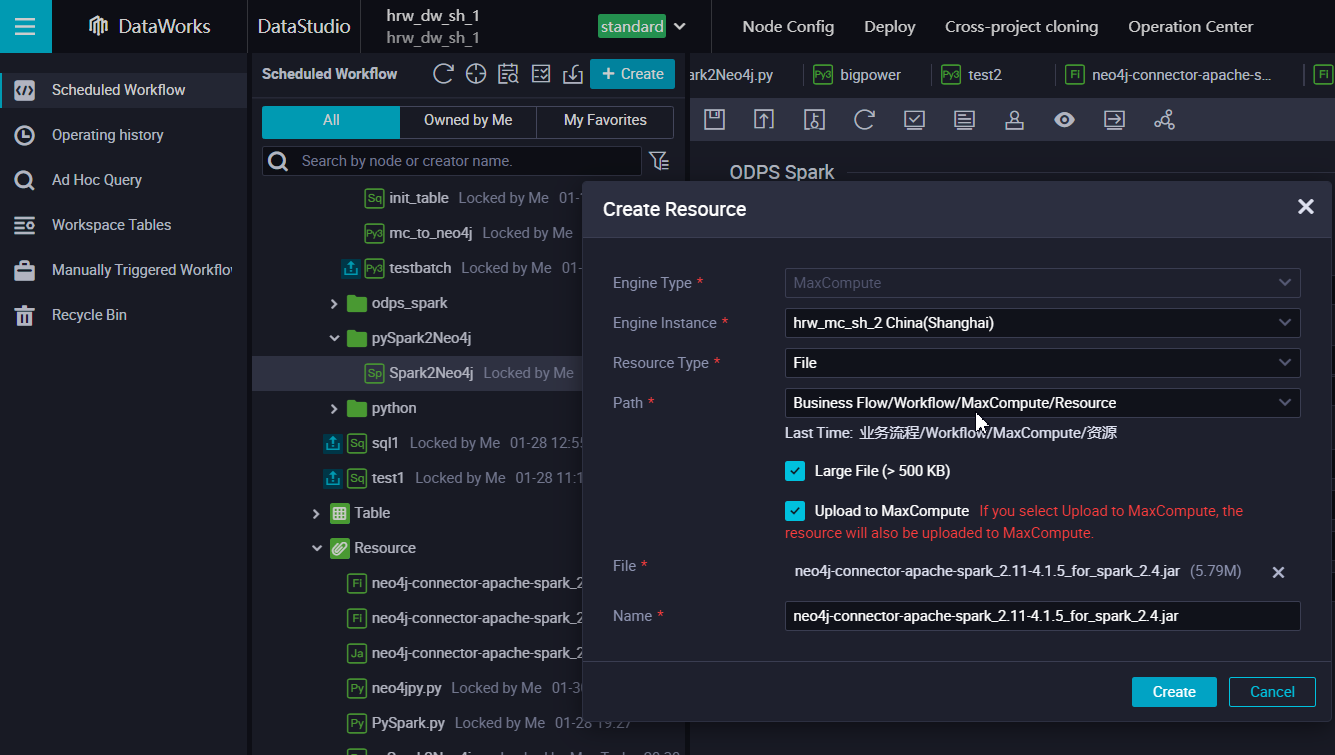

5) Import neo4j Connector Jar as a file resource

Download neo4j Connector:

https://neo4j.com/docs/spark/current/quickstart/

https://neo4j.com/docs/spark/current/overview/#_spark_compatibility

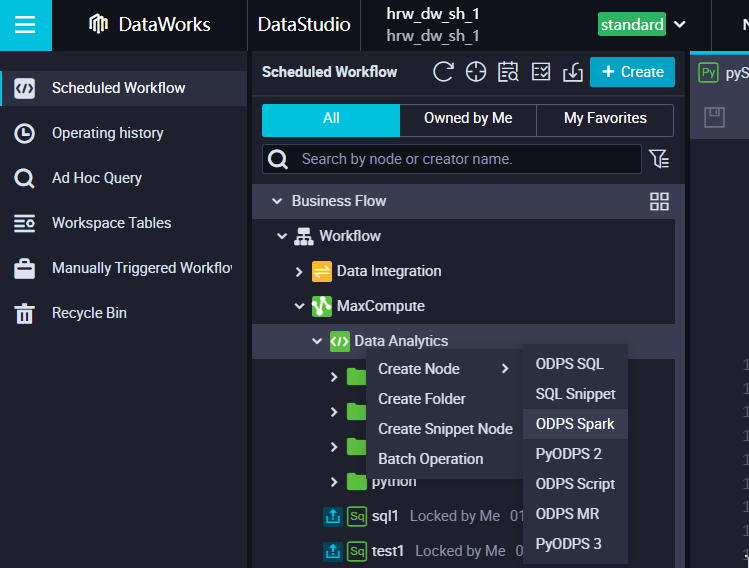

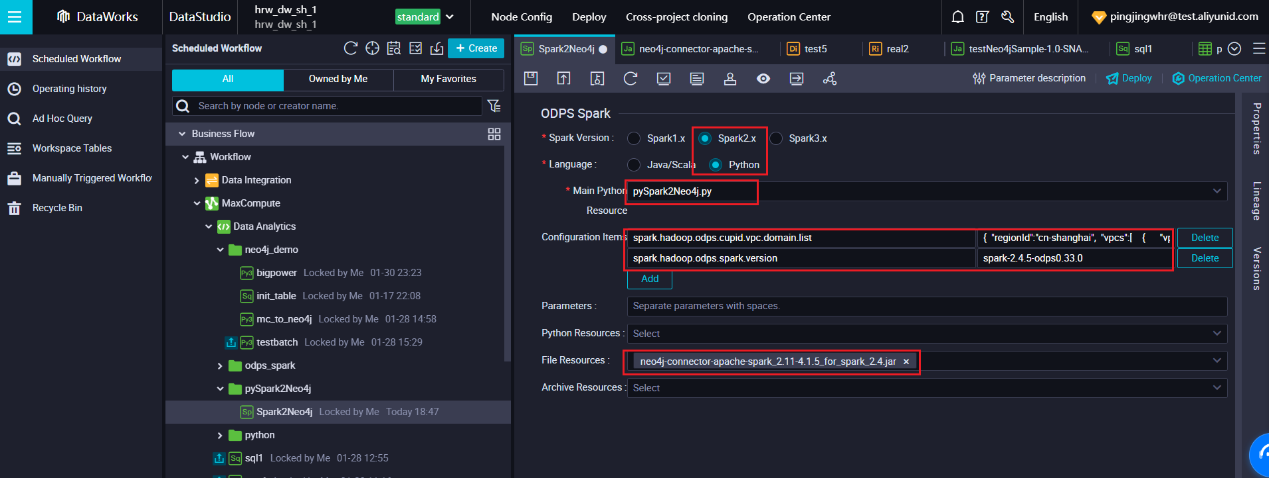

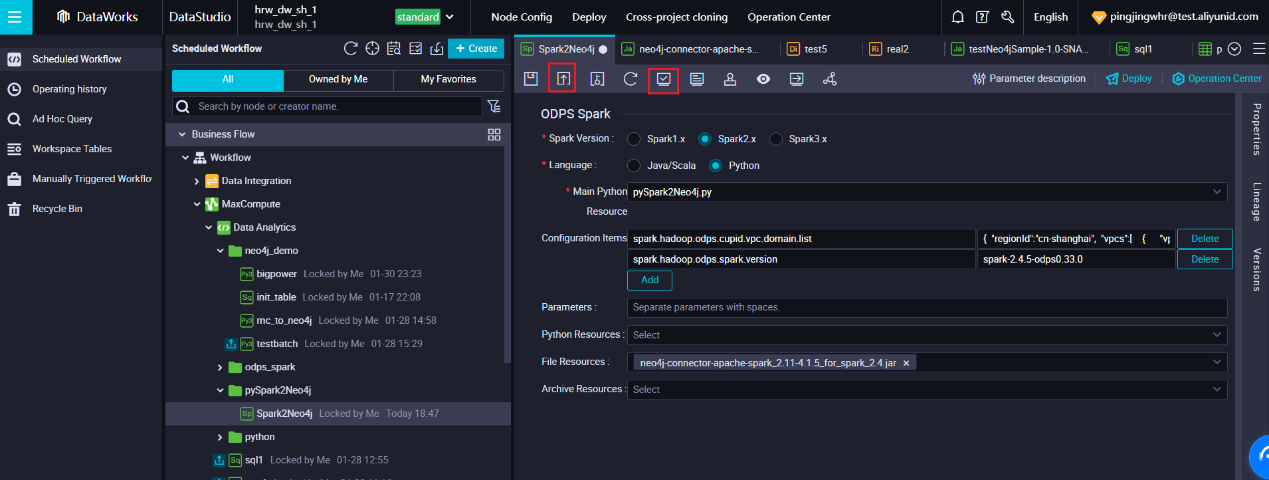

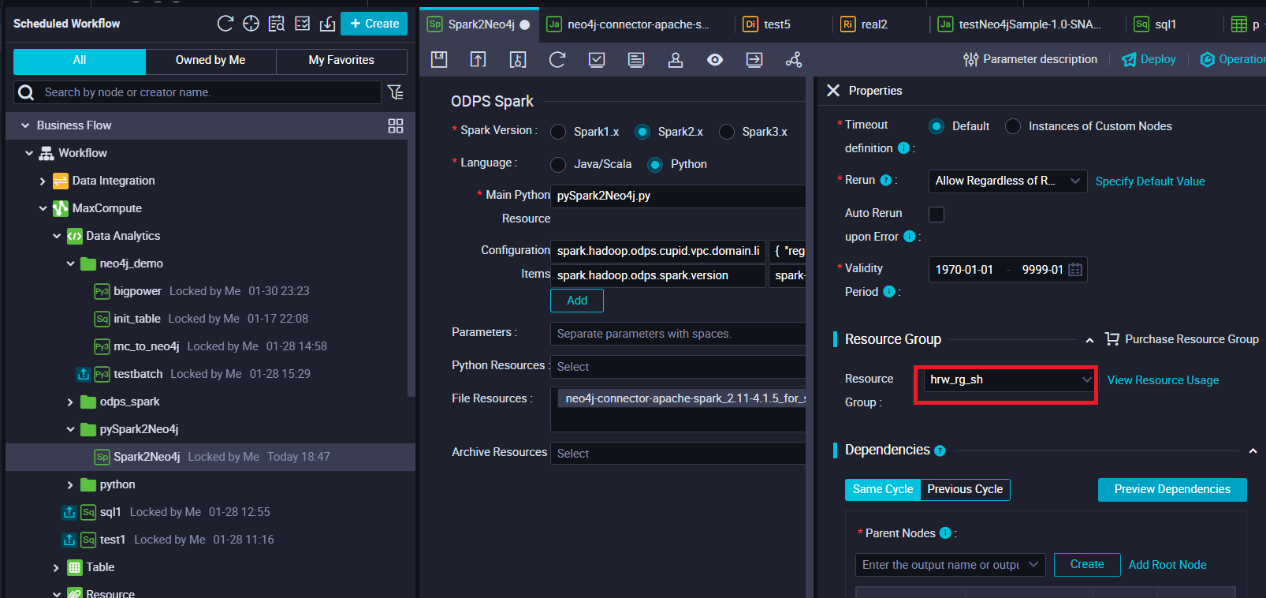

6) Create a Node with type “ODPS Spark”

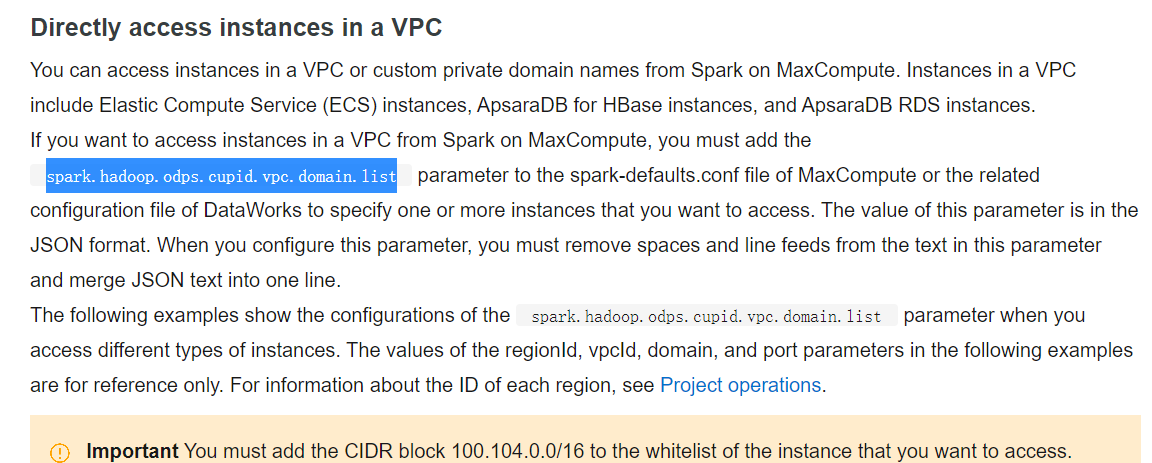

spark.hadoop.odps.spark.version=spark-2.4.5-odps0.33.0spark.hadoop.odps.cupid.vpc.domain.list=…The configuration for domain list is a one line json file.

{

"regionId":"ap-southeast-3",

"vpcs":[

{

"vpcId":"vpc-uf6bypg1XXXX",

"zones":[

{

"urls":[

{

"domain":"192.168.0.200",

"port": 7687

}

]

}

]

}

]

}You need to change the vpcid and domain info, domain ip is the ECS internal IP which has neo4j instance.

After that, use notepad++ to remove n to generate the json as one line, and put it into parameter.

Then click submit and smoke test

From the resource group, use the exclusive resource group which is in the same VPC

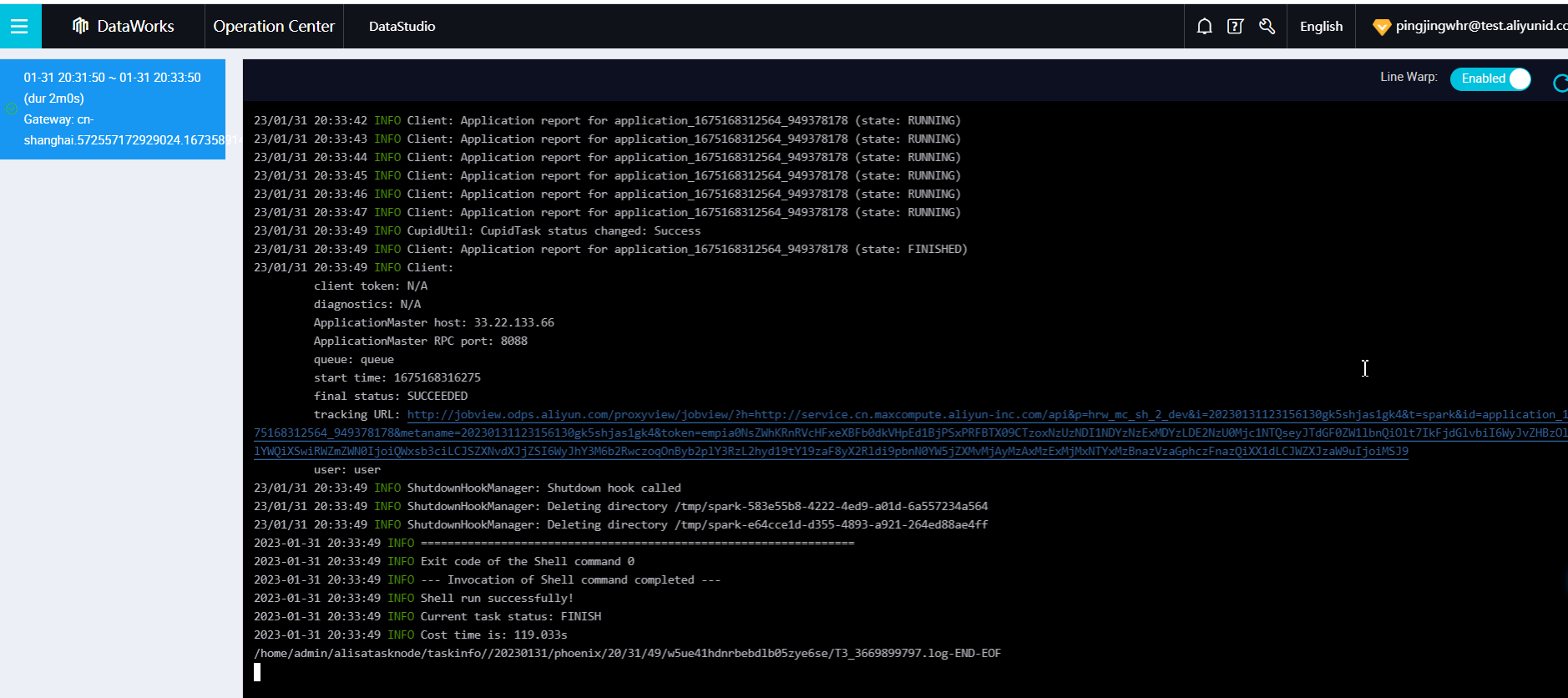

The success log looks like this one.

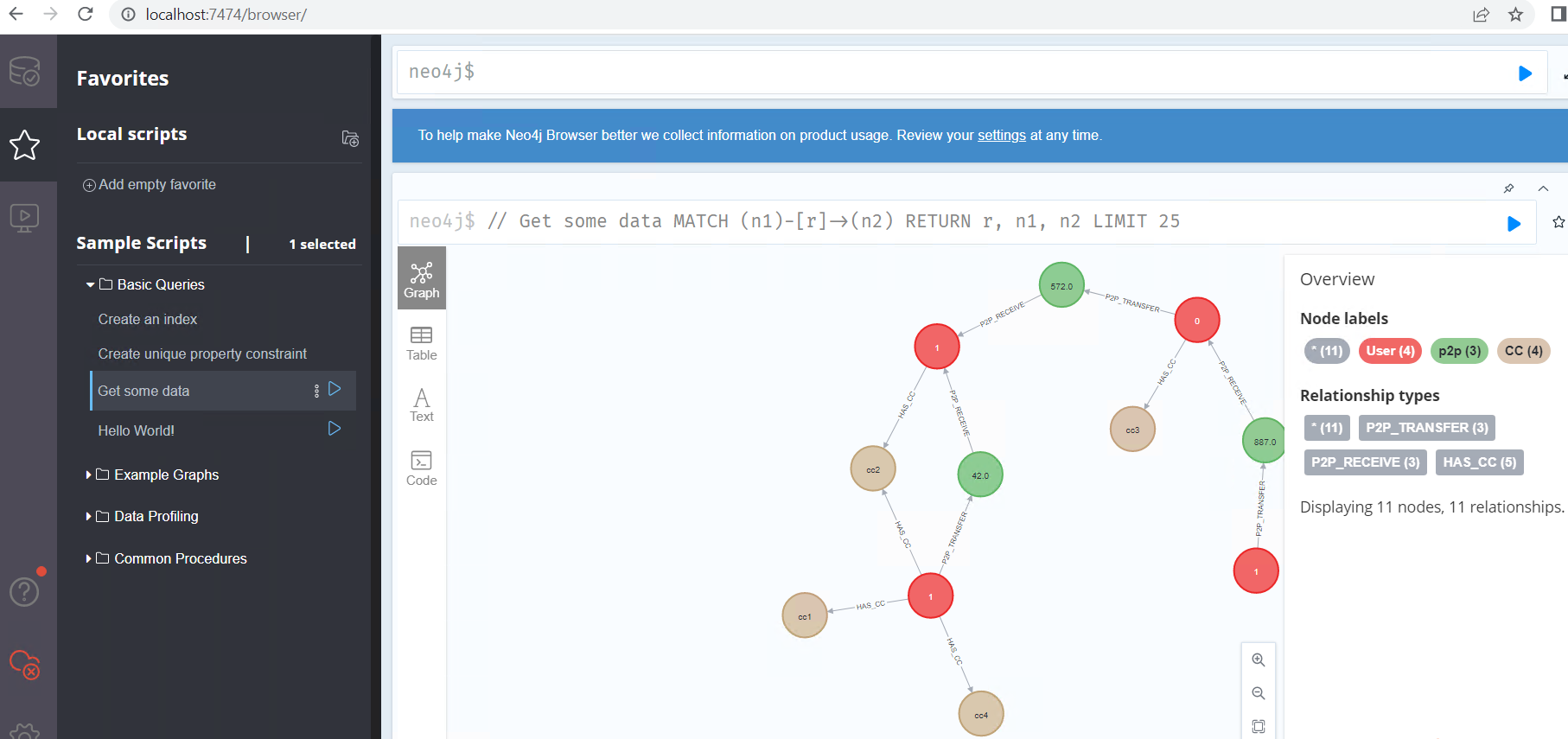

You can use Cypher to check the result now.

Run the following command directly, it will load public data into your user tables, it has 1.5M data.

INSERT INTO TABLE ods_user

SELECT c_custkey, 1, DOUBLE(c_acctbal) from MAXCOMPUTE_PUBLIC_DATA.odps_customer_10g;Then rerun the job, to check the performance result.

Based on the test, the import speed is 10,000 nodes/minute.

-- to quickly clean all data

MATCH (n) DETACH DELETE n

--to quickly check all nodes

MATCH (n) RETURN n

--to quickly check the relationship

MATCH (n1)-[r]->(n2) RETURN r, n1, n2 LIMIT 25Farruh - January 12, 2024

JDP - December 30, 2021

Alibaba Clouder - September 3, 2019

JDP - March 19, 2021

JDP - May 20, 2021

JDP - January 14, 2022

Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More DataWorks

DataWorks

A secure environment for offline data development, with powerful Open APIs, to create an ecosystem for redevelopment.

Learn MoreMore Posts by Farruh