By Haoran Wang, Sr. Big Data Solution Architect of Alibaba Cloud

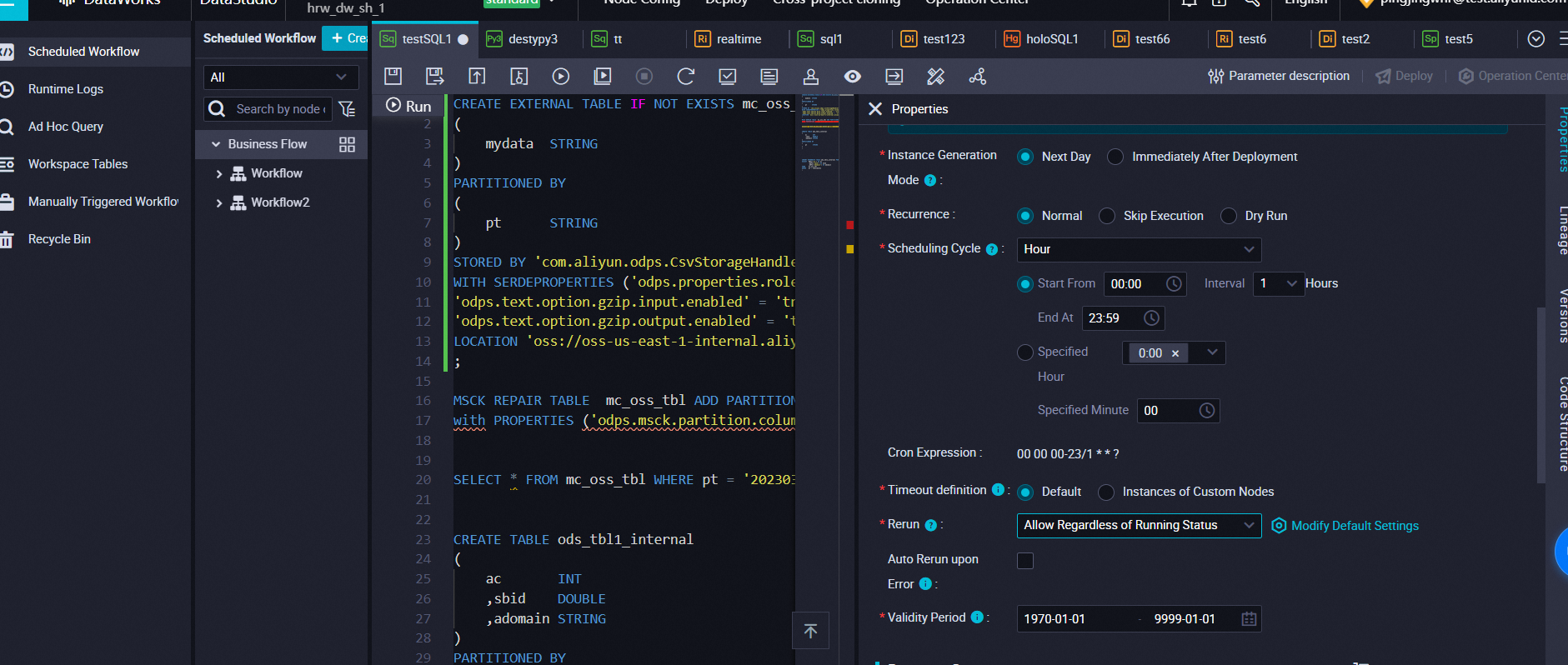

CREATE EXTERNAL TABLE IF NOT EXISTS mc_oss_tbl

(

mydata STRING

)

PARTITIONED BY

(

pt STRING

)

STORED BY 'com.aliyun.odps.CsvStorageHandler'

WITH SERDEPROPERTIES ('odps.properties.rolearn' = 'acs:ram::1753425463711063:role/aliyunodpsdefaultrole',

'odps.text.option.gzip.input.enabled' = 'true',

'odps.text.option.gzip.output.enabled' = 'true')

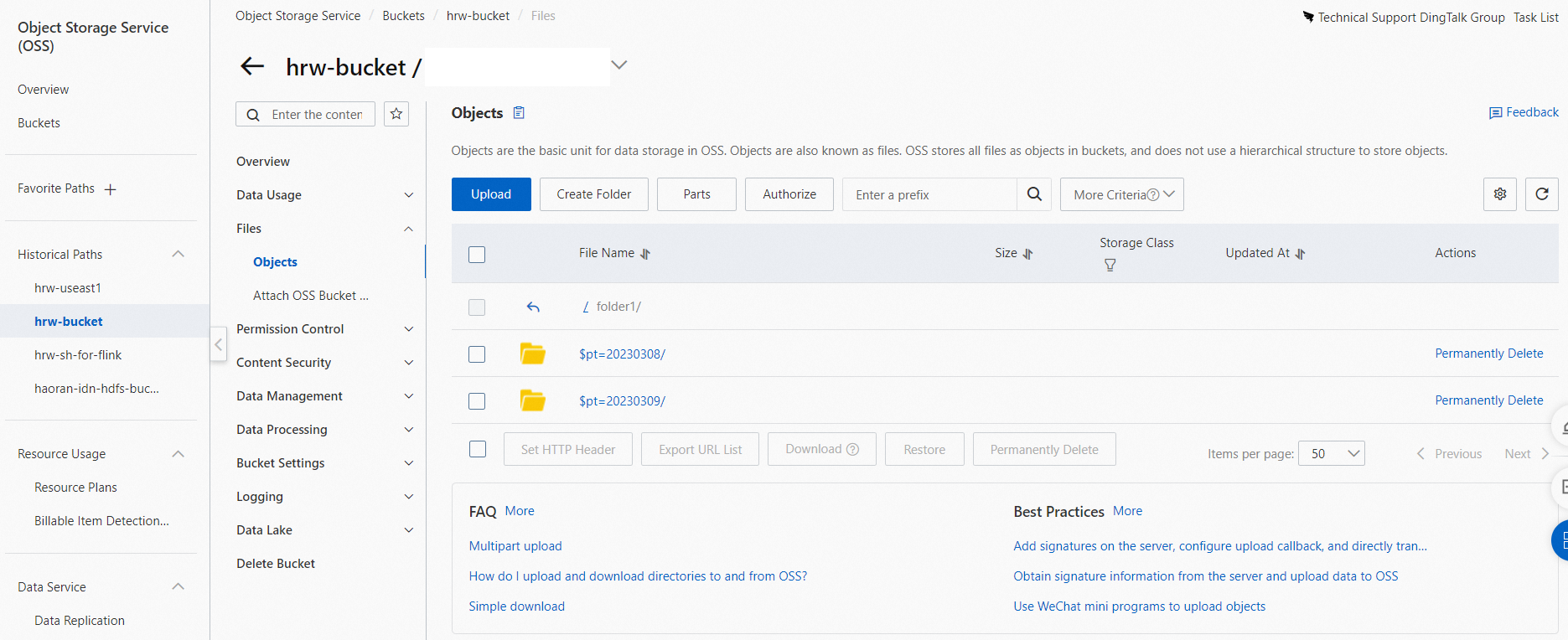

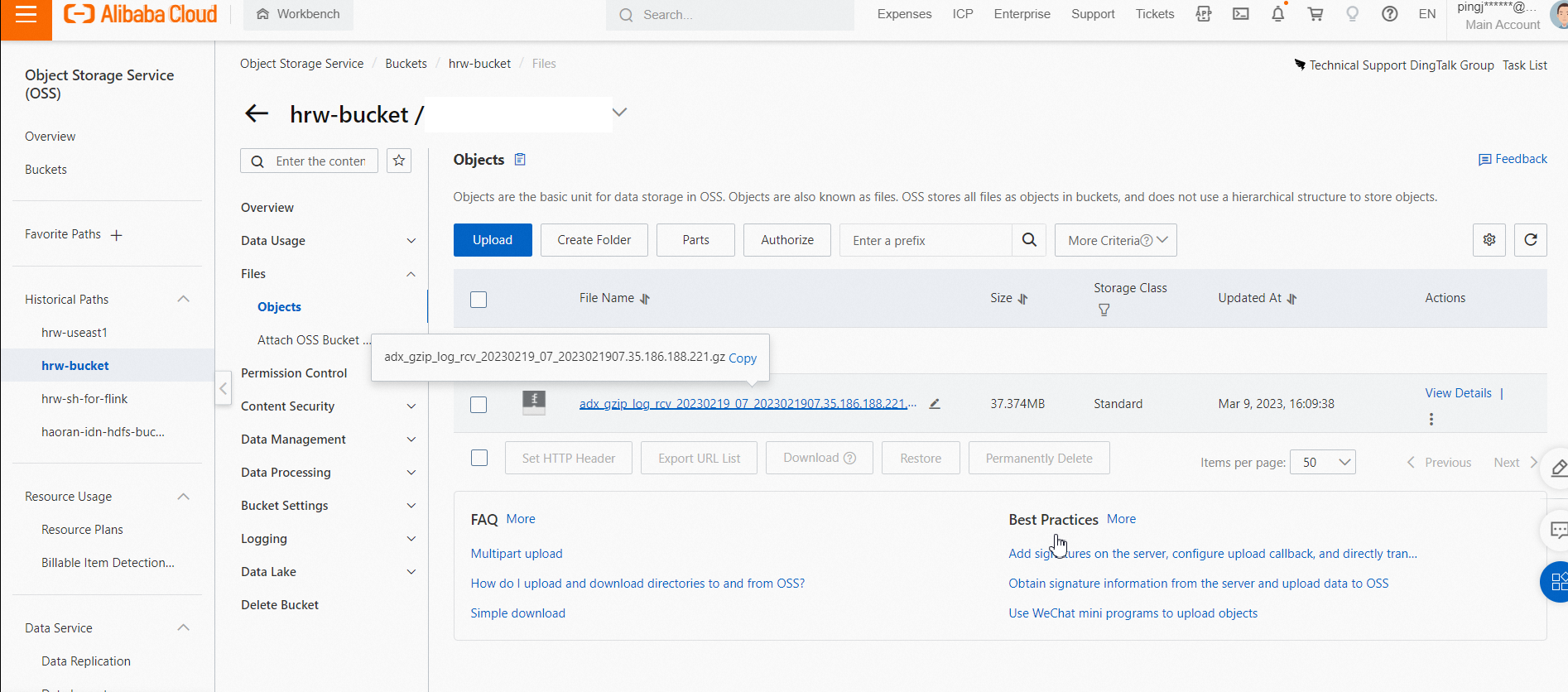

LOCATION 'oss://oss-us-east-1-internal.aliyuncs.com/hrw-bucket/folder1/'

;

MSCK REPAIR TABLE mc_oss_tbl ADD PARTITIONS

with PROPERTIES ('odps.msck.partition.column.mapping'='pt:$pt');

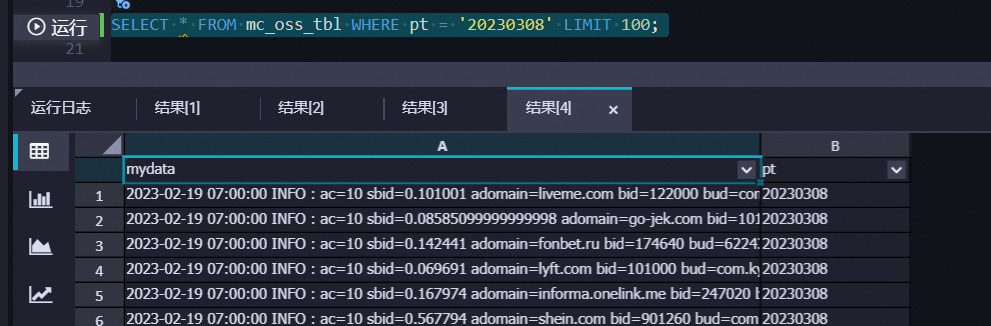

You can verify the external table by querying it directly.
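A minimal check, assuming the MSCK step above registered at least one partition: list the partitions, then sample a few raw rows from one of them. The partition value `20220101` is a hypothetical placeholder; substitute a value returned by `SHOW PARTITIONS`. Filtering on `pt` also keeps the query from scanning every partition.

```sql
-- List the partitions that MSCK REPAIR registered from OSS
SHOW PARTITIONS mc_oss_tbl;

-- Read a few raw records from one partition (hypothetical value)
SELECT  mydata
FROM    mc_oss_tbl
WHERE   pt = '20220101'
LIMIT   10;
```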

CREATE TABLE ods_tbl1_internal

(

ac INT

,sbid DOUBLE

,adomain STRING

)

PARTITIONED BY

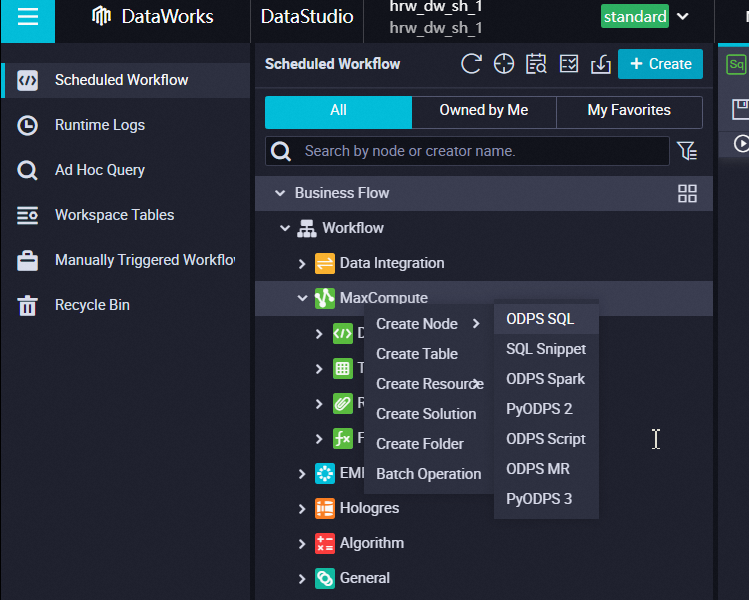

( pt STRING) ;Create a ODPS SQL job

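```sql
-- UDF1 is a custom UDF that this post does not define; it stands for
-- the logic that parses mydata into each target column.
-- ${bizdate} is quoted because pt is a STRING partition column.
INSERT OVERWRITE TABLE ods_tbl1_internal PARTITION (pt = '${bizdate}')
SELECT  UDF1('ac')      AS ac
       ,UDF1('sbid')    AS sbid
       ,UDF1('adomain') AS adomain
FROM    mc_oss_tbl
WHERE   pt = '${bizdate}';
```

Configure the job to run hourly.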
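The post does not show what UDF1 does. As a hypothetical example only, if each raw record in `mydata` were a pipe-delimited line such as `3|0.25|example.com` (a delimiter other than the comma, so `CsvStorageHandler` keeps the whole line in the single `mydata` column), the built-in `SPLIT_PART` function could stand in for the custom UDF. This is a substituted technique, not the author's method; check the actual file format before adapting it.

```sql
-- Hypothetical format: ac|sbid|adomain, e.g. '3|0.25|example.com'
-- SPLIT_PART positions start at 1 and return STRING values
INSERT OVERWRITE TABLE ods_tbl1_internal PARTITION (pt = '${bizdate}')
SELECT  CAST(SPLIT_PART(mydata, '|', 1) AS INT)    AS ac
       ,CAST(SPLIT_PART(mydata, '|', 2) AS DOUBLE) AS sbid
       ,SPLIT_PART(mydata, '|', 3)                 AS adomain
FROM    mc_oss_tbl
WHERE   pt = '${bizdate}';
```

The explicit `CAST`s convert the extracted strings to the `INT` and `DOUBLE` column types declared for `ods_tbl1_internal`.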

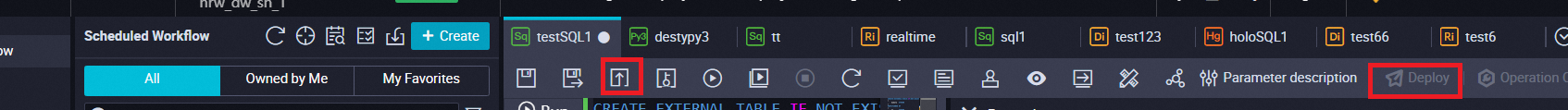

Then submit the job and deploy it to the production environment.

For details, see the MaxCompute documentation on accessing OSS data by using a built-in extractor: https://www.alibabacloud.com/help/en/maxcompute/latest/access-oss-data-by-using-a-built-in-extractor
