by Aaron Handoko, Solution Architect Alibaba Cloud Indonesia

This article discusses Spark in general, its uses in the big data workflow, and how to configure and run Spark in CLI mode for CI/CD purposes.

Apache Spark is an open-source distributed computing system that provides a fast and general-purpose cluster-computing framework for big data processing and analytics. It was developed to address the limitations and inefficiencies of the MapReduce model, which was widely used in earlier big data processing frameworks.

The main advantages of using Spark include:

• Speed - Spark is designed for in-memory data processing, which significantly improves processing speed compared to the disk-based processing used in MapReduce.

• Scalability - Spark is designed to scale horizontally, meaning it can efficiently handle large amounts of data by distributing it across a cluster of machines. This scalability is crucial for processing big data sets.

• Unified Platform - Spark provides a unified platform for batch processing, interactive queries, streaming analytics, and machine learning.

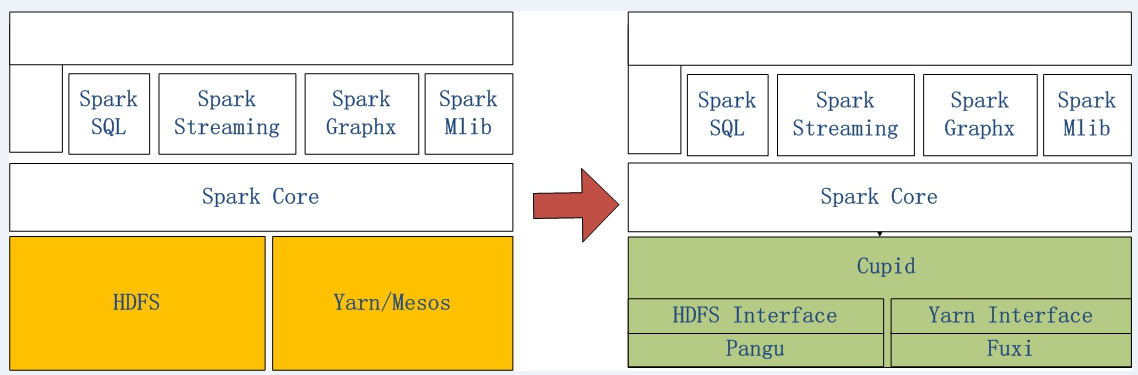

Spark on MaxCompute (ODPS) is a computing service that is provided by MaxCompute and compatible with open-source Spark. This service provides a Spark computing framework based on unified computing resource and dataset permission systems. The service allows you to use your preferred development method to submit and run Spark jobs. Spark on MaxCompute can fulfill diverse data processing and analysis requirements.

ODPS Spark runs on the Cupid platform developed by Alibaba Cloud. The Cupid platform is fully compatible with the computing framework supported by open-source YARN.

Figure 1. Architecture of native Spark (left) and ODPS Spark (right)

Prerequisites

1). An active Alibaba Cloud account

2). A MaxCompute project

3). Familiarity with Spark

Steps

1). Install the tools required to run Spark

a. Git

sudo wget https://github.com/git/git/archive/v2.17.0.tar.gz

sudo tar -zxvf v2.17.0.tar.gz

sudo yum install curl-devel expat-devel gettext-devel openssl-devel zlib-devel gcc perl-ExtUtils-MakeMaker

cd git-2.17.0

sudo make prefix=/usr/local/git all

sudo make prefix=/usr/local/git install

b. Maven

sudo wget https://dlcdn.apache.org/maven/maven-3/3.9.6/binaries/apache-maven-3.9.6-bin.tar.gz

sudo tar -zxvf apache-maven-3.9.6-bin.tar.gz

c. Java (JDK)

sudo yum install -y java-1.8.0-openjdk-devel.x86_64

d. Spark

sudo wget https://odps-repo.oss-cn-hangzhou.aliyuncs.com/spark/3.1.1-odps0.34.1/spark-3.1.1-odps0.34.1.tar.gz

sudo tar -xzvf spark-3.1.1-odps0.34.1.tar.gz

2). Configure the environment variables by editing the /etc/profile file

vim /etc/profile

Below is an example of the environment variable configuration:

# Set JAVA_HOME to the actual Java installation path.

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.392.b08-4.0.3.al8.x86_64

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

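# Set SPARK_HOME to the directory of the Spark package you extracted.

# This walkthrough uses the Spark 2.4.5 package. For the Spark 3.1.1 package downloaded above, use the commented-out line instead.

# export SPARK_HOME=/root/spark-3.1.1-odps0.34.1

export SPARK_HOME=/root/spark-2.4.5-odps0.33.2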

export PATH=$SPARK_HOME/bin:$PATH

export MAVEN_HOME=/root/apache-maven-3.9.6

export PATH=$MAVEN_HOME/bin:$PATH

export PATH=/usr/local/git/bin/:$PATH

For the variables to take effect, run this command:

source /etc/profile
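Before moving on, it can help to confirm that the variables are actually visible to new processes. The following is a minimal Python sketch, not part of the original setup, that checks the three `*_HOME` variables from the example above and looks for the matching executables on PATH. The function name `check_environment` and the list of tools are illustrative assumptions.

```python
import os
import shutil

# Variables set in the /etc/profile example above.
REQUIRED_VARS = ("JAVA_HOME", "SPARK_HOME", "MAVEN_HOME")
# Executables expected on PATH once /etc/profile has been sourced (assumed list).
REQUIRED_TOOLS = ("java", "mvn", "git", "spark-submit")


def check_environment(env=None):
    """Return a list of problems; an empty list means every check passed."""
    env = os.environ if env is None else env
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{name} points to a missing directory: {value}")
    for tool in REQUIRED_TOOLS:
        # Search the PATH of the environment being checked, not the current one.
        if shutil.which(tool, path=env.get("PATH")) is None:
            problems.append(f"{tool} was not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

In a CI/CD job, a non-empty result could be used to fail the pipeline early, before any build or submit step runs.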

3). Build the Spark sample code (the sample code is available in the MaxCompute-Spark repository: https://github.com/aliyun/MaxCompute-Spark)

cd MaxCompute-Spark/spark-2.x

mvn clean package

4). Edit the Spark configuration file (spark-defaults.conf in the $SPARK_HOME/conf folder)

spark.hadoop.odps.project.name = <YOUR MAXCOMPUTE PROJECT>

spark.hadoop.odps.access.id = <YOUR ACCESSKEY ID>

spark.hadoop.odps.access.key = <YOUR ACCESSKEY SECRET>

spark.hadoop.odps.end.point = <YOUR MAXCOMPUTE ENDPOINT>

# For Spark 2.3.0, set spark.sql.catalogImplementation to odps. For Spark 2.4.5, set spark.sql.catalogImplementation to hive.

spark.sql.catalogImplementation=hive

spark.sql.sources.default=hive

# Retain the following configurations:

spark.hadoop.odps.task.major.version = cupid_v2

spark.hadoop.odps.cupid.container.image.enable = true

spark.hadoop.odps.cupid.container.vm.engine.type = hyper

spark.hadoop.odps.cupid.webproxy.endpoint = http://service.cn.maxcompute.aliyun-inc.com/api

spark.hadoop.odps.moye.trackurl.host = http://jobview.odps.aliyun.com

spark.hadoop.odps.cupid.resources=aaron_ws_1.hadoop-fs-oss-shaded.jar

# Accessing OSS

spark.hadoop.fs.oss.accessKeyId = <YOUR ACCESSKEY ID>

spark.hadoop.fs.oss.accessKeySecret = <YOUR ACCESSKEY SECRET>

spark.hadoop.fs.oss.endpoint = <OSS ENDPOINT>
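Because a forgotten placeholder in this file typically only surfaces when a job is submitted, a small pre-flight check can be useful in a pipeline. The sketch below is an illustration under stated assumptions: it treats each non-comment line as a key followed by `=` or whitespace and then a value, and it only checks the four MaxCompute keys shown above. The names `parse_conf` and `find_problems` are hypothetical.

```python
import re

# The four MaxCompute settings from the configuration above.
REQUIRED_KEYS = (
    "spark.hadoop.odps.project.name",
    "spark.hadoop.odps.access.id",
    "spark.hadoop.odps.access.key",
    "spark.hadoop.odps.end.point",
)

# A key, then "=" or whitespace as the separator, then the value.
_LINE = re.compile(r"^([^\s=]+)\s*(?:=|\s)\s*(.*)$")


def parse_conf(text):
    """Parse configuration text into a dict, skipping blank lines and # comments."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        match = _LINE.match(line)
        if match:
            settings[match.group(1)] = match.group(2).strip()
    return settings


def find_problems(settings):
    """Report required keys that are missing or still hold a <PLACEHOLDER>."""
    problems = []
    for key in REQUIRED_KEYS:
        value = settings.get(key, "")
        if not value:
            problems.append(f"missing: {key}")
        elif value.startswith("<") and value.endswith(">"):
            problems.append(f"placeholder not replaced: {key}")
    return problems
```

A typical use would be `find_problems(parse_conf(open(path).read()))`, where `path` is the location of your configuration file.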

5). Download the sample Python code below and move it into the Spark folder

Sample Python Code:

a. Creating and Inserting into Hive Table: https://github.com/aliyun/MaxCompute-Spark/blob/master/spark-3.x/src/main/python/spark_sql.py

b. Write + Read to OSS: https://github.com/aliyun/MaxCompute-Spark/blob/master/spark-3.x/src/main/python/spark_oss.py

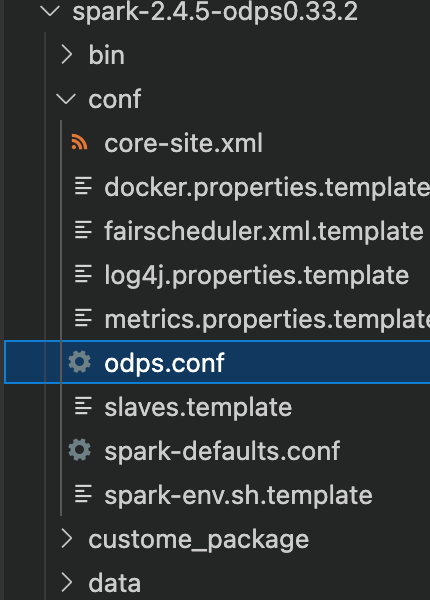

6). Create odps.conf in the conf folder of the Spark directory

Here is an example of the configuration required in odps.conf:

odps.project.name = <MAXCOMPUTE PROJECT NAME>

odps.access.id = <ACCESSKEY ID>

odps.access.key = <ACCESSKEY SECRET>

odps.end.point = <MAXCOMPUTE ENDPOINT>
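For CI/CD use, it is usually preferable not to commit the AccessKey pair to a repository. One possible approach, sketched below, is to generate odps.conf at job time from environment variables supplied by the pipeline's secret store. The environment variable names (`ODPS_PROJECT_NAME`, `ODPS_ACCESS_ID`, `ODPS_ACCESS_KEY`, `ODPS_ENDPOINT`) and both function names are assumptions made for this example, not names defined by MaxCompute.

```python
import os
from pathlib import Path

# Hypothetical environment variable names mapped to the odps.conf keys shown above.
ENV_TO_KEY = {
    "ODPS_PROJECT_NAME": "odps.project.name",
    "ODPS_ACCESS_ID": "odps.access.id",
    "ODPS_ACCESS_KEY": "odps.access.key",
    "ODPS_ENDPOINT": "odps.end.point",
}


def render_odps_conf(env):
    """Build the odps.conf text, raising KeyError if any variable is unset."""
    missing = [name for name in ENV_TO_KEY if not env.get(name)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return "".join(f"{key} = {env[name]}\n" for name, key in ENV_TO_KEY.items())


def write_odps_conf(spark_home, env=None):
    """Write conf/odps.conf under spark_home and return its path."""
    env = os.environ if env is None else env
    target = Path(spark_home) / "conf" / "odps.conf"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(render_odps_conf(env))
    target.chmod(0o600)  # the file holds credentials, so restrict access to the owner
    return target
```

A pipeline step could then call `write_odps_conf(os.environ["SPARK_HOME"])` before submitting a job.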

7). There are two running modes you can use: local mode and cluster mode.

cd $SPARK_HOME

Running on Local Mode (on-premise engine)

1). Run the following command:

./bin/spark-submit --master local[4] /root/MaxCompute-Spark/spark-2.x/src/main/python/spark_sql.py

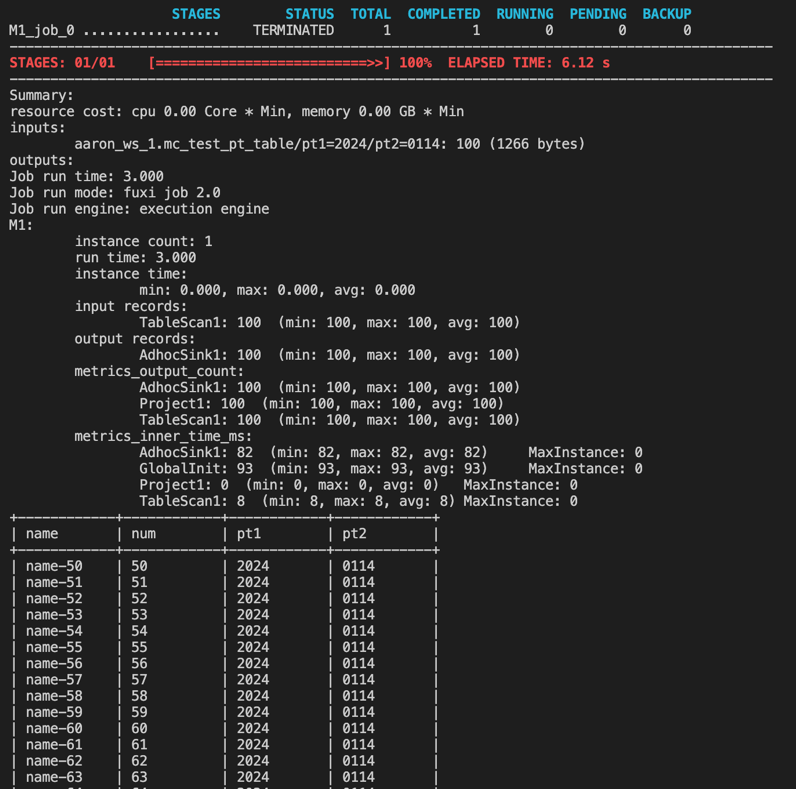

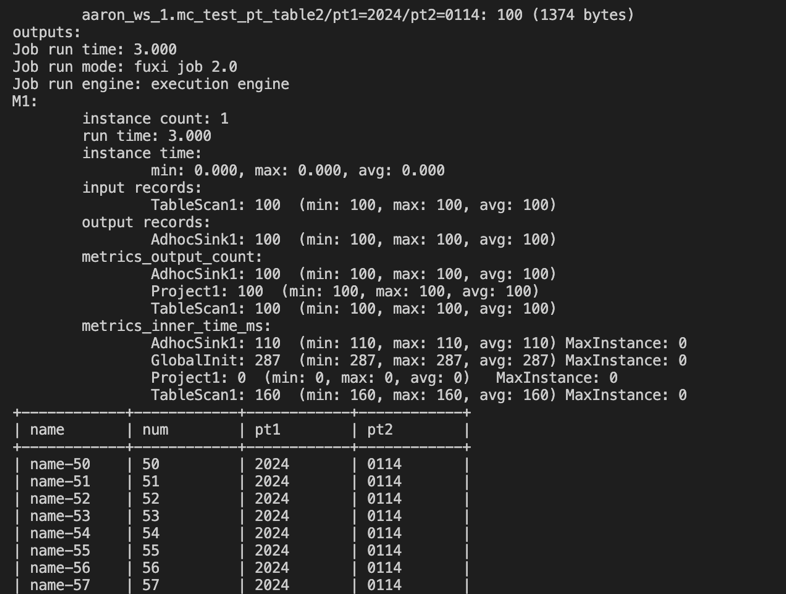

Spark runs successfully in local mode with Spark 2.4. (The picture above shows the query result in the MaxCompute client.)

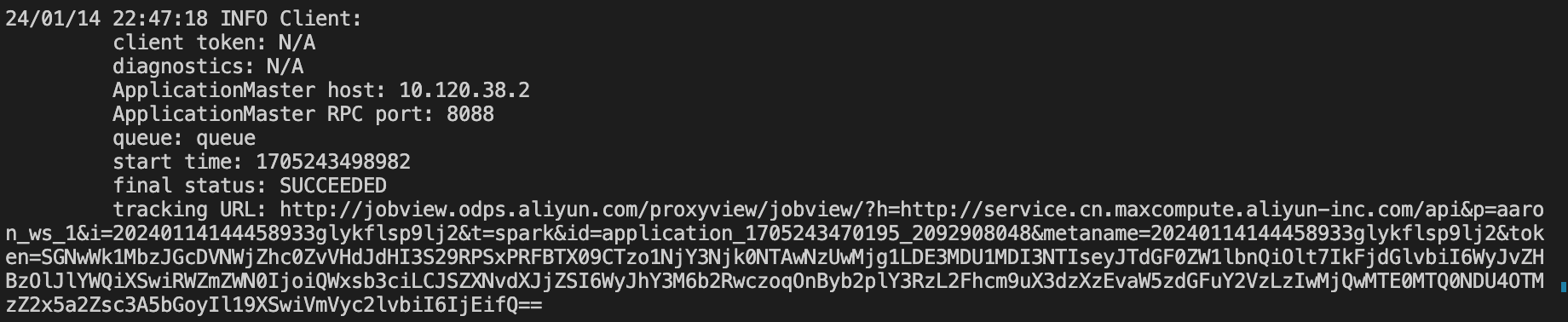

Running on Cluster Mode (ODPS engine)

export HADOOP_CONF_DIR=$SPARK_HOME/conf

bin/spark-submit --master yarn-cluster /root/MaxCompute-Spark/spark-2.x/src/main/python/spark_sql.py
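To use these commands from a CI/CD pipeline, the two modes can be wrapped in a small script that returns the exit code of `spark-submit`. The following is a sketch based on the local and cluster commands above. The function names and the `mode` parameter are illustrative assumptions, and the script passes a Python file directly, without a `--class` option.

```python
import os
import subprocess


def build_submit_command(spark_home, script, mode="local", local_threads=4):
    """Return the spark-submit argument list for the given running mode."""
    if mode == "local":
        master = f"local[{local_threads}]"
    elif mode == "cluster":
        master = "yarn-cluster"
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    submit = os.path.join(spark_home, "bin", "spark-submit")
    return [submit, "--master", master, script]


def run_job(script, mode="local", spark_home=None):
    """Run the job and return the spark-submit exit code."""
    spark_home = spark_home or os.environ["SPARK_HOME"]
    env = dict(os.environ)
    if mode == "cluster":
        # Same effect as: export HADOOP_CONF_DIR=$SPARK_HOME/conf
        env["HADOOP_CONF_DIR"] = os.path.join(spark_home, "conf")
    command = build_submit_command(spark_home, script, mode)
    return subprocess.run(command, cwd=spark_home, env=env).returncode
```

A pipeline step might call `sys.exit(run_job("/root/MaxCompute-Spark/spark-2.x/src/main/python/spark_sql.py", mode="cluster"))` so that a failed job fails the build.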
