Summary: At the Alibaba Cloud MaxCompute session of the 2017 Computing Conference in Hangzhou, Li Ruibo, Senior Technical Expert at Alibaba, explained how MaxCompute supports and integrates with open-source systems, and described the problems and challenges Alibaba's technical team encountered in embracing open-source ecosystems.

This article is based on the video of the talk and the accompanying slides.

At the 2016 Computing Conference, the MaxCompute team had already shared how MaxCompute supports and integrates with open-source systems, in a talk titled "MaxCompute's Journey to Open Ecosystems". In Alibaba's early days, Hadoop and a self-developed big data platform coexisted for a long time, so there was much debate over the choice between MaxCompute and open-source ecosystems. Throughout, however, the computing service team has kept an open mind about open source. Last year's talk was about supporting open source. This year, our goal is to build MaxCompute into a one-stop data solution. To reflect that goal, we have replaced the word "support" with "integration", and we have put a lot of effort into moving from one to the other.

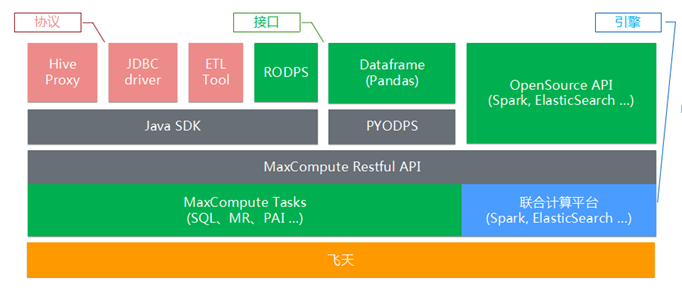

The following figure shows, from the perspective of the technology stack, the steps we have taken, starting from openness at the user interface level. First, we connected with open-source and de facto standard tools, so that users could reach the MaxCompute system more easily. Next, we made the programming interfaces compatible, which protects users' existing investment and also smoothed the "Moon Landing" migration within Alibaba Group. This year, our most recent effort has been integration with open-source engines.

At the open-source protocol level, our focus was on the JDBC and Hive protocols. The task was to allow existing JDBC-compatible tools to integrate directly with the MaxCompute system. As shown in the following figure on the right, through the JDBC driver, data analysis tools such as Zeppelin, Workbench, Pentaho, and Talend can be integrated directly with MaxCompute. The driver also exposes the standard JDBC programming interface, so users can reuse the code they wrote for databases, which lowers programming cost. At the Hive Thrift level, a Hive Thrift-compatible interface supports almost all connectivity tools in the Hive ecosystem, including the Hive ODBC driver and the Hive command line tools. ETL tools are also supported, so data can be imported from and exported to multiple data sources.

By supporting these protocols and tools, MaxCompute removed the first hurdle users face when connecting to the system: they can bypass Alibaba's in-house toolchain and integrate with MaxCompute directly.

The tool integration problem was solved, but another problem arose. A year or two ago, users had to write MaxCompute's own SQL dialect in these tools. That dialect differed to varying degrees from Hive SQL, standard SQL, and Oracle SQL, which made existing code difficult to migrate. Last year, alongside the launch of MaxCompute 2.0, the MaxCompute SQL team put a lot of effort into a series of features to ensure full Hive compatibility. This protects users' investment in code and lets them migrate to MaxCompute more seamlessly. MaxCompute SQL now provides a fully Hive-compatible type system and fully Hive-compatible built-in UDFs, and even UDFs that users developed for Hive can be moved to it directly. External tables are also supported, which means that data can reside not only in MaxCompute, but also in OSS or anywhere else outside MaxCompute. The MaxCompute SQL syntax was also refined in many respects to make it fully compatible with the Hive syntax.

It is estimated that over 80% of users work with MaxCompute through the SQL interface. However, many traditional data analysts work in R, so MaxCompute also comes with RODPS, a plug-in for R. Once installed, the plug-in acts as a bridge between R and MaxCompute, through which MaxCompute tables can be converted directly into R data frames. These data frames can then be used for analysis and report presentation with the R ecosystem, so users no longer need to export the data and import it into R by hand.

Having covered R, let us turn to Python. The Python community currently has strong momentum in both general programming and big data analysis. For instance, the Pandas DataFrame API is very popular among data analysts. On top of its Python SDK, MaxCompute provides a self-developed DataFrame that is highly compatible with Pandas DataFrame. The difference is where the work runs: with RODPS, data is imported into R and analyzed on a single machine, whereas this DataFrame is executed inside MaxCompute. A MaxCompute table can be wrapped in a DataFrame, and GroupBy or Filter computations on that DataFrame all run on the MaxCompute server side. This gives existing community-style code the ability to work at big data scale, and we hope it becomes a way to bring MaxCompute's computing power to the community. Because the Jupyter Notebook toolchain in the Python community is very useful, we also integrated many visualization models into DataFrame for data presentation in notebooks.
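To make the idea of server-side execution concrete, here is a minimal sketch of a lazy DataFrame that only records Filter and GroupBy operations and compiles them to a SQL string, which a server could then run. The class `LazyFrame`, its methods, and the table and column names are hypothetical and exist only for illustration; this is not the MaxCompute Python SDK or its actual implementation.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class LazyFrame:
    """Toy stand-in for a server-side DataFrame: it records operations
    and never touches data on the client (hypothetical, for illustration)."""
    table: str
    filters: Tuple[str, ...] = ()
    group_key: Optional[str] = None
    aggregates: Tuple[str, ...] = ()

    def filter(self, condition: str) -> "LazyFrame":
        # Record the predicate; nothing is evaluated locally.
        return replace(self, filters=self.filters + (condition,))

    def groupby(self, key: str) -> "LazyFrame":
        return replace(self, group_key=key)

    def agg(self, **named_exprs: str) -> "LazyFrame":
        # Each keyword becomes "<expression> AS <name>" in the SELECT list.
        new = tuple(f"{expr} AS {name}" for name, expr in named_exprs.items())
        return replace(self, aggregates=self.aggregates + new)

    def to_sql(self) -> str:
        # Compile the recorded operations into one query for the server.
        columns = ([self.group_key] if self.group_key else []) + list(self.aggregates)
        select_list = ", ".join(columns) if columns else "*"
        sql = f"SELECT {select_list} FROM {self.table}"
        if self.filters:
            sql += " WHERE " + " AND ".join(f"({c})" for c in self.filters)
        if self.group_key:
            sql += f" GROUP BY {self.group_key}"
        return sql


orders = LazyFrame("orders")  # hypothetical table name
query = orders.filter("amount > 100").groupby("region").agg(
    total="SUM(amount)", n="COUNT(*)"
)
print(query.to_sql())
```

The client only builds a description of the computation; in a design like this, the data never leaves the server, which is the contrast with the single-machine R workflow described above.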

With these tasks completed, support for protocols, tools, and programming interfaces had improved considerably. However, many problems remained, such as MaxCompute's support for the Spark API. Many open-source computing engines are available, each intended for a specific scenario. Their advantage is that users can set up a scenario, get started quickly, and find plenty of learning material early on. But when users run into problems of scale or resource utilization efficiency, they start to consider whether the job can be migrated to MaxCompute, reusing the existing system, and hosted by Alibaba Cloud. This is exactly the original intention of our "Moon Landing" program. We all wanted to achieve what the program was intended for, because data produces huge power when it is put together, but we also hoped the process could be easier.

Previously, the computing service team made many attempts at compatibility with open-source APIs. They later realized that adapting a self-developed engine to a wide variety of APIs was impracticable, so they asked instead how to better embrace open-source ecosystems while keeping the advantages of the self-developed platform. The main task for the computing service team this year is to run open-source computing engines directly on the MaxCompute platform while maintaining unified data storage, resource scheduling, and security control. In other words, we are building an integrated computing platform that improves MaxCompute's compatibility by running open-source systems on it. Our attitude to open-source systems has thus evolved from "support" to "integration", and we hope to provide a one-stop big data solution with MaxCompute.

Next, we will share with you more details about the integrated computing platform. To achieve the three unifications mentioned earlier, we need to overcome the following four challenges.

First, we need to integrate resource scheduling, because an open-source deployment usually has a Spark cluster, a Flink cluster, or other independent clusters. Generally, user access control in open-source systems is relatively weak, so a cluster is often split into several to isolate permissions. Because MaxCompute is a unified SaaS service, a lot of work is needed on user authentication, permission control, and isolation at run time. Finally, once all the data is put together, the Spark API and other APIs must be able to access the data already in MaxCompute, which is what we call data integration. Next, we explain how the integrated computing platform is implemented to address these challenges.

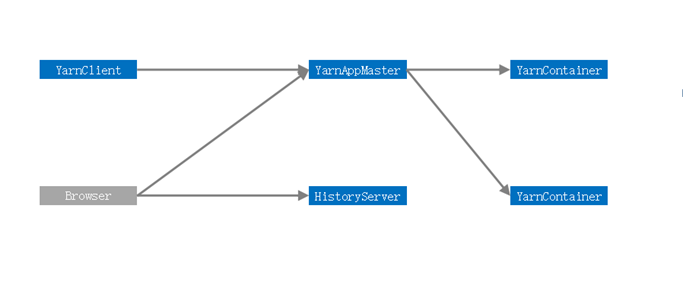

The following figure is a simplified schematic of a standard Spark on YARN job; some roles are omitted for simplicity. A client submits a Spark job, which has its own Master. The Master applies to the Resource Manager for resources, and the Resource Manager in turn notifies the Node Managers to start Spark Containers. The Spark job thus becomes a structure of one Master and several Containers. In addition, a browser can access the App Master's WebUI directly while the job runs, or the History Server after the job completes, to check the job's running status. This is the standard running mode of Spark.
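The request flow described above can be sketched as a small simulation. This is a toy model under simplifying assumptions, not YARN's real API: the classes `NodeManager`, `ResourceManager`, and `AppMaster` only mirror the role names in the text, slot counts stand in for real resources, and the fact that the Master itself also occupies a container is ignored.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NodeManager:
    """One worker node with a fixed number of free container slots."""
    name: str
    free_slots: int
    containers: List[str] = field(default_factory=list)

    def start_container(self, app_id: str) -> str:
        # Launch a container for the application on this node.
        container_id = f"{app_id}@{self.name}#{len(self.containers)}"
        self.containers.append(container_id)
        self.free_slots -= 1
        return container_id


class ResourceManager:
    """Grants containers by asking node managers that still have free slots."""

    def __init__(self, nodes: List[NodeManager]) -> None:
        self.nodes = nodes

    def allocate(self, app_id: str, count: int) -> List[str]:
        granted: List[str] = []
        for node in self.nodes:
            while node.free_slots > 0 and len(granted) < count:
                granted.append(node.start_container(app_id))
        return granted  # may be shorter than requested if the cluster is full


class AppMaster:
    """The job's own master: it requests resources on behalf of the job."""

    def __init__(self, app_id: str, rm: ResourceManager) -> None:
        self.app_id = app_id
        self.rm = rm
        self.executors: List[str] = []

    def run(self, executors_wanted: int) -> List[str]:
        self.executors = self.rm.allocate(self.app_id, executors_wanted)
        return self.executors


def submit(rm: ResourceManager, app_id: str, executors: int) -> AppMaster:
    # Client side: submitting a job creates its master, which then asks for containers.
    master = AppMaster(app_id, rm)
    master.run(executors)
    return master


cluster = ResourceManager([NodeManager("nm1", 2), NodeManager("nm2", 2)])
job = submit(cluster, "job-1", executors=3)
print(job.executors)
```

The point of the sketch is the division of labor: the client talks only to the job's master, and the master never starts containers itself but asks the resource manager, which is the seam where a different scheduler could later be substituted.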

Integrated computing platform architecture

The integrated computing platform packages Spark or another open-source computing engine into a Task inside MaxCompute, called CupidTask here. The CupidTask is then submitted through the unified RESTful API of MaxCompute. User verification and authentication are carried out in the unified access layer, so users without permission cannot access the Spark resources. In addition, the task assigns a managing role to each Spark role; these are called CupidMaster and CupidWorker. Resource scheduling is handled by CupidMaster, which takes over the resource management function of the App Master, so that resources are scheduled uniformly on the Apsara platform.
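As a rough sketch of this submission path, the following models a unified access layer that checks a user's permission before an engine job, wrapped as a task, is accepted and its managing roles are planned. Only the names CupidTask, CupidMaster, and CupidWorker come from the text; the fields of `CupidTask`, the `UnifiedAccessLayer` class, the grant table, and `plan_roles` are assumptions made for illustration and do not describe the real service.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class CupidTask:
    """An open-source engine job wrapped as a platform task (fields are hypothetical)."""
    user: str
    project: str
    engine: str
    workers: int


class PermissionDenied(Exception):
    """Raised when a user submits to a project they have no grant for."""


def plan_roles(task: CupidTask) -> List[str]:
    # One managing master plus one managing worker per requested engine worker.
    return ["CupidMaster"] + [f"CupidWorker-{i}" for i in range(task.workers)]


class UnifiedAccessLayer:
    """Single entry point: every task is authorized before anything is scheduled."""

    def __init__(self, grants: Dict[str, Set[str]]) -> None:
        self._grants = grants  # user -> projects the user may run jobs in
        self.accepted: List[CupidTask] = []

    def submit(self, task: CupidTask) -> List[str]:
        if task.project not in self._grants.get(task.user, set()):
            raise PermissionDenied(f"{task.user} may not run jobs in {task.project}")
        self.accepted.append(task)
        return plan_roles(task)


gateway = UnifiedAccessLayer({"alice": {"sales"}})
print(gateway.submit(CupidTask("alice", "sales", "spark", workers=2)))

try:
    gateway.submit(CupidTask("bob", "sales", "spark", workers=1))
except PermissionDenied as err:
    print("rejected:", err)
```

Because the check sits in front of scheduling, an unauthorized job never reaches the stage where resources would be allocated for it.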

Because open-source code can be written quite freely, and services that run side by side must still protect each user's data, open-source code has to run in a properly isolated environment. Users cannot see anything unrelated to their own data, and open-source code can only access data that the authorization and authentication systems permit. User data is synchronized to the underlying containers through CupidWorker. In this way, a standard Spark job is turned into a MaxCompute job, which achieves a degree of mixed running: you can process the same data with either MaxCompute SQL or Spark.

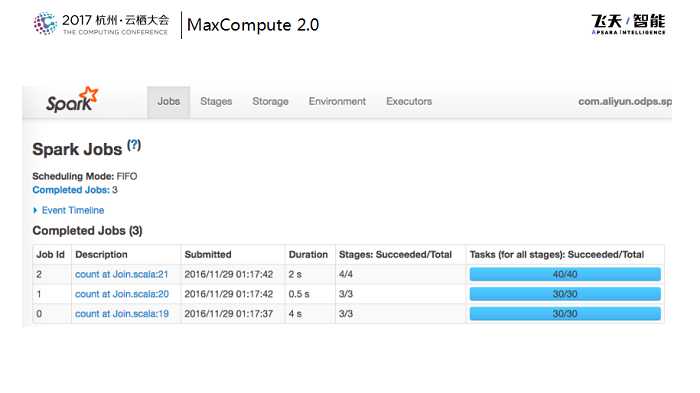

The following figure shows the actual running result, which at run time is essentially the same as that of the native community version.

Support at the protocol and tool levels for integrating MaxCompute with open-source systems was implemented with the SDK on top of the MaxCompute RESTful API service. Components inside MaxCompute also support open-source systems, for example by using MaxCompute's own SQL to achieve Hive compatibility. Beyond that, the integrated computing platform brings open-source systems onto the MaxCompute platform. A version of Apsara Stack that supports Spark is currently available. Through the integrated computing platform, Spark and other systems expose their open-source APIs at the periphery, while the entire set of resources is built on the Apsara system. We hope this integrated architecture gives users more flexible choices: they can move existing data and applications to the platform and keep running their original Spark or machine learning jobs. If you encounter any problems, you can copy the same data to MaxCompute or compute on it directly with the PAI engine, so that the data generates greater value.

A One-stop Big Data Solution with Open-source Systems

In the future, we also hope to gradually develop the capabilities of the integrated computing platform, so that we can easily apply the resources of the open-source community to the MaxCompute system.
