By Meng Shuo (Qianshu)

This article summarizes a talk by Meng Shuo, product manager of MaxCompute, on integrating SaaS-based cloud data warehouses with real-time search. It has three parts: Overview and Value (Why), Scenarios (What), and Best Practices (How).

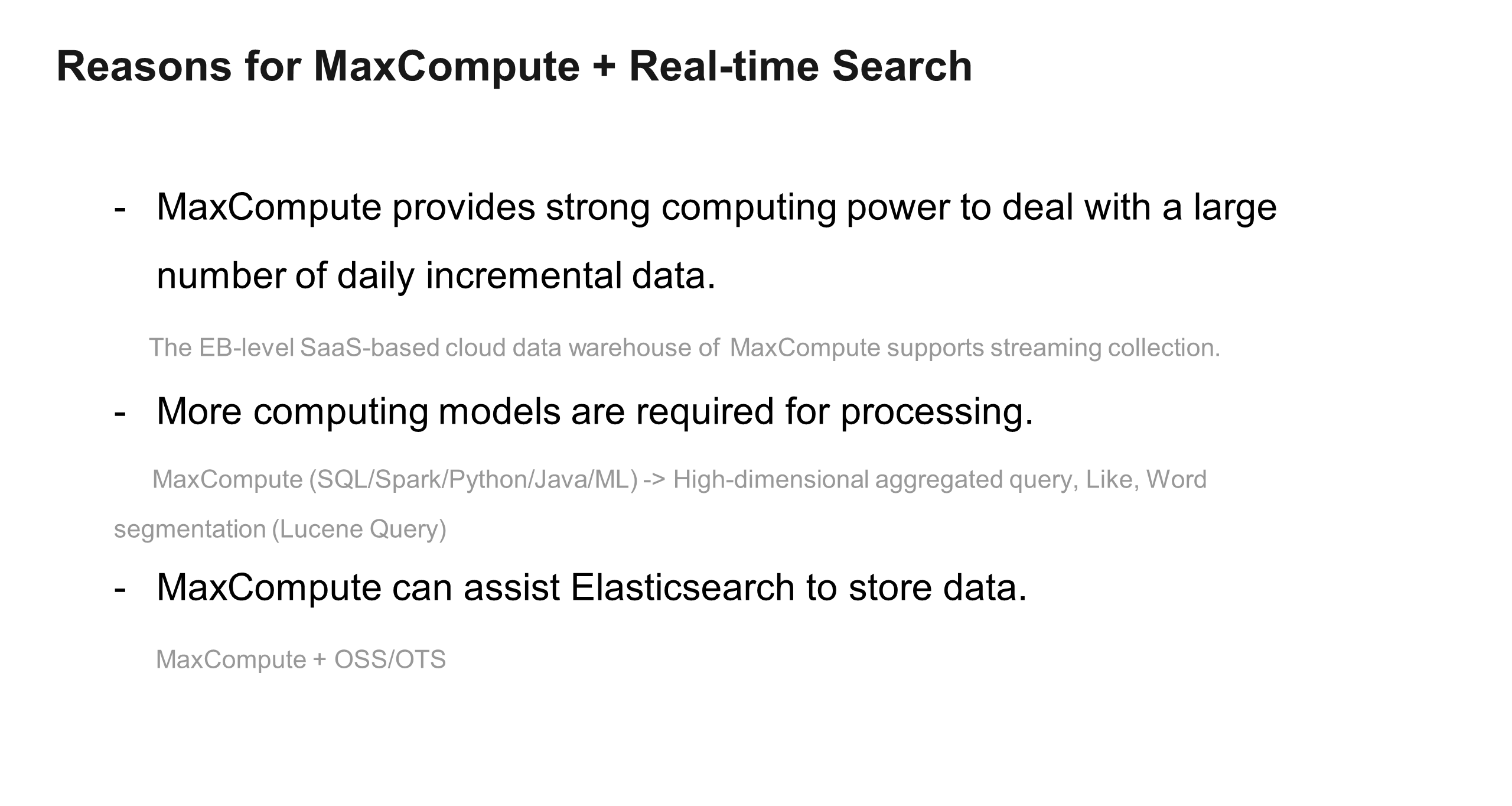

MaxCompute is an enterprise-level, SaaS-based cloud data warehouse. At first glance, MaxCompute may look like an engine for offline data processing, in other words, a traditional data warehouse. However, it is more than that. MaxCompute is a data processing platform that handles offline workloads of the kind traditionally served by databases and data warehouses, and it also supports near real-time data collection and query. By integrating MaxCompute with the MC-Hologres component, real-time data warehousing is also available.

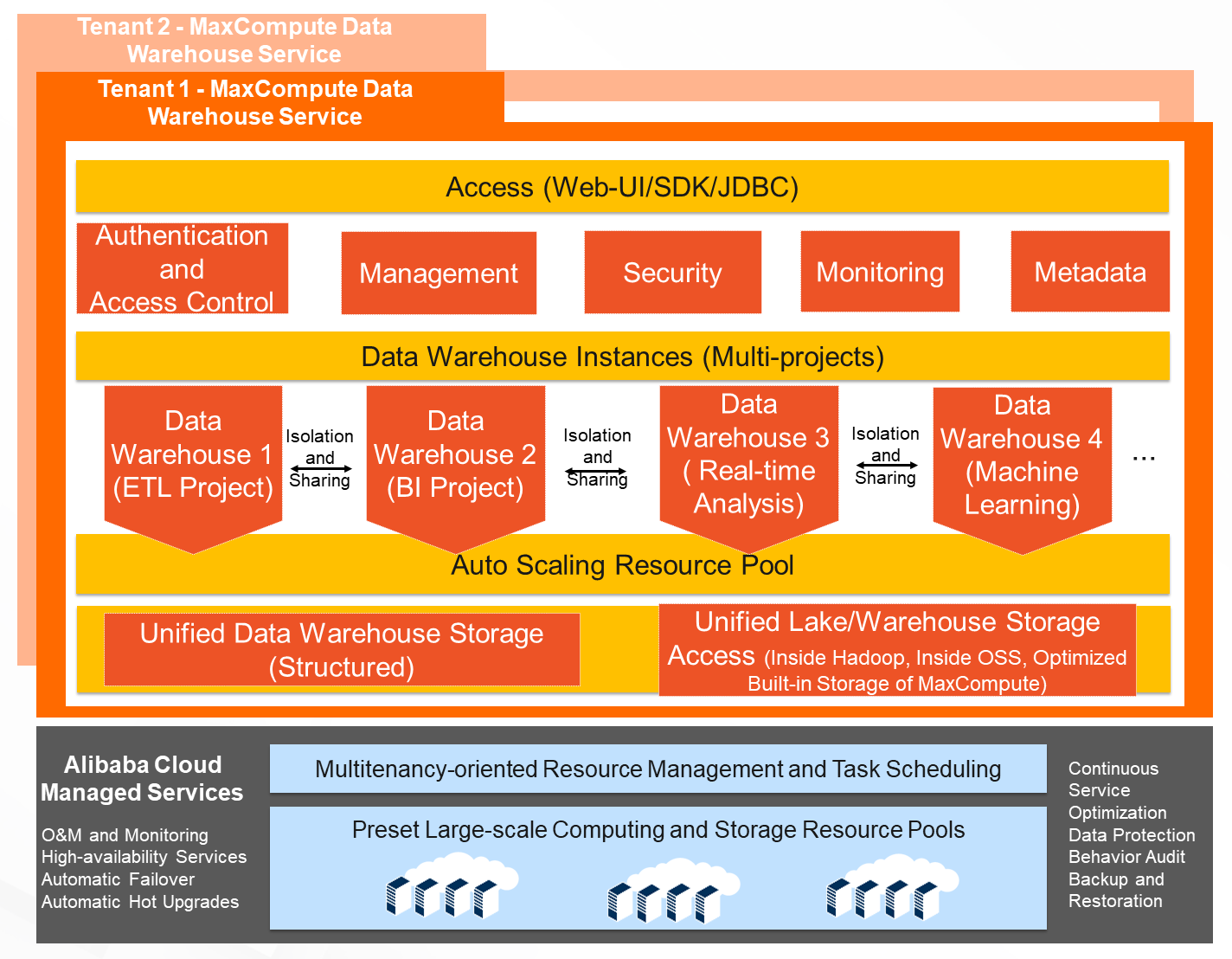

MaxCompute is a fully managed service provided by Alibaba Cloud. It relies on Alibaba Cloud's infrastructure to provide high-quality, easy-to-access services. The following figure shows its architecture.

MaxCompute can be applied in a wide range of scenarios: anything a traditional data warehouse can do, MaxCompute can do as well. MaxCompute is appropriate for the following scenarios:

Backed by this architecture, MaxCompute offers the following features.

(1) An out-of-the-box online service accessed through APIs;

(2) Massive, pre-provisioned cluster resources that are nearly unlimited and are used and paid for on demand;

(3) Minimal O&M investment, with no platform O&M required.

(1) Storage and computing resources scale independently, and data volumes from terabytes to exabytes are supported, so enterprises can store all their data in MaxCompute for linked analysis and eliminate data silos;

(2) Serverless resources are allocated on demand in real time and scale automatically as business demand changes;

(3) A single job can obtain thousands of cores within seconds as needed.

(1) Integrates with data lakes such as Object Storage Service (OSS) to process unstructured or open-format data;

(2) Supports data lake access through external table mapping or direct access from Spark;

(3) Performs data lake analysis and combined data lake and data warehouse analysis through the same user-friendly set of data warehouse services and interfaces.

(1) Seamless integration with Alibaba Cloud Machine Learning Platform for AI (PAI), providing powerful machine learning-based processing capabilities;

(2) User-friendly Spark-ML is available for intelligent analysis;

(3) Provides SQL ML to train machine learning models and perform predictive data analysis with standard SQL statements;

(4) Supports Mars, a third-party Python-based library, for machine learning.

(1) A streaming tunnel is available for writing streaming data into the data warehouse for analysis;

(2) Deep integration with major streaming data services on the cloud makes it easy to process data from various sources;

(3) High-performance, elastic concurrent queries that return results within seconds meet the needs of near real-time analysis.

(1) A full set of Spark features is provided with the built-in Apache Spark engine;

(2) Integrates Spark with the computing resources, data, and permission system of MaxCompute.

(1) Offline computing is supported through MapReduce (MR), DAG, SQL, ML, and graph computing models;

(2) Real-time computing, such as stream processing, in-memory computing, and iterative computing, is supported;

(3) Analysis capabilities cover general relational big data, machine learning, unstructured data processing, and graph computing.

(1) Unified tenant-level metadata is enabled for enterprises to easily access complete enterprise data catalogs;

(2) To reach a broader range of data sources, the data warehouse establishes connections to external data sources instead of copying their data in, that is, "to connect" rather than "to collect."

(1) Service-Level Agreement (SLA): 99.9% service availability can be ensured;

(2) Self-service and automated O&M are supported;

(3) Complete fault tolerance capabilities in terms of software, hardware, network, and manual operation are provided.
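To make the 99.9% availability figure concrete, the sketch below converts an availability percentage into the maximum downtime it permits. It is plain arithmetic on the stated percentage and assumes a 365-day year and a 30-day month; the official SLA terms may define the measurement period differently.

```python
from typing import Tuple

# Assumptions: a 365-day year and a 30-day month. The official SLA may
# measure availability over a different period.
HOURS_PER_YEAR = 365 * 24            # 8,760 hours
MINUTES_PER_MONTH = 30 * 24 * 60     # 43,200 minutes


def allowed_downtime(availability_pct: float) -> Tuple[float, float]:
    """Return (hours per year, minutes per 30-day month) of permitted downtime."""
    unavailable_fraction = 1 - availability_pct / 100
    return (unavailable_fraction * HOURS_PER_YEAR,
            unavailable_fraction * MINUTES_PER_MONTH)


if __name__ == "__main__":
    hours, minutes = allowed_downtime(99.9)
    print(f"99.9% availability allows ~{hours:.2f} h/year "
          f"or ~{minutes:.1f} min/month of downtime")
```

For 99.9%, this works out to roughly 8.76 hours per year, or about 43 minutes per 30-day month.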

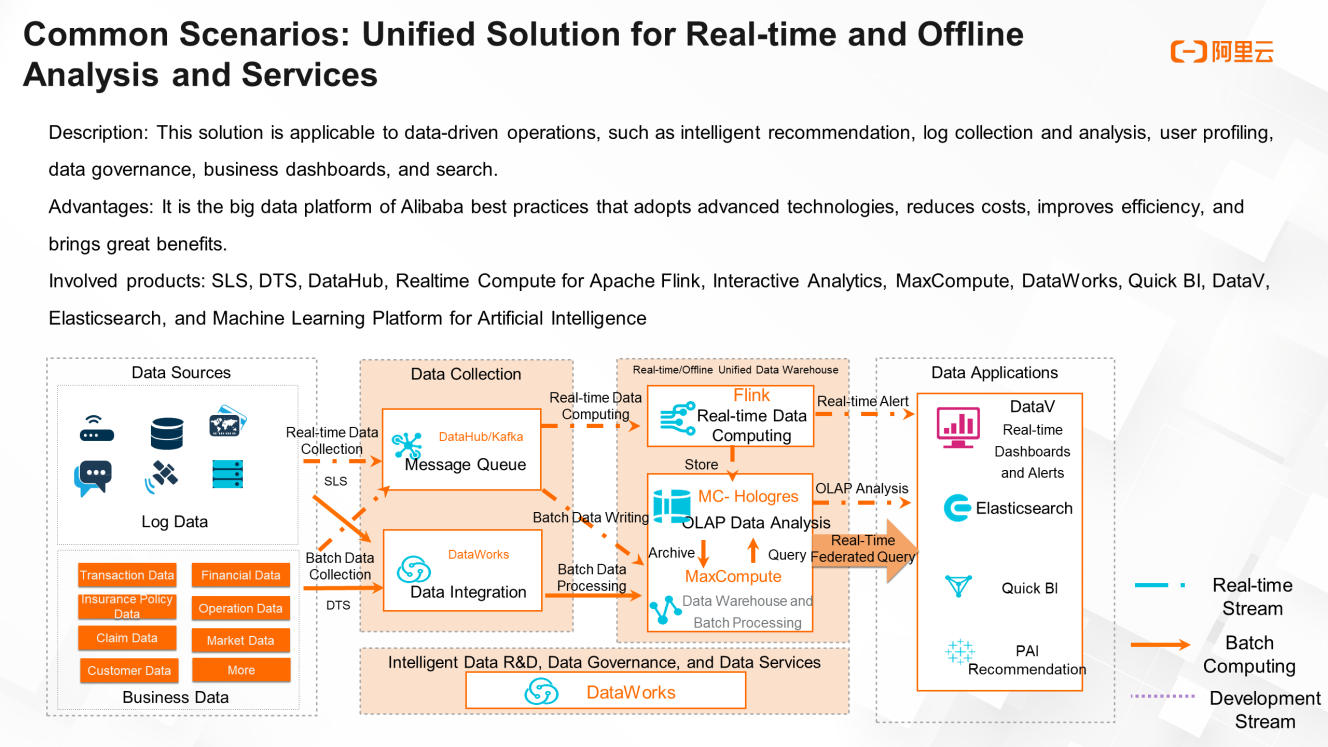

Generally, completing a big data project requires multiple components, both offline and real-time. The following figure shows a common scenario: a solution that integrates real-time processing, offline processing, analysis, and serving. It is applicable to data-driven operations such as intelligent recommendation, log collection and analysis, user profiling, data governance, business report generation and display, and data query.

The solution is a big data platform built on Alibaba's best practices, offering cutting-edge technology, lower costs, higher efficiency, and high value-added business revenue. It also involves several other services, including Log Service, Data Transmission Service (DTS), DataHub, and Realtime Compute for Apache Flink, as shown below.

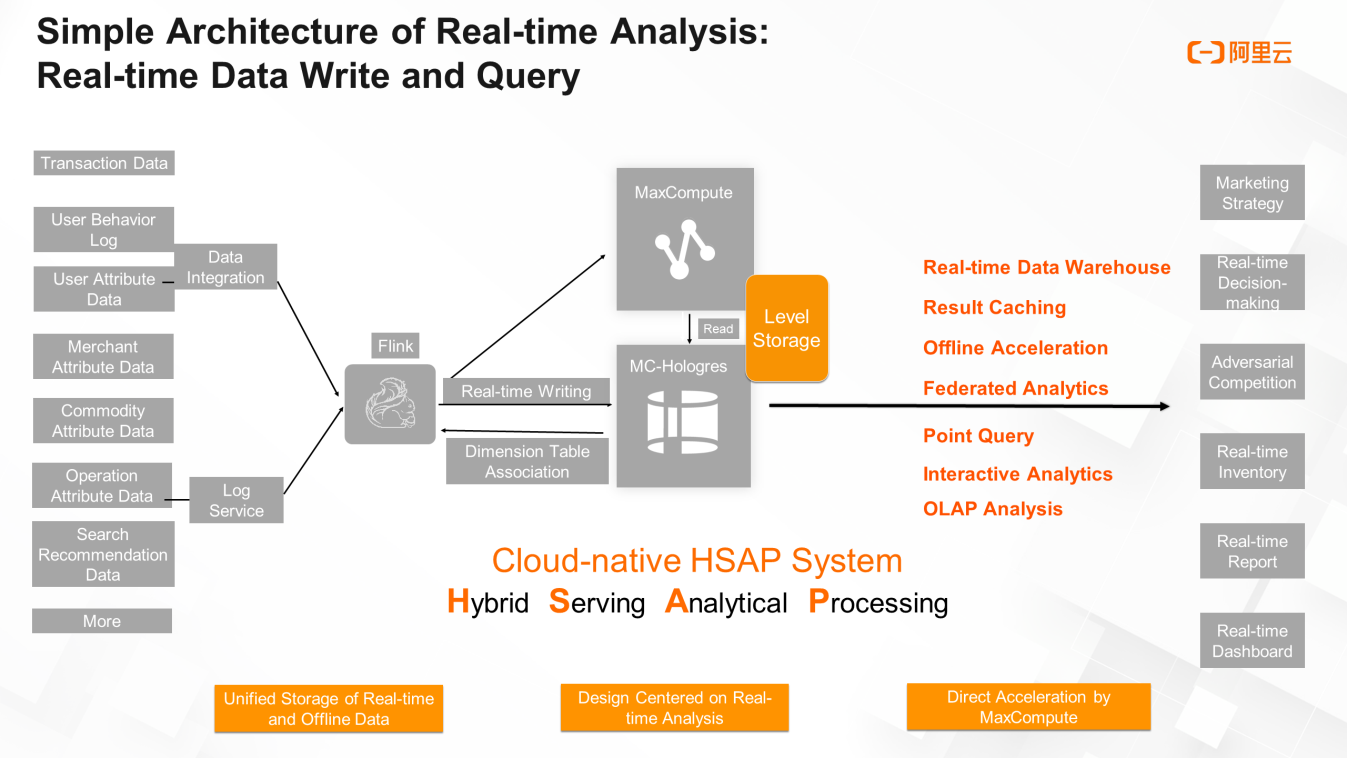

The following figure shows a simple real-time analysis architecture formed by integrating MaxCompute and Hologres, known as a cloud-native HSAP (hybrid serving and analytical processing) system. This architecture supports real-time data writes and queries. Unlike other online analytical processing (OLAP) systems, Hologres and MaxCompute are integrated under this architecture and can share storage: Hologres can read MaxCompute data directly without copying it, which greatly reduces storage costs. Together, the two components also support offline acceleration, federated analytics, and interactive analysis.

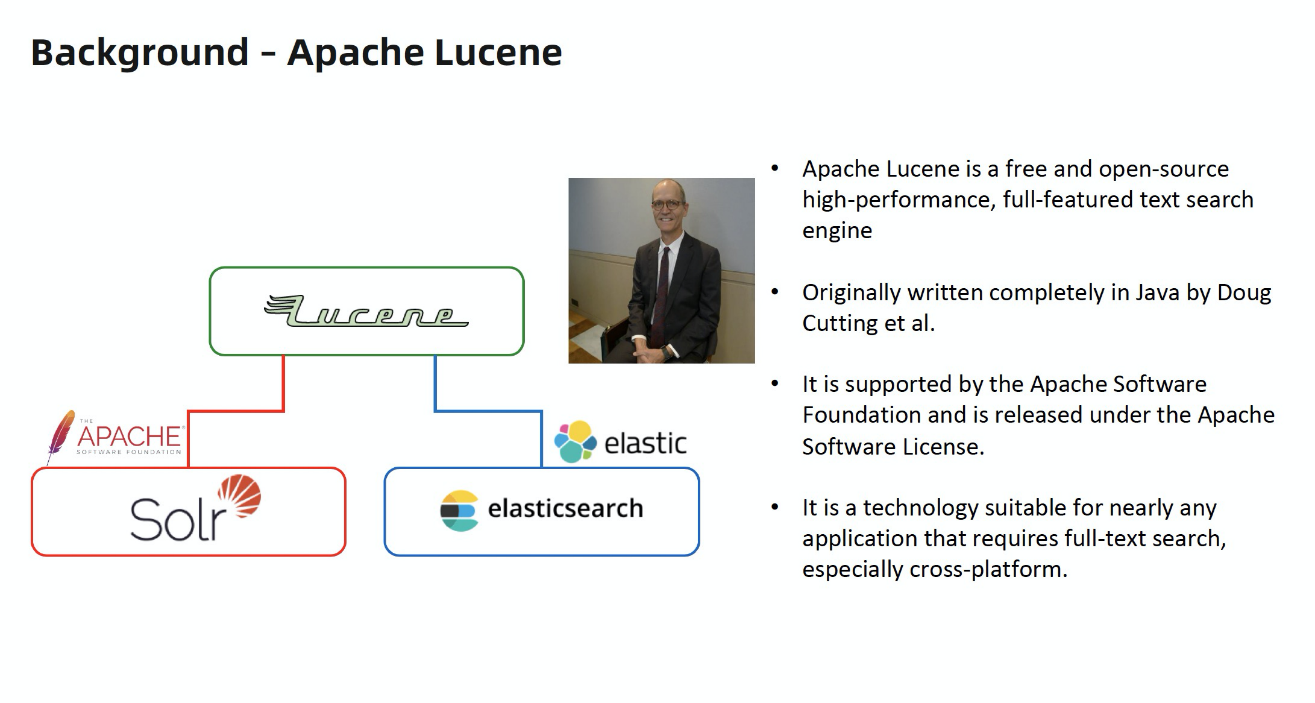

Elasticsearch is an open-source, distributed, RESTful search and data analysis engine built on the open-source Apache Lucene library. It wraps Lucene in a powerful, easy-to-use solution that provides real-time search and supports a wide range of scenarios. For example, when you search for available cars nearby in the Didi car-hailing app, Elasticsearch powers the search in the backend. Similarly, GitHub uses Elasticsearch to let users search site content by keyword. In general, Elasticsearch suits any website or mobile app that needs in-site search.

So why are search engines needed, and why is real-time search so popular now? Previously, data analysis meant writing programs, but not every data analyst can write programs, and even learning a query language such as SQL takes time and effort. With a search engine, users only need to filter by conditions to get the information they want, which greatly lowers the learning cost.

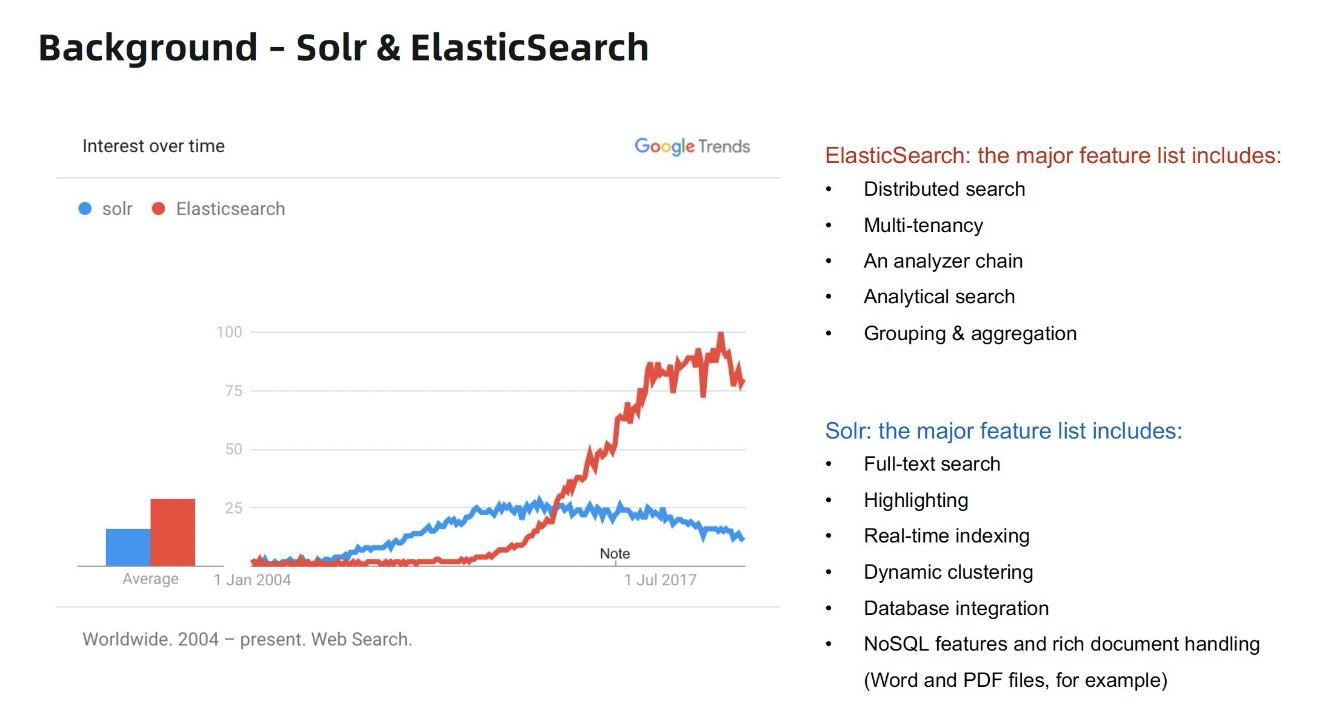

Today, the two mainstream search engines are Apache Solr and Elasticsearch, both of which grew out of Apache Lucene. Lucene is a full-featured, open-source search engine library that is widely regarded as highly advanced and efficient. However, it is only a library, and a relatively complex one; making full use of it requires further extension and development. Apache Solr and Elasticsearch emerged to fill this gap.

The following figure shows the trends for the two search engines in Google Trends. In recent years, Elasticsearch has become more popular than Apache Solr for real-time search, because it performs better in that scenario. Note, however, that Apache Solr can be faster when searching existing, already-indexed data.

Elastic, the company behind Elasticsearch, has had a long-term strategic partnership with Alibaba Cloud since 2017. In the years to come, Alibaba Cloud Elasticsearch will focus on scenarios such as data analysis and data query.

Moreover, we will keep Alibaba Cloud Elasticsearch compatible with open-source Elasticsearch and with commercial features such as security, machine learning, graph, and application performance monitoring (APM). On top of Elasticsearch, Alibaba Cloud will provide enterprise-level access control, security monitoring and alerting, and automatic reporting.

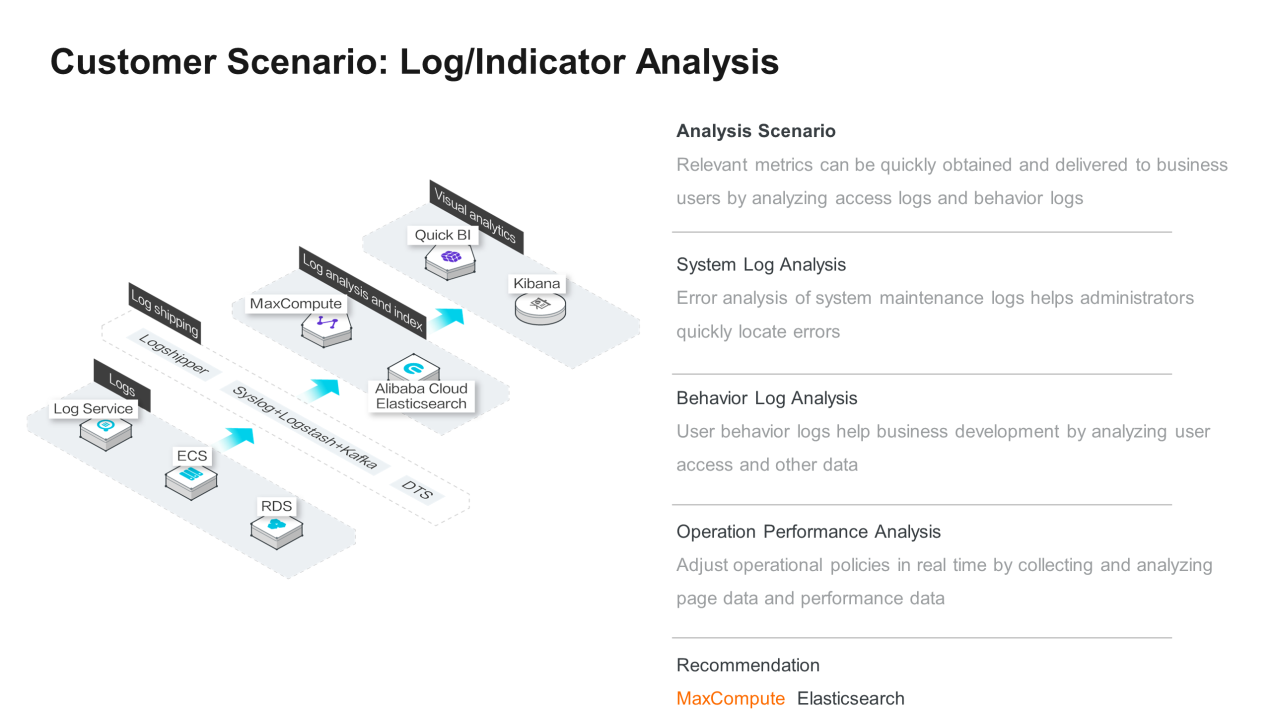

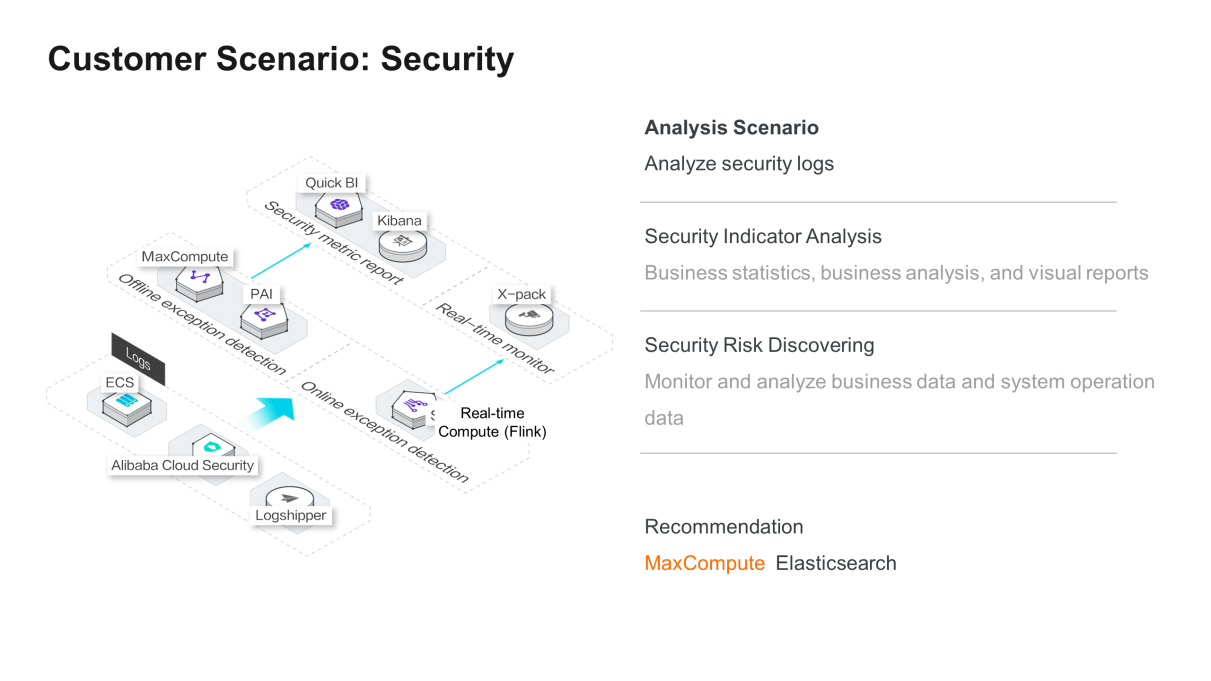

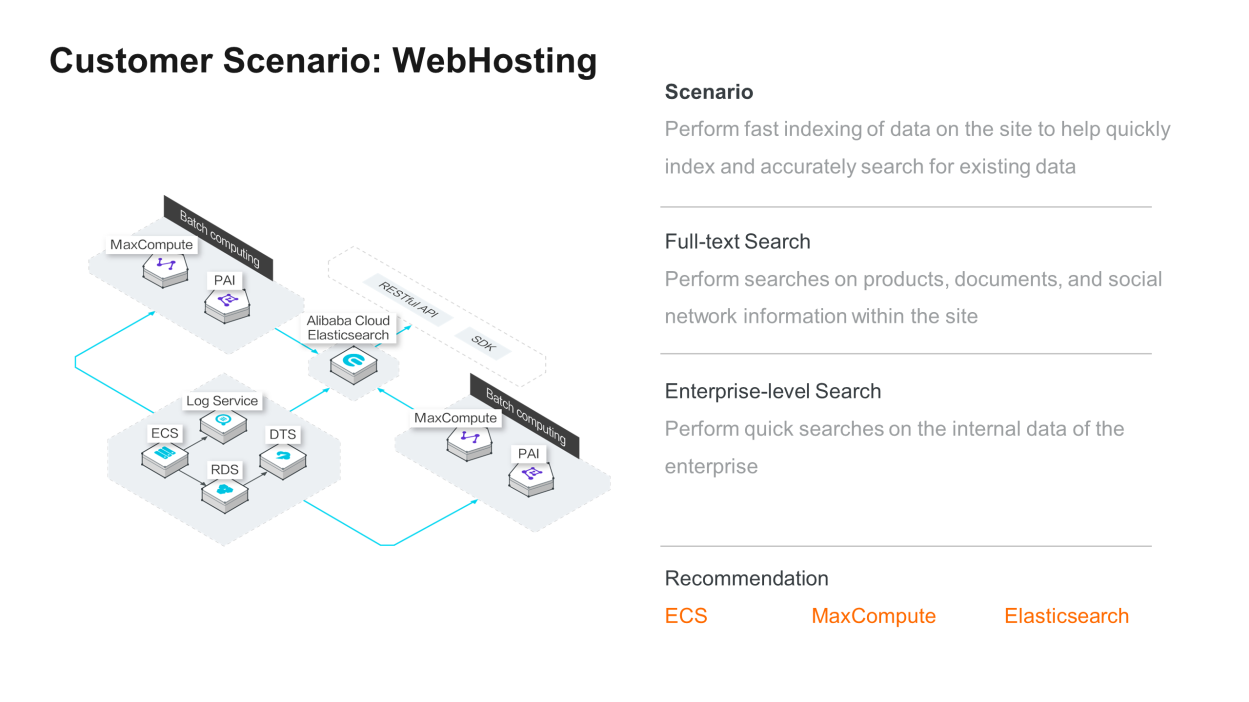

At present, real-time search applies to the following three scenarios:

The application logic of the three scenarios is shown in the following figures.

The best practices cover data integration and data monitoring. Data integration requires exchanging data between MaxCompute and Elasticsearch.

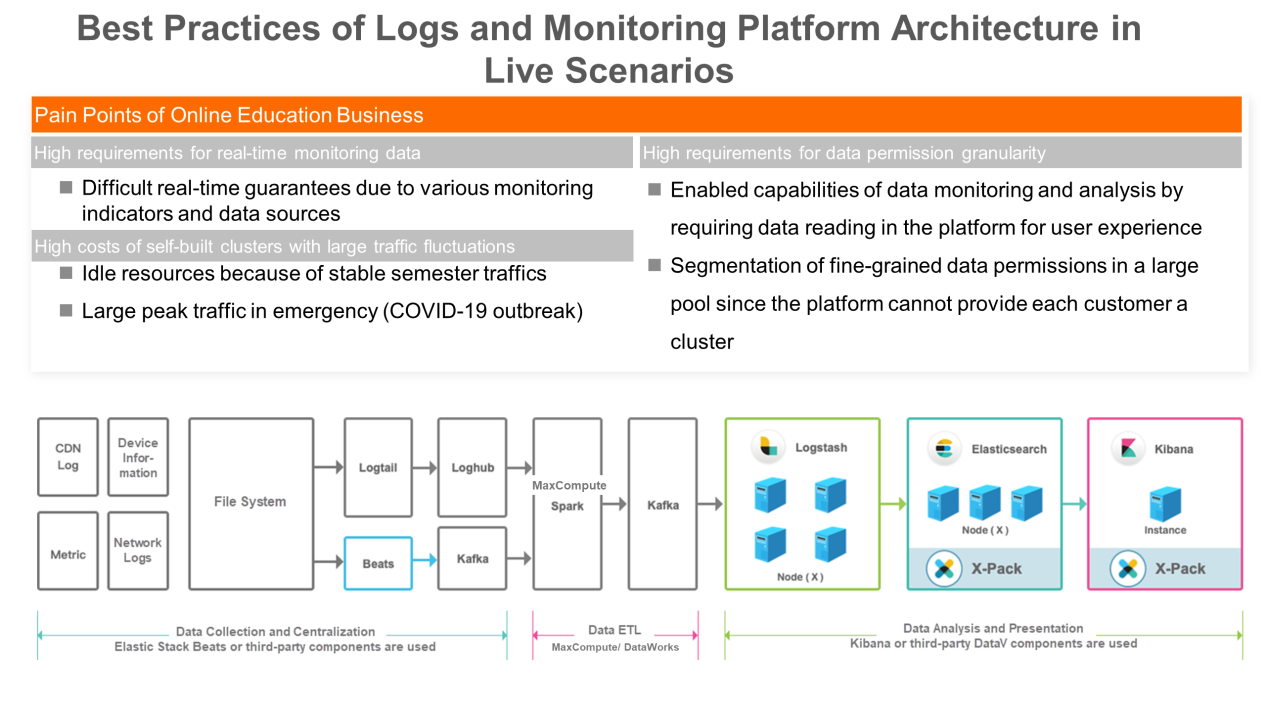

The following figure shows an online education case, in which the logs generated by the enterprise's computer-based platforms and servers must be monitored. MaxCompute pre-analyzes the data and then hands it over to Elasticsearch for monitoring.

However, this model has three obstacles:

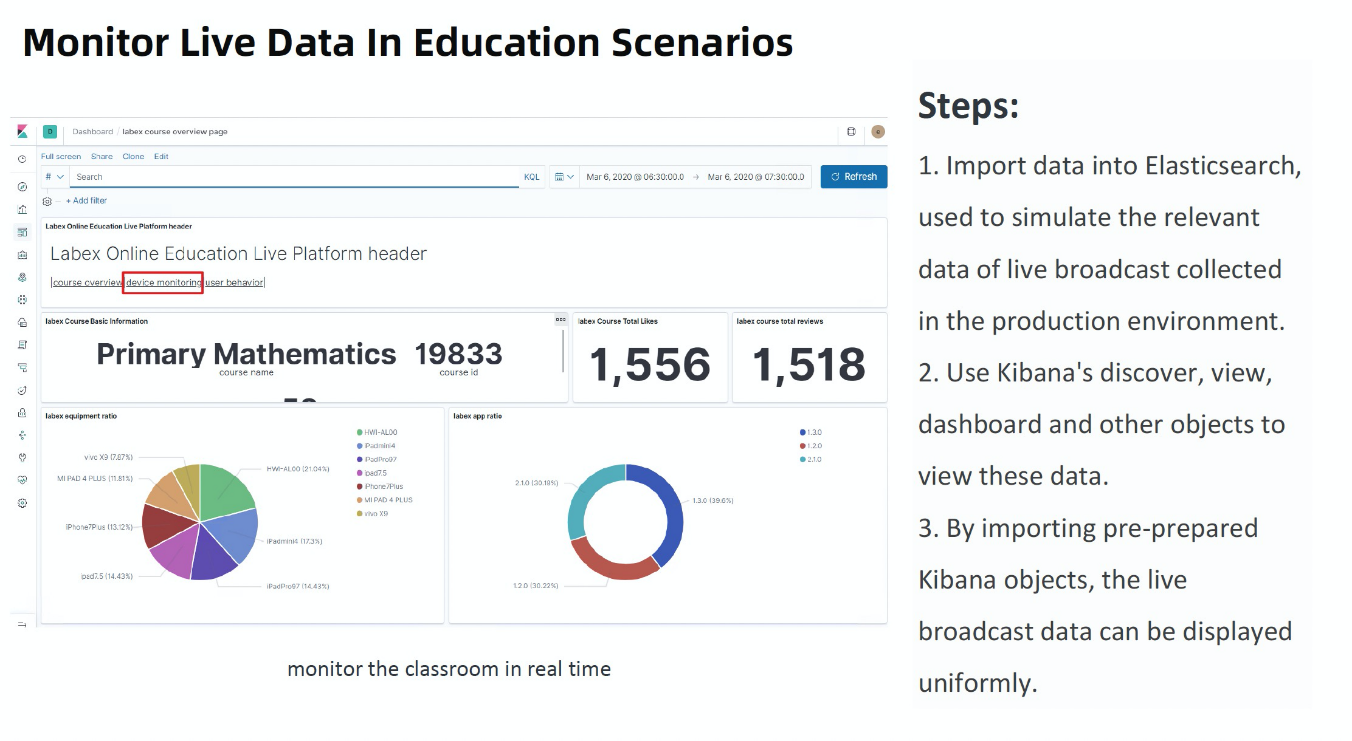

The preceding figure shows a general solution to these three obstacles. It consists of three parts: data collection and centralization; data extract, transform, and load (ETL); and data analysis and presentation. The result is a dashboard, as shown in the following figure.

Data exchange between MaxCompute and Elasticsearch is essential. Importing data from MaxCompute to Elasticsearch takes five steps:

Preparations

Create a DataWorks workspace and activate MaxCompute. Then, prepare a MaxCompute data source and create an Alibaba Cloud Elasticsearch instance.

Step 1: Purchase and create an exclusive resource group

Purchase and create an exclusive resource group for Data Integration, and bind it to a VPC and a workspace. The exclusive resource group ensures fast and stable data transmission.

Step 2: Add a connection

Add MaxCompute and Elasticsearch connections in the Data Integration service of DataWorks.

Step 3: Configure and run a data synchronization task

Configure a data synchronization script that writes the data synchronized by Data Integration to Elasticsearch. Then, register the exclusive resource group with Data Integration. Data Integration issues the task to this resource group, which reads the data from MaxCompute and writes it to Elasticsearch.
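Batch writes to Elasticsearch generally go through its Bulk API, which accepts newline-delimited JSON (NDJSON): an action line followed by a document line for each record, with a trailing newline at the end of the body. The sketch below shows how rows read from a MaxCompute table could be serialized into such a payload. It illustrates the Bulk API format only and is not how DataWorks Data Integration is implemented; the index name `course_logs`, the field names, and the use of an `id` column as the document ID are hypothetical.

```python
import json
from typing import Any, Iterable, List, Mapping, Optional


def to_bulk_ndjson(index: str,
                   rows: Iterable[Mapping[str, Any]],
                   id_field: Optional[str] = None) -> str:
    """Serialize rows into an Elasticsearch Bulk API request body (NDJSON).

    Each row becomes two lines: an "index" action line and the document
    itself. The Bulk API requires the body to end with a newline.
    """
    lines: List[str] = []
    for row in rows:
        action = {"_index": index}
        if id_field is not None:
            # Using a stable column as _id makes re-runs overwrite existing
            # documents instead of creating duplicates.
            action["_id"] = str(row[id_field])
        lines.append(json.dumps({"index": action}))
        lines.append(json.dumps(dict(row), ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


if __name__ == "__main__":
    # Hypothetical rows standing in for records read from a MaxCompute table.
    rows = [
        {"id": 1, "course": "math", "viewers": 120},
        {"id": 2, "course": "physics", "viewers": 85},
    ]
    print(to_bulk_ndjson("course_logs", rows, id_field="id"), end="")
```

Setting `_id` from a stable column is a design choice worth considering for scheduled syncs: the `index` action replaces a document with the same ID, so re-running a task does not duplicate data.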

Step 4: Verify the data synchronization result

In the Kibana console, users can check the synchronized data and query data based on specified conditions.
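Condition-based queries in Kibana (for example, in the Dev Tools console) are expressed in Elasticsearch's Query DSL. The sketch below builds a `bool` query with `filter` clauses as a plain Python dictionary. The field names `course`, `viewers`, and `event_time` are hypothetical, and the `term` clause assumes `course` is mapped as a `keyword` field.

```python
import json
from typing import Any, Dict


def build_filter_query(course: str, min_viewers: int, since: str) -> Dict[str, Any]:
    """Build a Query DSL body that matches documents on exact and range conditions.

    Clauses inside a bool "filter" do not affect relevance scoring and can be
    cached by Elasticsearch, which suits pure condition-based filtering.
    """
    return {
        "query": {
            "bool": {
                "filter": [
                    {"term": {"course": course}},
                    {"range": {"viewers": {"gte": min_viewers}}},
                    {"range": {"event_time": {"gte": since}}},
                ]
            }
        }
    }


if __name__ == "__main__":
    # "now-1d/d" is Elasticsearch date math: one day ago, rounded down to the day.
    body = build_filter_query("math", 100, "now-1d/d")
    # The printed JSON could follow "GET course_logs/_search" in Kibana Dev Tools.
    print(json.dumps(body, indent=2))
```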

The data has now been imported into Elasticsearch. To monitor the data, it only takes two steps:

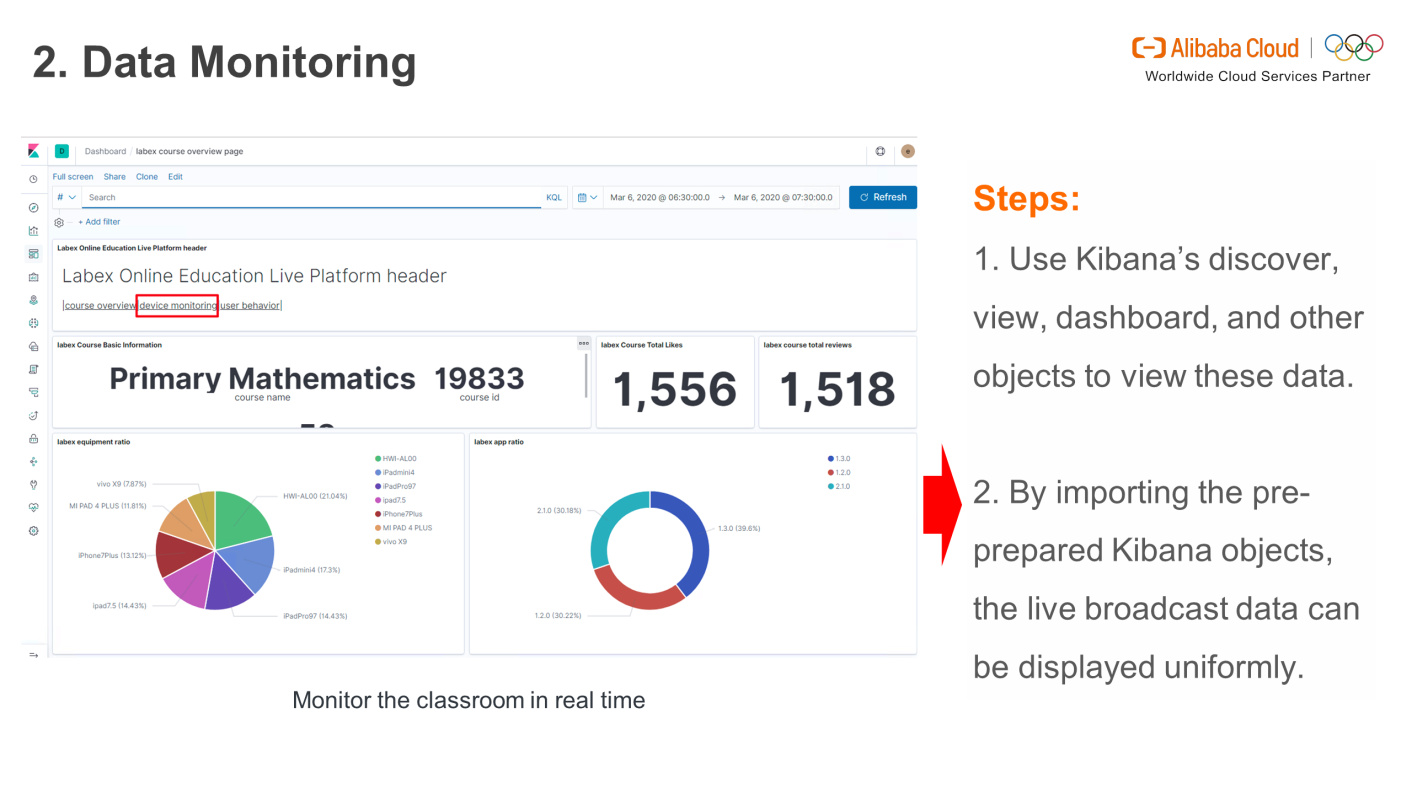

Step 1: Use Kibana objects such as Discover, visualizations, and dashboards to view the data.

Step 2: Import the prepared Kibana objects to display the live-streaming data in a unified view.
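Kibana monitoring panels are backed by Elasticsearch aggregations. As a sketch of what a "log records over time" panel might request, the following builds a search body with a `date_histogram` aggregation and a nested `terms` breakdown. The field names `event_time` and `server` are hypothetical (the `terms` clause assumes `server` is a `keyword` field), and `fixed_interval` is the parameter name used in Elasticsearch 7.2 and later.

```python
import json
from typing import Any, Dict


def build_monitoring_aggs(interval: str = "5m", top_servers: int = 5) -> Dict[str, Any]:
    """Build a search body that counts log records per time bucket and per server."""
    return {
        "size": 0,  # return aggregation results only, no individual hits
        "query": {"range": {"event_time": {"gte": "now-24h"}}},
        "aggs": {
            "over_time": {
                # One bucket per fixed time interval.
                "date_histogram": {"field": "event_time", "fixed_interval": interval},
                "aggs": {
                    # Within each time bucket, the busiest servers by record count.
                    "by_server": {"terms": {"field": "server", "size": top_servers}}
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_monitoring_aggs(), indent=2))
```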

