By Sheng Yuan

Users who are new to MaxCompute often find it hard to learn the product quickly and comprehensively when faced with so many product documents and community articles. At the same time, many developers with big data experience want to map MaxCompute capabilities to the open-source projects or commercial software they already know. This helps them decide whether MaxCompute meets their needs and lets them learn and use it more easily by drawing on their existing experience.

This article gives a broad overview of MaxCompute, organized by topic, so that readers can quickly find the information they need.

MaxCompute is a big data computing service that provides a fast and fully hosted PB-level data warehouse solution, allowing you to analyze and process massive data economically and efficiently.

As the first part of the definition indicates, MaxCompute is designed for big data computing and is delivered as a cloud service. The latter part of the definition describes its application scenarios: large-scale data warehousing and the processing and analysis of massive amounts of data.

The definition alone does not tell us what computing capabilities MaxCompute provides or in what form they are offered as a service. The phrase "data warehouse" suggests that MaxCompute can process large-scale structured data (PB-level, according to the definition). However, although the definition says that MaxCompute can "analyze and process massive data", it remains to be seen whether it can process unstructured data or offer more complex analysis capabilities beyond common SQL analysis.

With these questions in mind, let's continue the introduction and look for the answers along the way.

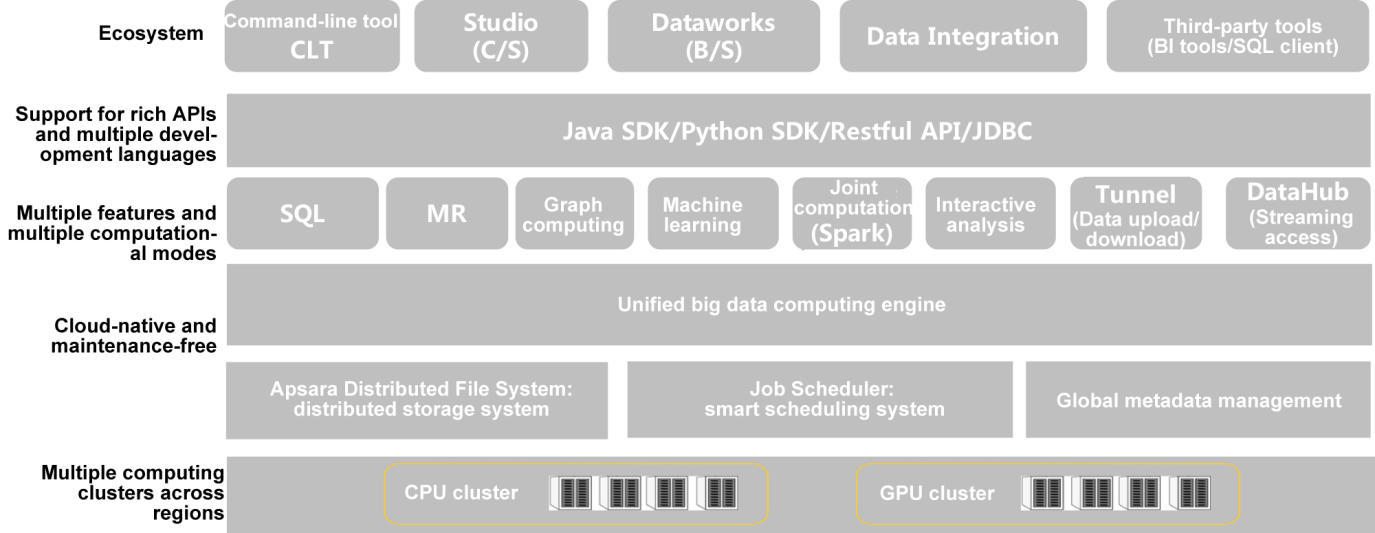

Before introducing the features of MaxCompute, we start with the overall logical architecture to give you an overview of MaxCompute.

MaxCompute provides a cloud-native, multi-tenant service architecture. Its computing services and service interfaces are pre-built on top of large-scale underlying computing and storage resources, together with a full set of security controls and development and management tools. MaxCompute works out of the box.

In the Alibaba Cloud console, you can activate the service and create MaxCompute projects in minutes, without provisioning underlying resources, deploying software, or maintaining infrastructure. Versions are upgraded and problems are fixed automatically by the professional Alibaba Cloud team.

Note that in traditional data warehouse scenarios, most data analysis tasks are actually done by combining SQL and UDFs. As enterprises place more value on data and more roles start using it, they need a wider range of computing capabilities to serve different users in different scenarios.
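To illustrate the UDF half of that combination, here is a minimal sketch of a Python UDF in the style MaxCompute documents for Python UDFs: `annotate` from `odps.udf` declares the SQL signature, and `evaluate` processes one row. The class name `SafePlus` is hypothetical, and the `except ImportError` stub is not part of MaxCompute; it only lets the row logic run locally where the runtime module is unavailable.

```python
# Minimal sketch of a MaxCompute-style Python UDF (names are hypothetical).
try:
    # Provided by the MaxCompute Python UDF runtime.
    from odps.udf import annotate
except ImportError:
    # Local stand-in, NOT part of MaxCompute: returns the class unchanged
    # so the row logic below can be tested outside MaxCompute.
    def annotate(signature):
        def decorator(cls):
            return cls
        return decorator


@annotate("bigint,bigint->bigint")
class SafePlus(object):
    """Adds two BIGINT values, returning NULL (None) if either input is NULL."""

    def evaluate(self, a, b):
        # SQL NULLs arrive as None; propagate NULL instead of raising.
        if a is None or b is None:
            return None
        return a + b


if __name__ == "__main__":
    udf = SafePlus()
    print(udf.evaluate(2, 3))
    print(udf.evaluate(None, 3))
```

In MaxCompute, the script is typically added as a resource and registered with statements along the lines of `ADD PY safe_plus.py;` and `CREATE FUNCTION safe_plus AS 'safe_plus.SafePlus' USING 'safe_plus.py';`, after which a query such as `SELECT safe_plus(a, b) FROM t;` calls it once per row. The file, function, table, and column names are placeholders.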

In addition to the SQL data analysis language, MaxCompute supports multiple computational models based on a unified data storage and permission system.

MaxCompute SQL:

It fully supports TPC-DS and is highly compatible with Hive, so developers with a Hive background can get started immediately. It performs especially well on large-scale data.

MapReduce:

MaxCompute Graph:

PyODPS:

It lets you process MaxCompute data in familiar Python while drawing on the large-scale computing capability of MaxCompute.

PyODPS is a Python SDK for MaxCompute. It also provides the DataFrame framework and Pandas-like syntax. PyODPS can use the powerful processing capabilities of MaxCompute to process ultra-large-scale data.

Spark:

MaxCompute provides the "Spark on MaxCompute" solution, which offers open-source Spark computing services within MaxCompute and runs the Spark framework on MaxCompute's unified computing resources and dataset permission system. Users can submit and run Spark jobs with the development methods they already know.

Interactive Analysis (Lightning):

The interactive query service of MaxCompute has the following features:

Machine learning:

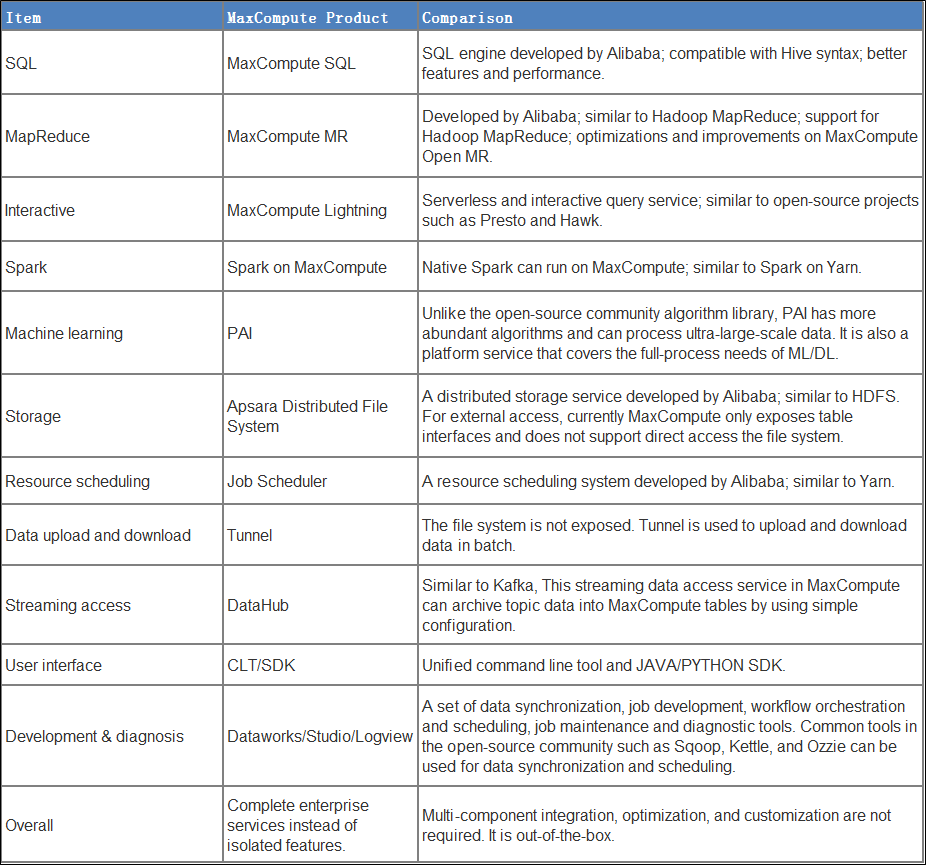

The following comparisons and descriptions help readers, especially those with open-source experience, understand the main MaxCompute features.

What is the relationship between DataWorks and MaxCompute, and how do they differ?

These are two different products. MaxCompute is a computing service for data storage, processing, and analysis. DataWorks is a big data IDE toolkit that integrates data integration, data modeling and debugging, job orchestration and maintenance, metadata management, data quality management, and data API services. Their relationship is roughly similar to that between Spark and Hue.
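"Job orchestration" essentially means running dependent jobs in dependency order. The sketch below illustrates only that idea with Python's standard `graphlib` module (Python 3.9+); it is not the DataWorks API, and the job names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical daily pipeline: each job maps to the set of jobs it depends on.
dependencies = {
    "ods_sync_orders": set(),
    "ods_sync_users": set(),
    "dwd_clean_orders": {"ods_sync_orders"},
    "dws_user_order_stats": {"dwd_clean_orders", "ods_sync_users"},
    "ads_daily_report": {"dws_user_order_stats"},
}


def run_order(deps):
    """Return one valid execution order: every job appears after its upstream jobs.

    TopologicalSorter raises graphlib.CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    for job in run_order(dependencies):
        print("run", job)
```

A production scheduler layers timing, retries, and monitoring on top of this basic ordering.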

I am interested in trying MaxCompute. Is it expensive?

No. In fact, the cost is very low. MaxCompute provides Pay-By-Job billing, under which the cost of a single job depends on the amount of data the job processes. To get started, activate the Pay-As-You-Go billing plan and create a project. After you create a table and upload test data by using the MaxCompute client (ODPSCMD) or DataWorks, you can start trying MaxCompute. With small amounts of data, USD 1.50 is enough to try MaxCompute for quite a while.
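To make the pay-by-job idea concrete, here is a back-of-the-envelope estimate. It assumes a cost formula of the form *input data scanned × SQL complexity × unit price*, similar to what the MaxCompute billing documentation describes for pay-as-you-go SQL jobs. The unit price below is a placeholder, not a real price; check the current pricing page for your region.

```python
def estimate_sql_job_cost(input_gb: float, complexity: float, unit_price_per_gb: float) -> float:
    """Estimate the pay-as-you-go fee of one SQL job.

    Assumed formula: input data scanned (GB) x SQL complexity x unit price (USD/GB).
    """
    if input_gb < 0 or unit_price_per_gb < 0:
        raise ValueError("input size and unit price must be non-negative")
    if complexity <= 0:
        raise ValueError("complexity must be positive")
    return input_gb * complexity * unit_price_per_gb


# Placeholder for illustration only; NOT an actual MaxCompute price.
HYPOTHETICAL_UNIT_PRICE = 0.05  # USD per GB scanned

if __name__ == "__main__":
    for gb in (1, 10, 100):
        cost = estimate_sql_job_cost(gb, 1.0, HYPOTHETICAL_UNIT_PRICE)
        print(f"{gb:>4} GB scanned -> ${cost:.2f}")
```

The point of the sketch is the linear relationship: under this assumed formula, halving the data a job scans (for example, by filtering on partitions) halves its estimated cost.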

MaxCompute also offers an exclusive resource model, for which subscription billing is available to help control costs.

In addition, MaxCompute will soon release the "Developer Edition", giving developers a certain free quota each month for development and learning.

Currently MaxCompute only exposes tables. Can it process unstructured data?

Yes. Unstructured data can be stored in OSS. You can convert it into structured data by defining external tables with a custom Extractor that implements the processing logic. Alternatively, you can use Spark on MaxCompute to access OSS, extract and transform the files under an OSS directory in a Spark program, and then write the results into MaxCompute tables.
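The job of a custom Extractor is to turn raw bytes (for example, log files stored in OSS) into typed records that match an external table's schema. In MaxCompute, custom Extractors are written in Java against its unstructured-data framework; the Python sketch below only mirrors that parsing logic, and the log format, schema, and `extract_records` function are hypothetical.

```python
import io
from typing import Iterator, NamedTuple, Optional, TextIO


class AccessRecord(NamedTuple):
    """Hypothetical target schema: ip STRING, status BIGINT, path STRING."""
    ip: str
    status: int
    path: str


def parse_line(line: str) -> Optional[AccessRecord]:
    """Parse an 'ip status path' line; return None for malformed input."""
    parts = line.split()
    if len(parts) != 3 or not parts[1].isdigit():
        return None
    return AccessRecord(ip=parts[0], status=int(parts[1]), path=parts[2])


def extract_records(stream: TextIO) -> Iterator[AccessRecord]:
    """Yield one structured record per well-formed line, skipping bad lines."""
    for line in stream:
        record = parse_line(line)
        if record is not None:
            yield record


if __name__ == "__main__":
    raw = io.StringIO("10.0.0.1 200 /index.html\ngarbage\n10.0.0.2 404 /missing\n")
    for rec in extract_records(raw):
        print(rec)
```

Skipping malformed lines rather than failing is one possible policy; a real job might instead count or log them.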

Which data sources can MaxCompute integrate data from?

You can integrate data from various offline data sources, such as databases, HDFS, and FTP, by using the DataWorks data integration service or DataX.

You can also batch upload and download data by using the MaxCompute Tunnel commands or the Tunnel SDK.
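For a first trial, test data is often just a local CSV file uploaded with the Tunnel `upload` command in the MaxCompute client. The sketch below writes such a file with Python's standard `csv` module. The file name, columns, and table name are hypothetical, the target table must already exist with a matching column order, and the Tunnel command reference should be checked for delimiter and header options.

```python
import csv
from pathlib import Path

# Hypothetical test rows for a table created in MaxCompute, e.g.:
#   CREATE TABLE trial_orders (order_id BIGINT, city STRING, amount DOUBLE);
ROWS = [
    (1, "Hangzhou", 12.5),
    (2, "Beijing", 30.0),
    (3, "Shanghai", 7.25),
]


def write_test_csv(path: Path, rows) -> int:
    """Write rows as comma-separated values without a header line; return the row count."""
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerows(rows)
    return len(rows)


if __name__ == "__main__":
    out = Path("trial_orders.csv")
    n = write_test_csv(out, ROWS)
    print(f"wrote {n} rows to {out}")
    # Then, in the MaxCompute client (ODPSCMD), something like:
    #   tunnel upload trial_orders.csv trial_orders;
```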

Streaming data can be written into DataHub (for example, by using the Flume or Logstash plug-in) and then archived into MaxCompute tables.

Data from Alibaba Cloud Log Service (SLS) and Data Transmission Service (DTS) can also be written into MaxCompute tables.

This article describes the basic concepts and features of MaxCompute and compares it with common open-source services to help you understand Alibaba Cloud MaxCompute.

Learn more about MaxCompute at the official website: https://www.alibabacloud.com/product/maxcompute
