In this article, Yun Lang, a Senior Product Expert at Alibaba Cloud, shares advice on best practices for building a Cloud Data Warehouse (CDW) on MaxCompute.

This article is based on his speech and the related presentation slides.

Hello, everybody. I am Yun Lang. I worked at Oracle as an enterprise architect for eight years, and I am currently the product manager for MaxCompute.

As a product manager, I need to stay in close contact with our customers, and they ask me many different questions.

As we can see, different customers encounter different problems at different stages. What patterns lie behind these varied problems? My goal is to find out whether we can distill best practices from them to help you avoid detours.

First, there is Alibaba itself, which has many business units. As mentioned earlier, all of Alibaba's data runs on MaxCompute, which is used to build the data warehouse and process data. You may challenge me by saying that you will never encounter the problems we solved at Alibaba. That is true: solving Alibaba's problems does not necessarily mean solving our customers' problems.

Second, our customers are highly diversified, and our own problems do not necessarily reflect theirs, because customers differ in size, current situation, capability level, and goals. MaxCompute also provides services on the cloud. We have many customers on the cloud, and many of you here are MaxCompute users. Therefore, I have extended the scope to all our existing customers. At this point, some of you may object that these so-called best practices are based on our own product. Can we also analyze best practices for companies that do not use our product?

That is why I also include a third type of customer. In China, many enterprises use non-Alibaba technologies. What big data problems do they have? Many of you may also have heard talks on topics such as the big data practice of Company A or the big data evolution of Company B. We will base our analysis on these cases as well.

Fourth, many acquired companies have joined the Alibaba ecosystem. What are the best practices for integrating these external companies into Alibaba?

Today I want to share the essential best practices hidden behind these various phenomena, drawing on a comprehensive view of all four types of customers.

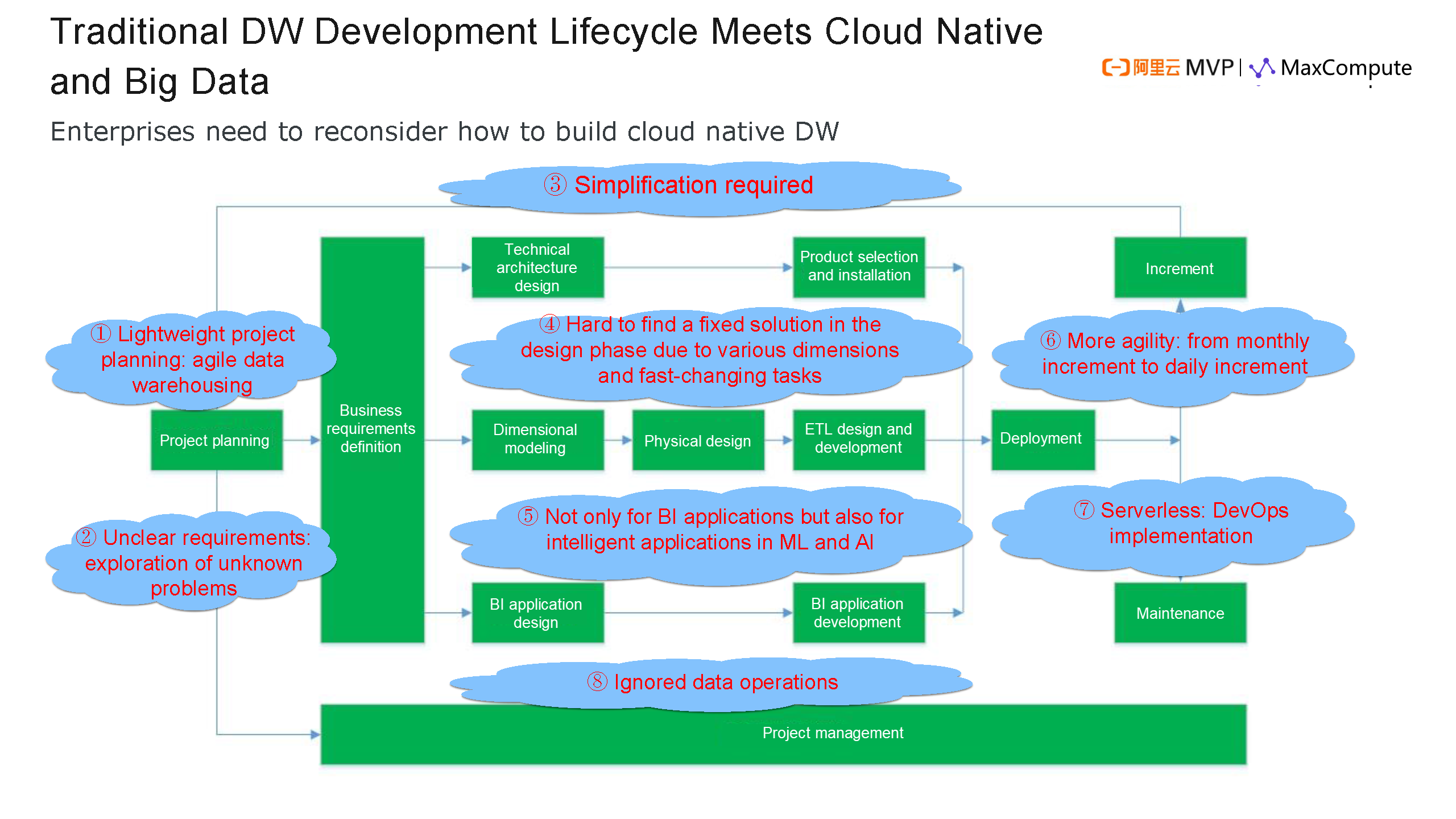

Currently, everyone is talking about big data. As early as 2013, when I was working on information solutions at Oracle, big data had already emerged. I used to work on DB (databases); now I work on its reverse, BD (big data). Huge technological changes have occurred along the way. Guan Tao has already covered this technological transformation in depth, and I think you have learned a lot from it. How should these technologies be applied, and have the methods changed? Customers often ask me what they should do with MaxCompute. To give more convincing answers, I did some analysis and found that, no matter what applications they are building, customers basically need to build a data warehouse on MaxCompute. We call this the Cloud Data Warehouse. As you all know, a data warehouse is both a data architecture and an engineering process, and the engineering aspect deserves more attention. This diagram shows a typical data warehouse lifecycle in the industry. Many of its processes are still widely applied now that the key technology has shifted from DB to BD. When I talk with architects, big data developers, and big data owners, I realize that their thinking is actually based on this lifecycle. So how does a data warehouse become a cloud-native data warehouse when it meets cloud-native and big data technology? The entire process includes project engineering, business needs, small iterations and maintenance, completion of data warehouse development, and delivery.

In the traditional DB era, creation was the center of a project. In the era of big data, maintenance becomes the key part once a project has been created. What does maintenance mainly consist of? Mainly operations. Yet in this lifecycle, the essence of big data is missing.

Let's take a look at different situations.

First, I believe many of you are from Internet companies. If you work at a government-funded organization, you are lucky: you may not face the same financial pressure when making a plan. If you work at an Internet company, you may have to get all the data ready in a single day, with little time for data preparation. What can we do? We can take a lightweight approach and build an agile data warehouse across its entire lifecycle. Just as software engineering needs agile development, we need an agile data warehouse.

Second, why can some organizations plan their business needs in advance? If you are from a government department or a central state-owned enterprise, your business basically follows a fixed pattern, and you can largely predict what lies ahead. However, if you work at an Internet company, you may not even know whether your company will exist next year. The business may need to transform before your current plan is even completed. When business needs are unclear, it is hard to make a clear plan.

Third, if we design a perfect but complicated technical solution whose implementation cycle is too long, we need to consider whether it can be simplified. Speed is of the essence.

The fourth situation concerns data modeling. You may want to create all your models at the very beginning. Many data engineers fall into this trap: they want to put all the data together to create a stable model first. What is the result? You lock yourself and your long-term technical work inside a room, while the business is left outside the door and can never get in, because you are still working on your data models.

Applying a data warehouse is not simply a matter of converting traditional DBs into BD and then producing reports. Real application scenarios are rarely that simple. Realizing the full value of data also requires technologies such as machine learning, artificial intelligence, and prediction. This is an iterative process. In the traditional approach, it may take months to implement a requirement after it is specified, and a three-month cycle is considered relatively fast. Today, requirements must be implemented within hours or days. How can you build a data warehouse that keeps up with such changes?

On the O&M side, developers and O&M staff need to practice what we now call DevOps. If you are a data developer, implementing DevOps across the entire big data platform is a big challenge.

When project management and creation are at the center, real data operations are ignored. This brings us to today's topic: the value of data must be revealed through data operations. So what do we mean by operations?

Building an enterprise big data platform is similar to the course of a human life. For example, during courtship and the honeymoon, a couple may not think about many practical living arrangements. Once married, however, they have to think about raising children and making a living. Likewise, a platform faces different pain points at different stages, and we need to address each of them.

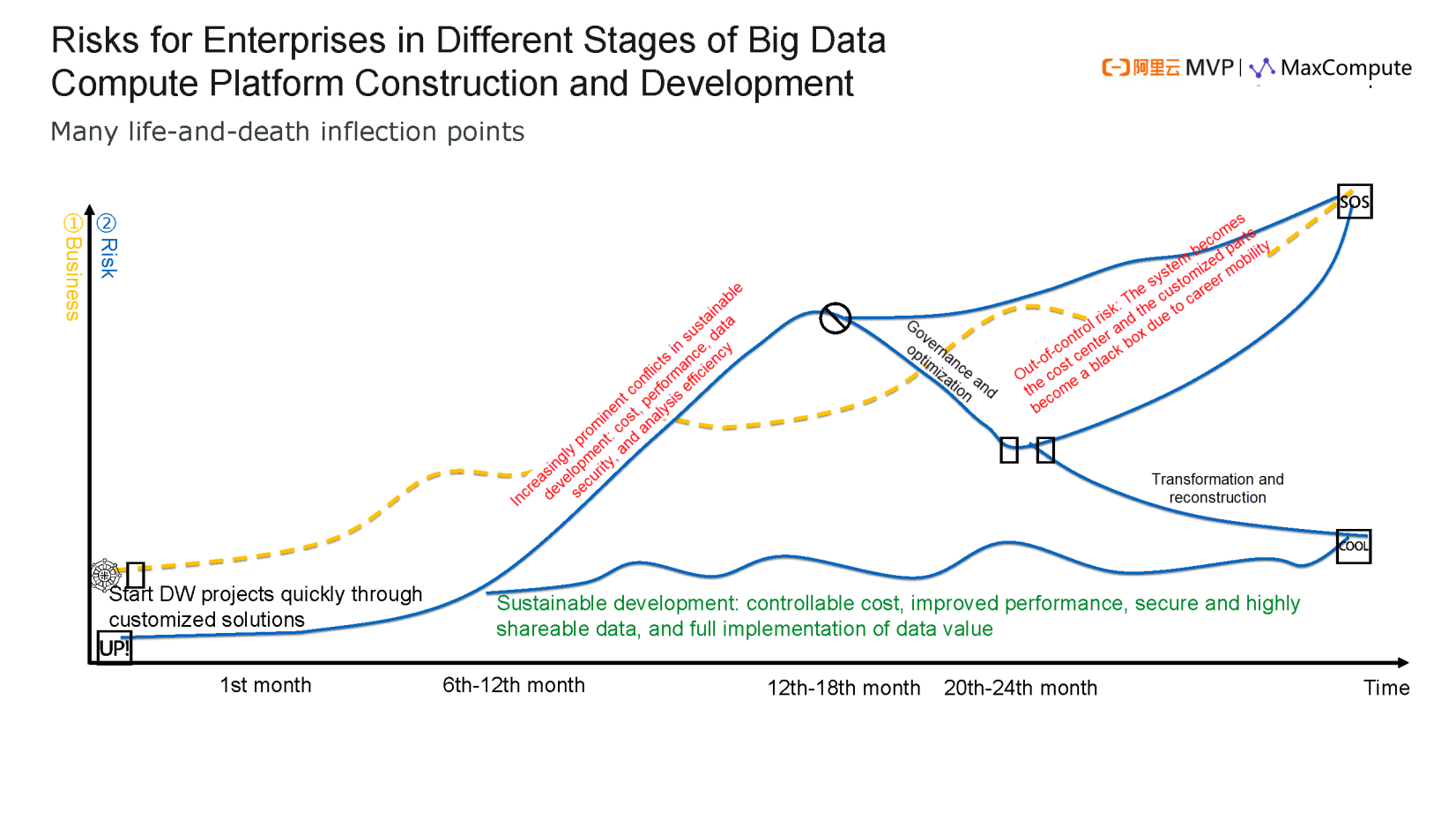

In the diagram, the x-axis (time) is divided into several intervals: the first month, months 6 to 12, months 12 to 18, and months 18 to 24. After analyzing nearly one hundred enterprise customers, we know what kinds of problems arise during this cycle. The technical solutions differ from customer to customer, but the pain and the risk are the same.

On the y-axis, the yellow line represents business scale and the blue line represents risk. The radius that the business can reach in this diagram represents value, and the area enclosed by the red line represents risk. As Guan Tao mentioned earlier, success depends on whether the business area is larger than the risk area. The diagram shows that most customers can quickly customize their solutions and launch the data warehouse during the "honeymoon" period. Because they have plenty of enthusiasm, investment, and labor, they can create the project in just one month. From month 6 to month 12, the business grows and the scale increases. At this point, the business either develops well under proper supervision or falters without it.

The large-scale expansion of the business and the sharp increase in data volume and computation place great demands on performance and cost. The system must resolve conflicts among sustainable development, cost, performance, data security, and analysis efficiency, and as the business grows, we need to work out how. As the risk line shows, risk keeps rising. Once it does, many companies, including our customers, launch a new round of governance and phased optimization, such as performance optimization and cost optimization.

As the business continues to develop, the two lines show that the risk is once again out of control. At this point, the system itself becomes a source of cost. Many customized systems were developed when you had strong financial support and good ideas. When your staff changes, what becomes of all those customized systems? They turn into black boxes, and all you can do is wait for someone to rescue you. This is how risk evolves.

Clearly, best practices should not follow this path. We need to avoid this detour.

Moreover, governing and optimizing the platform we build is not enough on its own. If we can transform the platform, it can return to a healthier state that reflects best practices. For example, the platform at Alibaba went through two important transformations. The first was technical: we initially used Hadoop and then switched to MaxCompute. In fact, the switch to MaxCompute began as early as 2013, and during the transformation we replaced and upgraded the stack with our own technology.

The other transformation was business. Backed by Alibaba's business scale, our internal technology was offered through Alibaba Cloud, which gave it a new life. It is very important to understand clearly where you stand in this process of risk evolution. We want sustainable development in both technology and business, and the key strategy is to control cost and improve performance. As data volume grows, data processing has to become faster, not slower. Data must be secure and shareable: collected data is useless if nobody has access to it. We need to maximize the value of data.

Building a data platform involves different risks in different phases, and you may encounter setbacks along the way. We have had many problems ourselves. I remember a line from the novel The Three-Body Problem: "At these reflection points I can destroy you, and you can do nothing about it." The situation is urgent. For customers facing many problems, I propose a theory that gets at the essence. Most of you have probably gone fishing. When you fish for small fish, the hook is tied directly to the line, because small fish do not struggle fiercely. However, when you fish for big fish at sea with the hook tied directly to the line, a fish that takes the bait can pull so hard that the line snaps taut or even breaks. Similarly, huge pressure and load can cause a system to crash.

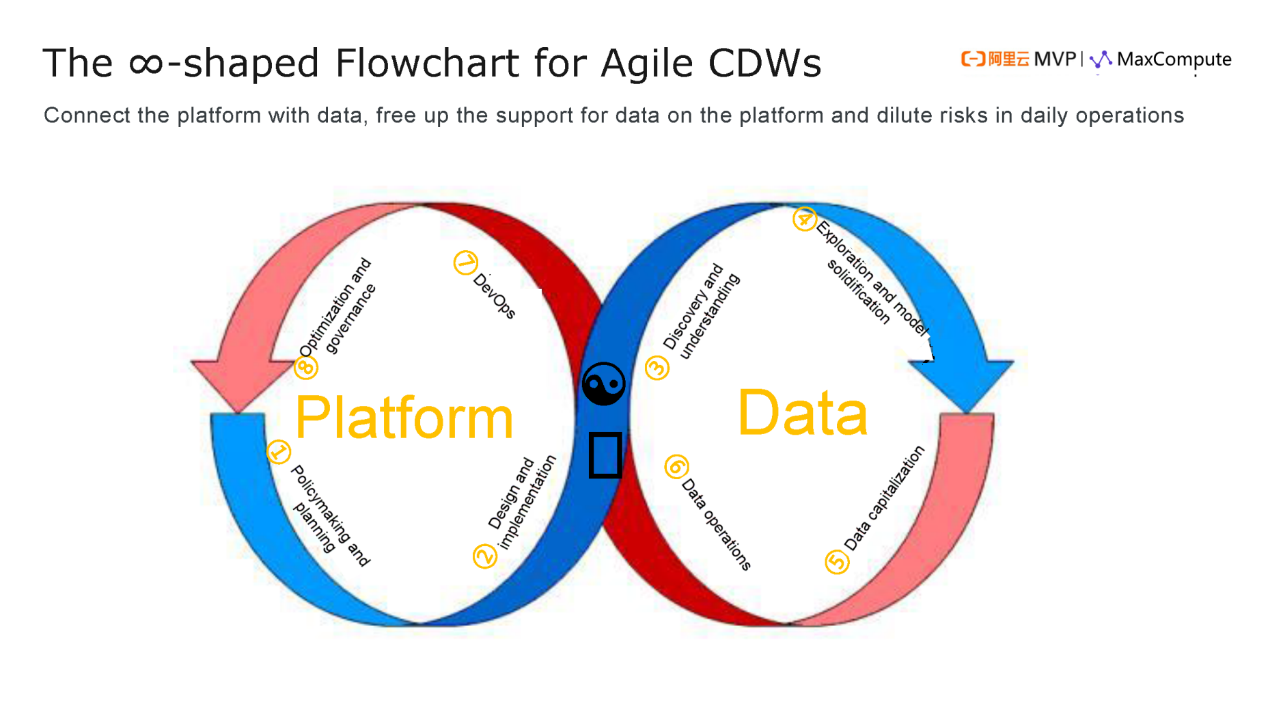

Anglers have a technique for catching big fish: wind a segment of the line into a figure 8 to add elasticity and resistance. When a fish bites, the rear segment of the line is not pulled directly. When the line tightens, the multi-strand figure 8 segment disperses the sudden force and absorbs the shock. This way, a big fish cannot break the line.

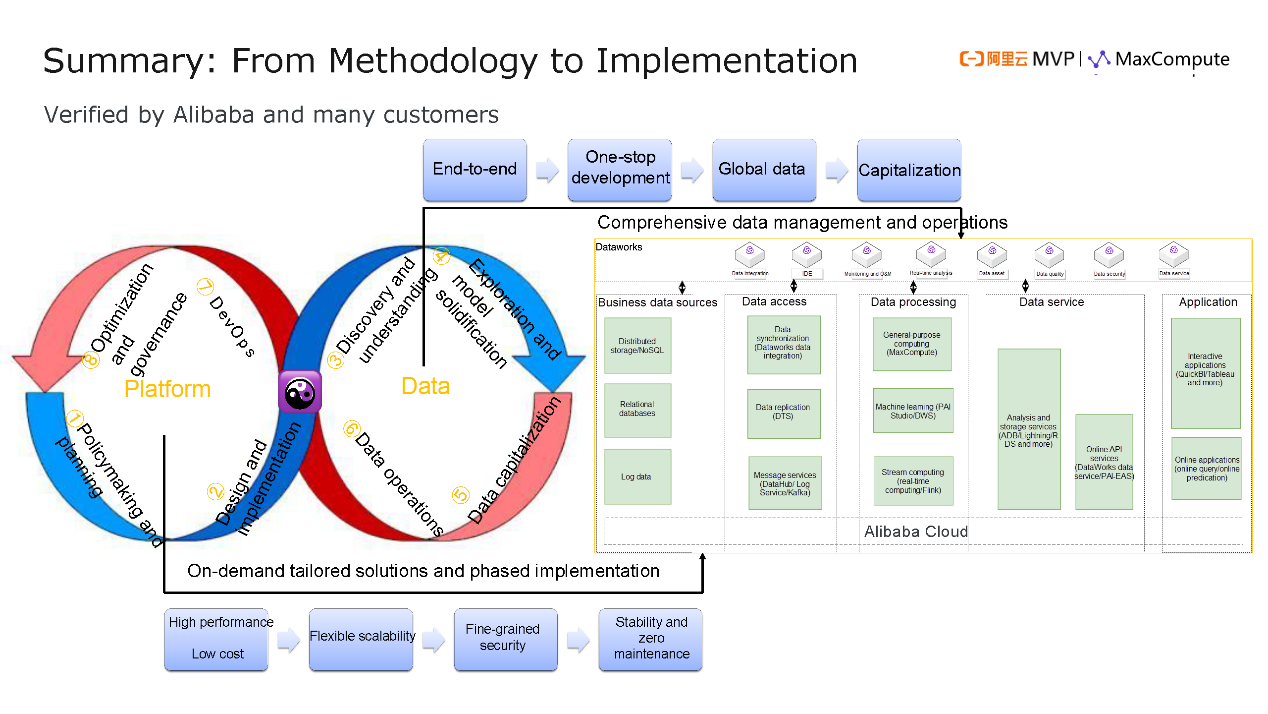

Can we apply a similar approach to the platform and data? In that process, the platform and data are completely independent: while building the platform, we focus on the platform alone, without needing to consider data. In more and more enterprises, one dedicated team builds the platform and another processes data, or several groups build the platform and several others process data. Either way, the platform and data should be independent of each other. The relationship between them is like the Yin-Yang diagram that I drew. As the figure 8 ring shows, we do not simply bind the platform and data together. The essence of the figure 8 ring is that policymaking and planning come first, and platform design and implementation come second. Once you have the initial prototype and the basic platform, you are ready to enter the data operations stage. In the third step, you discover some simple data, let data scientists analyze, understand, and explore it, and implement data capitalization and operations. At this point, you also need to perform O&M on the platform side and carry out optimization and governance.

Are the platform and data opposed to each other? Or does each give rise to the other? What view should we take? I think we need to think this through. If you work on data, you need to consider its relationship with the platform; if you work on the platform, you need to consider its relationship with data. If this relationship is not handled properly, you are unlikely to arrive at best practices in the future.

Therefore, the first problem to solve is agility: by following the procedure in this flow chart, we can enter the data phase quickly. At the same time, we need to avoid the "broken fishing line" problem mentioned earlier. We also need to connect the platform and data and unleash the platform's support for data. The platform itself supports data well, but how do we unleash that support? This is a question we need to consider.

Another consideration is risk. We need to continuously perform practices and verification to reduce or eliminate the risk. It would be a great waste of money and effort to find out that an already decided 3-year plan is highly risky one year in.

Based on the above, I would like to offer several suggestions.

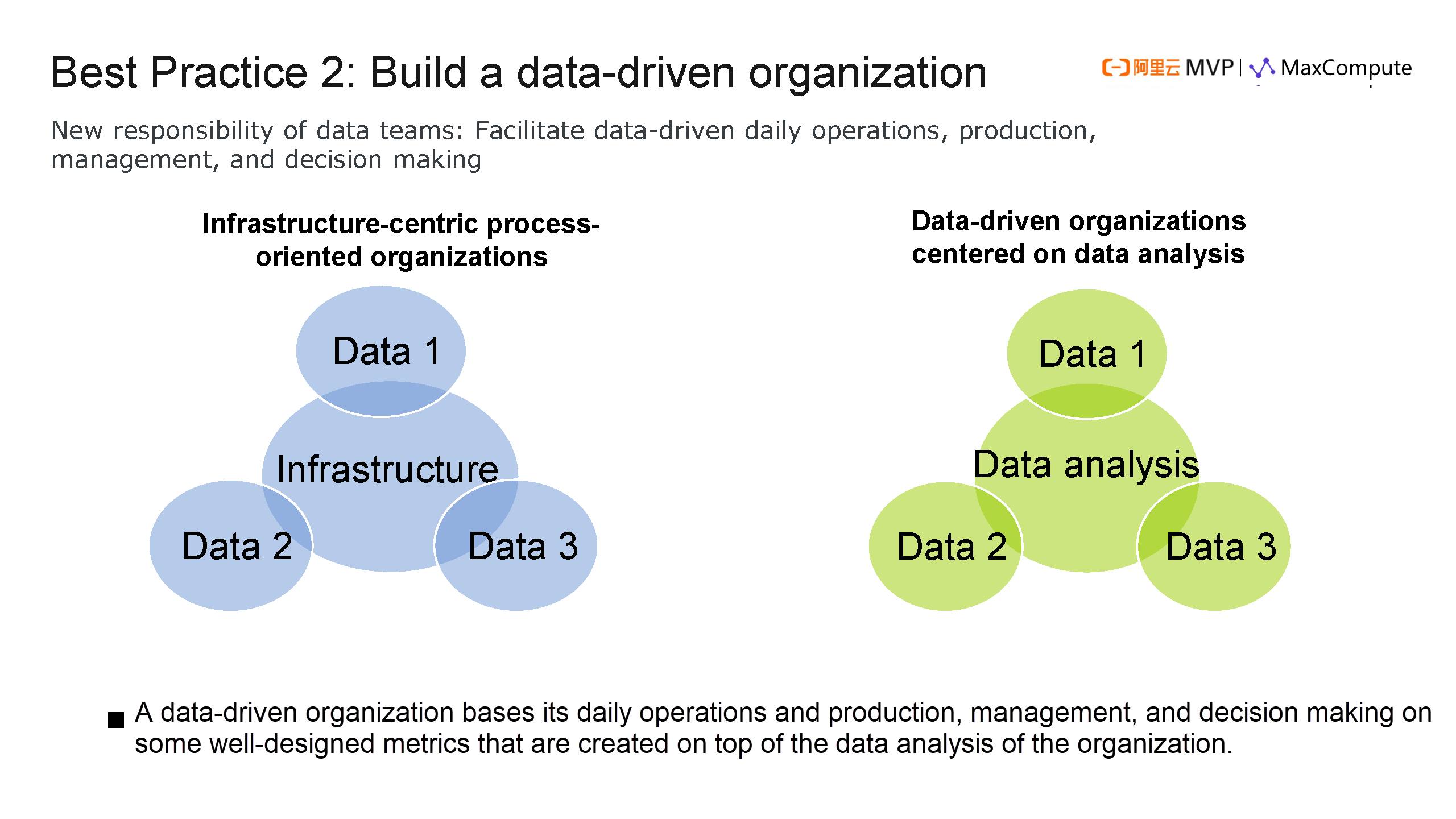

We classify data-driven organizations by what their data supports: operations, basic production activities, management, or decision making.

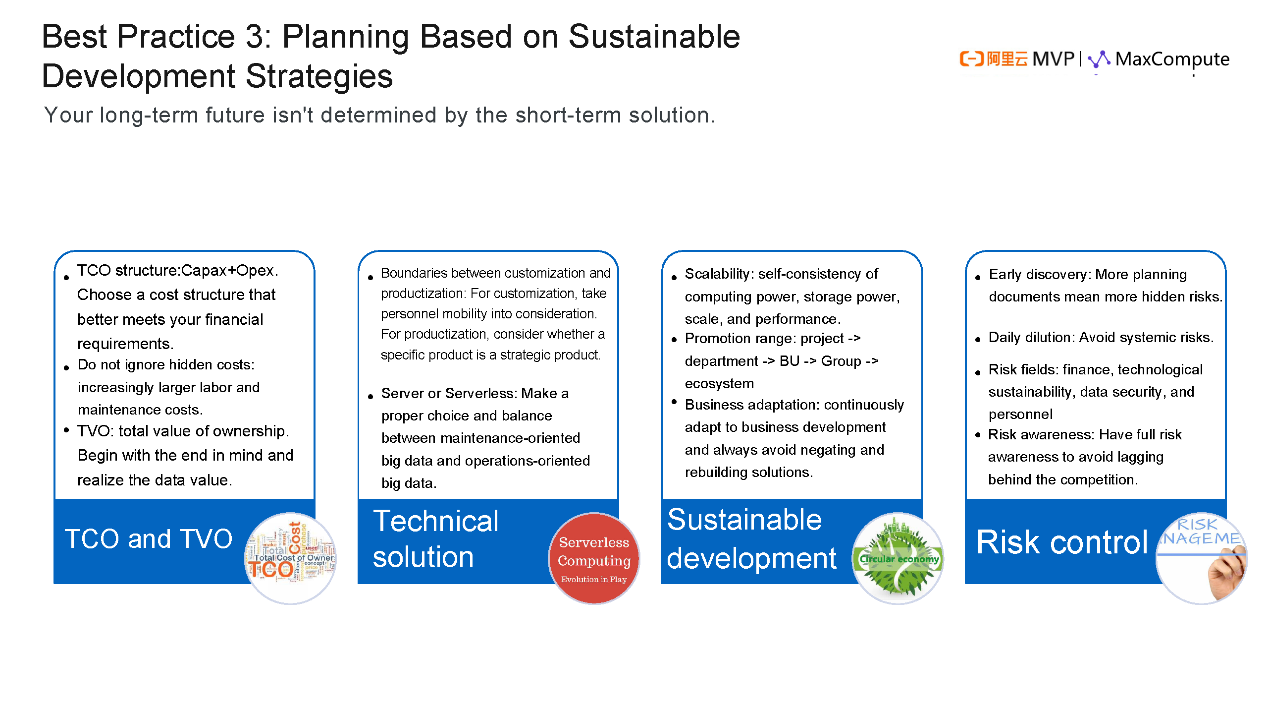

Planning must be based on a policy of sustainable development. From the very beginning, we need to think about this problem with the final results in mind, even though it may take one to three years to obtain them. At Alibaba, we have a motto: the long road ahead does not matter as long as we have started on the right path. If we choose the wrong path, however, we move in the opposite direction. Four factors need to be considered in the planning phase.

I will talk about the most critical factors. First, when it comes to TCO (total cost of ownership), you need to pay attention to your financial structure. Different companies have different preferences: some want to preserve cash flow, while others are inclined to spend their money immediately. Your spending plan must match your financial cost structure. Do you want a one-time capital expenditure, or do you want to pay out of your daily operating budget? You need to know what your enterprise wants. If you do not understand this clearly, you may run into many conflicts later.
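To make the capital-expenditure versus operating-expenditure question concrete, here is a minimal Python sketch that compares the cumulative spend of a one-time purchase plan with a pay-as-you-go plan and finds the month in which the two cross. All figures and function names are hypothetical illustrations, not Alibaba Cloud pricing; the point is only that which option is "cheaper" depends on your planning horizon and financial structure.

```python
def cumulative_cost(upfront, monthly, months):
    """Cumulative spend at the end of each month 1..months."""
    return [upfront + monthly * m for m in range(1, months + 1)]


def break_even_month(capex_plan, opex_plan):
    """First month (1-based) in which the CapEx plan costs no more than the OpEx plan, or None."""
    for month, (capex, opex) in enumerate(zip(capex_plan, opex_plan), start=1):
        if capex <= opex:
            return month
    return None


# Hypothetical plans: buy capacity up front vs. pay monthly for cloud capacity.
capex_plan = cumulative_cost(upfront=120_000, monthly=2_000, months=36)
opex_plan = cumulative_cost(upfront=0, monthly=6_000, months=36)

print(break_even_month(capex_plan, opex_plan))  # 30
```

Under these made-up numbers, the pay-as-you-go plan is cheaper for any horizon shorter than 30 months, which is exactly the kind of trade-off your enterprise's financial preferences should decide.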

Second, in the planning process, do not ignore hidden costs; you need to know what they include. When it comes to TVO, we need to keep the final results in mind from the beginning in order to realize the business value of data.

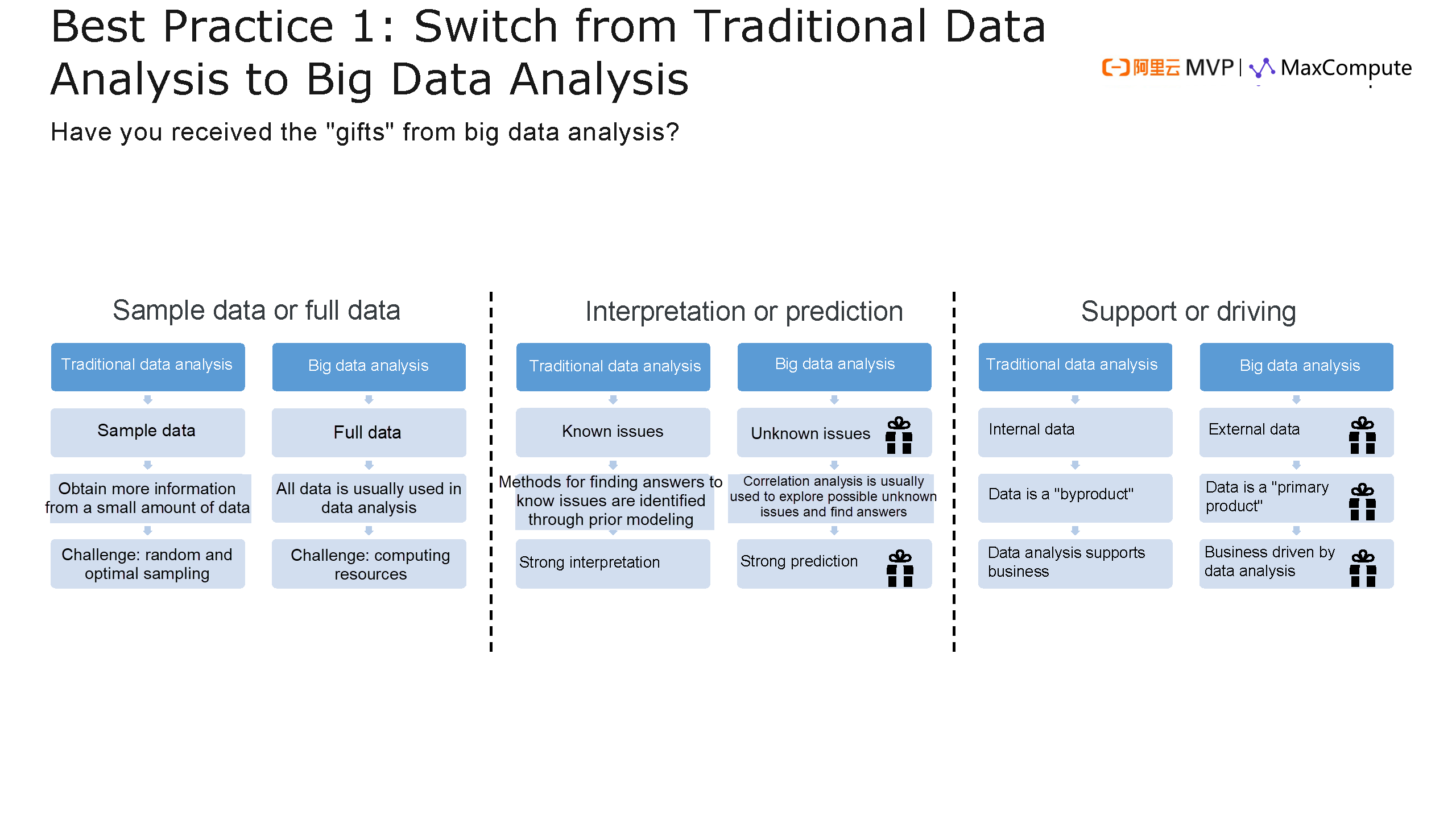

When working on big data (either platforms or data), you may end up with disappointing results if you cannot help your enterprise realize the business value of data. You have to explore the value of your data, whether it provides business support or acts as a driving force.

Now let's move on to technical solutions. Customized components often turn out to be black boxes when risks surface later, so we need to understand the boundary between productization and customization. As you keep extending the boundaries of customization, consider what it will mean if these components become black boxes. You also need to decide whether to choose a server-based or serverless solution, and whether to focus on your platform or your data.

Another consideration is business adaptation. You do not always have to rebuild a solution from scratch. Risks should be discovered as early as possible. A plan often comes in the form of a PPT presentation and may have many defects. The later execution begins, the longer those defects stay hidden, and fixing risks that have snowballed costs much more later on. This is similar to fixing defects in software development. We need to stay risk-aware.

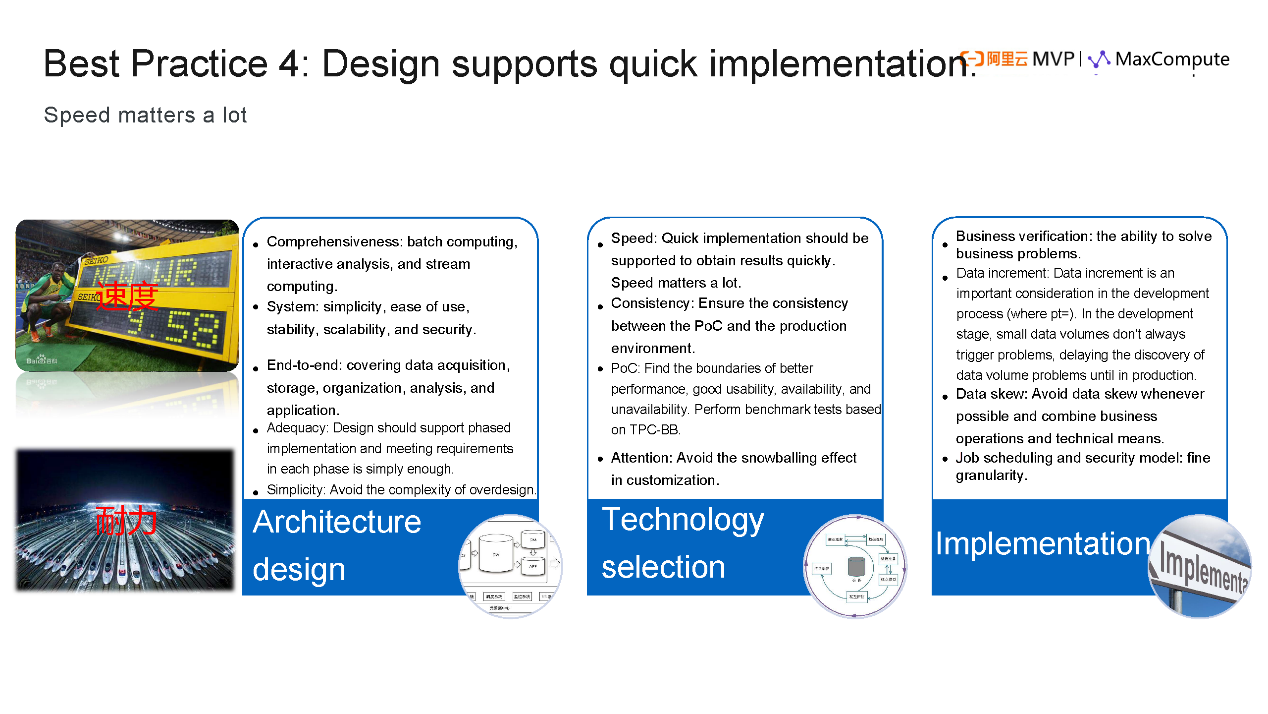

Design in the planning phase must support fast implementation. This does not mean you have to deliver in just one day; even in the Internet industry, it may take two weeks, a month, or longer. A phase is often three months, and three months is a really long time.

In architecture design, we need to consider completeness, the system as a whole, and the end-to-end links. Architecture design is an interesting job, but my suggestion is to design an architecture that is just good enough. Do not overdesign it.

For technology selection, what should we expect from a PoC? Which characteristics should we pay attention to: usability, availability, or the points at which the system becomes unavailable? You need to find the corresponding boundaries and inflection points. We always try to find the optimal point of each system, but we often do not know at which point the system becomes unavailable. In other words, when you evaluate a system, you need to know which aspects are stronger, which parts are easy to use, and where the system stops being usable. Be wary if someone says that every aspect of a system is easy to use. You also need to avoid snowballing costs and hidden traps in customized components.
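One way to find a system's boundary during a PoC is to step up the load and record where it stops meeting your service-level target. The following Python sketch assumes you have already collected p99 latency at each load level; the measurements, the SLA value, and the `find_boundary` helper are all hypothetical.

```python
# Hypothetical PoC measurements: concurrent queries -> p99 latency in seconds.
measurements = {10: 1.2, 20: 1.4, 40: 1.9, 80: 3.1, 160: 9.8, 320: 41.0}


def find_boundary(results, sla_seconds):
    """Return (last load that meets the SLA, first load that violates it)."""
    last_ok, first_bad = None, None
    for load in sorted(results):
        if results[load] <= sla_seconds:
            last_ok = load
        else:
            first_bad = load
            break  # stop at the first violation: this is the inflection point
    return last_ok, first_bad


print(find_boundary(measurements, sla_seconds=5.0))  # (80, 160)
```

Reporting both numbers, rather than only the best result, shows where the system is comfortable and where it becomes unusable, which is the boundary a PoC should expose.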

In terms of implementation, we need to take incremental data into account. For example, if you do not partition a table when you first write data to it in the development phase, then after 180 days of daily loads the table is roughly 180 times larger, because expansion was not considered at the beginning. You need to pay attention to this problem; our team will share technical best practices on it later. Other considerations in the implementation phase include data skew, job scheduling, the security model, and its granularity.
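The following Python sketch simulates why partitioning matters for incremental data: it loads 180 days of a hypothetical daily feed into an unpartitioned list and a day-partitioned dictionary, then counts how many rows a single-day query has to read. It is an in-memory illustration only; in MaxCompute the equivalent is a table declared with a `PARTITIONED BY` clause (for example, on a daily date column) and queries that filter on that column, so check the MaxCompute SQL documentation for the exact syntax.

```python
from collections import defaultdict

ROWS_PER_DAY = 1_000  # hypothetical daily ingest volume


def load_days(days):
    """Simulate `days` daily loads into an unpartitioned and a day-partitioned table."""
    flat = []                        # unpartitioned: one ever-growing list of (day, row)
    partitioned = defaultdict(list)  # partitioned: day -> rows for that day only
    for day in range(days):
        for i in range(ROWS_PER_DAY):
            row = f"event-{day}-{i}"
            flat.append((day, row))
            partitioned[day].append(row)
    return flat, partitioned


def query_flat(flat, day):
    """Without partitions, a one-day query must scan every row and then filter."""
    matches = [row for d, row in flat if d == day]
    return len(flat), len(matches)  # (rows scanned, rows returned)


def query_partitioned(partitioned, day):
    """With partition pruning, only the requested day's partition is read."""
    rows = partitioned.get(day, [])
    return len(rows), len(rows)  # (rows scanned, rows returned)


flat, partitioned = load_days(180)
print(query_flat(flat, 179))                # (180000, 1000)
print(query_partitioned(partitioned, 179))  # (1000, 1000)
```

Both queries return the same 1,000 rows, but the unpartitioned one reads 180 times as much data, and that ratio keeps growing with every day of incremental loads.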

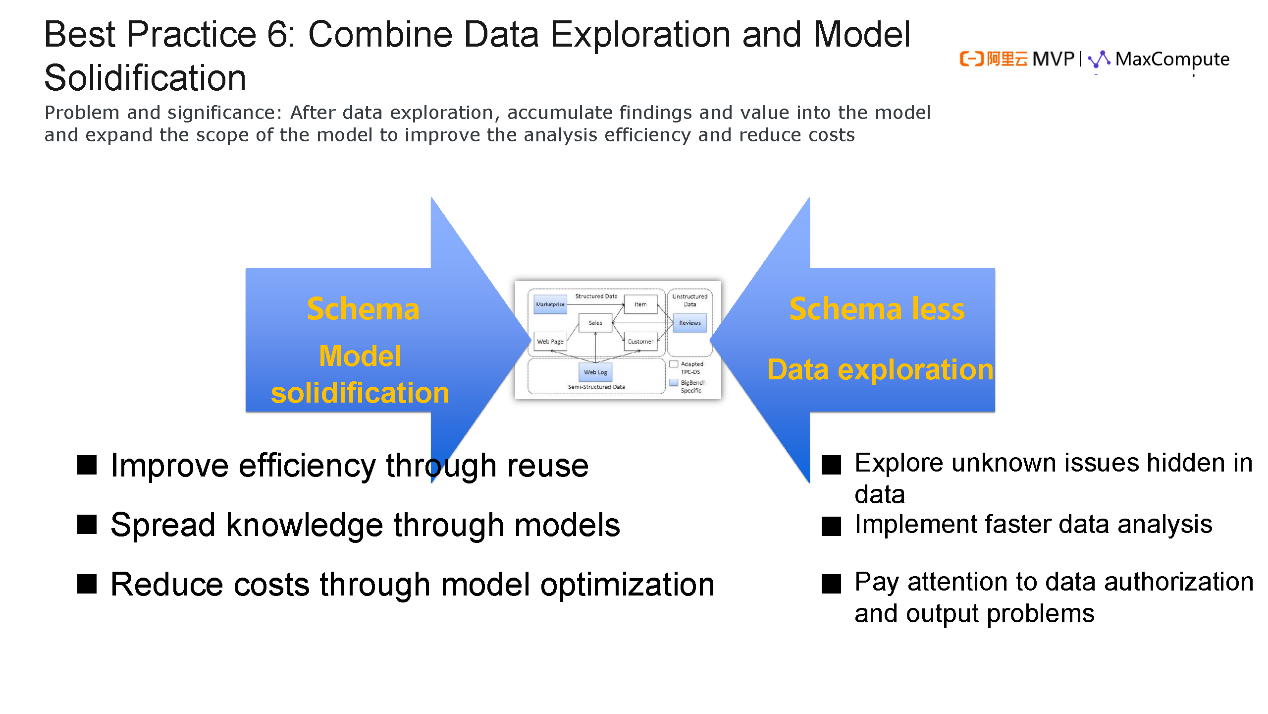

We need to combine data exploration with model solidification to present the value of data. Because every data warehouse involves solidifying models, models must be taken into consideration. However, as we all know, modeling cycles can become long and rigid, leaving the business less flexible. Therefore, we need to combine model solidification with data exploration. As for the relationship between data blending and data warehouses mentioned earlier, I think data warehouses are better suited to model-related work, while data blending is better suited to data exploration. Data exploration allows you to quickly discover hidden problems and analyze data. However, it also brings challenges: you have to solve data authorization and output problems. You cannot put all your data together and let everyone see whatever they want. Despite huge efforts to ensure data security, data warehouses still face security challenges. This is a real problem.

After you explore data, how can you build a data warehouse in a more agile way? You can increase efficiency by turning the results of exploration into models that can be reused. A model can also spread knowledge, for example, how to determine whether a customer is active. Once such a model exists, other developers can understand and apply the knowledge it contains. This also reduces cost. Combining schema and schemaless approaches further improves the agility of data processing and analysis.
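As a minimal sketch of encoding knowledge in a reusable model, the following Python snippet captures one hypothetical definition of an "active customer" (at least three orders in the last 30 days) in a single shared object. The thresholds, names, and sample data are assumptions for illustration; the idea is that every developer who needs activeness calls the same rule instead of re-deriving it.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ActivityRule:
    """Hypothetical shared definition of an 'active customer'."""
    window_days: int = 30
    min_orders: int = 3

    def is_active(self, order_dates, as_of):
        # Count orders in the half-open window (as_of - window_days, as_of].
        start = as_of - timedelta(days=self.window_days)
        recent = [d for d in order_dates if start < d <= as_of]
        return len(recent) >= self.min_orders


RULE = ActivityRule()  # one rule, reused by every report and job

orders = {
    "alice": [date(2024, 5, 2), date(2024, 5, 10), date(2024, 5, 28)],
    "bob": [date(2024, 1, 3), date(2024, 5, 20)],
}
as_of = date(2024, 5, 31)
active = sorted(c for c, ds in orders.items() if RULE.is_active(ds, as_of))
print(active)  # ['alice']
```

Because the rule is frozen and shared, changing the business definition means changing one place, and every consumer picks up the same knowledge.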

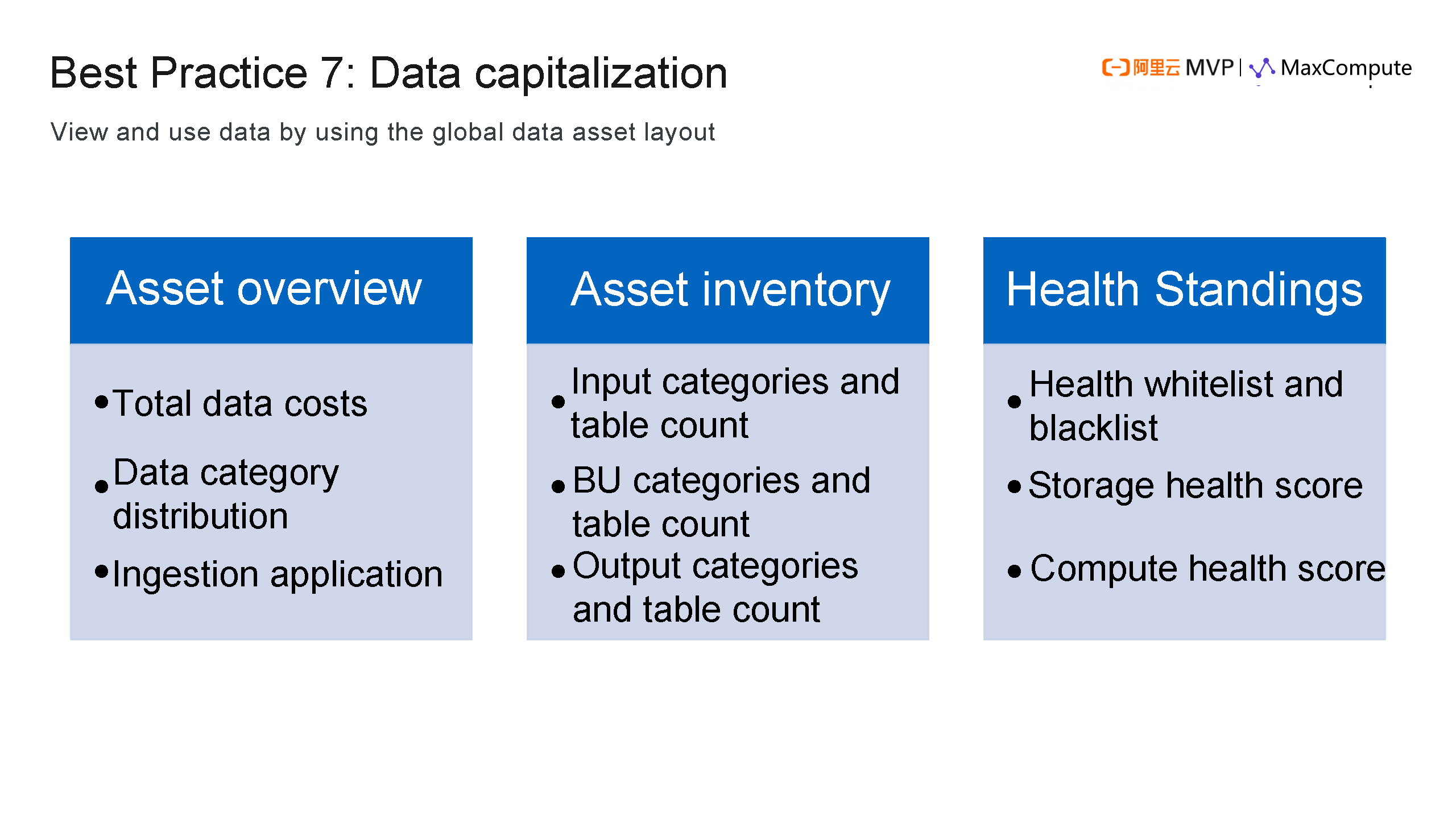

Another approach is data capitalization, that is, managing data as an asset. Without it, you will never understand your own value. What are you actually helping others achieve? You are helping them reduce costs. As a data developer, you clearly see your value once the data is capitalized. What does data capitalization give you? It provides a basis for governance. When you list all expenditures, you can see where too much money is spent, which areas are underfunded, and whether spending is healthy. With this information, we can perform data operations.
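Data capitalization starts with knowing what each data asset costs. The Python sketch below aggregates hypothetical job cost records by table and by owning team so that the largest spenders surface first. The record layout, table names, and cost units are all assumptions; in practice the input would come from your platform's billing or metering data.

```python
from collections import Counter

# Hypothetical job cost records: (table, owning team, cost in arbitrary units).
job_costs = [
    ("ods_orders", "ingest", 120.0),
    ("dwd_orders", "warehouse", 340.0),
    ("ads_daily_report", "bi", 45.0),
    ("dwd_orders", "warehouse", 210.0),
    ("tmp_adhoc_0412", "bi", 500.0),
]


def cost_by(records, field):
    """Sum costs grouped by 'table' or 'team', largest first."""
    index = {"table": 0, "team": 1}[field]
    totals = Counter()
    for record in records:
        totals[record[index]] += record[2]
    return totals.most_common()


print(cost_by(job_costs, "table"))
print(cost_by(job_costs, "team"))
```

Even in this toy data, a temporary ad hoc table is the second-largest cost, which is the kind of finding that makes governance decisions concrete.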

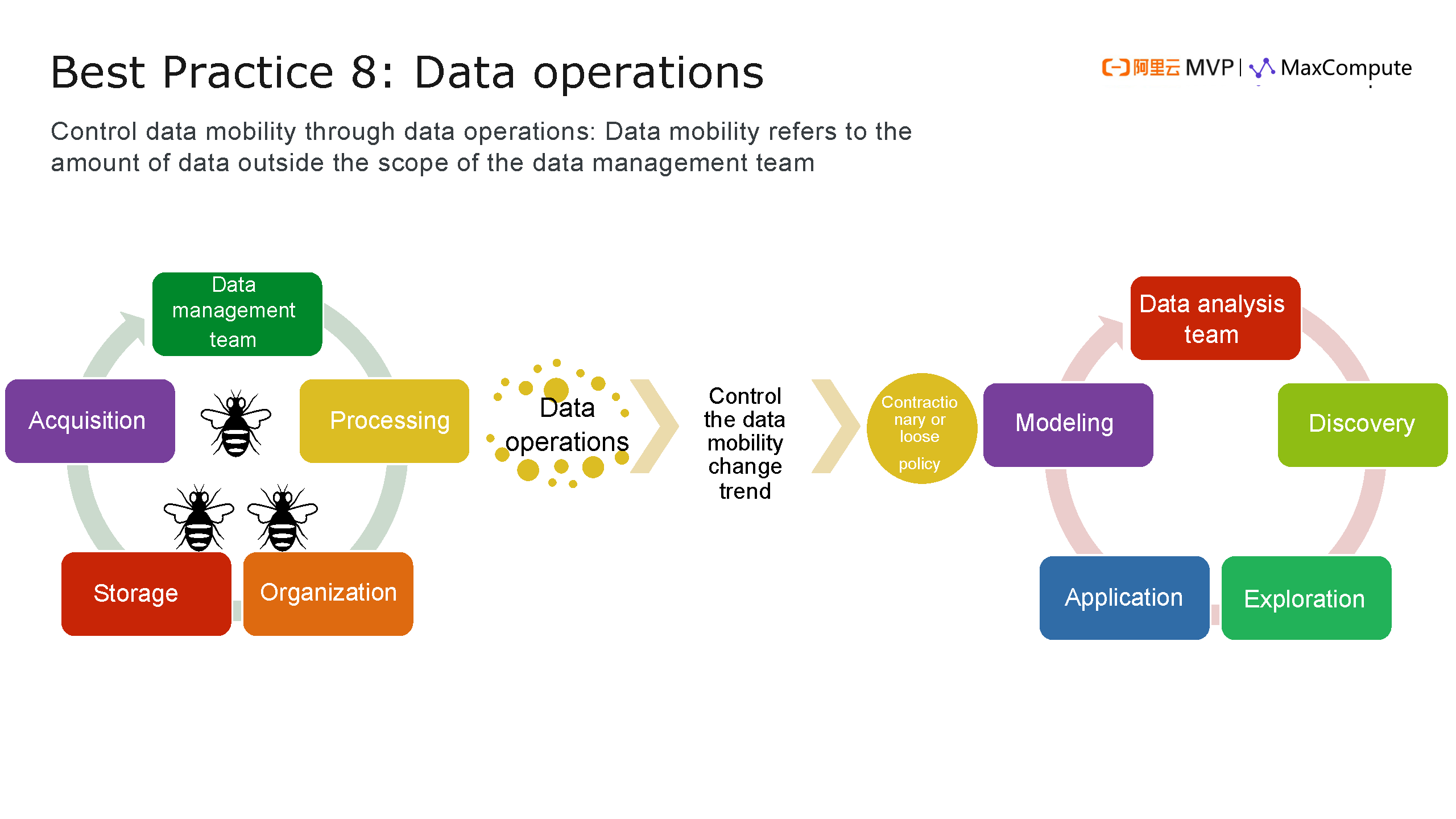

Data operations are often confused with data-driven operations, but they are two completely different concepts. Data-driven operations are business operations driven by and based on data, whereas data operations are operations performed on the data itself. To understand this better, consider a monetary concept: liquidity. We need to improve data liquidity, that is, the supply of data available outside the data management team (liquidity is a term from the financial services industry). When preparing this talk, I drew on a financial model, because the financial services industry has the most experience in solving liquidity problems.

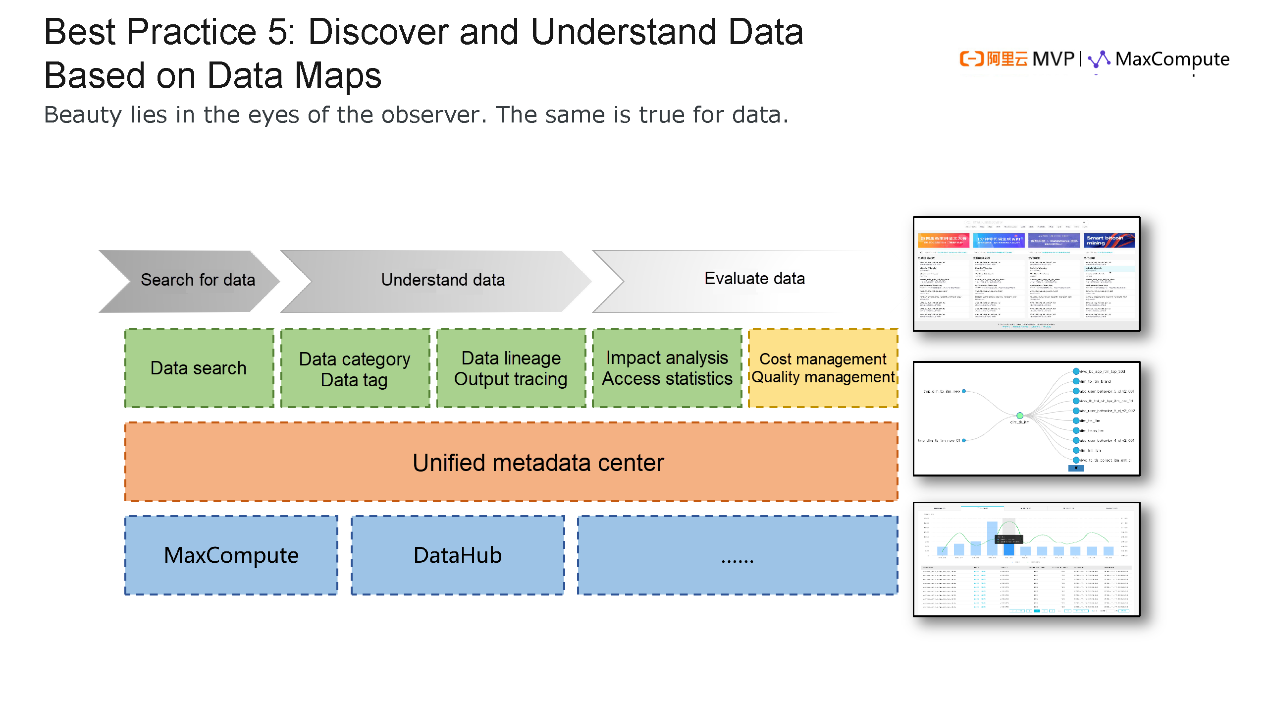

In the diagram, on the supply side, our data management team works as hard as bees to obtain, process, organize, and store data. All the bees are hardworking. But where is the owl? Sorry, I do not know why the owl is missing from the diagram. A data analyst should be like an owl with keen insight, not a bee. The data analysis phase requires discovery, exploration, application, and modeling. What are the most important things you want to achieve through data operations? To control the trend of data liquidity: who uses your data, and where does it flow? Do you adopt a tight policy or a loose policy to suit your company's current data liquidity? Answering these questions requires data analysis, which can be done either by your data security team or by a dedicated team. If you skip this process, you are exposed to great risks.
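To see who uses your data and where it flows, you can aggregate an access log. This Python sketch uses a hypothetical log of (reading team, table) pairs and a hypothetical ownership map, and lists, for each table, the teams other than the owner that read it. Tables with no outside readers have low liquidity, while widely read tables are where tighter or looser access policies matter most.

```python
from collections import defaultdict

# Hypothetical access log entries: (reading team, table read).
access_log = [
    ("marketing", "dwd_orders"),
    ("finance", "dwd_orders"),
    ("marketing", "dwd_orders"),
    ("risk", "dws_user_profile"),
    ("warehouse", "dws_user_profile"),
]
# Hypothetical ownership map: table -> owning team.
owners = {"dwd_orders": "warehouse", "dws_user_profile": "warehouse", "ads_kpi": "bi"}


def outside_readers(log, owners):
    """For each owned table, list the teams other than the owner that read it."""
    readers = defaultdict(set)
    for team, table in log:
        readers[table].add(team)
    return {table: sorted(readers.get(table, set()) - {owner})
            for table, owner in owners.items()}


print(outside_readers(access_log, owners))
```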

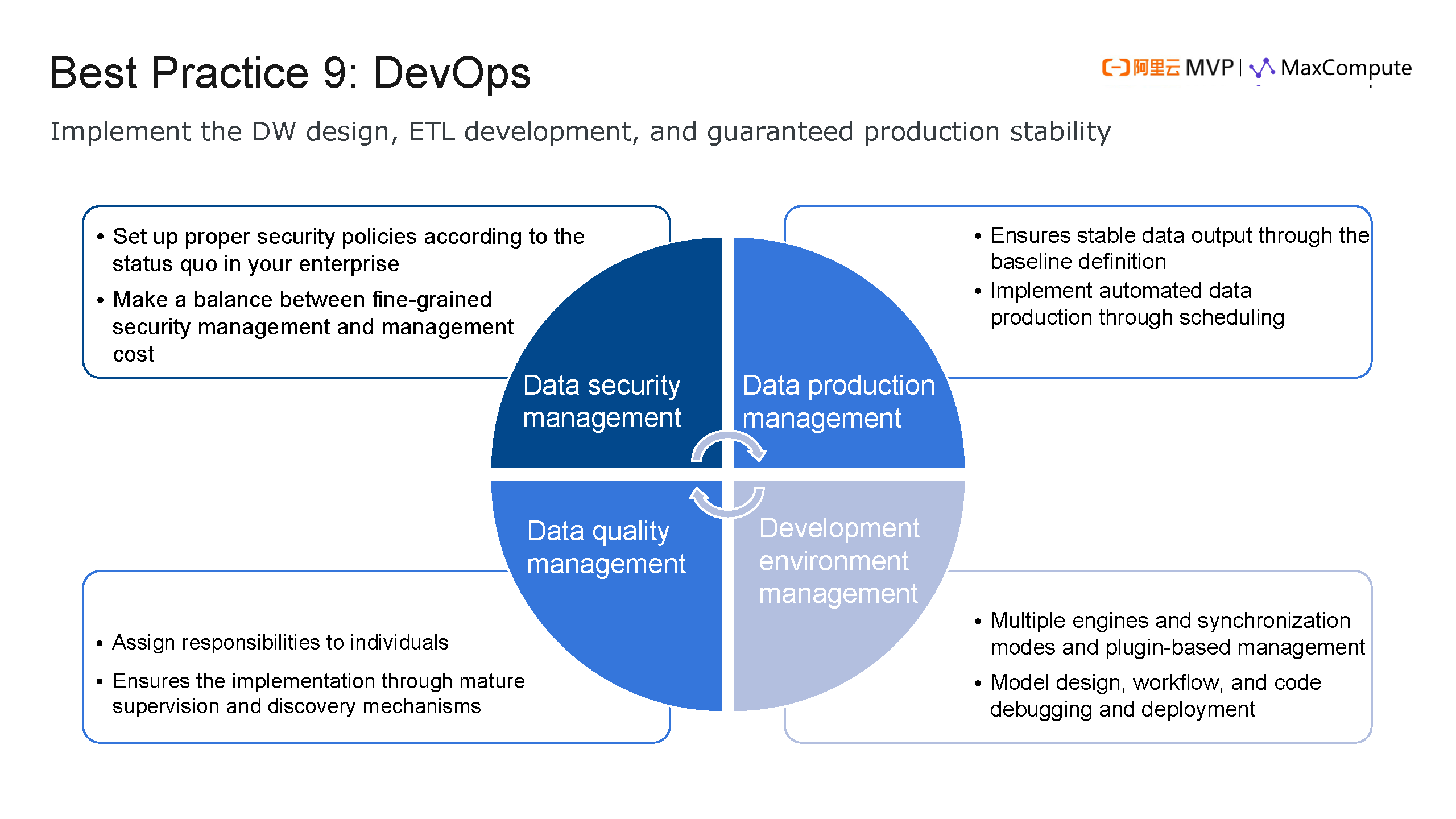

For data security, data production management, data quality management, and development management, we need to do the work reasonably and properly and avoid excessive effort. Take data security as an example. As the MaxCompute product manager, I initially recommended MaxCompute to customers for its fine-grained security management. Later, I learned something from my customers: although fine-grained security management is good, customers will not use it until they really need it, and until then they have good reasons not to adopt it. Whatever kind of management it is, the finer the granularity, the higher the cost. A practical question is whether your enterprise is willing to invest in this area. If you cannot get sufficient investment, you have to limit the data authorization scope to departments and teams, with project-level authorization granularity. You have to strike a reasonable balance between fine-grained security management and management cost. You also need to take the production management baseline, the development environment, and quality control into account, and make sure that each responsibility is assigned to a specific person. In addition, you have to establish a mature supervision mechanism to guarantee implementation and ensure data quality.
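The cost of granularity can be made concrete with a deliberately simplified count: if every principal needs an explicit grant on every object, the number of grants to create and audit multiplies with each finer level. The Python sketch below uses hypothetical sizes and a hypothetical `grants_needed` helper; real systems reduce the count with roles and groups, so treat it as an upper bound on management effort, not a measurement.

```python
def grants_needed(principals, projects, tables_per_project, columns_per_table, level):
    """Upper-bound count of explicit grants if every principal needs access to every
    object at the chosen granularity (a hypothetical, simplified cost model)."""
    objects_at_level = {
        "project": projects,
        "table": projects * tables_per_project,
        "column": projects * tables_per_project * columns_per_table,
    }
    return principals * objects_at_level[level]


for level in ("project", "table", "column"):
    # Hypothetical sizes: 50 users, 4 projects, 200 tables each, 30 columns per table.
    print(level, grants_needed(50, 4, 200, 30, level))
```

The jump from project level to column level is a factor of tables per project times columns per table, which is why project-level authorization is often the pragmatic starting point.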

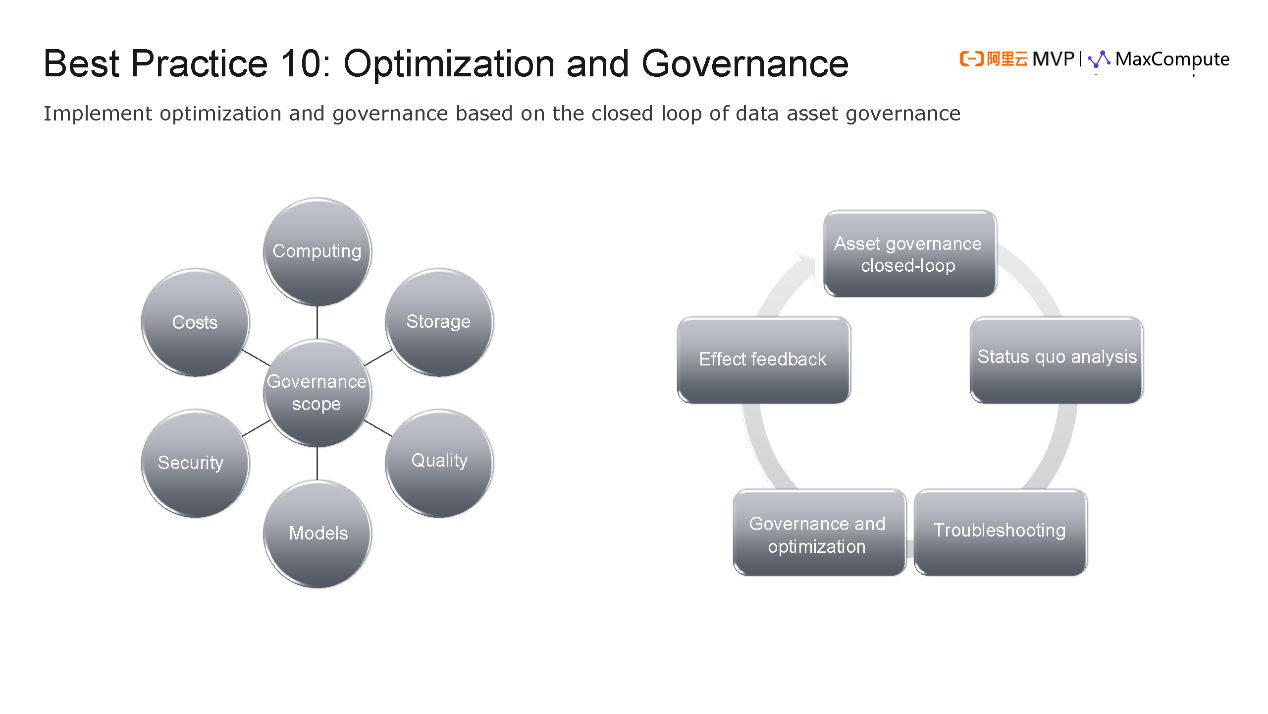

Optimization and governance are often two challenging tasks. Consider cities first. In the past, we used the term "city management"; today we speak more of city governance. When a city is small, management is enough to keep it running. When it grows larger, governance is required. What problems does city governance mainly solve? Three: dirt, clutter, and disorder. Similarly, our system may become dirty, cluttered, and messy when it grows large enough. Therefore, we need comprehensive governance covering the computing layer, storage, quality, modeling, security, and cost. Among these, cost governance is the most effective. The closed loop of governance includes many tasks: status quo analysis, problem diagnosis, governance, optimization, and effectiveness feedback. To fundamentally eliminate the dirty, cluttered, and messy situation, we have to carry out all of these tasks.
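The first two steps of the governance loop, status quo analysis and problem diagnosis, can be as simple as listing tables that nobody has touched for a while. The Python sketch below does this for cost governance using hypothetical table metadata (size and last access date); the table names, sizes, and the 90-day threshold are assumptions. The resulting list would feed the governance and optimization steps, and re-running it after cleanup provides the effectiveness feedback.

```python
from datetime import date

# Hypothetical table metadata: name -> (size in GB, last access date).
tables = {
    "dwd_orders": (820.0, date(2024, 6, 1)),
    "tmp_adhoc_0412": (310.0, date(2024, 1, 15)),
    "ods_clicks_bak": (1500.0, date(2023, 11, 2)),
}


def idle_tables(meta, as_of, idle_days):
    """Tables not accessed for more than idle_days, largest first."""
    idle = [name for name, (_, last_access) in meta.items()
            if (as_of - last_access).days > idle_days]
    return sorted(idle, key=lambda name: meta[name][0], reverse=True)


candidates = idle_tables(tables, as_of=date(2024, 6, 30), idle_days=90)
reclaimable_gb = sum(tables[name][0] for name in candidates)
print(candidates, reclaimable_gb)  # ['ods_clicks_bak', 'tmp_adhoc_0412'] 1810.0
```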

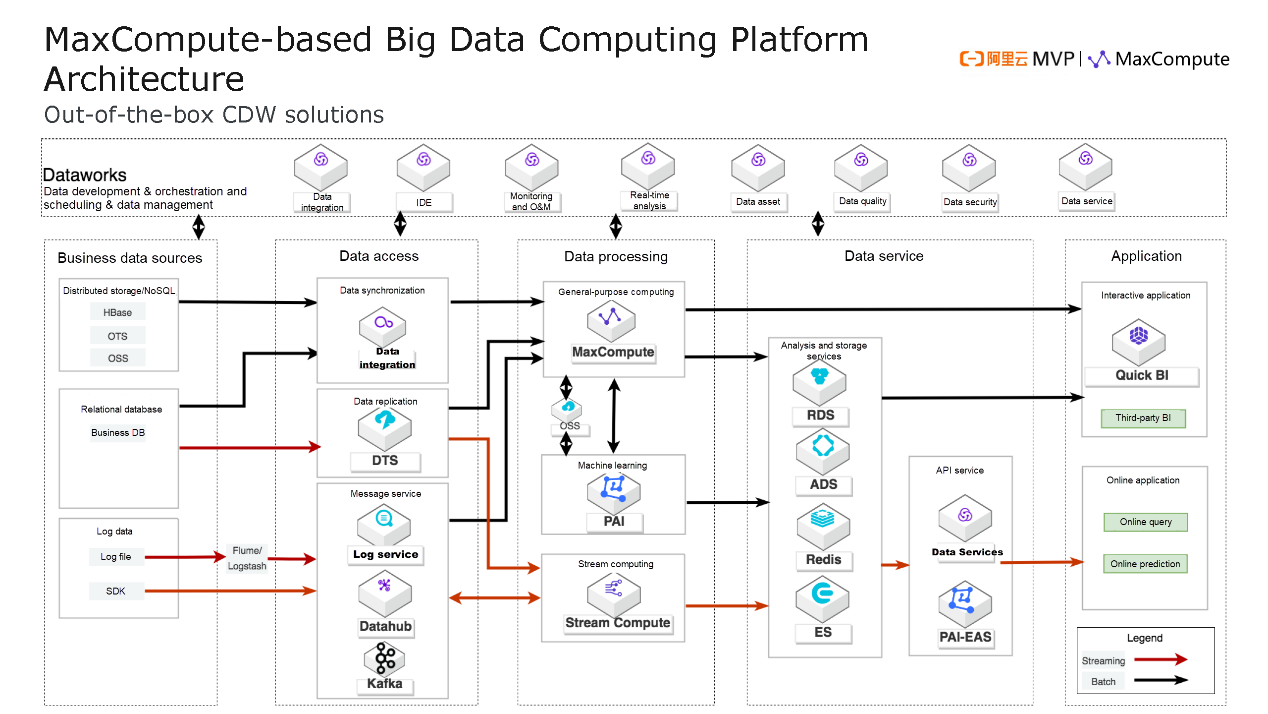

Now, let's see how to build a big data platform based on MaxCompute. For data development, we use the DataWorks platform. DataWorks is a complete big data solution that covers everything from connecting to business data sources, through data access, data processing, and data services, to applications. On this big data platform, we emphasize a small core with large peripherals. In fact, data processing accounts for more than 80% of the total cost of a big data platform, so it is essential to simplify data processing. Based on this principle, Alibaba has developed a complete set of solutions: MaxCompute for data processing, PAI for machine learning, and StreamCompute for stream computing.

We discussed data before, and now we are talking about the platform. This diagram reflects the aforementioned design concept that data breeds the platform and vice versa. The upper layer is based on data and the underlying layer is platform-centric. The combination of the data and platform layers gives birth to our big data processing platform.

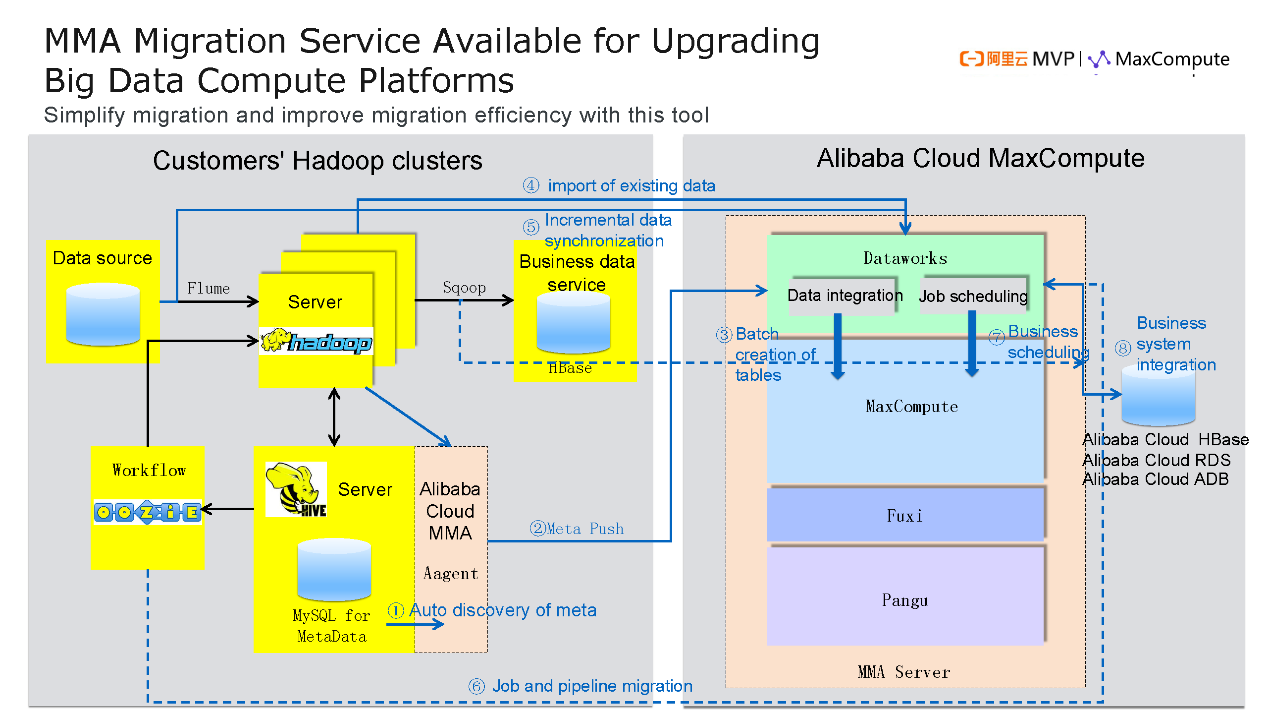

Our customer analysis shows that many customers are using Hadoop. Therefore, we released the MMA migration tool and services to help migrate Hadoop clusters to Alibaba Cloud MaxCompute and DataWorks, as well as to PAI for machine learning and StreamCompute for stream computing, speeding up migration and improving efficiency and precision.

In summary, behind our methodology and its implementation is my figure 8 ring theory. Data is on one side and the platform is on the other side. On the platform side, on-demand tailored solutions must be supported. The entire process is implemented in stages. We need to pay attention to performance, cost, flexible scalability, data security, and O&M complexity. On the data side, we need to focus on and support end-to-end data connections, global data, and data capitalization.

I hope our guidance and technical solutions can help you explore new best practices based on the data warehouse on the cloud and avoid detours. This is all that I want to share today. Thank you for your time!
