This article is compiled from an interview with Wu Gang, Senior Technical Expert at Alibaba Cloud, and introduces the Apache ORC open-source project. Wu Gang discussed the differences between the two mainstream open-source storage formats, ORC and Parquet, and explained why MaxCompute chose ORC.

The following content is based on his speech and the related PowerPoint slides.

Wu Gang is a senior technical expert at the Alibaba Computing Platform Department and a PMC member of ORC (a top-level Apache open-source project). He is mainly responsible for storage-related work on the MaxCompute platform. Previously, he worked at Uber headquarters on Spark and Hive.

As described on the official Apache ORC website, Apache ORC is the fastest and smallest column-based storage file format in the Hadoop ecosystem. Its three main features are support for ACID transactions, built-in indexes, and a variety of complex types.

Apache ORC has many adopters, including well-known open-source projects such as Spark, Presto, Hive, and Hadoop. In 2017, the Alibaba MaxCompute technical team also began participating in the Apache ORC project and adopted ORC as one of MaxCompute's built-in file storage formats.

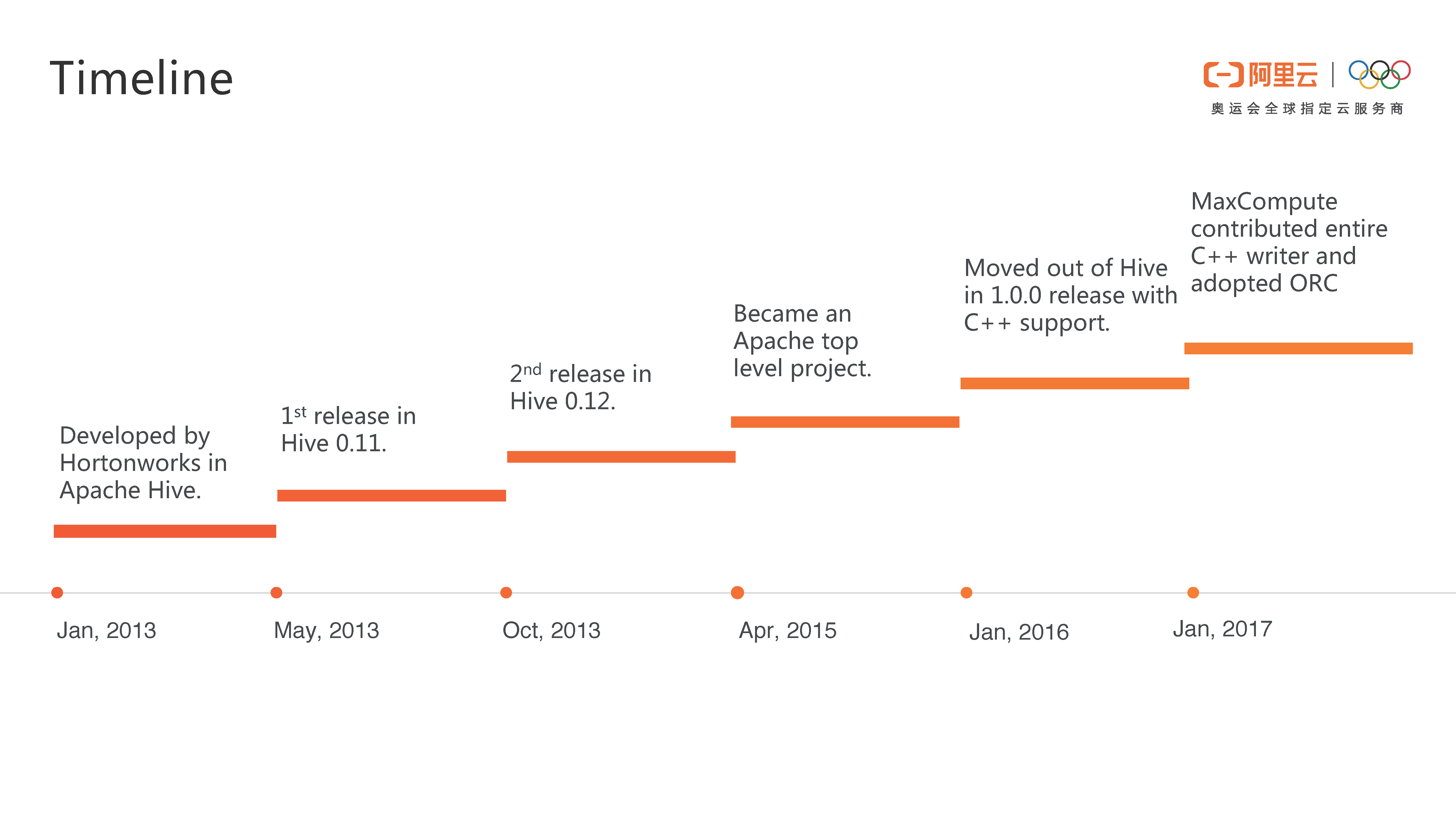

The figure shows the general development history of the Apache ORC project. In early 2013, Hortonworks began developing ORC to replace the RCFile format. After two iterations, ORC became a top-level Apache project and was split out of Hive into a separate project. Since January 2017, the Alibaba Cloud MaxCompute team has continuously contributed code to the ORC community and has made ORC one of MaxCompute's built-in file formats.

The Alibaba MaxCompute technical team has made significant contributions to the Apache ORC project, such as developing a complete C++ ORC Writer, fixing several critical bugs, and greatly improving ORC performance. The team has submitted more than 30 patches to the project, totaling over 15,000 lines of code, and Alibaba continues to contribute. The team also includes three ORC contributors, one of whom is a PMC member and one a Committer. At the 2017 Hadoop Summit, Owen O'Malley of the ORC project even dedicated a slide in his presentation to acknowledging Alibaba's contributions.

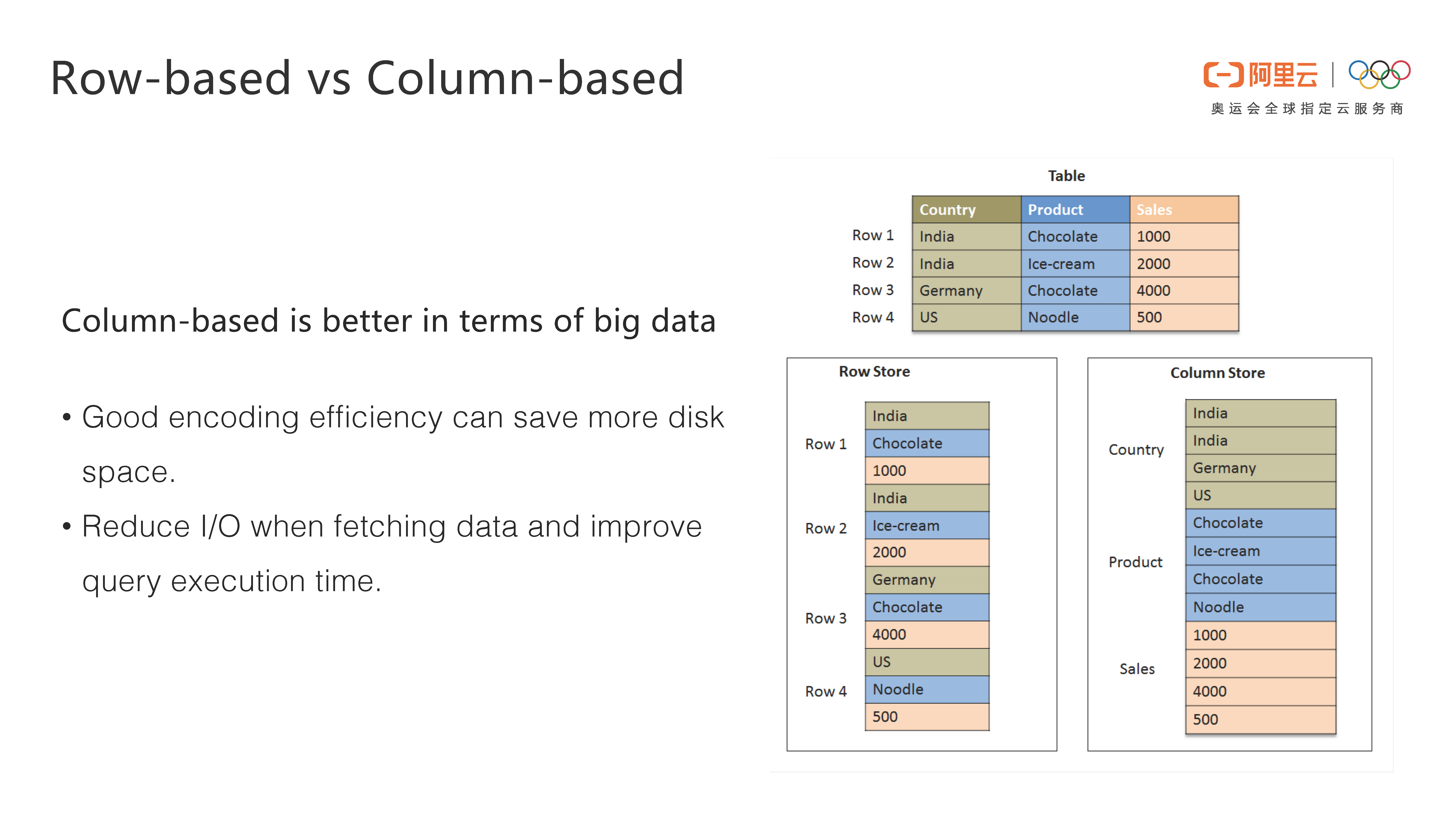

There are two mainstream approaches to file storage: row-based and column-based. Row-based storage writes each row in sequence: the first row, then the second row, and so on. Column-based storage writes each column of the table in sequence: all values of the first column, then all values of the second column, and so on. Big data queries typically need only a subset of the columns, so with column-based storage only a small amount of data has to be read, which saves a great deal of disk and network I/O. In addition, values within the same column are similar and highly redundant, so column-based storage achieves higher compression ratios and saves substantial disk space. For these reasons, MaxCompute chose column-based storage.
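To make the I/O argument concrete, the following minimal Python sketch stores the same toy table in both layouts and counts how many stored values must be scanned to read a single column. The table, its column names, and the helper functions are hypothetical illustrations, not ORC or MaxCompute code.

```python
from typing import Dict, List, Tuple

# A tiny hypothetical table: three rows, three columns.
COLUMNS = ["user_id", "city", "amount"]
ROWS: List[Tuple[int, str, float]] = [
    (1, "Hangzhou", 10.0),
    (2, "Beijing", 25.5),
    (3, "Hangzhou", 7.25),
]

def row_layout(rows) -> list:
    """Row-based: all values of row 1, then all values of row 2, and so on."""
    return [value for row in rows for value in row]

def column_layout(rows, columns) -> Dict[str, list]:
    """Column-based: all values of one column are stored contiguously."""
    return {name: [row[i] for row in rows] for i, name in enumerate(columns)}

def read_column_row_based(rows, columns, name):
    """Returns (values, number of stored values scanned)."""
    stored = row_layout(rows)
    width, idx = len(columns), columns.index(name)
    # Rows are stored back to back, so the wanted values are interleaved
    # with every other column and the whole sequence has to be scanned.
    values = stored[idx::width]
    return values, len(stored)

def read_column_column_based(rows, columns, name):
    """Returns (values, number of stored values scanned)."""
    values = column_layout(rows, columns)[name]  # one contiguous run
    return values, len(values)
```

Reading `amount` scans all 9 stored values in the row layout but only 3 in the column layout; the gap grows with the number of columns in the table.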

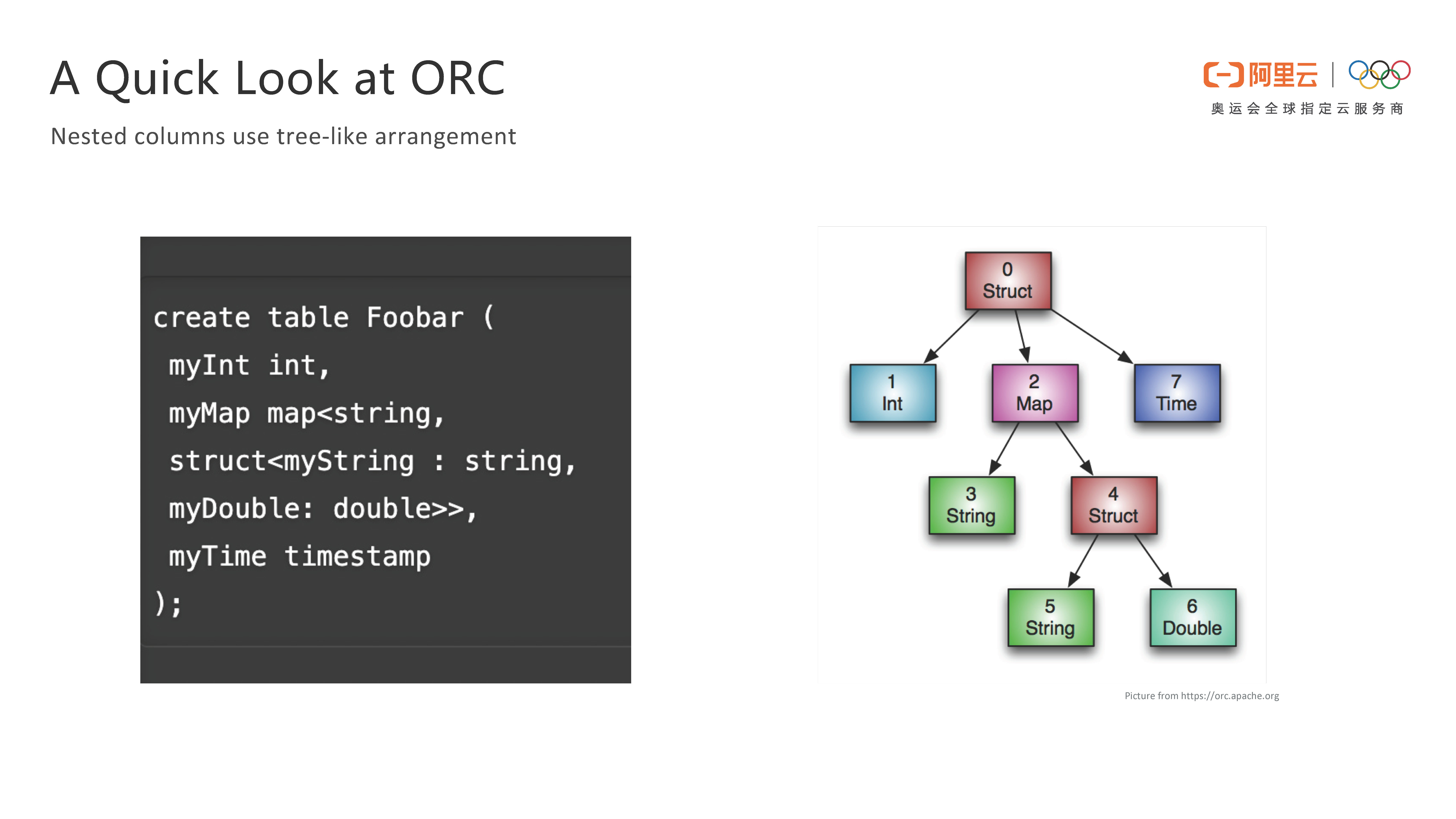

ORC models its type system as a tree. A complex type such as Struct has one or more child nodes, Map has exactly two child nodes (key and value), List has exactly one child node, and primitive types are leaf nodes. As the figure shows, the table schema on the left maps directly onto the tree on the right, which is simple and intuitive.
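As a sketch of this tree model, the Python classes below build a schema, print it in ORC's type-string syntax (where a list is written `array<...>`), and number the nodes. To our understanding, the ORC specification assigns column IDs by a pre-order traversal of the type tree, with the root struct as column 0; the `OrcType` class and `assign_ids` function are hypothetical and are not the API of any ORC library.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class OrcType:
    kind: str                                   # "struct", "map", "list", or a primitive like "int"
    children: List["OrcType"] = field(default_factory=list)
    field_names: List[str] = field(default_factory=list)  # used only by struct
    column_id: Optional[int] = None

    def to_string(self) -> str:
        if self.kind == "struct":
            parts = [f"{n}:{c.to_string()}" for n, c in zip(self.field_names, self.children)]
            return "struct<" + ",".join(parts) + ">"
        if self.kind == "map":
            key, value = self.children          # a map always has exactly two children
            return f"map<{key.to_string()},{value.to_string()}>"
        if self.kind == "list":
            return f"array<{self.children[0].to_string()}>"  # a list has one child
        return self.kind                        # primitive types are leaf nodes

def assign_ids(root: OrcType) -> int:
    """Number every node in pre-order (root = 0); returns the column count."""
    next_id = 0
    stack = [root]
    while stack:
        node = stack.pop()
        node.column_id = next_id
        next_id += 1
        stack.extend(reversed(node.children))   # so children are visited left to right
    return next_id

# Hypothetical schema: struct<id:int,tags:array<string>,attrs:map<string,int>>
schema = OrcType(
    "struct",
    children=[OrcType("int"),
              OrcType("list", [OrcType("string")]),
              OrcType("map", [OrcType("string"), OrcType("int")])],
    field_names=["id", "tags", "attrs"],
)
num_columns = assign_ids(schema)
```

For this schema, `id` becomes column 1, the list `tags` column 2 and its string elements column 3, and the map `attrs` column 4 with its key and value as columns 5 and 6, so every node of the tree, including the complex types, gets its own column.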

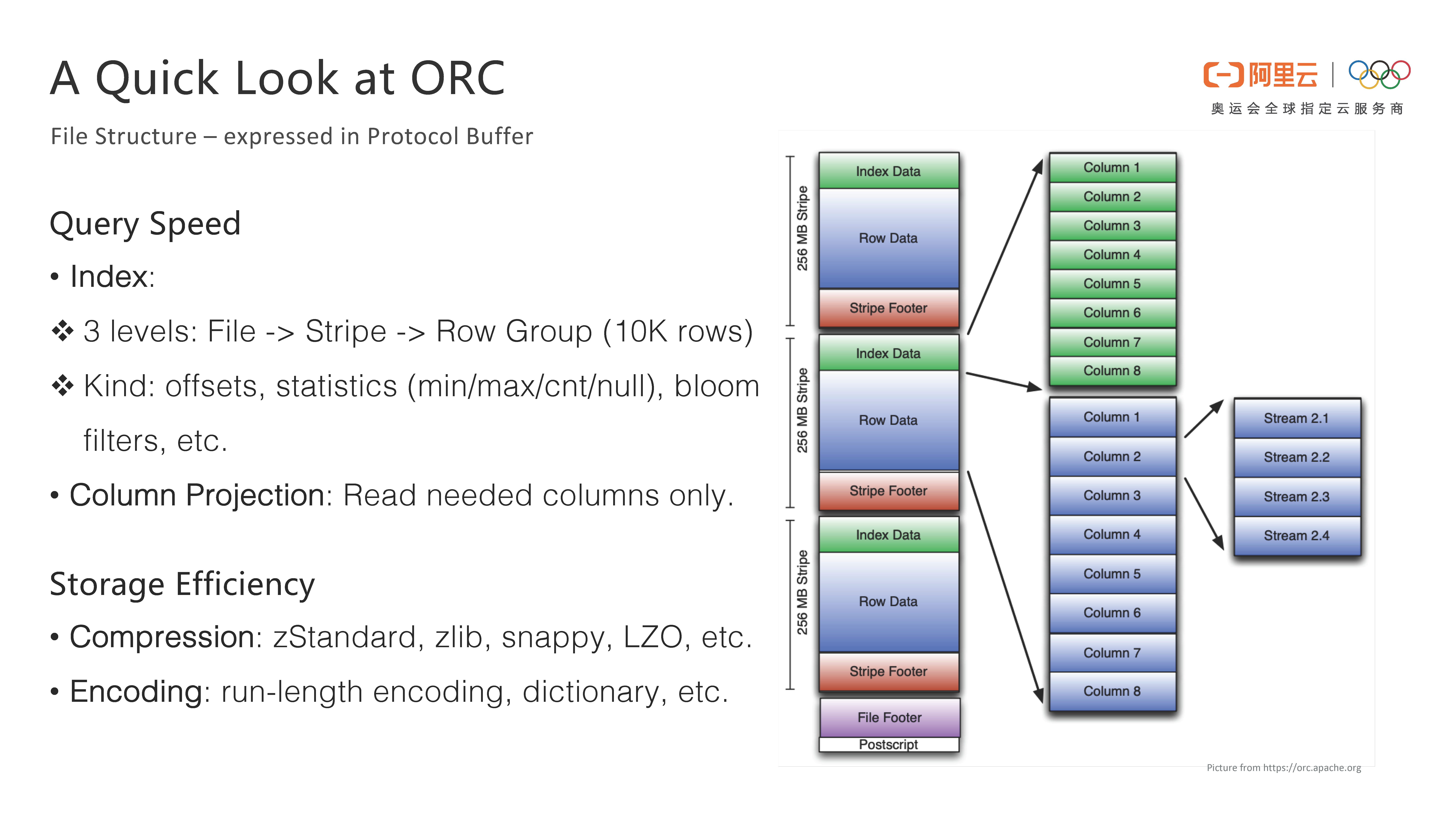

ORC optimizes for two main goals. The first is query speed. ORC splits a file into blocks (stripes) of similar size and stores data column by column inside each block, so the values of the same column are kept together. For this data, ORC maintains lightweight indexes with statistics such as the minimum, maximum, value count, and whether nulls are present. These statistics make it easy to skip data that does not need to be read, which reduces the amount of data transferred. ORC also supports column projection: if a query reads only some columns, the reader returns only those columns, further reducing the amount of data read.
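The following Python sketch shows how min/max statistics let a reader skip row groups. It assumes a toy row group of 4 rows (the example later in this article uses 10,000) and a single integer column; `GroupStats`, `build_stats`, and `groups_to_read` are hypothetical and model only the idea, not ORC's actual index format.

```python
from dataclasses import dataclass
from typing import List, Optional

ROWS_PER_GROUP = 4  # toy value chosen for illustration

@dataclass
class GroupStats:
    count: int               # number of non-null values
    has_null: bool
    minimum: Optional[int]
    maximum: Optional[int]

def build_stats(values: List[Optional[int]], group_size: int = ROWS_PER_GROUP) -> List[GroupStats]:
    """Compute per-row-group statistics while writing."""
    stats = []
    for start in range(0, len(values), group_size):
        group = values[start:start + group_size]
        present = [v for v in group if v is not None]
        stats.append(GroupStats(
            count=len(present),
            has_null=len(present) < len(group),
            minimum=min(present) if present else None,
            maximum=max(present) if present else None,
        ))
    return stats

def groups_to_read(stats: List[GroupStats], lower_bound: int) -> List[int]:
    """Row groups that may satisfy `value > lower_bound`; all others are skipped."""
    return [i for i, s in enumerate(stats)
            if s.maximum is not None and s.maximum > lower_bound]

values = [1, 3, 2, 5,  8, 9, None, 7,  20, 25, 21, 30]
stats = build_stats(values)
# For WHERE value > 10, only the last row group has to be read and decoded.
selected = groups_to_read(stats, 10)
```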

The second goal is storage efficiency. ORC supports common compression codecs such as Zstandard (zstd), zlib, Snappy, and LZO to improve the compression ratio, and it also applies lightweight encodings such as run-length encoding and dictionary encoding.
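ORC's actual integer encodings (RLE versions 1 and 2) are considerably more elaborate, but the basic idea of run-length encoding can be sketched in a few lines of Python: a run of identical consecutive values is stored once, together with its length. The function names are hypothetical.

```python
from typing import List, Tuple, TypeVar

T = TypeVar("T")

def rle_encode(values: List[T]) -> List[Tuple[T, int]]:
    """Collapse runs of identical consecutive values into (value, run_length) pairs."""
    runs: List[Tuple[T, int]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)   # extend the current run
        else:
            runs.append((v, 1))               # start a new run
    return runs

def rle_decode(runs: List[Tuple[T, int]]) -> List[T]:
    """Expand (value, run_length) pairs back into the original sequence."""
    return [v for v, n in runs for _ in range(n)]
```

Run-length encoding pays off for sorted or low-cardinality columns with long runs; general-purpose compression such as zlib or Zstandard is then applied on top of the encoded streams.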

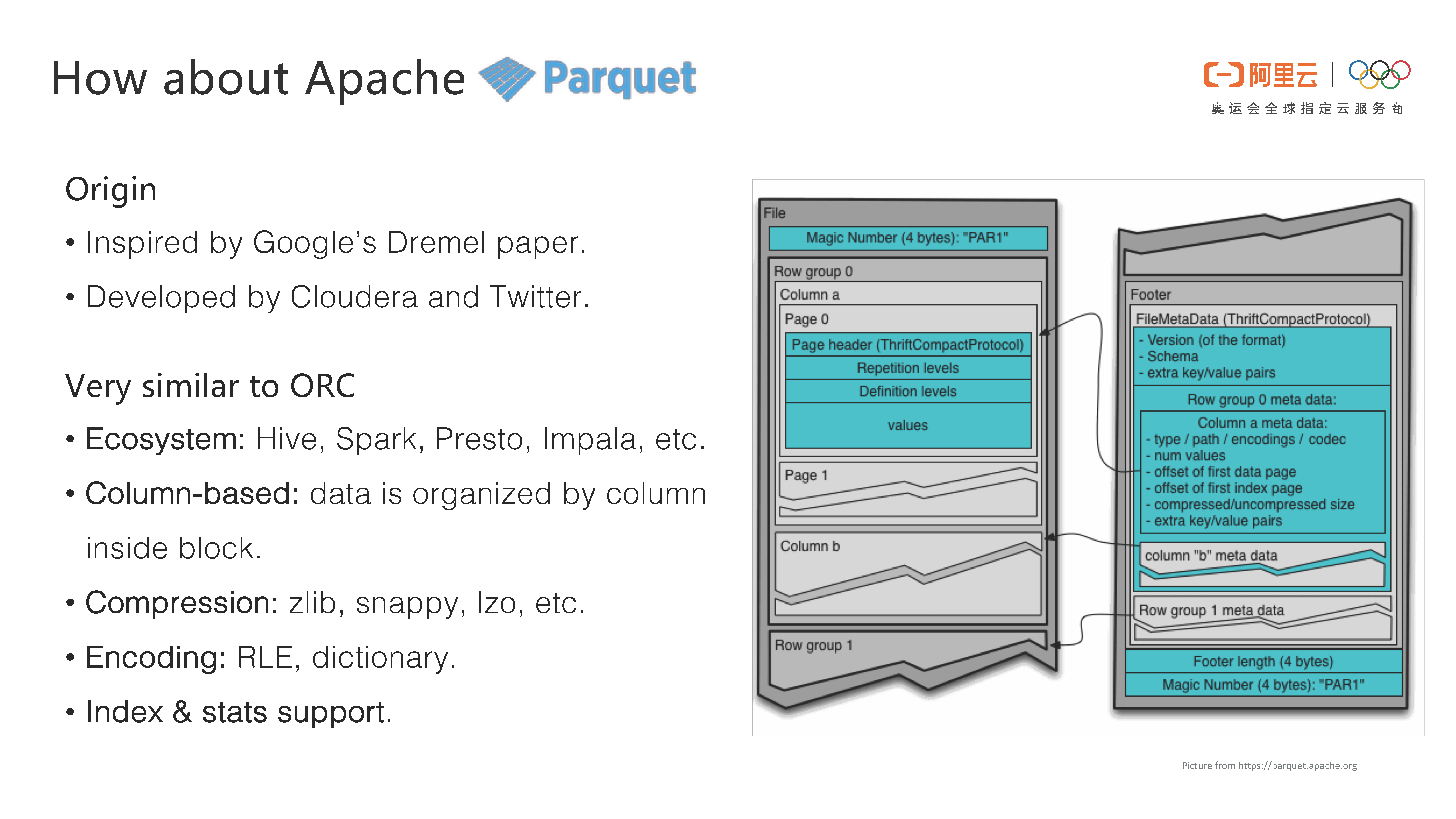

In the open-source world, Apache Parquet is ORC's main point of comparison. Parquet was jointly developed by Cloudera and Twitter and was inspired by Google's Dremel paper. Its design is very similar to ORC's: it splits files into blocks of similar size, stores data column by column within each block, applies common compression and encoding algorithms, and provides lightweight indexes and statistics. Its support across open-source systems such as Spark and Presto is also almost on par with ORC's.

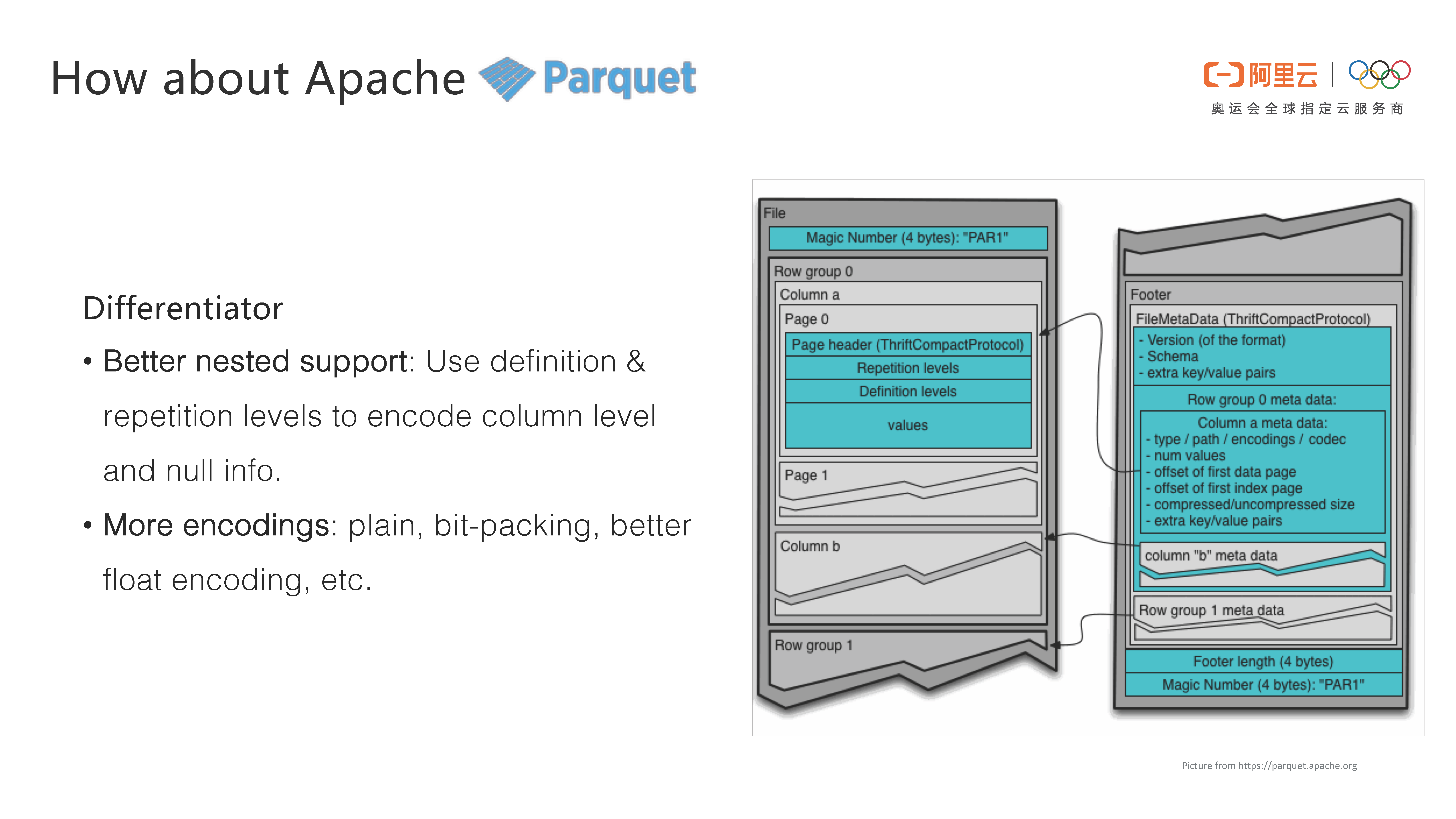

Parquet differs from ORC in two major ways. First, Parquet has more elaborate support for nested types. It uses definition levels and repetition levels to record how deeply each value is nested in a complex type. This design is very complicated; even Google's paper devotes a whole page to the algorithm. In practice, however, most data is not deeply nested, so this complexity is often overkill. Second, Parquet offers more encoding types than ORC, such as plain, bit-packing, and floating-point encodings, so for some data types Parquet achieves a higher compression ratio than ORC.
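To give a feel for what definition and repetition levels record, the Python sketch below shreds a single top-level repeated string field (similar to `repeated binary tag` in a Parquet schema) into (repetition level, definition level, value) triples and reassembles the records. With only one level of repetition, both maximum levels are 1; real Parquet writers must handle arbitrarily nested optional and repeated groups, which is where the complexity comes from. The function names are hypothetical.

```python
from typing import List, Optional, Tuple

Triple = Tuple[int, int, Optional[str]]  # (repetition level, definition level, value)

def shred(records: List[List[str]]) -> List[Triple]:
    """Flatten each record's list of tags into level-annotated triples."""
    out: List[Triple] = []
    for tags in records:
        if not tags:
            # Empty list: a single entry with definition level 0 (field not present).
            out.append((0, 0, None))
            continue
        for i, tag in enumerate(tags):
            # Repetition level 0 starts a new record; 1 continues the current list.
            out.append((0 if i == 0 else 1, 1, tag))
    return out

def assemble(triples: List[Triple]) -> List[List[str]]:
    """Rebuild the records from the triples."""
    records: List[List[str]] = []
    for rep, definition, value in triples:
        if rep == 0:
            records.append([])          # a new record begins
        if definition == 1:
            records[-1].append(value)   # the value is actually present
    return records

records = [["a", "b"], [], ["c"]]
triples = shred(records)
```

Shredding `[["a", "b"], [], ["c"]]` yields `(0, 1, "a")`, `(1, 1, "b")`, `(0, 0, None)`, `(0, 1, "c")`: a repetition level of 0 starts a new record, and a definition level of 0 marks the empty list.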

Using GitHub log data and New York City taxi data, the Hadoop open-source community compared the performance of ORC and Parquet and collected the results.

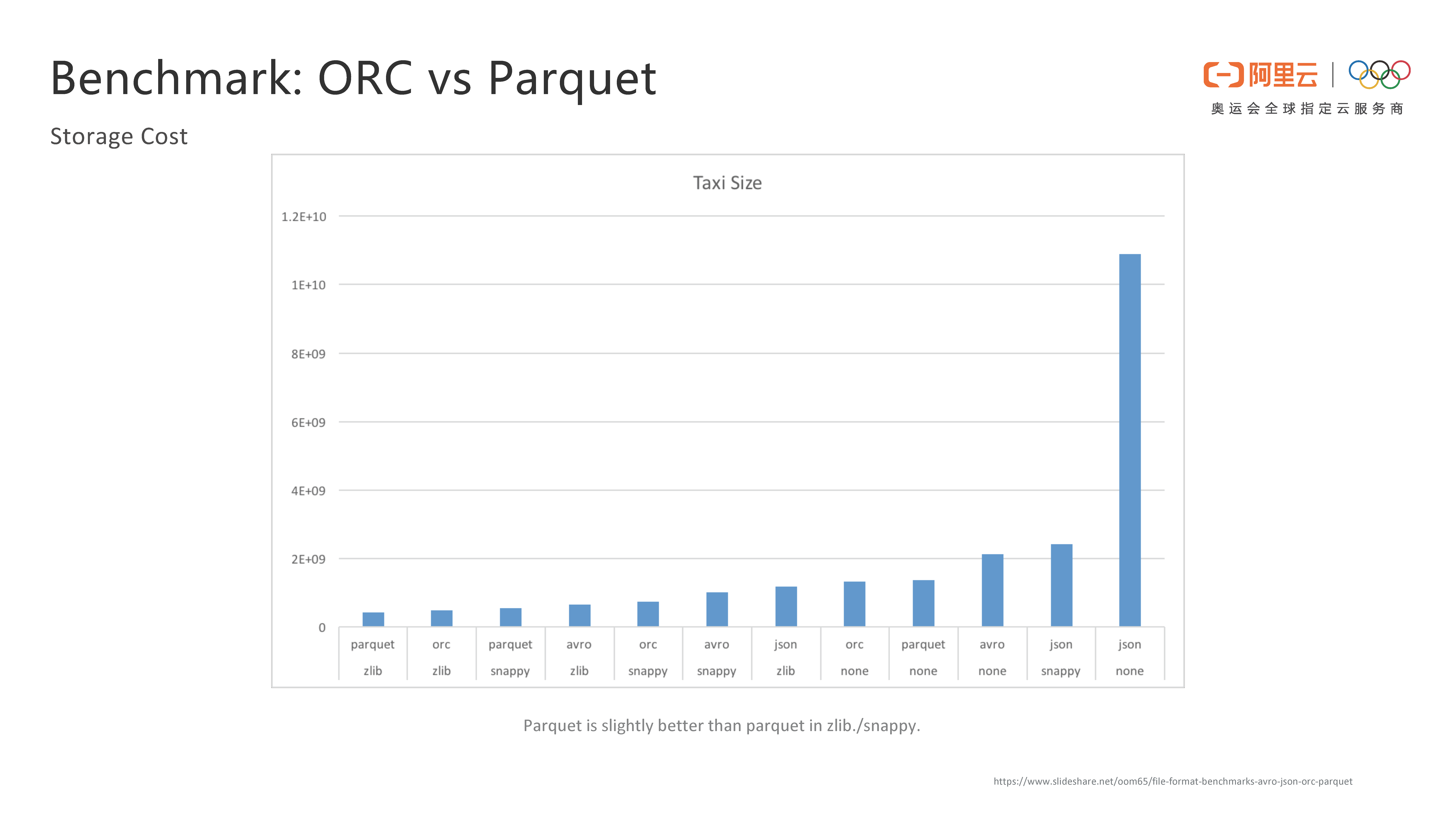

The figure compares the storage efficiency of file formats such as ORC, Parquet, and JSON. As the Taxi Size chart shows, Parquet and ORC have very similar storage efficiency.

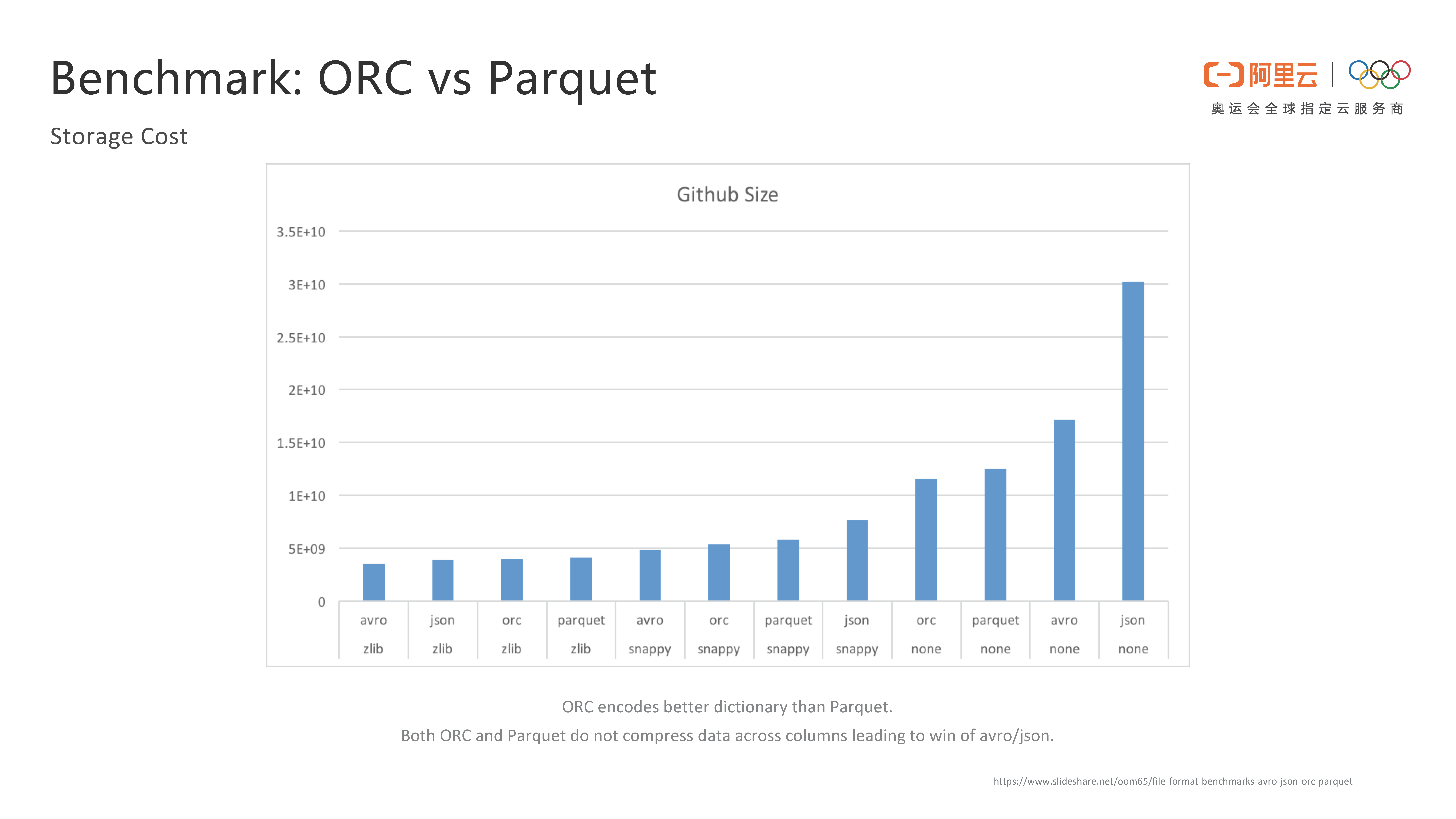

The figure compares storage efficiency on the GitHub project dataset. Here ORC achieves a higher compression ratio than Parquet, so its compressed data is smaller.

In summary, ORC and Parquet have about the same storage efficiency, with ORC holding an advantage on some datasets.

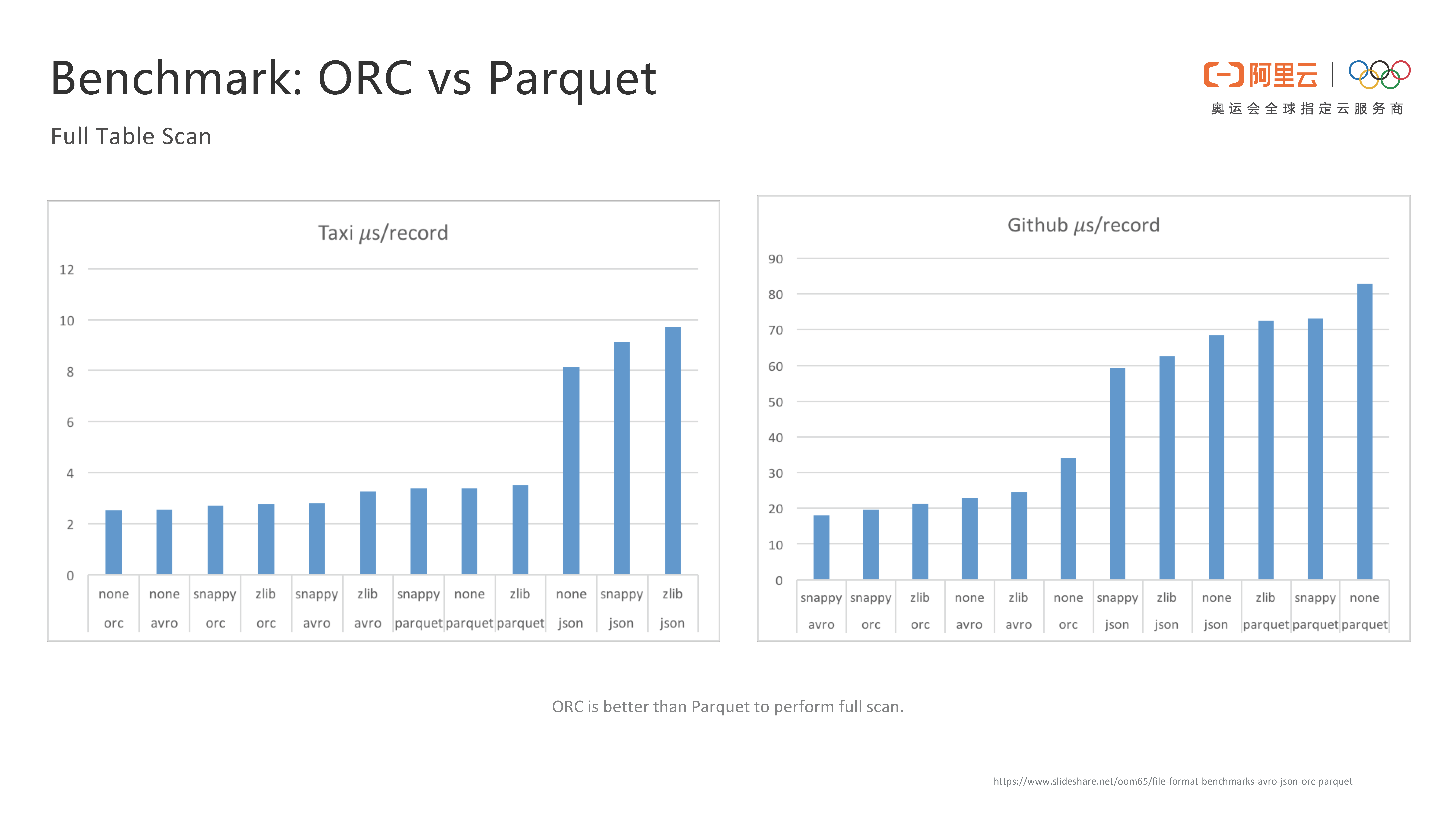

The figure compares the read performance of ORC and Parquet on the two datasets. In general, ORC reads faster than Parquet.

Based on these benchmark comparisons, MaxCompute chose ORC for performance reasons. ORC also has other advantages over Parquet, including a simpler design, better code quality, language independence, and efficient support for multiple open-source projects, as mentioned earlier. In addition, the ORC R&D team is relatively concentrated and its founder has strong control over the project, so requests and ideas raised by Alibaba receive quick responses and strong support, which has helped Alibaba take a leading role in the community.

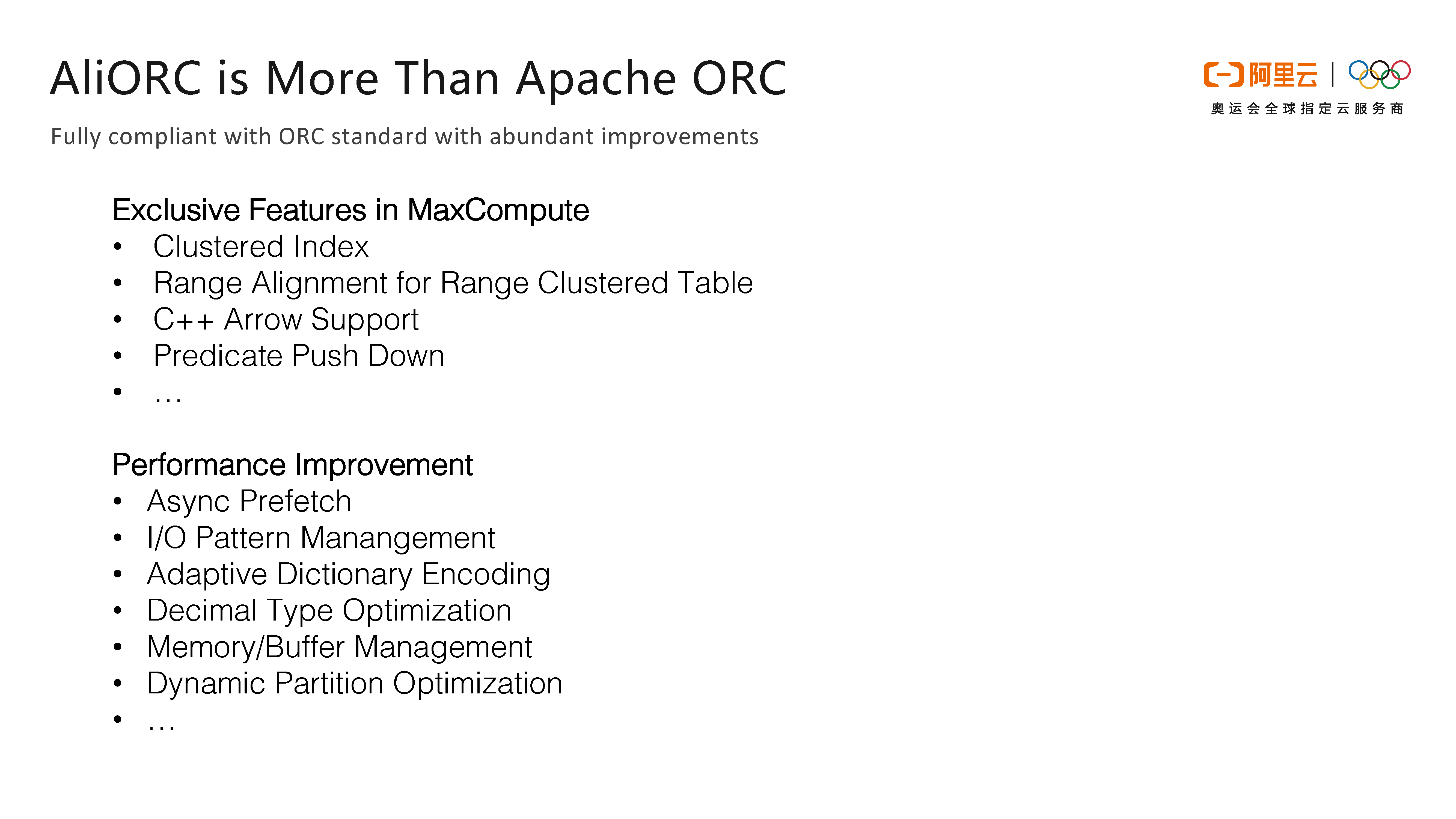

AliORC is a deeply optimized file format based on open-source Apache ORC. Its primary goal is full compatibility with open-source ORC, which makes it easy for users to adopt. AliORC improves on open-source ORC in two areas. First, it adds extended features such as Clustered Index support, C++ Arrow support, and predicate push-down. Second, it adds performance optimizations such as Async Prefetch, I/O mode management, and adaptive dictionary encoding.

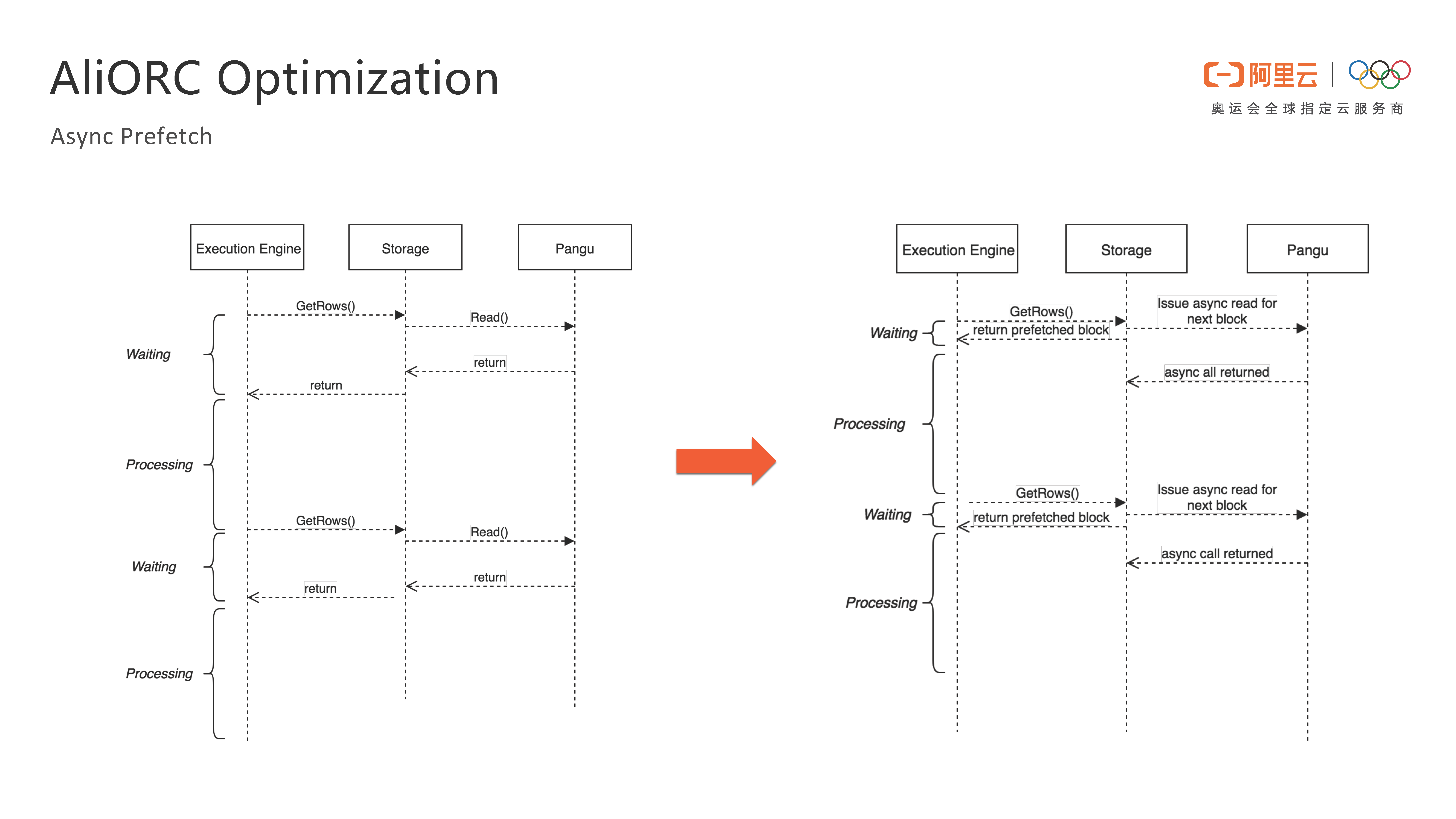

Here are several AliORC optimizations that open-source ORC does not have. The first is Async Prefetch. The traditional way to read a file is to fetch the raw data from the underlying file system first and then decompress and decode it. The first step is I/O-intensive and the second is CPU-intensive, yet they run without any parallelism, which needlessly lengthens end-to-end time and wastes resources. AliORC overlaps reading from the file system with decompression and decoding by making all disk reads asynchronous: all read requests are issued in advance, and when a piece of data is actually needed, the reader checks whether the corresponding asynchronous request has already returned. If it has, decompression and decoding start immediately without waiting on the disk, which greatly increases parallelism and reduces the time needed to read a file.
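The pattern can be sketched in Python with `concurrent.futures`: every read is submitted up front, and each chunk is decompressed (here with `zlib`) as soon as it is needed, while later reads are still in flight. The in-memory `CHUNKS` list and the `read_chunk` function are hypothetical stand-ins for the underlying file system; AliORC itself is implemented in C++, and this only illustrates the idea, not its code.

```python
import time
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Hypothetical stand-in for on-disk data: one compressed chunk per column.
CHUNKS = [zlib.compress(f"column-{i} ".encode() * 100) for i in range(4)]

def read_chunk(index: int) -> bytes:
    """Simulated I/O-bound read from the file system."""
    time.sleep(0.01)
    return CHUNKS[index]

def read_with_prefetch(indices: List[int]) -> List[bytes]:
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Issue every read request up front, before any decompression starts.
        futures = [pool.submit(read_chunk, i) for i in indices]
        results = []
        for future in futures:
            # Returns at once if the read has already completed; otherwise
            # this blocks, and the read effectively becomes synchronous.
            raw = future.result()
            # Decompression here overlaps with reads that are still in flight.
            results.append(zlib.decompress(raw))
        return results
```

In a sequential reader, each chunk's read latency would be paid before its decompression starts; here all four reads are already in progress while the first chunk is being decompressed.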

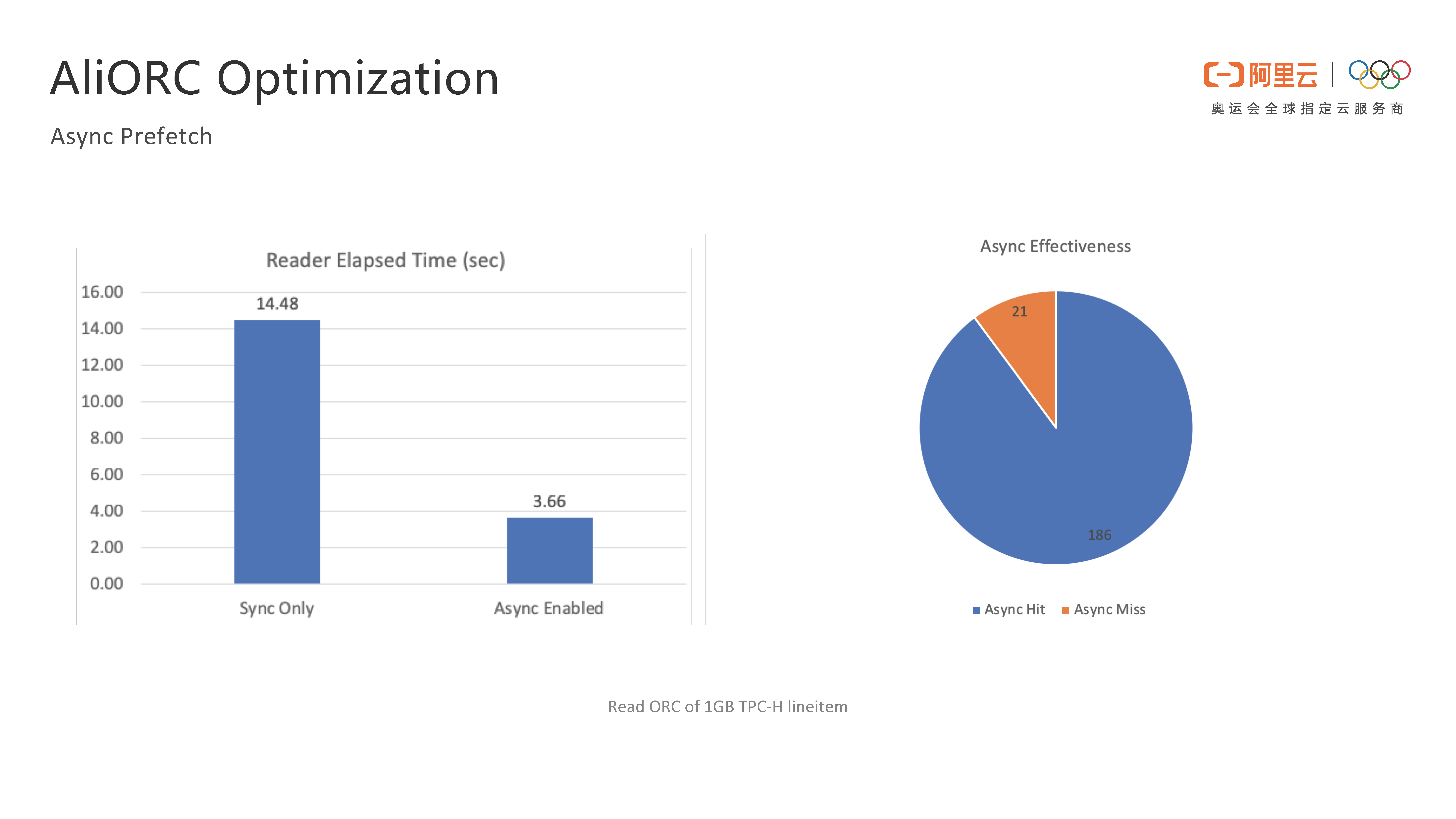

The figure compares performance before and after enabling Async Prefetch. Without Async Prefetch, reading a file takes 14 seconds; with it, the same read takes only 3 seconds, more than four times faster. Although all read requests are issued asynchronously, a request whose data has not arrived by the time it is needed effectively becomes synchronous. The pie chart on the right shows that in practice more than 80% of asynchronous requests are effective.

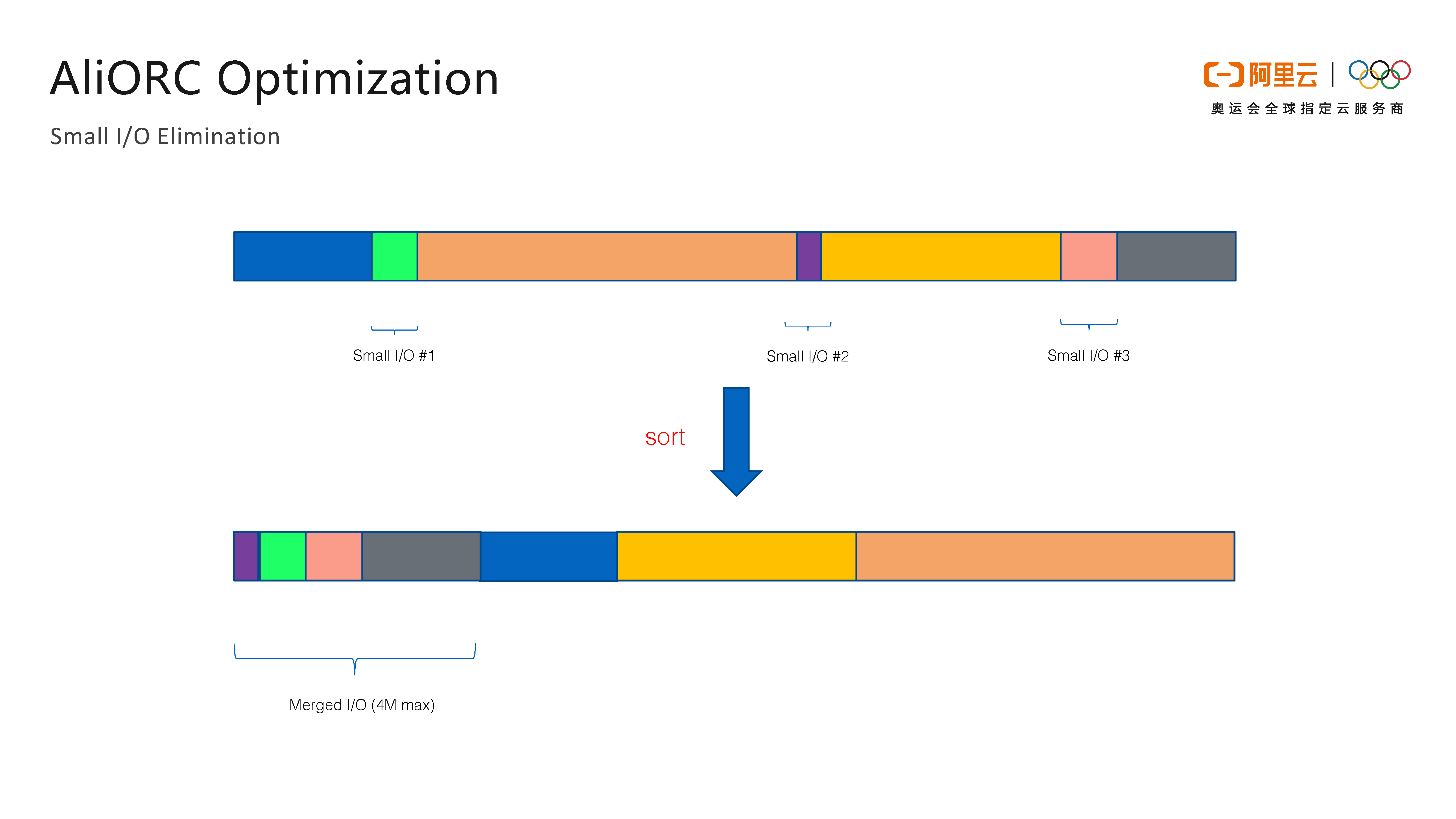

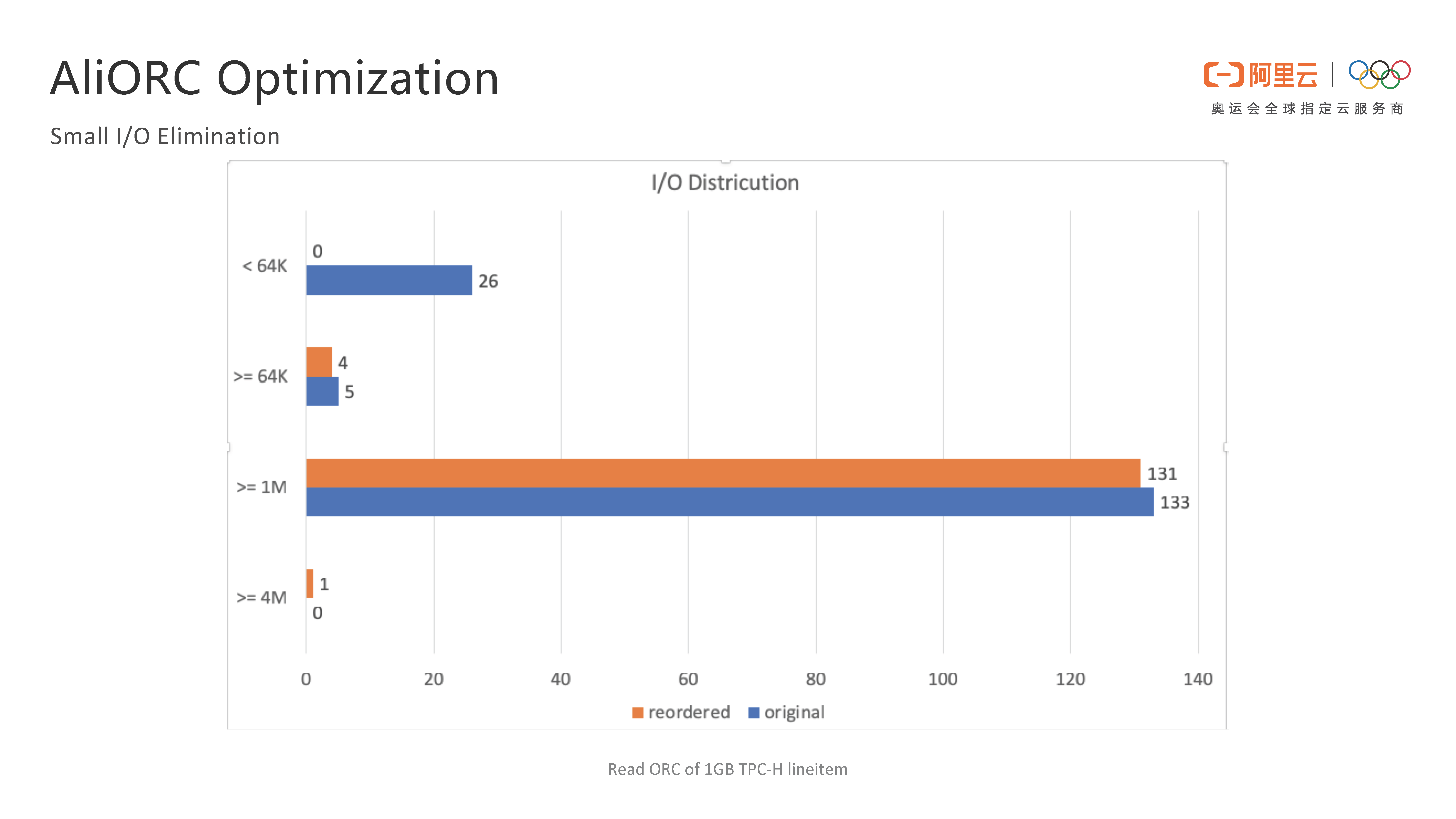

The second AliORC optimization is Small I/O Elimination. In an ORC file, the sizes of different columns vary widely, but data is read from disk one column at a time. As a result, for columns with little data, the network I/O overhead is large relative to the amount of data read. To eliminate these small I/Os, the AliORC Writer sorts columns by data size and places the small columns next to each other, so the Reader can fetch them together as one large I/O block. This not only reduces the number of small I/Os but also greatly improves parallelism.
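The writer-side layout and reader-side coalescing can be sketched in Python as follows. The 64 KB threshold for a "small" read, the 1 MB cap on a merged read, and the column names and sizes are all assumptions chosen for illustration, not AliORC's actual parameters.

```python
from typing import Dict, List, Tuple

SMALL_IO = 64 * 1024       # reads below this size count as "small" (assumption)
MAX_BATCH = 1024 * 1024    # upper bound on one coalesced read (assumption)

Stream = Tuple[str, int, int]          # (column name, offset, size)
Request = Tuple[int, int, List[str]]   # (offset, length, columns covered)

def writer_layout(sizes: Dict[str, int]) -> List[Stream]:
    """Writer side: lay columns out in ascending size order so small ones sit together."""
    layout: List[Stream] = []
    offset = 0
    for name, size in sorted(sizes.items(), key=lambda kv: kv[1]):
        layout.append((name, offset, size))
        offset += size
    return layout

def reader_plan(layout: List[Stream]) -> List[Request]:
    """Reader side: merge runs of adjacent small columns into one larger read."""
    plan: List[Request] = []
    batch: List[Stream] = []

    def flush() -> None:
        if batch:
            start = batch[0][1]
            length = sum(size for _, _, size in batch)
            plan.append((start, length, [name for name, _, _ in batch]))
            batch.clear()

    for stream in layout:
        name, offset, size = stream
        if size < SMALL_IO:
            if sum(s for _, _, s in batch) + size > MAX_BATCH:
                flush()                       # current batch is full
            batch.append(stream)
        else:
            flush()
            plan.append((offset, size, [name]))  # large columns keep their own read
    flush()
    return plan

sizes = {"a": 20_000, "b": 1_000_000, "c": 24_000, "d": 30_000, "e": 2_000_000}
plan = reader_plan(writer_layout(sizes))
```

Here five per-column reads become three, and none of the remaining requests is smaller than 64 KB. One trade-off of coalescing is that a merged read may fetch small columns the query does not need, a cost that is likely small next to the saved round trips.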

The figure compares AliORC before and after enabling Small I/O Elimination. Blue shows the I/O size distribution before enabling it, and orange shows the distribution after. Before, there were 26 I/Os smaller than 64 KB; after, there were none. The optimization is clearly effective.

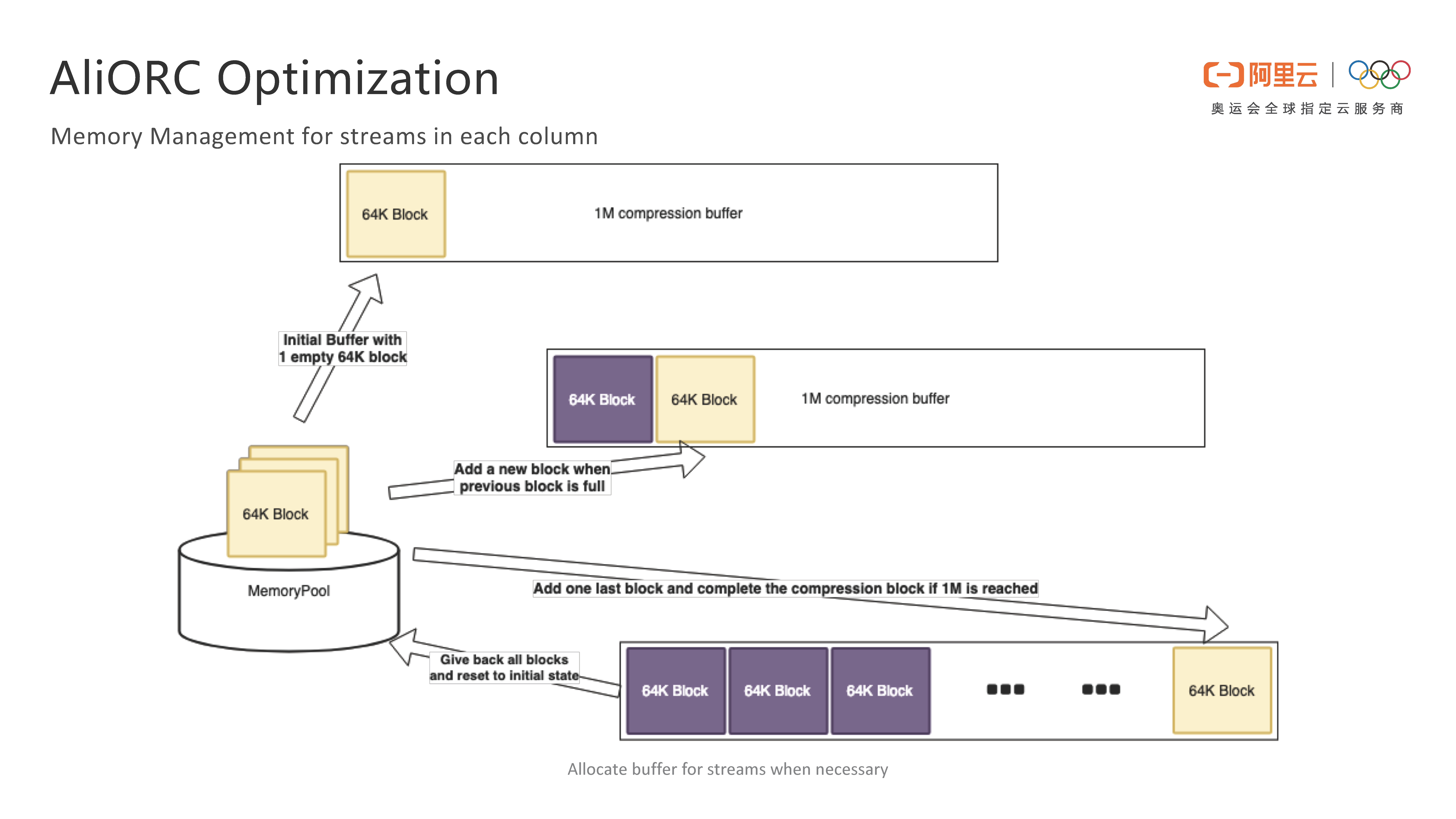

The third AliORC optimization is memory management. In the open-source ORC Writer, each column uses a large buffer, 1 MB by default, to hold its compressed data, because a larger buffer yields a higher compression ratio. However, as noted above, columns differ greatly in data volume, and some columns need far less than 1 MB, so much of that memory is wasted. A simple way to avoid the waste is to start each column with a small buffer and grow it on demand: when more data must be written, a larger buffer is obtained through a Resize operation similar to that of C++ std::vector. In the original implementation, however, each Resize is an O(N) operation that copies the existing data from the old buffer into the new one, which is unacceptable for performance. AliORC therefore introduced a new memory management structure that allocates memory in 64 KB blocks that need not be contiguous. This required many code changes, but they were worthwhile. In many scenarios, the original Resize approach consumed so much memory that tasks ran out of memory and could not complete, whereas the new approach greatly reduces peak memory usage.
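The block-based idea can be sketched in Python as follows. The class name and its methods are hypothetical; only the 64 KB block size comes from the text. Each write fills the current block and allocates a new fixed-size block when it is full, so data already written is never moved, whereas a contiguous buffer grown with a `std::vector`-style resize copies all existing bytes each time it reallocates.

```python
from typing import List

BLOCK_SIZE = 64 * 1024  # block size stated in the text

class BlockBuffer:
    """Append-only buffer made of fixed-size, non-contiguous blocks (hypothetical)."""

    def __init__(self, block_size: int = BLOCK_SIZE) -> None:
        self.block_size = block_size
        self.blocks: List[bytearray] = []
        self.fill = 0   # bytes used in the last block
        self.size = 0   # total bytes written

    def write(self, data: bytes) -> None:
        view = memoryview(data)
        while len(view) > 0:
            if not self.blocks or self.fill == self.block_size:
                # Allocate one more block; existing blocks are left untouched.
                self.blocks.append(bytearray(self.block_size))
                self.fill = 0
            n = min(self.block_size - self.fill, len(view))
            self.blocks[-1][self.fill:self.fill + n] = view[:n]  # copy only new bytes
            self.fill += n
            self.size += n
            view = view[n:]

    def allocated(self) -> int:
        """Total memory reserved, always a multiple of the block size."""
        return len(self.blocks) * self.block_size

    def getvalue(self) -> bytes:
        """Concatenate for inspection; a writer would stream blocks out instead."""
        if not self.blocks:
            return b""
        head = b"".join(bytes(blk) for blk in self.blocks[:-1])
        return head + bytes(self.blocks[-1][:self.fill])
```

A column that writes only 100 bytes allocates a single 64 KB block here rather than a full 1 MB buffer, and a large column grows block by block without any O(N) copy.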

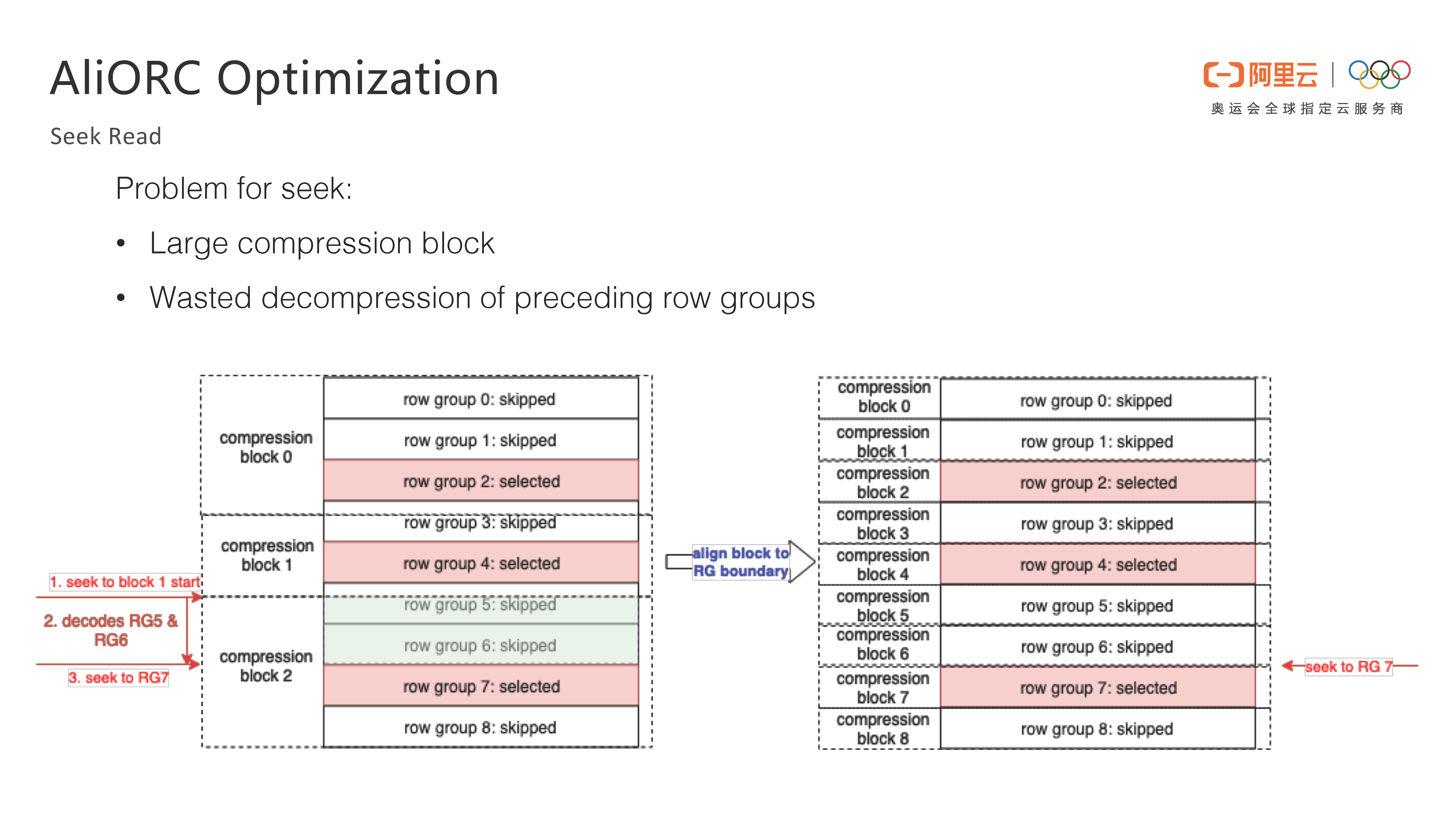

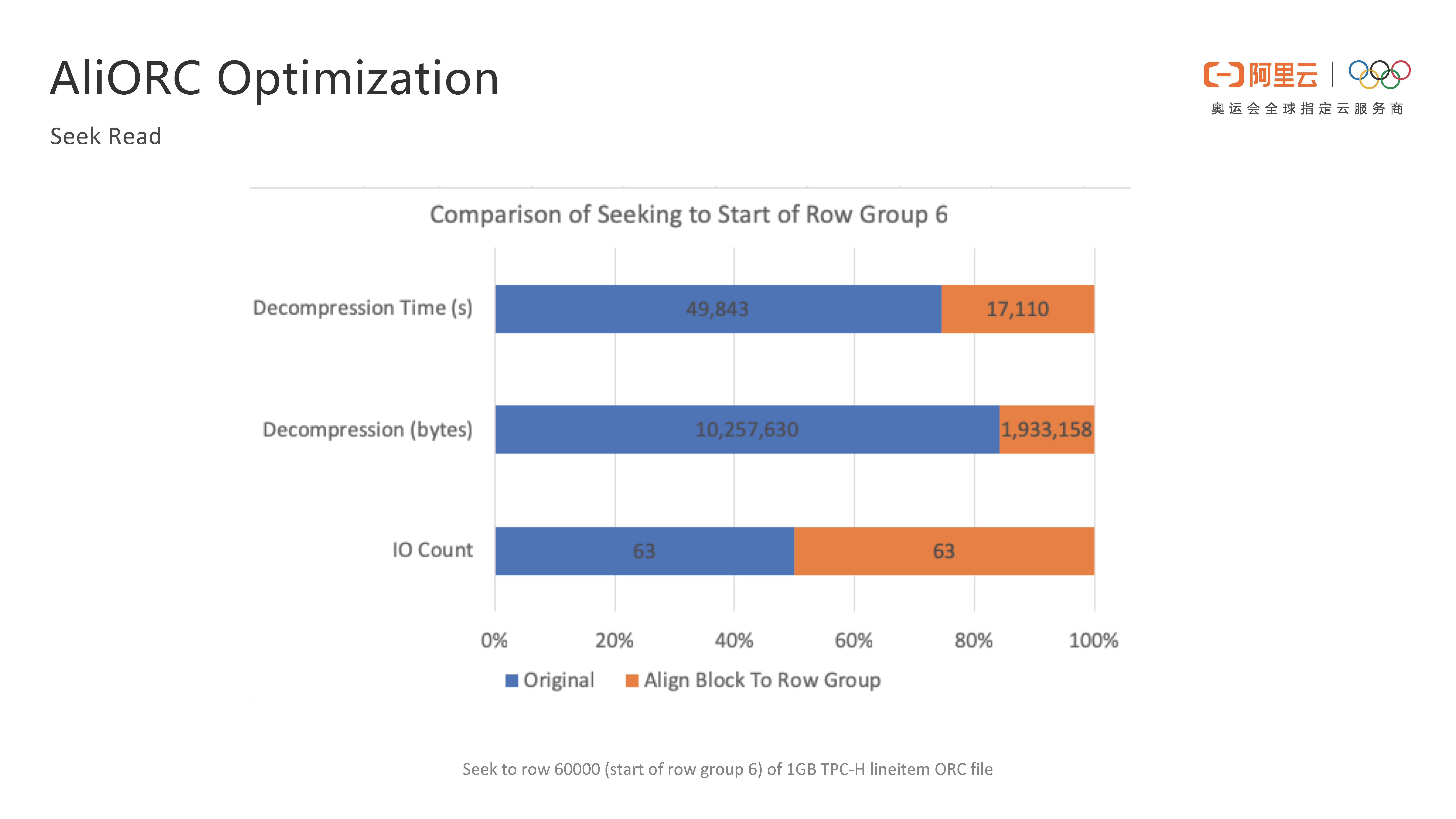

The fourth AliORC optimization is Seek Read. This part is somewhat complicated, so an example is used to summarize it. The original problem is that compression blocks are large, and a single compression block can contain the data of several row groups. In the figure, every 10,000 rows of data form a row group. A seek may land in the middle of the file, and the target can fall in the middle of a compression block; in the figure, for example, row group 7 lies inside block 2. A conventional seek jumps to the start of block 2, decompresses all the data that precedes row group 7, and only then reaches row group 7. The data in the green part of the figure is not needed, so decompressing it wastes computing resources. AliORC's approach is to align compression-block boundaries with row-group boundaries at write time, so seeking to any row group no longer requires unnecessary decompression.
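A toy Python model of the seek cost follows. It assumes ten row groups of 40 uncompressed bytes each and a compression block size of 100 bytes, chosen so that, as in the example above, row group 7 falls inside block 2. It measures how many bytes must be decompressed and discarded before reaching the target row group, with blocks cut at fixed sizes versus blocks aligned to row-group boundaries. All names and numbers are hypothetical.

```python
from itertools import accumulate
from typing import List

def row_group_starts(rg_sizes: List[int]) -> List[int]:
    """Uncompressed byte offset at which each row group begins."""
    return [0] + list(accumulate(rg_sizes))[:-1]

def unaligned_block_starts(rg_sizes: List[int], block_size: int) -> List[int]:
    """Compression blocks cut every block_size bytes, ignoring row-group boundaries."""
    return list(range(0, sum(rg_sizes), block_size))

def aligned_block_starts(rg_sizes: List[int], block_size: int) -> List[int]:
    """A new compression block starts at every row-group boundary."""
    starts: List[int] = []
    for rg_start, size in zip(row_group_starts(rg_sizes), rg_sizes):
        starts.extend(range(rg_start, rg_start + size, block_size))
    return starts

def wasted_bytes(block_starts: List[int], target: int) -> int:
    """Bytes decompressed and thrown away when seeking to `target`."""
    block_start = max(s for s in block_starts if s <= target)
    return target - block_start

rg_sizes = [40] * 10                      # ten row groups of 40 bytes (toy numbers)
target = row_group_starts(rg_sizes)[7]    # seek to row group 7, at byte 280
unaligned = unaligned_block_starts(rg_sizes, 100)
aligned = aligned_block_starts(rg_sizes, 100)
```

In the unaligned layout, seeking to row group 7 starts decompressing at block 2 (byte 200) and throws away 80 bytes; with aligned blocks nothing is wasted. The price of alignment is more, and sometimes smaller, compression blocks (10 instead of 4 here), which can slightly lower the compression ratio.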

The figure compares results before and after the Seek Read optimization. Blue shows the results before optimization and orange the results after. With the Seek Read optimization, both the decompression time and the volume of decompressed data drop to about one fifth.

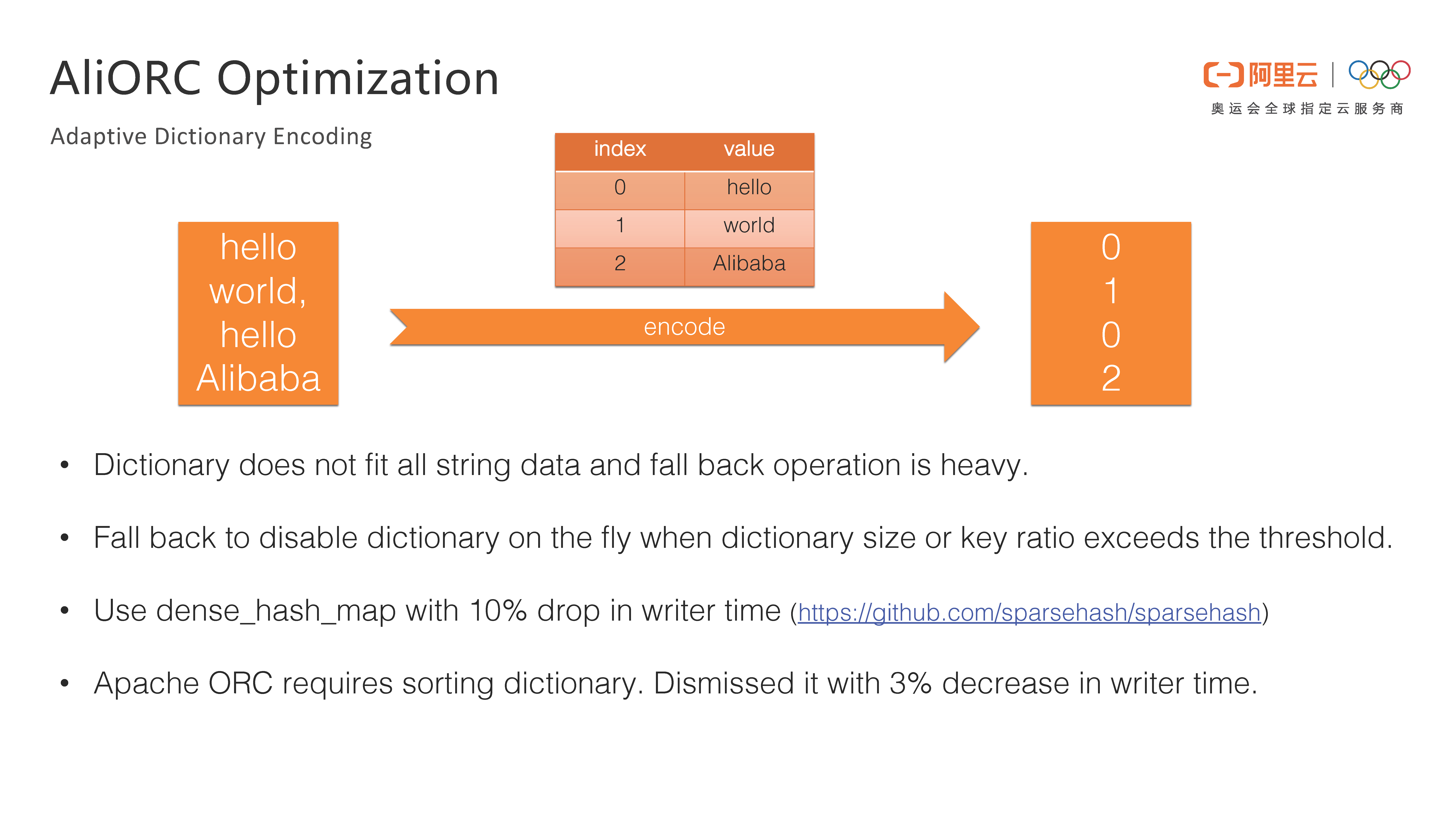

Dictionary encoding builds a dictionary of the distinct values of a highly repetitive field and then replaces each original value with its index in the dictionary. This turns string data into integer data, which can greatly reduce the data volume. However, ORC's dictionary encoding has several problems. First, not all strings are suitable for dictionary encoding. In open-source ORC, dictionary encoding is enabled for each column by default, and whether the column is actually suitable is only determined at the end of the file. If it is not, the writer falls back to non-dictionary encoding. The fallback amounts to rewriting the string data, so its overhead is very high. AliORC instead uses an adaptive algorithm to decide in advance whether a column should use dictionary encoding, which saves a lot of computing resources. Second, open-source ORC implements dictionary encoding with std::unordered_map from the C++ standard library, which is not well suited to MaxCompute data. Google's open-source dense_hash_map improves write performance by 10%, so AliORC uses it instead. Finally, the open-source ORC standard requires the dictionary to be sorted, which is not actually necessary; removing this restriction improves Writer performance by 3%.
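The adaptive decision and the unsorted dictionary can be sketched in Python as follows. The sampling rule (inspect a prefix of the column and use a dictionary only if the distinct-value ratio is at most 0.8) is an assumption for illustration; the text does not describe AliORC's actual algorithm. Python's built-in `dict`, a hash table, plays the role that `std::unordered_map` or `dense_hash_map` plays in the C++ writers.

```python
from typing import Dict, List, Tuple

SAMPLE_SIZE = 1000        # assumption: number of leading values to inspect
MAX_DISTINCT_RATIO = 0.8  # assumption: above this, a dictionary is not worth it

def choose_encoding(values: List[str]) -> str:
    """Decide up front, from a sample, whether to dictionary-encode a column."""
    sample = values[:SAMPLE_SIZE]
    if not sample:
        return "DIRECT"
    ratio = len(set(sample)) / len(sample)
    return "DICTIONARY" if ratio <= MAX_DISTINCT_RATIO else "DIRECT"

def dictionary_encode(values: List[str]) -> Tuple[List[str], List[int]]:
    """Replace each string with its index in an unsorted dictionary."""
    index: Dict[str, int] = {}
    dictionary: List[str] = []
    codes: List[int] = []
    for v in values:
        if v not in index:
            index[v] = len(dictionary)  # first-seen order; no sorting step
            dictionary.append(v)
        codes.append(index[v])
    return dictionary, codes
```

Because the decision is made before encoding starts, a high-cardinality column goes straight to direct encoding instead of being dictionary-encoded and later rewritten, and dictionary entries are kept in first-seen order rather than sorted.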

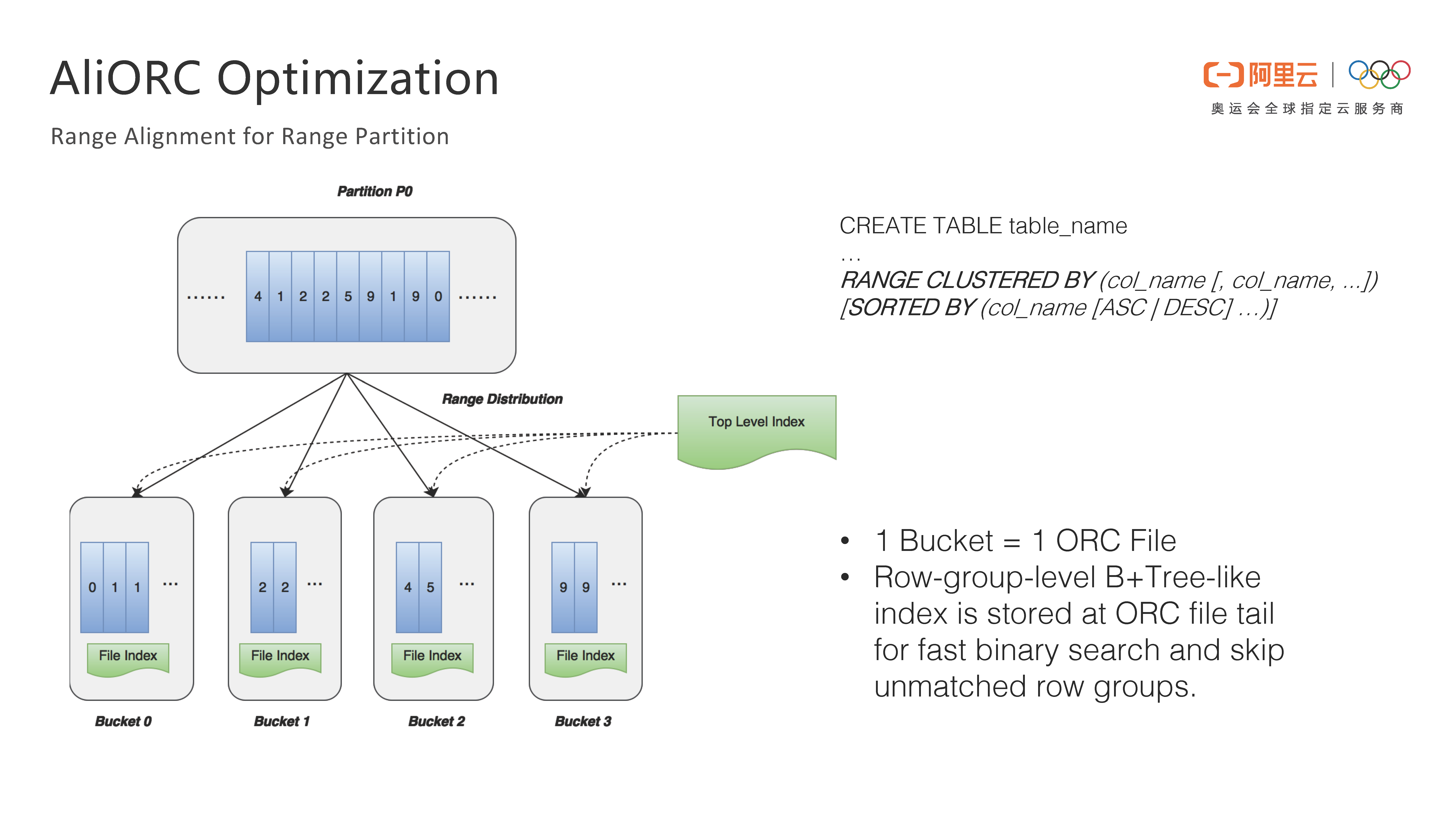

The next optimization concerns range partitioning. As shown in the DDL on the right of the figure, a table is declared RANGE CLUSTERED by certain columns, and its data is sorted on those columns. In this example, the data is stored in 4 buckets that hold the key ranges 0-1, 2-3, 4-8, and 9-infinity. In the implementation, each bucket is stored as an ORC file with a B+Tree-like index at the end of the file. When a query's filter involves the range key, the index can be used directly to exclude data that does not need to be read, greatly reducing the amount of data retrieved.
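The pruning idea can be sketched at bucket granularity in Python, using the four example ranges above (0-1, 2-3, 4-8, and 9-infinity) for integer keys. The per-file B+Tree-like index applies the same reasoning at a finer granularity; the helper names here are hypothetical.

```python
from bisect import bisect_right
from typing import List

# Lower bounds of the example buckets: [0, 2), [2, 4), [4, 9), [9, +inf).
BUCKET_LOWER_BOUNDS = [0, 2, 4, 9]

def bucket_of(key: int) -> int:
    """Index of the bucket holding `key`; keys below 0 are clamped to bucket 0."""
    return max(bisect_right(BUCKET_LOWER_BOUNDS, key) - 1, 0)

def buckets_for_range(lo: int, hi: int) -> List[int]:
    """Buckets that can hold keys in [lo, hi]; every other bucket is skipped unread."""
    return list(range(bucket_of(lo), bucket_of(hi) + 1))
```

A filter such as `key BETWEEN 3 AND 5` only touches buckets 1 and 2, and `key BETWEEN 10 AND 20` only bucket 3; the other files are never opened.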

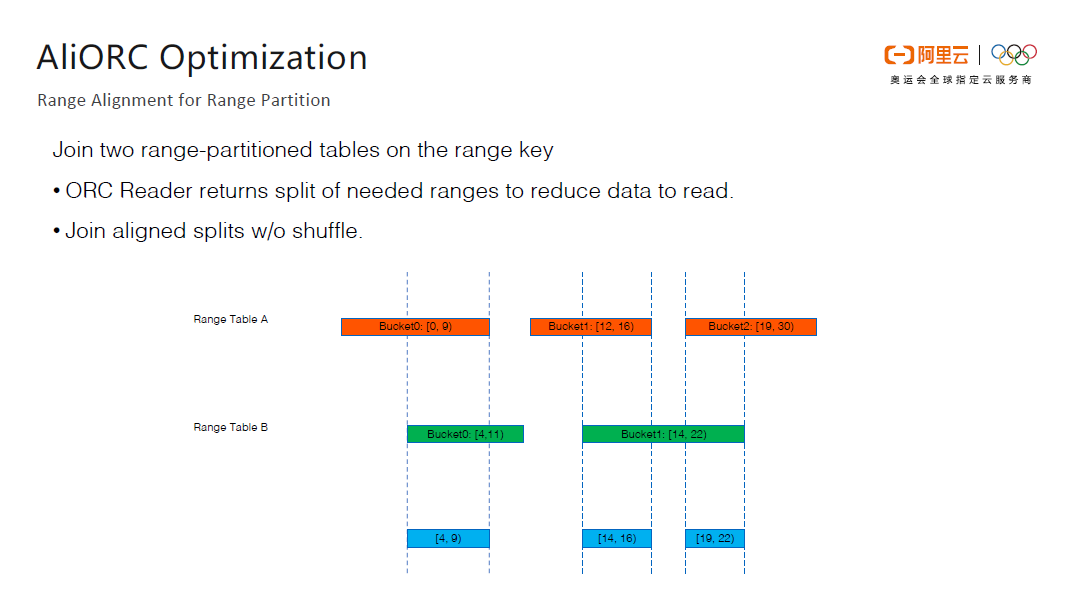

For range partitioning, AliORC provides a powerful feature called Range Alignment. Suppose we need to join two range-partitioned tables on their range partition key. As shown in the figure, table A has three ranges and table B has two. For ordinary tables, this join generates a large amount of shuffling, because rows with the same key must be shuffled to the same worker before they can be joined, and the join itself consumes a lot of memory and CPU. With range partitioning, the range information of the two tables can be aligned: the three buckets of table A and the two buckets of table B align into the three blue intervals in the figure. Data outside the blue intervals cannot produce join results, so workers do not need to read it at all.

After this optimization, each worker only needs to open the data in its blue interval to perform the join. The join can then be completed locally on each worker without a shuffle, greatly reducing data transfer and improving end-to-end efficiency.
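Range Alignment amounts to intersecting two sorted lists of ranges. The Python sketch below computes the intersection with a two-pointer merge; each output interval names the bucket of table A and the bucket of table B whose rows can join within it. The ranges are hypothetical and do not reproduce the figure.

```python
from typing import List, Tuple

Range = Tuple[int, int]              # half-open key range [low, high)
Aligned = Tuple[int, int, int, int]  # (low, high, bucket in A, bucket in B)

def align_ranges(a: List[Range], b: List[Range]) -> List[Aligned]:
    """Intersect two sorted, non-overlapping range lists (two-pointer merge)."""
    i = j = 0
    out: List[Aligned] = []
    while i < len(a) and j < len(b):
        low = max(a[i][0], b[j][0])
        high = min(a[i][1], b[j][1])
        if low < high:
            # Only keys in [low, high) from these two buckets can match.
            out.append((low, high, i, j))
        # Advance whichever range ends first; it cannot overlap anything later.
        if a[i][1] <= b[j][1]:
            i += 1
        else:
            j += 1
    return out

table_a = [(0, 10), (10, 20), (20, 30)]
table_b = [(12, 25), (25, 40)]
aligned = align_ranges(table_a, table_b)
```

Bucket 0 of table A (keys 0-9) and the part of table B above key 30 overlap nothing, so they are never read, and each of the three aligned intervals can be joined locally by one worker.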

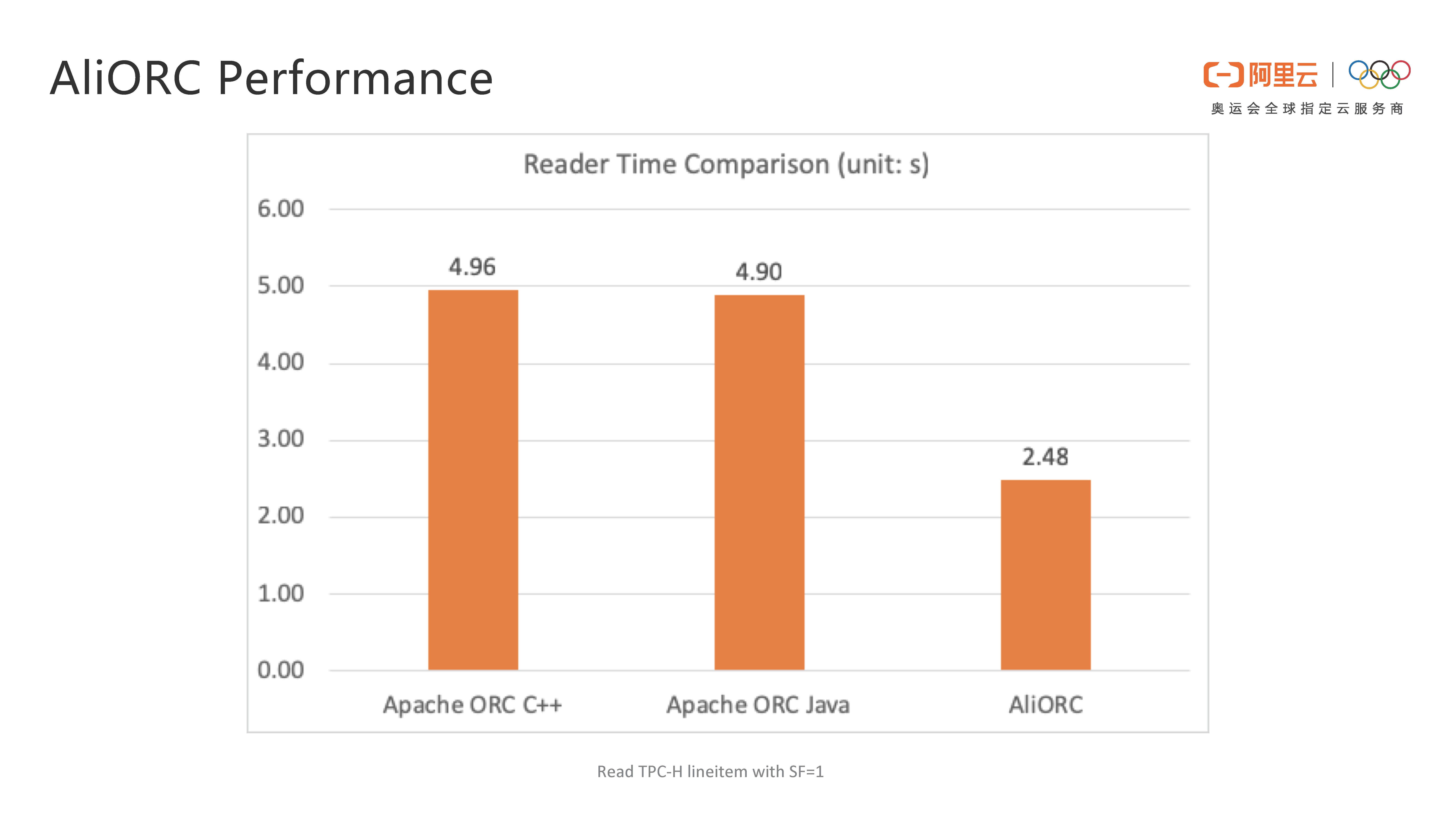

The figure compares the read time of AliORC, open-source ORC C++, and open-source ORC Java in an Alibaba internal test. AliORC reads about twice as fast as open-source ORC.

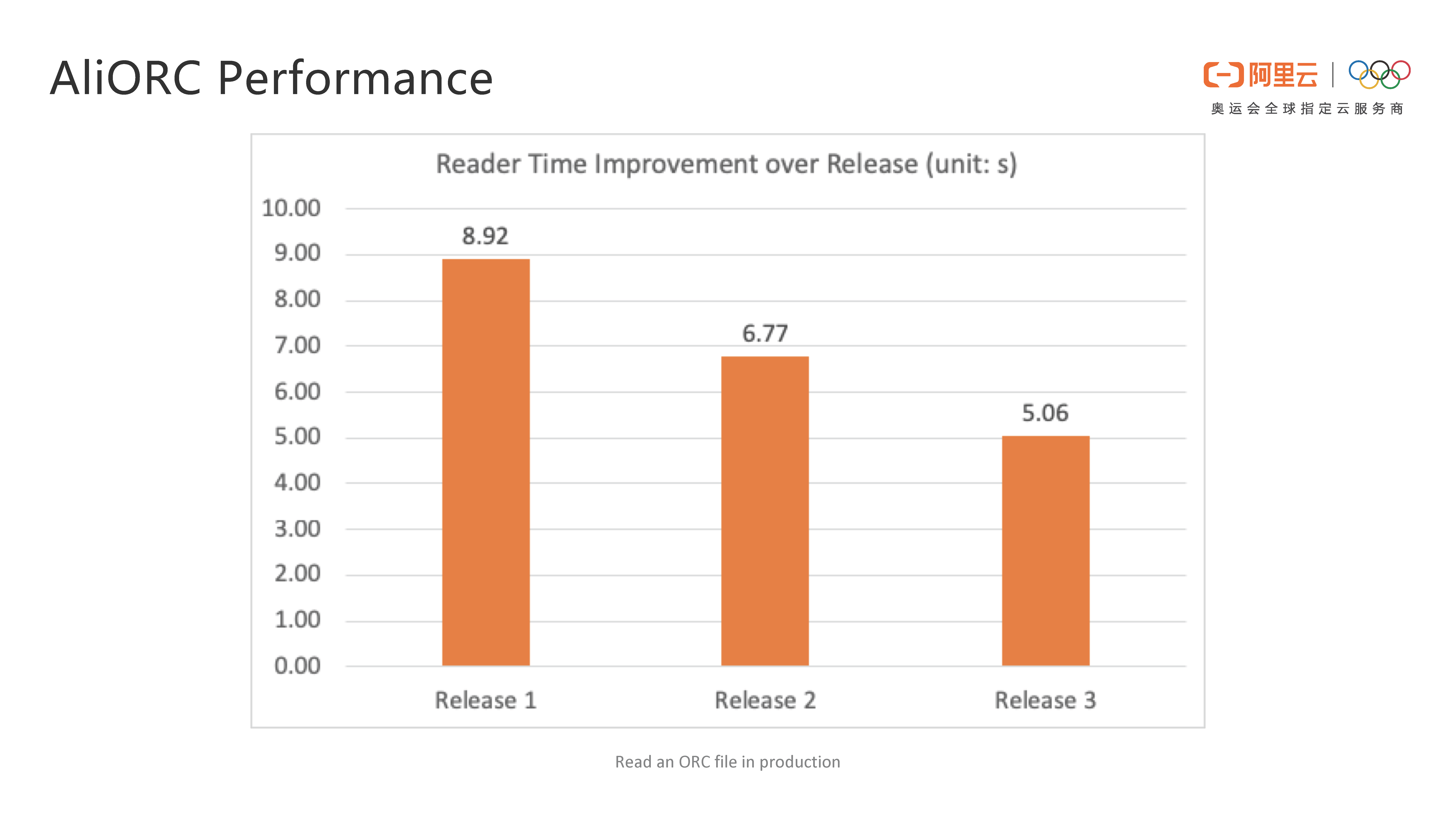

As of May 2019, AliORC had gone through three internal releases at Alibaba. As the figure shows, each release improved performance by nearly 30%, and optimization is ongoing. AliORC is currently used only internally and has not yet been released on the public cloud; it will be made available to everyone later so that they can share in its benefits.

First, MaxCompute works out of the box: users can start the MaxCompute service and run tasks on it without additional setup. Open-source software such as Hive or Spark, by contrast, may contain many bugs that are extremely difficult to troubleshoot, and the open-source community's bug-fix cycle can be very long. When MaxCompute users encounter problems, they get quick feedback and the bugs are fixed promptly.

Second, MaxCompute has a low cost of use and supports pay-as-you-go billing. Using Hive or Spark often requires building your own data center, which is cumbersome: you have to pay for the machines and also operate and maintain them yourself.

Third, running open-source Hive or Spark places high demands on technical staff. Companies need to recruit engineers who are very familiar with Hive and Spark to maintain them. In-house features often conflict with the open-source version, and the conflicts must be resolved each time. Every time the open-source software is upgraded, you need to download the new code and re-apply your own features to it, which is an extremely complicated process. With MaxCompute, Alibaba solves these problems for customers, so users do not need to worry about them.

MaxCompute also performs very well in terms of stability. It has withstood the traffic peaks of the Double 11 shopping festival year after year. In contrast, it is difficult to support such a large volume of tasks with the Hadoop ecosystem directly; doing so often requires extensive in-depth customization and optimization.

MaxCompute is the first platform to successfully pass the TPCx-BB benchmark with a 100 TB dataset, something which has not yet been achieved by Apache Spark.

In addition, open-source vendors often pay too little attention to the Chinese market. The main target customers of companies such as Cloudera and Databricks are in the United States, so they tend to build products around the needs of American customers, and their support for the Chinese market is not good enough. MaxCompute is developed according to the needs of Chinese customers and is better suited to the Chinese market.

The last point is that the file format AliORC can only be used in MaxCompute, which is also a unique advantage.

In summary, compared with open-source software, MaxCompute has the characteristics of more timely response to requirements, lower costs, lower technical thresholds, higher stability, better performance, and better suitability for the Chinese market.

Personally, I am optimistic about the big data field. Although a technology's prime usually lasts only about 10 years and big data technology has already been around for 10 years, I do not believe it will decline. Especially with the rise of artificial intelligence (AI), big data technology still has many problems to solve and is far from perfect. In addition, the Alibaba MaxCompute team is full of talent; the teams in Beijing, Hangzhou, and Seattle have strong technical expertise, and I can learn a lot from them. Finally, most open-source big data products are developed abroad, whereas MaxCompute is a fully self-developed platform in China. I am very proud to have the opportunity to contribute to domestic software by working on the MaxCompute team.

It was something of a coincidence that I ended up in big data technology. What I studied in school had nothing to do with big data, and my first job was in video coding. I only moved into a big data position later, at Uber. At that time, Uber's Hadoop group was still in its early stages: hardly anyone was actually using Hadoop, and everyone built their own services to run tasks. After I joined the Hadoop group at Uber, I learned Scala and Spark from scratch along with the team, progressing from using Spark to studying its source code, and gradually helped build a big data platform, which is how I entered the big data field. After I joined Alibaba, MaxCompute gave me the chance to take part in every stage of a big data product, including requirements, design, development, testing, and final optimization, which has been a valuable experience.

It started because the MaxCompute team needed ORC, but at that time open-source ORC C++ had only a Reader and no Writer, so we had to develop our own Writer for ORC C++. After finishing it, we wanted to draw on the power of open source to make the ORC C++ Writer better, so we contributed the code back to the open-source community, where it was well received. In recognition of this work, the ORC open-source community granted the MaxCompute team two Committer positions. After I became a Committer, my responsibilities grew: besides writing my own code, I had to grow along with the community, review code from other members, and discuss short-term and long-term issues. The ORC community recognized the work of the MaxCompute team and me, and therefore made me a PMC member. Personally, I think our ORC open-source work reflects Alibaba's attitude toward open source: contributions must be not only sufficient in quantity but also high in quality.

As long as you are interested in open source and willing to contribute continuously, no matter what kind of background and foundation you have, all your efforts will eventually be recognized.
