By Yinlin

In a previous speech, Men Deliang, a data technical expert at Youku working on data middleware, shared the benefits that the migration from Hadoop to Alibaba Cloud MaxCompute brought to the Youku business and platform.

This article is based on the video of his speech and the related presentation slides.

Hello, everybody! I am Men Deliang, currently working on middleware-related data work at Youku. It is my honor to see that Youku has switched to MaxCompute. I have been working for Youku for nearly five years. Right in my fifth year at Youku, Youku migrated from Hadoop to MaxCompute. The chart shows the development from May 2016 to May 2019. The upper section shows computing resources and the lower section shows storage resources. You can see that the number of users and the volume of table data are actually increasing exponentially. However, since Youku completely migrated from Hadoop to MaxCompute in May 2017, computing and storage consumption have been decreasing. The migration continues to bring significant benefits to Youku.

The following section shows the business characteristics of Youku.

The first characteristic is the diversity of the big data platform users. Youku employees responsible for data, technology development, BI, testing, and even marketing all use this big data platform.

The second characteristic is the business complexity. Being a video site, Youku has very complicated business scenarios. From the log classification perspective, in addition to page browsing data, video playback-related and performance-related data is also involved. From the perspective of the overall business model, the business scenarios vary greatly, including broadcasting, membership, advertising, and big screen applications.

The third characteristic is the huge data volume. Youku has logs totaling up to hundreds of millions of lines per day and needs to perform complicated computations.

The fourth is the high cost awareness. This is a relatively interesting and common feature, regardless of the enterprise size. Youku follows a strict budget and Alibaba Group also has a strict internal budget system. However, we often need to deal with some important campaigns such as Double 11 promo events, World Cup broadcasts, and Spring Festival events. Therefore, we also require the computing resources to be highly elastic.

Based on these business characteristics, I summarized several features of MaxCompute that can perfectly support our business:

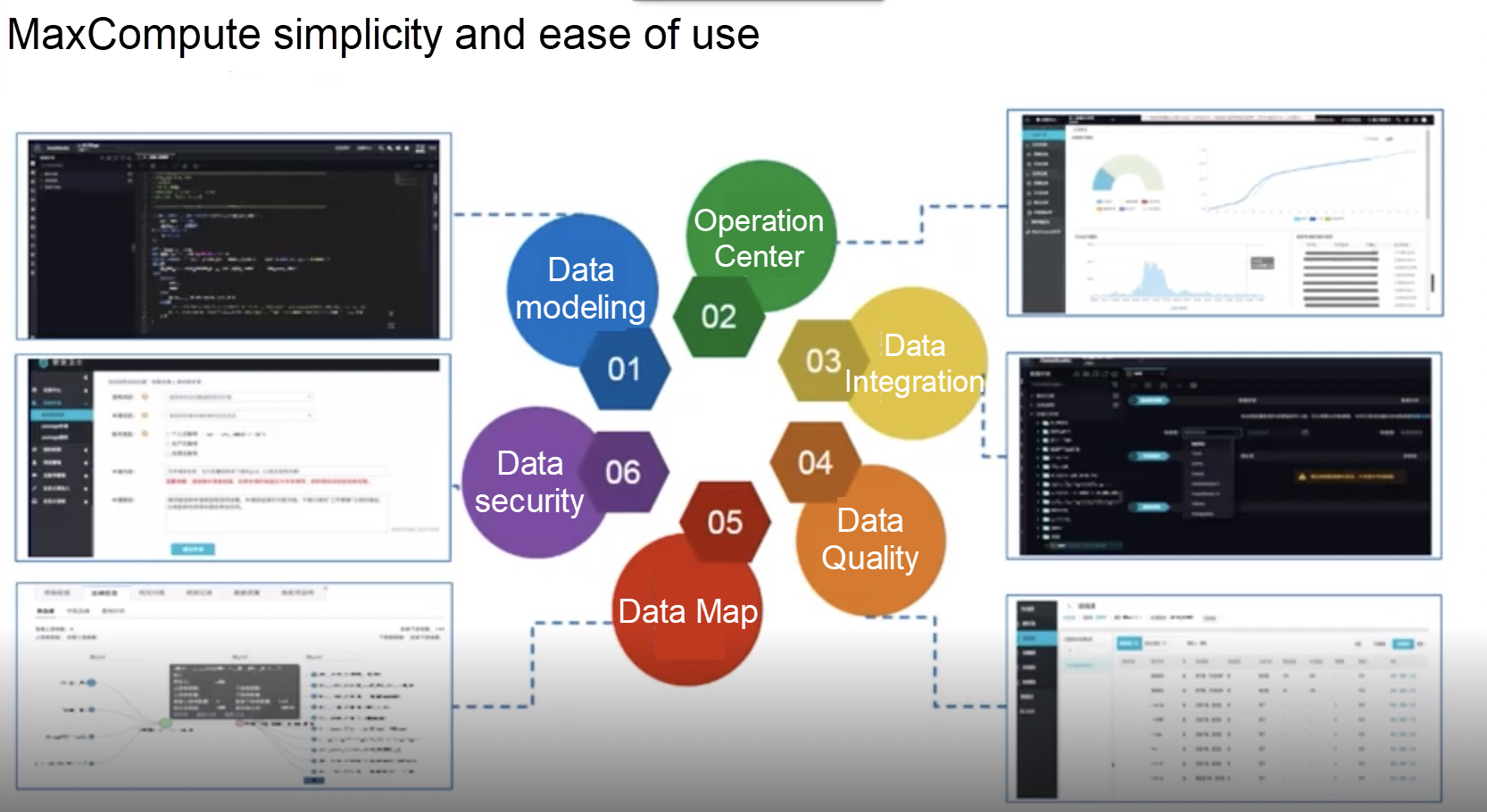

MaxCompute provides a complete pipeline that covers data modeling, data administration (data integration and quality control), data map, and data security. Since Youku migrated from Hadoop to MaxCompute, the biggest benefit has been that we no longer have to maintain clusters and run tasks at midnight as we did before. Before the migration, a request from one of my colleagues might have taken me several weeks. Now I can run the task immediately and obtain the result. Previously, to conduct BI analysis, analysts had to log on to the client and write scripts and scheduling tasks themselves. They often complained that the data they needed wasn't available yet. Data required by high-ranking executives might not be available until after noon. Nowadays, basically all the important data is produced by 7 a.m. Some basic business requirements can be implemented by analysts themselves, without having to send all the requests to the data department.

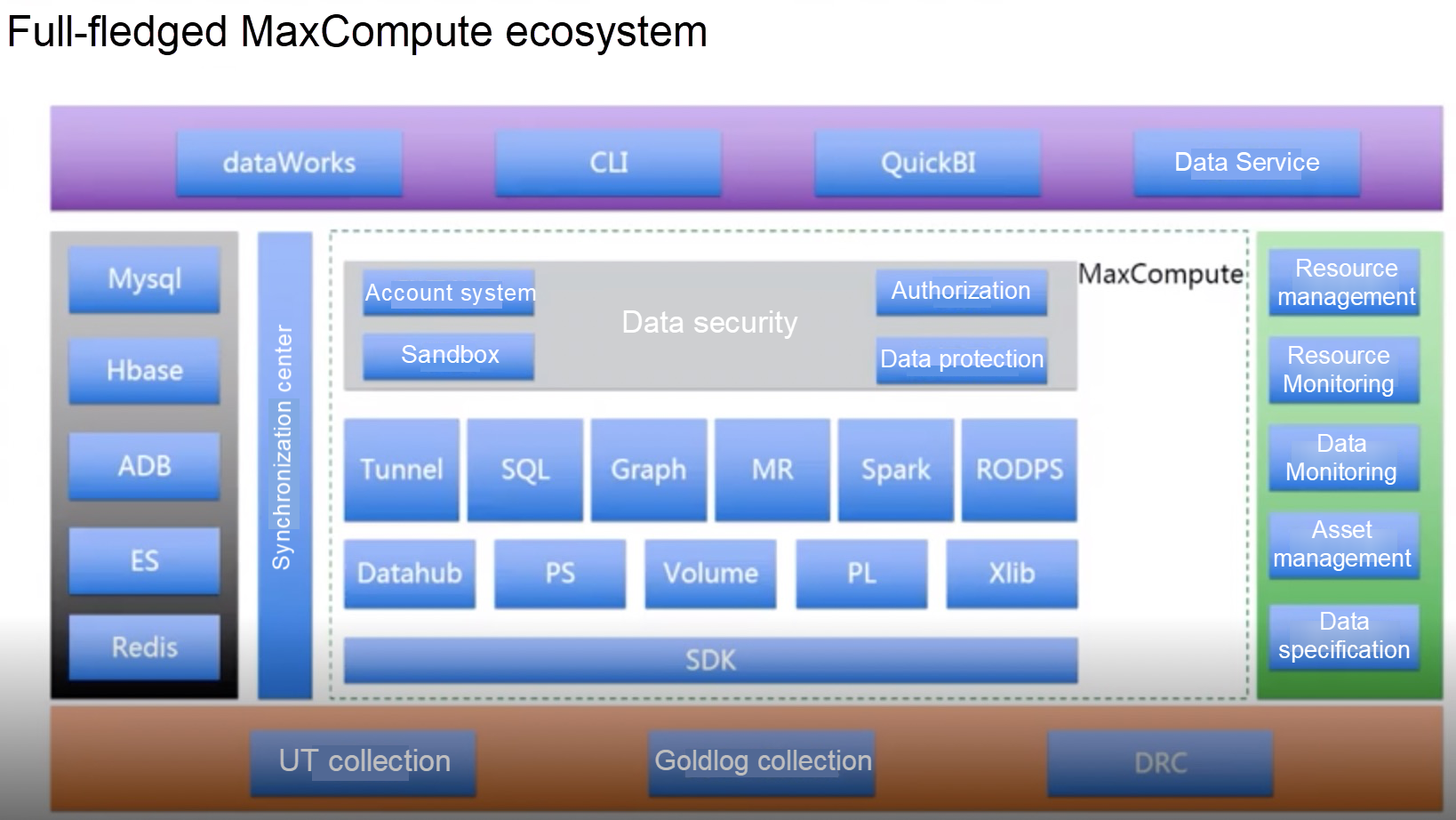

Before 2017, Youku was completely based on Hadoop. After the migration to MaxCompute, Youku is based on the serverless big data service ecosystem provided by Alibaba Cloud. Counterparts of the open-source components can also be found in the MaxCompute ecosystem, and they are better and simpler. As shown in the architecture diagram, MaxCompute is in the middle. MySQL, HBase, ES, and Redis are on the left side and implement two-way synchronization through the synchronization center. Resource management, resource monitoring, data monitoring, data asset management, and data specifications are on the right side. Our underlying data input relies on some collection tools provided by Alibaba Group. In the upper layer, DataWorks, including some command line tools, is provided for developers, and QuickBI and Data Services are provided for BI developers.

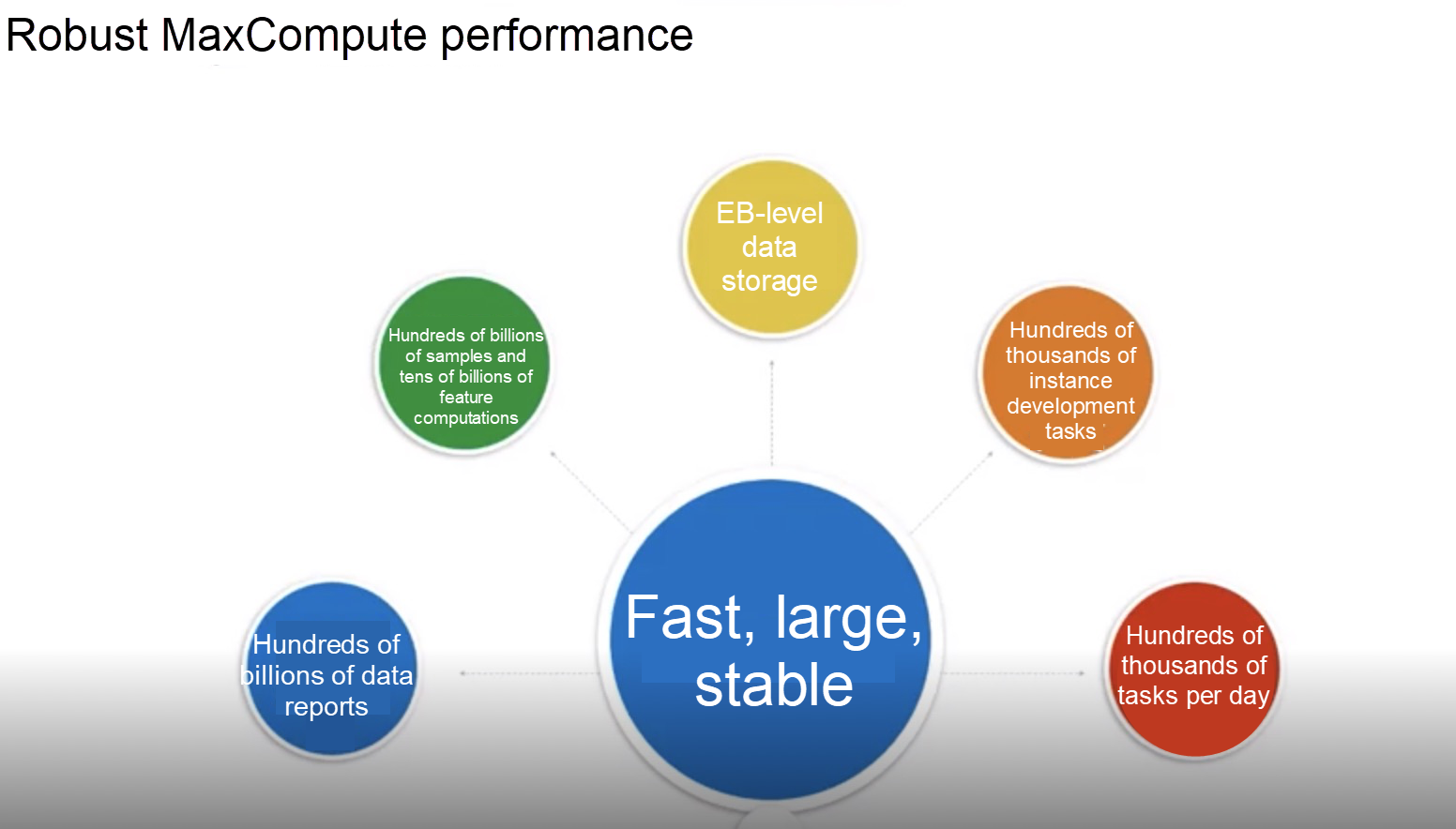

MaxCompute supports EB-level data storage at Youku, analysis of hundreds of billions of data samples, hundreds of millions of data reports, and hundreds of thousands of concurrent task instances. This level of performance was completely unimaginable when we used Hadoop.

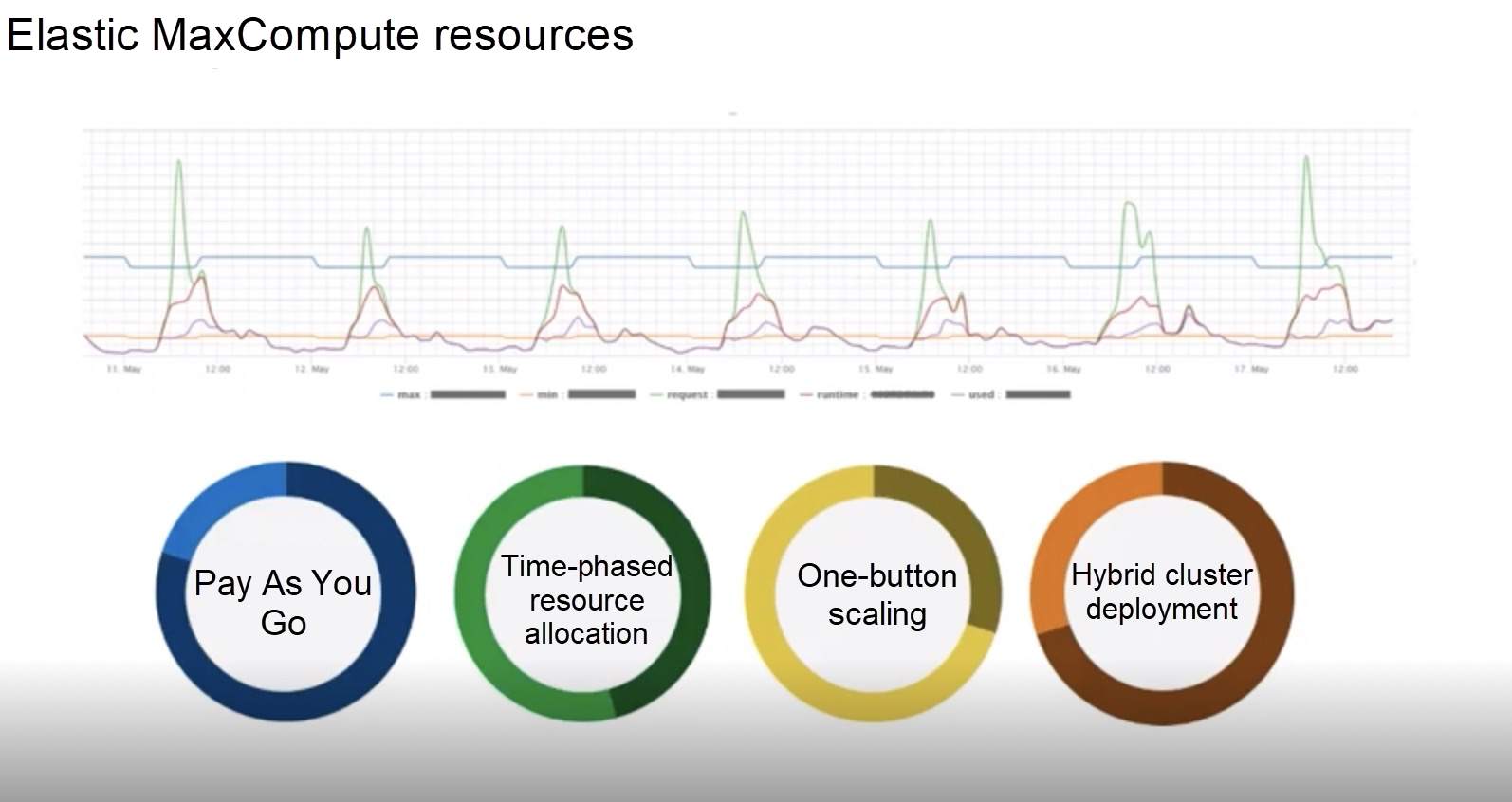

Before we started the migration in 2016, we already had more than 1,000 machines in our Hadoop cluster, which was considered a large-scale cluster at that time. However, we had many annoying problems, including the NameNode memory problem, the inability to scale the data center, and some O&M and management problems. We had to constantly request resources from our O&M colleagues, who often replied that we had already used lots of resources and money. The problem that we faced was how to use computing resources on demand. A large number of tasks run at night and require lots of resources. In the afternoon, the entire cluster sits idle, and the resources it holds are wasted. MaxCompute can perfectly solve this problem.

First, MaxCompute offers pay-as-you-go billing. You are billed by the actual amount of resources consumed instead of the total number of machines. Compared with maintaining your cluster yourself, this may reduce the cost by 50%.
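
To make the billing difference concrete, here is a minimal Python sketch. The daily usage profile and the unit price are made-up numbers chosen for illustration, not Youku's actual figures, and the two function names are hypothetical.

```python
from typing import List


def fixed_cluster_cost(machines: int, price_per_machine_hour: float, hours: int = 24) -> float:
    """Cost of keeping every machine running for the whole period."""
    return machines * price_per_machine_hour * hours


def pay_as_you_go_cost(machines_busy_per_hour: List[int], price_per_machine_hour: float) -> float:
    """Cost when only the machine-hours actually consumed are billed."""
    return sum(machines_busy_per_hour) * price_per_machine_hour


# Assumed profile: 1,000 machines busy during 8 night hours,
# 250 machines busy during the remaining 16 hours.
usage = [1000] * 8 + [250] * 16

fixed = fixed_cluster_cost(1000, 1.0)
payg = pay_as_you_go_cost(usage, 1.0)
savings = 1 - payg / fixed

print(f"fixed={fixed:.0f} pay-as-you-go={payg:.0f} savings={savings:.0%}")
```

With this assumed profile the saving happens to be 50%. The real figure depends entirely on how idle the cluster is outside the peak.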

Second, MaxCompute computing resources can actually be scheduled based on different periods of time. For example, you can assign more resources in the production queue at night to produce reports as soon as possible. In the daytime, you can assign more computing resources to development tasks so that analysts and developers can run some temporary data tasks faster.
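
The idea of period-based scheduling can be sketched as follows. This is not MaxCompute configuration. The night window, the queue names, and the shares are all assumptions used only to show the logic.

```python
# Assumed night window: from 20:00 until 08:00 the production queue is favored.
NIGHT_START_HOUR = 20
NIGHT_END_HOUR = 8


def queue_shares(hour: int) -> dict:
    """Return the fraction of compute assigned to each queue for an hour (0-23)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    is_night = hour >= NIGHT_START_HOUR or hour < NIGHT_END_HOUR
    if is_night:
        # Night: reports must be produced as early as possible.
        return {"production": 0.8, "development": 0.2}
    # Daytime: analysts and developers run temporary tasks.
    return {"production": 0.3, "development": 0.7}


print(queue_shares(2))
print(queue_shares(14))
```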

Third, MaxCompute provides fast scaling. Assume that you suddenly have a high usage demand and find that your data task stagnates due to insufficient computing resources and all the queues are blocked. At this point, you can inform your O&M colleague, who can implement one-click scaling with a command in seconds. This ensures that required resources are immediately available.

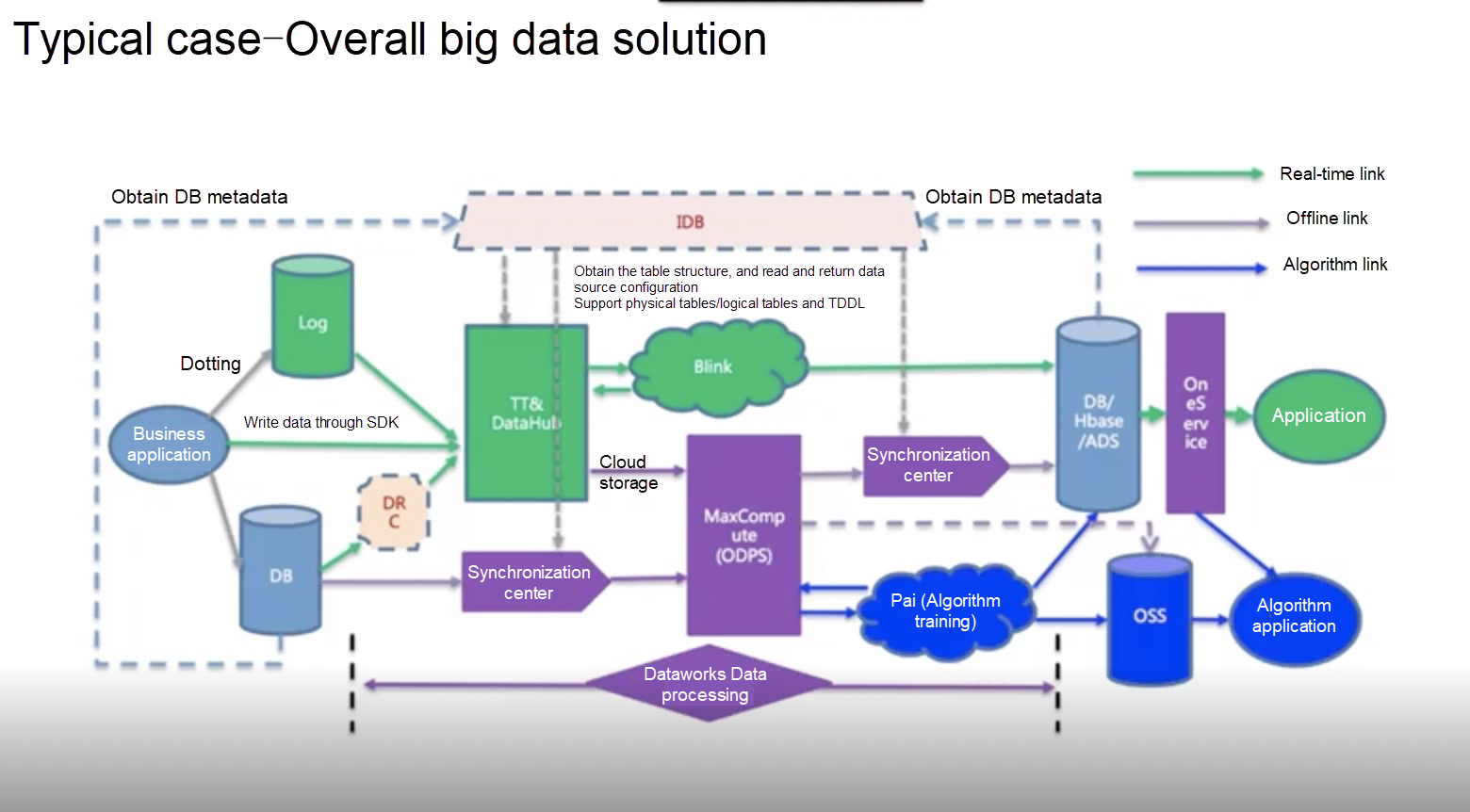

In the preceding section, I shared why Youku uses MaxCompute. Next, I will show some typical applications and solutions in Youku business scenarios. The preceding figure is a typical technical architecture diagram at Youku and possibly at many other companies including Alibaba Group. As shown in the diagram, MaxCompute is in the middle. The left section of the diagram represents input and the right section represents output. The green lines make up a real-time link. Data from all data sources, whether DB or local logs on the server, will be stored through TT and DataHub to MaxCompute for analysis. Flink, a popular real-time computing platform, actually acts as a real-time link.

In terms of the DB synchronization, in addition to using the real-time link, DB also synchronizes data to MaxCompute per day/hour. Data computing results can also be synchronized to databases such as HBase and MySQL. A universal service layer is established to provide services for applications. In the following diagram, the PAI machine learning platform performs algorithm training and uploads the training results through OSS to an application of an algorithm.

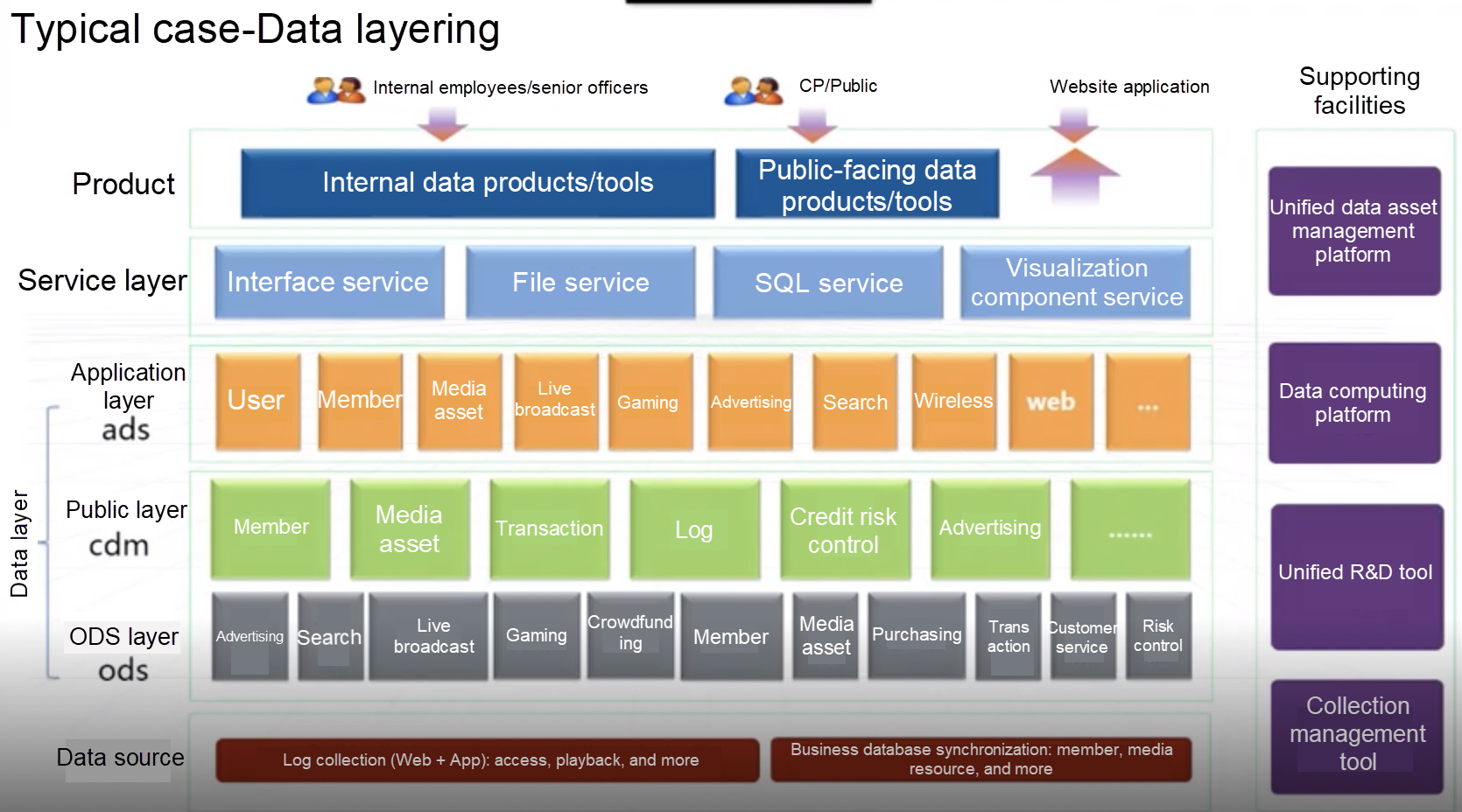

This is probably a popular data warehouse layering diagram in the industry. We work on data middleware, and all the data is refined layer by layer, from the ODS layer through the CDM layer to the ADS layer. Then we provide diversified services through interface services, file services, and SQL services. On top of these, we provide some internal data products for executives and ordinary employees. We may also provide some data externally, including Youku video views and popularity.

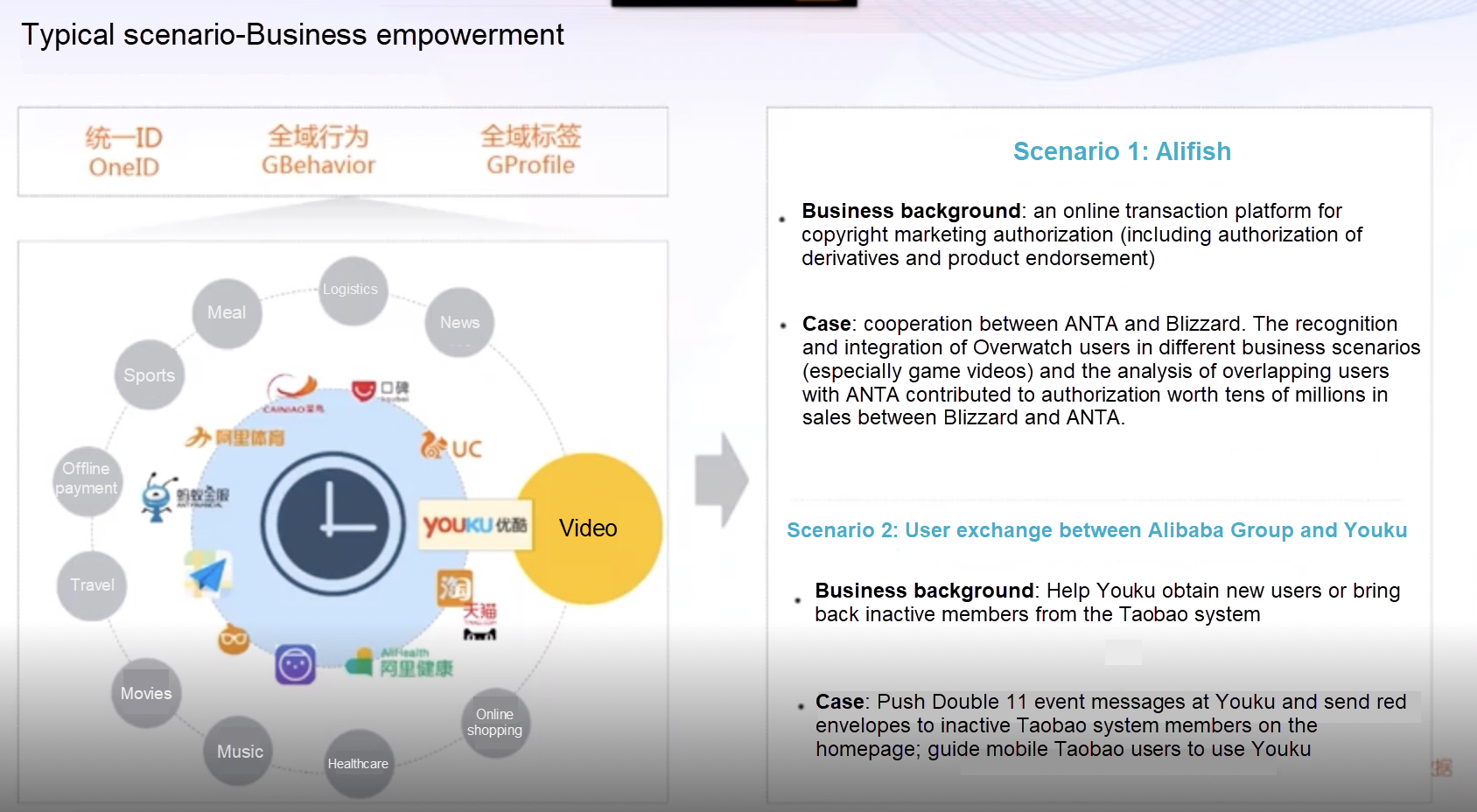

The preceding figure shows two typical cases that we have encountered after our migration from Hadoop to MaxCompute. In the first case, by using data middleware, we connect users in two different scenarios to empower both business scenarios and improve the business value.

In the second case, which may be considered one of our internal application scenarios, Youku exchanges traffic with other internal BUs. We use universal tags to enlarge samples and exchange traffic between Youku and other BUs to achieve a win-win situation.

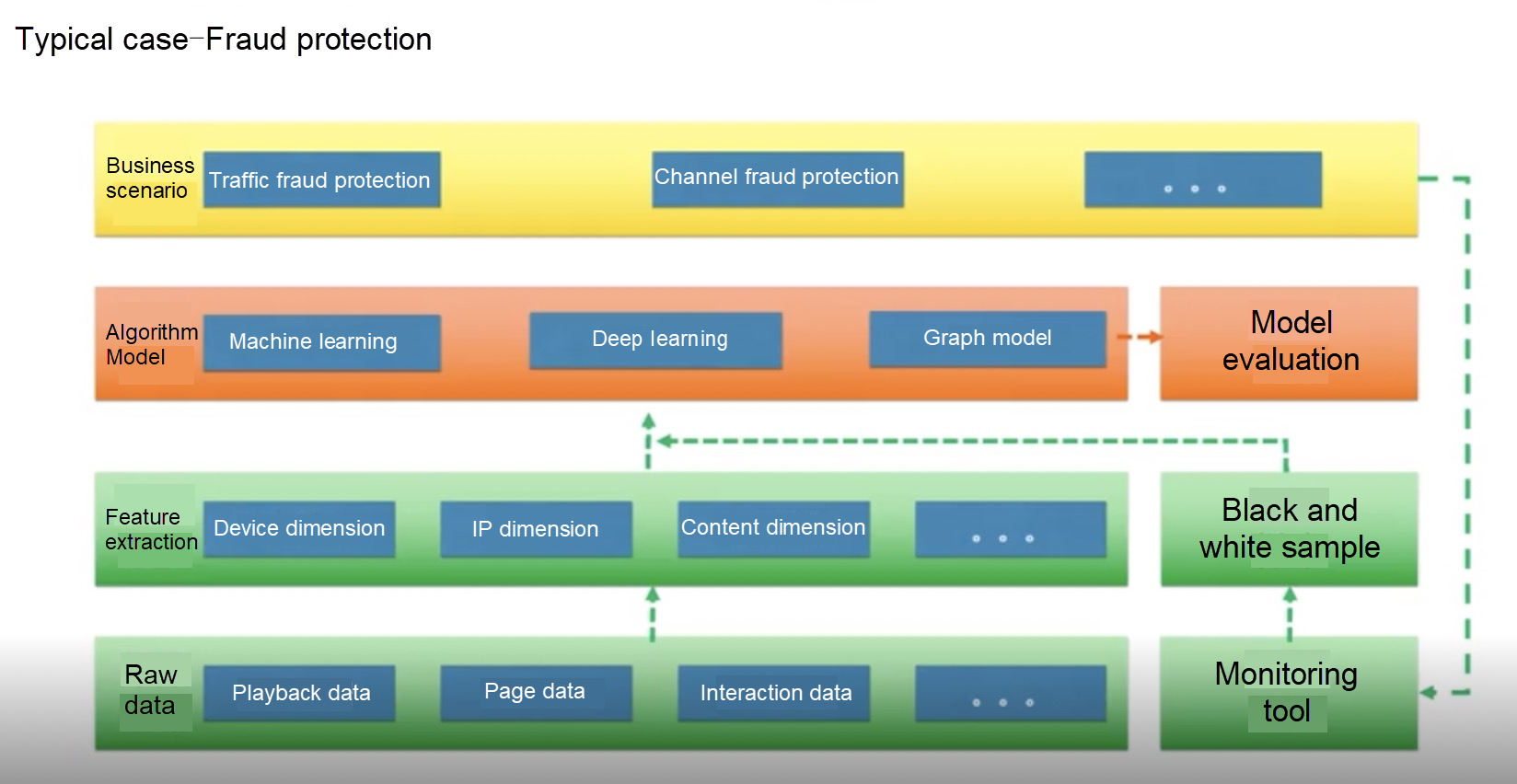

The preceding figure describes the fraud protection topic, which is relevant for most Internet companies. This is a fraud protection architecture based on MaxCompute. The features of the original data are extracted first, and then algorithm models for machine learning and deep learning (including graph models) are used to support traffic fraud protection and channel fraud protection. Fraud-related information obtained from the fraud protection monitoring tools in business scenarios is put into a black-white sample set, which is used together with the features to iterate and optimize the algorithm models. The algorithm models are also evaluated to constantly improve the fraud protection system.

The last point is also related to the cost. In everyday use, some beginners or new hires may use some resources incorrectly or carelessly. For example, some trainees or non-technical members like analysts may perform a highly resource-consuming SQL task, which forces other tasks to wait in queue. At this point, we need to govern all the resources.

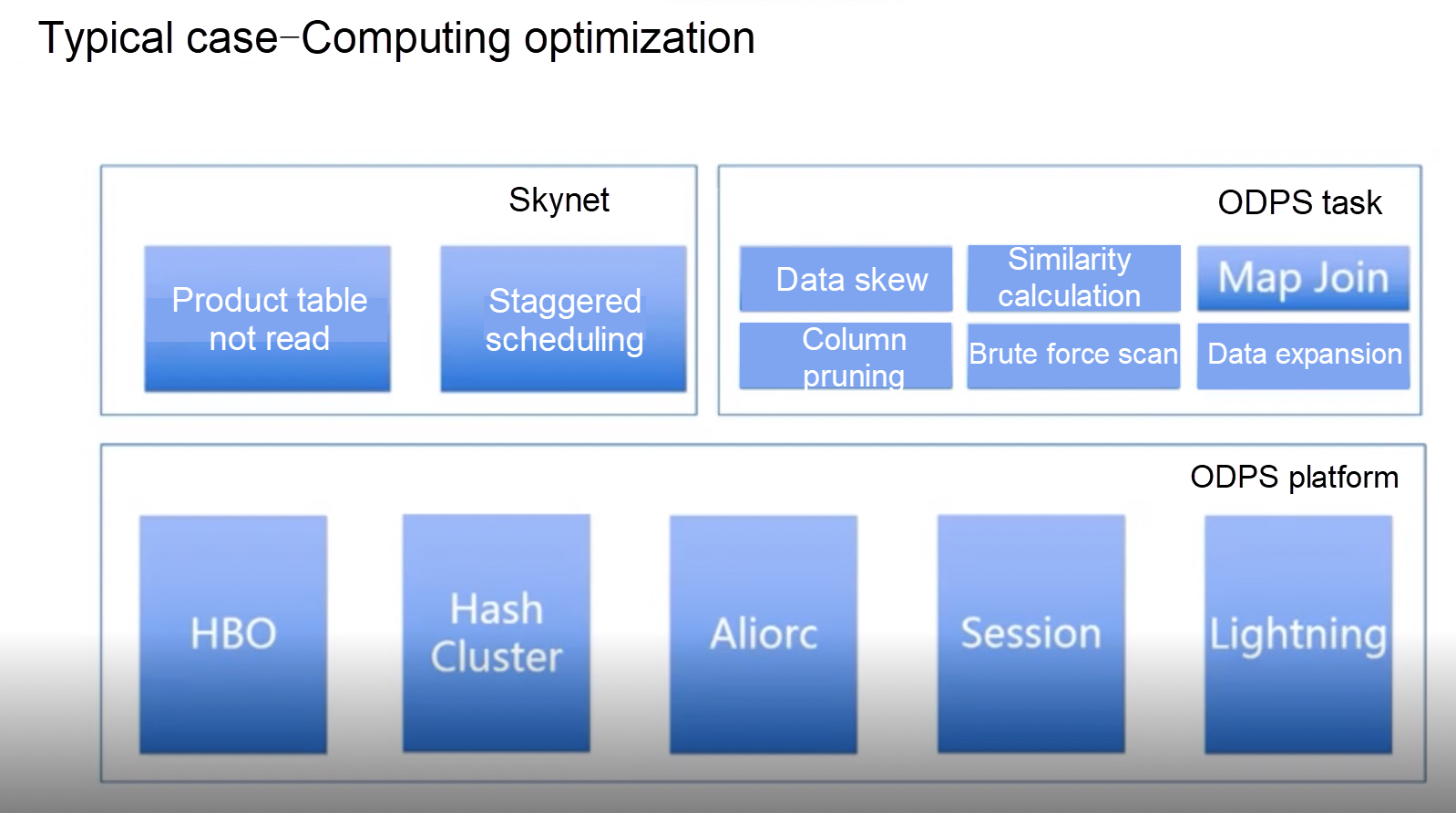

From the node perspective, big data governance based on big data itself allows us to determine which tables haven't been read for a certain number of days since they were created. For data with a small access span, we can also deprecate it or govern it properly. For business scenarios of less importance or with a looser time requirement, for example, algorithm training, we can schedule the work outside the traffic peak to keep resource utilization high throughout the day. From the MaxCompute task perspective, we can calculate which tasks are experiencing data skew, which data may have similar operations, which tasks require MapJoin, and which tasks require cropping to save I/O. We can also know which tasks perform brute force scanning (for example, scanning a month or a year of data) and which data is likely to surge, for example, due to complicated computations like CUBE or iterations of algorithm models. By using the signs indicated by these computation results, we can deduce user characteristics to improve the quality of the data and reduce the usage of all the computing resources.
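
Two of the checks mentioned above, tables that have not been read for a certain number of days and tasks with data skew, can be sketched in a few lines of Python. The metadata layout, the table names, and the thresholds are assumptions for illustration. A real governance job would read this information from the platform's own metadata and task logs.

```python
from datetime import date
from statistics import median
from typing import Dict, List, Optional, Tuple


def unread_tables(
    tables: Dict[str, Tuple[date, Optional[date]]],
    today: date,
    max_idle_days: int,
) -> List[str]:
    """tables maps name -> (created_on, last_read_on or None if never read)."""
    stale = []
    for name, (created_on, last_read_on) in tables.items():
        # A table that was never read is measured from its creation date.
        reference = last_read_on or created_on
        if (today - reference).days > max_idle_days:
            stale.append(name)
    return sorted(stale)


def is_skewed(rows_per_instance: List[int], ratio: float = 5.0) -> bool:
    """Flag a task when its busiest instance reads far more rows than a typical one."""
    if not rows_per_instance:
        return False
    typical = median(rows_per_instance)
    if typical == 0:
        return max(rows_per_instance) > 0
    return max(rows_per_instance) > ratio * typical


# Hypothetical metadata.
catalog = {
    "ods_play_log": (date(2018, 1, 1), date(2019, 4, 30)),
    "tmp_export": (date(2018, 6, 1), None),
    "ads_old_report": (date(2017, 1, 1), date(2018, 10, 1)),
}
candidates = unread_tables(catalog, today=date(2019, 5, 1), max_idle_days=90)
print(candidates)
print(is_skewed([100, 110, 95, 5000]))
```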

From the computing platform perspective, we are constantly releasing advanced methods, including history-based optimization (HBO), Hash Cluster, and Aliorc.

HBO optimizes a task based on its historical runs, which saves users from having to tune parameters. A user may set a large parameter value to make their own task run fast, which may waste a lot of the overall cluster resources. With this feature, users do not have to perform parameter tuning themselves. Instead, the cluster automatically does that work for them. Users only need to write their own business logic.
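
The general idea of history-based tuning can be illustrated with a small sketch. This is not how MaxCompute implements HBO. The function name, the seven-run window, the 20% headroom, and the default value are all assumptions.

```python
from typing import List


def suggest_memory_mb(
    peak_history_mb: List[int],
    headroom_pct: int = 20,
    default_mb: int = 4096,
    window: int = 7,
) -> int:
    """Suggest a memory setting from the peaks observed in recent runs."""
    if not peak_history_mb:
        # No history yet: fall back to a default instead of guessing.
        return default_mb
    recent_peak = max(peak_history_mb[-window:])
    # Integer ceiling of recent_peak * (1 + headroom_pct / 100).
    return (recent_peak * (100 + headroom_pct) + 99) // 100


# A user might request 8192 MB "to be safe", while history suggests far less.
print(suggest_memory_mb([800, 1000, 900]))
```

The point of the sketch is only that the setting comes from observed runs rather than from a value the user picked by hand.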

Hash Cluster, released about two years ago, is a great optimization. With Hadoop, a join of two large tables often could not be computed at all. When joining a large table and a small table, we can apply some distribution-based optimization. However, the join of two large tables involves sorting. Hash Cluster pre-sorts the data and omits many intermediate computing steps to improve efficiency.
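
Why pre-sorted, hash-bucketed data makes a large join cheaper can be shown with a toy example. The sketch below is a plain in-memory illustration of the general technique (bucket by key hash, sort inside each bucket once, then merge-join matching buckets). It is not MaxCompute's implementation, and the tables and function names are made up.

```python
from typing import List, Tuple

Row = Tuple[int, str]


def cluster(rows: List[Row], num_buckets: int) -> List[List[Row]]:
    """Bucket rows by key hash and sort each bucket by key (done once, at write time)."""
    buckets: List[List[Row]] = [[] for _ in range(num_buckets)]
    for key, value in rows:
        buckets[hash(key) % num_buckets].append((key, value))
    for bucket in buckets:
        bucket.sort(key=lambda row: row[0])
    return buckets


def merge_join(left: List[Row], right: List[Row]) -> List[Tuple[int, str, str]]:
    """Join two key-sorted lists in one pass, without sorting or shuffling again."""
    out = []
    i = j = 0
    while i < len(left) and j < len(right):
        left_key, right_key = left[i][0], right[j][0]
        if left_key < right_key:
            i += 1
        elif left_key > right_key:
            j += 1
        else:
            # Find the run of equal keys on the right side.
            j_end = j
            while j_end < len(right) and right[j_end][0] == left_key:
                j_end += 1
            # Pair every left row in the run with every right row in the run.
            while i < len(left) and left[i][0] == left_key:
                for _, right_value in right[j:j_end]:
                    out.append((left_key, left[i][1], right_value))
                i += 1
            j = j_end
    return out


def clustered_join(left_buckets, right_buckets):
    """Rows with the same key share a bucket index, so buckets join independently."""
    out = []
    for left_bucket, right_bucket in zip(left_buckets, right_buckets):
        out.extend(merge_join(left_bucket, right_bucket))
    return out


plays = [(3, "p1"), (1, "p2"), (3, "p3"), (2, "p4")]   # (user_id, play_id)
users = [(1, "alice"), (3, "carol"), (4, "dave")]      # (user_id, name)
joined = sorted(clustered_join(cluster(plays, 2), cluster(users, 2)))
print(joined)
```

Both tables must use the same bucket count and the same hash function. Otherwise matching keys would not land in the same bucket index.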

Aliorc can consistently improve the computing efficiency by 20% in some fixed scenarios.

Another optimization tool is Session. Session stores small volumes of data directly on SSD or in cache. It is very friendly in scenarios where a single node has 100 leaf nodes under it, because results can be obtained in seconds. Youku also uses Lightning for computational acceleration. Lightning is an optimization of the computing architecture and is based on an MPP architecture.

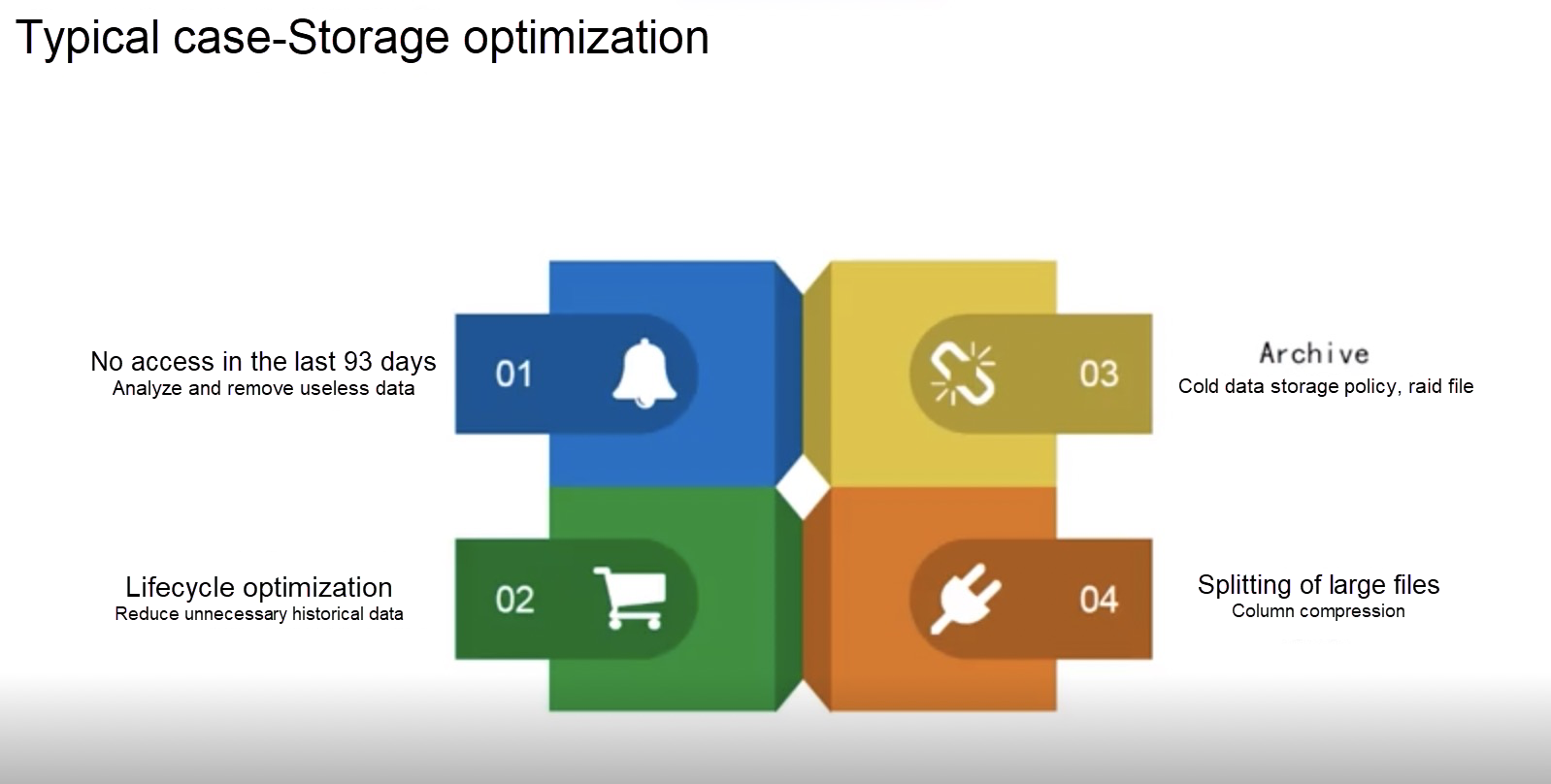

Finally, let's take a look at the storage optimization. Critical raw data or data that needs to be audited must be kept forever and cannot be deleted. This causes our data storage to keep increasing. Computing, by contrast, reaches a balanced level at a specific point in time, and the amount of computing resources currently used is not likely to surge later. For example, older business logic is replaced with newer business logic, so the fluctuation stays relatively stable. In storage, however, some historical data can never be deleted and may increase exponentially. Therefore, we also need to pay continual attention to storage and implement big data governance based on big data to see which tables have a small access span, optimize the lifecycle accordingly, and control the speed of data growth. In fact, the aforementioned Aliorc can also be used for compression. We can split some large fields to improve the compression ratio.
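
As a rough sketch of the lifecycle idea, the function below derives a retention period from how far back reads actually reach. Here the access span is taken to mean the age, in days, of the oldest partition that any recorded read touched. That definition, the function name, and the seven-day buffer are assumptions for illustration.

```python
from typing import List, Optional


def suggest_lifecycle_days(
    read_partition_ages_days: List[int],
    keep_forever: bool = False,
    buffer_days: int = 7,
) -> Optional[int]:
    """Return a suggested lifecycle in days, or None when no automatic value applies."""
    if keep_forever:
        # Critical raw data and audited data are never expired.
        return None
    if not read_partition_ages_days:
        # No recorded reads: leave the decision to a manual review.
        return None
    return max(read_partition_ages_days) + buffer_days


print(suggest_lifecycle_days([1, 3, 30]))
print(suggest_lifecycle_days([1, 3, 30], keep_forever=True))
```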

These cases are application scenarios of MaxCompute at Youku. Thank you all for listening to my speech.
