
By AliENT, Tiangan and Muli, Senior Developers at Alibaba's Digital Entertainment Group

If you have worked with metadata, you have probably spent nearly a third of your development time on preparation: researching the data, checking the real data and its structure, picking out the fields you need, and restructuring them in code. Only after this lengthy process can formal development begin. Today, I will show how the metadata center directly improves development efficiency.

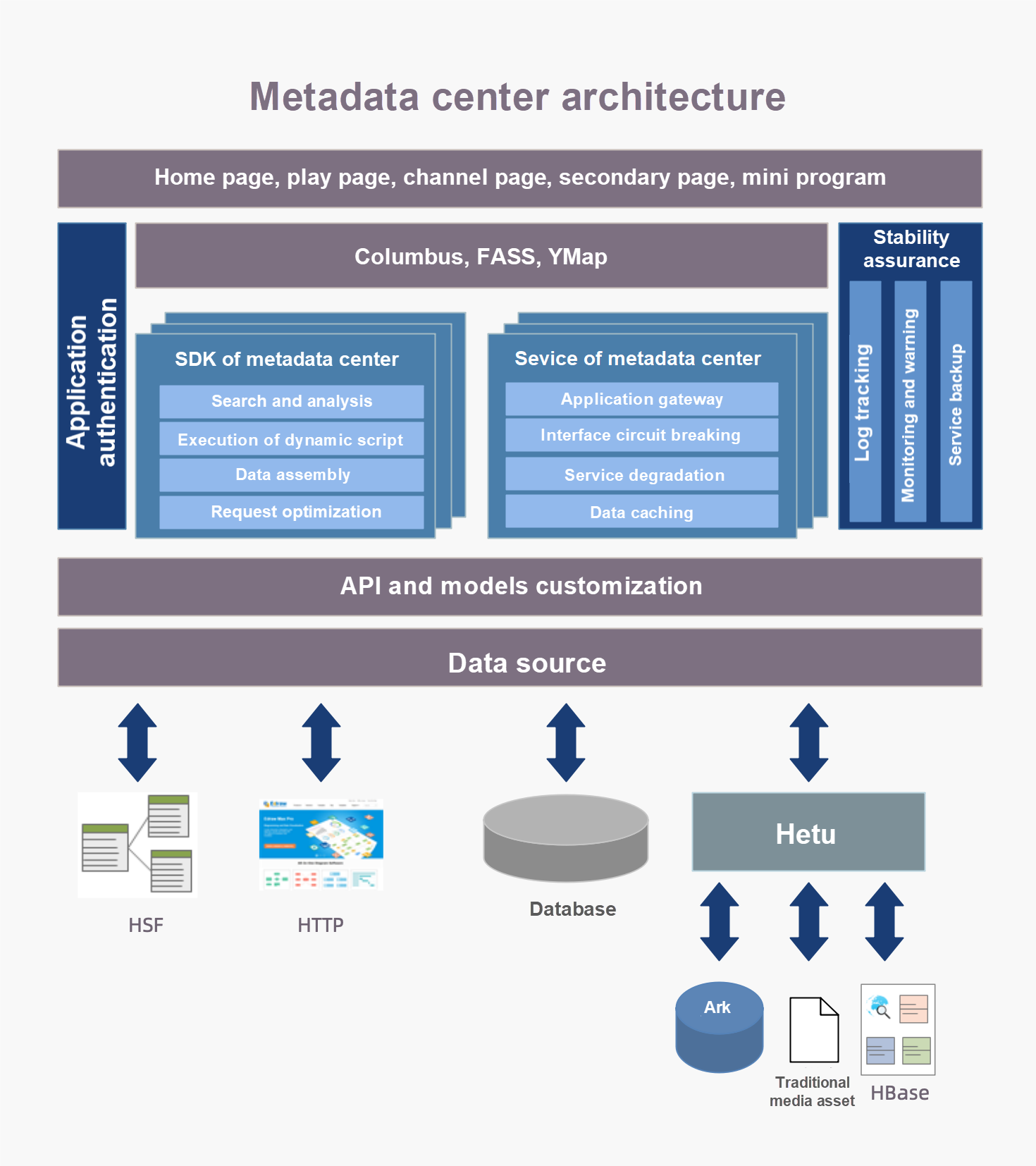

What is the metadata center? It has two main functions: a data source square that accumulates data sources, and custom interfaces built on top of them.

In the data source square, you can search by keyword for interfaces of basic platform services and quickly invoke them manually to check their results.

For custom interfaces, you define the field names and types that the business requires. The metadata center Software Development Kit (SDK) abstracts the interface calling logic into an interface call engine. During business development, you only need to import the interface sign issued by the platform into the engine to complete interface access, which replaces hard code with configuration.

In addition, the metadata center has established unified monitoring and circuit breaking capabilities with multi-thread pools to ensure the efficiency and stability of interface calling.

The metadata center contains two core modules. The data source module makes standardized calls to the interfaces of basic platform services, such as the ABCD system. The custom interface module orchestrates these interfaces, including the definition of input and output parameters for business development.

The data source module standardizes the interfaces of basic platform services. Unlike on other platforms, the basic platform services do not need to implement Java interfaces, which lowers the cost of access for basic services. Currently, the metadata center supports HTTP and RPC interfaces. Later versions will add support for data sources such as distributed databases, distributed caches, and mocks. The data source module uses the following techniques to ensure call stability.

Thread pool isolation ensures the stability of interface calls. The use of thread pools is adjusted dynamically according to interface call behavior, so that the poor performance of one interface does not affect calls to other interfaces.

Many queries, such as queryByIdList, limit the number of IDs per call. However, the business sometimes needs to query more IDs than the limit allows. In that case, the call must be made several times in batches and the results merged.
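The batching described here can be sketched roughly as follows. This is a minimal illustration, not the metadata center's code: the class name `BatchCaller`, the method `callInBatches`, and the `batchLimit` parameter are all hypothetical, and the query is passed in as a plain function.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

final class BatchCaller {
    /**
     * Splits ids into chunks of at most batchLimit, calls the query once per
     * chunk, and merges the partial results in the original order.
     */
    static <I, R> List<R> callInBatches(List<I> ids, int batchLimit,
                                        Function<List<I>, List<R>> query) {
        if (batchLimit <= 0) {
            throw new IllegalArgumentException("batchLimit must be positive");
        }
        List<R> merged = new ArrayList<>();
        for (int start = 0; start < ids.size(); start += batchLimit) {
            int end = Math.min(start + batchLimit, ids.size());
            // subList is a view, so no IDs are copied for each chunk
            merged.addAll(query.apply(ids.subList(start, end)));
        }
        return merged;
    }
}
```

With five IDs and a limit of two, the query function is invoked three times, with chunks of two, two, and one IDs.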

ThreadLocal. Some services can tolerate a long response time (RT) to obtain data, while others are sensitive to RT. You can therefore customize the timeout setting for each service.
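The article does not show how ThreadLocal is used for timeouts, so the following is only one plausible sketch: a caller sets a timeout for the current thread, and the call layer reads it when issuing the request. The class `CallTimeout`, its methods, and the 1000 ms default are assumptions made for illustration.

```java
import java.util.function.Supplier;

final class CallTimeout {
    private static final long DEFAULT_TIMEOUT_MS = 1000L; // assumed default
    private static final ThreadLocal<Long> OVERRIDE = new ThreadLocal<>();

    /** Timeout that the call layer should apply on the current thread. */
    static long currentMillis() {
        Long value = OVERRIDE.get();
        return value != null ? value : DEFAULT_TIMEOUT_MS;
    }

    /** Runs the call with a thread-scoped timeout and restores the previous value afterwards. */
    static <T> T withTimeout(long timeoutMs, Supplier<T> call) {
        Long previous = OVERRIDE.get();
        OVERRIDE.set(timeoutMs);
        try {
            return call.get();
        } finally {
            if (previous == null) {
                OVERRIDE.remove(); // avoid leaking the value into pooled threads
            } else {
                OVERRIDE.set(previous);
            }
        }
    }
}
```

An RT-sensitive service could wrap its call in `CallTimeout.withTimeout(200L, ...)`, while a tolerant one simply uses the default.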

During business development, an interface mainly does two things. First, it connects to the underlying data sources. Second, it combines the results of several data sources into a business interface for the upper-layer business. The Schema was designed with both points in mind and is divided into a call stage and a return stage. According to the requirements, it configures the data sources, the dependencies between data sources, and the return method of the interface. In the simplest case, the interface returns the results of a data source directly. You can also control the type and structure of the results by defining the result fields yourself and specifying the source of each value.

The engine resolves the dependencies, calls the data sources, and then processes the data according to the return configuration. If the return value is configured as an expression, the engine executes the expression directly to obtain an intermediate result and then processes that result according to any special configuration. If no expression is configured but the required fields are specified, the engine sets each field according to the value expression of that field. If a field is nested, the nested schema is processed recursively.
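The recursive handling of nested fields can be sketched as below. The schema representation is an assumption: here a schema is a map whose values are either an expression string or another map describing a nested object, and expression evaluation is delegated to a caller-supplied function. The names `ReturnSchema` and `build` are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

final class ReturnSchema {
    /**
     * Builds a result object from a schema. Each schema value is either a
     * String expression or a nested Map that describes a nested object.
     */
    static Map<String, Object> build(Map<String, Object> schema,
                                     Function<String, Object> evaluate) {
        Map<String, Object> result = new LinkedHashMap<>();
        for (Map.Entry<String, Object> field : schema.entrySet()) {
            Object spec = field.getValue();
            if (spec instanceof Map) {
                @SuppressWarnings("unchecked")
                Map<String, Object> nested = (Map<String, Object>) spec;
                // nested field: process the nested schema recursively
                result.put(field.getKey(), build(nested, evaluate));
            } else {
                // leaf field: set the value from its expression
                result.put(field.getKey(), evaluate.apply((String) spec));
            }
        }
        return result;
    }
}
```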

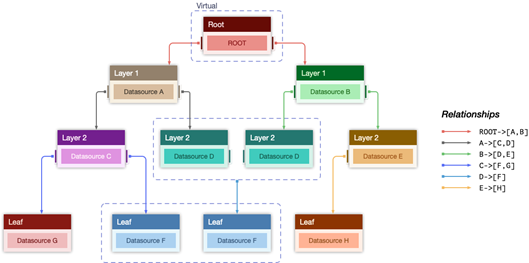

In many use cases, one business interface needs the support of several data sources, and some calls depend on the results of others. To handle these dependencies, a dependency resolver traverses all data source calls, analyzes the call relationships, and generates a dependency tree. Traversing the tree level by level, visiting each parent before its children, ensures that calls without dependencies run before the calls that depend on them. For example, a total of eight data sources need to be called: A, B, C, D, E, F, G, H, where C depends on A, D depends on A and B, E depends on B, G depends on C, F depends on C and D, and H depends on E. The generated dependency tree is represented by the following figure:

As shown in the figure, the level-order traversal starts at ROOT, which is a virtual node that needs no processing. Next, A and B are processed. They can be called concurrently or sequentially and are sent to the data source call layer. After nodes A and B are processed, their child nodes C, D, and E are processed, and so on until all nodes are processed.
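One way to compute such an execution order is to group calls into levels, where each level contains only calls whose dependencies were all placed in earlier levels. The sketch below works on a plain dependency map rather than the tree structure the article describes, so it illustrates the ordering and not the actual resolver. The class `DependencyLevels` is hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

final class DependencyLevels {
    /**
     * Groups calls into levels. Every call in a level depends only on calls
     * in earlier levels, so calls within one level may run concurrently.
     *
     * @param deps call name mapped to the names it depends on (empty list for none)
     */
    static List<List<String>> levels(Map<String, List<String>> deps) {
        Set<String> done = new HashSet<>();
        List<List<String>> result = new ArrayList<>();
        while (done.size() < deps.size()) {
            List<String> ready = new ArrayList<>();
            for (Map.Entry<String, List<String>> entry : deps.entrySet()) {
                if (!done.contains(entry.getKey()) && done.containsAll(entry.getValue())) {
                    ready.add(entry.getKey());
                }
            }
            if (ready.isEmpty()) {
                throw new IllegalStateException("cyclic or unknown dependency");
            }
            done.addAll(ready); // mark the whole level only after it is complete
            result.add(ready);
        }
        return result;
    }
}
```

For the eight data sources in the example, and with the map iterated in the order A to H, this yields three levels: A and B first, then C, D, and E, then F, G, and H.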

After calling the data source, the engine maps the results based on expressions. You may need to customize the keys that are returned. For example, a video query returns the key id by default, but the business side needs vid. In that case, you set a value expression for the key. We wanted expressions to be as simple as possible, so vid, for example, can be configured as datasource.video.response.id.
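A dotted expression such as datasource.video.response.id can be evaluated by walking nested maps one key at a time. The sketch below assumes that call results are held as nested `Map` objects. The article does not describe the engine's internal data model, and `PathExpression` is a hypothetical name.

```java
import java.util.Map;

final class PathExpression {
    /**
     * Resolves a dotted path such as "datasource.video.response.id" against
     * nested maps. Returns null when any segment of the path is missing.
     */
    static Object resolve(Map<String, Object> context, String path) {
        Object current = context;
        for (String key : path.split("\\.")) {
            if (!(current instanceof Map)) {
                return null; // the path goes deeper than the data
            }
            current = ((Map<?, ?>) current).get(key);
        }
        return current;
    }
}
```

The output key vid would then be filled with the value resolved from datasource.video.response.id.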

Since the overall technology stack is Java, a JVM-based scripting language was preferred. Groovy was chosen because it is a mature language with simple syntax. It is easy to integrate, requiring only one JAR package, and it is compatible with most Java syntax, so the learning cost is low.

The main problems with Groovy involve security and performance. Security is a serious issue because scripts are written by users, so risky code may be submitted. The system customizes the Groovy compiler and uses a sandbox to intercept code and filter out sensitive operations, preventing the execution of risky code as far as possible.

Groovy is far slower than Java, so some optimizations were made. For example, Groovy code is precompiled and cached so that no compilation occurs during execution. As a result, executing a single Groovy script poses no performance problem.
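The precompile-and-cache idea can be sketched independently of Groovy. In the sketch below, the compile step is an injected function, so no Groovy API is assumed. In a real integration it would wrap whatever the script engine provides. The class `ScriptCache` and its methods are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

final class ScriptCache<C> {
    private final Map<String, C> compiled = new ConcurrentHashMap<>();
    private final Function<String, C> compiler;

    ScriptCache(Function<String, C> compiler) {
        this.compiler = compiler;
    }

    /** Compiles the source ahead of time, for example when a configuration is published. */
    void precompile(String source) {
        get(source);
    }

    /** Returns the cached compiled form, compiling at most once per distinct source. */
    C get(String source) {
        return compiled.computeIfAbsent(source, compiler);
    }
}
```

Keying the cache by script source keeps the sketch short. A production cache would more likely key by a script ID or hash and evict entries when configurations change.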

However, testing showed that the overall execution efficiency of Groovy was still low, because one request may require hundreds of script executions. The cost of a single execution is not noticeable, but it adds up over many executions and remains far behind native Java.

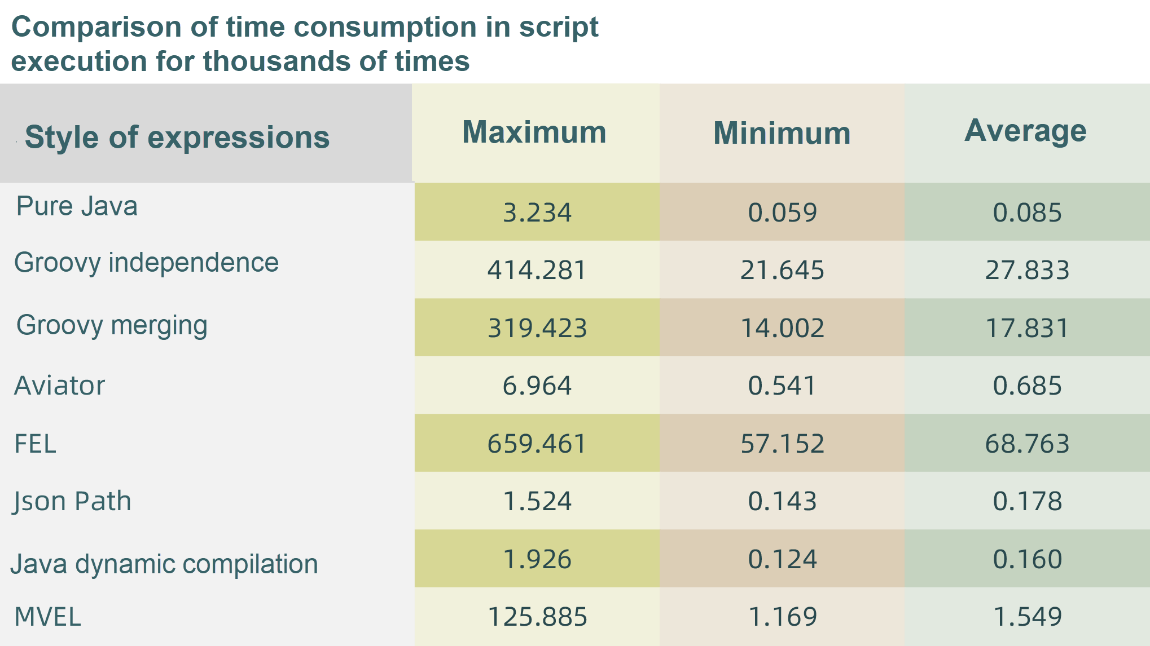

Since this problem is inherent to Groovy and could not be solved, we considered alternatives. We studied various expression engines, including Aviator, FEL, MVEL, JSONPath, and Java dynamic compilation. In the test, we implemented two scripts with the same semantics for each engine and compared them with native Java code. After a long period of running, the results are listed below:

The preceding figure shows the time taken to run the two scripts 1,000 times. Java dynamic compilation and JSONPath performed about 150 times better than Groovy. However, they also have disadvantages. JSONPath can only express single-line value-taking statements, while Java has more complicated syntax, and writing inner classes and methods in it is inconvenient. For our application scenarios, Java and JSONPath meet the requirements of more than 95% of cases. The remaining, more complex scripts can be written in Groovy.

Stability is the most important feature, and the stability of interface calls is an important part of it. We ensure stability through timely alerting and automatic circuit breaking.

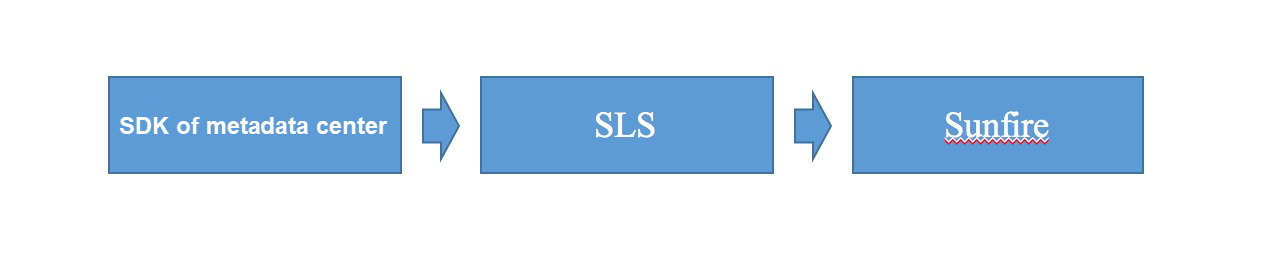

The metadata center SDK is decentralized: the interface call logic runs inside the local business application. The SDK uses a logback appender extension to send logs asynchronously to the log service. The monitoring platform then collects the logs, and monitoring alarms are built on them. The SDK also integrates with a trace monitoring platform, so you can check call status on the trace monitoring platform of the business application. In addition, based on a logback ClassicConverter extension, each log carries a unique ID that lets you follow the complete call process and quickly locate issues.

If your application has external dependencies, circuit breaking is an indispensable means of ensuring system stability. Imagine that the RT of a dependency suddenly increases and no circuit breaker trips: the thread pool resources of the application are consumed by this dependency, and the application becomes unresponsive and eventually crashes. The importance of the circuit breaker is evident. The metadata center supports three breaking strategies: RT, second-level exception ratio, and minute-level exception.
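To make the exception ratio strategy concrete, here is a minimal sketch of a breaker that opens when the share of failed calls in a statistics window exceeds a threshold. The article does not describe the metadata center's actual breaker, so every parameter (`maxRatio`, `minCalls`, `windowMs`, `openMs`), the class name, and the injected clock are assumptions for illustration only.

```java
import java.util.function.LongSupplier;

final class ExceptionRatioBreaker {
    private final double maxRatio;    // open when failed/total exceeds this ratio
    private final int minCalls;       // do not judge a window with fewer calls
    private final long windowMs;      // length of the statistics window
    private final long openMs;        // how long calls are rejected once open
    private final LongSupplier clock; // millisecond clock, injectable for tests

    private long windowStart;
    private int total;
    private int failed;
    private long openUntil;

    ExceptionRatioBreaker(double maxRatio, int minCalls, long windowMs,
                          long openMs, LongSupplier clock) {
        this.maxRatio = maxRatio;
        this.minCalls = minCalls;
        this.windowMs = windowMs;
        this.openMs = openMs;
        this.clock = clock;
        this.windowStart = clock.getAsLong();
    }

    /** Returns true if a call may proceed right now. */
    synchronized boolean allow() {
        return clock.getAsLong() >= openUntil;
    }

    /** Records a finished call and opens the breaker if the ratio is exceeded. */
    synchronized void record(boolean success) {
        long now = clock.getAsLong();
        if (now - windowStart >= windowMs) {
            resetWindow(now); // the previous window has expired
        }
        total++;
        if (!success) {
            failed++;
        }
        if (total >= minCalls && (double) failed / total > maxRatio) {
            openUntil = now + openMs;
            resetWindow(now);
        }
    }

    private void resetWindow(long now) {
        windowStart = now;
        total = 0;
        failed = 0;
    }
}
```

A caller checks `allow()` before invoking the dependency and reports the outcome with `record(...)`. While the breaker is open, the call is skipped, so the dependency cannot exhaust the thread pool.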

The following shows how the metadata center is used in business scenarios to improve development efficiency.

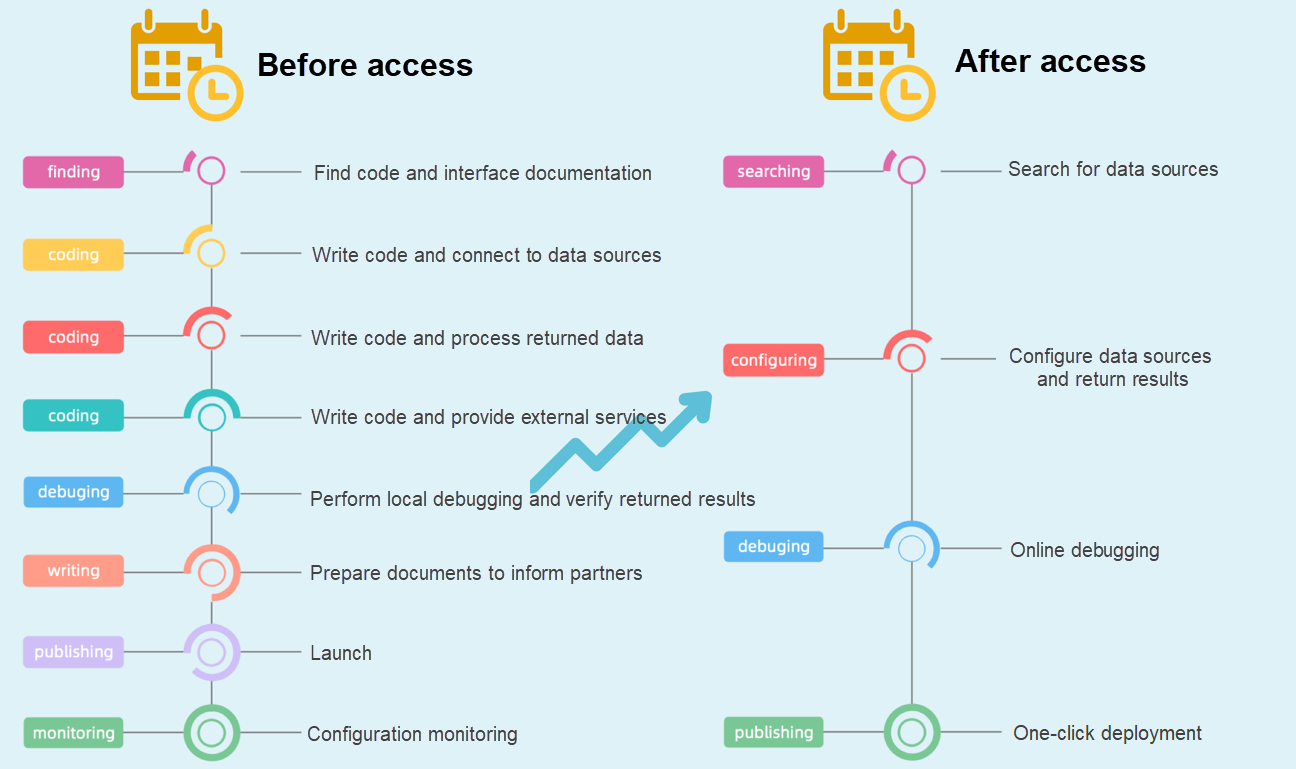

Youku's content distribution is mainly developed on a unified development framework, which makes it the best entry point for improving development efficiency. The framework has powerful configuration capabilities, but data source development on it still involves a series of long and repetitive operations: finding interfaces, learning how to call them, introducing second-party data and hard code, testing, and Aone publishing. Whenever an interface needs to be switched or the business needs additional fields, the change must be made in hard code. This reduces development efficiency and increases system instability due to frequent releases. By integrating the metadata center, the development framework can solve these problems.

According to feedback from developers, developing a new component requires reading some data. By combining the relevant interfaces and required fields configured in the metadata center with the configuration of the development framework, we achieved quick online verification from development to publishing without hard code.

As we all know, Internet products focus on fast iteration and trial and error. A quick launch is necessary for businesses to stay relevant and ahead of the competition. This requires a more efficient technical R&D process, which can be achieved by integrating the metadata center with FaaS. Since data can be accessed quickly through the metadata center, developers can write and save business logic directly with the out-of-the-box features of FaaS, and the system builds and deploys it automatically. The entire process is simple, efficient, and closely matched to demand. Youku's earlier mini program used this combination of the metadata center and FaaS.

The metadata center platform has been initially launched and is applied in some business scenarios of the Youku app, through integration with the content distribution development framework and, for mini programs, through integration with FaaS. According to feedback, the data source access layer has moved from hard code to configuration, which has improved development efficiency.

The basic functions of the platform have been built and have improved efficiency. However, more work is needed to make full use of its capabilities, such as improving configuration efficiency and supporting data sources over more protocols.
