By Yi Xiu

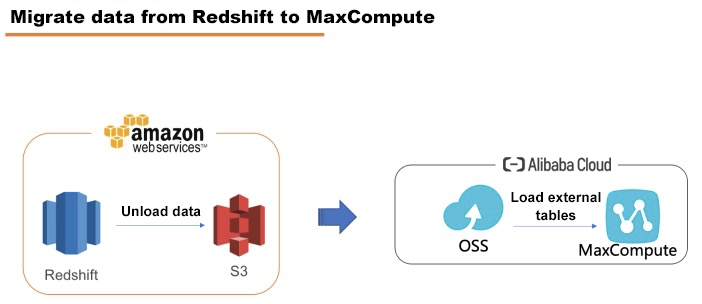

To migrate data from Amazon Redshift to Alibaba Cloud MaxCompute, the data usually needs to be unloaded to S3 first and then to Alibaba Cloud Object Storage Service (OSS). MaxCompute then directly reads data from OSS by using external tables.

The following figure describes the overall migration process from Redshift to MaxCompute:

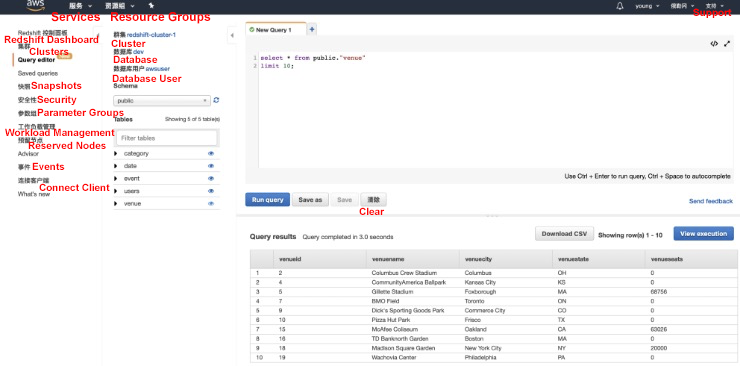

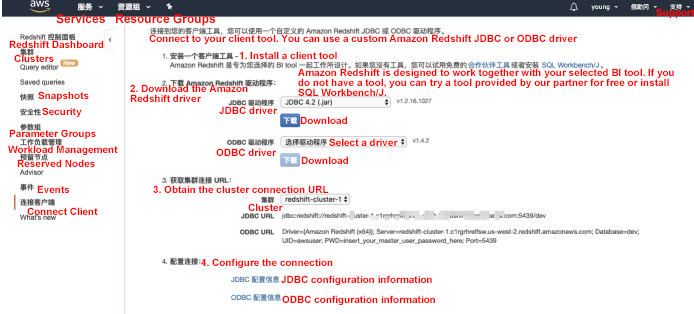

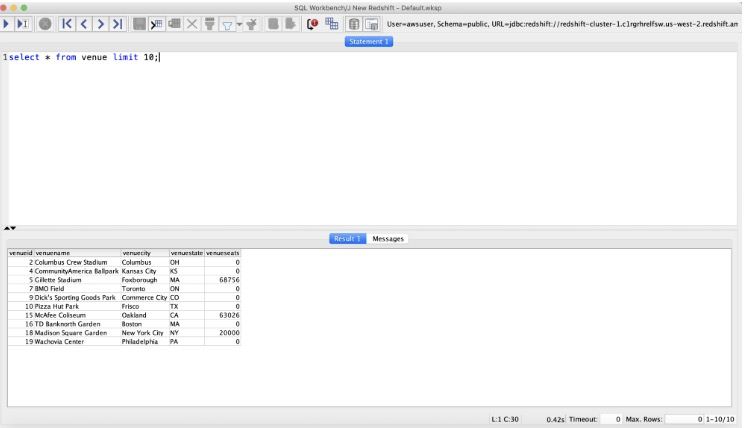

In this article, SQL Workbench/J is used to connect Redshift for case demonstration. The Amazon Redshift Query Editor may often report some strange errors. We recommend that you use SQL Workbench/J.

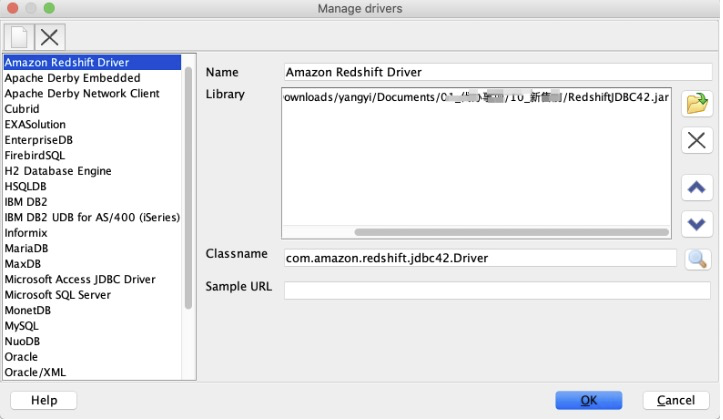

Download the Amazon Redshift JDBC Driver at https://s3.amazonaws.com/redshift-downloads/drivers/jdbc/1.2.16.1027/RedshiftJDBC42-1.2.16.1027.jar (version 4.2 is recommended).

Create a new driver in SQL Workbench/J, select the driver's downloaded jar file and enter "com.amazon.redshift.jdbc42. Driver" in the Classname text field.

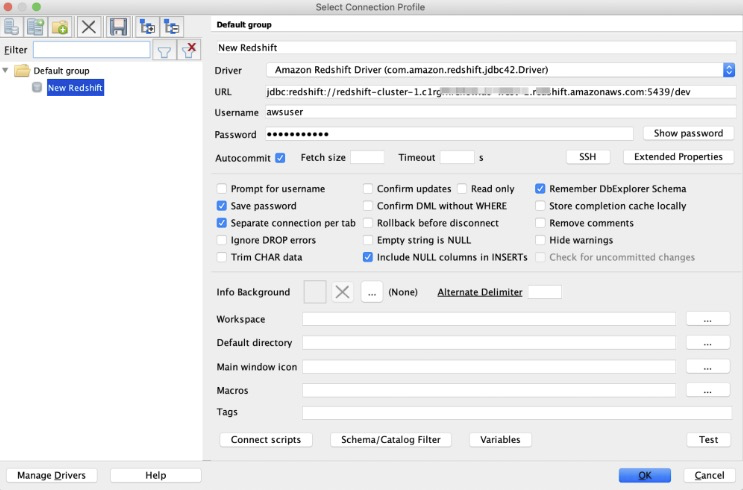

Configure a new connection, select the driver that you just created, copy JDBC URL, database username, and password, and check Autocommit.

If a connection times out during configuration, you must configure a security policy in the ECS VPC security group. Visit https://docs.aws.amazon.com/redshift/latest/gsg/rs-gsg-authorize-cluster-access.html for details.

For more information about downloading and configuring Workbench/J, visit https://docs.aws.amazon.com/redshift/latest/mgmt/connecting-using-workbench.html

The following screenshots show the pages where you can download the JDBC driver and view the JDBC URL.

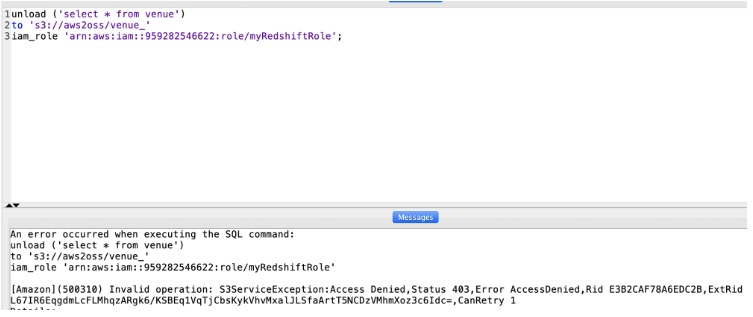

Before unloading data to S3, make sure that you have the required IAM permissions. If you receive the "S3ServiceException: Access Denied" error when you run the COPY, UNLOAD or CREATE LIBRARY command, your cluster does not have proper access to Amazon S3. The following figure shows the access error:

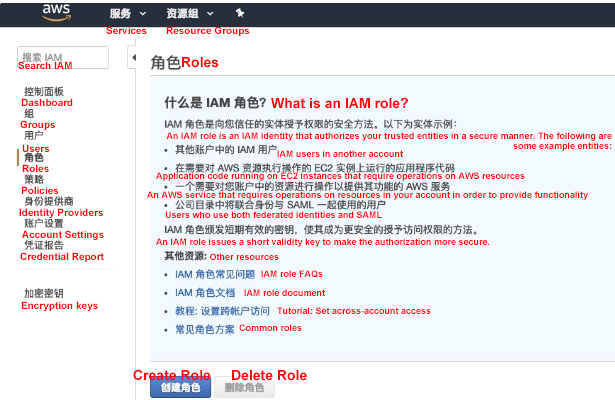

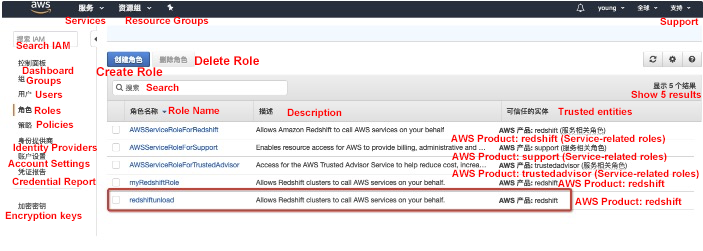

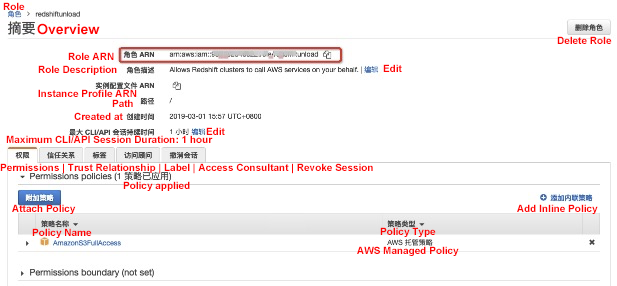

Visit https://console.aws.amazon.com/iam/home#/roles and create a role.

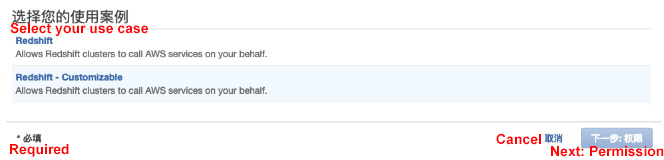

Select the Redshift service and Redshift - Customizable

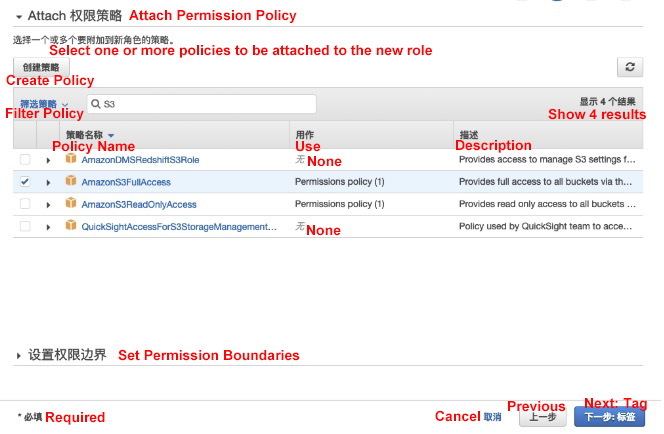

Search for S3 policies, check AmazonS3FullAccess and click Next.

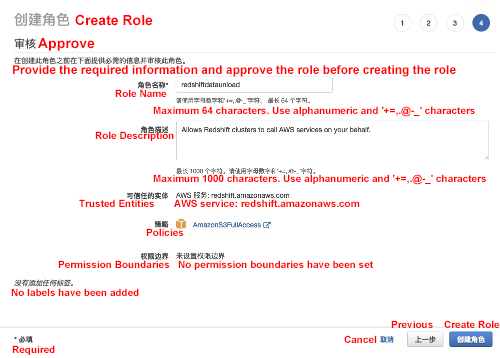

Name the role as redshiftunload.

Open the defined role and copy the role ARN. (You need to use the unload command.)

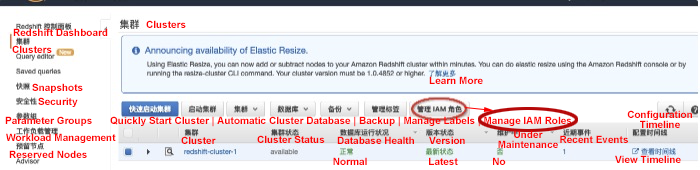

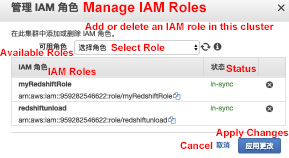

Go to the Redshift cluster and click Manage IAM Roles.

Select the redshiftunload role that you defined and apply the changes.

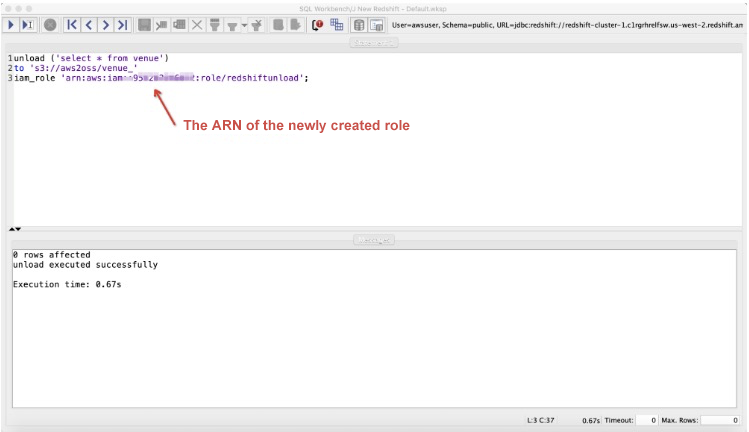

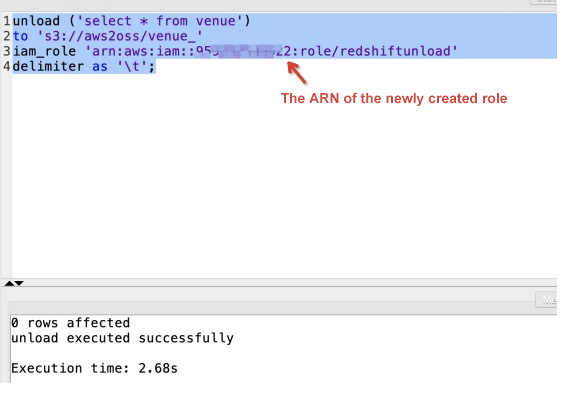

Run the Unload command to unload data.

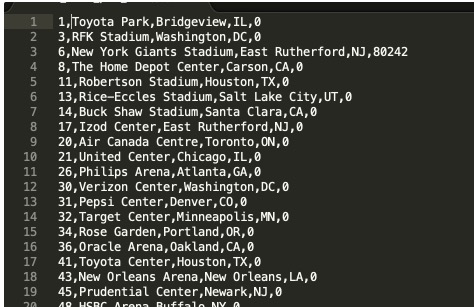

Unload data to the corresponding S3 bucket using the default pipe separator (|) and store the data by using the venue_ prefix:

unload ('select * from venue')

to 's3://aws2oss/venue_'

iam_role '<the ARN of the redshiftunload role that you created>';

--parallel off; --Unload data continuously. One file is unloaded each time. The maximum size of each file is 6.2 GB.The following figure shows the execution process.

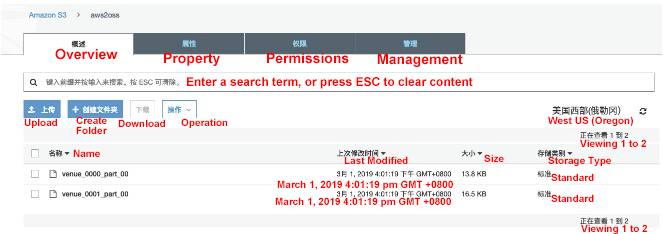

In the corresponding Amazon S3 bucket, you can see two files with the venue_ prefix. You can open the folder and check the data.

The data is separated by the pipe separator (|), as shown in the following figure.

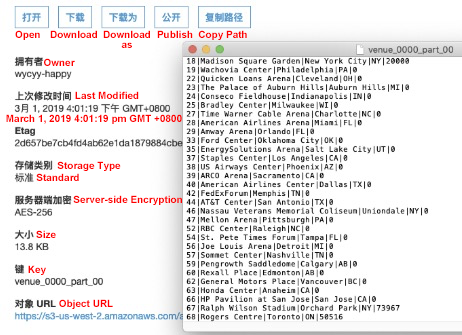

Run the following command to unload the same result sets into a tab-delimited file:

unload ('select * from venue')

to 's3://aws2oss/venue_'

iam_role '<the ARN of the redshiftunload role that you created>'

delimiter as '\t';

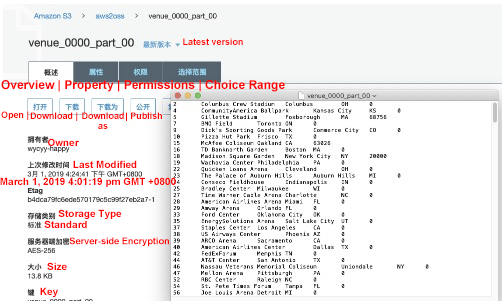

Open the file and you can see that the data file is as follows:

----To make it easier for MaxCompute to read data, we use the comma (,) delimiter. --`sql

unload ('select * from venue')

to 's3://aws2oss/venue_'

iam_role '<the ARN of the redshiftunload role that you created>'

delimiter as ','

NULL AS '0';

For more information about the unload command, see: https://docs.aws.amazon.com/redshift/latest/dg/r_UNLOAD.html

The online migration tool only supports data sources in the same country. To implement data migration for data sources in different countries, we recommend that you use the OSS migration tool (ossimport), deploy migration services yourself and purchase a private line. For more information, see https://www.alibabacloud.com/help/doc-detail/56990.htm

OSS is compatible with the S3 API and allows you to seamlessly migrate your data from AWS S3 to Alibaba Cloud OSS. After data is migrated to AWS S3, you can still access OSS using the S3 API. For more information, see our S3 migration tutorial.

During an online migration task, reading data from Amazon S3 will incur outbound Internet traffic fees, which are charged by Amazon.

By default, online migration does not support cross-border data migration. If you want cross-border migration, open a ticket to apply for the permission to configure the task.

Next, we will use a RAM subaccount to demonstrate how to migrate data from Amazon S3 to Alibaba Cloud OSS.

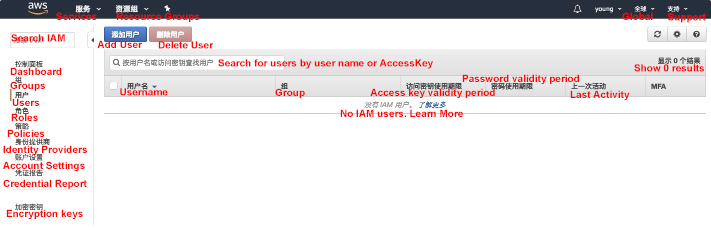

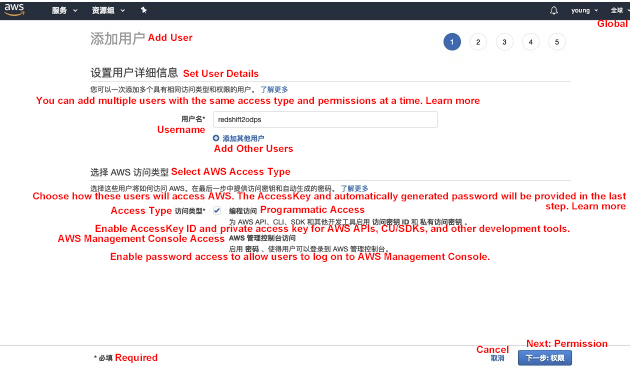

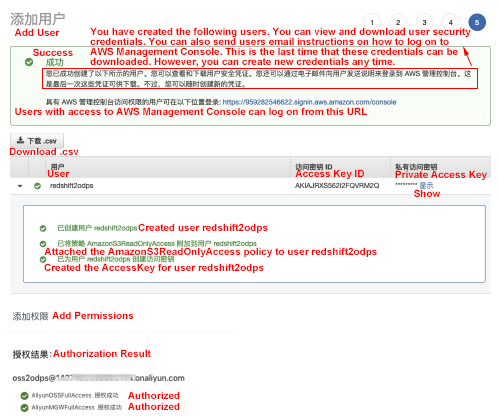

Step 1: Go to IAM and select Add User.

Step 2: Add a user and check Create AK.

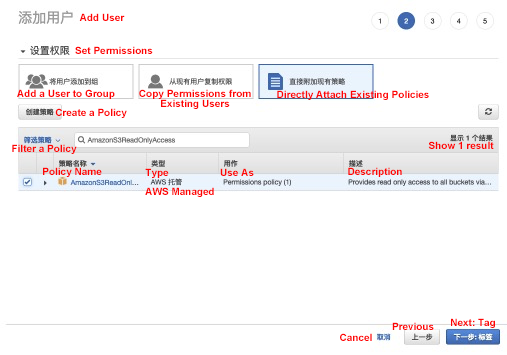

Step 3: Select Directly Attach an Existing Policy and grant AmazonS3ReadOnlyAccess.

Step 4: Record the AK information, which will be used for data migration later.

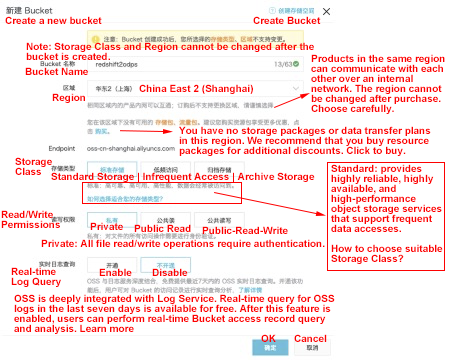

Create a bucket in Alibaba Cloud OSS:

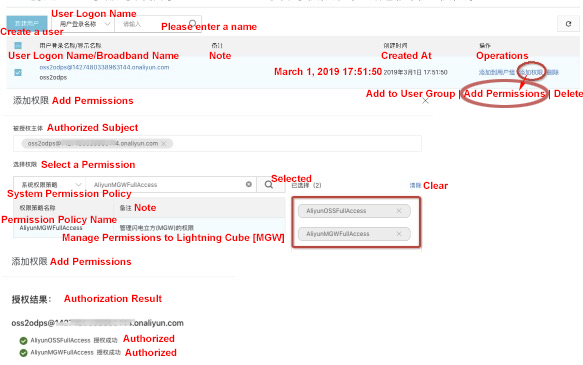

Create a RAM subaccount and grant the OSS bucket Read+Write permission and the online migration management permission.

Migration will use the network resources on the source side and the target side. File checks are performed on the source side and the target side during the migration process. If a file on the source side has the same name as the file on the target side and it was updated more recently than the file on the target side, the source side file will overwrite the target side file.

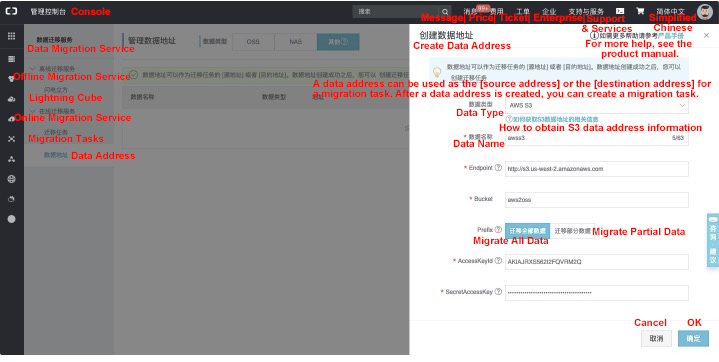

Go to the Alibaba Cloud online data migration console: https://mgw.console.aliyun.com/?spm=a2c4g.11186623.2.11.10fe1e02iYSAhv#/job?_k=6w2hbo and log on with the subaccount that you created in the "Preparations in Alibaba Cloud OSS" section.

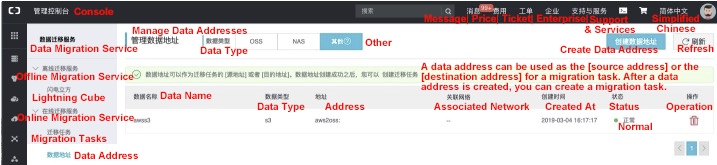

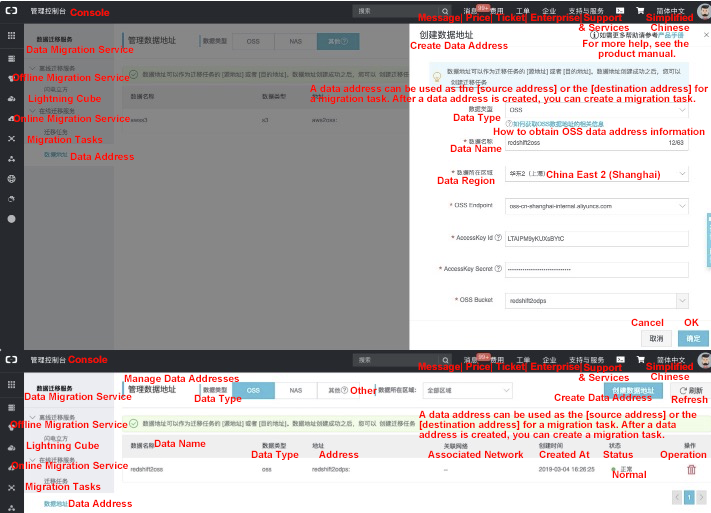

Go to Data Migration Service > Data Location > Data Type > Other

For more information about the configuration, visit https://www.alibabacloud.com/help/doc-detail/95159.htm

For more information about the configuration, visit https://www.alibabacloud.com/help/doc-detail/95159.htm

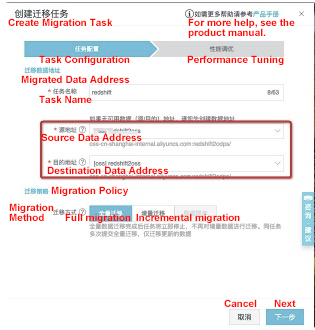

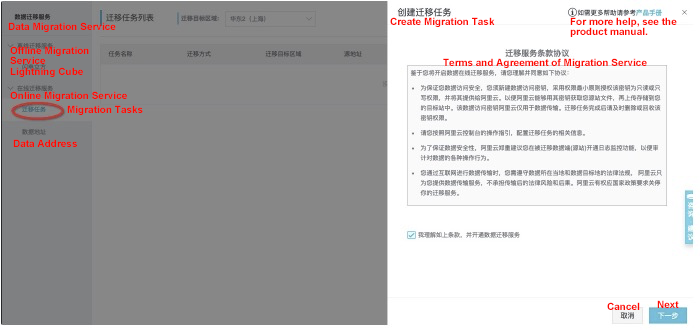

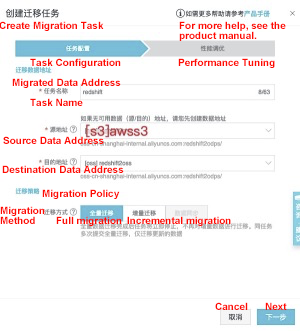

Click the Migration Task tab in the left panel and click Create Migration Task.

The data in OSS is as follows:

Before querying OSS data, you need to grant the MaxCompute account the permissions required for accessing OSS data first. For more information about authorization, see Authorization. You must grant the MaxCompute account the required permissions to access OSS data before MaxCompute can directly access data in OSS. You can grant permissions in two ways:

1. When the owner of MaxCompute and OSS is the same account, log on with the Alibaba Cloud account and click here to finish the authorization in just one click.

2. If the MaxCompute account and the OSS account are not the same, you need to finish the authorization by logging on to with the OSS account. For more information,see this document.

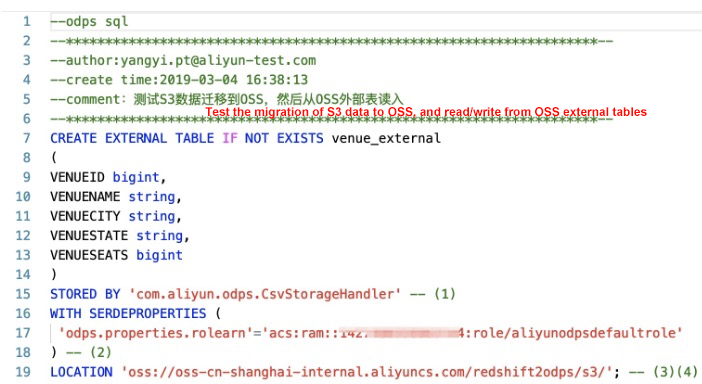

You can create an external table in DataWorks as follows:

DDL statements for creating an external table in MaxCompute:

CREATE EXTERNAL TABLE IF NOT EXISTS venue_external

(

VENUEID bigint,

VENUENAME string,

VENUECITY string,

VENUESTATE string,

VENUESEATS bigint

)

STORED BY 'com.aliyun.odps.CsvStorageHandler' -- (1)

WITH SERDEPROPERTIES (

'odps.properties.rolearn'='acs:ram::*****:role/aliyunodpsdefaultrole'

) -- (2)

LOCATION 'oss://oss-cn-shanghai-internal.aliyuncs.com/redshift2odps/s3/'; -- (3)(4)com.aliyun.odps.CsvStorageHandler is a built-in StorageHandler for processing CSV files. It defines how to read and write CSV files. You only need to specify this name. The relevant logic is implemented by the system. If you define a different delimiter when unloading data into S3, MaxCompute also supports the Handler for that customized delimiter. For more information, visit https://help.aliyun.com/document_detail/45389.html

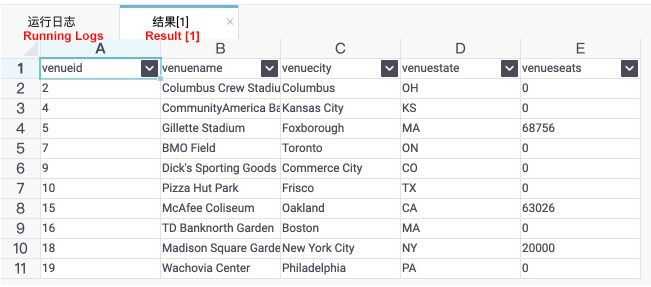

Results can be directly returned: select * from venue_external limit 10;

The following figure shows the results in DataWorks.

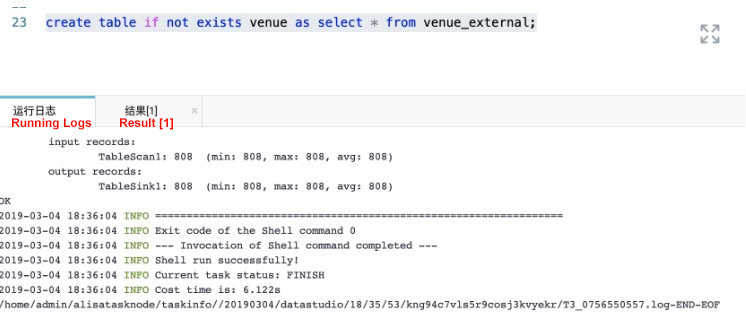

If you need to perform complex queries on very large volumes of data, we recommend that you convert the external table into an internal table. To do this, run the following: create table if not exists venue as select * from venue_external;

Archive Log Service Data to MaxCompute for Offline Analysis Using DataWorks

137 posts | 21 followers

FollowAlibaba Cloud MaxCompute - January 4, 2022

ApsaraDB - April 28, 2020

Alibaba Clouder - February 7, 2018

Alibaba Clouder - January 3, 2018

Alibaba Cloud MaxCompute - July 15, 2021

Alibaba Cloud MaxCompute - September 12, 2018

137 posts | 21 followers

Follow Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn More Database Migration Solution

Database Migration Solution

Migrating to fully managed cloud databases brings a host of benefits including scalability, reliability, and cost efficiency.

Learn More Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn MoreMore Posts by Alibaba Cloud MaxCompute