By Guyi and Shimian

A software upgrade at GitHub once caused more than six hours of global service interruption. Meta Platforms, Inc. (formerly Facebook, Inc.) also suffered more than six hours of worldwide outage because of a faulty configuration push.

Large IT system failures like these occur from time to time. Building a secure and reliable online application architecture for the enterprise is the primary responsibility of system architects. The architecture must handle the current business traffic safely and be able to absorb the growth expected in the coming years. This capability has nothing to do with technology trends. It depends mainly on the talent available in the market for the selected technology and on the business pattern and growth direction of the enterprise.

Let's put aside the infrastructure of IT systems and the specifics of any enterprise's business, and abstract an online application into two key metrics: traffic and capacity. The purpose of capacity is to meet the needs of traffic. The goal of continuous optimization is to find a balance point between the two metrics that reflects technological advancement. At this balance point, the existing resources (capacity) serve the existing and predictable business traffic efficiently and accurately. Here, efficient means high performance, and accurate means lossless.

This 3-part series aims to bring technology back to the essential problem that system architects need to solve: how to minimize traffic loss of online applications.

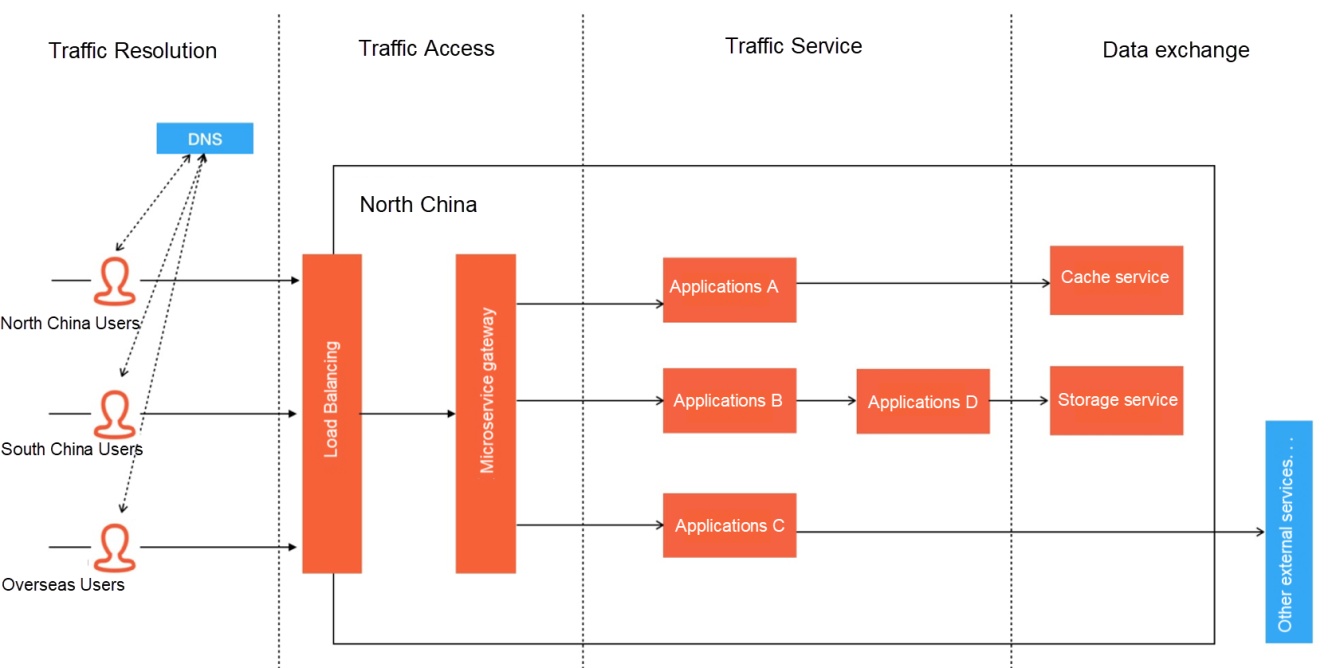

Let's first consider a general business deployment architecture. (Note: The authors are most familiar with Java and cloud services, so many examples in this series use technical frameworks from the Java ecosystem. Some details may not apply directly to other programming languages.)

The diagram above shows a typical, simple business architecture that serves users worldwide. When a user's request arrives, load balancing forwards it to the microservice gateway cluster. After completing traffic cleaning, authentication, auditing, and other basic work, the microservice gateway routes the request to the microservice cluster according to the business form. The microservice cluster eventually exchanges (reads/writes) data with different data services.

According to the description above, we can temporarily divide the entire traffic request service process into four main stages: traffic resolution, traffic access, traffic service, and data exchange. There is a possibility of traffic loss in any of these four stages, and the solutions we adopt to tackle traffic loss in each stage are completely different. Some can be solved with framework configurations, while others may require the reconstruction of the overall architecture. We will analyze these four stages one by one throughout the three articles. This article mainly discusses traffic resolution and traffic access.

Resolution is essentially the process of obtaining the address of a service from its name, which in the general sense is Domain Name System (DNS) resolution. However, the management policies of various service providers can affect traditional domain name resolution, leading to problems with domain name caching, domain name forwarding, resolution latency, and cross-service-provider access. In particular, for Internet services with global reach, traditional DNS resolution of a web service does not identify where the end user comes from and returns a randomly selected IP address. Cross-service-provider resolution can then reduce resolution efficiency, and remote access becomes slower.

The problems above may lead to traffic loss. Common solutions include intelligent resolution and HTTPDNS, which are described below:

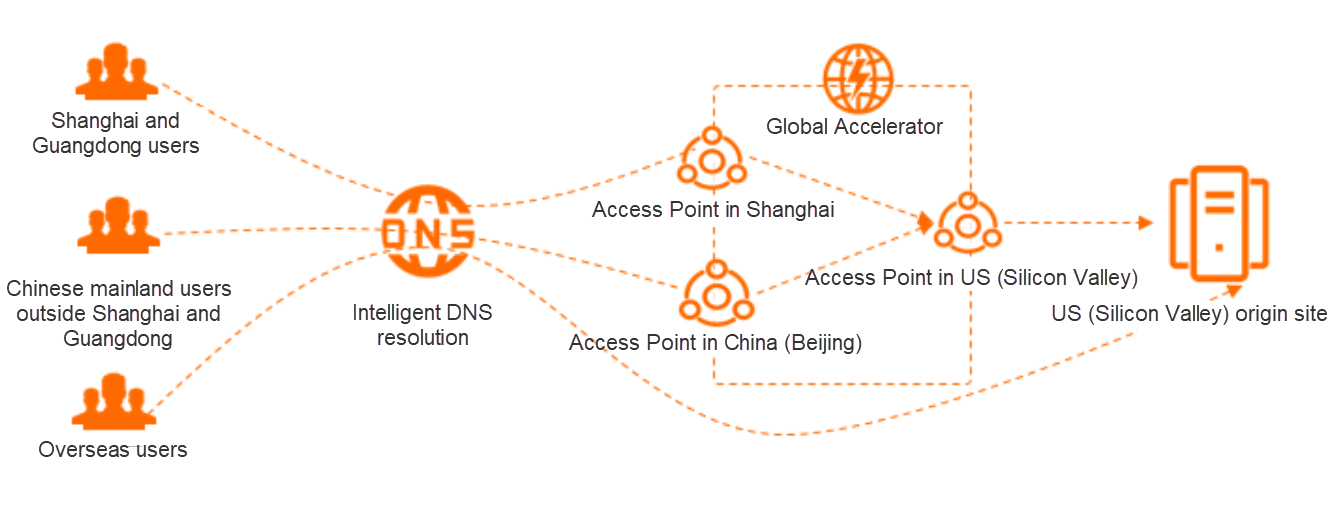

Intelligent resolution mainly solves the network blocking caused by cross-network resolution between different operators. Based on the address of the requesting client, it selects the access point in the corresponding network and resolves to the correct address. As the technology has evolved, some products have added network quality monitoring nodes in different regions, which enables smarter resolution based on the service quality of the different nodes in a group of machines. Currently, intelligent resolution on Alibaba Cloud relies on the cloud infrastructure. It can dynamically scale the nodes in the node pool with the application as the unit, maximizing the value of cloud elasticity in this field.

The image is from the Alibaba Cloud resolution document.
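The core idea of line-based resolution can be illustrated with a minimal Java sketch. It is not how any particular DNS product is implemented: the class name `LineAwareResolver`, the line label `operator-a`, and the documentation-range IP addresses are all hypothetical, and real products also weigh node health and network quality.

```java
import java.util.List;
import java.util.Map;

// Minimal sketch of line-based ("intelligent") resolution.
// All names and addresses here are hypothetical examples.
final class LineAwareResolver {
    private final Map<String, List<String>> addressesByLine;
    private final List<String> defaultAddresses;

    LineAwareResolver(Map<String, List<String>> addressesByLine,
                      List<String> defaultAddresses) {
        this.addressesByLine = addressesByLine;
        this.defaultAddresses = defaultAddresses;
    }

    // Returns the addresses published for the caller's network line,
    // or the default set when the line is unknown or has no addresses.
    List<String> resolve(String clientLine) {
        if (clientLine == null) {
            return defaultAddresses;
        }
        List<String> matched = addressesByLine.get(clientLine);
        return (matched == null || matched.isEmpty()) ? defaultAddresses : matched;
    }

    public static void main(String[] args) {
        LineAwareResolver resolver = new LineAwareResolver(
                Map.of("operator-a", List.of("192.0.2.10")),
                List.of("203.0.113.10"));
        System.out.println(resolver.resolve("operator-a"));
        System.out.println(resolver.resolve("unknown-operator"));
    }
}
```

Falling back to a default address set is an assumption of this sketch. It keeps a request from an unrecognized network resolvable instead of failing outright.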

HTTPDNS, as its name implies, replaces the DNS discovery protocol with HTTP. Typically, a service provider (or a self-built deployment) runs an HTTP server that exposes a very simple HTTP interface. Before the client initiates access, the HTTPDNS SDK performs resolution first. If that resolution fails, it falls back to the original LocalDNS. HTTPDNS is very effective against DNS hijacking, domain name caching, and similar problems. Its disadvantage is that most solutions require integrating an SDK into the client, which is intrusive, and the server side is somewhat costly to build. However, as cloud providers keep iterating in this field, more lightweight solutions have emerged, which will help HTTPDNS become one of the mainstream directions in the evolution of DNS.
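The "HTTPDNS first, LocalDNS on failure" order described above can be sketched in Java as follows. This is only an illustration: `FallbackResolver` is a hypothetical name, and the HTTPDNS client is modeled as a plain function because the actual SDK interface differs by provider. The local lookup uses the JDK's `InetAddress.getAllByName`.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Function;
import java.util.stream.Collectors;

// Sketch of HTTPDNS resolution with a LocalDNS fallback.
// The HTTPDNS client is a hypothetical function, not a real SDK API.
final class FallbackResolver {
    private final Function<String, Optional<List<String>>> httpDns;
    private final Function<String, List<String>> localDns;

    FallbackResolver(Function<String, Optional<List<String>>> httpDns,
                     Function<String, List<String>> localDns) {
        this.httpDns = httpDns;
        this.localDns = localDns;
    }

    List<String> resolve(String host) {
        Optional<List<String>> viaHttp;
        try {
            viaHttp = httpDns.apply(host);
        } catch (RuntimeException e) {
            // Any HTTPDNS error counts as a failed resolution.
            viaHttp = Optional.empty();
        }
        if (viaHttp.isPresent() && !viaHttp.get().isEmpty()) {
            return viaHttp.get();
        }
        return localDns.apply(host); // fall back to LocalDNS
    }

    // LocalDNS lookup through the JDK's standard resolver.
    static List<String> systemLookup(String host) {
        try {
            return Arrays.stream(InetAddress.getAllByName(host))
                    .map(InetAddress::getHostAddress)
                    .collect(Collectors.toList());
        } catch (UnknownHostException e) {
            return List.of();
        }
    }

    public static void main(String[] args) {
        // Simulate an unavailable HTTPDNS service to exercise the fallback.
        FallbackResolver resolver = new FallbackResolver(
                host -> Optional.empty(), FallbackResolver::systemLookup);
        System.out.println(resolver.resolve("localhost"));
    }
}
```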

When DNS resolution returns the correct address, the request reaches the core entrance of traffic access. The key role at this stage is the gateway, which usually provides important functions such as routing, authentication, auditing, protocol conversion, API management, and security protection. A common combination in the industry is load balancing plus a microservice gateway. However, this combination tends to overlook some scenarios. As shown in the following figure, the three easily overlooked scenarios are traffic security, gateway application control, and traffic routing.

Unsafe traffic falls into two categories: attack traffic and traffic that exceeds the system capacity. Defenses against attack traffic rely mainly on software and hardware firewalls. These solutions are quite mature, so we will not go into them here. Non-attack traffic that exceeds the system capacity is harder to identify. The difficulty in this scenario is to serve as much normal traffic as possible while protecting the business system as a whole from an avalanche.

A simple approach is to apply RateLimit-style traffic control at the gateway based on request QPS, concurrency, requests per minute, and interface parameters. This requires the system architect to know the system capacity first, so we recommend an overall capacity evaluation before setting up such protection. The evaluation is not simply stress testing some APIs but end-to-end (full-link) stress testing of the production environment (Part 3 has a dedicated section on this), followed by targeted adjustments to the security policy.
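As an illustration of QPS-based limiting, here is a minimal token-bucket sketch in Java. The token bucket is one common rate-limiting technique and is not necessarily what any given gateway uses. The class name `TokenBucket` and the rate and burst values are assumptions for this example. In practice, the rate would come from the capacity evaluation described above.

```java
import java.util.function.LongSupplier;

// Minimal token-bucket sketch for QPS-style limiting at a gateway.
// The name and parameters are illustrative assumptions.
final class TokenBucket {
    private final double permitsPerSecond;
    private final double burst;
    private final LongSupplier nanoClock; // injectable clock, e.g. System::nanoTime
    private double tokens;
    private long lastRefillNanos;

    TokenBucket(double permitsPerSecond, double burst, LongSupplier nanoClock) {
        this.permitsPerSecond = permitsPerSecond;
        this.burst = burst;
        this.nanoClock = nanoClock;
        this.tokens = burst; // start with a full bucket
        this.lastRefillNanos = nanoClock.getAsLong();
    }

    // Returns true if the request is admitted, false if it should be rejected.
    synchronized boolean tryAcquire() {
        long now = nanoClock.getAsLong();
        double refilled = (now - lastRefillNanos) * permitsPerSecond / 1_000_000_000.0;
        tokens = Math.min(burst, tokens + refilled);
        lastRefillNanos = now;
        if (tokens >= 1.0) {
            tokens -= 1.0;
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        TokenBucket bucket = new TokenBucket(100.0, 10.0, System::nanoTime);
        int admitted = 0;
        for (int i = 0; i < 50; i++) {
            if (bucket.tryAcquire()) {
                admitted++;
            }
        }
        System.out.println("admitted " + admitted + " of 50 back-to-back requests");
    }
}
```

The bucket admits short bursts up to `burst` requests while holding the long-run rate at `permitsPerSecond`, so normal traffic is still served when the limit is reached and only the excess is rejected.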

The gateway is, in essence, still an application. In the online systems of our customers whose business is fully built on a microservice architecture, the gateway occupies 1/6 to 1/3 of the machine resources of the whole system, which makes it a very large application. As an application, it goes through routine O&M operations such as startup, stop, deployment, and scale-out. However, since the gateway is the throat of a business system, it cannot be operated on frequently, which means several principles must be followed within the development team:

In addition to the two development-side principles mentioned above, there are corresponding principles on the O&M side. Besides routine monitoring and alerting, O&M requires adaptive elasticity. However, gateway elasticity involves many factors. It must be coordinated with the load balancing in front of the gateway. For instance, before deploying the application, the corresponding node must be taken offline or have its weight reduced at the load balancer, and it is brought back online only after the deployment succeeds. Elasticity must also work together with the automation of the application control system to keep online traffic lossless.
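The coordination between load balancing and deployment can be sketched as an ordered sequence of steps. The Java sketch below uses two hypothetical interfaces, `LoadBalancer` and `GatewayNode`, which stand in for whatever control APIs the load balancer and the application control system actually expose.

```java
// Sketch of a traffic-lossless gateway redeploy.
// LoadBalancer and GatewayNode are hypothetical interfaces, not a real API.
final class LosslessGatewayDeploy {
    interface LoadBalancer {
        void setWeight(String node, int weight);
    }

    interface GatewayNode {
        void deploy(String node);
        boolean isHealthy(String node);
    }

    // Returns true if the node was redeployed and put back into rotation.
    static boolean redeploy(String node, int normalWeight,
                            LoadBalancer lb, GatewayNode ops) {
        lb.setWeight(node, 0);            // 1. stop routing new traffic to the node
        ops.deploy(node);                 // 2. deploy the new version
        if (!ops.isHealthy(node)) {
            return false;                 // 3. failed deploy: keep the node out of rotation
        }
        lb.setWeight(node, normalWeight); // 4. restore traffic after success
        return true;
    }

    public static void main(String[] args) {
        LoadBalancer lb = (node, weight) ->
                System.out.println("weight of " + node + " set to " + weight);
        GatewayNode ops = new GatewayNode() {
            @Override public void deploy(String node) {
                System.out.println("deploying " + node);
            }
            @Override public boolean isHealthy(String node) {
                return true;
            }
        };
        System.out.println("back in rotation: " + redeploy("gw-1", 100, lb, ops));
    }
}
```

The node returns to rotation only after a passing health check, so a failed deployment never receives user traffic.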

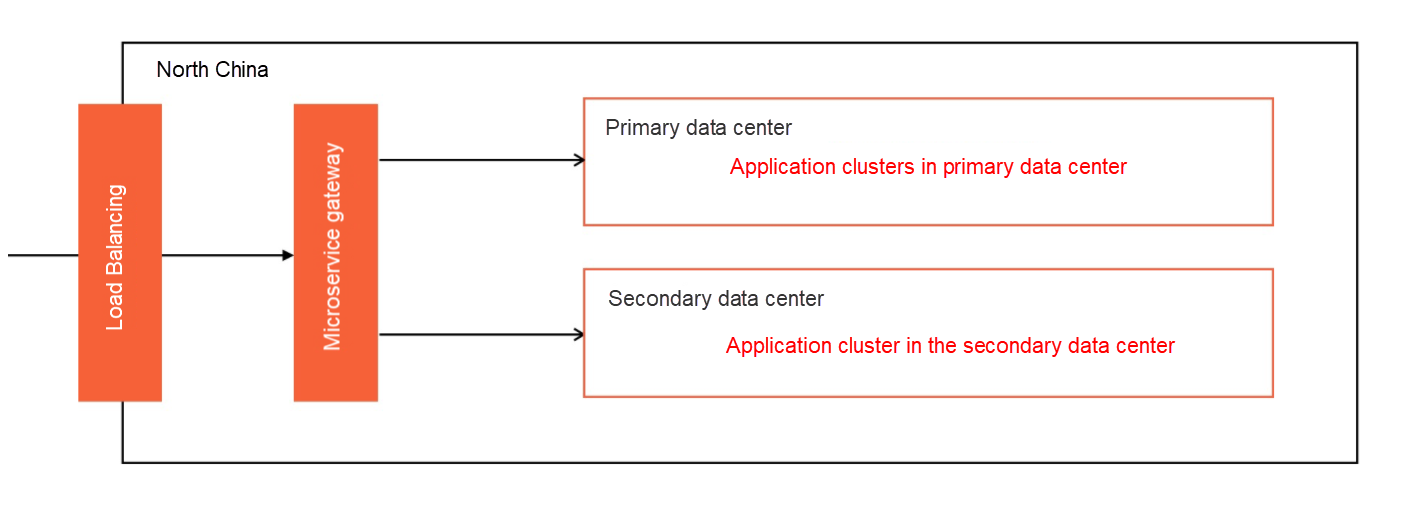

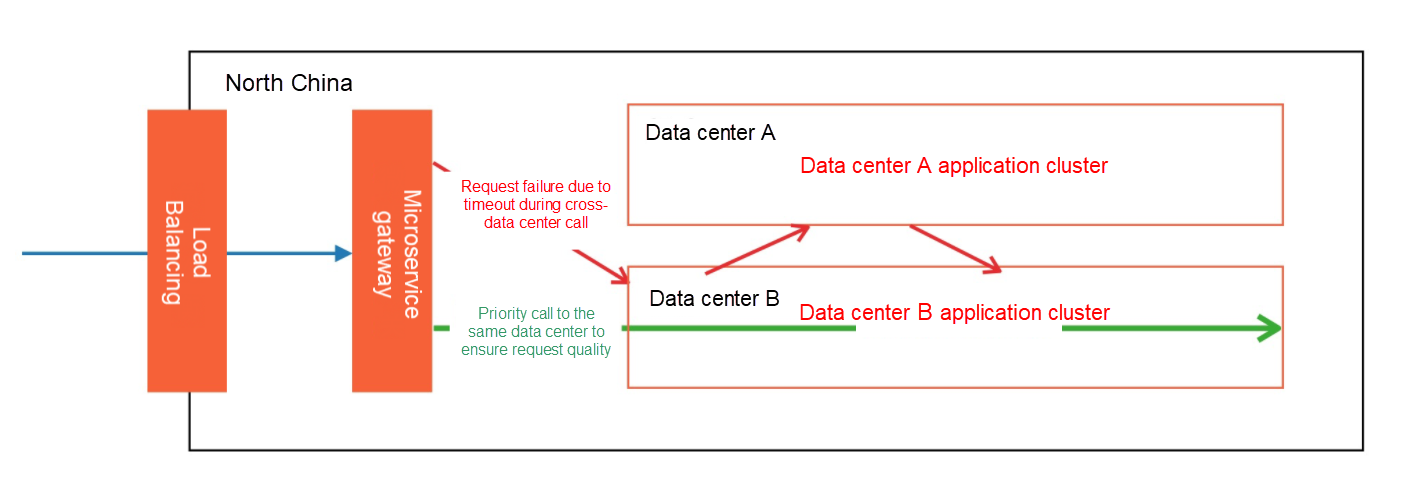

The next step after passing through the gateway is to route the request to the next node. In Internet scenarios with high-availability, multi-data-center deployments, nearest routing is adopted for service quality. If the gateway entrance is in the primary data center, we want the request to be routed to a node in the same data center at the next hop. The same applies to each subsequent hop within the microservice cluster. Otherwise, cross-data-center calls make the response time (RT) very long, eventually causing traffic loss through request timeouts, as shown in the following figure:

Achieving same-data-center calls requires a good understanding of the selected service framework. The core principle is that, when routing for the next hop, addresses in the same data center are selected first. Depending on the framework and the deployment environment of the business, some targeted, customized development is needed. This way, we can achieve strict priority for calls within the same data center.
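The same-data-center-first principle can be sketched as a filter applied before load balancing picks an instance. The Java sketch below is framework-neutral: `SameZoneFirstRouter`, `Instance`, and the zone labels are hypothetical, and real frameworks expose their own extension points for this. Falling back to all instances when the local data center has none is an assumption of this sketch.

```java
import java.util.List;
import java.util.stream.Collectors;

// Sketch of same-data-center-first routing for the next hop.
// Class names and zone labels are hypothetical.
final class SameZoneFirstRouter {
    static final class Instance {
        final String address;
        final String zone;

        Instance(String address, String zone) {
            this.address = address;
            this.zone = zone;
        }
    }

    // Returns only the instances in the caller's zone. If there are none,
    // returns all instances so the call can still be served (assumption).
    static List<Instance> candidates(List<Instance> all, String localZone) {
        List<Instance> local = all.stream()
                .filter(i -> i.zone.equals(localZone))
                .collect(Collectors.toList());
        return local.isEmpty() ? all : local;
    }

    public static void main(String[] args) {
        List<Instance> all = List.of(
                new Instance("10.0.1.5:8080", "dc-a"),
                new Instance("10.0.2.7:8080", "dc-b"));
        for (Instance i : candidates(all, "dc-a")) {
            System.out.println(i.address + " in " + i.zone);
        }
    }
}
```

A load balancer would then choose among the returned candidates with its usual policy, such as round robin.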

This article is Part 1 of a 3-part series entitled How to Build a Traffic-Lossless Online Application Architecture. It aims to use the simplest language to classify the technical problems that affect the stability of online application traffic. Some solutions to these problems are only code-level details, some need supporting tools, and others are costly. If you expect a traffic-lossless experience for your application on the cloud, please pay attention to Alibaba Cloud Enterprise Distributed Application Service (EDAS). Part 2 will discuss these problems from the perspective of online application publishing and service governance.

How to Build a Traffic-Lossless Online Application Architecture – Part 2

How to Build a Traffic-Lossless Online Application Architecture – Part 3
