By Guyi and Shimian

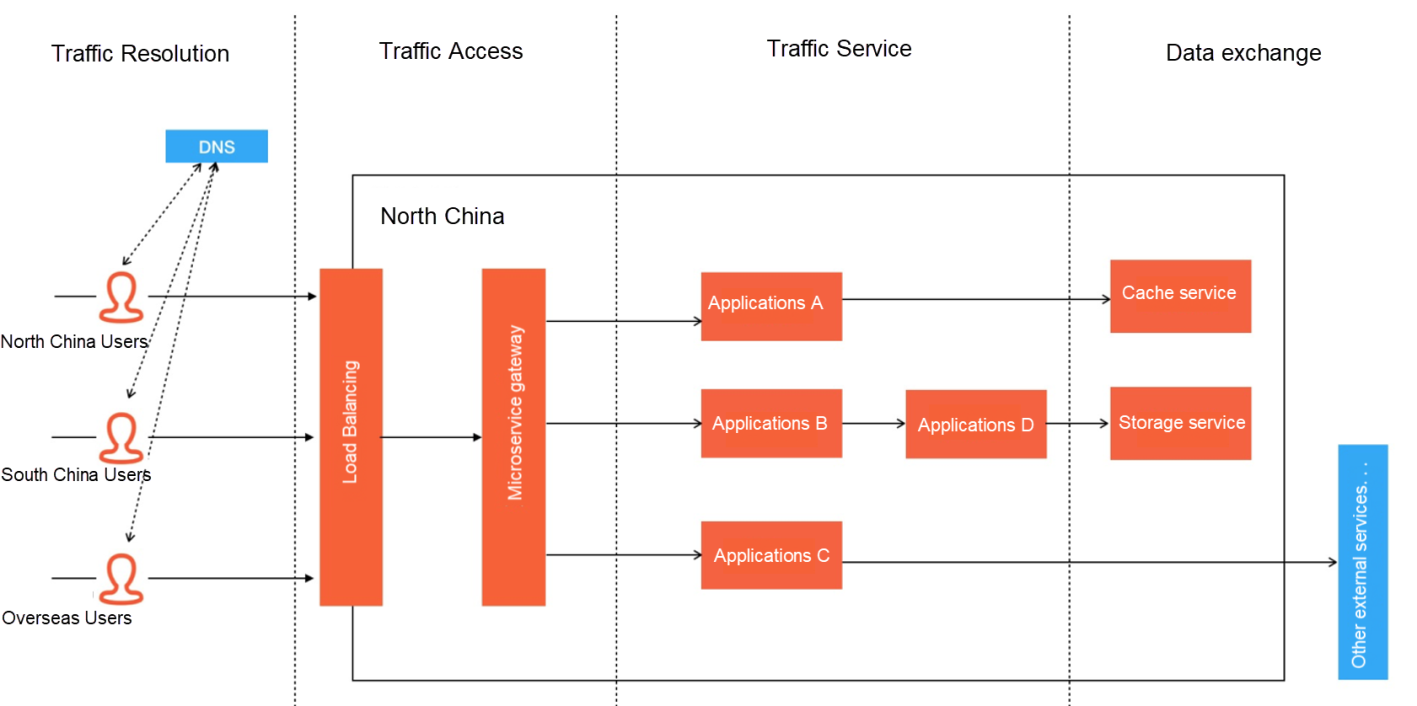

How to Build a Traffic-Lossless Online Application Architecture – Part 1 discussed the following figure and explained key technologies for traffic analysis and traffic access that help achieve lossless traffic. This article focuses on the traffic-serving stage and discusses technical details that can affect online traffic while the service is running.

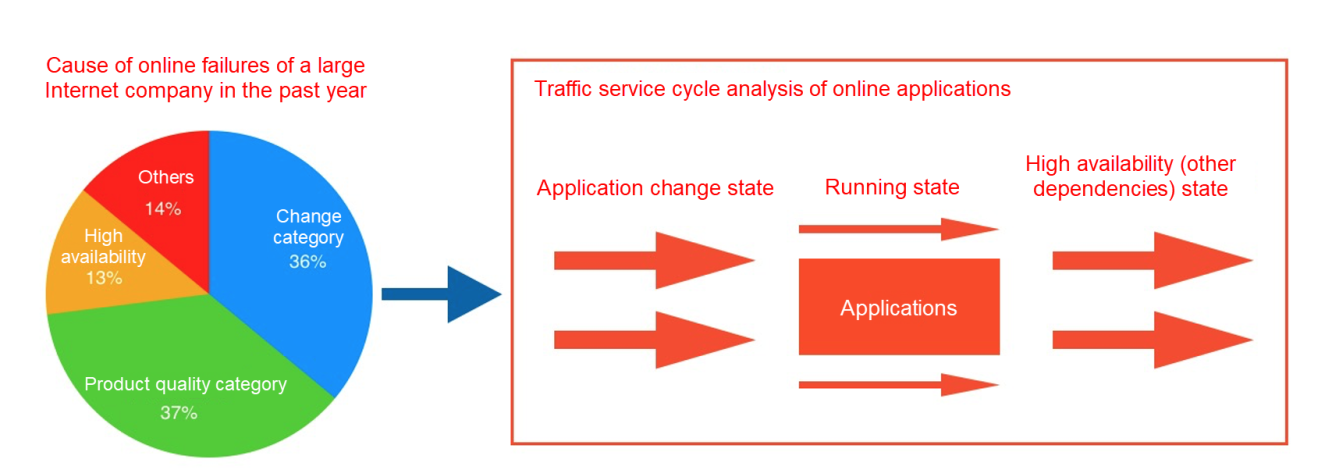

Recently, we analyzed the causes of online failures at a large Internet company in 2021. Product quality problems (such as unreasonable design and bugs) accounted for the largest share, at 37%. Problems caused by online releases and by product and configuration changes accounted for 36%. Other causes included high-availability problems in dependent services, such as equipment failures, failures of upstream and downstream dependencies, and resource bottlenecks.

As the cause analysis in the left part of the figure above shows, properly managing changes, product quality, and high availability is the key to lossless traffic. We divide these causes into several key stages according to the application lifecycle:

Below, we use examples to explore solutions for each of these scenarios.

One step in an application change is stopping the original application. Before an online application in the production environment is stopped, it must first stop receiving traffic. There are two main types of traffic: synchronous call traffic (such as RPC and HTTP requests) and asynchronous traffic (such as message consumption and background task scheduling).

If the process is stopped while the server still has unprocessed requests, both types of traffic will be lost. We can solve this in two steps:

1) Remove the existing node from the corresponding registration service.

This includes removing the node's RPC services from the registry, removing its HTTP service from the upstream load balancer, and shutting down the thread pools for background tasks (such as message consumption) so that no new messages are consumed and no new tasks are started.

2) Wait for a period (depending on the business) so that requests that have already entered the process are handled properly before the process is closed.
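The two steps above can be sketched in Python as follows. `InMemoryRegistry` is a hypothetical stand-in for a real registry or load balancer, and the delay and grace values are assumptions to be tuned per business; the point is the order: deregister, stop background consumers, keep serving while callers refresh their address lists, then wait (bounded) for in-flight requests to drain.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class InMemoryRegistry:
    """Hypothetical stand-in for a registry or upstream load balancer."""

    def __init__(self):
        self.nodes = set()

    def register(self, node):
        self.nodes.add(node)

    def deregister(self, node):
        self.nodes.discard(node)


class GracefulNode:
    def __init__(self, address, registry):
        self.address = address
        self.registry = registry
        # Background work such as message consumption or scheduled jobs.
        self.background = ThreadPoolExecutor(max_workers=2)
        self.in_flight = 0
        self._lock = threading.Lock()

    def handle(self, work):
        """Run one synchronous request while counting it as in flight."""
        with self._lock:
            self.in_flight += 1
        try:
            return work()
        finally:
            with self._lock:
                self.in_flight -= 1

    def shutdown(self, propagation_delay=1.0, grace_seconds=10.0, poll=0.01):
        """Return True if all in-flight requests finished within the grace period."""
        # Step 1: stop new traffic from arriving.
        self.registry.deregister(self.address)
        # Drop queued background tasks; running ones finish (Python 3.9+).
        self.background.shutdown(wait=False, cancel_futures=True)
        # Callers may still hold a cached address list, so keep serving
        # for a while to let the removal propagate.
        time.sleep(propagation_delay)
        # Step 2: wait (bounded) for requests already in the process.
        deadline = time.monotonic() + grace_seconds
        while time.monotonic() < deadline:
            with self._lock:
                if self.in_flight == 0:
                    return True
            time.sleep(poll)
        with self._lock:
            return self.in_flight == 0
```

Requests are not rejected after deregistration, because callers with stale address lists may still send a few; rejecting them would itself be traffic loss.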

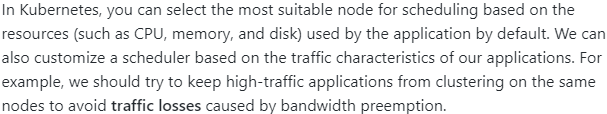

Another step in the change process is deploying the application after resources (machines or containers) have been selected. Selecting resources is generally called scheduling. Physical machines and virtual machines offer little room for scheduling because their resources are essentially fixed. However, container technology, especially the rise of Kubernetes, has changed delivery and scheduling significantly, moving us from traditional up-front resource planning into an era of flexible scheduling.

After the application has been scheduled onto its resources, the next step is to deploy and start it. As in the stop scenario, the node is very likely to be registered before the application is fully initialized, and the background task thread pools may already have started. At that point, upstream services begin sending traffic (for example, SLB starts routing requests and message consumption begins). However, the quality of service cannot be guaranteed until the application is fully initialized. For example, the first few requests after a Java application starts are usually slow.

How can we solve this problem? We need to wait until the application is fully initialized before registering services and starting the background task and message consumer thread pools. If traffic is routed by an external load balancer, the deployment automation tools must also coordinate so that the node is added to the load balancer only after initialization completes.
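A minimal sketch of "initialize first, expose later" follows. The init and expose steps are hypothetical callables (for example: load caches, then register the RPC service, start message consumers, and add the node to the SLB); any failure during initialization leaves the node unexposed.

```python
import threading


class DelayedExposure:
    """Expose a node to traffic only after all initialization has finished."""

    def __init__(self, init_steps, expose_steps):
        self.init_steps = init_steps      # e.g. warm caches, open connection pools
        self.expose_steps = expose_steps  # e.g. register service, start consumers
        self.ready = threading.Event()

    def start(self):
        for step in self.init_steps:
            step()  # an exception here means the node is never exposed
        # Only now let traffic in: registry, consumer pools, load balancer.
        for step in self.expose_steps:
            step()
        self.ready.set()
```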

After the system goes live, a sudden surge in traffic can push the system load (water level) too high and crash it. A large sales promotion that starts at midnight is a typical scenario: during peak traffic, a large amount of traffic rushes into application instances within a short time. This triggers underlying warm-up work such as JIT compilation, framework initialization, and class loading, which puts a heavy load on the system in a short time and causes traffic loss. To solve this, we need to control traffic so that it ramps up gradually on new instances. We can also increase the capacity of newly started applications by enabling parallel class loading, initializing frameworks in advance, and logging asynchronously. Together, these measures make scale-out and go-live lossless in high-traffic scenarios.
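Gradual ramp-up is often done on the caller side by scaling a new node's load-balancing weight with its uptime. The sketch below assumes a linear ramp and a weighted random pick; Dubbo's weighted load balancing, for example, applies a comparable linear warm-up, but the function names and the 600-second window here are illustrative.

```python
import random


def warmup_weight(base_weight, uptime_s, warmup_s):
    """Scale a node's weight linearly with its uptime during warm-up."""
    if uptime_s >= warmup_s:
        return base_weight
    # Never drop to 0, so a new node still receives a trickle of traffic.
    return max(1, int(base_weight * uptime_s / warmup_s))


def pick_node(nodes, now_s, warmup_s=600, rng=random):
    """Weighted random choice; `nodes` maps address -> (base_weight, start_time_s)."""
    weights = {
        addr: warmup_weight(w, now_s - started, warmup_s)
        for addr, (w, started) in nodes.items()
    }
    addrs = list(weights)
    return rng.choices(addrs, weights=[weights[a] for a in addrs], k=1)[0]
```

For example, a node with base weight 100 that has been up for 300 of 600 seconds gets weight 50, so it receives about a third of the traffic when paired with a fully warmed node of the same base weight.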

Since 2020, Spring Cloud combined with Kubernetes has become the most popular technology stack for microservice systems, so integrating a microservice system effectively with Kubernetes is a key challenge. Kubernetes Pod lifecycle management provides two probes that help: readinessProbe, which tells Kubernetes whether a Pod is ready to receive traffic, and livenessProbe, which tells Kubernetes whether a container is still healthy or must be restarted.

If an application does not configure a readinessProbe, Kubernetes by default only checks whether the process in the container has started and is running. It cannot tell whether the business logic inside the process is healthy. During a rolling release, as soon as Kubernetes sees that the process in the new Pod has started, it begins destroying the old-version Pod. The process looks fine, but "the process in the new Pod has started" does not mean "the service is ready." If the business code has a problem, the process may start and expose its business ports while an exception prevents the service from registering in time. By then the old-version Pod has already been destroyed, so consumers hit a No Provider error, and a large amount of traffic is lost during the release.

Similarly, if an application does not configure a livenessProbe, Kubernetes by default only checks whether the process in the container is alive. However, when a process hangs because of resource contention, a full GC, an exhausted thread pool, or unexpected logic, its service quality can be very low or even zero even though it is still alive. All traffic sent to the application then fails, causing a large amount of traffic loss. The application should therefore use a livenessProbe to tell Kubernetes that the Pod is unhealthy and cannot recover on its own, so that the Pod is restarted.

Configuring readinessProbe and livenessProbe gives Kubernetes timely, accurate feedback on the application's health. This ensures that the processes in a Pod receive traffic only while they are healthy, which prevents traffic loss.
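A sketch of application-side probe endpoints, assuming HTTP probes: `/readyz` reports ready only after initialization and registration succeed, and `/livez` fails when a worker heartbeat stalls, which catches a process that is alive but stuck. The paths, the heartbeat idea, and the 30-second threshold are assumptions; in the Pod spec you would point `readinessProbe.httpGet.path` and `livenessProbe.httpGet.path` at them.

```python
import time
from http.server import BaseHTTPRequestHandler


class HealthState:
    def __init__(self, stall_after_s=30.0):
        self.ready = False  # set True only after init and registration succeed
        self.stall_after_s = stall_after_s
        self.last_heartbeat = time.monotonic()

    def beat(self):
        """Called by the worker loop whenever it makes progress."""
        self.last_heartbeat = time.monotonic()

    def readiness(self):
        return 200 if self.ready else 503

    def liveness(self, now=None):
        now = time.monotonic() if now is None else now
        # Alive but stuck (full GC, exhausted pool, deadlock) counts as unhealthy.
        return 200 if now - self.last_heartbeat < self.stall_after_s else 503


def make_handler(state):
    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path == "/readyz":
                code = state.readiness()
            elif self.path == "/livez":
                code = state.liveness()
            else:
                code = 404
            self.send_response(code)
            self.send_header("Content-Length", "0")
            self.end_headers()

        def log_message(self, *args):  # keep probe requests out of the logs
            pass

    return ProbeHandler
```

To serve it, run `HTTPServer(("", 8080), make_handler(state)).serve_forever()` in a daemon thread; the port is an assumption.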

Even after testing, it is hard to guarantee that the code from a new version iteration has no problems. Why are most failures related to releases? Because release is the final step before the business goes live, problems that accumulated during development are often triggered only at release time. In other words, it is an unspoken rule that online releases will have bugs; the only question is how severe they are. Since bugs cannot be avoided, the question for the release stage becomes: how can their impact be minimized? The answer is canary release. If an untested problem ships in a full-batch release, the error spreads across the entire network and causes large, long-lasting traffic loss. If the system supports canary release (ideally end-to-end canary release across the full call chain), the impact of a problem stays minimal. With full observability during the canary, releases become much more stable and safe. And if the canary release covers the full call chain, traffic stays lossless even when multiple applications are released at the same time.
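A minimal sketch of canary routing, assuming user-based bucketing: an explicit tag wins; otherwise a stable hash of the user ID decides, so each user consistently sees one version and only `canary_percent` percent of users reach the canary. For end-to-end canary release, the decision is passed downstream in a request header; the header name `x-canary-tag` is hypothetical.

```python
import hashlib

CANARY_HEADER = "x-canary-tag"  # hypothetical header name


def route_version(user_id, canary_percent, tag=None):
    """Return 'canary' or 'stable' for one request."""
    if tag in ("canary", "stable"):
        return tag  # already decided upstream in the call chain
    # Stable hash, so the same user lands in the same bucket every time.
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return "canary" if bucket < canary_percent else "stable"


def outgoing_headers(incoming_headers, version):
    """Carry the decision to downstream calls so the whole chain stays on one version."""
    headers = dict(incoming_headers)
    headers[CANARY_HEADER] = version
    return headers
```

Starting with a small `canary_percent` and raising it while watching error rates limits the blast radius of an untested bug.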

A downstream service provider may hit a performance bottleneck during peak hours that affects the business. In this case, we can degrade some service consumers: unimportant consumers no longer actually call the service and instead directly return mocked responses, such as preset errors. This reserves the provider's valuable resources for important consumers and improves overall service stability. This process is called service degradation.
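A sketch of consumer-side degradation rules, assuming they are keyed by (consumer, service) and set through O&M configuration. A degraded pair never reaches the provider and gets a mock value or a preset error instead. All application and service names here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DegradeRules:
    """Hypothetical O&M config: which (consumer, service) pairs are degraded."""
    mocks: dict = field(default_factory=dict)  # key -> mock value or Exception

    def degrade(self, consumer, service, mock):
        self.mocks[(consumer, service)] = mock

    def restore(self, consumer, service):
        self.mocks.pop((consumer, service), None)


def invoke(rules, consumer, service, remote_call):
    key = (consumer, service)
    if key in rules.mocks:
        mock = rules.mocks[key]
        # Degraded: skip the provider entirely and return the mock or preset error.
        if isinstance(mock, Exception):
            raise mock
        return mock
    return remote_call()
```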

If a downstream service that the application depends on becomes unavailable, business traffic is lost. With service degradation configured, calls fail fast on the calling side when the downstream service is abnormal, which effectively prevents an avalanche effect.
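Fail-fast on the calling side is commonly implemented with a circuit breaker; that technique is our assumption here, since the text does not prescribe one. In this single-threaded sketch, after `threshold` consecutive failures the breaker opens and calls fail immediately (or return a fallback) for `cooldown_s` seconds, then one trial call is allowed through.

```python
import time


class CircuitOpenError(Exception):
    pass


class CircuitBreaker:
    def __init__(self, threshold=5, cooldown_s=10.0, clock=time.monotonic):
        self.threshold = threshold
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, fallback=None):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                # Open: do not touch the unhealthy downstream at all.
                if fallback is not None:
                    return fallback()
                raise CircuitOpenError("downstream unavailable, failing fast")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # success closes the circuit
        return result
```

Failing fast keeps caller threads from piling up on a dead dependency, which is what turns one failure into an avalanche.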

Similar to service degradation, automatic outlier removal automatically removes a node when it becomes unavailable while serving traffic. It differs from service degradation in two main ways:

1) Automatic Completion: Service degradation is an operation and maintenance (O&M) action. It must be configured in the console, with the target service name specified, before it takes effect. Automatic outlier removal, in contrast, proactively detects whether upstream nodes are alive and degrades the affected call path as a whole, without manual configuration.

2) Removal Granularity: Service degradation targets a service on a node (service name plus node IP). Take Dubbo as an example: a process publishes microservices that use the service interface name as the service name. If degradation is triggered for one service, that service on that node is no longer called, but the node's other services still are. In outlier removal mode, none of the node's services are called.
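The granularity difference shows up in how results are keyed. This caller-side sketch tracks outcomes per node, ignoring which service failed, and ejects nodes whose recent error rate is too high. All thresholds are illustrative assumptions. It also caps the share of ejected nodes so a system-wide problem cannot empty the pool, and re-admits nodes after a recovery period.

```python
import time
from collections import defaultdict, deque


class OutlierDetector:
    """Caller-side outlier removal keyed by node, not by (service, node)."""

    def __init__(self, window=20, min_calls=10, max_error_rate=0.5,
                 max_ejected_ratio=0.5, recover_after_s=30.0, clock=time.monotonic):
        self.min_calls = min_calls
        self.max_error_rate = max_error_rate
        self.max_ejected_ratio = max_ejected_ratio
        self.recover_after_s = recover_after_s
        self.clock = clock
        self.results = defaultdict(lambda: deque(maxlen=window))
        self.ejected_at = {}

    def record(self, node, service, ok):
        # `service` is deliberately ignored: a failure in any service on the
        # node counts against the whole node.
        self.results[node].append(ok)

    def available(self, nodes):
        """Return the nodes that may receive calls for every service."""
        now = self.clock()
        for node, at in list(self.ejected_at.items()):
            if now - at >= self.recover_after_s:
                del self.ejected_at[node]
                self.results[node].clear()  # re-admit with a clean history
        rates = {}
        for node in nodes:
            calls = self.results[node]
            if node not in self.ejected_at and len(calls) >= self.min_calls:
                rates[node] = calls.count(False) / len(calls)
        bad = sorted((n for n, r in rates.items() if r > self.max_error_rate),
                     key=rates.get, reverse=True)
        # Cap ejections so a system-wide problem cannot empty the pool.
        room = int(len(nodes) * self.max_ejected_ratio)
        room -= sum(1 for n in nodes if n in self.ejected_at)
        for node in bad[:max(room, 0)]:
            self.ejected_at[node] = now
        return [n for n in nodes if n not in self.ejected_at]
```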

As the core component of service registration and discovery, the registry is an essential part of a microservices architecture. In terms of the CAP model, a registry can sacrifice a little data consistency: the service addresses seen by different nodes at the same moment may be briefly inconsistent, but availability must be guaranteed. If the registry becomes unavailable, the nodes connected to it cannot obtain service addresses, which can deal a catastrophic blow to the entire system. In addition to common high-availability methods, disaster recovery methods specific to the registry include:

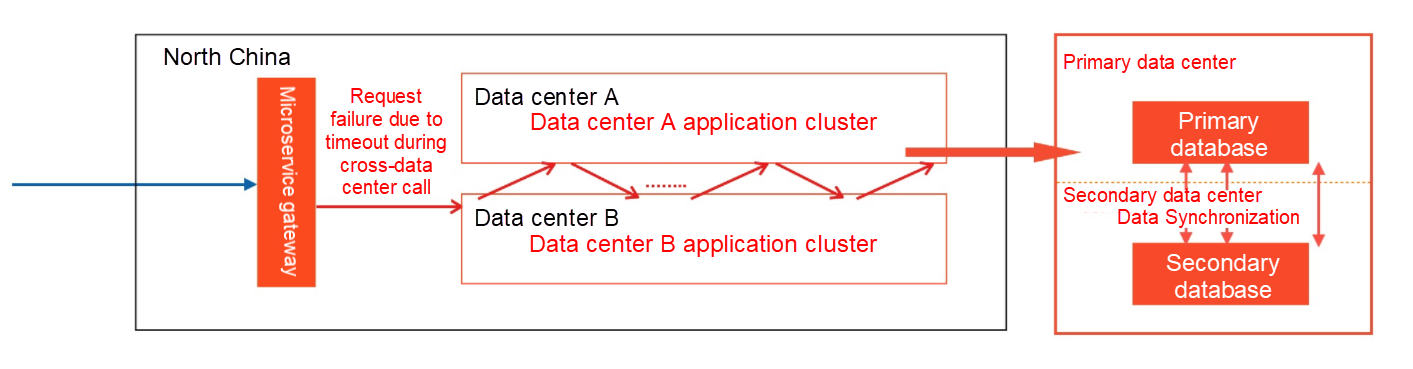
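One common client-side safeguard, sketched here as a representative assumption rather than a description of the figure, is to keep the last known non-empty address list. Callers then keep working if the registry is unreachable or mistakenly pushes an empty list. `fetch` is a hypothetical callable standing in for a registry query.

```python
class RegistryClientCache:
    """Caller-side address cache with empty-push protection (hypothetical API)."""

    def __init__(self, fetch):
        self.fetch = fetch  # callable(service) -> list of addresses; may raise
        self.cache = {}

    def on_push(self, service, addresses):
        if addresses:
            self.cache[service] = list(addresses)
        # Empty push: keep the old list instead of wiping out every provider.

    def addresses(self, service):
        try:
            fresh = self.fetch(service)
        except Exception:
            fresh = None  # registry unreachable: fall back to the cache
        if fresh:
            self.cache[service] = list(fresh)
        return self.cache.get(service, [])
```

The trade-off is that a service legitimately scaled to zero keeps its stale addresses; a production client would also persist the cache to local disk so that a restarted process can find providers while the registry is down.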

Within the same zone, network latency (RT) is generally low, typically under 3 ms. Therefore, by default we can treat the data centers in one zone as a single large Local Area Network (LAN) and distribute applications across multiple data centers to guard against traffic loss when a single data center fails. Compared with active geo-redundancy, this infrastructure costs less to build and requires fewer architectural changes. However, the call chains between applications in a microservice system are complex, and the deeper they go, the harder governance becomes. As shown in the following figure, frontend traffic may see RT spikes because of calls between different data centers, resulting in traffic loss.

The key to solving this problem is support for same-data-center priority routing at the service framework level: if a service provider is in the same data center as the caller, traffic is preferentially routed to the nodes in that data center. To implement this, the provider reports its data center when it registers the service, and this information is pushed to callers as metadata. Through a customized Router in the service framework, the caller then preferentially selects addresses in its own data center as routing destinations.
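The Router step can be sketched as a filter over provider addresses, assuming the data center is carried in registry metadata under a hypothetical `dc` key. If no local provider exists, or local providers are too few relative to `min_local_ratio` (an assumed safety knob), it falls back to all providers so that the call still succeeds.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    address: str
    meta: dict  # pushed from the registry, e.g. {"dc": "dc-a"} (hypothetical key)


def same_dc_first(providers, caller_dc, dc_key="dc", min_local_ratio=0.0):
    """Prefer providers in the caller's data center; otherwise use all of them."""
    providers = list(providers)
    local = [p for p in providers if p.meta.get(dc_key) == caller_dc]
    # Fall back when there is no local capacity (or too little of it).
    if local and len(local) >= min_local_ratio * len(providers):
        return local
    return providers
```

A non-zero `min_local_ratio` avoids funneling all of a data center's traffic onto one or two local nodes when most providers live elsewhere.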

This article is Part 2 of a 3-part series entitled How to Build a Traffic-Lossless Online Application Architecture. It aims to use the simplest possible language to classify the technical problems that affect the stability of online application traffic. Solving these problems is not just a matter of code-level details: some solutions require supporting tools, and others are expensive. If you want a one-stop, traffic-lossless experience for your applications on the cloud, take a look at Alibaba Cloud Enterprise Distributed Application Service (EDAS). Part 3 will discuss lossless traffic from the perspective of data service exchange and cover two key prevention measures.

How to Build a Traffic-Lossless Online Application Architecture – Part 1

How to Build a Traffic-Lossless Online Application Architecture – Part 3
