By Mujia

There is a table named user (without any indexes):

Suppose the SQL statement to be executed is: select * from user where age = 45;

This would require scanning from the very first row all the way to the last row, which is known as a full table scan with poor performance. Are there methods to improve performance and reduce search times?

In MySQL, an index is an ordered data structure that helps search data by referencing (pointing to) the data in some way.

Indexes in MySQL are implemented at the storage engine layer, so different storage engines have different index structures, mainly including the following:

| Index | InnoDB Engine | MyISAM Engine | Memory Engine |

|---|---|---|---|

| B+tree Index | Supported | Supported | Supported |

| Hash Index | Not Supported | Not Supported | Supported |

| R-tree Index | Not Supported | Supported | Not Supported |

| Full-text Index | Supported (from the 5.6 version onwards) | Supported | Not Supported |

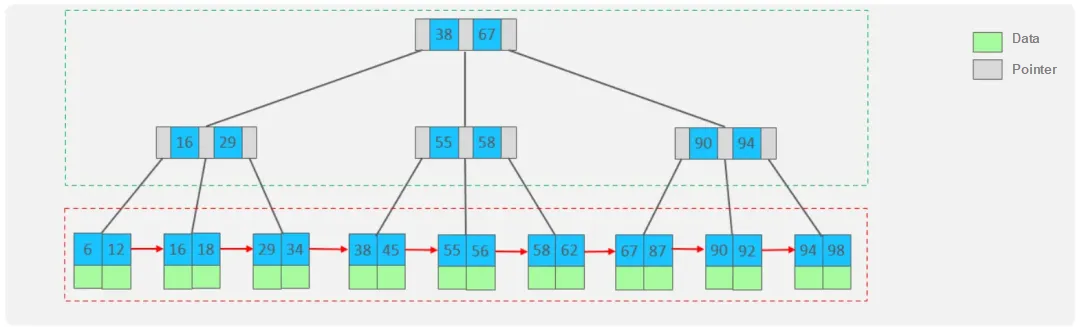

A brief introduction to a typical B+tree structure:

It can be seen that:

• All data will appear in the leaf nodes, which then form a one-way linked list.

• Non-leaf nodes only index data, with the actual data stored in the leaf nodes.

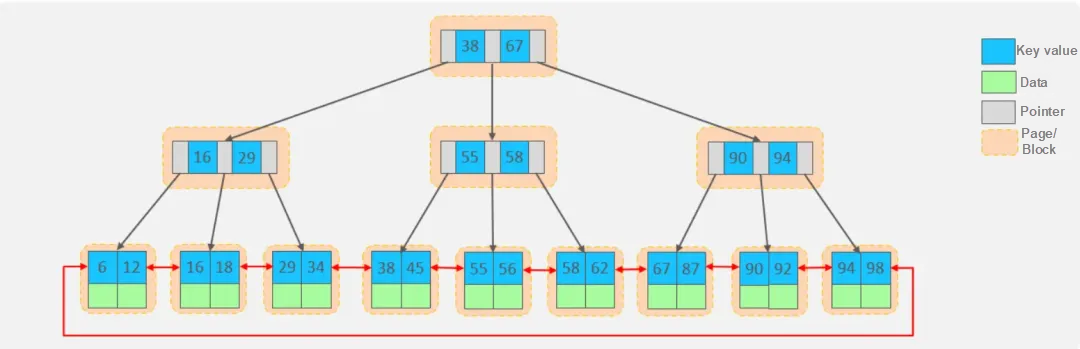

MySQL optimizes its index structure based on the classic B+tree structure by adding a linked list pointer to adjacent leaf nodes, creating a B+tree structure with sequential pointers. This enhancement improves range access performance and facilitates sorting.

MySQL indexes are mainly divided into the following categories:

| Category | Description | Characteristic | Keyword |

|---|---|---|---|

| Primary Key Index | Index created for the primary key of the table | Automatically created by default, only one allowed | primary |

| Unique Index | Prevent duplicate values in a column within the same table | Multiple allowed | unique |

| Regular Index | Quickly locate specific data | Multiple allowed | index |

| Full-text Index | Search for keywords in text without comparing indexed values | Multiple allowed | fulltext |

In the InnoDB storage engine, there are two types of indexes based on their storage formats:

| Category | Description | Characteristic | Selection Rule |

| Clustered Index | Data storage and indexes are put together. The data of this row is hung under the leaf node of the clustered index. |

Must have one. | • If a primary key exists, the primary key index is the clustered index. • If no primary key exists, use the first unique index as the clustered index. • If neither a primary key nor a suitable unique index exists, InnoDB automatically generates a rowid as a hidden clustered index. |

| Secondary Index | The data is stored separately from the index, and the leaf nodes of the index structure are associated with the corresponding primary key. The primary key value corresponding to the field value is hung under the leaf node of the secondary index. |

Multiple ones allowed | / |

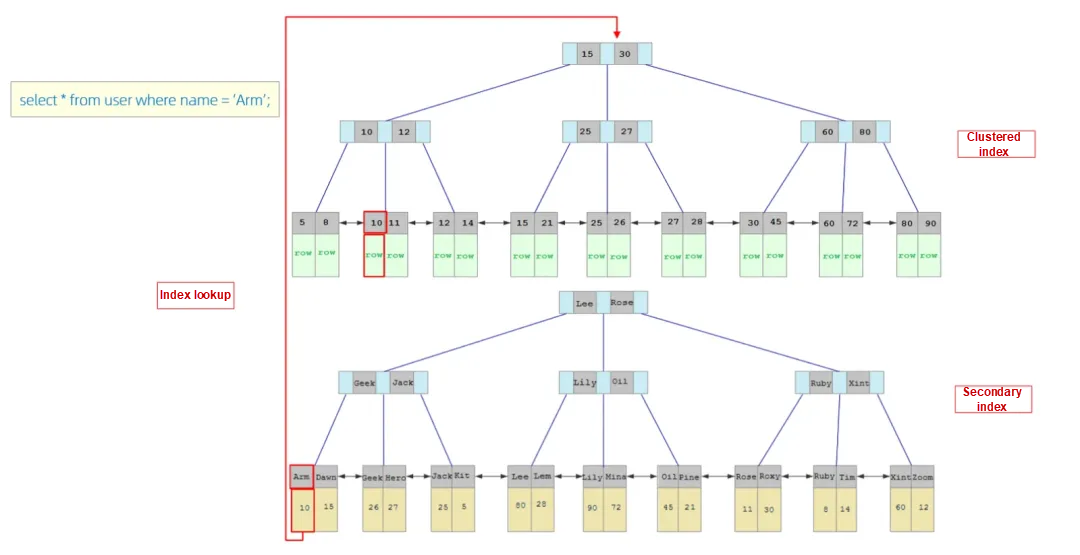

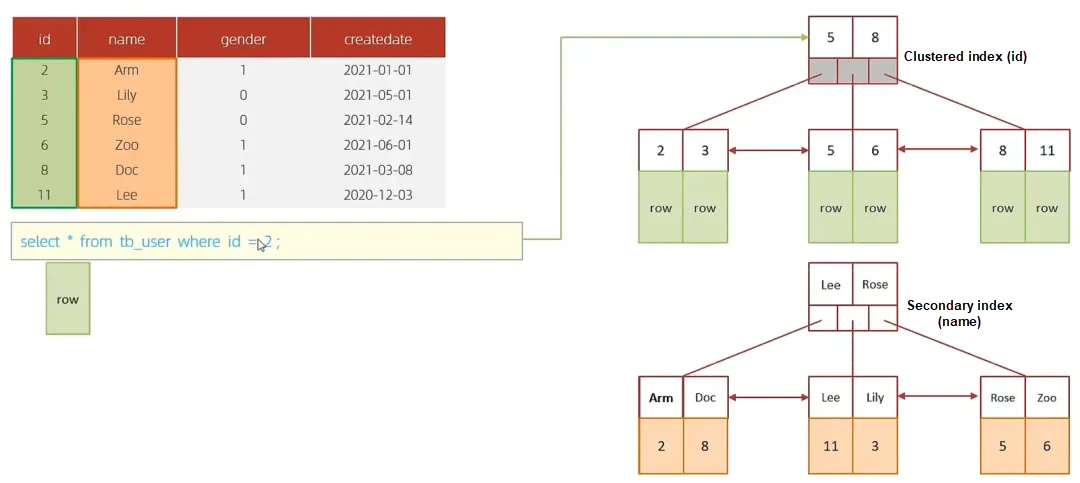

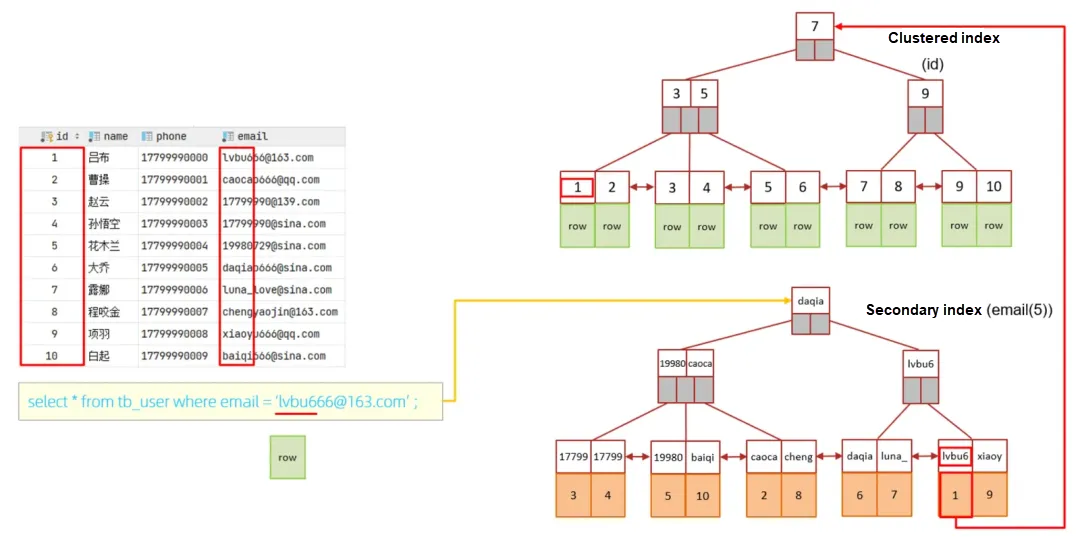

For the execution of a query statement, we can analyze the specific process:

The specific process is as follows:

1) Since the query is based on the name field, we first match name='Arm' in the secondary index of the name field. However, in the secondary index, we can only find the primary key value 10 that corresponds to Arm.

2) As the data returned by the query is *, we then need to look up the record corresponding to the primary key value 10 in the clustered index to find the row associated with 10.

3) Finally, we retrieve the data for this row and return it directly.

Create an index:

create [unique | fulltext] index name on table name (field name 1, field name 2,…);View an index:

show index from table name;Delete an index:

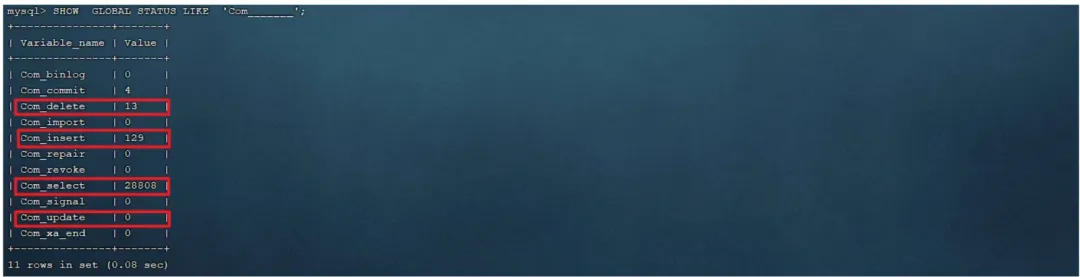

drop index name on table name;After successfully connecting the MySQL client, you can use the following command to view the frequency of insert, update, delete, and select operations in the current database:

show [session|global] status like 'com_____';

session: View the current session

global: View global data

com insert: The number of inserts

com select: The number of queries

com delete: The number of deletions

com update: The number of updates

A reference basis for database optimization can be provided by checking whether the current database is primarily used for queries or inserts, deletes, and updates. If the focus is on inserts, deletes, and updates, consider not optimizing the indexes; if the focus is on queries, consider optimizing the indexes.

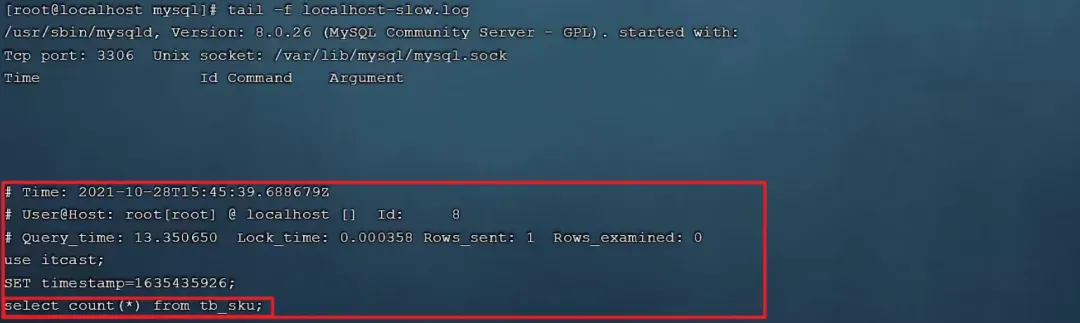

The slow query log records all SQL logs that take longer than a specified time (long_query_time, in seconds, default is 10 seconds) to execute:

Before enabling the slow query log, you need to configure the following information in the MySQL configuration file (/etc/my.cnf):

#1. Enable the MySQL slow query log:

slow_query_log = 1

#2. Set the slow query time, for example, to 2 seconds. Any query taking longer than 2 seconds will be considered a slow query and logged:

long_query_time=2

#3. After configuring, restart the MySQL server to test:

systemctl restarmysqld

#4. Check the system variable to verify if the slow query log is enabled:

show variables like “slow_query_log”;

#5. View the information recorded in the slow log file (/var/lib/mysql/localhost-slow.log):

Tail -f localhost-slow.logUltimately, you will find that the slow query log only records SQL statements that take longer than the preset time (2 seconds) to execute. Queries that execute faster are not recorded.

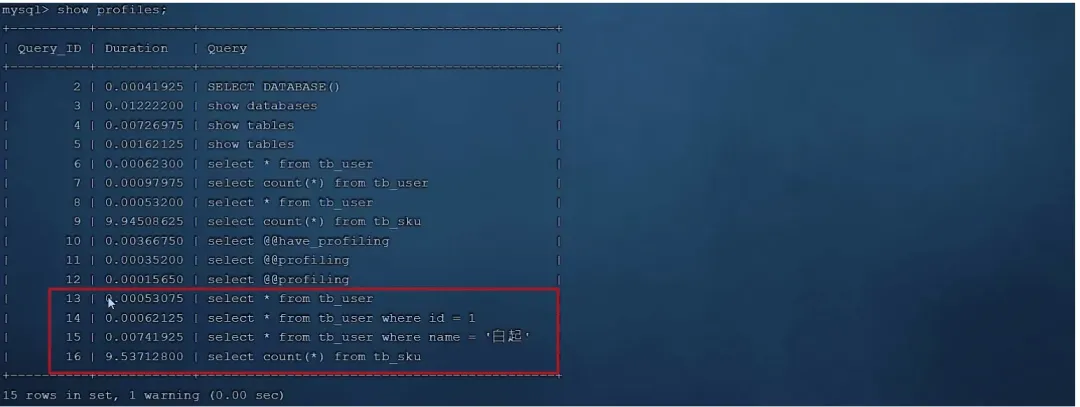

Show Profiles helps us understand where time is being spent during SQL optimization.

#1. Use the have_profiling parameter to check if MySQL supports profiling operations:

select @@have_profiling;

#2. Use the set statement to enable profiling at the session or global level:

set profiling =1;After the switch is enabled, all subsequent SQL statements executed are recorded by MySQL, along with the time consumed during their execution. For example, execute the following SQL statements:

select * from tb_user;

select * from tb_user where id = 1;

select * from tb_user where name = 'Bai Qi';

select count(*) from tb_sku;

#3. To view the time consumption of each SQL statement:

show profiles;

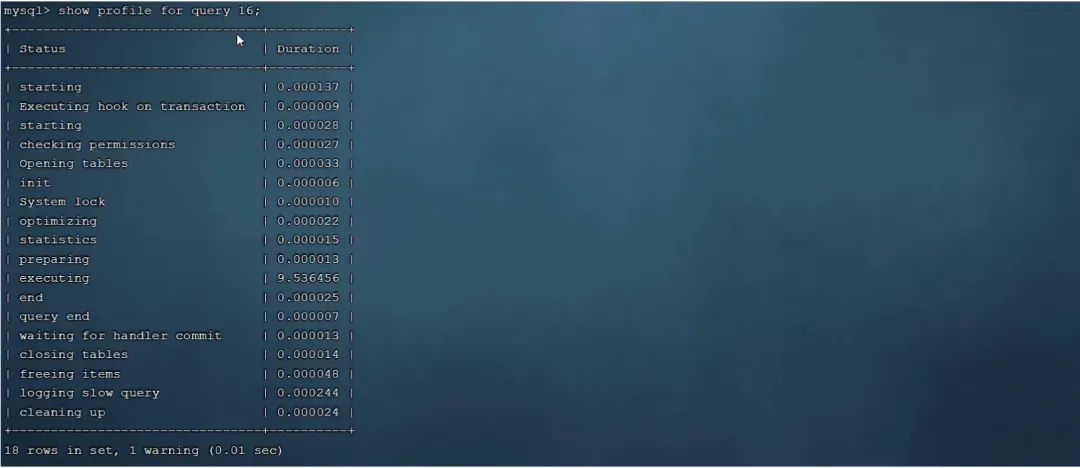

#4. To view the time consumption in specific stages of a particular SQL statement:

show profile for query Query_ID;

#5. To view the CPU usage of a SQL statement for the specific field:

show profile cpu for query Query_ID;The EXPLAIN or DESC command retrieves information on how MySQL executes a SELECT statement, including how tables are joined and the order in which they are connected during the execution of the SELECT statement.

Syntax: Add the keyword EXPLAIN or DESC before the SELECT statement.

# explain the select field from the table name where condition;| Field | Description |

|---|---|

| id | The sequence number of the select query indicates the order in which the select clause or table operation is executed. If the ID values are the same, the execution order is top-down, and the larger the ID value, the earlier the execution. |

| select_type | Represent the type of selection. Common values include: • simple: Simple table, in which no table join or subquery is used. • primary: The main query or the outer query. • union: The second or later query statement in the union. • subquery: Subquery after select or where. |

| type | Represent the join type, with performance ranging from best to worst: null, system, const, eq_ref, ref, range, index, all. |

| possible_key | Display one or more indexes that may be applied to this table. |

| key | The actually used index. If null, no index was used. |

| key_len | Indicate the number of bytes used in the index. This value is the maximum possible length of the index fields, not the actual used length. Shorter lengths are preferable, assuming no loss of precision. |

| flltered | The percentage of the number of returned rows accounted for the number of the rows read, with higher filtered values being better. |

| extra | • using where/using index: The search uses an index, and all required data can be found within the index columns, without the need to retrieve the data through an index lookup. • using index condition: The search uses an index, but requires an index lookup to retrieve the data. |

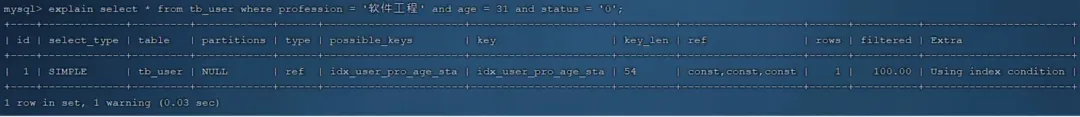

If a federated index exists, you need to follow the leftmost prefix rule. This means that the query starts from the leftmost column of the index and does not skip any index columns. If any column is skipped, the index will become partially ineffective (the following field index will be ineffective).

Assume that in the tb_user table

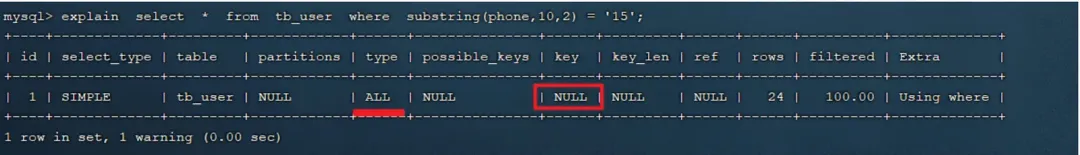

The federated index involves three fields in the following order: profession (index length 47), age (index length 2), and status (index length 5).

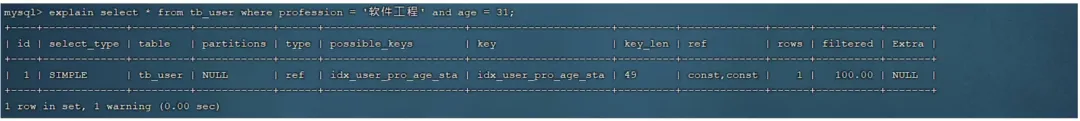

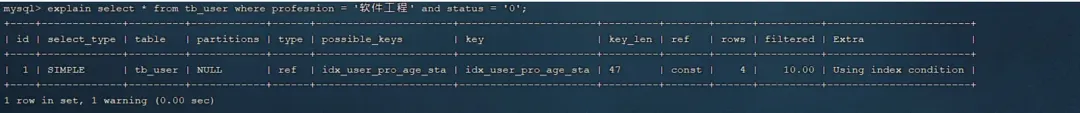

a) explain select * from tb_user where profession ='Software Engineering' and age=31 and status='0';

b) explain select * from tb_user where profession ='Software Engineering' and age=31;

c) explain select * from tb_user where profession ='Software Engineering';

In these three test cases, we find that as long as the leftmost field profession exists in the federated index, the index will be effective;

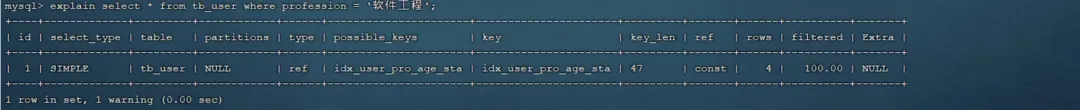

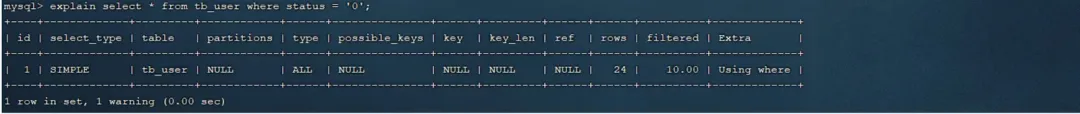

a) explain select * from tb_user where age = 31 and status='0';

b) explain select * from tb_user where status='0';

In these two test cases, we find that as long as the leftmost field profession is missing in the federated index, the index will be ineffective.

explain select * from tb_user where profession ='Software Engineering' and status='0';

For this SQL query, the leftmost field profession exists in the federated index, so the basic conditions for the leftmost prefix rule are met. However, since the age column is skipped during the query, the subsequent columns in the index will not be used, meaning the index is only partially effective. Therefore, the index length is 47.

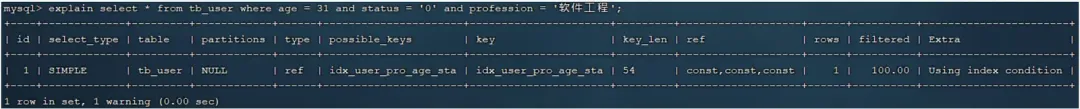

explain select * from tb_user where age=31 and status='0' and professor='Software Engineering';

Here, the index length is 54, fully satisfying the leftmost prefix rule, so the federated index is effective.

⚠ The leftmost prefix rule specifies that the leftmost field or the first field of the federated index must exist during a query, regardless of the order in which conditions are written in the SQL statement.

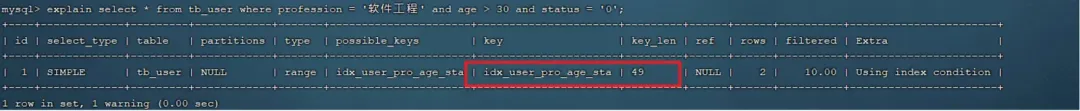

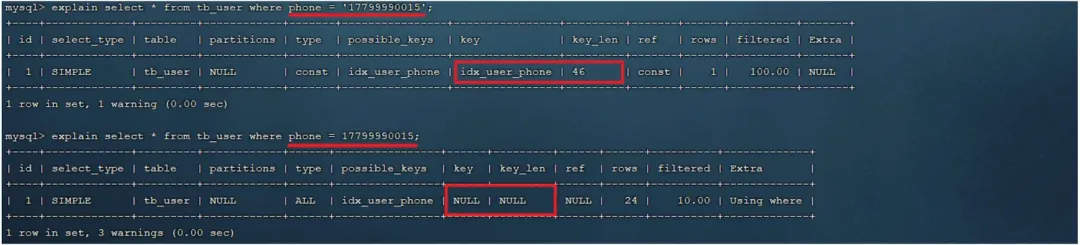

In a federated index, a range query (>,<) occurs, and the column index to the right of the range query becomes ineffective.

explain select * from tb_user where profession ='Software Engineering' and age >31 and status='0';

As seen above, when a range query uses > or <, the query uses the federated index, but the index length is 49, indicating that the status field to the right of the range query did not use the index.

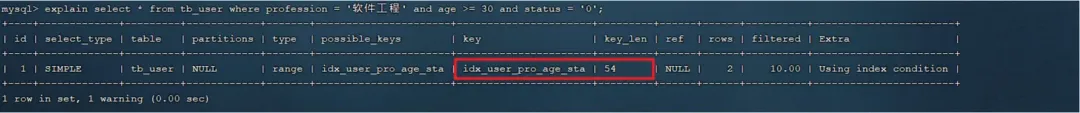

explain select * from tb_user where profession ='Software Engineering' and age >= 31 and status='0';

As seen above, when a range query uses >= or <=, the query uses the federated index, and the index length is 54, indicating that all fields used the index.

⚠️Therefore, when business requirements permit, prefer to use range queries like >= or <= instead of > or < whenever possible.

Performing operations on indexed columns will cause the index to become ineffective.

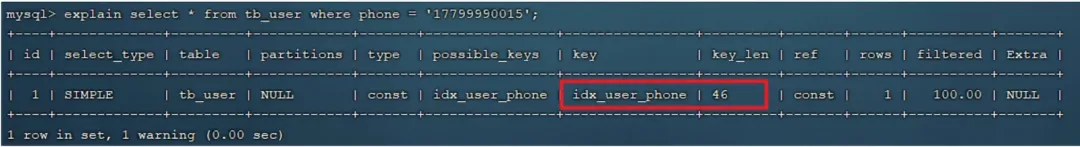

Assuming in the tb_user table, there is a single-column index: phone

explain select * from tb_user where phone = '17799990015';

As seen above, when performing an equivalent match on the phone field, the index is effective.

explain select * from tb_user where substring(phone,10,2)='15';

As seen above, when performing a function operation on the phone field, the index becomes ineffective.

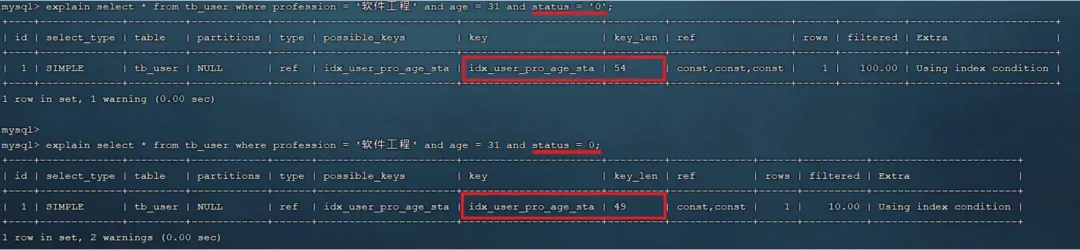

When using string-type fields without quotes, the index will become ineffective.

explain select * from tb_user where profession ='Software Engineering' and age=31 and status ='0';

explain select * from tb_user where profession ='Software Engineering' and age=31 and status = 0;

explain select * from tb_user where phone='1779990015 ';

explain select * from tb_user where phone = 1779990015;

As seen above, not using single quotes around strings does not affect the query results, but due to implicit type conversion in the database, the index will become ineffective.

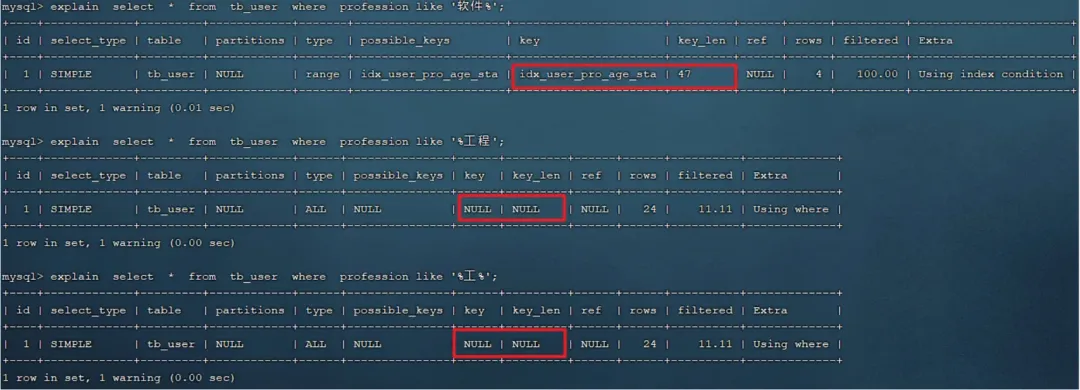

Tail-end fuzzy matching does not cause the index to become ineffective; head-end fuzzy matching causes the index to become ineffective.

explain select * from tb_user where profession like 'Software %';

explain select * from tb_user where profession like '% Engineering';

explain select * from tb_user where profession like '% Engi%';

As seen above, in like fuzzy queries, adding % after the keyword allows the index to be effective; adding % before the keyword causes the index to become ineffective.

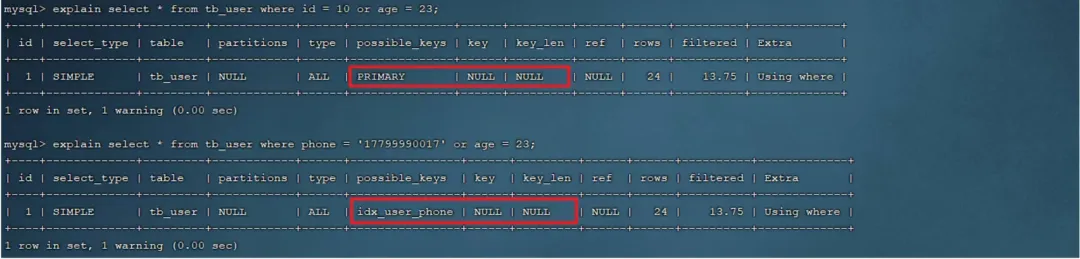

If the column in the condition before the OR has an index, and the column after the OR does not, then all involved indexes will not be used.

Step 1: There is a single-column index: phone

explain select * from tb_user where id=10 or age=23;

explain select * from tb_user where phone='1779990017' or age=23;

As seen above, because age does not have an index, even though ID and phone do, the indexes will become ineffective.

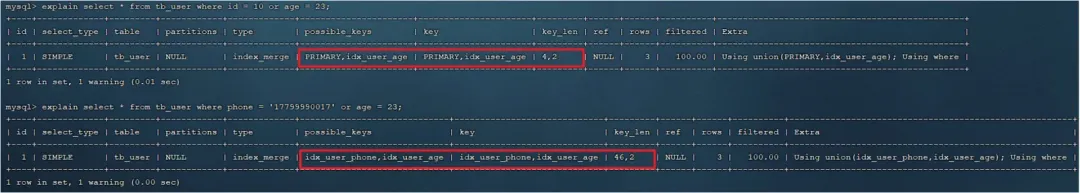

Step 2: Create an index on the age field

create index idx_user on tb_user(age);

Step 3: Execute the preceding SQL statements again

You can see that the indexes will be effective only when the left and right fields of the OR condition both have indexes.

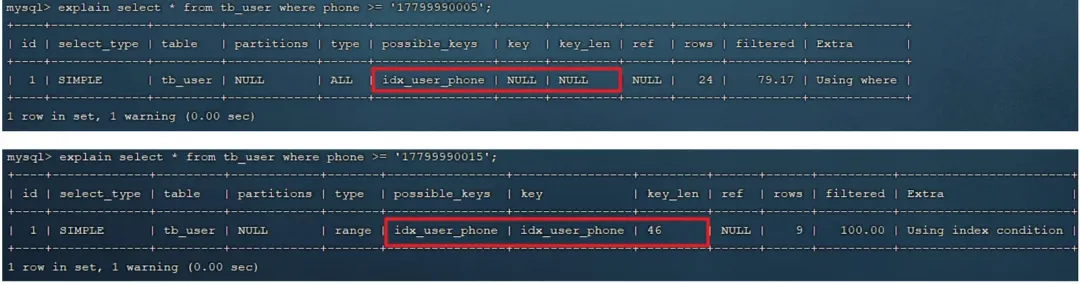

MySQL will not use the index if it evaluates that using an index would be slower than scanning the entire table.

explain * from tb_user where phone >= '1779999005';

explain * from tb_user where phone >= '1779999015';

As seen above, for the same SQL statement, different execution plans may occur based on the input field values. This is because:

When MySQL queries, it evaluates the efficiency of using an index versus scanning the entire table and will choose the more efficient option. Since indexes are designed to index small amounts of data, if querying through an index returns a large amount of data, a full table scan may be faster, and thus the index will become ineffective.

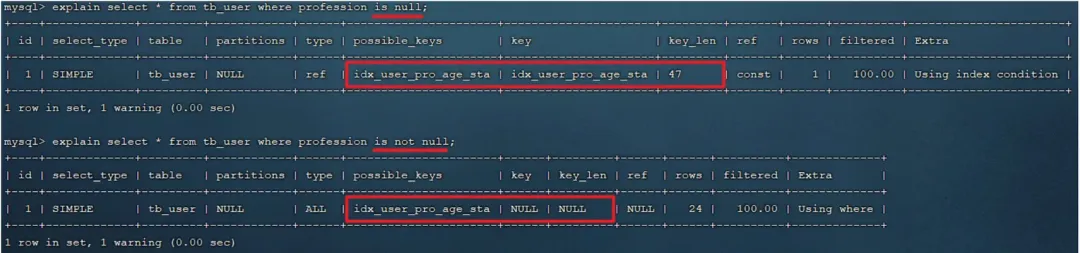

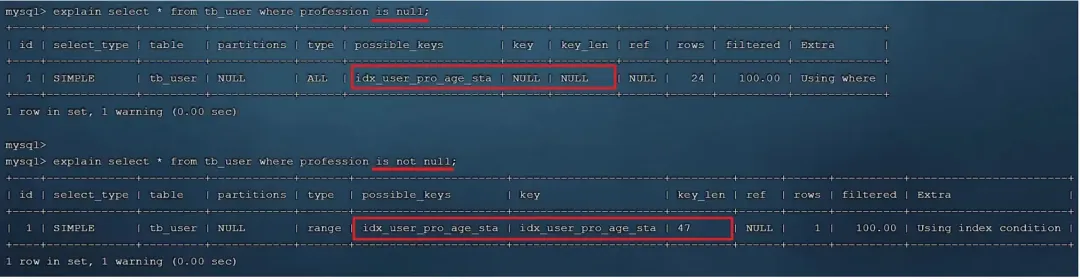

Let us also look at whether is null and is not null operations use an index:

Step 1:

explain select * from tb_user where profession is null;

explain select * from tb_user where profession is not null;

Step 2: Update all profession fields to null:

update tb_user set profession = null;

Step 3: Execute the preceding statement again

Finally, you can see that the same SQL statement, executed twice, has different query plans.

This is related to the data distribution in the database. When querying, MySQL evaluates whether to use an index or perform a full table scan. If a full table scan is faster, it will abandon the index and perform a full table scan. Therefore, whether is null and is not null use an index depends on the specific situation and is not fixed.

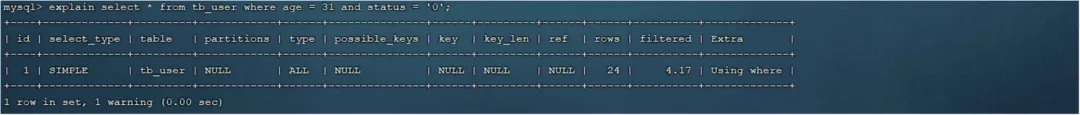

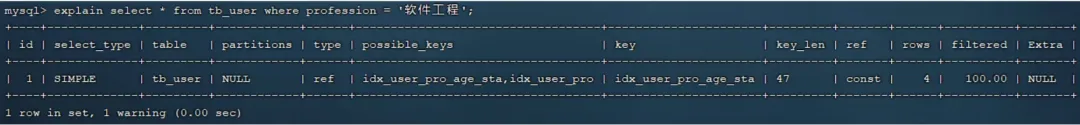

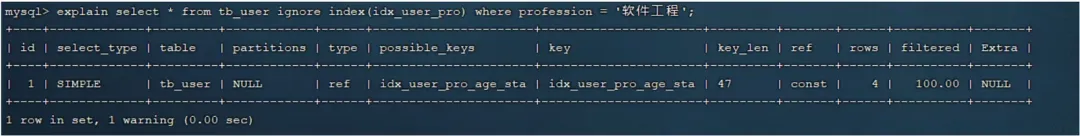

There is a federated index (idx_user_pro_sta) and a single-column index (idx_user_pro) on the profession field.

explain select * from tb_user where profession='Software Engineering';

It can be seen that MySQL ultimately chose the federated index idx_user_pro_age_sta. This is a result automatically selected by MySQL.

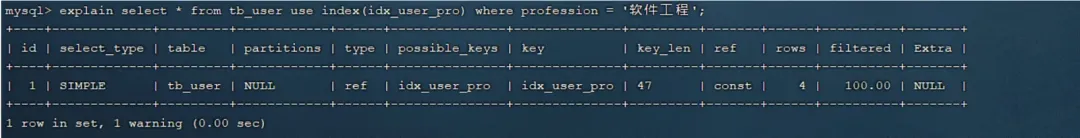

Then, you can use the SQL prompts of MySQL in your queries to add some manual guidance for optimization purposes:

• user index: Suggest which index MySQL should use for this query (this is merely a suggestion; MySQL will still internally evaluate the best option).

• ignore index: Ignore a specified index.

• force index: Force the use of a specific index.

Demonstration:

1) explain select * from tb_user use index(idx_user_pro) where professor='Software Engineering';

2) explain select * from tb_user ignore index(idx_user_pro) where professor='Software Engineering';

3) explain select * from tb_user force index(idx_user_pro) where professor='Software Engineering';

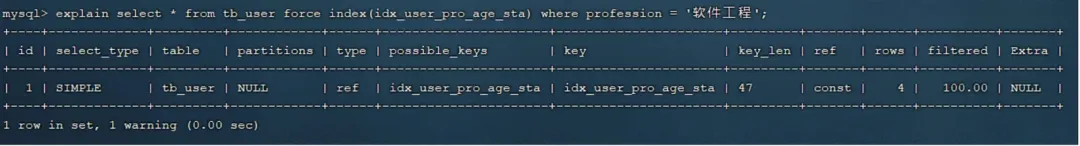

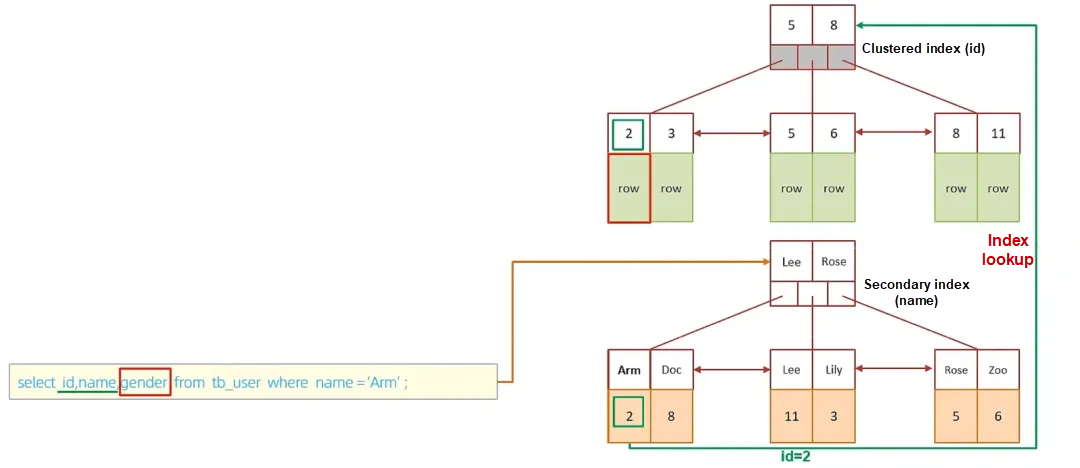

A covering index is one where the query uses an index and all the required columns can be found within that index.

When querying, try to use covering indexes to reduce select *.

Assuming there is a federated index idx_user_pro_age_sta (covering three fields: profession, age, status). This index is also a secondary index, with the leaf nodes containing the primary key ID. When the data returned by the query is in ID, profession, age, or status, the query can directly use the secondary index to return the data. If the query fields extend beyond this range, the primary key ID needs to be obtained, and then the additional data must be fetched by scanning the clustered index. This process is an index lookup.

Using select * to return all field values can easily lead to index lookups (unless querying by primary key, in which case only the clustered index is scanned).

Demonstration:

• explain select id, professor, from tb_user where profession='Software Engineering' and age=31 and status='0';

• explain select id, professor, age, status from tb_user where profession='Software Engineering' and age=31 and status='0';

• explain select id, session, age, status, name from tb_user where profession='Software Engineering' and age=31 and status='0';

• explain select * from tb_user where profession='Software Engineering' and age=31 and status='0';

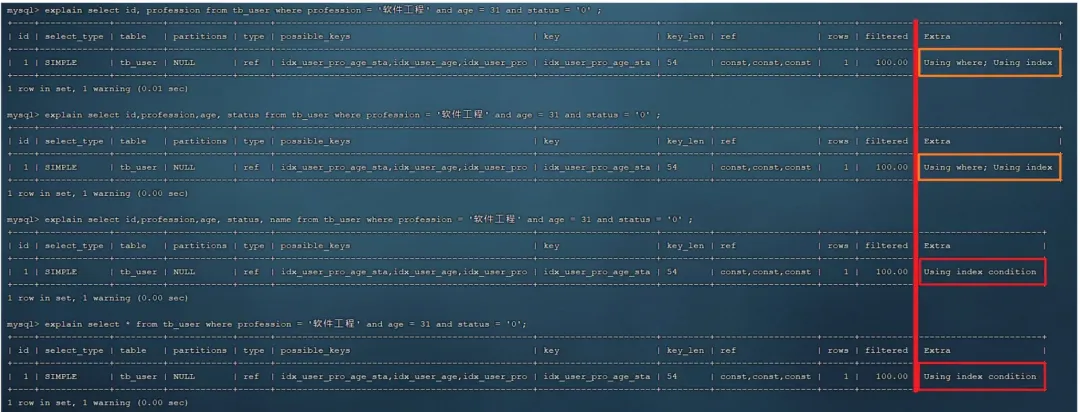

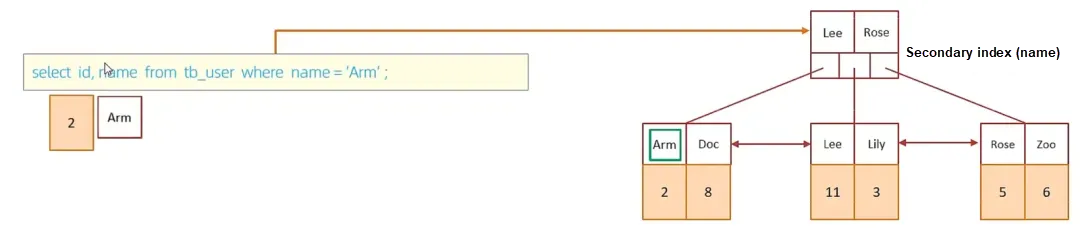

To help you better understand what a covering index and index lookup are, let us look at the execution process of a set of SQL statements:

A. Table Structure and Index Diagram:

ID is the primary key and is a clustered index. The name field has a regular index, which is a secondary index.

B. Execute SQL: select * from tb_user where id = 2;

Querying by ID directly uses the clustered index, requiring a single index scan to return the data, resulting in high performance.

C. Execute SQL: select id,name from tb_user where name='Arm ';

Although querying by the name field, the query returns the ID and name fields, which can be directly obtained from the secondary index on name as it is a covering index. Therefore, no index lookup is needed, leading to high performance.

D. Execute SQL: select id,name,gender from tb_user where name='Arm ';

Since the gender field is not included in the secondary index on name, two index scans are needed, including an index lookup, which results in lower performance.

Consider this: A table has four fields (id, username, password, status). How can you optimize the SQL?

select id, username, password from tb_user where username='itcast';Answer: Create a federated index on username and password to avoid index lookups.

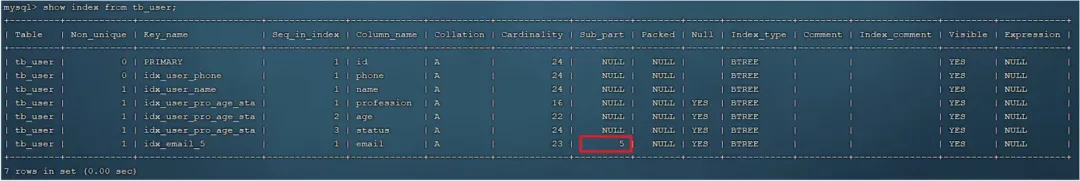

When the field type is a string such as varchar, text, and longtext, long strings in some cases need to be indexed, which can result in large indexes and waste a lot of disk I/O during queries, affecting query performance. In such cases, you can create an index on just a part of the string's prefix to save index space and improve index efficiency.

1) Syntax: create index idx_xxx on the table name (column(n));

create index idx_email on tb_user(email(5)); # Create a prefix index of length 5 on the email field in the tb_user table.

2) Prefix Length

The length can be determined based on the selectivity of the index, which is calculated as the number of distinct index values (cardinality) divided by the total number of records in the table.

The higher the selectivity of an index, the more efficient the query. A unique index has a selectivity of 1, which is the best index selectivity with the best performance.

select count(distinct email)/count(*) from tb_user;

select count(distinct substring(email, 1, 5)/count(*) from tb_user;3) Query Process of Prefix Indexes

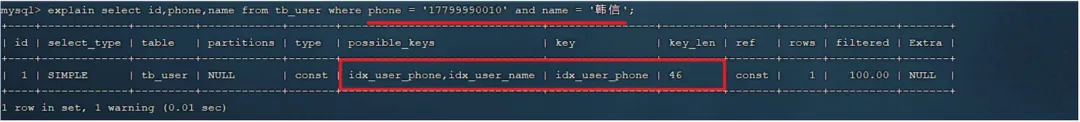

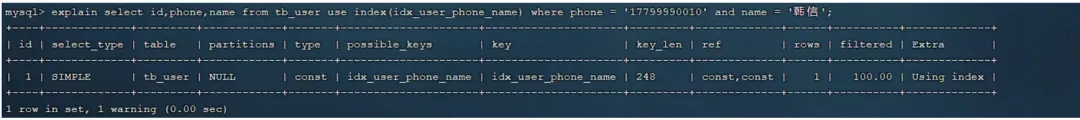

Single-column Index: An index that contains a single column.

Federated Index: An index that contains multiple columns.

When both fields phone and name, connected by AND, have single-column indexes, MySQL will ultimately choose only one index, meaning that only the index of one field can be used. In this case, an index lookup is needed.

At this point, we can create a federated index on the phone and name fields.

create unique index_user_phone_name on tb_user(phone, name);Check the execution plan for the following query:

In this case, the query uses a federated index, which includes information about phone and name. The corresponding primary key ID is hung under the leaf node, so the query does not require an index lookup.

⚠ In business scenarios where multiple query conditions exist, it is recommended to use federated indexes rather than single-column indexes when you consider creating indexes for the query fields.

The detailed structure diagram for using a federated index in a query is as follows:

1) Create indexes on tables with large data volumes and complex queries.

2) Create indexes on fields that are frequently used as query conditions (where), sorting (order by), or grouping (group by).

3) Prefer columns with high selectivity for indexing, and consider creating unique indexes. The higher the selectivity, the more efficient the use of the index.

4) For string-type fields with excessively long lengths, consider creating prefix indexes based on the characteristics of the field.

5) Use federated indexes instead of single-column indexes when possible. Federated indexes often provide covering indexes during queries, which saves storage space, avoids index lookups, and improves query efficiency.

6) Control the number of indexes. More indexes are not always better. The more indexes there are, the greater the cost of maintaining the index structure, which can impact the efficiency of insert, delete, and update operations.

7) If index columns cannot store null values, use the not null constraint when creating the table. When the optimizer knows whether each column contains null values, it can better determine which index is most effective for a query.

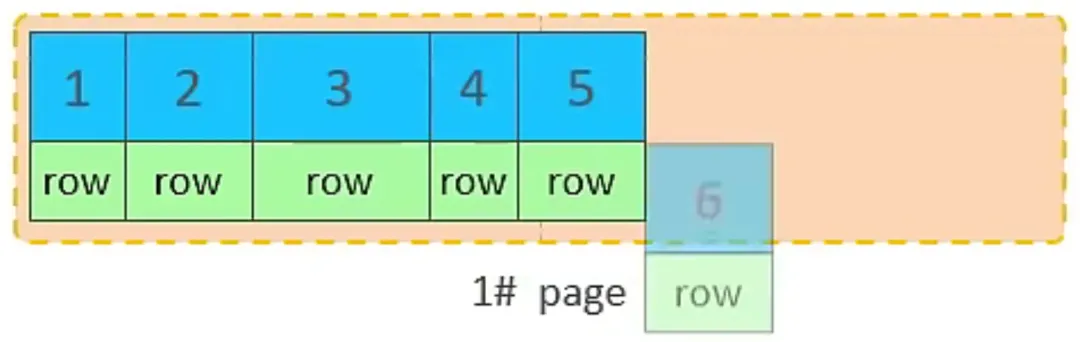

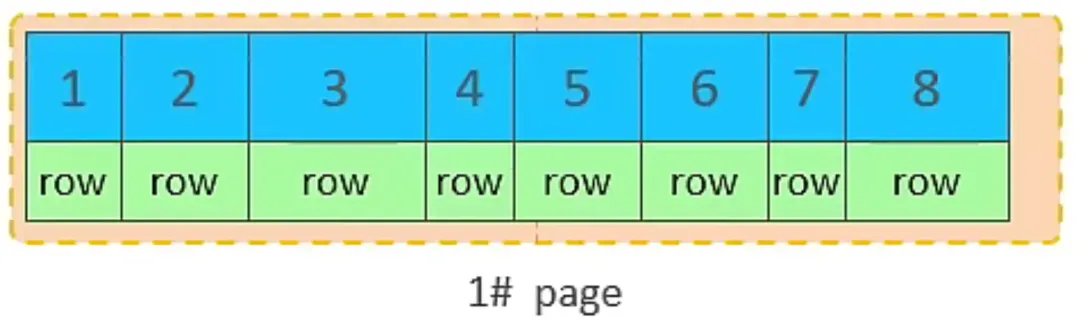

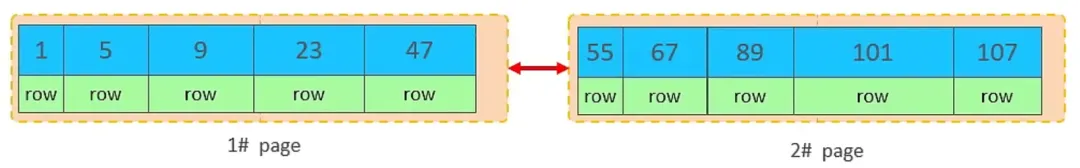

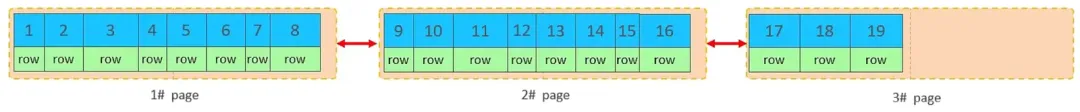

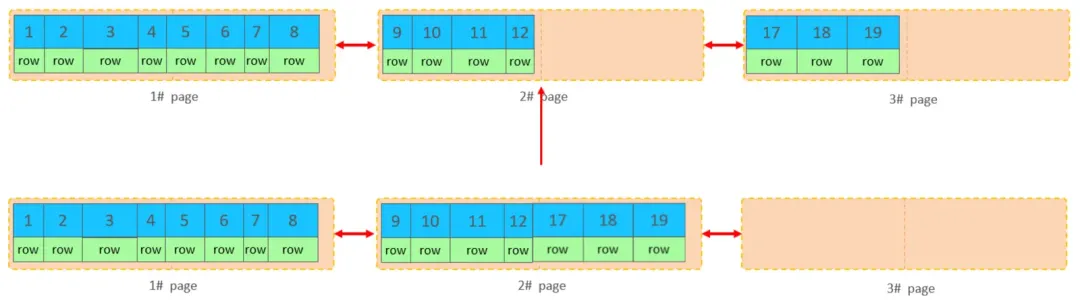

1) Request pages from disk, and sequentially insert primary keys.

2) If the first page is not full, continue inserting into the first page.

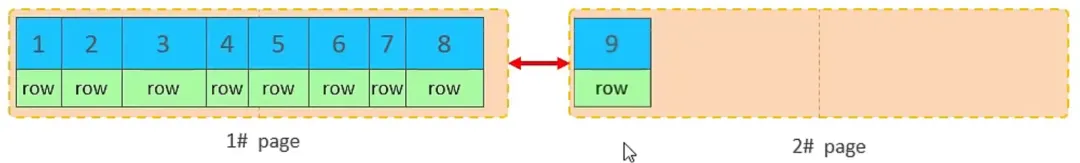

3) When the first page is full, write to the second page. Pages will be connected by pointers.

4) When the second page is full, write to the third page.

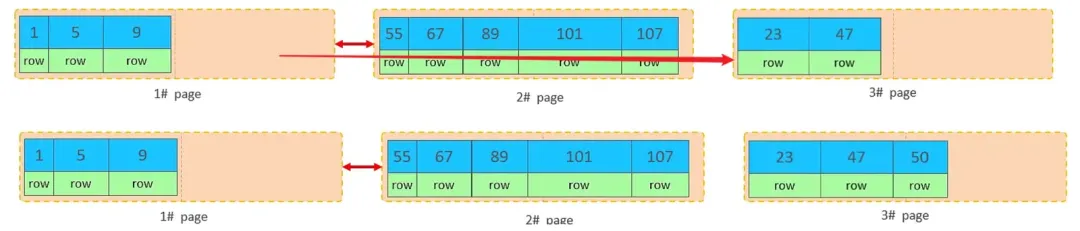

1) Suppose pages 1# and 2# are already full, storing the data in the below figure.

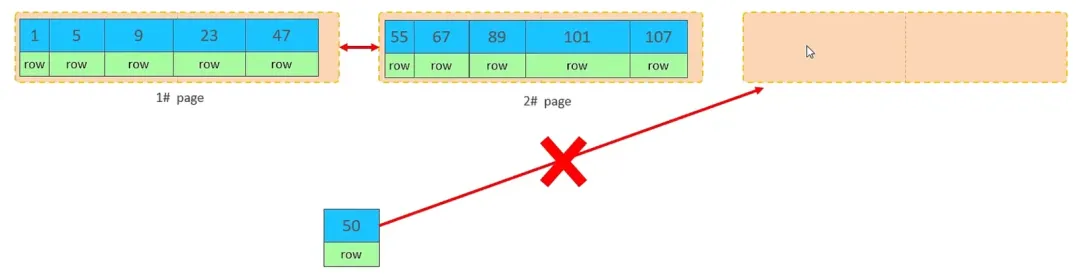

2) Now, insert a record with an ID of 50. Does this trigger opening a new page to write the new record?

No. That is because the leaf nodes of the index structure are in order. According to the order, the record should be stored after 47.

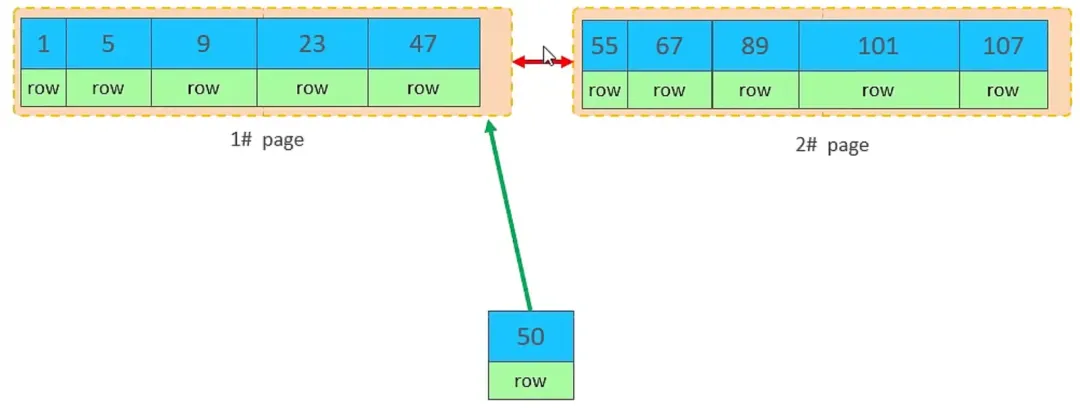

3) However, page 1#, where 47 is located, is already full and cannot store the data for ID 50. At this point, a new page 3# is opened.

4) Instead of directly storing 50 in page 3#, the second half of the data in page 1# is moved to page 3#, and then 50 is inserted into page 3#.

5) After moving the data and inserting the record with ID 50, the order of data among these three pages is incorrect. The next page of 1# should be 3#, and the next page of 3# should be 2#. Therefore, the linked list pointers need to be reconfigured. This phenomenon is page splitting, which is a performance-intensive operation.

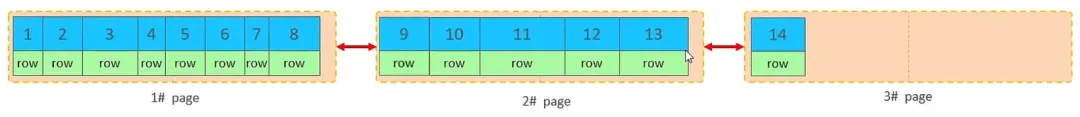

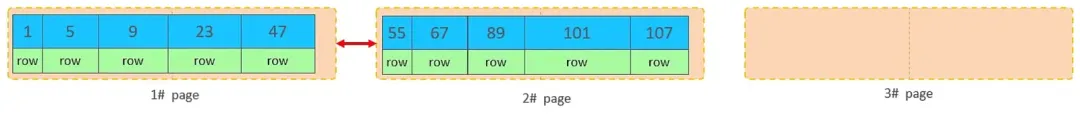

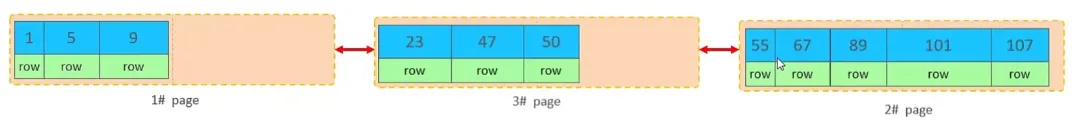

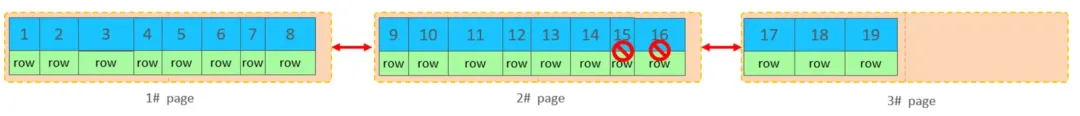

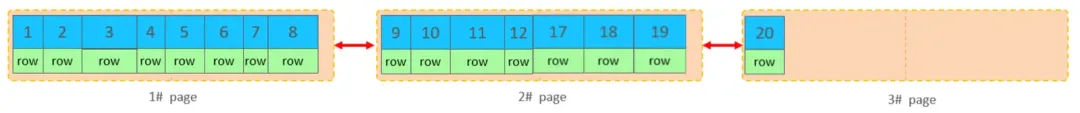

1) Currently, the index structure (leaf nodes) of existing data in the table is as follows:

2) When a row is deleted, the record is not physically removed; it is flagged as deleted and its space becomes available for other records to claim.

3) Continue deleting records from page 2#. When the number of deleted records in a page reaches the MERGE_THRESHOLD (default is 50% of the page), InnoDB will look for the nearest page (before or after) to see if the two pages can be merged to optimize space usage.

4) After deleting records and merging pages, the new data with an ID of 21 is directly inserted into page 3#.

The phenomenon that pages are merged is referred to as page merging.

• Minimize the length of the primary key as much as possible when business requirements are met.

• When inserting data, opt for sequential insertion and use AUTO_INCREMENT for the primary key.

• Avoid using UUIDs or other natural keys as the primary key.

• During business operations, avoid modifying the primary key.

There are two sorting methods in MySQL:

| Sorting Method | Characteristic |

|---|---|

| Using filesort | Through either a table index or full table scans, rows that meet the criteria are read, and then sorting operations are completed in the sort buffer. All sorts that do not directly return sorted results through an index are called FileSort sorts. |

| Using index | When ordered data is returned directly through a sequential scan of an ordered index, this is referred to as Using index, which does not require additional sorting and is highly efficient. |

For the above two sorting methods, the performance of Using index is high, while the performance of Using filesort is low. Therefore, we need to optimize the sorting operation to Using index when possible.

Next, let us conduct a test:

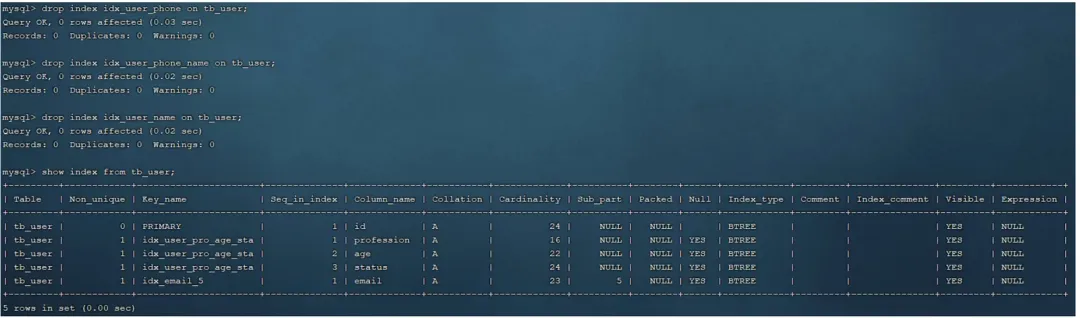

A. Remove some of the indexes created in the tb_user table.

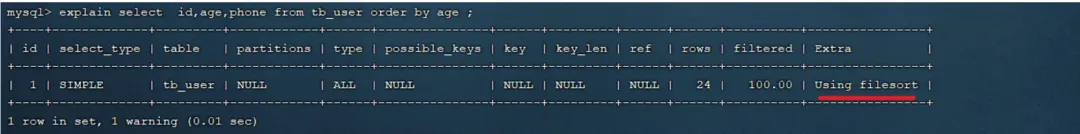

B. Execute sorting SQL

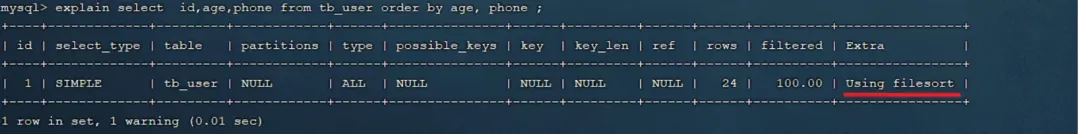

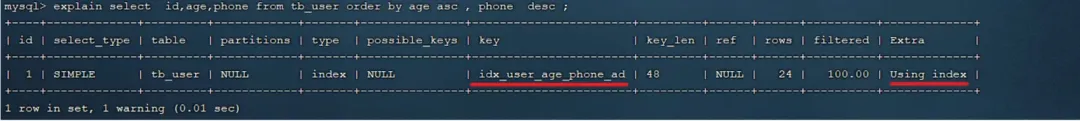

explain select id,age,phone from tb_user order by age ;

explain select id,age,phone from tb_user order by age, phone ;

Since neither age nor phone has indexes, Using filesort occurs during sorting, which results in lower sorting performance.

C. Create an index

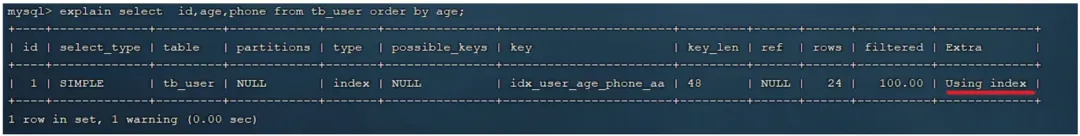

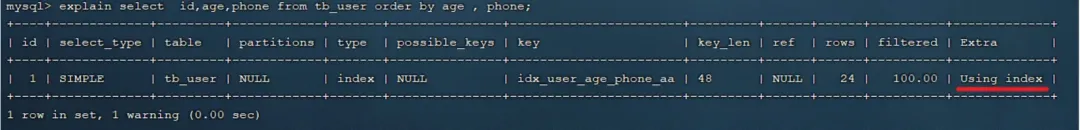

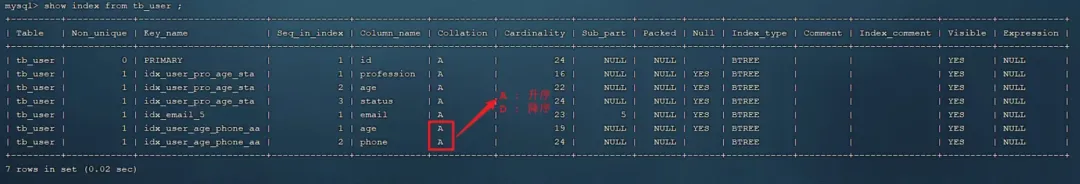

create index idx_user_age_phone_aa on tb_user(age, phone);D. After creating the index, perform ascending sorting based on age and phone

explain select id,age,phone from tb_user order by age;

explain select id,age,phone from tb_user order by age, phone;

After creating the index, sorting queries that previously performed Using filesort now perform Using index, which is more efficient.

E. After creating the index, perform descending sorting based on age and phone

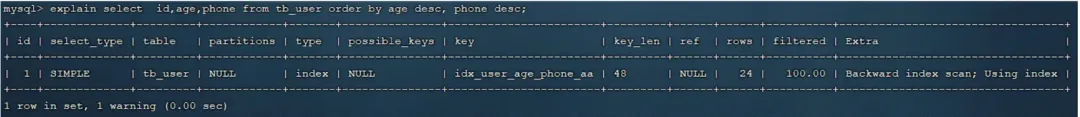

explain select id,age,phone from tb_user order by age desc , phone desc ;

Using index appears, but in the Extra column, there is a backward index scan, which indicates a reverse scan of the index. By default, the leaf nodes of the index in MySQL are sorted from smallest to largest, but when sorting the query in descending order, a reverse scan is performed, resulting in a backward index scan. In MySQL 8, descending indexes are supported, so we can create descending indexes.

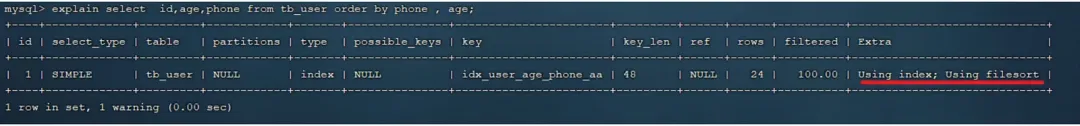

F. Perform ascending sorting based on phone and age, with phone first and age second

explain select id,age,phone from tb_user order by phone , age;

When sorting, the leftmost prefix rule also needs to be satisfied, otherwise Using filesort will appear. When creating the index, age was the first field and phone the second, so sorting should follow this order; otherwise, the Using filesort will occur.

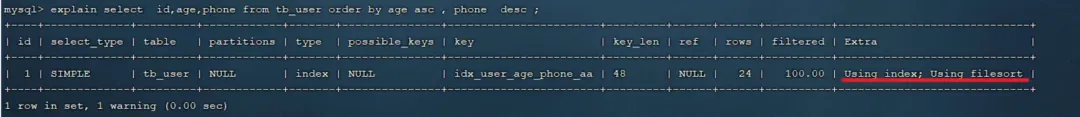

G. Perform one descending and one ascending sort based on age and phone

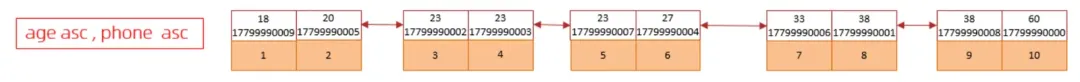

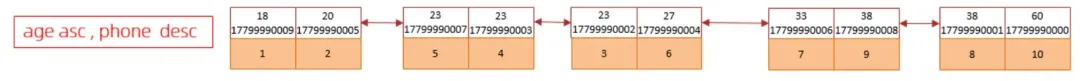

explain select id,age,phone from tb_user order by age asc, phone desc ;

Since the index was created without specifying the order, both fields are sorted in ascending order by default.

However, during querying, as one field is sorted in ascending order and the other in descending order, the Using filesort occurs. To address this issue, we can create a federated index where age is sorted in ascending order and phone in descending order.

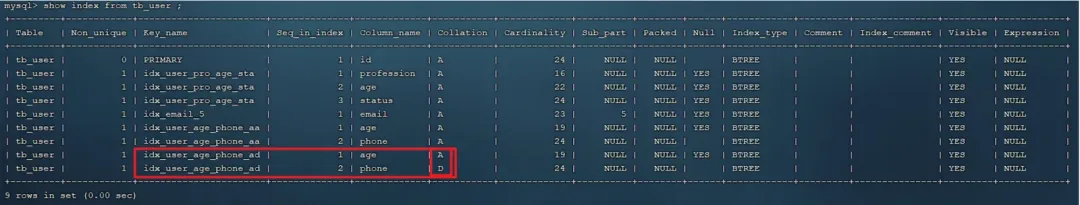

H. Create a federated index where age is sorted in ascending order and phone in descending order

create index idx_user_age_phone_ad on tb_user(age asc ,phone desc);

I. Then execute the following SQL statement again

explain select id,age,phone from tb_user order by age asc , phone desc ;

The ascending/descending federated index structure is shown in the following figure:

From the above tests, we derive the following principles for optimizing ORDER BY:

1) Create appropriate indexes for the sorting fields. Adhere to the leftmost prefix rule as well when sorting multiple fields.

2) Use covering indexes as much as possible.

3) When sorting multiple fields, with one ascending and one descending, pay attention to the rules (ASC/DESC) for creating the federated index.

4) If the Using filesort is unavoidable, especially during large data volume sorting, you can increase the size of the sort buffer sort_buffer_size (default 256K).

For grouping operations, we mainly examine how indexes affect them.

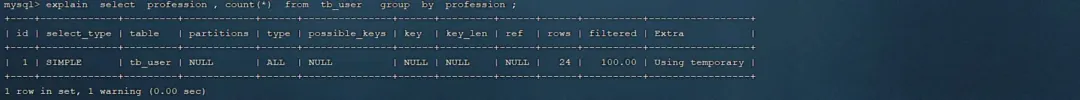

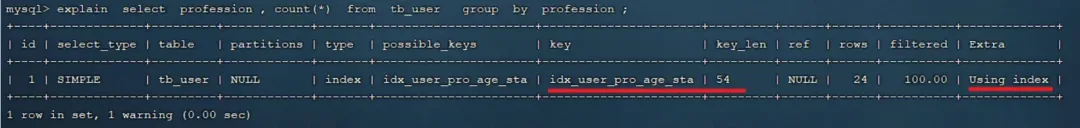

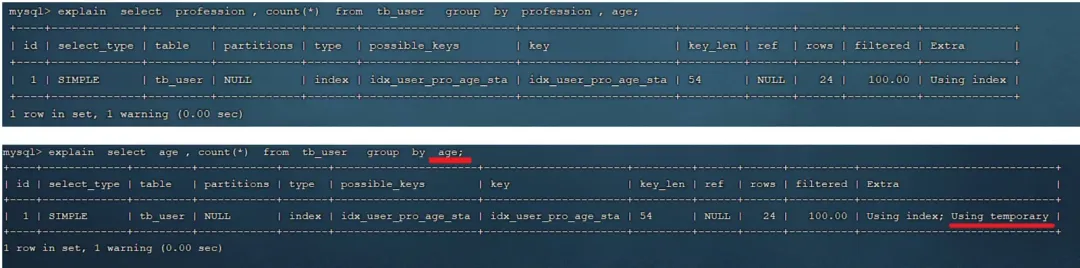

Step 1: Without any index, execute the following SQL statement and check the execution plan:

explain select profession , count(*) from tb_user group by profession ;

Step 2: Create a federated index on the profession, age, and status fields.

create index idx_user_pro_age_sta on tb_user(profession , age , status);Step 3: Execute the same SQL statement again to view the execution plan.

explain select profession , count(*) from tb_user group by profession ;

Step 4: Execute the following GROUP BY queries and check the execution plans:

explain select profession , count(*) from tb_user group by profession, age ;

explain select profession , count(*) from tb_user group by age ;

We find that the Using temporary appears if grouping is done solely by age; however, if grouping is done by both profession and age, the Using temporary does not appear. The reason is that for grouping operations, the federated index also adheres to the leftmost prefix rule.

⚠️ Therefore, for grouping operations, we can optimize performance by following these two points:

1) For grouping operations, indexes can be used to improve efficiency.

2) For grouping operations, the use of indexes also follows the leftmost prefix rule.

When dealing with large datasets, the efficiency of limit pagination queries tends to decrease as you move further through the pages.

Let us compare the execution times for limit pagination queries:

Through testing, we can see that the efficiency of pagination queries decreases as you move further through the pages. This is the problem with pagination queries:

During the pagination query, when executing LIMIT 2000000,10, MySQL needs to sort the first 2000010 records but only return records 2000000 to 2000010 with the rest discarded. Therefore, the cost of sorting and querying can be very high.

Optimization Strategy: Generally, creating a covering index can significantly improve performance for pagination queries. You can optimize using a covering index combined with a subquery.

explain select * from tb_sku t , (select id from tb_sku order by id limit 2000000,10) a where t.id = a.id;From previous tests, we found that the count operation can be time-consuming when dealing with large amounts of data. In the InnoDB engine, it reads each row one by one from the engine during a count(*) operation before accumulating the count.

To significantly enhance the efficiency of InnoDB table counts, the main optimization strategy is to perform counting yourself. For example, you can use databases like Redis. However, the approach may not be suitable if conditional counts are involved.

Count() is an aggregate function. It examines the returned result set row by row. If the parameter passed to the count function is not NULL, it increments the cumulative value by 1; otherwise, it does not increment. Finally, it returns the accumulated value.

Usage: count(*), count (primary key), count (field), and count (number)

| Count Usage | Description |

| Count (primary key) | The InnoDB engine traverses the entire table, extracting every primary key ID value and returning it to the service layer. After the service layer obtains the primary key, it directly accumulates the count by row (as the primary key cannot be null). |

| Count (field) | • Without not null constraint: The InnoDB engine traverses the entire table, extracting field values for each row and returning them to the service layer. The service layer checks whether the value is null and increments the count if it is not. • With not null constraint: The InnoDB engine traverses the entire table, extracting field values for each row, and returns them to the service layer, which accumulates the count directly. |

| Count (number) | The InnoDB engine traverses the entire table without extracting values. For each returned row, the service layer adds the number 1 and directly accumulates the count by row. |

If sorted by efficiency, count (field) < count (primary key ID) < count(1) ≈ count(*), so it is better to use count(*).

We mainly need to pay attention to the precautions when executing the update statement.

update course set name = 'javaEE' where id = 1 ;When we execute the deleted SQL statement, the data of the row with ID 1 is locked, and then the row lock is released after the transaction is committed.

If we start multiple transactions and then execute the following SQL statement:

update course set name = 'SpringBoot' where name = 'PHP' ;We find that the row lock escalates to a table lock. This causes a significant decrease in the performance of the update statement.

InnoDB row locks are applied to indexes, not records, and the index must remain valid; otherwise, the lock will escalate from a row lock to a table lock.

https://blog.csdn.net/weixin_42802447/article/details/124267211

https://blog.csdn.net/kybabcde/article/details/128680998

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.

A Comprehensive Overview of the Apsara Conference 2024 Agenda

An In-Depth Understanding of RDMA Interaction Mechanism between Software and Hardware

1,319 posts | 463 followers

FollowApsaraDB - May 20, 2025

Alibaba Cloud Native Community - November 12, 2025

ApsaraDB - August 7, 2024

Alibaba Clouder - April 8, 2018

andy.zh - November 7, 2019

ApsaraDB - October 24, 2025

1,319 posts | 463 followers

Follow PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by Alibaba Cloud Community