By Zhishu

MySQL Performance Schema (PFS) is a powerful tool that MySQL provides for performance monitoring and diagnostics. It offers a way to inspect the internal performance of the server at runtime. PFS collects information by monitoring events that are instrumented in the server. An event can be any execution activity or resource usage in the server, such as a function call, a wait on a system call, a parsing or sorting stage of an SQL query, or memory usage.

PFS stores the collected performance data in the performance_schema storage engine, which is an in-memory engine. In other words, all collected diagnostic information resides in memory. Collecting and storing this information has a cost, so the performance and memory management of PFS are crucial to minimizing the impact on the business workload.

This article explores how PFS allocates and frees memory based on the source code of its memory management model, analyzes some existing problems, and offers some ideas for improvement. The source code analyzed in this article is from MySQL 8.0.24.

The key features of PFS memory management are listed below:

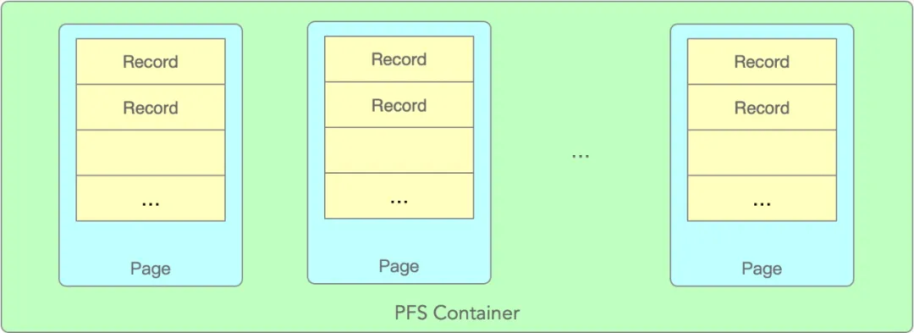

The following figure shows the overall structure of PFS_buffer_scalable_container, which is the core data structure of PFS memory management.

The container holds multiple pages, and each page holds a fixed number of records. Each record corresponds to a PFS object, such as a PFS_thread. While the number of records per page is fixed, the number of pages grows as the load increases.

PFS_buffer_scalable_container is the core data structure of PFS memory management.

The following code shows the key data structure involved in memory allocation:

```cpp
// Template parameters of the container:
// PFS_PAGE_SIZE:  the maximum number of records on each page. In global_thread_container, the default value is 256.
// PFS_PAGE_COUNT: the maximum number of pages. In global_thread_container, the default value is 256.

class PFS_buffer_scalable_container {
  // Monotonically increasing atomic counter used for lock-free page selection.
  PFS_cacheline_atomic_size_t m_monotonic;

  // The number of pages allocated so far; allocate() reads it as the current page count.
  PFS_cacheline_atomic_size_t m_max_page_index;

  // The maximum number of pages. No new page is allocated once this limit is reached.
  size_t m_max_page_count;

  // Page array.
  std::atomic<array_type *> m_pages[PFS_PAGE_COUNT];

  // The lock that must be held to create a new page.
  native_mutex_t m_critical_section;
};
```

m_pages is an array of pages. A page may still have free records, or all of its records may be in use. MySQL adopts a simple strategy: it polls the pages one by one, checking whether each has a free record, until an allocation succeeds. If the allocation still fails after all pages have been polled, a new page is created to expand the memory, until the number of pages reaches the upper limit.

The polling does not start from the first page every time, but from the position recorded by the atomic variable m_monotonic. The value of m_monotonic increases by 1 every time an allocation attempt on a page fails.
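To make the page-and-record layout and the rotating start position concrete, here is a minimal single-threaded C++ sketch. It is a hypothetical model, not MySQL code: the class ToyScalableContainer, its Record type with a plain busy flag, and the page limits are assumptions for illustration, and all atomics and locking are left out. Records inside a page are always scanned from the first slot, which is also a simplification.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical, single-threaded model of the page/record layout of
// PFS_buffer_scalable_container. No atomics, no locks.
template <std::size_t PageSize, std::size_t MaxPages>
class ToyScalableContainer {
  static_assert(PageSize > 0, "a page must hold at least one record");

 public:
  struct Record {
    bool busy = false;  // stands in for the FREE/ALLOCATED state
  };
  using Page = std::array<Record, PageSize>;

  // Returns a free record, or nullptr once MaxPages pages are all full.
  Record *allocate() {
    const std::size_t page_count = m_pages.size();
    // Phase 1: poll existing pages, starting at the rotating position.
    for (std::size_t tried = 0; tried < page_count; ++tried) {
      Page &page = *m_pages[m_monotonic % page_count];
      for (Record &r : page) {  // simplification: scan records from slot 0
        if (!r.busy) {
          r.busy = true;
          return &r;
        }
      }
      ++m_monotonic;  // this page is full: advance the start position
    }
    // Phase 2: every page is full; add a page if the limit allows.
    if (page_count < MaxPages) {
      m_pages.push_back(std::make_unique<Page>());
      Record &r = m_pages.back()->front();
      r.busy = true;
      return &r;
    }
    return nullptr;
  }

  void deallocate(Record *r) { r->busy = false; }  // pages are never released
  std::size_t page_count() const { return m_pages.size(); }

 private:
  // unique_ptr keeps every record at a stable address as pages are added.
  std::vector<std::unique_ptr<Page>> m_pages;
  std::size_t m_monotonic = 0;
};
```

Freeing a record makes it reusable, but page_count() never decreases: once pages are added they stay, which mirrors the freeing behavior discussed later in this article.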

The following is a simplified version of the core code:

```cpp
value_type *allocate(pfs_dirty_state *dirty_state) {
  current_page_count = m_max_page_index.m_size_t.load();
  monotonic = m_monotonic.m_size_t.load();
  monotonic_max = monotonic + current_page_count;

  // Phase 1: poll the existing pages.
  while (monotonic < monotonic_max) {
    index = monotonic % current_page_count;
    array = m_pages[index].load();
    pfs = array->allocate(dirty_state);
    if (pfs) {
      // Return pfs if the allocation is successful.
      return pfs;
    } else {
      // Poll the next page if the allocation fails.
      // m_monotonic is incremented concurrently by all allocating threads, so the
      // local monotonic value may not grow by 1 but jump, for example from 1 to 3 or more.
      // As a result, one thread's loop does not visit every page: it skips pages,
      // and the pages are only covered collectively by the concurrent threads.
      // This algorithm is actually problematic: skipped pages increase the probability
      // of creating new pages. This point is elaborated later in this article.
      monotonic = m_monotonic.m_size_t++;
    }
  }

  // Phase 2: all pages were polled without success. Create a new page
  // if the number of pages has not reached the upper limit.
  while (current_page_count < m_max_page_count) {
    // A mutex prevents concurrent threads from creating new pages at the same time.
    // It is the only lock in the PFS memory allocation process.
    native_mutex_lock(&m_critical_section);
    // With the lock held, a non-null array means another thread has already created this page.
    array = m_pages[current_page_count].load();
    if (array == nullptr) {
      // This thread is responsible for creating the new page:
      // allocate the page object, then its records.
      array = new array_type();
      m_allocator->alloc_array(array);
      m_pages[current_page_count].store(array);
      ++m_max_page_index.m_size_t;
    }
    native_mutex_unlock(&m_critical_section);

    // Try to allocate a record on the new page.
    pfs = array->allocate(dirty_state);
    if (pfs) {
      // Return pfs if the allocation is successful.
      return pfs;
    }
    // The new page is already full: move on to the next page slot
    // while the number of pages has not reached the upper limit.
    current_page_count++;
  }

  // Every page is full and no more pages can be created.
  return nullptr;
}
```

Let's analyze the page-polling problem in detail. Because m_monotonic is incremented concurrently, some pages are skipped during polling, which increases the probability of creating new pages.

Let's suppose the PFS container contains four pages. The first and fourth pages are full, while the second and third pages each still have several free records.

Suppose four threads request allocation concurrently and all of them load the value 0 from m_monotonic at the same time:

```cpp
monotonic = m_monotonic.m_size_t.load();
```

All four threads fail to allocate a record on the first page because it has no free records. Each thread then increments m_monotonic and moves on to the next page:

```cpp
monotonic = m_monotonic.m_size_t++;
```

Here comes the problem. The increment is applied atomically to the shared counter, and the post-increment returns the counter's previous value, so the four threads receive four consecutive values instead of each advancing its own position by one. Call the threads A, B, C, and D in the order in which their increments take effect: they receive 0, 1, 2, and 3, and m_monotonic becomes 4. B and C land on the second and third pages and succeed. A gets 0 and retries the full first page, while D gets 3 and lands on the full fourth page. Both fail again, and their next increments return values of at least 4, which reach monotonic_max (0 + 4 = 4) and end their polling loops. A and D have skipped the second and third pages entirely, so they fall through to the page-creation phase and create a new page, even though those two pages still have free records at this time.

Although the preceding example is an extreme case, pages can be skipped whenever multiple threads request PFS memory at the same time under concurrent access.
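The interleaving above can be replayed deterministically. The C++ sketch below is a hypothetical model, not MySQL code: each page is reduced to a count of free records, and the threads (call them A to D) take one step each per round in a fixed order, so increments of the shared counter land in the order A, B, C, D. A step follows one iteration of the simplified polling loop shown earlier, including the post-increment m_monotonic.m_size_t++, which returns the counter's previous value.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical lockstep replay of the page-polling loop in allocate().
// Each page is reduced to its number of free records.
struct ReplayResult {
  int fell_through;            // threads that leave the loop without a record
  std::vector<int> free_left;  // free records remaining on each page
};

ReplayResult replay_shared_counter_scan(std::vector<int> free_per_page,
                                        std::size_t threads) {
  const std::size_t page_count = free_per_page.size();
  std::size_t shared = 0;  // m_monotonic: every thread loaded 0 before any increment
  struct ScanThread {
    std::size_t monotonic, monotonic_max;
    bool done, got_record;
  };
  std::vector<ScanThread> ts(threads, ScanThread{0, page_count, false, false});

  bool progress = true;
  while (progress) {  // one round: each active thread takes one step, in order
    progress = false;
    for (ScanThread &t : ts) {
      if (t.done) continue;
      progress = true;
      if (t.monotonic >= t.monotonic_max) {  // the while condition is false
        t.done = true;
        continue;
      }
      int &free_records = free_per_page[t.monotonic % page_count];
      if (free_records > 0) {  // array->allocate() succeeds
        --free_records;
        t.done = t.got_record = true;
      } else {
        t.monotonic = shared++;  // like m_monotonic.m_size_t++: returns the old value
      }
    }
  }

  int fell_through = 0;
  for (const ScanThread &t : ts) fell_through += t.got_record ? 0 : 1;
  return {fell_through, free_per_page};
}
```

With two free records on each of the middle pages, the replay ends with two of the four threads leaving the loop without a record while one free record remains on each of those pages. A single thread running alone, by contrast, reaches the second page and succeeds.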

PFS_buffer_default_array is the class that manages the set of records on a page.

The following code shows its key data structure:

```cpp
class PFS_buffer_default_array {
  // Monotonically increasing atomic counter used to select free records.
  PFS_cacheline_atomic_size_t m_monotonic;

  // The maximum number of records that can be stored on the page.
  size_t m_max;

  // The records (PFS objects, such as PFS_thread) stored on the page.
  T *m_ptr;
};
```

Each page is a fixed-length array. A record has three states: FREE, DIRTY, and ALLOCATED. FREE means the record is available. ALLOCATED means the record is in use. DIRTY is an intermediate state: the record has been claimed by a thread but is not yet fully allocated.

Selecting a record means polling the page to find a free record and claim it.

The following is a simplified version of the core code:

```cpp
value_type *allocate(pfs_dirty_state *dirty_state) {
  // Start polling from the position indicated by m_monotonic.
  monotonic = m_monotonic.m_size_t++;
  monotonic_max = monotonic + m_max;

  while (monotonic < monotonic_max) {
    index = monotonic % m_max;
    pfs = m_ptr + index;

    // m_lock is a pfs_lock structure. It manages the transitions among
    // the three states FREE, DIRTY, and ALLOCATED (explained in detail later).
    if (pfs->m_lock.free_to_dirty(dirty_state)) {
      return pfs;
    }

    // The record is not free: increment the shared counter and try the next record.
    monotonic = m_monotonic.m_size_t++;
  }

  // No free record was found on this page.
  return nullptr;
}
```

The overall process of selecting a record is similar to selecting a page. The difference is that the number of records on a page is fixed, so record selection has no expansion logic.
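A minimal sketch of this record-level loop follows, under stated assumptions. ToyPage, mark_allocated, and release are hypothetical names; only the two state bits are modeled (the version bits of pfs_lock are left out), and std::atomic::compare_exchange_strong plays the role of free_to_dirty. Run single-threaded, it shows the wrap-around scan driven by the shared counter and that a record already claimed as DIRTY is not handed out again.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical sketch of record selection within one page, modeled on the
// simplified PFS_buffer_default_array::allocate(). Version bits are omitted.
constexpr std::uint32_t kFree = 0x0;
constexpr std::uint32_t kDirty = 0x1;
constexpr std::uint32_t kAllocated = 0x2;

template <std::size_t N>
class ToyPage {
 public:
  // Claims a FREE record by switching it to DIRTY and returns its index,
  // or returns -1 when the loop ends without finding one.
  long allocate() {
    std::size_t monotonic = m_monotonic++;  // post-increment: returns the old value
    const std::size_t monotonic_max = monotonic + N;
    while (monotonic < monotonic_max) {
      const std::size_t index = monotonic % N;
      std::uint32_t expected = kFree;
      // At most one thread can win this compare-and-swap for a given record.
      if (m_state[index].compare_exchange_strong(expected, kDirty)) {
        return static_cast<long>(index);
      }
      monotonic = m_monotonic++;  // record not FREE: take the next counter value
    }
    return -1;
  }

  void mark_allocated(std::size_t i) { m_state[i].store(kAllocated); }  // DIRTY -> ALLOCATED
  void release(std::size_t i) { m_state[i].store(kFree); }              // ALLOCATED -> FREE

 private:
  std::array<std::atomic<std::uint32_t>, N> m_state{};  // zero-initialized: all FREE
  std::atomic<std::size_t> m_monotonic{0};
};
```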

Because the same policy is used, the same problem arises. The page's m_monotonic counter is shared by all concurrent threads, so each thread's position can jump past records that it never checks. With large numbers of concurrent threads, a thread may finish its loop and give up on the page while some records on it are still free.

Therefore, even if a page is not skipped, records on it may still be skipped and left unselected. This compounds the page-level problem and makes the occupied memory grow faster.

Each record has a pfs_lock to manage its state and version information. The states include FREE, DIRTY, and ALLOCATED.

The following code provides an example of the key data structure:

struct pfs_lock {

std::atomic m_version_state;

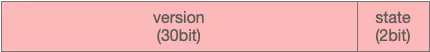

}A pfs_lock uses a 32-bit unsigned integer to save the version and state information in the following format:

The lower two bits indicate the state:

state PFS_LOCK_FREE = 0x00

state PFS_LOCK_DIRTY = 0x01

state PFS_LOCK_ALLOCATED = 0x11The version is initially 0 and increases by 1 after each allocation. Therefore, the version indicates the number of times the record is allocated. Take a look at the state transition code:

```cpp
// The following three macros are used for bit manipulation, making it easy to manage the state or version.
#define VERSION_MASK 0xFFFFFFFC
#define STATE_MASK 0x00000003
#define VERSION_INC 4

bool free_to_dirty(pfs_dirty_state *copy_ptr) {
  uint32 old_val = m_version_state.load();

  // Check whether the current state is FREE. If not, return false.
  if ((old_val & STATE_MASK) != PFS_LOCK_FREE) {
    return false;
  }

  uint32 new_val = (old_val & VERSION_MASK) + PFS_LOCK_DIRTY;

  // The current state is FREE, so try to change it to DIRTY.
  // atomic_compare_exchange_strong acts as an optimistic lock: multiple threads
  // may try to modify the atomic variable at the same time, but only one succeeds.
  bool pass =
      atomic_compare_exchange_strong(&m_version_state, &old_val, new_val);

  if (pass) {
    // The change from FREE to DIRTY succeeded.
    copy_ptr->m_version_state = new_val;
  }

  return pass;
}

void dirty_to_allocated(const pfs_dirty_state *copy) {
  /* Make sure the record was DIRTY. */
  assert((copy->m_version_state & STATE_MASK) == PFS_LOCK_DIRTY);

  /* Increment the version, set the ALLOCATED state */
  uint32 new_val = (copy->m_version_state & VERSION_MASK) + VERSION_INC +
                   PFS_LOCK_ALLOCATED;

  m_version_state.store(new_val);
}
```

The state transitions are easy to understand, and the logic of dirty_to_allocated and allocated_to_free is simple. Concurrent overwriting can occur only while a record is FREE. Once its state becomes DIRTY, the record is considered occupied by one thread, and other threads no longer try to allocate it.

The version grows when the state becomes PFS_LOCK_ALLOCATED.
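The encoding and the state transitions can be exercised together in a small sketch. ToyLock, state_of, and version_of are hypothetical names; the constants mirror the pfs_lock macros (with PFS_LOCK_ALLOCATED as 0x02), and the pfs_dirty_state copy is reduced to a plain uint32_t. The reverse transition, allocated_to_free, is included in the form described in the next part of this article.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Hypothetical sketch of the pfs_lock version/state word.
constexpr std::uint32_t VERSION_MASK = 0xFFFFFFFC;
constexpr std::uint32_t STATE_MASK = 0x00000003;
constexpr std::uint32_t VERSION_INC = 4;
constexpr std::uint32_t PFS_LOCK_FREE = 0x00;
constexpr std::uint32_t PFS_LOCK_DIRTY = 0x01;
constexpr std::uint32_t PFS_LOCK_ALLOCATED = 0x02;

constexpr std::uint32_t state_of(std::uint32_t v) { return v & STATE_MASK; }
constexpr std::uint32_t version_of(std::uint32_t v) { return (v & VERSION_MASK) >> 2; }

struct ToyLock {
  std::atomic<std::uint32_t> m_version_state{0};  // version 0, FREE

  // FREE -> DIRTY via compare-and-swap; on success, stores the new word in *copy.
  bool free_to_dirty(std::uint32_t *copy) {
    std::uint32_t old_val = m_version_state.load();
    if (state_of(old_val) != PFS_LOCK_FREE) return false;
    const std::uint32_t new_val = (old_val & VERSION_MASK) + PFS_LOCK_DIRTY;
    const bool pass = m_version_state.compare_exchange_strong(old_val, new_val);
    if (pass) *copy = new_val;
    return pass;
  }

  // DIRTY -> ALLOCATED: a plain store, since only the owner holds a DIRTY record.
  void dirty_to_allocated(std::uint32_t copy) {
    assert(state_of(copy) == PFS_LOCK_DIRTY);
    m_version_state.store((copy & VERSION_MASK) + VERSION_INC + PFS_LOCK_ALLOCATED);
  }

  // ALLOCATED -> FREE, keeping the same version.
  void allocated_to_free() {
    const std::uint32_t copy = m_version_state.load();
    assert(state_of(copy) == PFS_LOCK_ALLOCATED);
    m_version_state.store((copy & VERSION_MASK) + PFS_LOCK_FREE);
  }
};
```

After one full allocate/free cycle the version is 1, and it stays 1 while the record is FREE, so the version counts completed allocations rather than state changes.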

Freeing PFS memory is a simple process. Each record keeps track of the container and page to which it belongs. To free a record, call the deallocate interface, which sets the state of the record to FREE.

At the lowest level, pfs_lock is modified to update the state:

```cpp
struct pfs_lock {
  void allocated_to_free(void) {
    /*
      If this record is not in the ALLOCATED state and the caller is trying
      to free it, this is a bug: the caller is confused,
      and potentially damaging data owned by another thread or object.
    */
    uint32 copy = copy_version_state();

    /* Make sure the record was ALLOCATED. */
    assert(((copy & STATE_MASK) == PFS_LOCK_ALLOCATED));

    /* Keep the same version, set the FREE state */
    uint32 new_val = (copy & VERSION_MASK) + PFS_LOCK_FREE;

    m_version_state.store(new_val);
  }
};
```

In the previous parts, we found that pages and records can be skipped during polling. Free records can be left unallocated, which causes new pages to be created and more memory to be occupied. The main problem is that once memory is allocated, it is never freed.

Here are some ideas to improve the hit rate of PFS memory allocation and prevent the problems mentioned above:

```cpp
while (monotonic < monotonic_max) {
  index = monotonic % current_page_count;
  array = m_pages[index].load();
  pfs = array->allocate(dirty_state);

  if (pfs) {
    // Record the index of the successful allocation.
    m_monotonic.m_size_t.store(index);
    return pfs;
  } else {
    // Only this thread's local variable is incremented. This prevents the
    // page skipping caused by concurrent increments of the shared counter.
    monotonic++;
  }
}
```

Because every poll now starts from the position of the latest allocation, concurrent threads inevitably collide on the same pages. Some randomness can therefore be added to the starting position to avoid a large number of conflict-triggered retries.
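The effect of the local increment can be checked with a deterministic replay. The sketch below is a hypothetical model, not MySQL code: each page is a count of free records, threads take one step per round in a fixed order, and each thread's starting position is passed in explicitly, so different values can stand in for the suggested randomized start (how such values would be generated is left open).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical lockstep replay of the improved polling loop: each thread
// advances a local counter, and a successful thread stores the page index
// into the shared m_monotonic. Each page is reduced to its free-record count.
struct ImprovedReplay {
  int fell_through;            // threads that leave the loop without a record
  std::vector<int> free_left;  // free records remaining on each page
  std::size_t last_index;      // final value stored into m_monotonic
};

// starts[i] is thread i's first position (models a possibly randomized start).
ImprovedReplay replay_local_scan(std::vector<int> free_per_page,
                                 const std::vector<std::size_t> &starts) {
  const std::size_t page_count = free_per_page.size();
  std::size_t shared = 0;  // m_monotonic
  struct ScanThread {
    std::size_t monotonic, monotonic_max;
    bool done, got_record;
  };
  std::vector<ScanThread> ts;
  for (std::size_t s : starts) ts.push_back({s, s + page_count, false, false});

  bool progress = true;
  while (progress) {  // one round: each active thread takes one step, in order
    progress = false;
    for (ScanThread &t : ts) {
      if (t.done) continue;
      progress = true;
      if (t.monotonic >= t.monotonic_max) {
        t.done = true;
        continue;
      }
      const std::size_t index = t.monotonic % page_count;
      if (free_per_page[index] > 0) {
        --free_per_page[index];
        shared = index;  // m_monotonic.m_size_t.store(index)
        t.done = t.got_record = true;
      } else {
        ++t.monotonic;  // local increment only: this thread visits every page
      }
    }
  }

  int fell_through = 0;
  for (const ScanThread &t : ts) fell_through += t.got_record ? 0 : 1;
  return {fell_through, free_per_page, shared};
}
```

In the four-page scenario from the earlier analysis (first and fourth pages full, two free records on each of the other two), every thread now finds a record for both sets of starting positions tried here. Threads that start at the same position still probe the same full pages one after another, which is the contention the randomized start is meant to spread out.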

The biggest problem with freeing PFS memory is that once memory is allocated, it is not released until the server shuts down. When a business hotspot occurs, many pages are allocated during the peak, and that memory stays allocated after the peak has passed.

Implementing a lock-free recycling mechanism that periodically detects and reclaims idle memory without affecting the efficiency of memory allocation is rather complicated.

Take the following thoughts into consideration:

PolarDB provides a feature that periodically recycles PFS memory to improve PFS memory freeing. To keep this article concise, this feature will be introduced in a later article.

