By Jiye Tu (Hejun), from AnalyticDB for MySQL Storage Kernel R&D Team

AnalyticDB for MySQL and Rockset have many similarities in architecture design, such as storage-computing separation and real-time writes. However, AnalyticDB for MySQL shows more mature and advanced features in many aspects. AnalyticDB for MySQL supports richer scalability, highly consistent real-time data reads and writes, comprehensive index types, high-throughput writes, complete DML and Online DDL operations, and intelligent data lifecycle management. In terms of vector retrieval and analysis, AnalyticDB for MySQL provides higher retrieval accuracy. Design principles of AnalyticDB for MySQL include distributed tables, a synchronization layer based on the Raft protocol, an engine layer that supports DML and DDL, and a high-performance and low-cost persistence layer. Together, these ensure the high performance and cost-effectiveness of AnalyticDB for MySQL as a real-time data warehouse in the AI era.

After years of research and development within Alibaba Group and Alibaba Cloud, AnalyticDB for MySQL has continuously addressed the challenges of massive data analysis and met the diverse and differentiated needs of customers. Eventually, it has evolved into a real-time data warehouse that is most suitable for the AI era. This article will introduce the design and implementation principles of AnalyticDB for MySQL in depth, and compare it with Rockset accordingly.

AnalyticDB for MySQL is a real-time data warehouse service developed in-house by Alibaba Cloud. It can process petabytes of data and has been tried and tested in core businesses of ultra-large scales. It serves various online analysis systems including traditional large and medium-sized enterprises, government agencies, and Internet companies, covering many industries such as e-commerce, advertising, logistics, entertainment, tourism, and risk control. AnalyticDB for MySQL adopts a cloud-native architecture that horizontally or vertically scales components and separates computing from storage and hot data from cold data. It supports real-time data write operations that have high throughput, strong data consistency, high query concurrency, and high-throughput batch processing. The underlying XUANWU storage engine of AnalyticDB for MySQL is a self-developed column-oriented storage engine that supports real-time data write operations that have high throughput, high-performance real-time query, ultra-large data volumes, database with complete DML and OnlineDDL, automatic data tiering, and lifecycle elimination, so as to provide a low-cost solution. It is the basic support of AnalyticDB for MySQL.

Rockset is a real-time data warehouse company that has been in research and development since 2016. It provides external cloud services based on RocksDB, whose specific applications cover many fields such as recommendation engines, logistics tracking, financial technology, and e-commerce. Its main features include storage-computing separation, real-time writes, full indexes, schemaless, low-cost storage, and integrated vector retrieval.

In the AI era, model training, fine-tuning, deployment, and iteration of large models are extremely dependent on massive data with high quality as the basis. Multi-source collection, mass storage, low-cost management, quick import, and fast retrieval of these data are the core competitiveness in the AI era. As typical representatives of real-time data warehouses, AnalyticDB for MySQL and Rockset provide powerful support for data processing capabilities, but there are some differences, which will be described in detail below.

AnalyticDB for MySQL is similar to Rockset in completely separating computing from storage. The underlying storage nodes of AnalyticDB for MySQL are based on Alibaba Cloud Object Storage Service (OSS) and Alibaba Cloud Elastic Block Storage (EBS), and the upper-layer computing nodes are based on ECS and Kubernetes. Storage and computing resources can be independently expanded. In addition, the computing layer further supports multiple resource groups. Different resource groups are completely isolated from each other and can be used for different businesses without mutual interference.

AnalyticDB for MySQL differs from Rockset in that it supports a wider variety of scalability:

• Scheduled scaling: You can configure the rules for the scheduled upgrade and downgrade of computing resources based on business characteristics.

• On-demand scaling: Resources are pulled up only when ETL SQL statements are received, which minimizes costs.

• Auto-scaling Multi-Cluster within a single resource group: The scale of resources is adjusted in real time based on real-time resource usage, such as CPU utilization and memory usage.

The training of large AI models requires massive amounts of data, and the amount of data required will increase over time, which consumes an astonishing amount of storage, computing, and power resources.

Combined with the storage-computing separation architecture provided by AnalyticDB for MySQL, while continuously providing and increasing storage resources, there is no need to increase computing resources simultaneously, greatly reducing the consumption of CPU and memory. Moreover, compared with Rockset, since AnalyticDB for MySQL provides scheduled scaling and on-demand scaling, Multi-Cluster, and other capabilities that Rockset does not have, it will be able to maximize resource consumption equal to actual needs, so as to minimize the cost of AI enterprises.

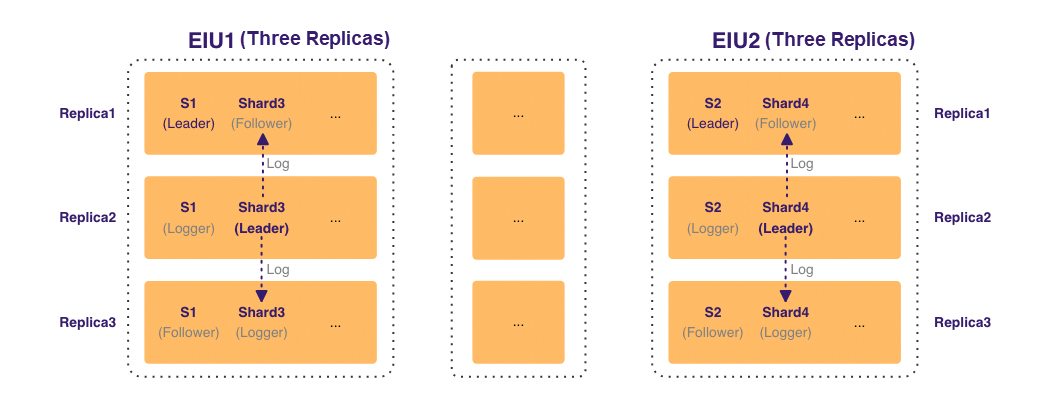

AnalyticDB for MySQL is a synchronous write model that supports strong consistency and data is visible immediately after write operations that have high throughput. It is implemented in AnalyticDB for MySQL based on log synchronization, real-time engine, primary key, DeleteSet, and row-level MVCC. All DML statements are visible in real time at the row level even in ultra-large datasets. When you query data, each shard takes a row-level snapshot corresponding to the latest offset of the leader, which ensures full visibility and strong consistency in real time.

However, the write operation of Rockset is asynchronous and only guarantees eventual consistency. The query can be performed 1 to 5 seconds after the write operation.

The data required for AI model training comes from a variety of data sources. Traditional offline import capabilities often require T +1 import every other day, which loses data freshness. In AI scenarios such as intelligent Q&A robots and news Artificial Intelligence Recommendations, the reduction in data freshness will seriously lower the business value. AnalyticDB for MySQL provides better real-time data reading and writing capabilities with stronger consistency than Rockset, and ensures data freshness through real-time visibility. In addition, strong consistency greatly simplifies the development difficulty of upper-layer data systems, and improves the efficiency of data cleaning, extraction, and conversion, so as to accelerate every aspect of model training.

In terms of indexing, AnalyticDB for MySQL is similar to Rockset in that both automatically index all columns. The differences are that:

1) Rockset makes three kinds of indexes for each column: inverted indexes for point query, in-memory column indexes for aggregation, and row indexes for data retrieval. While providing rich access modes, it also incurs large storage overhead.

2) AnalyticDB for MySQL provides richer and finer-grained indexing capabilities.

AnalyticDB for MySQL is designed for extreme query pushdown and delayed materialization. It supports almost all types of column-level indexes, including inverted indexes, BKD indexes, Bitmap indexes, vector indexes, full-text indexes, and JSON indexes. It covers structured and semi-structured data and is suitable for query performance in different scenarios. The default setting of full indexes enables the XUANWU engine to automatically select different indexes based on different column types. You only need to focus on SQL usage without manual intervention.

In addition to column-level secondary indexes, Clustering Key, a sort key for row data is also supported. This distributes data in a defined column order and enables I/O aggregation based on sort columns, so as to greatly improve the data reading efficiency on these sort columns. AnalyticDB for MySQL further provides intelligent indexing capabilities, combined with the user's SQL Pattern to automatically recommend indexes and delete useless indexes.

RAG (Retrieval-augmented-generation) is the most typical application scenario of large AI models and a key precondition of Q&A robots such as ChatGPT. High-quality and efficient retrieval can greatly improve the accuracy of Q&A and is an essential infrastructure for the entire AI era. Compared with Rockset, AnalyticDB for MySQL provides more types of finer-grained indexes and higher data search performance. It also provides Clustering Key to store data in a more orderly manner, which further improves the compression ratio and I/O performance. Finally, it can reduce the end-to-end response latency on the client side.

Both are capable of supporting high-throughput data import. AppendOnly and compaction in the background of AnalyticDB for MySQL are designed and implemented based on the primary key and DeleteSet. This enables high-performance real-time writes at the engine level. In addition, the sharding architecture allows you to horizontally scale out the write concurrency and supports high-throughput real-time warehousing at the instance level by using multiple concurrent data writes to multiple tables in real time. Rockset uses a Tailer-Leaf-Aggregator architecture and independent Tailer resources to support data writes.

In addition to real-time writes, both support using bulk load for batch import. AnalyticDB for MySQL allows you to import large-scale elastic data at a time by using third-party resources such as Spark.

The training of high-quality models depends on high-quality data input, while the cleaning, transformation, and loading of large training datasets often require repeated trial and error and continuous adjustment of cleaning strategies, which is time-consuming. High-throughput real-time writes and batch import are powerful tools to improve efficiency. The real-time write technology of AnalyticDB for MySQL based on mark-for-delete enables any update/deletion of petabytes of data can be completed within milliseconds. The elastic data import capability makes it easy to expand resources horizontally to ensure import efficiency when large amounts of data need to be imported.

AnalyticDB for MySQL, with its sharding and partitioning design, still implements the complete row-level DML semantics of the database on the basis of ultra-large datasets. In addition, the currently supported rich DDL (such as adding and subtracting columns and indexes and modifying column types, partition formats, and storage policies) are fully online. Users can flexibly use them as needed without additional maintenance or worrying about the impact of locking tables on online business.

Rockset, on the other hand, provides a Document data model, which does not require specifying the type of each field in advance. It can automatically add columns and infer types based on the written data. You can even write data of different types in the same column.

In contrast, AnalyticDB for MySQL is a strongly typed system but supports flexible subsequent changes. The early data modeling design will have a certain learning cost, but will provide great help for the later data governance, data quality, and query performance; Rockset is a weakly typed system, which is easy to get started in the early stage, but is more difficult in the later data governance and management.

For the massive datasets that need to be managed in the AI era, data governance is often required repeatedly over a long lifecycle to ensure data quality, and the AnalyticDB for MySQL strongly typed system can greatly ease the management burden.

Both support tiered storage of hot and cold data and TTL lifecycle management of data. The differences are that:

• Rockset stores all data on the OSS and additionally caches hot data on the SSD. AnalyticDB for MySQL, on the other hand, allows only cold data to be stored on the OSS and hot data to be placed directly on the SSD, thus reducing storage costs. AnalyticDB for MySQL supports a specified mixed storage policy. You can specify multiple closest partitions as hot partitions and historical partitions as cold partitions. Hot partitions are stored on [E]SSDs for online analysis and cold partitions are automatically archived to DFS to reduce costs. The archiving process is automatically performed in the backend without user intervention. For low-frequency cold data access, a cache is also provided to accelerate high-frequency access. Both hot and cold partitions are billed based on actual usage without incurring costs for allocated but unused disk space.

• Rockset can only eliminate data based on the _event_time field, while AnalyticDB for MySQL can flexibly specify any field as the partition key column and eliminate data at the partition level.

In AI scenarios such as financial time-series data prediction, body health monitoring, and network security protection, data is naturally defined as hot and cold. Cold data must be stored but is rarely accessed. In addition, the storage cost of massive data often accounts for the majority of the cost of data infrastructure. In this case, low-cost and reliable storage media and user-transparent automatic dump mechanism are essential capabilities, along with the need to ensure that hot data query performance is not reduced. Rockset can only manage the lifecycle based on time, while AnalyticDB for MySQL provides the ability to eliminate and dump data based on any column, which is more flexible to meet the needs of diverse AI business scenarios.

Both AnalyticDB for MySQL and Rockset provide vector retrieval capability and support integrated retrieval of the vector and data of basic data types.

The difference is that in addition to the vector indexes and inverted indexes, AnalyticDB for MySQL provides the full-text indexes specifically for text and functions such as custom tokenizers, custom dictionaries, and keyword highlighting to allow businesses to be flexibly adjusted in combination with the knowledge domain, providing more precise retrieval capabilities.

As mentioned earlier, high-quality retrieval is essential for the accuracy of AI Q&A models. AnalyticDB for MySQL not only provides vector and basic data type integrated retrieval capabilities comparable to Rockset, but also customizes token segmentation and dictionary configuration for texts in special fields such as security, technical investigation, and medical treatment, providing more accurate text retrieval results as model input for domain AI models.

The preceding section compares the key features of AnalyticDB for MySQL and Rockset, and the following section will describe in detail the design and implementation principles of AnalyticDB for MySQL.

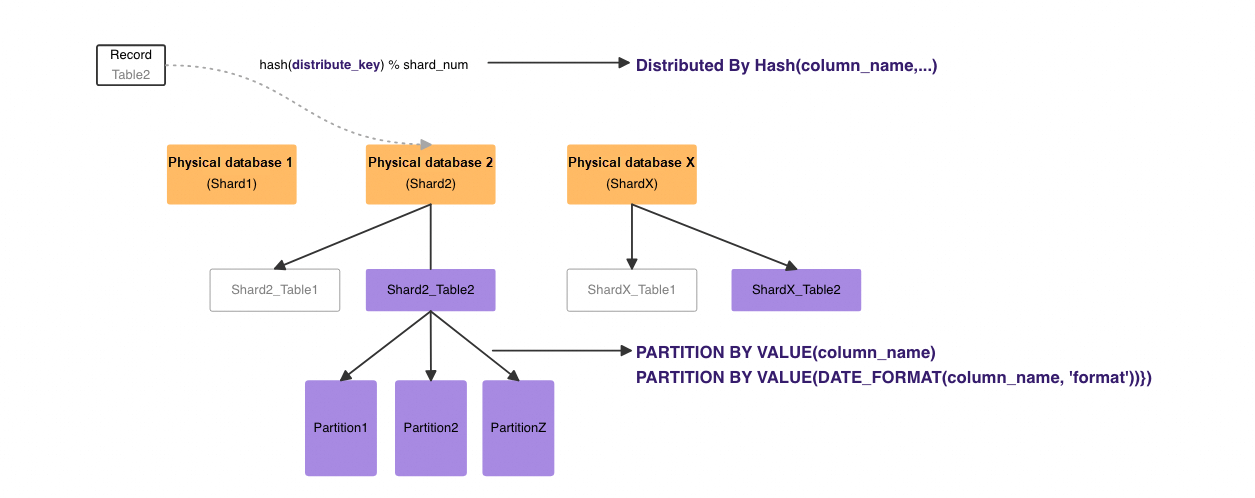

The data abstraction of the XUANWU engine is a distributed table, where the data is hashed to the corresponding shard (sub-table) based on the value of the distribution key, and is further partitioned based on the date (typical) within the shard. Concepts related to data distribution include databases, physical databases, tables, hash distribution, and partitions.

Here we only describe the macro data distribution method. Hash distribution and Partition by Value are used to distribute data across nodes of an instance and organize data by date. This method can meet the business requirements of ultra-large data volumes. For more information about table structure definitions, please refer to the official documentation.

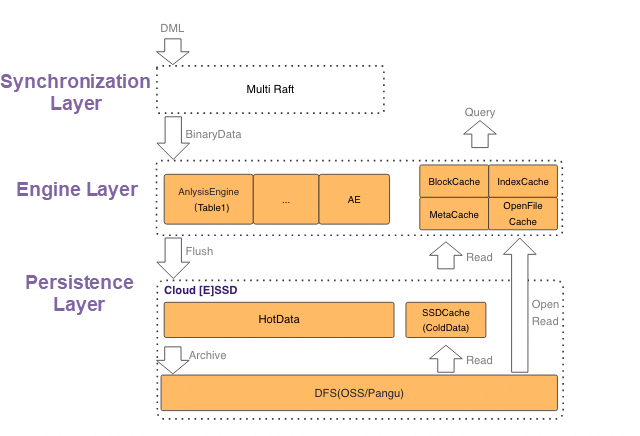

From a logical perspective, the XUANWU engine can be roughly divided into the synchronization layer, engine layer, and persistence layer from top to bottom.

The synchronization layer is based on the Raft protocol.

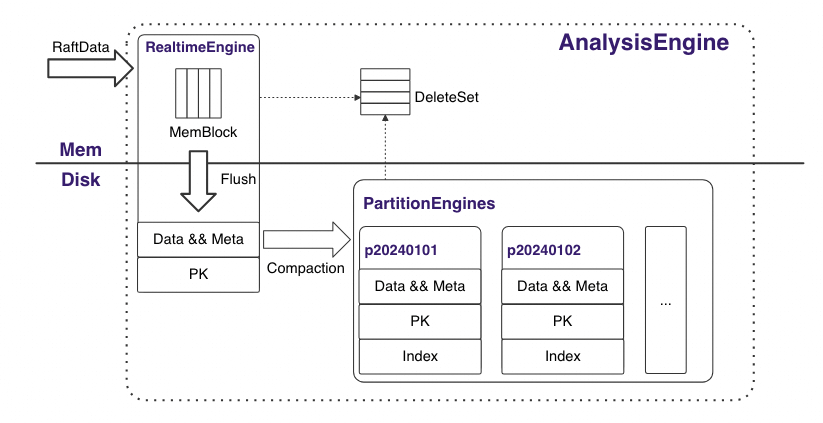

The overall table engine is based on the design of "append write" + "background merge modification" to reduce the random I/O overhead during the write process to improve the write performance. Complete DML and OnlineDDL are supported to enable the data management capability of databases in ultra-large datasets.

Due to the trade-off between performance and cost, the persistence layer has [E]SSDs with high performance and low latency, which are mainly used for hot data, and DFS options with high throughput and low cost, which are mainly used for cold data. In addition, user data is charged according to usage, without the need to intervene in the scale-out or scale-in operation of the disk.

When querying, each shard selects a leader to ensure strong consistency. When querying the table engine inside the shard, a lightweight snapshot is composed of the virtual row number and DeleteSet of the real-time engine. All data and deletions before the snapshot are visible, and data and deletions that are concurrently added after the snapshot are ignored. This ensures the semantics of row-level MVCC.

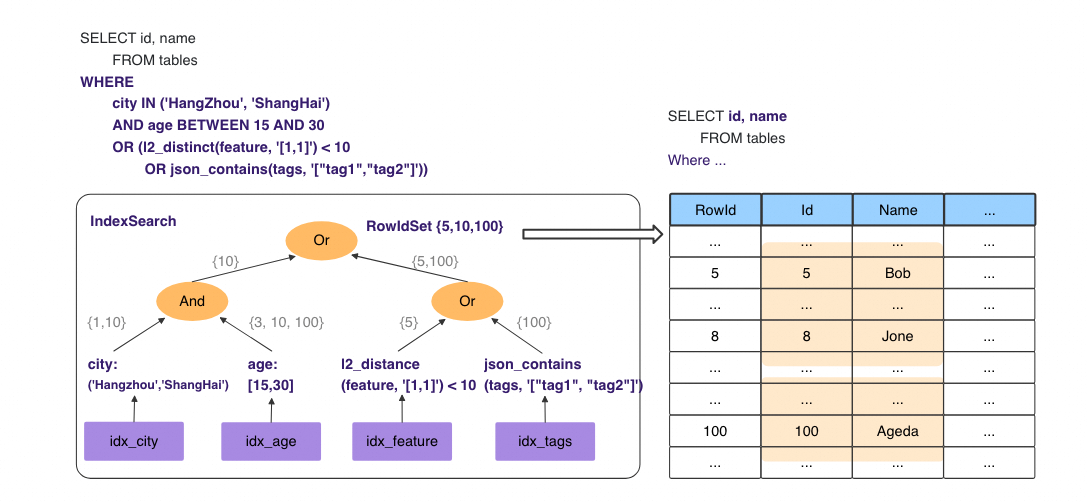

XUANWU engine is responsible for ScanFilterAndProject in queries. As the source of data, the input of the XUANWU engine is predicate conditions and projection columns with indexes, and the output is accurate projection column data that meets the predicate conditions. It pushes down projects and filters to storage based on column-oriented storage and indexes to minimize the amount of data involved in the calculation. In addition to common column pushdown, it also supports richer pushdown functions such as semi-structured data functions. The extreme near-storage pushdown provides an ultra-high-performance query experience.

The preceding figure shows a query example. The ScanFilterAndProject can be expressed as the SQL statement in the example. In this SQL statement, the XUANWU engine needs to match the results that meet the conditions of "Hangzhou" and "Shanghai" with age in the range of [15,30], or the results that the distance between the facial feature and the target feature is within a constant value or contain some labels at the same time, and finally retrieve the id and name that meet these conditions. XUANWU engine makes full use of indexes to accelerate the preceding queries, achieving extreme delayed materialization and minimizing I/O reads.

First, based on the column-level indexes, the row number set that meets each condition is obtained respectively, and then according to the composite condition of the query, the intersection and difference of the row number set is performed to obtain the final effective row set RowIdSet. XUANWU engine supports a wide variety of index types, including

Inverted indexes: The key of the index is the value of the column, and the value of the index is the virtual row number, which is more efficient for common filters. Compression based on ordered data can also reduce the space occupied by the index, such as the idx_city condition shown in the preceding figure.

BKD indexes: The tree structure is more advantageous than inverted indexes for range queries, thus being typically used for numeric and spatial data types, such as the idx_age condition shown in the preceding figure.

Bitmap indexes: Common indexes with a small number of Card values, such as gender and Null distribution.

Vector indexes: This type of index is used for face recognition and other vector analysis and supports the HNSW_PQ search algorithm and the SquaredL2 distance calculation formula, such as the idx_feature condition shown in the preceding figure, which also accordingly supports the pushdown of vector functions.

Full-text indexes: This type of index is used for full-text searches that support typical tokenizers such as IK, AliNLP, Ngram, and Standard and also supports custom dictionaries. The typical match against, phrase, and fuzzy full-text search functions are supported.

JSON indexes: This type of index is used to query data of the JSON type. It flattens nested JsonPath and automatically selects a specific index implementation based on its value type. It can optionally automatically flatten all nested JsonPaths or specify a JsonPath to reduce indexing overhead. In addition, for JSON arrays, the wildcard [*] is also supported, that is, the array elements are uniformly indexed, which is used to efficiently search for certain values in the array. For example, the idx_tags condition shown in the preceding figure, also accordingly supports the pushdown of JSON functions.

After obtaining the qualified RowIdSet, directly read the data of the required projection column (id,name) according to the virtual row number, and read the data by addressing the virtual row number, which requires the least I/O consumption.

This article compares AnalyticDB for MySQL with Rockset, and elaborates the design and implementation of AnalyticDB for MySQL. As a cloud-native data warehouse that has been developed by Alibaba Group and Alibaba Cloud for many years and has been verified by massive businesses, AnalyticDB for MySQL uses a storage-computing separation architecture and features strong consistency, real-time data write operations that have high throughput, full indexes of all types, flexible schemas, tiered storage of hot and cold data, and mixed load capabilities for high query concurrency and high-throughput batch processing. It provides a real-time data warehouse solution with extreme performance and high cost-effectiveness in the AI era.

Principle Interpretation of Serverless Technology of PolarDB for PostgreSQL

ApsaraDB - April 19, 2019

Alibaba Clouder - July 30, 2019

ApsaraDB - April 3, 2019

Alibaba Cloud New Products - August 10, 2020

Alibaba Clouder - September 28, 2020

ApsaraDB - October 21, 2020

AI Acceleration Solution

AI Acceleration Solution

Accelerate AI-driven business and AI model training and inference with Alibaba Cloud GPU technology

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More Managed Service for Grafana

Managed Service for Grafana

Managed Service for Grafana displays a large amount of data in real time to provide an overview of business and O&M monitoring.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by ApsaraDB

Start building with 50+ products and up to 12 months usage for Elastic Compute Service

Get Started for Free Get Started for Free