By Liang Geng (Suojiu)

Databases are an important part of the IT systems of modern enterprises. When a database cluster is created, resources such as CPU, memory, storage, and connections are usually provisioned conservatively so that the cluster runs smoothly even during peak hours. As a result, resources sit idle during off-peak hours and may still be insufficient during peak hours. To resolve this issue, PolarDB provides serverless clusters, which scale resources with your workloads and free you from complex resource evaluation and O&M.

This article describes the key techniques used to make the elasticity of PolarDB for PostgreSQL serverless clusters fast, accurate, stable, and wide-ranging.

To cope effectively with traffic bursts, serverless instances scale their specifications faster by using fewer task steps, lighter-weight configuration changes, and more intelligent elastic scaling policies.

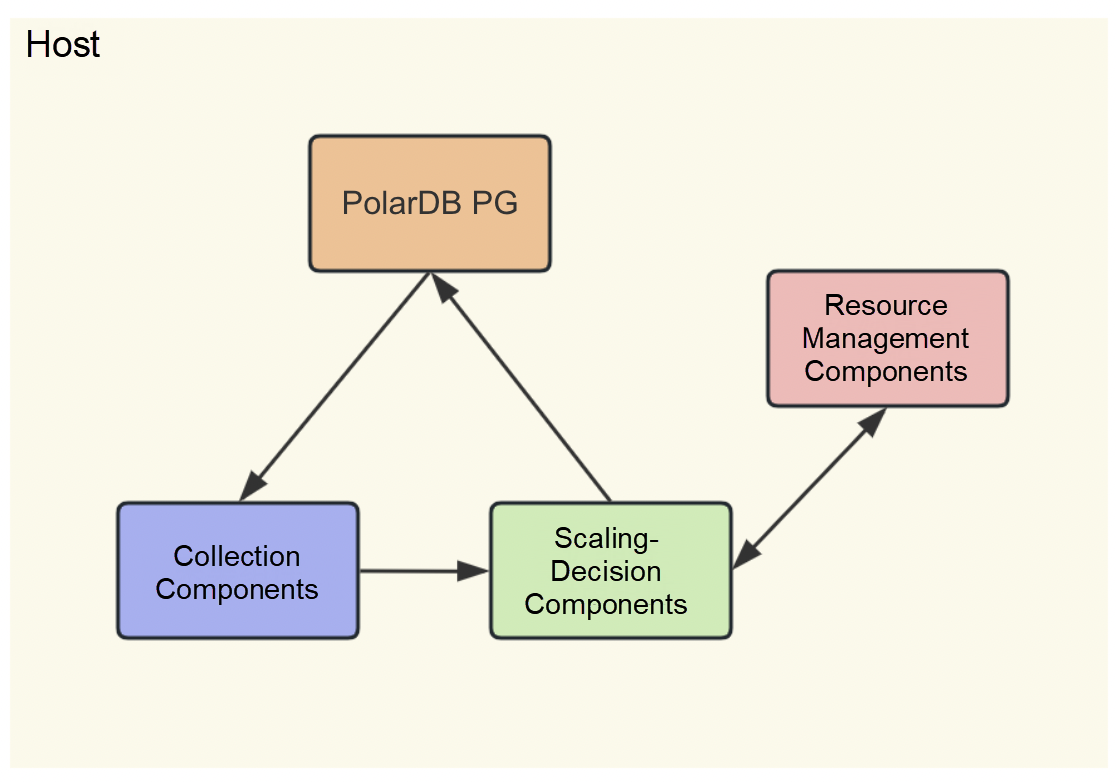

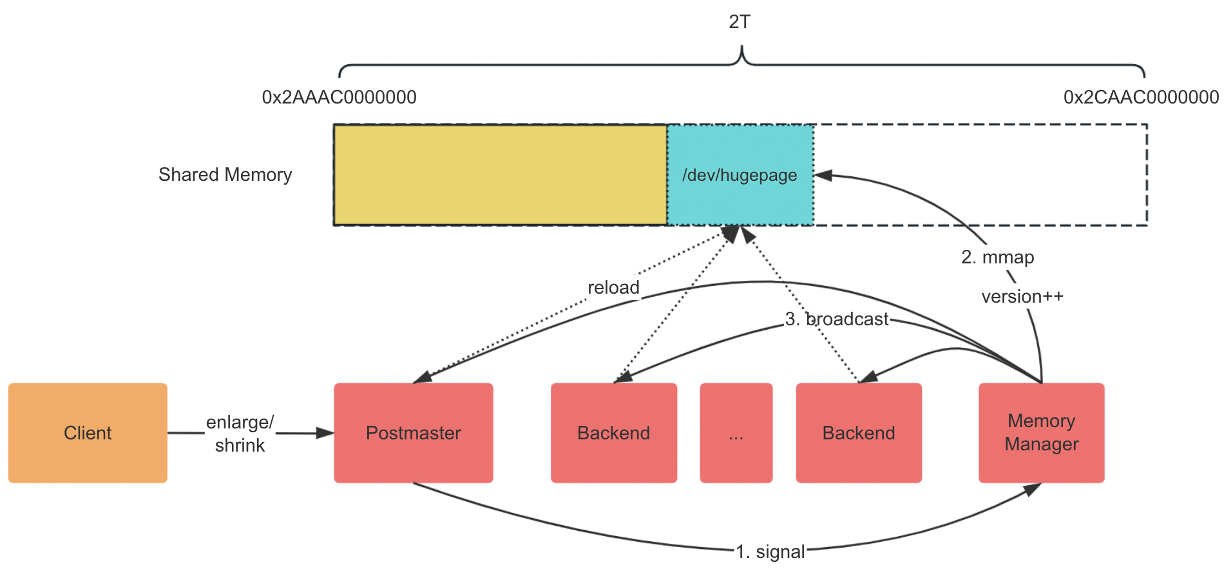

The overall serverless control process is as follows:

A collection component runs on the same host as the PolarDB database and gathers performance data from the database instance. When resource usage stays at or above a threshold, the collection component immediately notifies the scaling decision component, which adjusts the instance's cgroup settings and specifications based on the resources available on the local host. PolarDB uses the following two optimizations to make this process more efficient.

PolarDB uses a monitoring component to collect the resource usage of the nodes on a host in real time. When resource usage reaches the alert threshold, PolarDB first attempts to obtain resources on the current host. (PolarDB maintains reserved resource pools and resource management components on the hosts where serverless instances reside, and these can satisfy more than 90% of resource requests.)

Once the resources are allocated, the instance node immediately expands its kernel resources (such as the shared buffer), so the entire in-place scaling process completes within seconds.
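In the in-place path, the decision component ultimately turns a new specification into cgroup limits for the node. The sketch below shows one way such a translation could look, using the cgroup v2 `cpu.max` and `memory.max` interface files. The ratio of 1 PCU to 1 CPU core and 2 GiB of memory and the name `cgroup_limits` are illustrative assumptions, not documented PolarDB values, and the code only computes the strings rather than writing them under `/sys/fs/cgroup`.

```python
# Illustrative only: the PCU-to-resource ratio below is an assumption.
CPU_PERIOD_US = 100_000            # cgroup v2 default cpu.max period (100 ms)
MEM_PER_PCU_BYTES = 2 * 1024**3    # assumed: 1 PCU ~ 2 GiB of memory


def cgroup_limits(pcu: float) -> dict:
    """Compute cgroup v2 limit values for a node sized at `pcu` PCUs.

    Assumes 1 PCU ~ 1 CPU core, so the CPU quota per period scales linearly.
    """
    if pcu <= 0:
        raise ValueError("pcu must be positive")
    quota_us = int(round(pcu * CPU_PERIOD_US))   # CPU time allowed per period
    mem_bytes = int(pcu * MEM_PER_PCU_BYTES)     # hard memory limit in bytes
    return {
        "cpu.max": f"{quota_us} {CPU_PERIOD_US}",  # format: "<quota> <period>"
        "memory.max": str(mem_bytes),
    }


# Scaling a node in place from 1 PCU to 1.5 PCUs:
print(cgroup_limits(1.5))
```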

When the current host cannot satisfy the request, the instance node is immediately migrated to another host with sufficient resources. During migration, connection preservation technology moves database connections between nodes, which reduces the performance jitter seen by the business.
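The two paths above amount to a placement decision: take the extra capacity from the local reserved pool if possible, otherwise move the whole node to a host that can fit its new size. This is a minimal sketch under stated assumptions; `Host`, `place_scale_up`, and the "pick the host with the most headroom" policy are hypothetical, and connection preservation during migration is not modeled.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_pcu: float   # unallocated capacity in the host's reserved pool (hypothetical)


def place_scale_up(current: Host, current_pcu: float, target_pcu: float,
                   others: list) -> tuple:
    """Decide how to grow a node from current_pcu to target_pcu.

    Returns ("in_place", host), ("migrate", host), or ("reject", "").
    """
    delta = target_pcu - current_pcu
    # Preferred path: take only the extra capacity from the local pool.
    if current.free_pcu >= delta:
        current.free_pcu -= delta
        return ("in_place", current.name)
    # Fallback: the whole node moves, so the target host must fit target_pcu.
    candidates = [h for h in others if h.free_pcu >= target_pcu]
    if not candidates:
        return ("reject", "")
    target = max(candidates, key=lambda h: h.free_pcu)  # assumed policy: most headroom
    target.free_pcu -= target_pcu
    return ("migrate", target.name)
```

In the article's terms, the first branch covers the 90%+ of requests served by the local pool, and the second branch is the cross-host migration.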

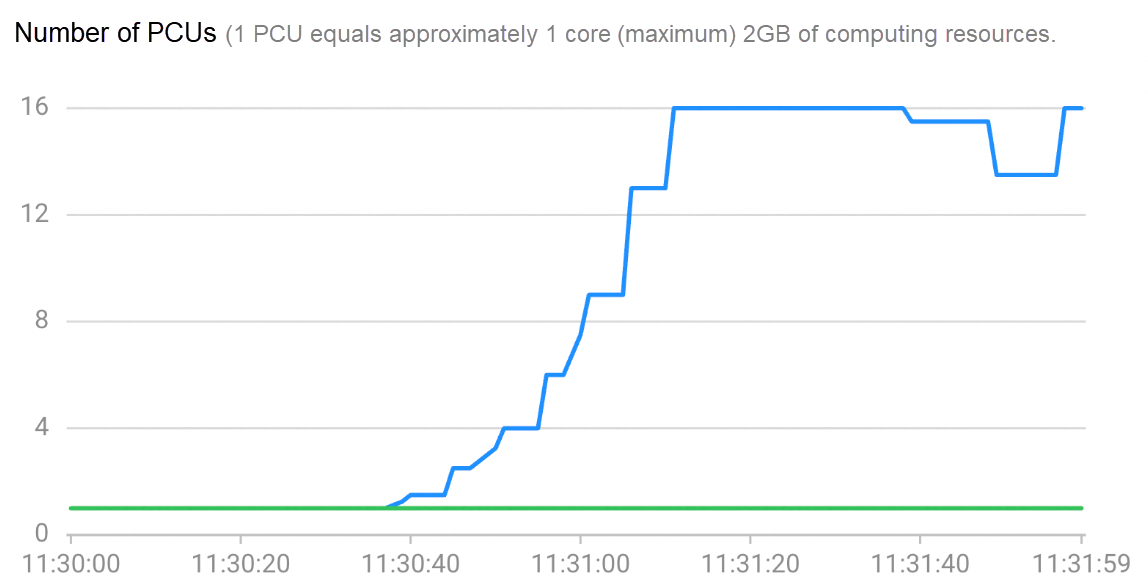

To cope with business traffic bursts, PolarDB for PostgreSQL serverless clusters adapt the scaling step size: the next step is determined by the current instance specifications and by how far the node's actual CPU utilization exceeds the preset target.

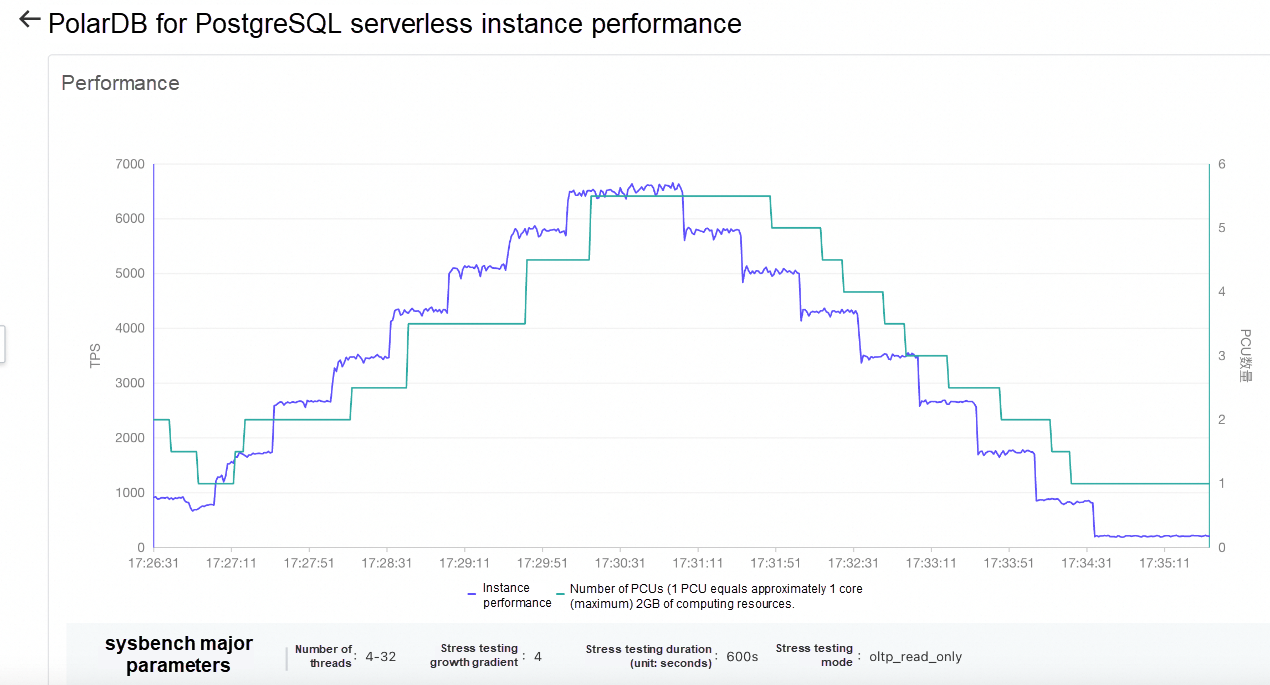

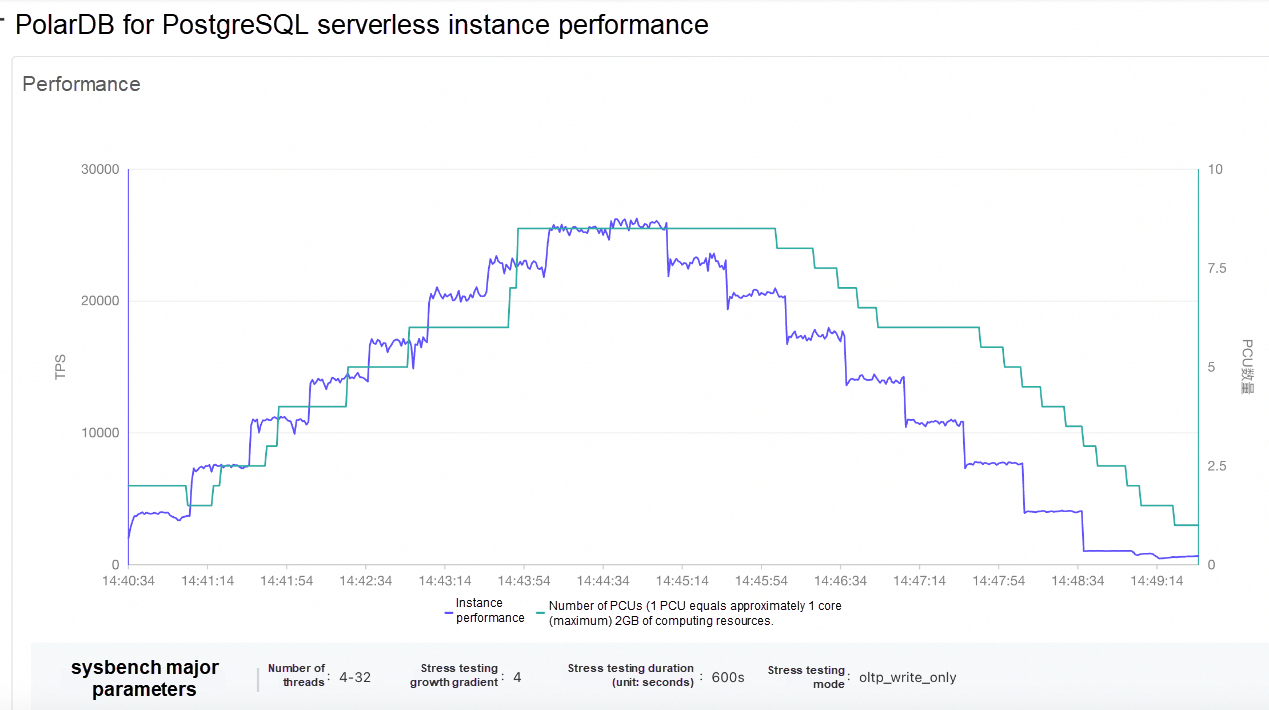

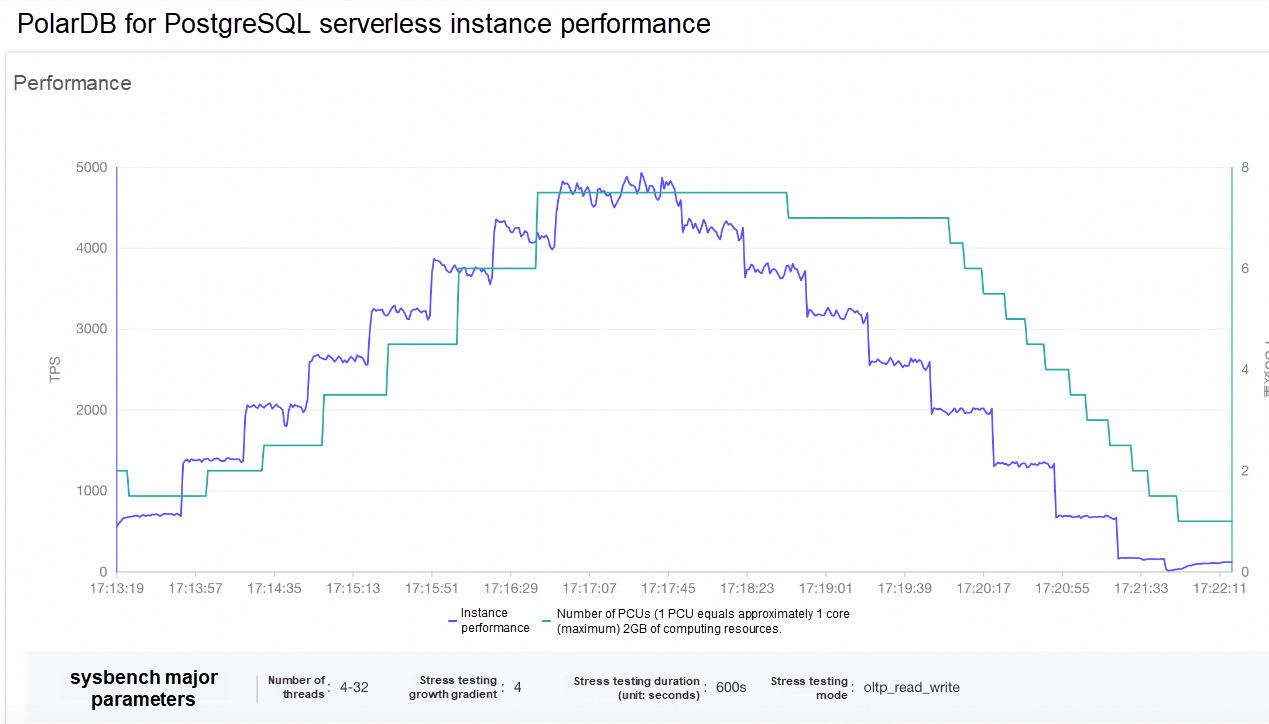

As shown in the preceding figures, a PolarDB for PostgreSQL serverless cluster can scale from the initial 1 PCU to the preset upper limit of 16 PCUs within 30 seconds. During this period, the granularity of a single scaling operation grows from 0.5 PCU to 4 PCUs.
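The step-size rule can be illustrated with a proportional sketch: compute how many PCUs would bring CPU utilization back to the target, round that to 0.5 PCU, and clamp a single step between 0.5 and 4 PCUs. Only the 0.5/4/16 PCU bounds come from the article; the 70% target, the proportional formula, and the name `next_pcu` are assumptions, not PolarDB's actual algorithm.

```python
import math

MIN_STEP_PCU = 0.5   # smallest single step quoted in the article
MAX_STEP_PCU = 4.0   # largest single step quoted in the article


def next_pcu(current_pcu: float, cpu_util: float,
             target_util: float = 0.7, max_pcu: float = 16.0) -> float:
    """Return the next specification for a node, given CPU utilization in [0, 1].

    target_util = 0.7 is a hypothetical target, not a documented PolarDB value.
    """
    if cpu_util <= target_util:
        return current_pcu
    # PCUs that would bring utilization back to target if the load stayed constant.
    needed = current_pcu * cpu_util / target_util
    step = needed - current_pcu
    # Round up to the 0.5 PCU granularity, then clamp the single step.
    step = math.ceil(step / MIN_STEP_PCU) * MIN_STEP_PCU
    step = min(max(step, MIN_STEP_PCU), MAX_STEP_PCU)
    return min(current_pcu + step, max_pcu)


# Simulate sustained 100% CPU starting from 1 PCU.
pcu, history = 1.0, [1.0]
while pcu < 16.0:
    pcu = next_pcu(pcu, cpu_util=1.0)
    history.append(pcu)
print(history)
```

Under these assumptions the specification goes 1, 1.5, 2.5, 4, 6, 9, 13, 16 PCUs: because the step is proportional to the current size, it starts at 0.5 PCU and grows until the 4 PCU cap applies.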

Compared with the previous fixed-specification mode, the serverless mode not only changes resource configurations faster but also accurately evaluates the resource usage of business workloads, freeing technical support staff from complex resource and cost planning. All costs are calculated from actual usage, which makes billing truly on-demand and pay-as-you-go.

PolarDB for PostgreSQL evaluates resources in multiple dimensions, such as CPU usage, memory usage, and kernel resource usage, to achieve more accurate elasticity. The evaluation strategy for these metrics differs between the scale-up and scale-down scenarios.

In the scale-up scenario, evaluation is triggered when any single metric meets its condition. In the scale-down scenario, evaluation is triggered only when multiple metrics meet their conditions. For CPU usage, PolarDB for PostgreSQL uses the target CPU utilization as a benchmark to regulate the granularity of each scaling operation, which makes elasticity both fast and accurate.

For example, the following basic conditions must be met for scaling (actual scaling decisions can be more complex, depending on how each node is used):

Single-node scale-up:

• When the CPU usage of a single node exceeds 85%, a resource scale-up is triggered for the node.

• When the memory usage of a single node exceeds 85%, a resource scale-up is triggered for the node.

Single-node scale-down:

• A resource scale-down is triggered for the node when CPU usage is lower than 55% and memory usage is lower than 40%.
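The asymmetry between the two scenarios (any one metric can trigger a scale-up, but all metrics must agree before a scale-down) can be expressed as a short decision function using the example thresholds listed above. The function name and the "hold" result are hypothetical, and real decisions also consider time windows and kernel metrics.

```python
def scale_decision(cpu: float, mem: float) -> str:
    """Apply the example single-node thresholds; cpu and mem are fractions in [0, 1]."""
    # Scale-up: a single metric over its threshold is enough (logical OR).
    if cpu > 0.85 or mem > 0.85:
        return "scale_up"
    # Scale-down: every metric must be below its threshold (logical AND).
    if cpu < 0.55 and mem < 0.40:
        return "scale_down"
    return "hold"
```

Requiring agreement for scale-down is the conservative choice: releasing resources too early would immediately hurt performance, while an unnecessary scale-up only costs money briefly.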

Independent elasticity is another important way for PolarDB for PostgreSQL serverless clusters to ensure accurate elasticity: the database proxy and the database nodes scale independently of each other, and each database node also scales independently of the others. By scaling each component separately, PolarDB for PostgreSQL closely matches resource usage to the business load.

In terms of resources, serverless clusters support both CPU and memory elasticity. However, native PostgreSQL cannot resize its buffer pool online, so the buffer pool cannot immediately use newly added memory.

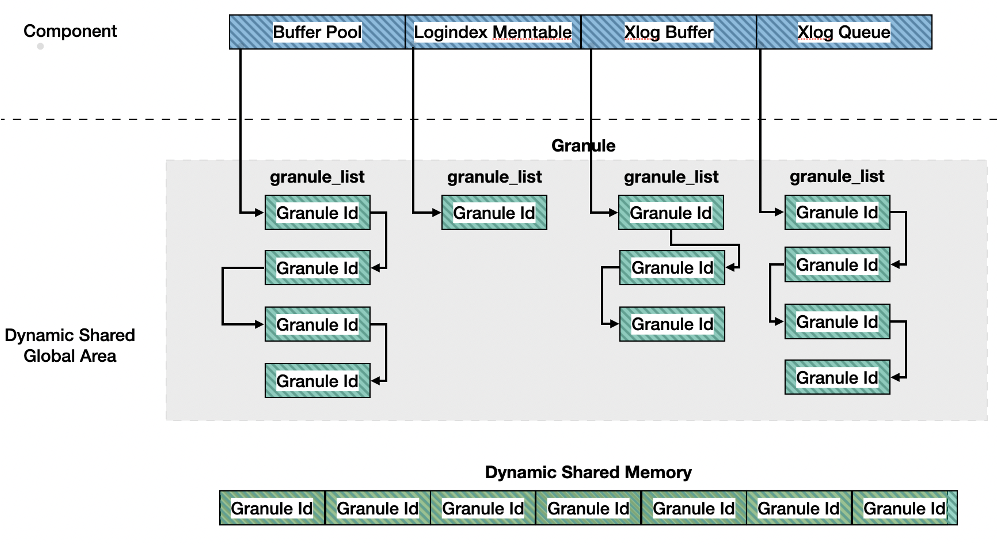

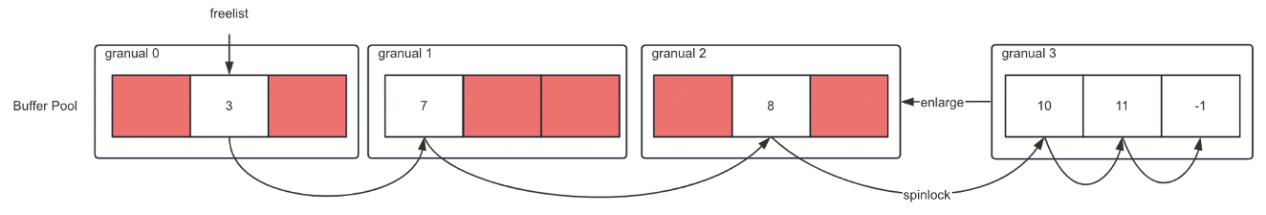

PolarDB for PostgreSQL builds on the PostgreSQL DSM (Dynamic Shared Memory) mechanism to provide an abstract dynamic memory management layer called DSGA (Dynamic Shared Global Area). As shown in the following figure, DSGA manages shared memory in units called granules and supports online scaling of the upper-layer shared memory components that carry business semantics, such as the buffer pool and the logindex memtable. Each shared memory component can be scaled independently.

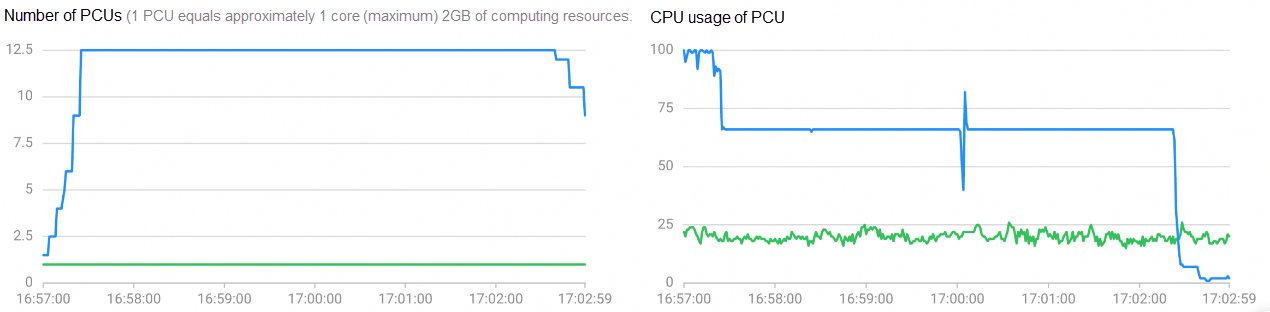

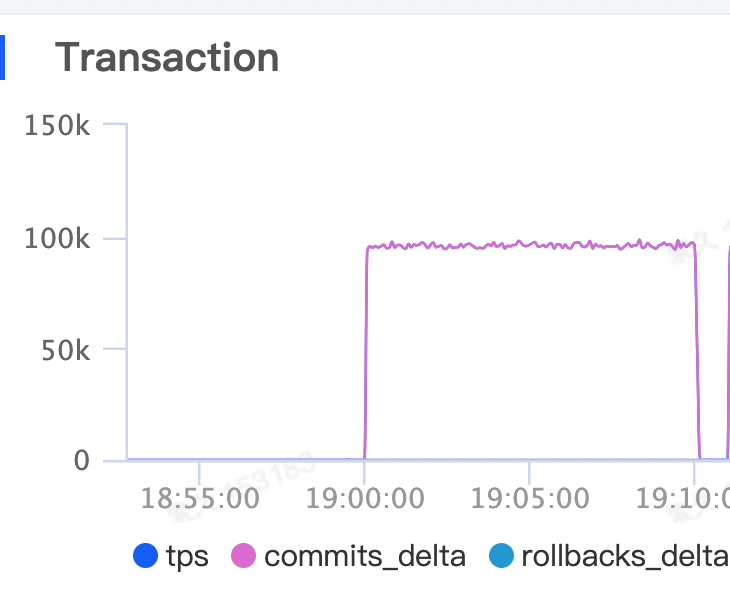
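To make the granule idea concrete, here is a toy model in which a shared area hands out fixed-size granules to named components, and each component grows or shrinks without touching the others. The class and method names and the 128 MB granule size are hypothetical; this is a sketch of the bookkeeping only, not the DSGA interface, and it allocates no real shared memory.

```python
class GranuleArea:
    """Toy granule-based shared area; class and method names are hypothetical."""

    def __init__(self, granule_mb: int, total_granules: int):
        self.granule_mb = granule_mb
        self.next_id = total_granules
        self.free = list(range(total_granules))  # ids of granules no component owns
        self.owned = {}                           # component name -> list of granule ids

    def add_granules(self, n: int) -> None:
        """The area itself grows, e.g. after the node's memory limit is raised."""
        self.free.extend(range(self.next_id, self.next_id + n))
        self.next_id += n

    def grow(self, component: str, n: int) -> None:
        """Give `component` n more granules from the free list."""
        if n > len(self.free):
            raise MemoryError("not enough free granules")
        granted = [self.free.pop() for _ in range(n)]
        self.owned.setdefault(component, []).extend(granted)

    def shrink(self, component: str, n: int) -> None:
        """Return n granules owned by `component` to the free list."""
        held = self.owned.get(component, [])
        if n > len(held):
            raise ValueError("component does not hold that many granules")
        for _ in range(n):
            self.free.append(held.pop())

    def size_mb(self, component: str) -> int:
        return len(self.owned.get(component, [])) * self.granule_mb


area = GranuleArea(granule_mb=128, total_granules=8)
area.grow("buffer_pool", 5)
area.grow("logindex_memtable", 2)
area.shrink("buffer_pool", 2)   # logindex_memtable is unaffected
```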

The most important feature of serverless instances is that resource usage follows changes in business load, with business traffic acting as the trigger for elasticity. During traffic bursts, PolarDB for PostgreSQL serverless clusters aim to scale stably within seconds. When business traffic is unstable, they aim to keep instance performance stable.

During elasticity decisions, PolarDB for PostgreSQL evaluates monitoring metrics over a time window. The decision algorithm identifies business traffic patterns and decides whether to scale up or scale down. For example, when otherwise stable business traffic experiences momentary jitter, the serverless cluster keeps its original specifications to ensure stable instance performance.

As shown in the preceding figure, the number of PCUs increases rapidly when business pressure drives the CPU utilization of a serverless instance to full. During this period, CPU utilization fluctuates because the business load is unstable. PolarDB for PostgreSQL serverless clusters recognize this jitter from historical business usage patterns and keep the current specifications. When CPU utilization stays low, the serverless instance can trigger a scale-down within 10 seconds.
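A simple way to illustrate window-based jitter filtering is to keep the last N CPU samples and act only on patterns, not single points: scale up when a majority of the window is hot, and scale down only when the whole window is cold. The sketch assumes one sample per second, so a 10-sample window roughly matches the 10-second scale-down mentioned above; the class name, the 60% ratio, and the thresholds are hypothetical, and PolarDB's actual algorithm also uses historical usage patterns.

```python
from collections import deque


class WindowedDecider:
    """Hypothetical jitter filter; window size, thresholds, and ratio are assumptions."""

    def __init__(self, window: int = 10, up: float = 0.85,
                 down: float = 0.55, up_ratio: float = 0.6):
        self.samples = deque(maxlen=window)   # most recent CPU samples (fractions)
        self.up, self.down, self.up_ratio = up, down, up_ratio

    def observe(self, cpu: float) -> str:
        self.samples.append(cpu)
        if len(self.samples) < self.samples.maxlen:
            return "hold"                      # not enough history yet
        high = sum(1 for s in self.samples if s > self.up)
        if high >= self.up_ratio * len(self.samples):
            return "scale_up"                  # sustained pressure, not a blip
        if all(s < self.down for s in self.samples):
            return "scale_down"                # load stayed low for the whole window
        return "hold"                          # isolated spikes keep the current size


d = WindowedDecider()
results = [d.observe(0.5) for _ in range(9)] + [d.observe(0.99)]  # a single spike
```

A single 99% sample does not trigger a scale-up, and it also blocks a scale-down until it has left the window.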

Scaling each component of the kernel shared memory also poses two challenges: scaling must take effect promptly, and its impact on performance during scaling must be controlled.

In terms of timeliness, the hierarchical design means that scaling follows a two-phase awareness protocol. During scale-up, the DSGA layer scales up first and then notifies each component that uses shared memory to scale up. During scale-down, the DSGA layer first notifies the components to scale down and then releases the memory. To improve timeliness, the two phases are merged into a single step within a single process, and cross-process awareness combines notification with polling. This improves awareness efficiency and ensures that the overall scale-up completes within seconds.

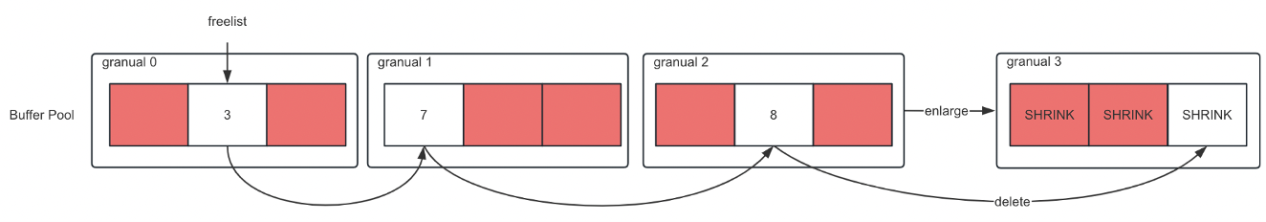
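The ordering of the two-phase protocol can be modeled in a few lines: on scale-up, the shared area grows before anyone is notified; on scale-down, memory is released only after every backend has acknowledged the new size at a safe point. This is a single-threaded model under stated assumptions. `ResizeCoordinator`, `Backend`, the generation counter, and `try_release` are hypothetical names, and the single-process merging of phases is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Backend:
    name: str
    seen_gen: int = 0        # last resize generation this backend applied
    local_size_mb: int = 0   # size the backend currently believes is in effect


@dataclass
class ResizeCoordinator:
    """Hypothetical model of two-phase awareness; not PolarDB's real interface."""
    mapped_mb: int
    target_mb: int = 0
    generation: int = 0
    backends: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def request(self, new_mb: int) -> None:
        if new_mb > self.mapped_mb:
            # Scale-up, phase 1: the shared area grows before anyone is told.
            self.mapped_mb = new_mb
            self.log.append(f"mapped {new_mb} MB")
        self.target_mb = new_mb
        self.generation += 1                   # notification: bump the generation
        self.log.append(f"notify gen {self.generation}")

    def poll(self, b: Backend) -> None:
        """Called by each backend at a safe point (the polling half of notify+poll)."""
        if b.seen_gen < self.generation:
            b.local_size_mb = self.target_mb
            b.seen_gen = self.generation

    def try_release(self) -> bool:
        """Scale-down, phase 2: unmap only after every backend has acknowledged."""
        if self.target_mb < self.mapped_mb and all(
                b.seen_gen == self.generation for b in self.backends):
            self.mapped_mb = self.target_mb
            self.log.append(f"released down to {self.target_mb} MB")
            return True
        return False
```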

In terms of performance, the lock-holding granularity of the buffer pool is kept small to reduce the impact of dynamic buffer pool scaling:

• During scale-up, the NBuffers value is reloaded only in code paths that do not traverse all buffers. When new buffers are added, a spinlock is held only while they are linked into the freelist.

• During scale-down, all buffers in the granule to be removed are first set to the SHRINK state so that they will not be reused once their buffer locks are released. Then the dirty pages that are no longer in use are flushed, and finally the freelist entries are recycled. Throughout the process, only the buffers of that granule (granule 3 in the figure) need to be locked.
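The buffer-pool steps above can be sketched as a toy pool in which a `threading.Lock` stands in for the freelist spinlock. Scale-up builds the new buffers outside the lock and holds it only to splice them in; scale-down marks the granule's buffers SHRINK, flushes dirty ones, and then removes them from the freelist. The class, the buffer states, and the "retry later if a buffer is still pinned" rule are assumptions for illustration, not PolarDB's implementation.

```python
import threading
from dataclasses import dataclass


@dataclass
class Buf:
    granule: int
    state: str = "FREE"     # hypothetical states: FREE, USED, SHRINK
    dirty: bool = False
    pinned: bool = False


class ToyBufferPool:
    def __init__(self, per_granule: int):
        self.per_granule = per_granule
        self.bufs = {}                          # buffer id -> Buf
        self.freelist = []                      # ids of buffers available for reuse
        self.freelist_lock = threading.Lock()   # stands in for the freelist spinlock
        self.next_id = 0

    def add_granule(self, g: int) -> None:
        """Scale-up: build the new buffers, then hold the lock only to splice them in."""
        new_ids = list(range(self.next_id, self.next_id + self.per_granule))
        self.next_id += self.per_granule
        for i in new_ids:
            self.bufs[i] = Buf(granule=g)
        with self.freelist_lock:
            self.freelist.extend(new_ids)

    def get_free_buffer(self):
        with self.freelist_lock:
            while self.freelist:
                i = self.freelist.pop(0)
                if self.bufs[i].state != "SHRINK":   # never hand out a shrinking buffer
                    self.bufs[i].state = "USED"
                    return i
        return None

    def shrink_granule(self, g: int, flush) -> bool:
        """Scale-down of one granule; returns False if the caller must retry later."""
        victims = [i for i, b in self.bufs.items() if b.granule == g]
        for i in victims:                       # step 1: block reuse
            self.bufs[i].state = "SHRINK"
        if any(self.bufs[i].pinned for i in victims):
            return False                        # still in use; retry later (assumption)
        for i in victims:                       # step 2: write back dirty pages
            if self.bufs[i].dirty:
                flush(i)
                self.bufs[i].dirty = False
        with self.freelist_lock:                # step 3: recycle freelist entries
            self.freelist = [i for i in self.freelist if self.bufs[i].granule != g]
        for i in victims:
            del self.bufs[i]
        return True
```

Only the buffers of the granule being removed are touched, so backends working on other granules are unaffected apart from the brief freelist lock.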

In an overall test on an 8 PCU instance, pgbench stress testing was run across 20 consecutive scaling operations. The performance curve remained smooth, indicating no noticeable impact on performance.

In addition to vertical scaling, PolarDB for PostgreSQL serverless clusters support horizontal scaling of read-only (RO) nodes, making full use of PolarDB's write-once-read-many capability to achieve better linear scaling of reads.

Horizontal scale-up: Take as an example a serverless instance whose cluster initially has one primary node. The maximum number of PCUs is set to 32, and a range for automatic horizontal scaling of read-only nodes is configured. For example, the range of read-only nodes is set to 1 to 4, and a maximum of seven read-only nodes can be scaled.

When the cluster is accessed through the cluster endpoint, the primary node scales independently up to the maximum of 32 PCUs as access pressure increases. If further scale-up is needed, a horizontal scaling event is triggered and a new read-only node is added. The new read-only node also scales independently; when it reaches the maximum of 32 PCUs, another horizontal scaling event is triggered. Because each node scales independently and several nodes may decide to add a read-only node at the same time, a silencing mechanism prevents read-only nodes from being added too frequently within a short period.
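The silencing mechanism behaves like a cooldown: a request to add a read-only node is honored only if the requesting node has reached its vertical limit, the configured node limit has not been reached, and no node was added recently. The sketch below is hypothetical; the cooldown length, the class name, and the use of a single shared timestamp are assumptions.

```python
class HorizontalScaler:
    """Hypothetical sketch: limits and cooldown length are assumptions."""

    def __init__(self, max_node_pcu: float, max_ro_nodes: int, cooldown_s: float):
        self.max_node_pcu = max_node_pcu
        self.max_ro_nodes = max_ro_nodes
        self.cooldown_s = cooldown_s
        self.ro_nodes = 0
        self.last_added_at = None   # timestamp of the last read-only node addition

    def request_read_only_node(self, node_pcu: float, now: float) -> bool:
        """Called by a node that wants more capacity; returns True if a node is added."""
        if node_pcu < self.max_node_pcu:
            return False            # the node can still scale vertically
        if self.ro_nodes >= self.max_ro_nodes:
            return False            # configured horizontal limit reached
        if self.last_added_at is not None and now - self.last_added_at < self.cooldown_s:
            return False            # silenced: a node was added very recently
        self.ro_nodes += 1
        self.last_added_at = now
        return True


s = HorizontalScaler(max_node_pcu=32, max_ro_nodes=2, cooldown_s=60.0)
```

If two saturated nodes ask at almost the same moment, the second request falls inside the cooldown and is dropped instead of adding a redundant node.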

Horizontal scale-down: We monitor the load of each read-only node in the serverless cluster over a specified time window. If a read-only node's load stays low throughout that window, the system automatically deletes the node to scale the cluster in horizontally and reduce costs.
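Scale-in selection can be sketched as: a read-only node is a candidate only if its load stayed below a threshold for every sample in the window, candidates are removed least-loaded first, and the cluster never drops below its configured minimum. The 20% threshold, the `min_ro` parameter, and the function name are hypothetical.

```python
def nodes_to_remove(load_history: dict, low: float = 0.2, min_ro: int = 1) -> list:
    """Pick read-only nodes whose load stayed below `low` for the whole window.

    load_history maps node name -> load samples (0..1) from the latest window.
    `low` and `min_ro` are hypothetical parameters.
    """
    # A single busy sample disqualifies a node, so brief dips do not trigger removal.
    idle = [n for n, samples in load_history.items() if samples and max(samples) < low]
    # Least-loaded nodes go first.
    idle.sort(key=lambda n: sum(load_history[n]) / len(load_history[n]))
    removable = max(0, len(load_history) - min_ro)   # never go below the minimum
    return idle[:removable]


window = {"ro1": [0.5, 0.6], "ro2": [0.05, 0.1], "ro3": [0.1, 0.15]}
print(nodes_to_remove(window))
```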
