By Mengshi

This article attempts to answer the question of why table groups are important for distributed databases.

You can refer to the previous three articles:

In the Interpretation of PolarDB-X Data Distribution (3): TPC-C and Transparent Distribution, we mentioned that for distributed databases, the core of transparency lies in global indexes, and the key to global indexes lies in the performance of distributed transactions. Many distributed databases such as PolarDB-X have optimized the performance of distributed transactions to meet the maintenance requirements of global indexes.

Then, the questions are: is transparency the optimal solution for all scenarios (especially those that require high performance and the most resources)? Is manual partitioning valuable?

First, we need to compare distributed transactions and standalone transactions.

Distributed transactions have been studied for decades.

Many distributed transaction schemes can achieve good performance and functions under some benchmark conditions. However, in common scenarios (in other words, without special optimization for the business, whether in terms of transaction usage or business model), there is still an insurmountable gap in cost (or performance) between distributed transactions and standalone transactions.

We have done some tests and this gap is at least 3 times. For the same product database A, we compared its standalone transaction performance with its distributed transaction performance. Of course, this prerequisite is that database A has optimized its standalone transactions.

For all databases, including Google Spanner, TiDB, CockroachDB, ApsaraDB for OceanBase, and PolarDB-X, we can see this gap in actual tests, with the best being 3 times and some even exceeding 7 or 8 times.

In fact, this is the result as expected.

We can know the obvious performance differences from the costs such as increased response time (RT) caused by cross-core and cross-CPU, not to mention the distributed transactions communicated by network cable rather than buses. Distributed transactions definitely cost more. The database is similar to a typical cost center, so saving cost is the most important compared to generating profits.

Therefore, we can see that for a highly concurrent trading system, the optimized database-sharding and table-sharding middleware + standalone database must achieve maximum performance, which is somewhat strange. Since it forces the business to kill distributed transactions and optimize all operations into standalone transactions. Of course, it must be inconvenient to use.

Therefore, can distributed databases achieve maximum performance while improving ease of use and reducing usage costs?

To achieve this goal, distributed databases are required to eliminate distributed transactions.

First, we will introduce a tool Table Group.

Table groups are a concept that is not available in standalone MySQL.

As a distributed database compatible with standalone MySQL, introducing a new concept represents an additional learning cost, but we still introduce this concept in PolarDB-X. Why? Let's first understand what problems it is used to solve.

Consider the following scenarios:

There are two tables: the orders table and the order_details table. If an order contains 10 items, one record will be inserted into the orders table and 10 records will be inserted into the order_details table. For most e-commerce systems, the core query dimension of orders is the buyer. Therefore, both tables use the buyer ID (buyer_id) as the partition key, as follows:

create table orders(

order_id bigint auto_increment primary key,

buyer_id bigint,

...

) partition by hash(buyer_id);

create table order_details(

order_detail_id bigint auto_increment primary key,

order_id bigint,

buyer_id bigint,

...

) partition by hash(buyer_id);For an order operation, it is a transaction as follows (assuming buyer_id=88):

begin;

insert into orders (order_id,buyer_id, ...) values (null,88, ... );

select last_insert_id(); ## Obtain the generated order ID

insert into order_details (order_detail_id, order_id, buyer_id, ...) values (null, ${order_id}, 88, ...);

insert into order_details (order_detail_id, order_id, buyer_id, ...) values (null, ${order_id}, 88, ...);

insert into order_details (order_detail_id, order_id, buyer_id, ...) values (null, ${order_id}, 88, ...);

...

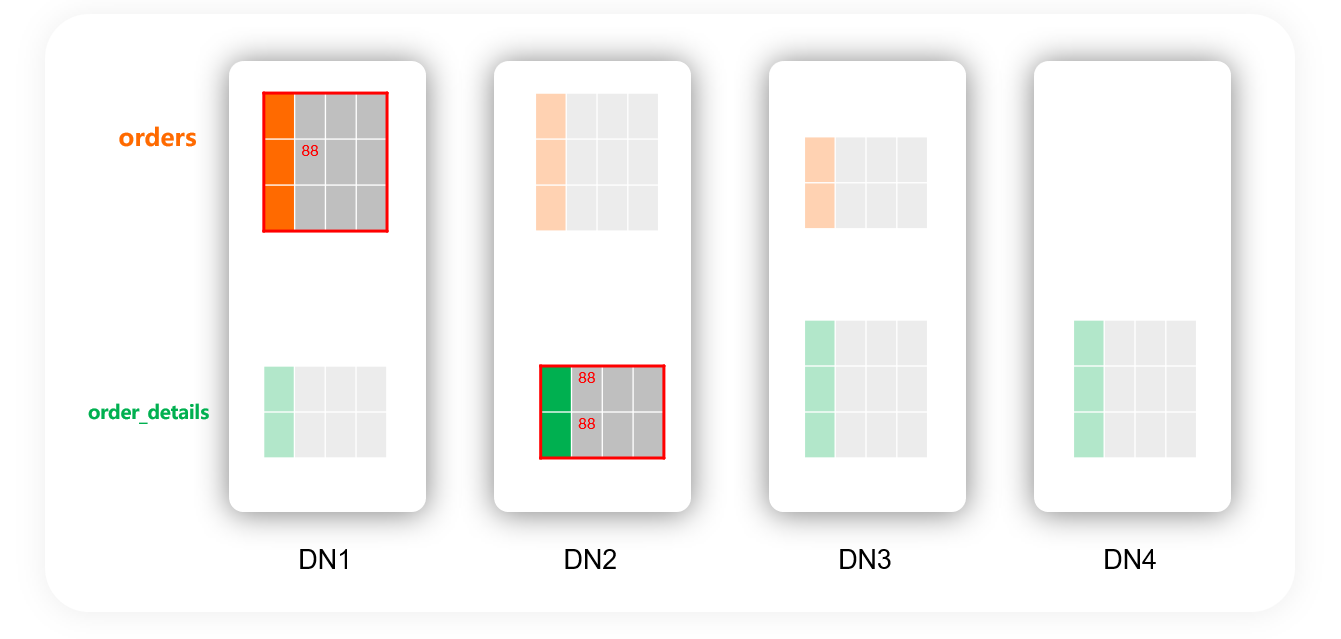

commit;If the partitions of these two tables are distributed in this way, it must be a distributed transaction:

In the maximum performance solution (database-sharding and table-sharding middleware + standalone database), the orders table where the order with buyer_id=88 is located and the order_details table must be placed in the same database, thus making this transaction a standalone transaction.

At the same time, we hope that this transaction will always be a standalone transaction. It means that:

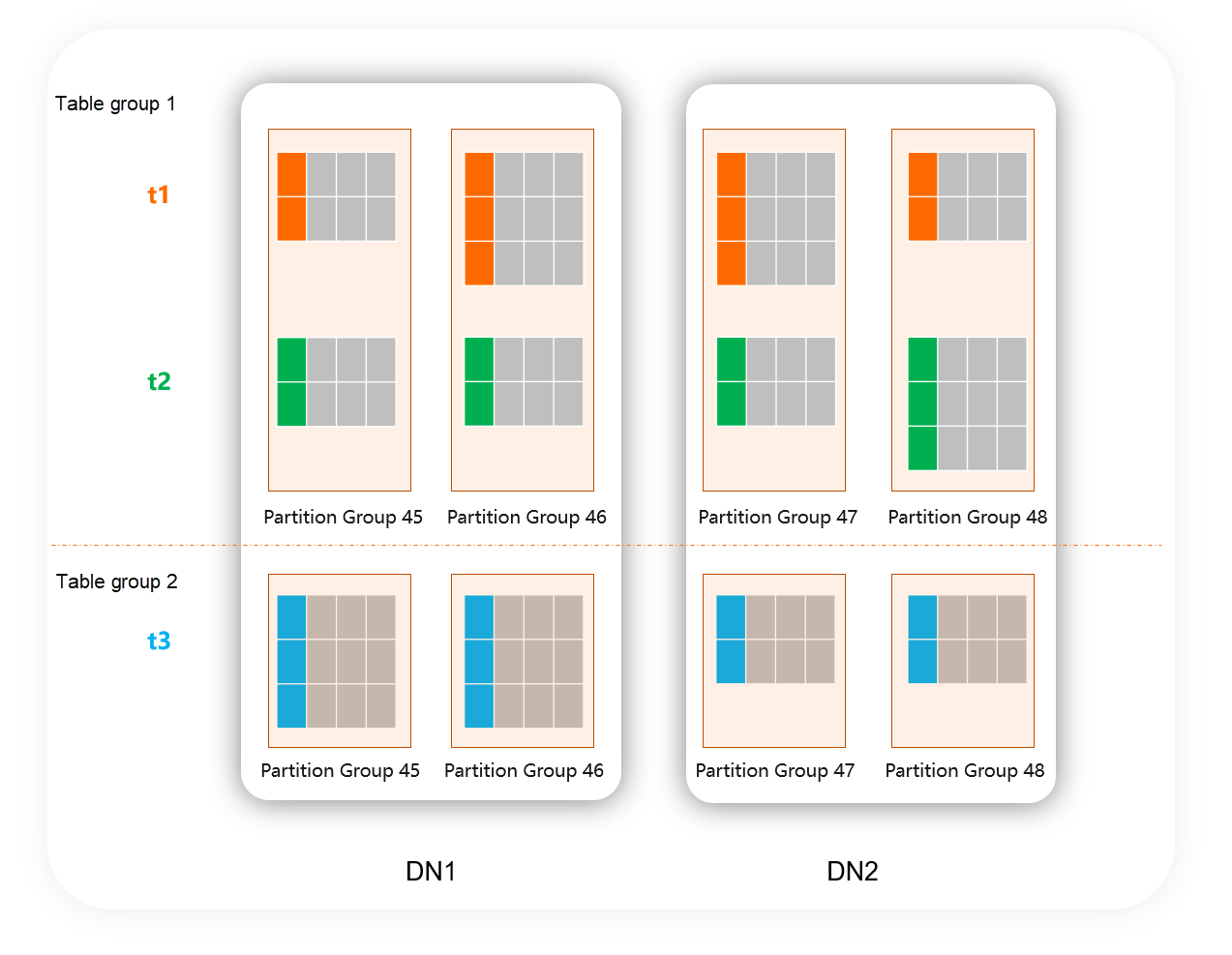

Therefore, we need to bind these two tables. This means that the partitioning algorithm, number of partitions, and partitioning of the two tables must be the same at all times. At the same time, partitions with the same buyer_id must be scheduled on the same machine at all times.

This binding relationship is referred to as a table group:

A distributed database can eliminate distributed transactions only if it has table groups (of course, it can also be called by other names. For example, in Spanner, interleaved tables achieve a similar effect). For example, this capability is not supported by TiDB and CockroachDB, so all their transactions are distributed transactions. Even if you are willing to design a reasonable partition key, the data cannot be routed together.

Besides, you can find that none of the open-source SQL + distributed KV databases on the market can provide such capabilities. It seems that they have a clear structure and clear layering, but when you want to further improve performance, they can provide particularly few optimization tools. They may not meet the expectations of database developers and users at the same time.

However, based on table groups, PolarDB-X provides a series of capabilities, allowing applications to enjoy the convenience of distributed databases and have a performance close to that of the middleware scheme at the same time.

In addition to providing a means to eliminate distributed transactions, table groups have another additional benefit: the pushdown of Join.

Consider the following SQL:

select *

from orders join order_details

on orders.order_id=order_details.order_id and

orders.buyer_id=order_details.buyer_id

where orders.order_id=xxx and

orders.buyer_id=xxxThe business is to find all the information of this order according to the order ID, including the item list information of the order.

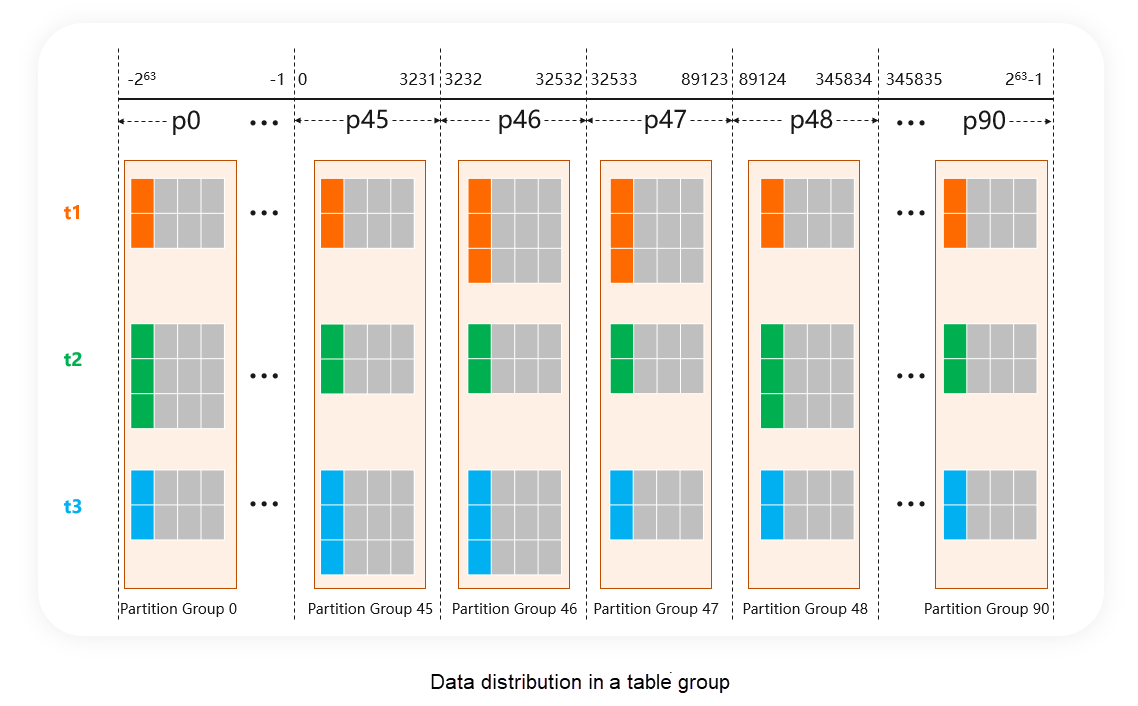

The condition for Join requires that the partition keys (buyer_id) of two tables be equal. If orders and order_details are in the same table group, the Join operation only occurs in the same partition group (partitions with the same number or aligned within a table group are referred to as a partition group). The partition groups must be on the same DN, which means that the Join operation can be completed by the DN. This is the pushdown of Join.

Since data is not transmitted to CN, there is no doubt that the pushdown execution strategy is better than the non-pushdown execution strategy for the same Join statement.

At the same time, the PoalrDB-X DN originated from MySQL and has strong computing power (including transactions, expression calculation, Filter, and JOIN). Table groups enable more SQL statements to push down calculations to DN, so PolarDB-X can further approach maximum performance.

With the concept of table groups, there is a new problem.

Take the orders table and the order_details table as an example. If the table group is not specified in the table creation statement, will they be divided into the same table group? In other words, if a user does not know the table group, can the distributed database automatically avoid distributed transactions in order operations?

The two corresponding routes are as follows:

For example, ApsaraDB for OceanBase uses Scheme 1. In other words, in the example of orders and order_details, although both are the same order with buyer_id=88, this transaction will still be a distributed transaction, unless you manually create a table group for orders and order_details.

According to the PolarDB-X design principle, Scheme 1 will raise the threshold for use because users must understand the concept of table groups and design table groups for each table to eliminate distributed transactions. It is not transparent enough, or its threshold should be further lowered. We believe that table groups should be an optional item for optimization instead of a required one that you must understand.

Therefore, PolarDB-X chooses Scheme 2 which automatically identifies and divides multiple tables into the same table group as much as possible.

In the preceding example, even if the user does not know the concept of table groups, nor specify table groups when creating a table, PolarDB-X will still divide orders and order_details into the same table group to eliminate distributed transactions in order operations. The operation on the data of the same user in the business is a standalone transaction, and this behavior is also more intuitive.

Table groups have a certain cost, which is mainly reflected in the load balancing.

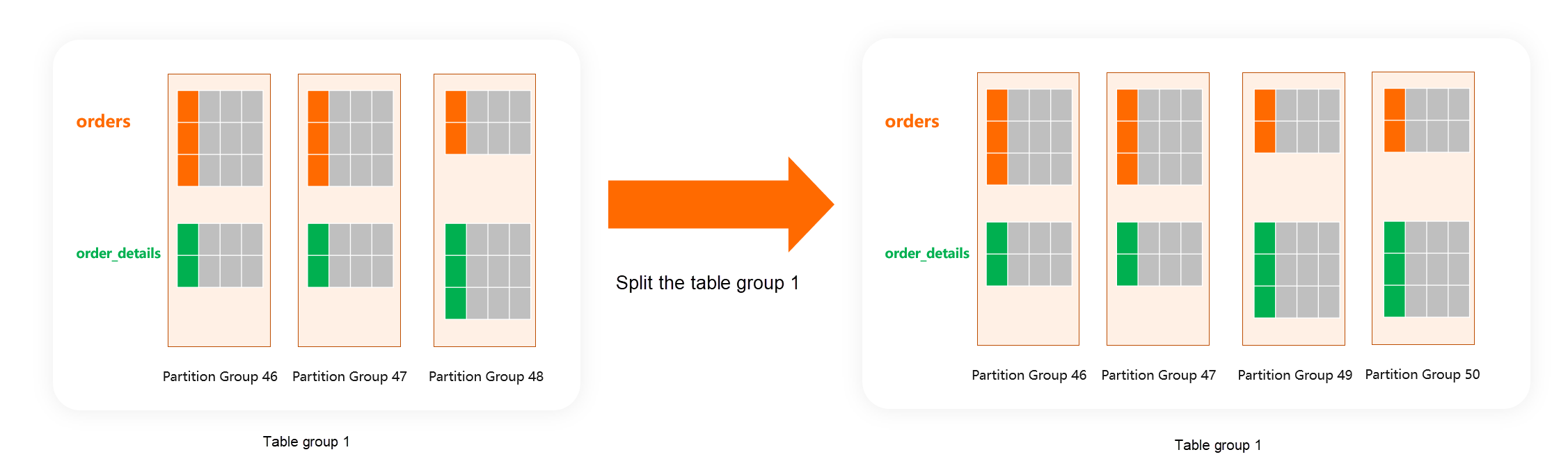

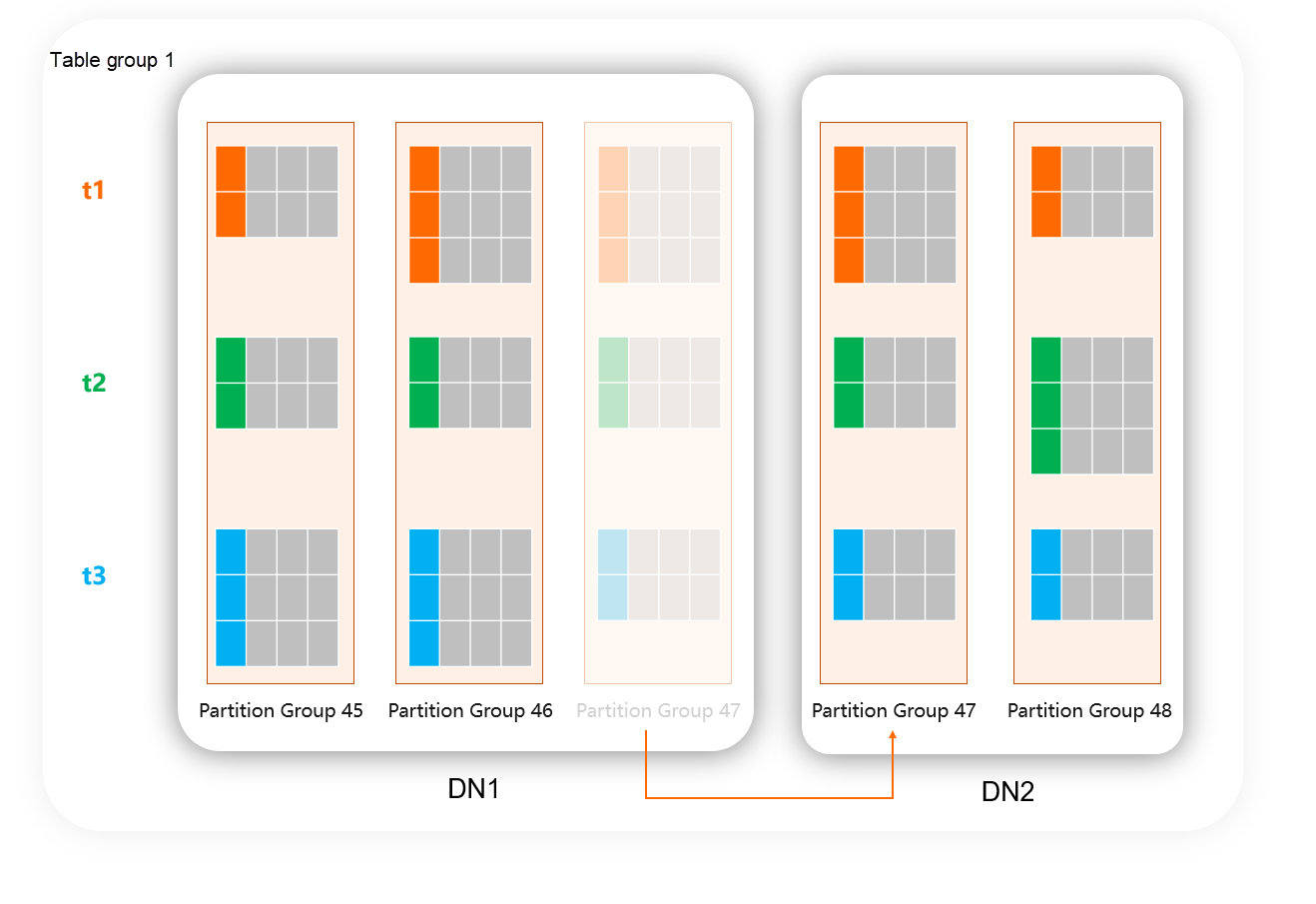

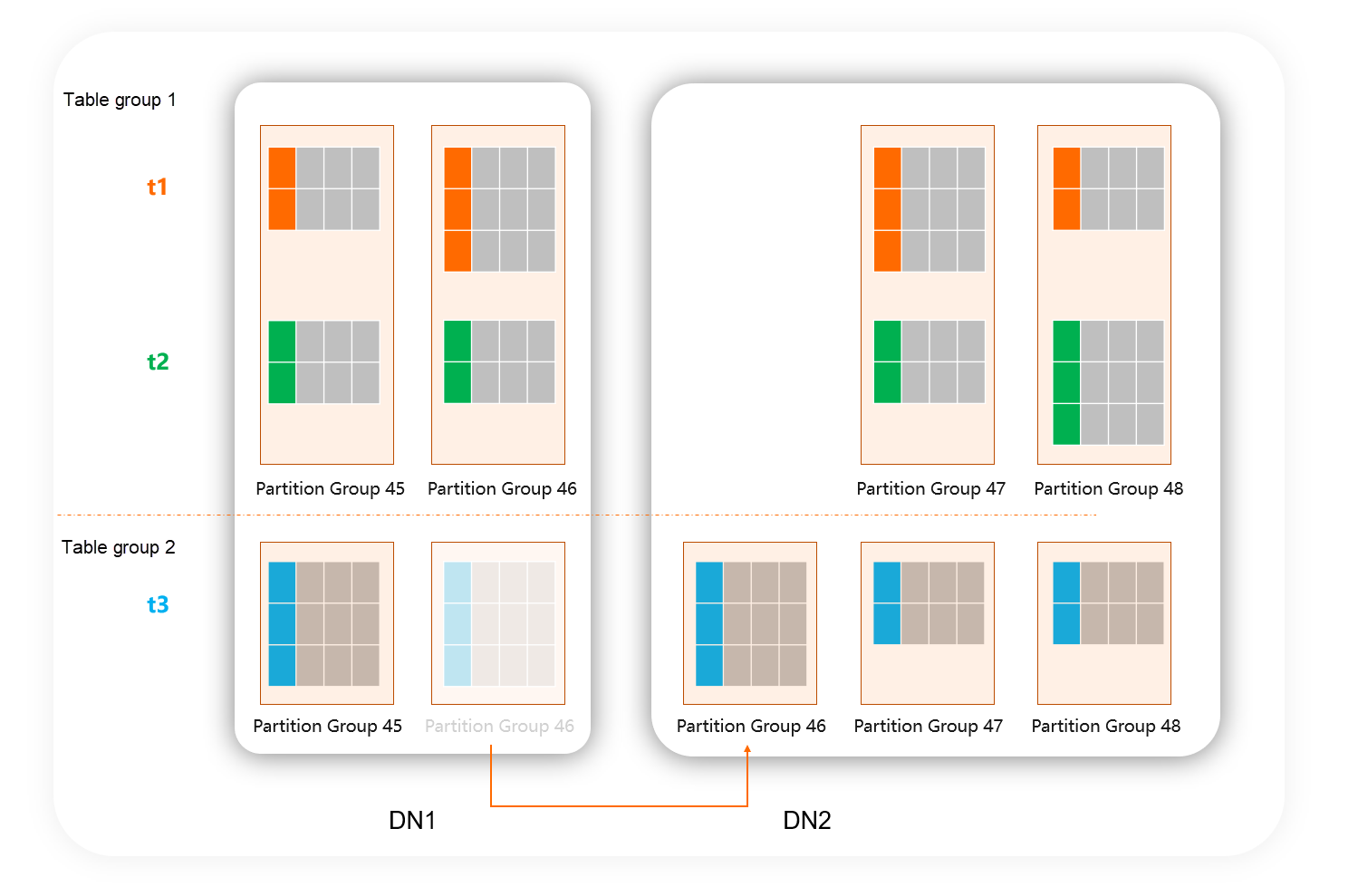

It is easy to understand that the load balancing of a database mainly depends on operations such as splitting, merging, and moving data shards. I’d like to repeat that table groups need to ensure performance consistency. In other words, when data shards are split, merged, or moved, tables in the same table group must also be aligned on shards. To achieve this effect, these operations must be performed on all tables in the same table group at the same time:

Therefore, compared with databases that do not provide table groups, table groups will increase the data granularity of operations during load balancing so that the database is less likely to reach a complete load balancing state.

At the same time, for the developers on the database kernel, this will also greatly increase the complexity of the load balancing algorithm.

We can also understand why some kernel enthusiasts prefer distributed KV-based databases: these databases have fewer constraints and simple structures and are easier to verify some of their ideas.

For example, if you want to implement a load balancing algorithm on a distributed KV-based database that does not provide table group capabilities, you do not need to consider the connection between tables at all, and you can freely perform various operations such as splitting and migrating for each table, which will simplify the problem a lot. However, the corresponding cost is that this kind of database cannot eliminate distributed transactions at all, which means these databases will cost more.

For the cost of table groups, PolarDB-X regards it as an optional item, but in many cases, it is not required. Especially in the early stages of application development, we believe that developers should focus more on business logic than on the design of table groups.

This is in line with another design principle of PolarDB-X mentioned in the previous article: "Hash is suitable for pre-sharding, which improves the certainty of the system, and PolarDB-X pays more attention to stability and certainty from the design principle". Due to the use of hash and pre-sharding, PolarDB-X users do not need to face load balancing problems too early and can postpone or even weaken the design of table groups.

According to the design principle of PolarDB-X, it is expected that users will adjust the table group of the table only when hot issues such as uneven load occur after the application is published.

The expected process is:

1. When you create tables, tables with the same partitioning algorithm and the same number of partitions are automatically distributed to the same table group:

2. When problems such as hot issues are found in the default allocation policy after the performance test or the application is published, create a new table group and move tables that do not appear in the same transaction or that do not need to be joined to other table groups to reduce the size of each table group:

CREATE TABLEGROUP TG2;

ALTER TABLE t3 SET TABLEGROUP=TG2;

Note that this adjustment only modifies the metadata but does not need to move the data, so the cost is extremely low, because moving a table to a new table group does not require the understanding of changes to its data distribution.

3. When the next operation such as rebalance is performed, it starts to be performed in units of new table groups:

The local index, as its name implies, only indexes the data within each shard. You must use some other methods to locate the shard before you can use the local index.

There is a question. We already have a global index that indexes the data of all shards. Why do we still need a local index?

The reason why a local index is required here is not that it only indexes the data within the shard but that the records in the local index can be in the same shard as the records in the base table, thus making index maintenance a standalone transaction. In other words, the local index will be meaningless if you use it but cannot perform a standalone transaction.

In performance-oriented scenarios, only the local index is needed for most secondary indexes due to the existence of partition keys. For example, the orders table has some other attributes such as order status and order type. These fields only need the local index instead of the global index that generates distributed transactions.

The use of a local index is very important to approach maximum performance. PolarDB-X uses MySQL as a DN with native local index capabilities.

In other words, for the same database, testing its local index and global index capabilities can reflect the gap between standalone transactions and distributed transactions.

Of course, do not limit the local index to syntax. The key is whether standalone transactions can be used for index maintenance. To give several examples:

Finally, let's back to the title, Transparent vs. Manual.

Based on the transparent or manual feature, common distributed databases on the market can be divided into:

• Transparent distributed databases: TiDB and CockroachDB.

• Manual distributed databases: ApsaraDB for OceanBase and YugabyteDB.

Now we can answer: is transparent distributed data better than manual distributed data?

For databases that only provide transparent usage, the migration cost will be lower and the initial experience will be better. However, when you further use the database, due to the inevitable use of a large number of distributed transactions, in the core scenario, the performance often cannot meet the requirements (or the same performance requires higher costs), and there is a lack of optimization methods such as eliminating distributed transactions and more adequate computing pushdown.

For databases that only provide manual usage, although a well-designed partition key makes it theoretically possible to achieve optimal performance, the threshold for use will be greatly increased (you need to design partition keys not only for 10% of the core table but also for the rest of the 90% non-core table).

We believe that both transparent and manual distributed databases cannot meet the business requirements for both cost and performance at the same time.

In addition to providing transparent mode, PolarDB-X also supports partition table syntax and provides tools such as table group, local index, and JOIN pushdown. This allows applications to push down more transactions and computations to data nodes when high performance is required.

PolarDB-X is the only distributed database on the market that can provide both transparent and manual modes. We recommend that you use the transparent mode in most scenarios. Then, you can perform stress tests on core business scenarios and use partition table syntax to manually optimize these scenarios, which helps achieve the highest performance.

The allocation strategy of table groups in PolarDB-X is complex, but some of the designed trade-offs are interesting. At the same time, we have done a lot of work to further improve the ease of use of table groups. In the next article, we will introduce all aspects of the table group in detail. Welcome to follow the subsequent updates.

Interpretation of PolarDB-X Data Distribution (3): TPC-C and Transparent Distribution

[Infographic] Highlights | Database New Features in July 2024

ApsaraDB - August 13, 2024

ApsaraDB - August 13, 2024

ApsaraDB - August 23, 2024

ApsaraDB - April 20, 2023

ApsaraDB - August 9, 2024

ApsaraDB - August 29, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More LedgerDB

LedgerDB

A ledger database that provides powerful data audit capabilities.

Learn MoreMore Posts by ApsaraDB