By Wumu

Over the past few decades, relational databases have stood out in the field of databases for their ability to allow users to flexibly define and modify data models.

As a cloud-native relational database, PolarDB-X supports modifying data models through various DDL statements to meet the evolving needs of users' businesses. For instance, the ALTER TABLE statement can be used to add columns, delete columns, or modify column types. However, as a distributed database, PolarDB-X partitions the data of a logical table into multiple shards (also known as physical tables) across different data nodes 1, making the implementation of DDL statements more complex.

This article uses column type change as an example to explain how to execute the ALTER TABLE statement in PolarDB-X. There are two types of column type changes: changing the column type of a partition key and changing the column type of a non-partition key. For non-partition key column type changes, the logical DDL can be split into multiple physical DDLs and executed on the corresponding shards. However, for partition key column type changes, the process is more complex. In addition to modifying the column type, data repartitioning is required because changing the partition key column type affects shard routing. Simply pushing down the DDL would result in failed queries if the partition key is used, as the data would not be found.

In practice, as a distributed database, it is essential to ensure consistency across all shards and metadata when the column types of a table are changed, regardless of whether it affects partition keys or not. Therefore, even for changes in the column type of the non-partition key, it is not simply a matter of downward execution. A detailed explanation will be provided in a subsequent article. This article primarily focuses on changing the column type of partition keys.

Traditional distributed database middleware splits tables by sharding, and it usually does not allow for column type changes of the sharding key. To make such changes, you need to create a new table, stop writing to the old table, and then re-import the data.

If changes need to be made without stopping writes, it requires maintaining a double write logic and importing existing data at the same time. This approach is not only complex but also challenging to verify data correctness, often leading to potential data inconsistencies.

The implementation principle of PolarDB-X sharding rule change has been described in the previous section [3], which also requires data repartition. As a classic case of data repartition, the process of sharding rule change requires steps of creating a new table, double writing, importing existing data, verifying data, and switching traffic. It should ensure data consistency through TSO-based distributed transactions. The whole process is very mature. If you haven't read it, it is recommended to go through this article first and the paper on Online Schema Change [4].

Changing the column type of the partition key, like changing the sharding rules, requires data repartition. Therefore, can the process for changing the column type of the partition key be based on the process used for changing sharding rules? What should be considered? Those who have learned about DDL tools such as pt-osc and gh-ost should know that these tools change the table structure by creating new tables and then importing data. In PolarDB-X, changing the column type of the partition key can also be completed in a similar way to data repartition, but the following additional issues should be considered:

The following content will be divided into several sections to sequentially address the above issues.

For changes to sharding rules, this feature only modifies the sharding rules but does not change column definitions. Therefore, the structure of a newly created table remains exactly the same as that of the original table, without modifications to column definitions. However, changing the column type of the partition key requires modifying the column definition of the partition key. Therefore, the structure of the new table will not be completely consistent with that of the original table. Since the column definitions of the new table differ from those of the original table, both the original double write process for incremental data and the synchronization process for existing data involve implicit type conversions. Will these implicit conversions affect the accuracy of the results? In general, it will not affect the accuracy for the following two reasons:

Note that there is a prerequisite in the second analysis, that is, DML must be written strictly according to the type of the source table, otherwise, the accuracy of the implicit conversion result during double write cannot be strictly guaranteed. For example, the original type is VARCHAR and needs to be changed to INT type. Users always use binary mode to write this field. If the data x'61' written by users corresponds to the character 'a', then for the VARCHAR type, the character 'a' is written. But for the INT type, the value written is 97, which is inconsistent with the corresponding numeric value after converting the character 'a' into INT. The correct value should be 0. Therefore, it is very necessary to perform data verification on the final result.

To ensure the correctness of the changes, after creating the new table, enabling double writes for incremental data, and synchronizing existing data, it's necessary to perform a data verification step. After the data verification passes, the traffic switch and the graceful unpublishing of the original table can proceed.

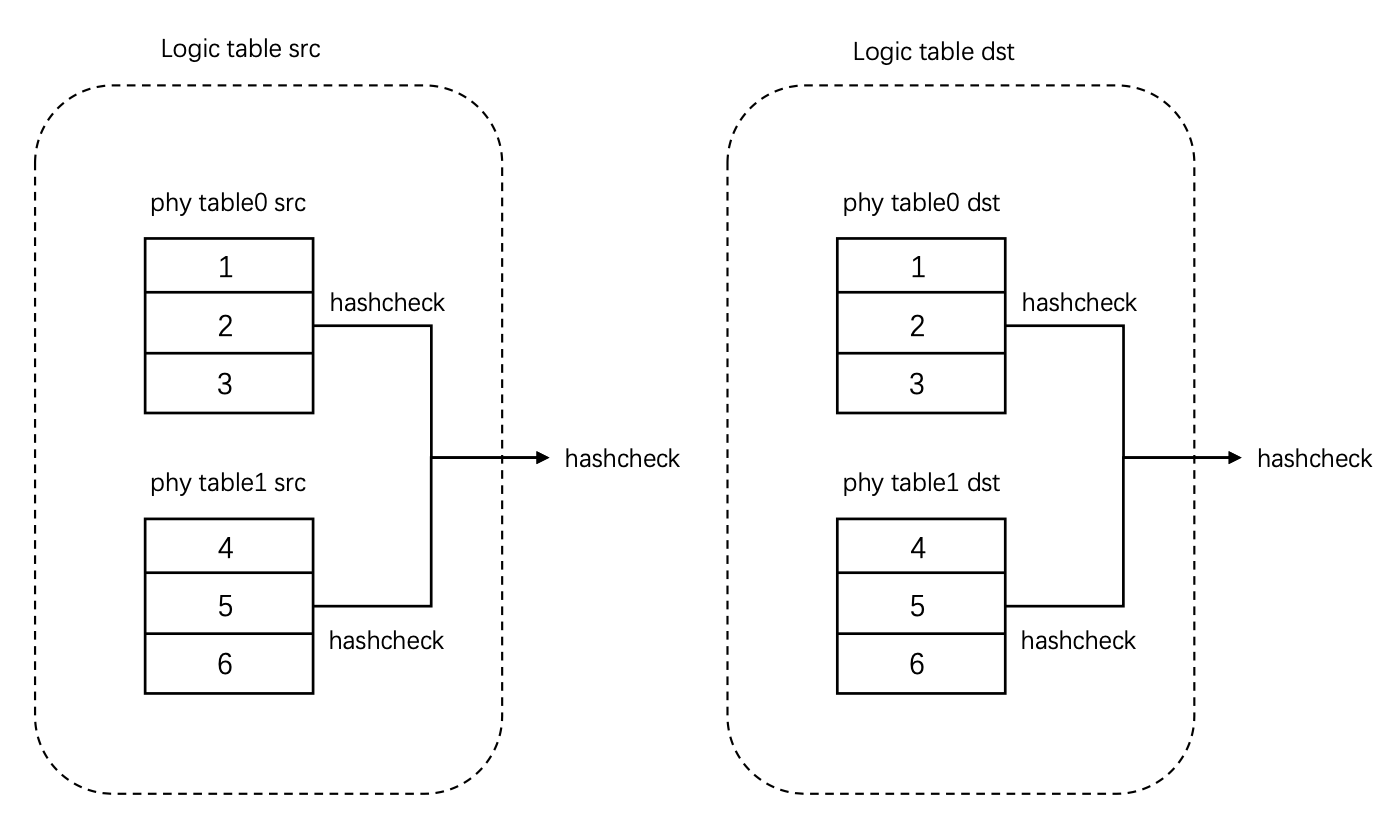

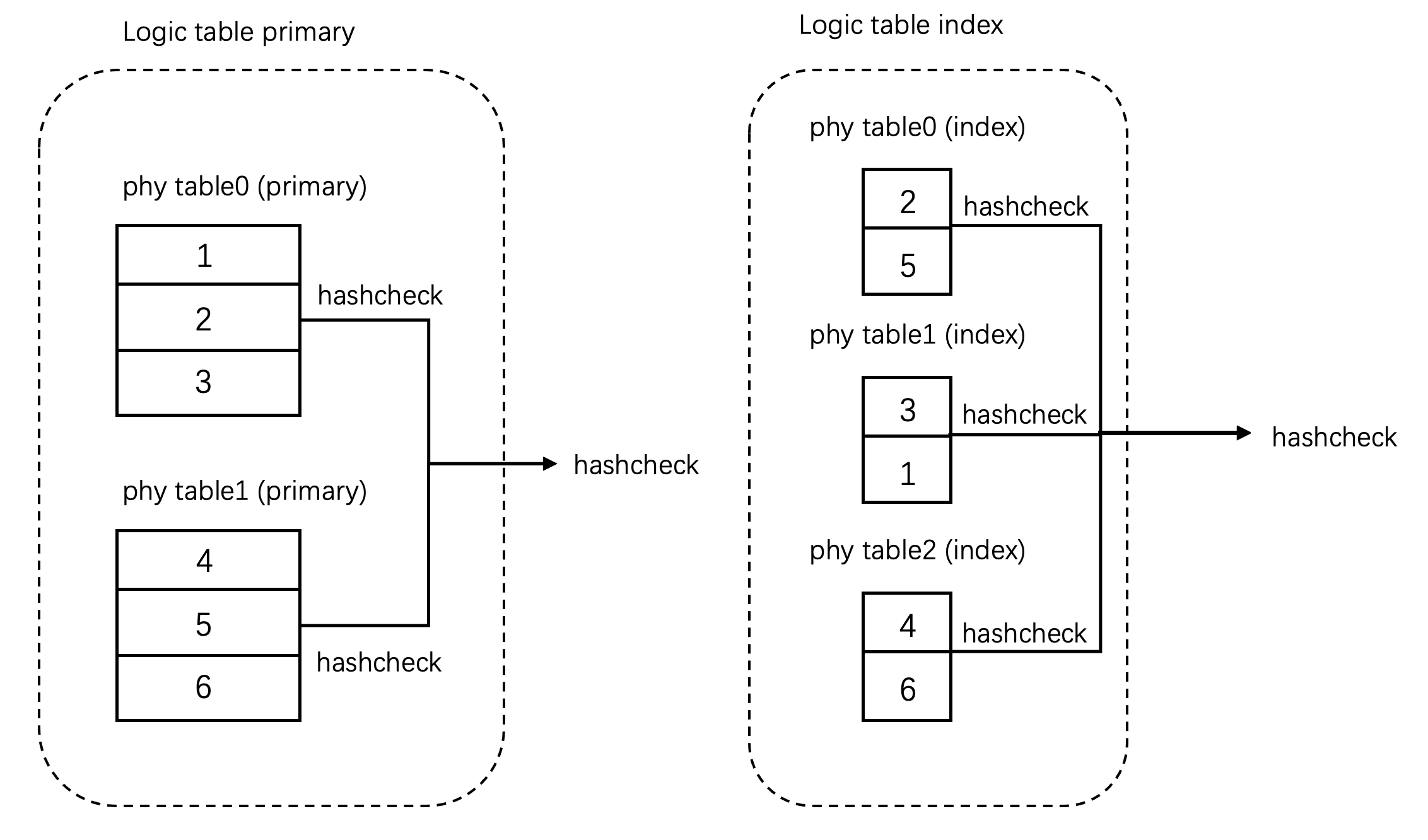

Here's a brief introduction to the logic of data verification. Firstly, we've implemented a hash algorithm insensitive to order on the DN side and packaged it into a UDF. When verification begins on the CN side, we first use TSO transactions to obtain consistent snapshots of the source and target tables [5]. Then, we perform a full-table hashcheck calculation (in parallel) on each shard of the source and target tables on the DN side and pull the results back to the CN node for aggregation. Finally, we compare the checksums of the source and target tables to ensure data consistency.

Homogeneous tables (parallel among shards):

Heterogeneous tables (parallel among shards):

For partition key column type changes, the column definitions of partition key are inconsistent on the source table and the target table and the physical values of data are generally inconsistent. Therefore, direct hashcheck will definitely lead to verification failure, and there is no way to determine the accuracy of type conversion.

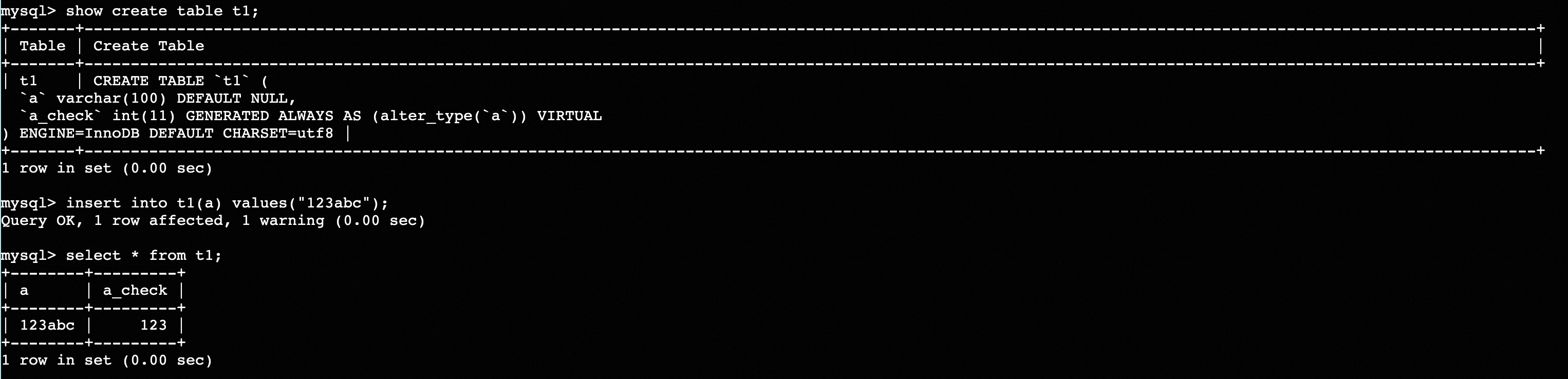

For example, if the partition key column type of the source table is VARCHAR and there is a piece of data '123abc', when the partition key column type is changed to INT, then the data corresponding to the target table partition key is converted into 123 which is naturally different from the '123abc' hashcheck value. Therefore, the verification will fail, and it cannot be completely confirmed that 123 in INT is the corresponding converted value of the string '123abc' in VARCHAR in MySQL.

To address the issues above, after creating the new table, a virtual column (not visible externally) used solely for data verification will be added to the source table. This virtual column calls the column type conversion function based on the partition key column. For example, in the preceding example, a virtual column is added to the source table. The value of the virtual column is the result 123 after '123abc' calls the conversion function, and the result is consistent with the target table, as shown in the following figure.

By adding a virtual column as described above, data verification can be completed. The column type conversion function called by this virtual column is consistent with the logic used by ALTER TABLE MODIFY COLUMN in MySQL. This also allows for the detection of inconsistency between DML implicit conversions and ALTER TABLE MODIFY COLUMN conversions.

In this article [6], the PolarDB-X global secondary index is introduced. To facilitate the index lookup, the global secondary index contains the primary key and partition key of the primary table as the Cover column by default. To ensure data consistency between a GSI and a primary table, when you change the column type of a partition key in the primary table, you must also change the types of the corresponding Cover columns of all GSIs. Therefore, if you change the column type of a partition key in the primary table, the data in the GSI table is redistributed. If the partition key of the primary table is inconsistent with that of the GSI, and the type of the partition key column of the GSI is changed, the same process is required to ensure data consistency.

Changing the column type of a partition key does not always require data repartition. For example, if you want to increase the length of a string column without changing its CHARSET and COLLATE, data repartition is not necessary. In this case, the process is similar to changing the column type of a non-partition key.

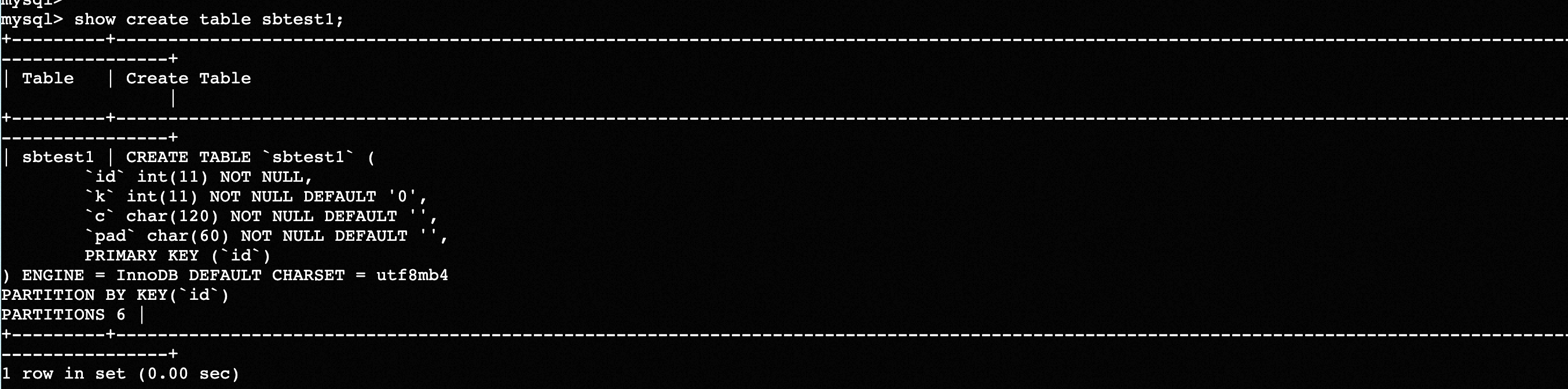

In addition, the user may just change the column type of the partition key of the GSI instead of the partition key of the primary table, which still causes data repartition. You can see that there are actually several scenarios for column type changes. In order to facilitate users to quickly distinguish the specific process of column type changes, we provide operations similar to explain for DDL statements. The following examples use the sysbench table.

Statements for creating tables:

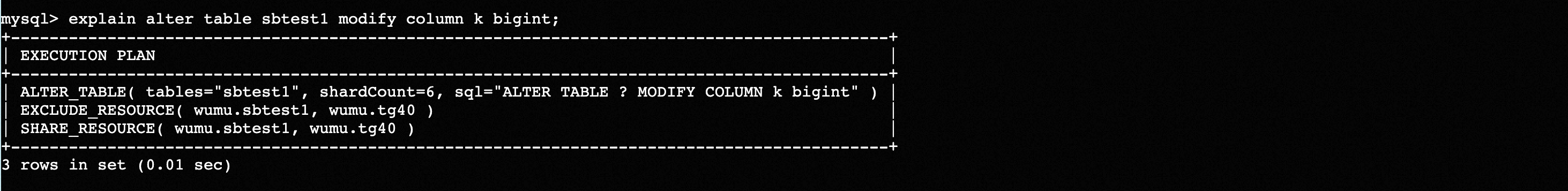

Example 1: Modify the column type of a non-partition key:

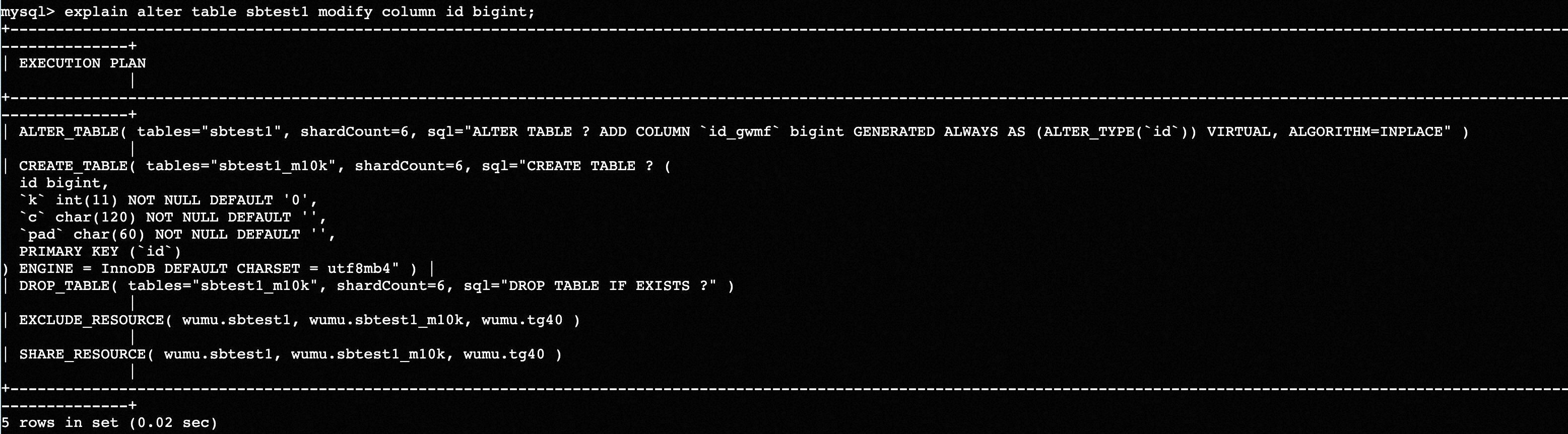

Example 2: Change the partition key column type with data repartition: CREATE TABLE is used to create a new table, DROP TABLE is used to delete the old table after the verification is completed, and ALTER TABLE is used to add virtual columns for data verification.

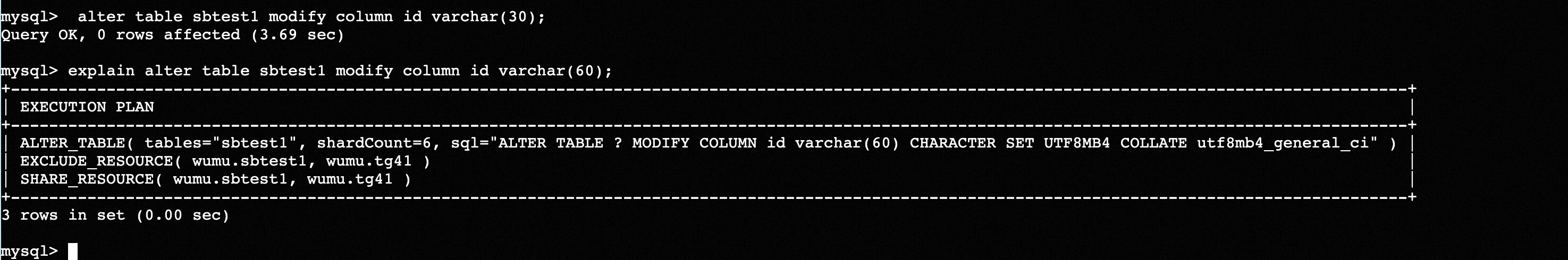

Example 3: Change the partition key column type without data repartition: First, change the partition key id column to varchar(30). This process requires data repartition. Then change its type to varchar(60). The following explain result shows that no data repartition is required (no table creation or deletion is required).

Flexibly changing table column types is an important feature for distributed databases. PolarDB-X supports the change of partition key column types and ensures strong data consistency, high availability, and transparency to businesses. It also removes the restrictions caused by distribution and is easy to use.

This article briefly explains the various technical points involved in changing the column type of a partition key, based on the sharding rule changes in PolarDB-X. The ability of PolarDB-X to support this function relies on features such as TSO transactions, which is also one of the key characteristics that distinguish distributed databases from distributed database middleware.

[1] Interpretation of PolarDB-X Data Distribution (1)

[2] Interpretation of PolarDB-X Data Distribution (2): Hash vs Range

[3] PolarDB-X Sharding Rule Changes

[4] Online, Asynchronous Schema Change in F1

[5] Implementation of PolarDB-X Distributed Transactions (1)

[6] PolarDB-X Global Secondary Index

ApsaraDB - August 23, 2024

ApsaraDB - January 3, 2024

ApsaraDB - December 12, 2025

ApsaraDB - June 19, 2024

ApsaraDB - December 12, 2025

ApsaraDB - January 17, 2025

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB