By Qifeng, team member of PolarDB-X, a cloud-native database developed by Alibaba Cloud

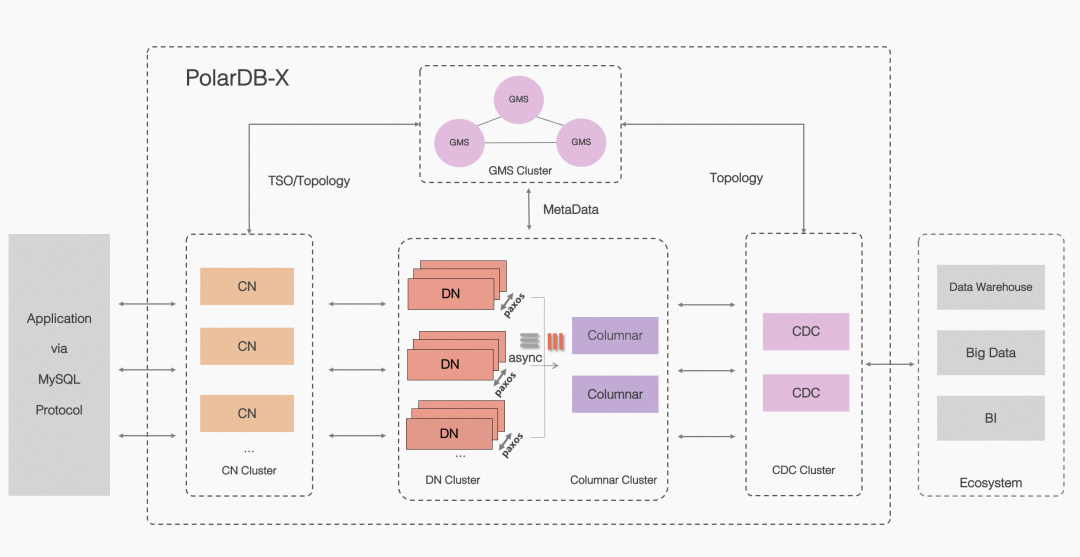

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud. It combines a shared-nothing architecture with the separation of storage and compute. The system consists of five core components.

1. Compute Node (CN)

The compute node (CN) serves as the entry point of the system and adopts a stateless design. It includes modules such as SQL parser, optimizer, and executor. The CN supports distributed routing, computing, and dynamic scheduling. It uses the two-phase commit protocol (2PC) for coordinating distributed transactions and provides global secondary index maintenance. Additionally, it supports enterprise-level features like SQL throttling and three-role mode.

2. Data Node (DN)

Data nodes (DNs) are responsible for persistently storing data in PolarDB-X. They provide highly reliable and strongly consistent data storage services based on the Paxos protocol. DNs also maintain the visibility of distributed transactions through MVCC.

3. Global Metadata Service (GMS)

The global metadata service (GMS) maintains the global strong consistency of meta information, including tables, schemas, and statistics. It also maintains the global strong consistency of security information, such as accounts and permissions. GMS also provides a global timestamp distributor known as the Timestamp Oracle (TSO).

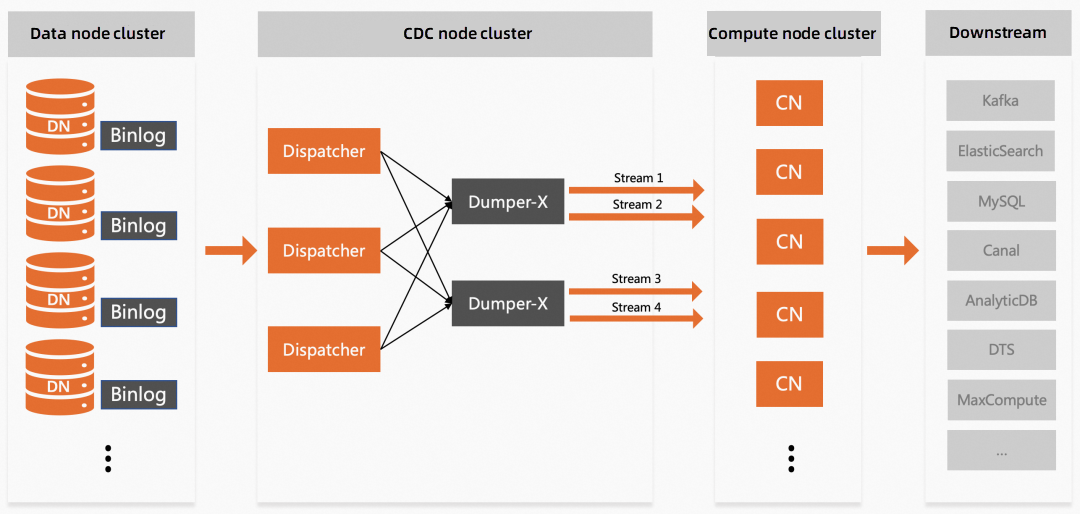

4. Change Data Capture (CDC)

Change data capture (CDC) offers incremental data subscription that supports MySQL binary log formats and protocols. It also supports primary/secondary replication using MySQL replication protocols.

5. Column Store Node

The column store node provides data storage in a columnar format and adopts an HTAP (Hybrid Transactional/Analytical Processing) architecture based on a combination of row-column hybrid storage and distributed compute nodes. This feature is expected to be officially open source in April 2024. For more information, please refer to the PolarDB-X Documentation on the Columnar Index.
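To make the interplay between the TSO in GMS and MVCC visibility on the data nodes more concrete, here is a minimal Python sketch. It assumes a simplified snapshot-read model; the names `TimestampOracle`, `Version`, and `visible_value` are hypothetical and are not PolarDB-X APIs.

```python
import itertools
from dataclasses import dataclass
from typing import List, Optional


class TimestampOracle:
    """Hands out strictly increasing timestamps (a stand-in for the GMS TSO)."""

    def __init__(self) -> None:
        self._counter = itertools.count(1)

    def next_ts(self) -> int:
        return next(self._counter)


@dataclass
class Version:
    value: str
    commit_ts: int  # timestamp assigned when the writing transaction committed


def visible_value(versions: List[Version], snapshot_ts: int) -> Optional[str]:
    """Return the newest version committed at or before the reader's snapshot."""
    candidates = [v for v in versions if v.commit_ts <= snapshot_ts]
    if not candidates:
        return None
    return max(candidates, key=lambda v: v.commit_ts).value


tso = TimestampOracle()
history = [Version("v1", tso.next_ts())]      # first write commits
snapshot = tso.next_ts()                      # a reader takes its snapshot
history.append(Version("v2", tso.next_ts()))  # second write commits after the snapshot

print(visible_value(history, snapshot))  # the reader still sees "v1"
```

Because every timestamp comes from one global source, readers on any node can agree on which versions fall inside their snapshot.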

Open source address: https://github.com/polardb/polardbx-sql

Timeline of Open-Source PolarDB-X:

▶ In October 2021, Alibaba Cloud announced the open-source release of the cloud-native distributed database PolarDB-X at the Apsara Conference. The open-source version includes the compute engine, storage engine, log engine, and Kubernetes.

▶ In January 2022, PolarDB-X 2.0.0 was officially released, marking the first version update since its open-source release. New features include cluster scaling, compatibility with binary log formats, and incremental log subscription supporting Maxwell and Debezium. Several issues were also addressed.

▶ In March 2022, PolarDB-X 2.1.0 was officially released, introducing four core features that enhance the stability and eco-compatibility of the database, including a triplicate consensus protocol based on Paxos.

▶ In May 2022, PolarDB-X 2.1.1 was officially released, introducing the key feature of tiered storage for hot and cold data. This feature allows users to store data in different storage media based on data characteristics, such as storing cold data in Object Storage Service (OSS).

▶ In October 2022, PolarDB-X 2.2.0 was officially released, representing a significant milestone in the development of PolarDB-X. This version focuses on enterprise-level capabilities and adaptation to domestic ARM platforms, in compliance with the financial industry standards for distributed databases. Its eight core features make PolarDB-X more versatile across sectors like finance, communications, and government administration.

▶ In March 2023, PolarDB-X 2.2.1 was officially released. Building upon the financial standards for distributed databases, this version further strengthens key production-level capabilities, making the database easier and safer to use in production environments. It includes features such as fast data import, performance testing, and production deployment suggestions.

▶ In October 2023, PolarDB-X 2.3.0 was officially released as a major version. It introduces PolarDB-X Standard Edition (Centralized Architecture), which provides data nodes as an independent service and supports the multi-Paxos protocol. It includes a distributed transaction engine called Lizard and is 100% compatible with MySQL. With production-level deployment and parameters ("double one", that is, `sync_binlog=1` and `innodb_flush_log_at_trx_commit=1`, plus multi-Paxos synchronization enabled), it offers a 30-40% performance improvement in read/write scenarios compared to open-source MySQL 8.0.34. As a result, it serves as an excellent alternative to open-source MySQL.

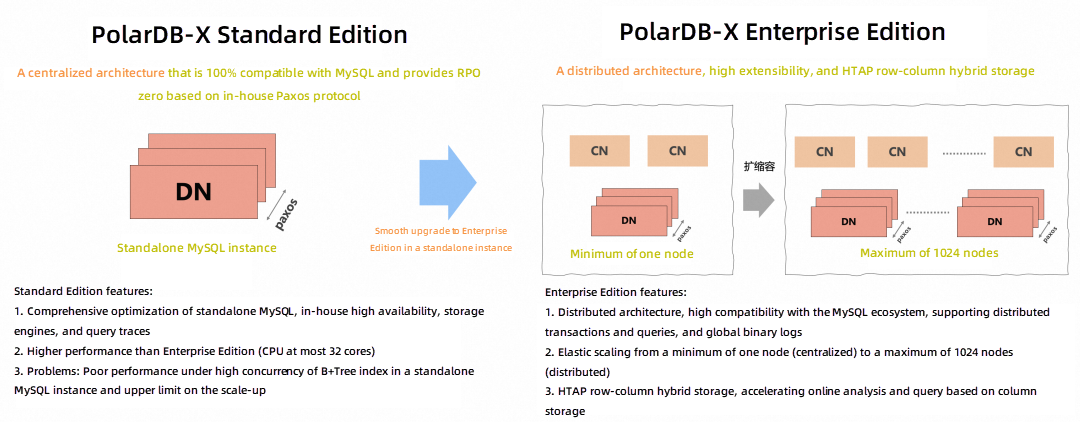

After the release of PolarDB-X 2.3 Standard Edition (Centralized Architecture), there are two editions of the open-source PolarDB-X available.

• PolarDB-X Standard Edition: Uses a centralized architecture that is 100% compatible with MySQL. It supports a standalone MySQL database and offers a Recovery Point Objective (RPO) of zero through our in-house distributed consensus algorithm (X-Paxos).

• PolarDB-X Enterprise Edition: Implements a distributed architecture that is highly compatible with MySQL. It supports distributed transactions and distributed parallel queries with high consistency. Moreover, it allows for distributed horizontal scaling, starting from as little as one node (centralized) and extending up to 1024 nodes (distributed). This integration of both centralized and distributed architectures provides greater versatility. Additionally, we plan to release an open-source version in the future with an HTAP row-column hybrid storage architecture. This version will facilitate the convenient creation of column-stored replicas and enable row-column hybrid queries to expedite online analysis.

The following table illustrates the architectural features and selection suggestions.

| Architecture | Standard Edition (Centralized Architecture) | Enterprise Edition (Distributed Architecture) |
| --- | --- | --- |
| Advantages and disadvantages | Advantages: 1. It is 100% compatible with MySQL. 2. On nodes with small specifications (for example, at most 32 CPU cores), it delivers higher performance than Enterprise Edition. If larger specifications are needed later, you can smoothly upgrade to Enterprise Edition. Disadvantages: 1. Because the B+Tree index used in a standalone MySQL instance performs poorly under high concurrency, it is recommended that a single table contain 5 million to 50 million rows. 2. A standalone instance has an upper limit on scale-up. | Advantages: 1. It uses a distributed architecture that supports linear expansion to at most 1024 nodes (PB-level data size). 2. It provides financial-level disaster recovery in different scenarios, such as three data centers in a region and three data centers across two regions. 3. It adopts HTAP row-column hybrid storage and uses built-in column-stored replicas to accelerate online analysis. |
| Selection suggestions | 1. You need MySQL with disaster recovery across data centers and an RPO of zero. 2. You need a low-cost, open-source MySQL and have flexible business requirements. | 1. You need a distributed architecture that scales to support high concurrency in order transactions. 2. You need a database that replaces open-source database and table sharding solutions to solve O&M problems. 3. You need to relieve high concurrency on MySQL through data sharding on a distributed architecture. 4. You need to upgrade to an open-source distributed architecture. |

The PolarDB-X Standard Edition adopts a cost-effective architecture consisting of one primary RDS instance, one secondary RDS instance, and one logger RDS instance. This three-node architecture ensures strong data consistency by synchronizing data across multiple replicas. It is specifically designed for online business scenarios that demand ultra-high concurrency, complex queries, and lightweight analysis.
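As a rough illustration of why three replicas can give an RPO of zero, the sketch below models the majority rule that Paxos-style replication relies on: a write counts as committed only after most replicas have persisted it, so any later majority contains at least one replica that holds the write. The function names and replica labels are hypothetical, and leader election and log matching are deliberately left out.

```python
def majority(replica_count: int) -> int:
    """Smallest number of replicas that forms a majority."""
    return replica_count // 2 + 1


def is_committed(acks: dict) -> bool:
    """A log entry is committed once a majority of replicas has persisted it."""
    persisted = sum(1 for ok in acks.values() if ok)
    return persisted >= majority(len(acks))


# Hypothetical labels for the one-primary, one-secondary, one-logger layout.
print(is_committed({"primary": True, "secondary": False, "logger": True}))   # True
print(is_committed({"primary": True, "secondary": False, "logger": False}))  # False
```

With three replicas the majority is two, so the cluster keeps accepting writes when any single replica is unavailable.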

Now, let's quickly deploy a PolarDB-X Standard Edition cluster that consists of only one data node with three replicas. Execute the following command to create such a cluster:

```shell
cat <<'EOF' | kubectl apply -f -
apiVersion: polardbx.aliyun.com/v1
kind: XStore
metadata:
  name: quick-start
spec:
  config:
    controller:
      RPCProtocolVersion: 1
  topology:
    nodeSets:
    - name: cand
      replicas: 2
      role: Candidate
      template:
        spec:
          image: polardbx/polardbx-engine-2.0:latest
          resources:
            limits:
              cpu: "2"
              memory: 4Gi
    - name: log
      replicas: 1
      role: Voter
      template:
        spec:
          image: polardbx/polardbx-engine-2.0:latest
          resources:
            limits:
              cpu: "1"
              memory: 2Gi
EOF
```

You will see the following output.

```text
xstore.polardbx.aliyun.com/quick-start created
```

Run the following command to view the creation status.

```text
$ kubectl get xstore -w
NAME          LEADER                    READY   PHASE     DISK      VERSION   AGE
quick-start   quick-start-4dbh-cand-0   3/3     Running   3.6 GiB   8.0.18    11m
```

Note: A memory size of 8 GB or larger is recommended when you deploy a production environment.

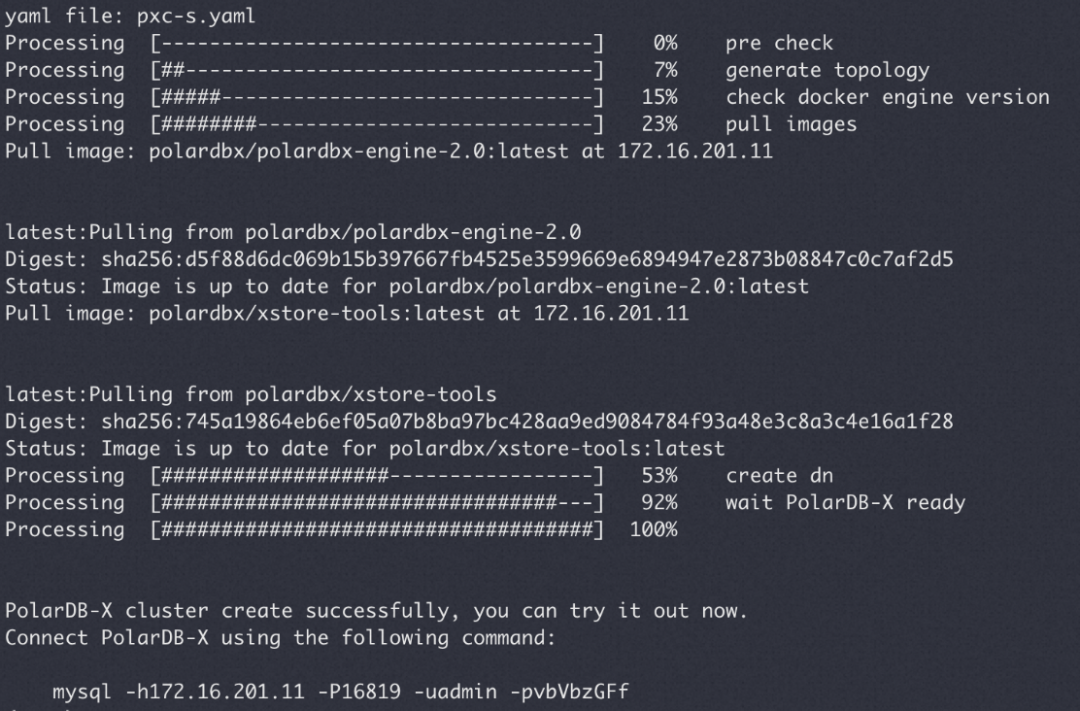

For a deployment with PXD, the cluster topology is described in a YAML file (`polardbx.yaml` in the command further down):

```yaml
version: v1
type: polardbx
cluster:
  name: pxc_test
  dn:
    image: polardbx/polardbx-engine-2.0:latest
    replica: 1
    nodes:
      - host_group: [172.16.201.11,172.16.201.11,172.16.201.11]
    resources:
      mem_limit: 2G
```

Note: In the Standard Edition, the number of data nodes (`replica`) defaults to one. In distributed PolarDB-X, you can specify one or more.

Run the following command to deploy the PolarDB-X cluster with PXD:

```shell
pxd create -file polardbx.yaml
```

After deployment, PXD returns the connection information of the PolarDB-X cluster. You can log on to the PolarDB-X database by using the MySQL command line.

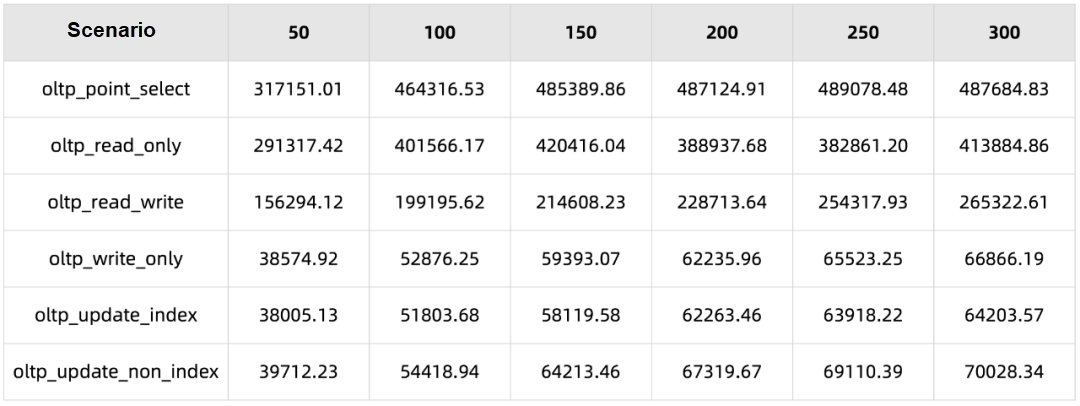

In a centralized PolarDB-X Standard Edition instance, the Lizard distributed transaction system improves the processing capability for concurrent transactions. With production-level deployment and parameters (double one plus multiple replicas enabled), PolarDB-X improves performance in read/write scenarios by 30-40% compared with open-source MySQL 8.0.34.

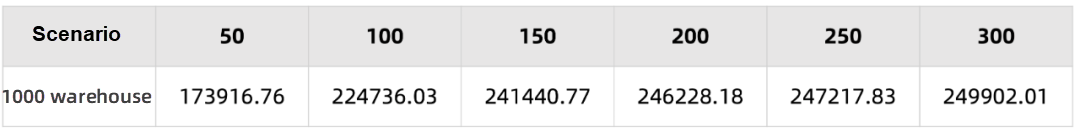

The detailed performance testing results are as follows:

| Purpose | Model | Specifications |
| --- | --- | --- |
| Load generator | ecs.hfg7.6xlarge | 24 cores, 96 GB RAM |
| Database machine | ecs.i4.8xlarge * 3 | 32-core CPU + 256 GB RAM + 7 TB SSD, unit price: RMB 7,452/month |

| Scenario | Concurrency | MySQL 8.0.34 primary/secondary replica + asynchronous replication | Centralized PolarDB-X Standard Edition Paxos-based triplicate architecture | Performance improvement |
| --- | --- | --- | --- | --- |
| sysbench oltp_read_write | 300 | 200930.88 | 265322.61 | ↑ 32% |
| TPCC 1000 warehouse | 300 | 170882.38 tpmC | 249902.01 tpmC | ↑ 46% |
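The improvement percentages can be reproduced from the raw figures in the results above. The short Python snippet below does that arithmetic; the helper name `improvement_pct` is only illustrative.

```python
def improvement_pct(baseline: float, candidate: float) -> float:
    """Relative gain of candidate over baseline, in percent."""
    return (candidate / baseline - 1.0) * 100.0


# (MySQL 8.0.34 result, PolarDB-X Standard Edition result) as reported above.
results = {
    "sysbench oltp_read_write": (200930.88, 265322.61),
    "TPCC 1000 warehouse": (170882.38, 249902.01),
}

for name, (mysql, polardbx) in results.items():
    print(f"{name}: +{improvement_pct(mysql, polardbx):.0f}%")
```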

The Distributed PolarDB-X 2.3 version continues to enhance compatibility with MySQL, making it easier for users to migrate their databases from MySQL.

Partitioned tables in MySQL refer to the ability to divide a large table into smaller logical units called partitions, which are stored on different physical storage media. This partitioning mechanism aligns well with the concept of Distributed PolarDB-X. Hence, PolarDB-X's partitioned tables are fully compatible with MySQL's partitioned tables. Furthermore, PolarDB-X extends the syntax of MySQL by enabling multiple partitions to be distributed across multiple nodes, enhancing the processing capability for concurrent transactions. The following are the common partitioning methods supported by PolarDB-X's partitioned tables:

1. Range partitioning (Range Columns partitioning): Divides a table into multiple partitions based on ranges of column values. It can distribute data to different partitions based on numeric ranges of column values, such as dates and prices.

2. List partitioning (List Columns partitioning): Divides a table into multiple partitions based on a list of column values. It matches the values of a specific column to a predefined partition, where each partition may contain multiple values.

3. Hash partitioning (Key partitioning): Partitions the table based on the hash value of column values. It evenly distributes the table's data across different partitions using a hashing algorithm.
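The three methods differ only in how a column value is mapped to a partition. The following Python sketch shows one plausible mapping for each; it is a simplified model, not PolarDB-X's routing code, and the CRC32 hash is an assumption chosen for determinism rather than the hash PolarDB-X actually uses.

```python
import bisect
import zlib


def route_range(value: int, upper_bounds: list) -> int:
    """Range: index of the first partition whose exclusive upper bound exceeds value."""
    idx = bisect.bisect_right(upper_bounds, value)
    if idx == len(upper_bounds):
        raise ValueError(f"{value} is beyond the last partition bound")
    return idx


def route_list(value, value_lists: dict) -> str:
    """List: name of the partition whose predefined value list contains value."""
    for name, values in value_lists.items():
        if value in values:
            return name
    raise ValueError(f"{value} matches no partition")


def route_hash(value: int, partition_count: int) -> int:
    """Hash: spread values across partitions (illustrative hash function)."""
    return zlib.crc32(str(value).encode()) % partition_count


print(route_range(150, [100, 200]))                    # 1: the "LESS THAN (200)" partition
print(route_list(3, {"p_a": {1, 2}, "p_b": {3, 4}}))   # p_b
print(route_hash(42, 4) in range(4))                   # True
```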

In addition to partitioning, PolarDB-X also supports subpartitioning, which divides the data within each partition of a partitioned table into subpartitions. Because partitioning and subpartitioning are orthogonal, any partitioning method can be combined with any subpartitioning method. With the six methods listed above (Range, Range Columns, List, List Columns, Hash, and Key), this gives 6 × 6 = 36 types of composite partitioning. Subpartitioning can be further classified into modularized (template-based) and non-modularized subpartitioning.
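The figure of 36 can be checked by pairing each of the six method names from the list above with each of the same six as a subpartitioning method. A minimal Python enumeration:

```python
from itertools import product

METHODS = ["RANGE", "RANGE COLUMNS", "LIST", "LIST COLUMNS", "HASH", "KEY"]

# Every (partition method, subpartition method) pair is a composite type.
combos = list(product(METHODS, repeat=2))

print(len(combos))  # 36
print(combos[0])    # ('RANGE', 'RANGE')
```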

Modularized subpartitioning involves creating subpartitions using a template that consists of a set of partitioning rules that specify the number and names of subpartitions for each partition. This enables the quick creation of subpartitions with the same structure. The following example demonstrates the syntax for creating subpartitions using modularized subpartitioning:

```sql
CREATE TABLE partitioned_table (
  ...
)
PARTITION BY RANGE COLUMNS(column1)
SUBPARTITION BY HASH(column2)
SUBPARTITIONS 4
SUBPARTITION TEMPLATE (
  SUBPARTITION s1,
  SUBPARTITION s2,
  SUBPARTITION s3,
  SUBPARTITION s4
) (
  PARTITION p1 VALUES LESS THAN (100),
  PARTITION p2 VALUES LESS THAN (200),
  ...
);
```

In the preceding example, the SUBPARTITION TEMPLATE keyword defines a subpartition template that specifies the names of the four subpartitions. Every partition then uses this same template.

Non-modularized subpartitioning refers to manually specifying the number and names of subpartitions of each partition. By using non-modularized subpartitioning, you can more flexibly create different numbers of subpartitions with different names for each partition. The following example shows the syntax for creating a subpartition by using non-modularized subpartitioning:

```sql
CREATE TABLE partitioned_table (
  ...
)
PARTITION BY RANGE COLUMNS(column1)
SUBPARTITION BY HASH(column2)
SUBPARTITIONS (
  PARTITION p1 VALUES LESS THAN (100) (
    SUBPARTITION s1,
    SUBPARTITION s2,
    SUBPARTITION s3,
    SUBPARTITION s4
  ),
  PARTITION p2 VALUES LESS THAN (200) (
    SUBPARTITION s5,
    SUBPARTITION s6,
    SUBPARTITION s7,
    SUBPARTITION s8
  ),
  ...
);
```

In the preceding example, the subpartitions of each partition are specified directly instead of through a template, so each partition can have its own number of subpartitions and its own subpartition names.

Both modularized and non-modularized subpartitioning have their uses in PolarDB-X. Modularized subpartitioning suits partitioned tables whose partitions share the same structure and reduces the work of creating tables. Non-modularized subpartitioning is more flexible: you can give each partition a different number of subpartitions with different names based on your needs. Traditional database sharding and table sharding is a special case of modularized subpartitioning, in which each database shard has the same number of table shards. The new version of PolarDB-X supports all the features of distributed partitioned tables, and these can be combined with non-modularized subpartitioning to relieve distributed hot spots.

Here is an example to help you experience the benefits of non-modularized subpartitioning. In a transaction order management system, the platform serves many sellers of different brands. As the order volume varies greatly among different sellers, large sellers stand out. They are willing to become VIP paid members and expect to enjoy exclusive resources, while small sellers tend to use free shared resources.

Reference: How PolarDB Distributed Edition Supports SaaS Multi-Tenancy

```sql
/* Non-modularized composite partitioning: List Columns partitioning + Hash subpartitioning */
CREATE TABLE t_order /* Order table */ (
  id bigint not null auto_increment,
  sellerId bigint not null,
  buyerId bigint not null,
  primary key(id)
)
PARTITION BY LIST(sellerId /* Seller ID */)
SUBPARTITION BY HASH(sellerId)
(
  PARTITION pa VALUES IN (108,109)
    SUBPARTITIONS 1 /* There is a Hash partition under the partition pa that stores all seller data of big brand a */,
  PARTITION pb VALUES IN (208,209)
    SUBPARTITIONS 1 /* There is a Hash partition under the partition pb that stores all seller data of big brand b */,
  PARTITION pc VALUES IN (308,309,310)
    SUBPARTITIONS 2 /* There are two Hash partitions under the partition pc that store all seller data of big brand c */,
  PARTITION pDefault VALUES IN (DEFAULT)
    SUBPARTITIONS 64 /* There are 64 Hash partitions under the partition pDefault that store seller data of numerous small brands */
);
```

The preceding LIST + HASH non-modularized subpartitioning brings the following benefits to the application:

• For sellers of big brands (equivalent to tenants), data can be routed to a separate set of partitions.

• For small and medium-sized brands, data is automatically balanced across multiple partitions by the hashing algorithm to avoid hot spots. For example, 64 subpartitions are allocated by default to serve all free users, while for paying users you can set the number of subpartitions to 1 or 2 based on the business scale. Non-modularized subpartitioning thus helps you implement fine-grained data distribution.
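The routing effect of the `t_order` definition can be sketched in a few lines of Python. The layout below mirrors the partitions and subpartition counts from the table definition; the function name `route` and the CRC32 hash are assumptions for illustration, since the hash PolarDB-X really uses is not described here.

```python
import zlib

# (partition name, seller IDs routed to it, number of hash subpartitions)
LAYOUT = [
    ("pa", {108, 109}, 1),
    ("pb", {208, 209}, 1),
    ("pc", {308, 309, 310}, 2),
]
DEFAULT = ("pDefault", 64)  # every seller not listed above


def route(seller_id: int) -> tuple:
    """Return (partition name, subpartition index) for a seller."""
    name, sub_count = DEFAULT
    for part_name, sellers, count in LAYOUT:
        if seller_id in sellers:
            name, sub_count = part_name, count
            break
    # Illustrative hash only, not PolarDB-X's actual hash function.
    sub_index = zlib.crc32(str(seller_id).encode()) % sub_count
    return name, sub_index


print(route(108))    # ('pa', 0): a big seller lands in its dedicated partition
print(route(12345))  # ('pDefault', n) with n somewhere in 0..63
```

Big sellers stay isolated in their own partitions, while the long tail of small sellers is spread over the 64 default subpartitions.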

A MySQL generated column is a column whose value is computed from an expression instead of being supplied directly. You define it with the GENERATED ALWAYS AS keyword, and the expression can use a variety of mathematical, logical, and string functions. A generated column can be a virtual column or a stored column. The new version of PolarDB-X supports the syntax and features of MySQL generated columns.

Reference: PolarDB-X Generated Column Syntax

```sql
col_name data_type [GENERATED ALWAYS] AS (expr)
  [VIRTUAL | STORED | LOGICAL] [NOT NULL | NULL]
  [UNIQUE [KEY]] [[PRIMARY] KEY]
  [COMMENT 'string']
```

You can create the following types of generated columns:

• VIRTUAL: The value of the generated column is not stored and does not occupy storage space. The value is calculated by the data node each time the column is read. Note: If you do not specify the type, a generated column of the VIRTUAL type is created by default.

• STORED: The value of the generated column is calculated by the data node when the data row is inserted or updated. The result is stored on the data node and occupies storage space.

• LOGICAL: Similar to the STORED type, the value of the generated column is calculated when the data row is inserted or updated. However, the value is calculated by the compute node and then stored on the data node as a common column. The generated column of this type can be used as a partition key.
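The difference between the types comes down to when the expression is evaluated. The toy Python model below contrasts evaluation on read (VIRTUAL) with evaluation on write (STORED and LOGICAL) for a column `b = a + 1`. The class `Row` is hypothetical, and the sketch ignores where the computation runs (data node or compute node), which is what separates STORED from LOGICAL.

```python
class Row:
    """Toy row with column a and a generated column b = a + 1."""

    def __init__(self, a: int, stored: bool) -> None:
        self.a = a
        self.stored = stored
        # STORED / LOGICAL: evaluate once at write time and keep the result.
        self._b = a + 1 if stored else None
        self.evaluations = 1 if stored else 0

    def read_b(self) -> int:
        if self.stored:
            return self._b  # no recomputation, but the value occupies storage
        # VIRTUAL: nothing was stored, so evaluate on every read.
        self.evaluations += 1
        return self.a + 1


virtual_row = Row(1, stored=False)
stored_row = Row(1, stored=True)
for _ in range(3):
    virtual_row.read_b()
    stored_row.read_b()

print(virtual_row.evaluations)  # 3: once per read
print(stored_row.evaluations)   # 1: once at insert time
```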

Example:

```sql
CREATE TABLE `t1` (
  `a` int(11) NOT NULL,
  `b` int(11) GENERATED ALWAYS AS (`a` + 1),
  PRIMARY KEY (`a`)
) ENGINE = InnoDB DEFAULT CHARSET = utf8mb4 partition by hash(`a`)
```

Insert data:

```sql
> INSERT INTO t1(a) VALUES (1);
> SELECT * FROM t1;
+---+---+
| a | b |
+---+---+
| 1 | 2 |
+---+---+
```

PolarDB-X is compatible with MySQL generated columns and with indexes on them. You can create indexes on generated columns to accelerate queries, for example, queries on keys inside a JSON document. Example of creating an index on a generated column:

```sql
> CREATE TABLE t4 (
    a BIGINT NOT NULL AUTO_INCREMENT PRIMARY KEY,
    c JSON,
    g INT AS (c->"$.id") VIRTUAL
  ) DBPARTITION BY HASH(a);

> CREATE INDEX `i` ON `t4`(`g`);

> INSERT INTO t4 (c) VALUES
    ('{"id": "1", "name": "Fred"}'),
    ('{"id": "2", "name": "Wilma"}'),
    ('{"id": "3", "name": "Barney"}'),
    ('{"id": "4", "name": "Betty"}');

-- You can use the index of the virtual column g for pruning.
> EXPLAIN EXECUTE SELECT c->>"$.name" AS name FROM t4 WHERE g > 2;
+------+-------------+-------+------------+-------+---------------+------+---------+------+------+----------+-------------+
| id   | select_type | table | partitions | type  | possible_keys | key  | key_len | ref  | rows | filtered | Extra       |
+------+-------------+-------+------------+-------+---------------+------+---------+------+------+----------+-------------+
| 1    | SIMPLE      | t4    | NULL       | range | i             | i    | 5       | NULL | 1    | 100      | Using where |
+------+-------------+-------+------------+-------+---------------+------+---------+------+------+----------+-------------+
```

MySQL 8.0 introduced functional indexes, which allow expressions to be used as index entries. By specifying expressions in the CREATE INDEX statement, you can create indexes that optimize specific queries. Internally, functional indexes are implemented on top of virtual columns. PolarDB-X 2.3 is compatible with MySQL 8.0 functional indexes. When you create an index and PolarDB-X finds that an index entry is not a column in the table but an expression, it automatically converts the expression to a generated column of the VIRTUAL type and adds it to the table. After all index entries are processed, PolarDB-X creates the index based on your definition, with each expression replaced by the corresponding generated column. Functional indexes are an experimental feature and can be used only after the following experimental parameter is enabled.

```sql
SET GLOBAL ENABLE_CREATE_EXPRESSION_INDEX=TRUE;
```

Example:

1. Create table t7

```sql
> CREATE TABLE t7 (
    a BIGINT NOT NULL AUTO_INCREMENT PRIMARY KEY,
    c varchar(32)
  ) DBPARTITION BY HASH(a);
```

2. Create expression index i

```sql
> CREATE INDEX `i` ON `t7`(substr(`c`, 2));
```

3. The following code snippet shows the table schema after the expression index is created:

```sql
> SHOW FULL CREATE TABLE `t7`

# The following result is returned:
CREATE TABLE `t7` (
  `a` bigint(20) NOT NULL AUTO_INCREMENT BY GROUP,
  `c` varchar(32) DEFAULT NULL,
  `i$0` varchar(32) GENERATED ALWAYS AS (substr(`c`, 2)) VIRTUAL,
  PRIMARY KEY (`a`),
  KEY `i` (`i$0`)
) ENGINE = InnoDB dbpartition by hash(`a`)
```

Because the index entry of index i is an expression, a generated column i$0 is added to the table, and its expression is that of the index entry. Index i is then created with the index entry replaced by the corresponding generated column.
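The rewrite described above can be sketched as a small Python routine. This is a simplified model under stated assumptions, not PolarDB-X's implementation: `TableDef` and `create_index` are hypothetical names, an entry is treated as an expression whenever it is not an existing column name, and the `<index>$<n>` numbering is extrapolated from the single `i$0` column shown in the output.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TableDef:
    columns: List[str]
    generated: Dict[str, str] = field(default_factory=dict)      # generated column -> expression
    indexes: Dict[str, List[str]] = field(default_factory=dict)  # index name -> key columns


def create_index(table: TableDef, index_name: str, entries: List[str]) -> None:
    """Replace each expression entry with a VIRTUAL generated column, then index it."""
    key_columns = []
    seq = 0
    for entry in entries:
        if entry in table.columns or entry in table.generated:
            key_columns.append(entry)  # plain column: use as-is
        else:
            col = f"{index_name}${seq}"  # assumed naming pattern, e.g. i$0
            seq += 1
            table.generated[col] = entry
            key_columns.append(col)
    table.indexes[index_name] = key_columns


t7 = TableDef(columns=["a", "c"])
create_index(t7, "i", ["substr(`c`, 2)"])
print(t7.generated)  # {'i$0': 'substr(`c`, 2)'}
print(t7.indexes)    # {'i': ['i$0']}
```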

After the expression index is created, it can speed up queries such as the following SQL statement:

```sql
> EXPLAIN EXECUTE SELECT * FROM t7 WHERE substr(`c`, 2) = '11';
+------+-------------+-------+------------+------+---------------+------+---------+-------+------+----------+-------+
| id   | select_type | table | partitions | type | possible_keys | key  | key_len | ref   | rows | filtered | Extra |
+------+-------------+-------+------------+------+---------------+------+---------+-------+------+----------+-------+
| 1    | SIMPLE      | t7    | NULL       | ref  | i             | i    | 131     | const | 1    | 100      | NULL  |
+------+-------------+-------+------------+------+---------------+------+---------+-------+------+----------+-------+
```

A MySQL foreign key is a relational database feature for establishing relationships between tables. A foreign key defines a reference from columns in one table to columns in another, which lets you enforce data integrity and consistency. PolarDB-X 2.3 is compatible with the common uses of foreign keys in MySQL. You can therefore establish cross-table (and cross-database) relationships in a distributed database and get the same data consistency that foreign keys provide in standalone databases. However, checking and maintaining foreign key constraints on distributed partitioned tables is more complex than on standalone databases, and improper use of foreign keys can cause large performance overhead and a significant decline in system throughput. Foreign keys therefore remain a long-term experimental feature. It is recommended that you use them with caution and only after sufficient verification.
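To show what a constraint check involves, the Python sketch below models a delete on a parent row with one CASCADE and one RESTRICT foreign key in a chain of three hypothetical tables. It is a toy in-memory model, not PolarDB-X's implementation; it only distinguishes RESTRICT from CASCADE, and in a distributed table each child lookup may additionally touch other partitions or nodes, which is where the extra overhead comes from.

```python
class ForeignKeyError(Exception):
    """Raised when a RESTRICT constraint blocks a delete."""


# child table -> (parent table, ON DELETE action); hypothetical three-table chain.
FOREIGN_KEYS = {"b": ("a", "CASCADE"), "c": ("b", "RESTRICT")}


def plan_delete(tables: dict, table: str, key: int, plan: list) -> None:
    """Collect every row a delete would remove, children before parents."""
    for child, (parent, action) in FOREIGN_KEYS.items():
        if parent != table:
            continue
        referencing = [pk for pk, ref in tables[child].items() if ref == key]
        if referencing and action == "RESTRICT":
            raise ForeignKeyError(f"row {key} in {table} is referenced by {child}")
        for pk in referencing:
            plan_delete(tables, child, pk, plan)
    plan.append((table, key))


def delete_row(tables: dict, table: str, key: int) -> None:
    """Apply the delete only if the whole cascade passes every constraint."""
    plan: list = []
    plan_delete(tables, table, key, plan)
    for t, pk in plan:
        del tables[t][pk]


# Each table maps primary key -> referenced parent key (None for the root table).
tables = {"a": {1: None}, "b": {1: 1}, "c": {1: 1}}
try:
    delete_row(tables, "a", 1)
except ForeignKeyError as err:
    print("rejected:", err)  # c restricts the cascade, so nothing is deleted
```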

SET GLOBAL ENABLE_FOREIGN_KEY = TRUE;Foreign key syntax:

-- Create a foreign key

[CONSTRAINT [symbol]] FOREIGN KEY

[index_name](col_name, ...)

REFERENCES tbl_name (col_name,...)

[ON DELETE reference_option]

[ON UPDATE reference_option]

reference_option:

RESTRICT | CASCADE | SET NULL | NO ACTION | SET DEFAULT

-- Delete a foreign key

ALTER TABLE tbl_name DROP FOREIGN KEY CONSTRAINT_symbol;Example:

> CREATE TABLE a (

id INT PRIMARY KEY

);

> INSERT INTO a VALUES (1);

> CREATE TABLE b (

id INT PRIMARY KEY,

a_id INT,

FOREIGN KEY fk(`a_id`) REFERENCES a(`id`) ON DELETE CASCADE

);

> INSERT INTO b VALUES (1,1);

> CREATE TABLE c (

b_id INT,

FOREIGN KEY fk(`b_id`) REFERENCES b(`id`) ON DELETE RESTRICT

);

> INSERT INTO c VALUES (1);

# Delete the records of table A. The foreign key constraints of tables A, B, and C are checked in cascade.

> DELETE FROM a WHERE id = 1;

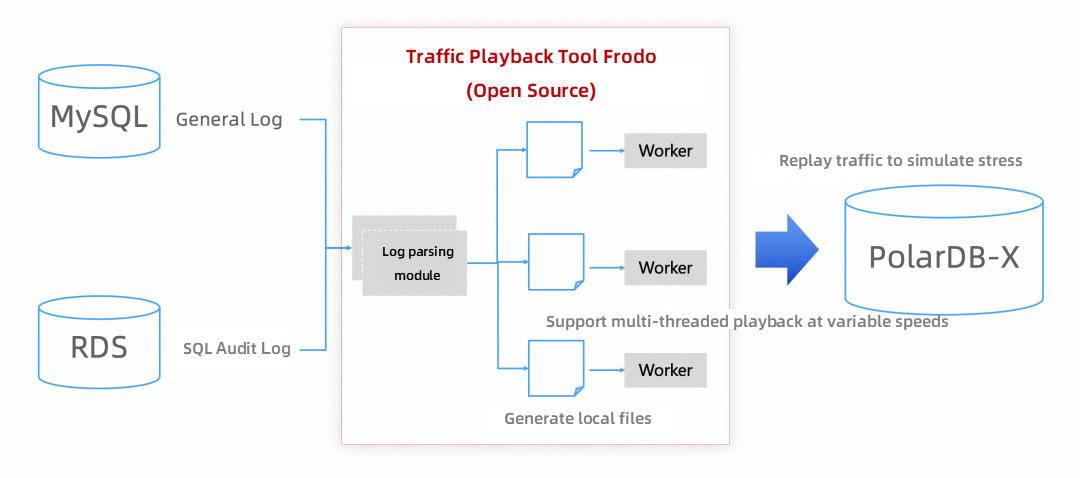

> ERROR 1451 (23000): Cannot delete or update a parent row: a foreign key constraint fails (`test`.`c`, CONSTRAINT `c_ibfk_1` FOREIGN KEY (`b_id`) REFERENCES `b` (`id`) ON DELETE RESTRICT)Frodo is an open-source PolarDB-X tool developed by the Alibaba cloud database team. It focuses on database traffic playback and is mainly used to solve business compatibility and performance evaluation during database delivery.

Open source address: https://github.com/polardb/polardbx-tools/tree/frodo-v1.0.0/frodo

Working principle:

Features:

1. SQL log collection. Frodo can collect SQL logs from open-source MySQL and from Alibaba Cloud RDS SQL audit, parse them into an internal data format, and store the data persistently.

2. SQL traffic playback. Frodo replays real business SQL statements against PolarDB-X. By using multiple threads, it can replay traffic at variable speeds to simulate peak traffic in stress tests.

Example:

Step 1: Collect general logs from a user-created MySQL database

java -jar mysqlsniffer.jar --capture-method=general_log --replay-to=file --port=3306 --username=root --password=xxx --concurrency=32 --time=60 --out=logs/out.jsonStep 2: Replay the traffic to PolarDB-X

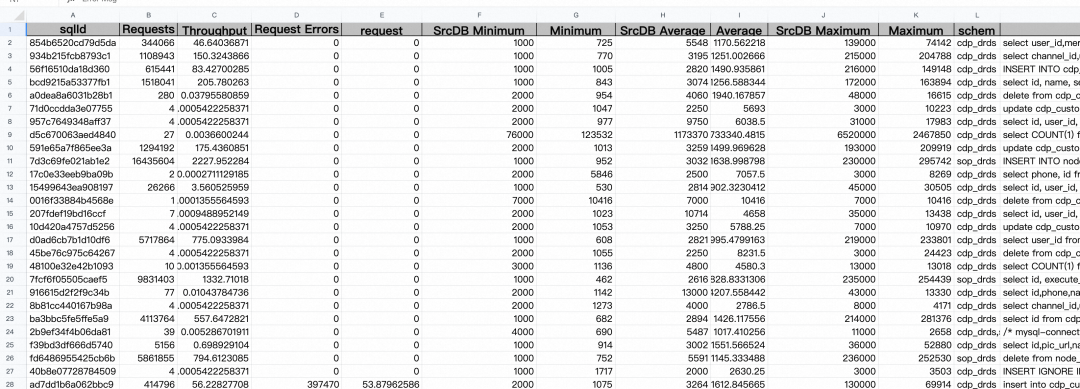

java -Xms=2G -Xmx=4G -jar frodo.jar --file=/root/out.json --source-db=mysql --replay-to=polarx --port=3306 --host=172.25.132.163 --username=root --password=123456 --concurrency=64 --time=1000 --task=task1 --schema-map=test:test1,test2 --log-level=info --rate-factor=1 --database=testAfter the traffic playback is completed, a data report for the SQL statement is generated, logging information such as SQL templates, success rate, and RT (Response Time).
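To give a feel for what such a report aggregates, here is a minimal Python sketch that groups replayed statements by SQL template and computes a success rate and average RT per template. It is a hypothetical illustration, not Frodo's code or output format: the function names, the record layout, and the literal-stripping rules are all assumptions.

```python
import re
from collections import defaultdict


def to_template(sql: str) -> str:
    """Replace string and numeric literals with '?' so similar statements group together."""
    sql = re.sub(r"'[^']*'", "?", sql)
    sql = re.sub(r"\b\d+(\.\d+)?\b", "?", sql)
    return re.sub(r"\s+", " ", sql).strip()


def summarize(records):
    """records: iterable of (sql, succeeded, rt_ms) -> per-template statistics."""
    groups = defaultdict(list)
    for sql, ok, rt_ms in records:
        groups[to_template(sql)].append((ok, rt_ms))
    report = {}
    for template, runs in groups.items():
        report[template] = {
            "count": len(runs),
            "success_rate": sum(ok for ok, _ in runs) / len(runs),
            "avg_rt_ms": sum(rt for _, rt in runs) / len(runs),
        }
    return report


records = [
    ("SELECT * FROM t1 WHERE id = 1", True, 2.0),
    ("SELECT * FROM t1 WHERE id = 42", False, 4.0),
    ("UPDATE t1 SET name = 'x' WHERE id = 7", True, 3.0),
]
for template, stats in summarize(records).items():
    print(template, stats)
```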

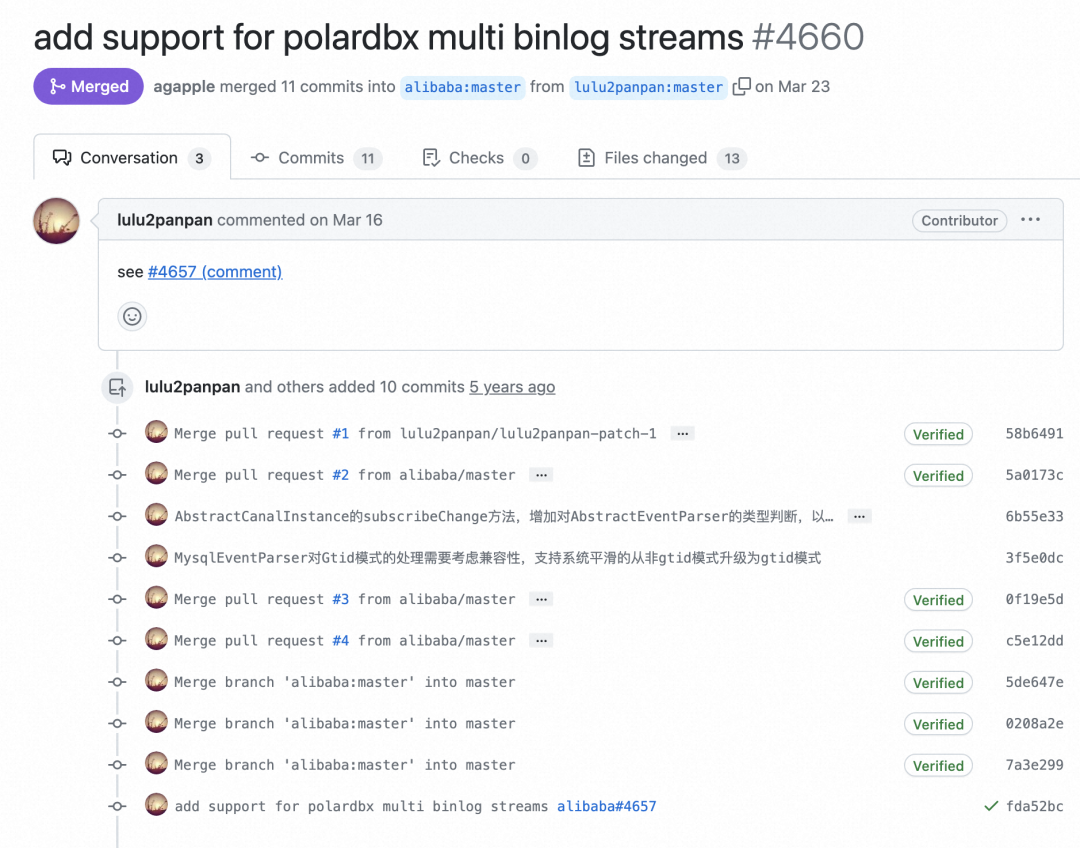

Canal is an open-source middleware developed by the Alibaba Cloud database team to synchronize data in real time by subscribing to binary logs. Based on the log parsing technology of the database, it can capture the incremental changes of the database and synchronize the change data to other systems to realize real-time data synchronization and subscription.

Canal 1.1.7 has been released recently, which supports PolarDB-X global single-stream binary logs and multiple-stream binary logs.

Reference: https://github.com/alibaba/canal/issues/4657

The preceding figure shows the two types of binary log consumption and subscription provided by PolarDB-X. These two types can coexist.

Canal open source address: https://github.com/alibaba/canal

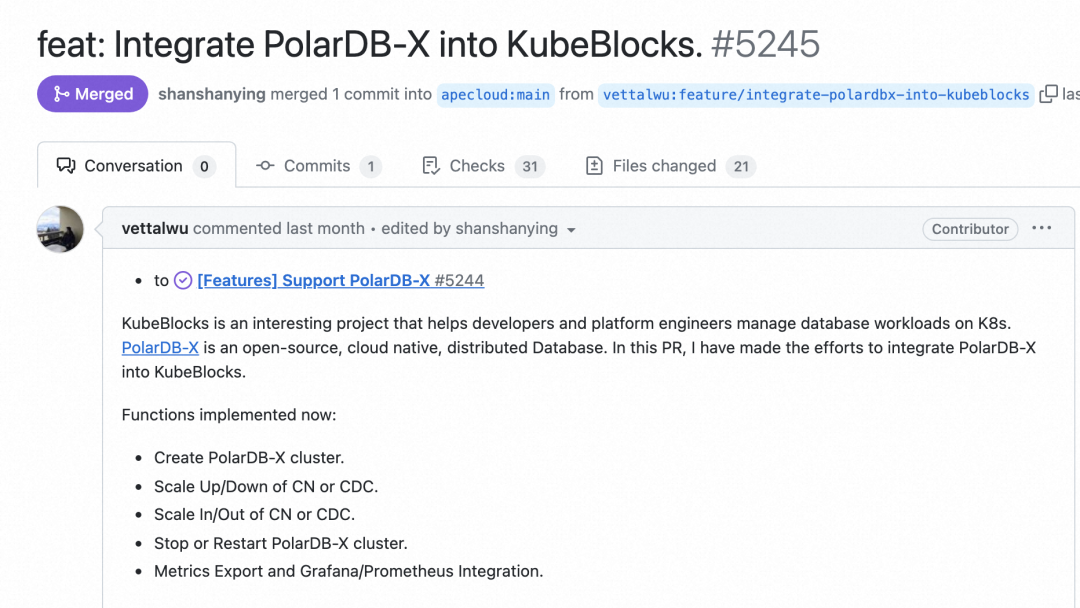

KubeBlocks is a Kubernetes-based open-source project that supports multi-engine integration and O&M. It helps users build containerized and declarative relational, NoSQL, stream computing, and vector databases in a BYOC (bring-your-own-cloud) manner. Designed for production, KubeBlocks provides a reliable, high-performance, observable, and cost-effective data infrastructure for most scenarios. The name KubeBlocks is inspired by Lego bricks, implying that you can assemble your data infrastructure on Kubernetes as easily as building with bricks. The upcoming KubeBlocks Version 0.7 will natively support PolarDB-X.

A quick installation of PolarDB-X based on KubeBlocks takes only three steps:

1. Create a cluster template

```shell
helm install polardbx ./deploy/polardbx
```

2. Create a PolarDB-X instance

Method 1:

```shell
kbcli cluster create pxc --cluster-definition polardbx
```

Method 2:

```shell
helm install polardbx-cluster ./deploy/polardbx-cluster
```

3. Perform port forwarding and log on to the database

```shell
kubectl port-forward svc/pxc-cn 3306:3306
mysql -h127.0.0.1 -upolardbx_root
```

CloudCanal is a data synchronization and migration tool that helps enterprises build high-quality data pipelines. It operates in real time and offers precise interconnection, stability, scalability, comprehensive features, hybrid deployment, and complex data transformation. CloudCanal enables users to reliably synchronize database data in a cloud-native environment. Compared with the open-source Canal, CloudCanal supports a wider range of data sources and provides full data migration and schema migration capabilities. The latest version of CloudCanal fully supports PolarDB-X as both the source and destination.

Reference: https://www.cloudcanalx.com/us/cc-doc/releaseNote/rn-cloudcanal-2-7-0-0#cloudcanal-2700
