By Hua Yan

Alibaba Cloud PolarDB for Xscale (PolarDB-X), a distributed edition of PolarDB, is a high-performance cloud-native distributed database designed and developed independently by Alibaba Cloud. It adopts a shared-nothing and storage-compute separation architecture, integrating centralized and distributed architectures to provide financial-level data availability, distributed scalability, hybrid load, low-cost storage, and maximum flexibility. Designed to be compatible with the MySQL open-source ecosystem, it offers high-throughput, large storage, low latency, easy scalability, and ultra-high availability database services for users in the cloud era.

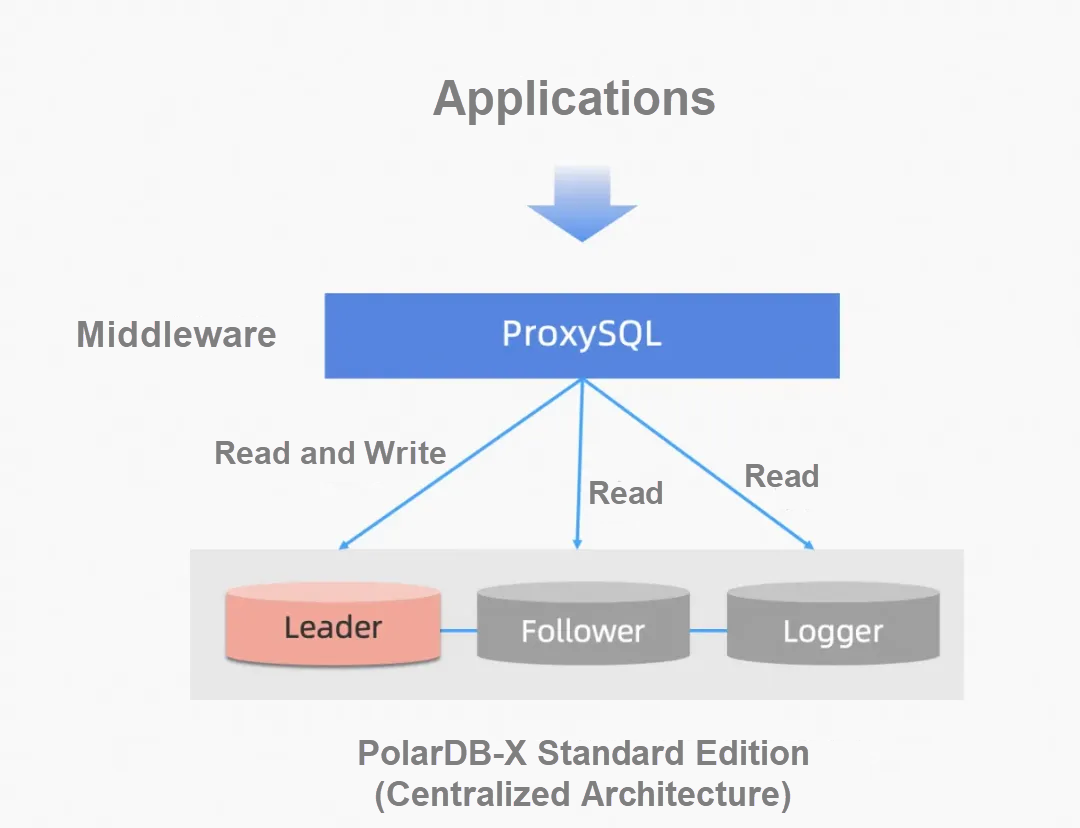

In October 2023, the open-source PolarDB-X officially released version 2.3.0, focusing on the PolarDB-X Standard Edition (centralized architecture) and providing independent services for PolarDB-X DNs. PolarDB-X v2.3.0 supports the multi-replica mode of the Paxos protocol and the Lizard distributed transaction engine, adopting a three-node architecture with one primary node, one secondary node, and one log node. It ensures strong data consistency (RPO=0) through synchronous replication of multiple replicas of the Paxos protocol and is 100% compatible with MySQL.

Due to the lack of corresponding open-source Proxy components in PolarDB-X Standard Edition, developers have been seeking simple and efficient solutions. Fortunately, ProxySQL, a mature MySQL middleware, can seamlessly integrate with the MySQL protocol to support PolarDB-X, providing high availability features like failover and dynamic routing, making it a reliable and easy-to-use proxy option.

This article describes how to quickly build and configure PolarDB-X Standard Edition with ProxySQL, and provides tests that verify the high-availability routing service.

For more information, see PolarDB-X Open Source | Three Replicas of MySQL Based on Paxos. PolarDB-X supports different forms of quick deployments to meet individual needs.

| Deployment Method | Description | Quick Installation Tool | Dependencies |
| --- | --- | --- | --- |
| RPM Package | Quick manual deployment with no dependencies on components | RPM download and installation | rpm |
| PXD | Self-developed quick deployment tool to configure quick deployment through YAML files | PXD installation | Python3 and Docker |
| K8S | Quick deployment tool based on Kubernetes operators | Kubernetes installation | Kubernetes and Docker |

1. Create a dependency view to enable ProxySQL to identify the metadata (Leader and Follower) of the PolarDB-X Standard Edition.

CREATE VIEW sys.gr_member_routing_candidate_status AS

SELECT IF(ROLE='Leader' OR ROLE='Follower', 'YES', 'NO' ) as viable_candidate,

IF(ROLE <>'Leader', 'YES', 'NO' ) as read_only,

IF (ROLE = 'Leader', 0, LAST_LOG_INDEX - LAST_APPLY_INDEX) as transactions_behind,

0 as 'transactions_to_cert'

FROM information_schema.ALISQL_CLUSTER_LOCAL;
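The column expressions of this view can be read as a small decision function. The following Python sketch mirrors them for illustration only: the function name `routing_candidate_status` is hypothetical, and the sample role string `Learner` merely stands in for any role value other than Leader or Follower.

```python
from typing import Dict, Union


def routing_candidate_status(
    role: str, last_log_index: int, last_apply_index: int
) -> Dict[str, Union[str, int]]:
    """Mirror the four column expressions of sys.gr_member_routing_candidate_status.

    Note: this comparison is case-sensitive, whereas MySQL string comparison
    depends on the collation in use.
    """
    is_leader = role == "Leader"
    return {
        # Only Leader and Follower nodes are eligible for routing.
        "viable_candidate": "YES" if role in ("Leader", "Follower") else "NO",
        # Every node except the Leader is reported as read-only.
        "read_only": "NO" if is_leader else "YES",
        # The Leader is never behind; other nodes report log entries not yet applied.
        "transactions_behind": 0 if is_leader else last_log_index - last_apply_index,
        # Constant column, as in the view definition.
        "transactions_to_cert": 0,
    }


print(routing_candidate_status("Leader", 25, 24))
print(routing_candidate_status("Follower", 25, 23))
print(routing_candidate_status("Learner", 25, 25))
```

ProxySQL reads these four columns through the monitoring account, which is why the account created next needs SELECT on sys.*.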

# Create a monitoring account for ProxySQL. ProxySQL depends on this account to run properly.

create user 'proxysql_monitor'@'%' identified with mysql_native_password by '123456';

GRANT SELECT on sys.* to 'proxysql_monitor'@'%';

2. Create a test account, which the following high-availability tests depend on.

create user 'admin2'@'%' identified with mysql_native_password by '123456';

GRANT all privileges on *.* to 'admin2'@'%';

3. Check whether the configuration takes effect.

# In this example, all three nodes of the PolarDB-X Standard Edition are deployed locally, and the listening ports are 3301/3302/3303.

mysql> select @@port;

+--------+

| @@port |

+--------+

| 3301 |

+--------+

1 row in set (0.00 sec)

mysql> select * from information_schema.ALISQL_CLUSTER_GLOBAL;

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| SERVER_ID | IP_PORT | MATCH_INDEX | NEXT_INDEX | ROLE | HAS_VOTED | FORCE_SYNC | ELECTION_WEIGHT | LEARNER_SOURCE | APPLIED_INDEX | PIPELINING | SEND_APPLIED |

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| 1 | 127.0.0.1:16800 | 1 | 0 | Leader | Yes | No | 5 | 0 | 0 | No | No |

| 2 | 127.0.0.1:16801 | 1 | 2 | Follower | Yes | No | 5 | 0 | 1 | Yes | No |

| 3 | 127.0.0.1:16802 | 1 | 2 | Follower | Yes | No | 5 | 0 | 1 | Yes | No |

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

3 rows in set (0.00 sec)

mysql> select * from sys.gr_member_routing_candidate_status ;

+------------------+-----------+---------------------+----------------------+

| viable_candidate | read_only | transactions_behind | transactions_to_cert |

+------------------+-----------+---------------------+----------------------+

| YES | NO | 0 | 0 |

+------------------+-----------+---------------------+----------------------+

1 row in set (0.00 sec)

mysql> select User,Host from mysql.user where User in ('admin2', 'proxysql_monitor');

+------------------+------+

| User | Host |

+------------------+------+

| admin2 | % |

| proxysql_monitor | % |

+------------------+------+

2 rows in set (0.01 sec)

For detailed installation tutorials, refer to the ProxySQL documentation (ProxySQL: Introduction).

Both RPM and yum installation methods are supported. However, we have so far tested and verified only ProxySQL v2.4.8 (v2.5.0 and the latest version are not currently supported unless you modify the ProxySQL code).

This article uses RPM installation, and RPM can be downloaded from the official website. The following code provides the relevant commands:

# Download rpm locally

wget https://github.com/sysown/proxysql/releases/download/v2.4.8/proxysql-2.4.8-1-centos7.x86_64.rpm

# Install it locally

sudo rpm -ivh proxysql-2.4.8-1-centos7.x86_64.rpm --nodeps

# Start ProxySQL

sudo systemctl start proxysql

# Check whether ProxySQL is started successfully

sudo systemctl status proxysql

● proxysql.service - High Performance Advanced Proxy for MySQL

Loaded: loaded (/etc/systemd/system/proxysql.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2024-04-29 00:30:46 CST; 6s ago

Process: 26825 ExecStart=/usr/bin/proxysql --idle-threads -c /etc/proxysql.cnf $PROXYSQL_OPTS (code=exited, status=0/SUCCESS)

Main PID: 26828 (proxysql)

Tasks: 25

Memory: 14.8M

CGroup: /system.slice/proxysql.service

├─26828 /usr/bin/proxysql --idle-threads -c /etc/proxysql.cnf

└─26829 /usr/bin/proxysql --idle-threads -c /etc/proxysql.cnf

# Check whether the ProxySQL port is enabled

# 6032 is the ProxySQL management port number

# 6033 is the ProxySQL external service port number

# Both the ProxySQL username and password are admin by default

# ProxySQL logs are /var/lib/proxysql/proxysql.log by default

# ProxySQL data directory is /var/lib/proxysql/ by default

sudo netstat -anlp | grep proxysql

tcp 0 0 0.0.0.0:6032 0.0.0.0:* LISTEN 26829/proxysql

tcp 0 0 0.0.0.0:6033 0.0.0.0:* LISTEN 26829/proxysql

The ProxySQL configuration for PolarDB-X Standard Edition + ProxySQL is basically the same as that for MySQL MGR + ProxySQL.

1. Log in to the ProxySQL management port with the admin account

mysql -uadmin -padmin -h 127.0.0.1 -P 6032 -A

2. Check to ensure that mysql_servers, mysql_group_replication_hostgroups, and mysql_query_rules are empty.

# Check

mysql> select * from mysql_group_replication_hostgroups;

Empty set (0.00 sec)

mysql> select * from mysql_servers;

Empty set (0.00 sec)

mysql> select * from mysql_query_rules;

Empty set (0.00 sec)

3. Update the monitoring account

UPDATE global_variables SET variable_value='proxysql_monitor' WHERE variable_name='mysql-monitor_username';

UPDATE global_variables SET variable_value='123456' WHERE variable_name='mysql-monitor_password';

# Check

mysql> select * from global_variables where variable_name in ('mysql-monitor_username', 'mysql-monitor_password');

+------------------------+------------------+

| variable_name | variable_value |

+------------------------+------------------+

| mysql-monitor_password | 123456 |

| mysql-monitor_username | proxysql_monitor |

+------------------------+------------------+

2 rows in set (0.00 sec)

4. Add a test account

INSERT INTO mysql_users(username,password,default_hostgroup) VALUES ('admin2','123456',10);

# Check

mysql> select * from mysql_users;

+----------+----------+--------+---------+-------------------+----------------+---------------+------------------------+--------------+---------+----------+-----------------+------------+---------+

| username | password | active | use_ssl | default_hostgroup | default_schema | schema_locked | transaction_persistent | fast_forward | backend | frontend | max_connections | attributes | comment |

+----------+----------+--------+---------+-------------------+----------------+---------------+------------------------+--------------+---------+----------+-----------------+------------+---------+

| admin2 | 123456 | 1 | 0 | 10 | NULL | 0 | 1 | 0 | 1 | 1 | 10000 | | |

+----------+----------+--------+---------+-------------------+----------------+---------------+------------------------+--------------+---------+----------+-----------------+------------+---------+

1 row in set (0.00 sec)

5. Set the read/write groups: writer group 10, backup writer group 20, reader group 30, and offline group 40. The primary node can also be used as a read node.

INSERT INTO mysql_group_replication_hostgroups (writer_hostgroup,backup_writer_hostgroup,reader_hostgroup,offline_hostgroup,active,writer_is_also_reader) VALUES(10,20,30,40,1,1);

# Check

mysql> select * from mysql_group_replication_hostgroups;

+------------------+-------------------------+------------------+-------------------+--------+-------------+-----------------------+-------------------------+---------+

| writer_hostgroup | backup_writer_hostgroup | reader_hostgroup | offline_hostgroup | active | max_writers | writer_is_also_reader | max_transactions_behind | comment |

+------------------+-------------------------+------------------+-------------------+--------+-------------+-----------------------+-------------------------+---------+

| 10 | 20 | 30 | 40 | 1 | 1 | 1 | 0 | NULL |

+------------------+-------------------------+------------------+-------------------+--------+-------------+-----------------------+-------------------------+---------+

1 row in set (0.00 sec)

6. Add the backend mysql_servers: assign the leader node to writer group 10 and the follower nodes to backup writer group 20. Note that port here is the listening port of each PolarDB-X Standard Edition node.

INSERT INTO mysql_servers(hostgroup_id,hostname,port) VALUES (10,'127.0.0.1',3301);

INSERT INTO mysql_servers(hostgroup_id,hostname,port) VALUES (20,'127.0.0.1',3302);

INSERT INTO mysql_servers(hostgroup_id,hostname,port) VALUES (20,'127.0.0.1',3303);

# Check

mysql> select * from mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 20 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 20 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

3 rows in set (0.00 sec)

7. Configure read/write splitting rules. SELECT ... FOR UPDATE is routed to the write database, and plain SELECT is routed to the read database.

INSERT INTO mysql_query_rules(active,match_pattern,destination_hostgroup,apply) VALUES(1,'^select.*for update$',10,1);

INSERT INTO mysql_query_rules(active,match_pattern,destination_hostgroup,apply) VALUES(1,'^select',30,1);
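How these two rules split traffic can be sketched in a few lines of Python. This is a minimal illustration, not ProxySQL code: it assumes rules are evaluated in rule order with the first match winning (apply=1), case-insensitive matching, and a fall-through to the default_hostgroup 10 configured for admin2. The name `route` is hypothetical, and the effect of transaction_persistent inside an open transaction is ignored.

```python
import re
from typing import List, Pattern, Tuple

# (pattern, destination_hostgroup) in rule order, copied from the two INSERT statements.
RULES: List[Tuple[Pattern[str], int]] = [
    (re.compile(r"^select.*for update$", re.IGNORECASE), 10),
    (re.compile(r"^select", re.IGNORECASE), 30),
]

# Assumption: statements that match no rule go to the user's default_hostgroup.
DEFAULT_HOSTGROUP = 10


def route(query: str) -> int:
    """Return the hostgroup chosen by the first matching rule."""
    for pattern, hostgroup in RULES:
        if pattern.search(query):
            return hostgroup
    return DEFAULT_HOSTGROUP


for sql in (
    "select * from d1.t1 for update",
    "select * from d1.t1",
    "insert into d1.t1 values(1,'hello')",
):
    print(route(sql), sql)
```

The order of the rules matters: if the generic `^select` rule came first, locking reads would be sent to the read group. Also note that in this sketch the `$` anchor means a statement with trailing characters after `for update` (for example a trailing space or semicolon) would fall through to the second rule.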

# Check

mysql> select * from mysql_query_rules;

+---------+--------+----------+------------+--------+-------------+------------+------------+--------+--------------+----------------------+----------------------+--------------+---------+-----------------+-----------------------+-----------+--------------------+---------------+-----------+---------+---------+-------+-------------------+----------------+------------------+-----------+--------+-------------+-----------+---------------------+-----+-------+------------+---------+

| rule_id | active | username | schemaname | flagIN | client_addr | proxy_addr | proxy_port | digest | match_digest | match_pattern | negate_match_pattern | re_modifiers | flagOUT | replace_pattern | destination_hostgroup | cache_ttl | cache_empty_result | cache_timeout | reconnect | timeout | retries | delay | next_query_flagIN | mirror_flagOUT | mirror_hostgroup | error_msg | OK_msg | sticky_conn | multiplex | gtid_from_hostgroup | log | apply | attributes | comment |

+---------+--------+----------+------------+--------+-------------+------------+------------+--------+--------------+----------------------+----------------------+--------------+---------+-----------------+-----------------------+-----------+--------------------+---------------+-----------+---------+---------+-------+-------------------+----------------+------------------+-----------+--------+-------------+-----------+---------------------+-----+-------+------------+---------+

| 1 | 1 | NULL | NULL | 0 | NULL | NULL | NULL | NULL | NULL | ^select.*for update$ | 0 | CASELESS | NULL | NULL | 10 | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | 1 | | NULL |

| 2 | 1 | NULL | NULL | 0 | NULL | NULL | NULL | NULL | NULL | ^select | 0 | CASELESS | NULL | NULL | 30 | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | NULL | 1 | | NULL |

+---------+--------+----------+------------+--------+-------------+------------+------------+--------+--------------+----------------------+----------------------+--------------+---------+-----------------+-----------------------+-----------+--------------------+---------------+-----------+---------+---------+-------+-------------------+----------------+------------------+-----------+--------+-------------+-----------+---------------------+-----+-------+------------+---------+

2 rows in set (0.00 sec)

8. Save the configuration to disk and load it into the runtime so that it takes effect

save mysql users to disk;

save mysql servers to disk;

save mysql query rules to disk;

save mysql variables to disk;

save admin variables to disk;

load mysql users to runtime;

load mysql servers to runtime;

load mysql query rules to runtime;

load mysql variables to runtime;

load admin variables to runtime;
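ProxySQL keeps the tables edited on the admin interface separate from both the on-disk copy and the runtime copy, which is why every changed module is saved and loaded explicitly. The sketch below only regenerates the statement list used above from a list of module names; the helper name `persist_and_activate` is hypothetical.

```python
from typing import List, Sequence

# Modules touched in steps 3 to 7 of this article.
MODULES = (
    "mysql users",
    "mysql servers",
    "mysql query rules",
    "mysql variables",
    "admin variables",
)


def persist_and_activate(modules: Sequence[str] = MODULES) -> List[str]:
    """Build the SAVE ... TO DISK and LOAD ... TO RUNTIME statements, saves first."""
    saves = [f"save {module} to disk;" for module in modules]
    loads = [f"load {module} to runtime;" for module in modules]
    return saves + loads


for statement in persist_and_activate():
    print(statement)
```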

# Check

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

Log in to the related accounts

# Log in to ProxySQL with the monitoring (admin) account

mysql -uadmin -padmin -h 127.0.0.1 -P 6032 -A

# Log in to ProxySQL with the test account

mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -A

# Use the monitoring account to log on

# Check the current server status. 127.0.0.1:3301 provides the database write service, while 127.0.0.1:3301, 127.0.0.1:3302, and 127.0.0.1:3303 provide the database read service

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)
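The layout above (the leader in groups 10 and 30, the followers in group 30 only) is consistent with a simple placement rule driven by the view columns and the hostgroup settings. The following sketch is a simplified model for illustration, not ProxySQL's actual implementation: `target_hostgroups` is a hypothetical name, and checks such as max_writers and max_transactions_behind are deliberately left out.

```python
from typing import List

# Hostgroup IDs configured in mysql_group_replication_hostgroups.
WRITER, BACKUP_WRITER, READER, OFFLINE = 10, 20, 30, 40


def target_hostgroups(
    viable_candidate: str,
    read_only: str,
    writer_is_also_reader: bool = True,
    reachable: bool = True,
) -> List[int]:
    """Simplified placement of one node based on its monitoring result."""
    # A node that cannot be checked, or is not a viable candidate, goes offline.
    if not reachable or viable_candidate != "YES":
        return [OFFLINE]
    # Read-only nodes (followers) serve reads only.
    if read_only == "YES":
        return [READER]
    # The writable node (leader) takes writes and, optionally, reads as well.
    groups = [WRITER]
    if writer_is_also_reader:
        groups.append(READER)
    return groups


print(target_hostgroups("YES", "NO"))   # leader
print(target_hostgroups("YES", "YES"))  # follower
print(target_hostgroups("YES", "NO", reachable=False))  # killed node
```

With a single writable node, the backup writer group 20 stays empty in this model, which matches the runtime tables shown in this article.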

# Use the test account to log on and run the following SQL

create database d1;

create table d1.t1(c1 int, c2 varchar(10));

insert into d1.t1 values(1,"hello");

begin;insert into d1.t1 values(2,"world");select * from d1.t1;commit;

select * from d1.t1;

select * from d1.t1 for update;

# Use the monitoring account to log on

# Check the actual routing rules

mysql> select hostgroup,digest_text from stats_mysql_query_digest;

+-----------+------------------------------------------+

| hostgroup | digest_text |

+-----------+------------------------------------------+

| 10 | select * from d1.t1 for update |

| 30 | select * from d1.t1 |

| 10 | insert into d1.t1 values(?,?) |

| 10 | commit |

| 10 | create table d1.t1(c1 int,c2 varchar(?)) |

| 10 | create database d1 |

| 10 | begin |

| 10 | select @@version_comment limit ? |

+-----------+------------------------------------------+

8 rows in set (0.01 sec)

# Run manual tests to view the actual routing destination. Read-only queries are routed to a randomly selected node among the three nodes in the read group, and non-read-only queries are executed only on the write database.

$mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e 'select @@port, c1, c2 from d1.t1;'

Warning: Using a password on the command line interface can be insecure.

+--------+------+-------+

| @@port | c1 | c2 |

+--------+------+-------+

| 3301 | 1 | hello |

| 3301 | 2 | world |

+--------+------+-------+

$mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e \

'select @@port, c1, c2 from d1.t1;'

Warning: Using a password on the command line interface can be insecure.

+--------+------+-------+

| @@port | c1 | c2 |

+--------+------+-------+

| 3303 | 1 | hello |

| 3303 | 2 | world |

+--------+------+-------+

$mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e \

'select @@port, c1, c2 from d1.t1;'

Warning: Using a password on the command line interface can be insecure.

+--------+------+-------+

| @@port | c1 | c2 |

+--------+------+-------+

| 3302 | 1 | hello |

| 3302 | 2 | world |

+--------+------+-------+

$mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e \

'select @@port, c1, c2 from d1.t1 for update;'

Warning: Using a password on the command line interface can be insecure.

+--------+------+-------+

| @@port | c1 | c2 |

+--------+------+-------+

| 3301 | 1 | hello |

| 3301 | 2 | world |

+--------+------+-------+

Manually kill the current leader node and observe the availability changes of the cluster.

# Use the monitoring account to log on

# Initially, the leader is the 127.0.0.1:3301 node. Before the leader is killed, 127.0.0.1:3301 is in writer group 10

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# Run a check SQL statement, and the request will be forwarded to the leader 3301

mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e 'begin;select @@port,User from mysql.user for update;commit;'

Warning: Using a password on the command line interface can be insecure.

+--------+------------------+

| @@port | User |

+--------+------------------+

| 3303 | admin2 |

| 3303 | proxysql_monitor |

| 3303 | mysql.infoschema |

| 3303 | mysql.session |

| 3303 | mysql.sys |

| 3303 | root |

+--------+------------------+

# After the leader is killed, the 127.0.0.1:3301 node is immediately moved to offline group 40 and its status then changes to SHUNNED, while the 127.0.0.1:3303 node is promoted to writer group 10

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 40 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

3 rows in set (0.00 sec)

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+---------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+---------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 40 | 127.0.0.1 | 3301 | 0 | SHUNNED | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+---------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)
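To make the failover easier to read, the two runtime_mysql_servers snapshots (before the kill and after the new leader is elected) can be compared node by node. The sketch below does this on rows copied from the outputs in this article; `placements` and `transitions` are hypothetical helper names, and each row is reduced to (hostgroup_id, port, status).

```python
from typing import Dict, List, Set, Tuple

Row = Tuple[int, int, str]  # (hostgroup_id, port, status)
Placement = Set[Tuple[int, str]]  # {(hostgroup_id, status), ...}


def placements(snapshot: List[Row]) -> Dict[int, Placement]:
    """Group a snapshot by port: which hostgroups a node is in, and with what status."""
    result: Dict[int, Placement] = {}
    for hostgroup, port, status in snapshot:
        result.setdefault(port, set()).add((hostgroup, status))
    return result


def transitions(before: List[Row], after: List[Row]) -> Dict[int, tuple]:
    """Return only the nodes whose placement changed, as port -> (old, new)."""
    old, new = placements(before), placements(after)
    changes: Dict[int, tuple] = {}
    for port in sorted(set(old) | set(new)):
        was, now = old.get(port, set()), new.get(port, set())
        if was != now:
            changes[port] = (sorted(was), sorted(now))
    return changes


BEFORE = [(10, 3301, "ONLINE"), (30, 3301, "ONLINE"), (30, 3302, "ONLINE"), (30, 3303, "ONLINE")]
AFTER = [(10, 3303, "ONLINE"), (30, 3302, "ONLINE"), (30, 3303, "ONLINE"), (40, 3301, "SHUNNED")]

for port, (was, now) in transitions(BEFORE, AFTER).items():
    print(port, was, "->", now)
```

Only 3301 and 3303 appear in the result: the killed leader leaves groups 10 and 30 for group 40, the new leader joins group 10, and 3302 keeps serving reads unchanged.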

# Run a check SQL statement, and the request will be forwarded to the new leader 3303

mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e 'begin;select @@port,User from mysql.user for update;commit;'

Warning: Using a password on the command line interface can be insecure.

+--------+------------------+

| @@port | User |

+--------+------------------+

| 3303 | admin2 |

| 3303 | proxysql_monitor |

| 3303 | mysql.infoschema |

| 3303 | mysql.session |

| 3303 | mysql.sys |

| 3303 | root |

+--------+------------------+

Manually restore the former leader node that has been killed and observe the status changes of the cluster.

# Use the monitoring account to log on

# 127.0.0.1:3301 is the former leader that was killed, and 127.0.0.1:3303 is the newly elected leader

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+---------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+---------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 40 | 127.0.0.1 | 3301 | 0 | SHUNNED | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+---------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# Run a check SQL statement, and the request will be forwarded to the leader 3303

mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e 'begin;select @@port,User from mysql.user for update;commit;'

Warning: Using a password on the command line interface can be insecure.

+--------+------------------+

| @@port | User |

+--------+------------------+

| 3303 | admin2 |

| 3303 | proxysql_monitor |

| 3303 | mysql.infoschema |

| 3303 | mysql.session |

| 3303 | mysql.sys |

| 3303 | root |

+--------+------------------+

# Restart the former leader node 127.0.0.1:3301. Once it is restored, the node moves to read-only group 30 and its status is updated to ONLINE.

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# The execution of write transactions can be correctly routed to the leader.

mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e 'begin;select @@port,User from mysql.user for update;commit;'

Warning: Using a password on the command line interface can be insecure.

+--------+------------------+

| @@port | User |

+--------+------------------+

| 3303 | admin2 |

| 3303 | proxysql_monitor |

| 3303 | mysql.infoschema |

| 3303 | mysql.session |

| 3303 | mysql.sys |

| 3303 | root |

+--------+------------------+

Manually switch the leader node online and observe the status changes of the cluster.

# Use the test account to log on and run the SQL to manually switch the leader.

# Before the leader switch, the 127.0.0.1:3303 node is the leader.

mysql> select * from information_schema.ALISQL_CLUSTER_GLOBAL;

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| SERVER_ID | IP_PORT | MATCH_INDEX | NEXT_INDEX | ROLE | HAS_VOTED | FORCE_SYNC | ELECTION_WEIGHT | LEARNER_SOURCE | APPLIED_INDEX | PIPELINING | SEND_APPLIED |

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| 1 | 127.0.0.1:16800 | 24 | 25 | Follower | No | No | 5 | 0 | 24 | Yes | No |

| 2 | 127.0.0.1:16801 | 24 | 25 | Follower | Yes | No | 5 | 0 | 24 | Yes | No |

| 3 | 127.0.0.1:16802 | 24 | 0 | Leader | Yes | No | 5 | 0 | 23 | No | No |

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

3 rows in set (0.00 sec)

mysql> call dbms_consensus.change_leader("127.0.0.1:16801");

Query OK, 0 rows affected (0.00 sec)

mysql> select * from information_schema.ALISQL_CLUSTER_GLOBAL;

+-----------+---------+-------------+------------+------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| SERVER_ID | IP_PORT | MATCH_INDEX | NEXT_INDEX | ROLE | HAS_VOTED | FORCE_SYNC | ELECTION_WEIGHT | LEARNER_SOURCE | APPLIED_INDEX | PIPELINING | SEND_APPLIED |

+-----------+---------+-------------+------------+------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| 0 | | 0 | 0 | | | | 0 | 0 | 0 | | |

+-----------+---------+-------------+------------+------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

1 row in set (0.00 sec)

# The leader has been manually switched to the 127.0.0.1:3302 node.

mysql> select * from information_schema.ALISQL_CLUSTER_GLOBAL;

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| SERVER_ID | IP_PORT | MATCH_INDEX | NEXT_INDEX | ROLE | HAS_VOTED | FORCE_SYNC | ELECTION_WEIGHT | LEARNER_SOURCE | APPLIED_INDEX | PIPELINING | SEND_APPLIED |

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

| 1 | 127.0.0.1:16800 | 25 | 26 | Follower | Yes | No | 5 | 0 | 25 | Yes | No |

| 2 | 127.0.0.1:16801 | 25 | 0 | Leader | Yes | No | 5 | 0 | 24 | No | No |

| 3 | 127.0.0.1:16802 | 25 | 26 | Follower | Yes | No | 5 | 0 | 25 | Yes | No |

+-----------+-----------------+-------------+------------+----------+-----------+------------+-----------------+----------------+---------------+------------+--------------+

3 rows in set (0.01 sec)
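Note that dbms_consensus.change_leader takes the address shown in the IP_PORT column (16800 to 16802 in this example), not the MySQL listening port that ProxySQL uses (3301 to 3303). The sketch below builds the statement from a MySQL port. The mapping is inferred from the outputs in this article and applies only to this local deployment, and `change_leader_sql` is a hypothetical helper name.

```python
from typing import Dict

# Assumption: mapping inferred from this article's outputs
# (MySQL listening port -> IP_PORT value in ALISQL_CLUSTER_GLOBAL).
CONSENSUS_ADDR_BY_MYSQL_PORT: Dict[int, str] = {
    3301: "127.0.0.1:16800",
    3302: "127.0.0.1:16801",
    3303: "127.0.0.1:16802",
}


def change_leader_sql(mysql_port: int) -> str:
    """Build the leader-switch statement for the node listening on mysql_port."""
    try:
        addr = CONSENSUS_ADDR_BY_MYSQL_PORT[mysql_port]
    except KeyError:
        raise ValueError(f"unknown MySQL port: {mysql_port}") from None
    return f'call dbms_consensus.change_leader("{addr}");'


print(change_leader_sql(3302))
```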

# Use the monitoring account to log on

# Before the leader switch, the 127.0.0.1:3303 node is the leader.

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# After the leader is manually switched to 127.0.0.1:3302, ProxySQL responds promptly and moves 127.0.0.1:3302 into write group 10

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 40 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# After a brief stay in offline group 40, 127.0.0.1:3303 moves into read group 30

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# Write transactions are correctly routed to the new leader

mysql -uadmin2 -p'123456' -h127.0.0.1 -P6033 -e 'begin;select @@port,User from mysql.user for update;commit;'

Warning: Using a password on the command line interface can be insecure.

+--------+------------------+

| @@port | User |

+--------+------------------+

| 3302 | admin2 |

| 3302 | proxysql_monitor |

| 3302 | mysql.infoschema |

| 3302 | mysql.session |

| 3302 | mysql.sys |

| 3302 | root |

+--------+------------------+

# Use the monitoring account to log on

# Status before the secondary node fails. Here, 127.0.0.1:3301 is a secondary node

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# Kill the 127.0.0.1:3301 node; ProxySQL soon moves it to offline group 40

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 40 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.00 sec)

# Recover the 127.0.0.1:3301 node; ProxySQL soon moves it back to read group 30

mysql> select * from runtime_mysql_servers;

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| hostgroup_id | hostname | port | gtid_port | status | weight | compression | max_connections | max_replication_lag | use_ssl | max_latency_ms | comment |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

| 10 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3301 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3302 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

| 30 | 127.0.0.1 | 3303 | 0 | ONLINE | 1 | 0 | 1000 | 0 | 0 | 0 | |

+--------------+-----------+------+-----------+--------+--------+-------------+-----------------+---------------------+---------+----------------+---------+

4 rows in set (0.01 sec)

By following the preceding steps, we have configured ProxySQL as a proxy for PolarDB-X Standard Edition. The verification tests show that ProxySQL follows a leader switch by moving the new leader into the write group, and that it moves a failed secondary node to the offline group and returns it to the read group after recovery. This setup improves the maintainability and flexibility of the database system and gives developers a single, stable access point. With PolarDB-X Standard Edition behind ProxySQL, developers can build cloud-native applications that handle high-concurrency access or apply complex data routing policies.
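The hostgroup checks done by eye in the verification tests can also be scripted. The following is a minimal sketch, not part of the original setup. It assumes the hostgroup IDs that appear in the `runtime_mysql_servers` output (10 for the write group, 30 for the read group, 40 for the offline group) and takes rows as plain tuples, so fetching them from the ProxySQL admin interface is left to whatever MySQL client you use. The function names `summarize` and `is_healthy` are hypothetical.

```python
from collections import defaultdict

# Hostgroup IDs as they appear in the runtime_mysql_servers output
# (assumed mapping: 10 = write, 30 = read, 40 = offline).
WRITER_HG, READER_HG, OFFLINE_HG = 10, 30, 40


def summarize(rows):
    """Group (hostgroup_id, hostname, port) rows by role."""
    groups = defaultdict(list)
    for hostgroup_id, hostname, port in rows:
        groups[hostgroup_id].append(f"{hostname}:{port}")
    return {
        "writer": sorted(groups[WRITER_HG]),
        "readers": sorted(groups[READER_HG]),
        "offline": sorted(groups[OFFLINE_HG]),
    }


def is_healthy(rows):
    """Return True when exactly one writer exists and no node is offline."""
    summary = summarize(rows)
    return len(summary["writer"]) == 1 and not summary["offline"]


# Rows copied from the snapshot taken right after killing 127.0.0.1:3301.
after_kill = [
    (10, "127.0.0.1", 3302),
    (30, "127.0.0.1", 3302),
    (30, "127.0.0.1", 3303),
    (40, "127.0.0.1", 3301),
]

print(summarize(after_kill))
print(is_healthy(after_kill))
```

Running the same check on the snapshot taken after 127.0.0.1:3301 recovers should report one writer, three readers, and no offline nodes.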
