By Ke Yu

As the amount of data on user tables increases, the execution time of some SQL statements becomes increasingly longer. For example, for some SQL queries that require a full table scan, the data scan process takes a certain amount of time. The larger the table size, the longer the scan time. In addition, some DDL operations to create secondary indexes and rebuild tables will scan the whole table and perform sort operations. The larger the table size, the longer the scan and sort time.

To improve SQL query performance, MySQL Community Edition introduced the InnoDB parallel scan feature in version 8.0.14, which can control the number of threads for the parallel scan of clustered indexes through innodb_parallel_read_threads variables. In version 8.0.27, parallel index creation is supported and the number of parallel threads for creating secondary indexes can be controlled through innodb_ddl_threads to accelerate the index creation process. Parallel processing in MySQL is currently limited to the InnoDB layer for full table operations.

Based on the 8.0.37 code, this article provides some basic introduction and principle analysis of the parallel technologies at the InnoDB layer.

This parameter controls the number of threads for the parallel scan of primary key indexes. Currently, only a parallel scan of primary key indexes is supported, and that of secondary indexes is not supported. If you set this parameter to a value greater than 1, the parallel scan is enabled. The maximum value is 256, which is also the maximum value of the sum of parallel threads for all user connections. When the value reaches the limit, the user connection falls back to a single-threaded scan.

This parameter specifies the number of threads for parallel sort during secondary index creation, as well as the number of threads for parallel sort and parallel construction of the B+ tree during table rebuilds.

This parameter represents the total size of the sort buffer used during DDL operations. Memory sort is done by parallel scan threads, and the size of the sort buffer allocated to each parallel thread is innodb_ddl_buffer_size/innodb_parallel_read_threads. Therefore, when innodb_parallel_read_threads is increased, innodb_ddl_buffer_size also needs to be increased.

There are three involving parameters, which are summarized in the following table.

| Parameter | Description | Dynamic modification | Scope | Default value | Minimum value | Maximum value |

|---|---|---|---|---|---|---|

| innodb_parallel_read_threads | Number of threads for parallel scans of primary key indexes | Supported | Connection level | 4 | 1 | 256 |

| innodb_ddl_threads | Number of parallel threads during DDL operations | Supported | Connection level | 4 | 1 | 64 |

| innodb_ddl_buffer_size | Size of the sort buffer used by DDL operations | Supported | Connection level | 1048576 | 65536 | 4294967295 |

• SELECT COUNT(*) FROM table1;

The SELECT COUNT(*) statement supports parallel scans of primary key indexes but does not support scans of secondary indexes.

• CHECK TABLE table1;

During the execution of the CHECK TABLE statement, the primary key index is scanned twice. When the primary key index is scanned for the second time, the parallel scan is supported.

• CREATE INDEX index1 ON table1 (col1); ALTER TABLE table1 ADD INDEX index1 (col1);

When you execute the CREATE INDEX statement, parallel operations are supported during the scan and sort of primary key indexes. However, they are not supported during the B+ tree construction stage. Virtual column indexes, full-text indexes, and sparse indexes are not supported.

• ALTER TABLE table1 ENGINE=INNODB; OPTIMIZE TABLE table1; (hereafter referred to as REBUILD TABLE. optimize table = rebuild table + analyze table. At the REBUILD TABLE stage, parallel operations are supported.)

When the table is rebuilt, parallel operations are supported during the sorting stage and the construction of secondary indexes. During the scan of primary key indexes, parallel operations are not supported.

The following table summarizes the parallel support for the preceding SQL statements. Some SQL statements that require a full table scan of primary key indexes and DDL statements that create secondary indexes (CREATE INDEX) support parallel operations. Note that these SQL statements do not support parallel operations at all stages.

| SQL Statements Supporting Parallel Operations | Parallel Scan | Parallel Sort | Parallel Construction of B+ Tree Indexes |

|---|---|---|---|

| SELECT COUNT(*) FROM table1; | Supported | NULL | NULL |

| CHECK TABLE table1; | Supported | NULL | NULL |

| CREATE INDEX index1 ON table1 (col1); ALTER TABLE table1 ADD INDEX index1 (col1); |

Supported | Supported | Not supported |

| ALTER TABLE table1 ENGINE=INNODB; OPTIMIZE TABLE table1; |

Not supported | Supported | Supported |

MySQL's parallel query is completed at the InnoDB layer, which is actually a parallel scan process of the B+ tree.

The InnoDB layer provides an index scan interface row_scan_index_for_mysql for a full record scan of indexes. The SELECT COUNT(*) and CHECK TABLE statements share this interface for parallel scan of indexes.

row_scan_index_for_mysql // Full record scan of indexes

|-> row_mysql_parallel_select_count_star // Parallel count(*)

| |-> Parallel_reader reader; // Create a reader

| |-> reader.add_scan(count_callback); // Set the callback function for the COUNT(*) statement to perform pre-sharding.

| |-> reader.run // Start parallel scan

|

|-> parallel_check_table // Parallel CHECK TABLE

| |-> Parallel_reader reader; // Create a reader

| |-> reader.add_scan(check_callback); // Set the callback function for the CHECK TABLE statement to perform pre-sharding.

| |-> reader.run // Start parallel scanAt the stage of scanning primary key indexes for creating secondary indexes, a parallel scan is also used. The implementation is the same except for a different scan entry.

COUNT(*)

First, COUNT(*) is used as an example to describe how to perform a single-threaded scan.

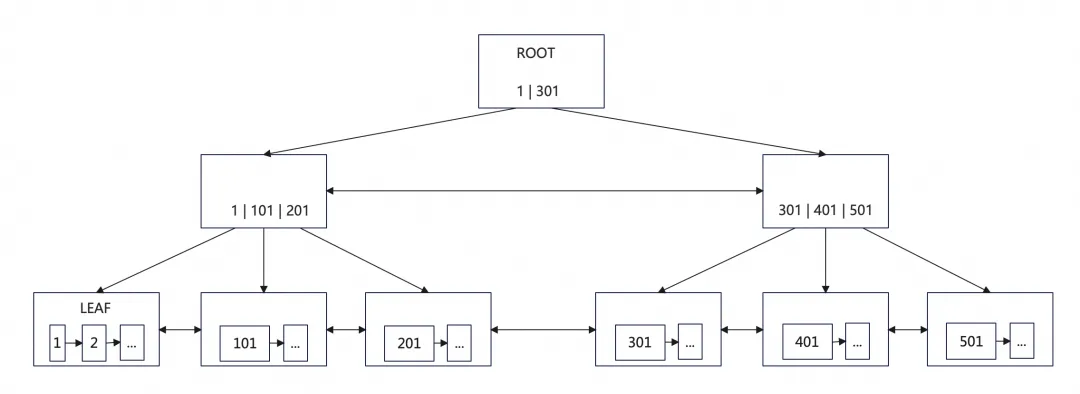

How does a single thread scan a B+ tree? Let's briefly review the structure of the B+ tree. The above figure shows a 3-layer B+ tree. The first layer is the root node page, and the third layer is the leaf node. Its characteristics are as follows:

Before parallel queries, the basic execution process of a single-threaded SELECT COUNT(*) full table at the InnoDB layer is as follows:

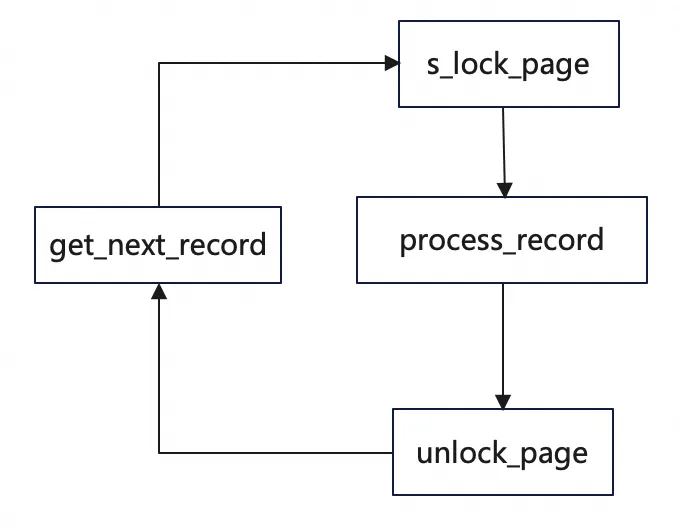

In the scanning process described above, the row_search_mvcc function completes the minimum record positioning and acquires the next record. During the scanning process, only a single LEAF PAGE S lock is held. Each time a record is scanned within the page, the page will be locked and unlocked:

After one page is scanned, the next page will be scanned. The preceding process is repeated until the last record.

InnoDB provides the parallel scan feature to accelerate that process. If you need to perform a full record scan on the B+ tree, you can use the parallel scan feature.

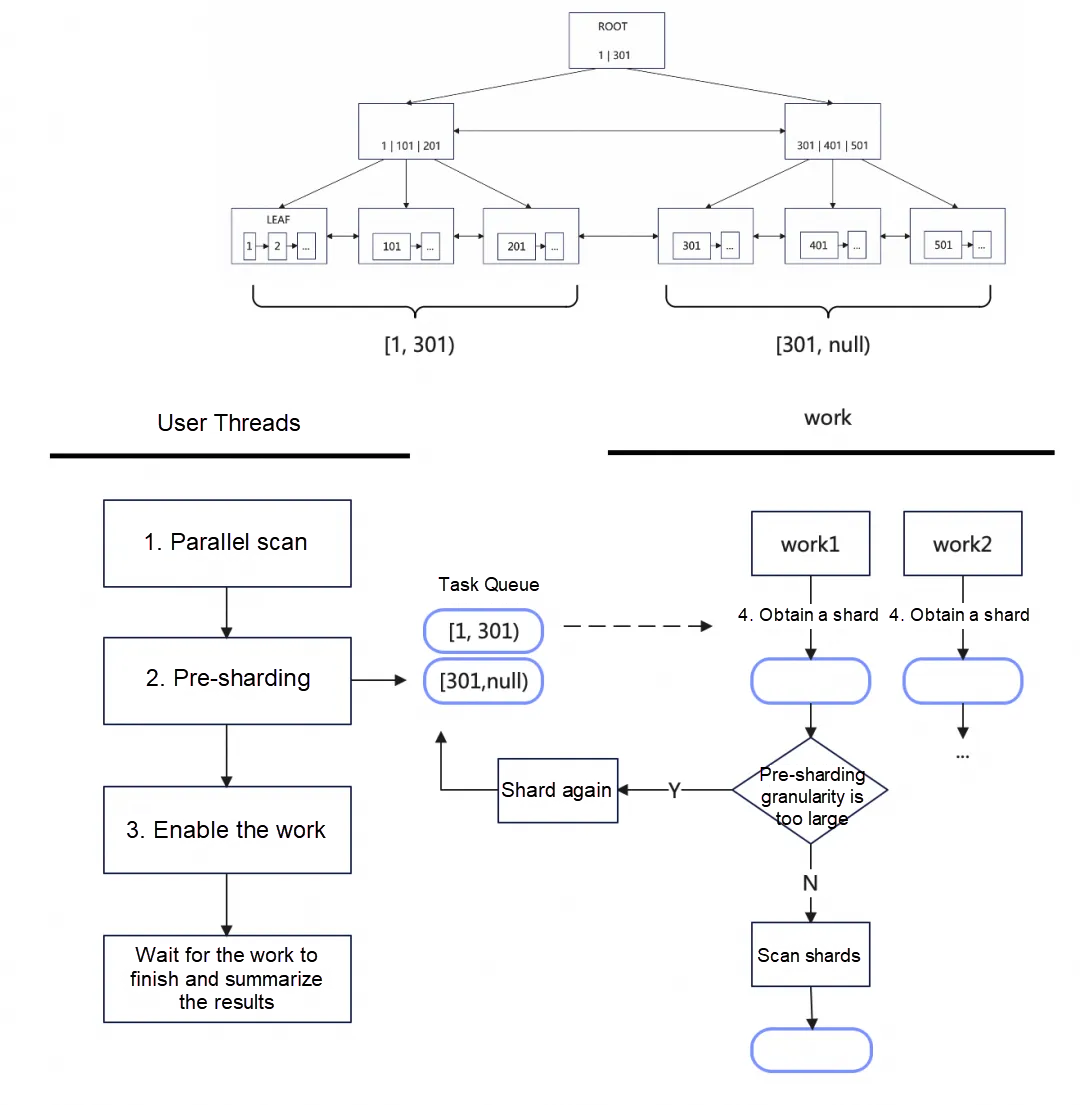

A B+ tree is composed of multiple subtrees, and the parallel scan is performed by dividing the B+ tree into multiple subtrees. The scanning of each subtree is a parallel task that is handled by multiple threads. The specific process is as follows:

COUNT(*) statement and then enters the parallel scan logic of the InnoDB layer.Each shard (Range) represents a set of primary key records with consecutive logic. It consists of two Iters forming a left-closed and right-open interval [start, end).

struct RANGE {

Iter start;

Iter end;

}Each Iter marks the location of a record.

struct Iter {

...

const rec_t *m_rec{}; // The record that marks the boundary of the shard

btr_pcur_t *m_pcur{}; // The B+ tree cursor that marks the page number of the record

...

}The key to parallel scan lies in how to perform sharding and how to lock the B+ tree during the sharding process. It is necessary to ensure that the shards are as evenly distributed as possible and that the shard boundaries are continuous and non-overlapping.

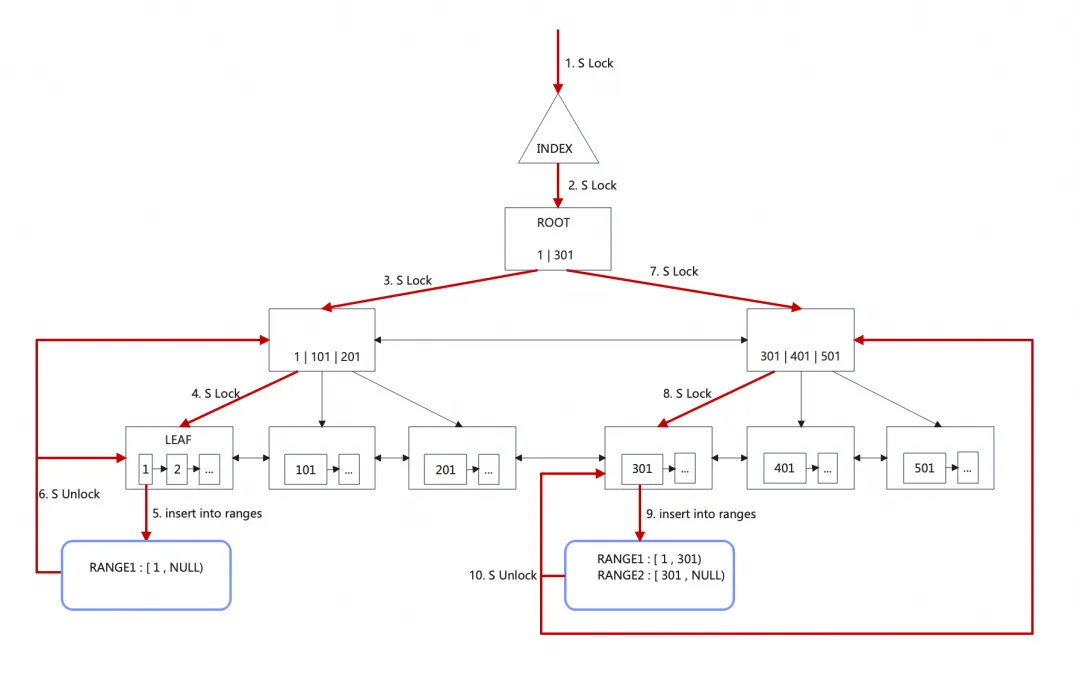

The sharding strategy of the user thread is to pre-shard based on the number of subtrees of the root node. For example, if the root node contains N subtrees (N records), it is divided into N shards. The sharding process is to locate the leaf node row records based on the node pointer of ROOT. The pre-sharding process is as follows:

1. INDEX S lock;

2. ROOT PAGE S lock. The whole sharding process holds the INDEX S lock and ROOT S lock to ensure that the ROOT

3. After the records of ROOT PAGE are traversed, release the ROOT S lock and INDEX S lock to complete the pre-sharding.

Assuming that the B+ tree is relatively balanced, pre-sharding can divide the B+ tree more evenly.

Why does the work thread need to perform additional sharding? How does the work thread determine that the sharding granularity is large enough to be divided again?

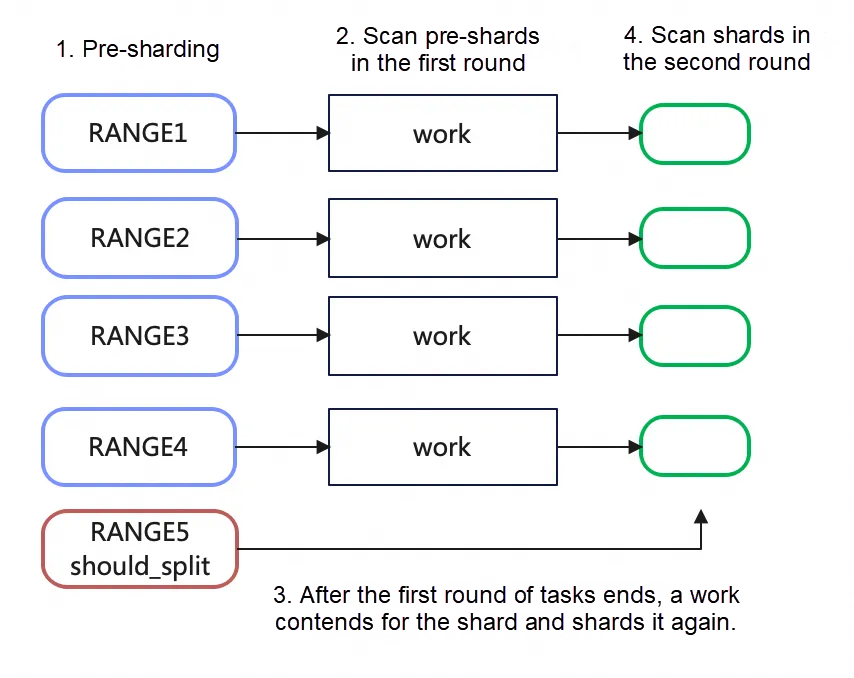

The initial design of parallel scan has the issue of large sharding granularity, which would lead to insufficient utilization of parallel threads for very large trees. Assume that there are four parallel scan threads and a B+ tree with five subtrees, the user thread divides it into five shards. Then, the first four subtrees can be scanned in parallel, and one thread needs to scan two subtrees. Assuming that it takes 1 minute to scan each subtree, the total parallel scan time would be 2 minutes.

The solution is to divide the B+ tree into subtrees with smaller granularity to improve the utilization of parallel threads. The user thread performs pre-sharding, and the work thread will divide some shards again. This way, the total scanning time in the above example can be reduced to 1 minute and 15 seconds (5min/4).

The optimized sharding strategy is:

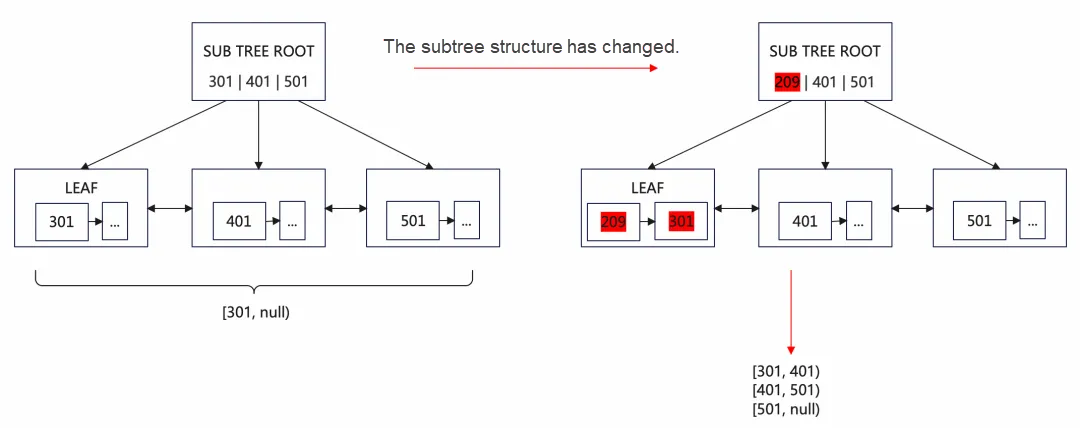

When sharding again, the sharding process and locking logic are basically the same as those of the ROOT PAGE. However, the subtree structure may change because the INDEX and ROOT PAGE S locks are released during the two sharding processes. Therefore, it cannot be divided directly by the number of subtrees of a shard but needs to be divided by the RANGE of the shard.

The following content describes the details:

After secondary sharding, the tree is divided into two layers. The root node is divided once, and the subtree of the root node is divided again. The B+ tree is divided twice at most since it is short and wide without a very high level. The large table can be divided into many subtrees after being divided twice, which can improve the utilization of parallel threads.

The scanning process of each work thread on the subtree shard [start, end) is basically the same as the process of scanning the entire B+ tree with a single thread:

1. Restore the leaf node page corresponding to the cursor of start.

2. Scan the records within the page, check the MVCC visibility for each record, and construct the old version of the data.

3. Reach the maximum record of the page, obtain the next page, and add an S lock to the next page.

4. Release the S lock of the page and repeat the preceding scanning process for the next page until the end of the shard is scanned.

Each thread only holds a single LEAF PAGE S lock during the scanning process. After the S lock is added to the page, it processes all records within the page at one time, and then unlocks the page to process the next page. This is more efficient than locking and unlocking each record within the page during a single-threaded scan.

parallel_work

|--> process one row

| |--> callback

| | |--> counter++Parallel scan has designed a set of common interfaces. During parallel scan, the row records are processed through callback functions. Each work thread calls the callback function once every time a row of records is scanned.

For the COUNT(*) statement, the callback function increments 1 to the thread-level counter. After the parallel scan ends, all counters are accumulated to obtain the result.

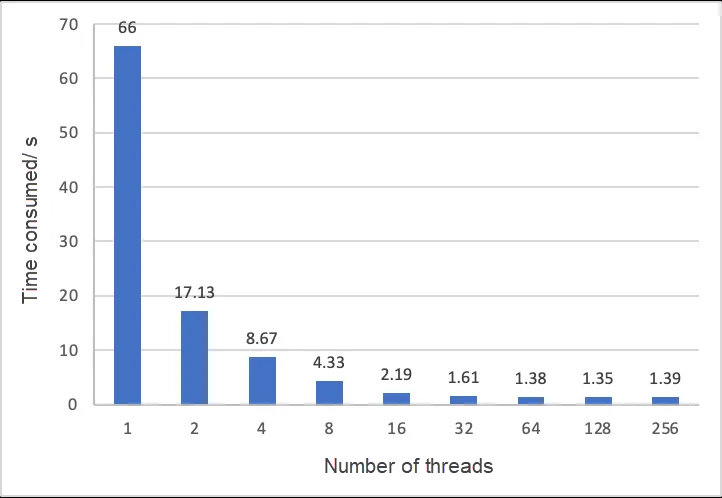

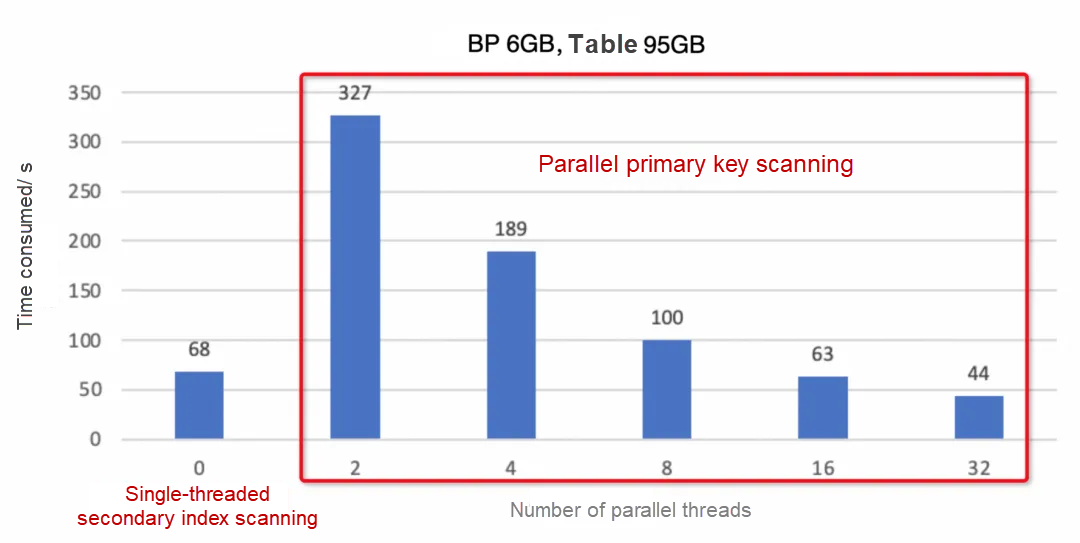

Let's take a look at the effect of COUNT(*) parallel scan. We test it through a Sysbench table containing 400 million rows of records with the following configuration:

• The parallel effect is evident. When the number of threads increases between 2 and 32, each time the number of threads doubles, the time consumed decreases by half, showing a linear downward trend.

• However, under high concurrency, there is no linear trend, and issues with the utilization of concurrent threads still exist.

• When the number of threads increases from 1 to 2, the time becomes 1/4 of the original. The reason is that when the number of threads is 1, it does not use a single worker thread for scanning but reverts to the original serial scanning logic of the user thread. After each row is scanned, the row_search_mvcc function (1600 rows) is used to locate the next row. The row_search_mvcc function is less efficient in this scenario, as many variable definitions and if conditions do not need to be executed, and the locking efficiency is low. The parallel work thread scans and locates the next line of records with only 100 lines of code and has higher locking efficiency, making it more efficient than row_search_mvcc.

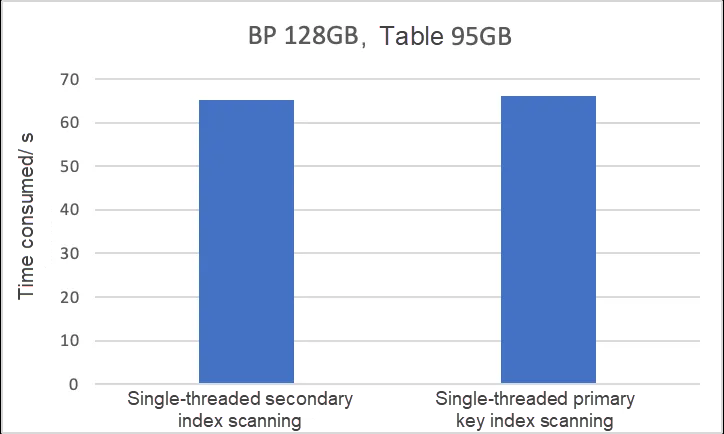

In official versions 8.0.14 to 8.0.36, the COUNT(*) statement supports parallel scans of primary key indexes instead of parallel scans of secondary indexes or single-threaded scans. Even if the indexes selected by the optimizer are secondary indexes, InnoDB forces primary key indexes to perform parallel scans. This results in a performance fallback: The time required to scan a primary key index by using multiple threads may be longer than that required to scan a secondary index by using a single thread.

Why is there a performance fallback? The time complexity of single-threaded primary key scanning and single-threaded secondary index scanning is o(n). In a pure memory scenario, only the COUNT++ operation is performed on each row of data, and no performance fallback occurs. The following table describes the time complexity.

However, when the buffer pool is small, the time is mainly spent on reading data pages into the buffer pool. Because the primary key index occupies much more disk space than the secondary index, the I/O times for scanning the primary key are much higher than that for scanning the secondary index, so multi-threaded scanning of the primary key index is not as fast as single-threaded scanning of the secondary index. The larger the table's primary key index is, the more serious the performance impact will be.

The preceding table shows the test results of a 95GB Sysbench table with a buffer pool configuration of 6GB. Among these results, parallel scanning with 16 threads on the primary key index outperforms single-threaded scanning of the secondary index. The larger the table's primary key index is, the more serious the performance impact will be.

AliSQL 8.0.25 discovered and fixed this issue. If the specified innodb_parallel_read_threads is 0, the index selected by the optimizer is used for scanning.

This issue is officially fixed in 8.0.37, where the primary key index is no longer forced to execute COUNT(*) statements. When the optimizer selects a secondary index, a single-threaded scan is performed; when the optimizer selects a primary key index, a parallel scan is performed.

release note: "MySQL no longer ignores the Optimizer hint to use a secondary index scan, which instead forced a clustered (parallel) index scan.".

This section describes how to execute the CHECK TABLE statement at the InnoDB layer. When the CHECK TABLE statement is executed at the InnoDB layer, it traverses each index of the table and performs the following checks on each index in turn:

1. The first index scan verifies the correctness of the B+ tree structure, scanning layer by layer from the root layer to the leaf layer.

• Starting from the leftmost node of the current level, traverse to the rightmost node in sequence.

• Check page consistency: FLAG checks, PAGE_MAX_TRX_ID checks, PAGE directory, and RECORD verification. It will traverse from the minimum record to the maximum record within the page.

• Check the direction of the node pointer.

• Check the direction of the parent node.

• Check the order of records between adjacent pages: the maximum record of the node is smaller than the minimum record of its right node.

• …

2. The second index scan is a row-by-row record scan, traversing from the minimum record in the B+ tree to the maximum record, and comparing the size of adjacent records in turn to see if they meet requirements.

In this example, the first index scan is single-threaded. During the second scan, if the index is a primary key index, the parallel scan is supported. For the secondary index, the single-threaded scan is performed.

The implementation of the CHECK TABLE parallel scan is the same as that of the SELECT COUNT(*) statement. The difference lies in the callback function.

COUNT*(*): The work thread counts each subtree, and the counts are aggregated at the end.

CHECK TABLE: The work thread compares the order of leaf node records within each subtree. There is no check for the order between subtrees because the order between pages is checked during the first scan.

When the CHECK TABLE work thread scans the subtree, a callback function is executed every time a row of primary key records is scanned. The logic of the callback function is to compare the order of the current row with the previous row.

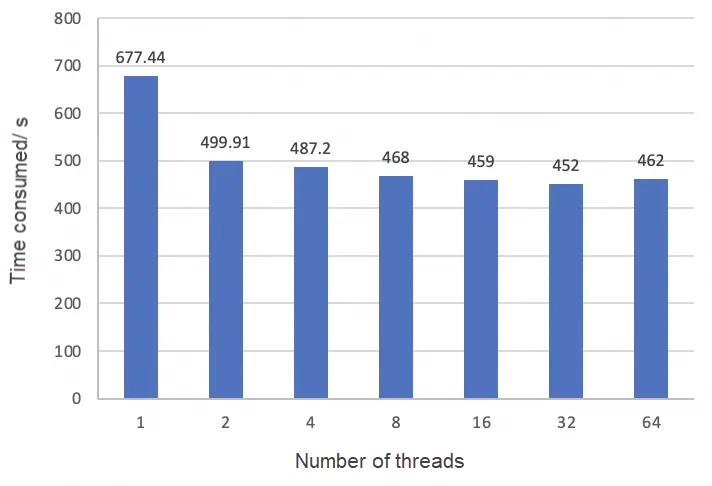

First, let's look at the effect of the CHECK TABLE parallel scan. A Sysbench table containing 400 million rows of records is used for testing. The test configuration is the same as that in COUNT(*). A Sysbench native table contains one primary key index and one secondary index. Each index is scanned twice when you execute the CHECK TABLE statement.

• When the number of threads increases from 1 to 2, the time consumed is significantly reduced. However, the benefit here is not brought by parallel scans. The reason is the same as the scenario where the number of threads in COUNT(*) changes from 1 to 2: the original single-threaded scan efficiency is poor.

• After the number of threads increases from 2, the effect is improved but not obvious, indicating that the majority of time consumption during CHECK TABLE is on the first scan.

After viewing the parallel process of CHECK TABLE, we can find some areas that can be optimized.

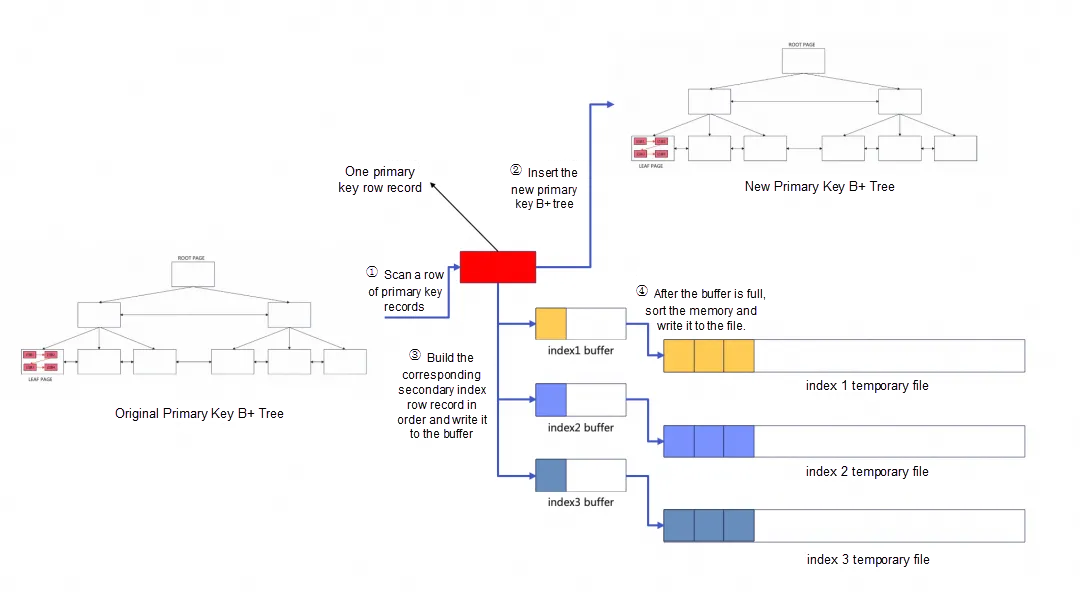

First, we briefly describe the execution process of creating a secondary index statement at the InnoDB layer, which can be divided into three stages:

1. Scan: Scan the clustered index from the minimum record to the maximum record in turn to build the secondary index row records, and then perform a few memory sort operations on the secondary index row records. If the memory is full, write the records to the temporary files.

2. Sort: Sort the temporary files.

3. Build: Insert the sorted data into the secondary index tree.

The granularity and implementation of parallel operations in each stage are different:

ddl::Context::build // Create a secondary index and rebuild the table entry.

|-> ddl::Loader::build_all

| |-> scan_and_build_indexes // There are multiple indexes when rebuilding the table.

| | |-> scan // The primary key index is scanned in parallel during CREATE INDEX and is scanned in a single thread when rebuilding the table.

| | | |-> Parallel_reader.add_scan(bulk_inserter) // The callback function for scanning each row

| | | |-> Parallel_reader.run // Parallel scan

| | |-> load // Multi-threaded sort and build

| | | |-> mt_execute

| | | | |-> Loader::Task::operator()

| | | | | |-> Builder::merge_sort // Sort,Builder::State::SORT

| | | | | |

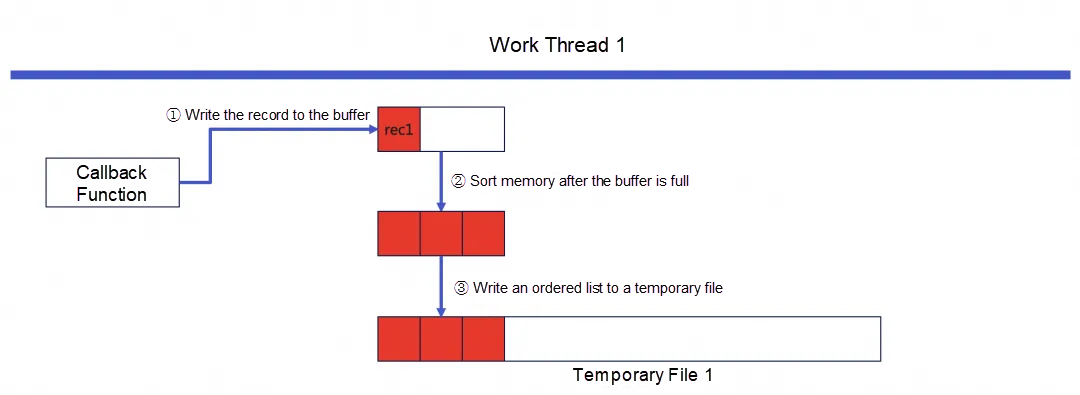

| | | | | |-> Builder::btree_build // Build,Builder::State::BTREE_BUILDDuring the parallel scan, each work thread will create a temporary file and a sort buffer. Each time the work thread scans a row of primary key records, it will call a callback function. The callback function is responsible for:

The following figure shows the process of writing the first ordered list to a temporary file. The size of an ordered list is the buffer size.

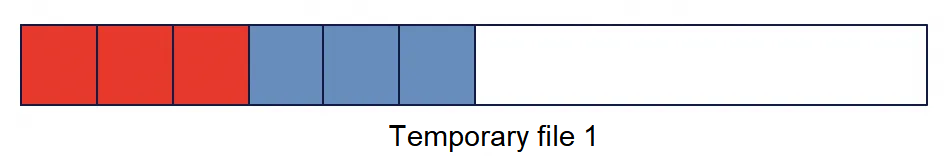

After work thread 1 scans a shard, there are multiple ordered lists on temporary file 1.

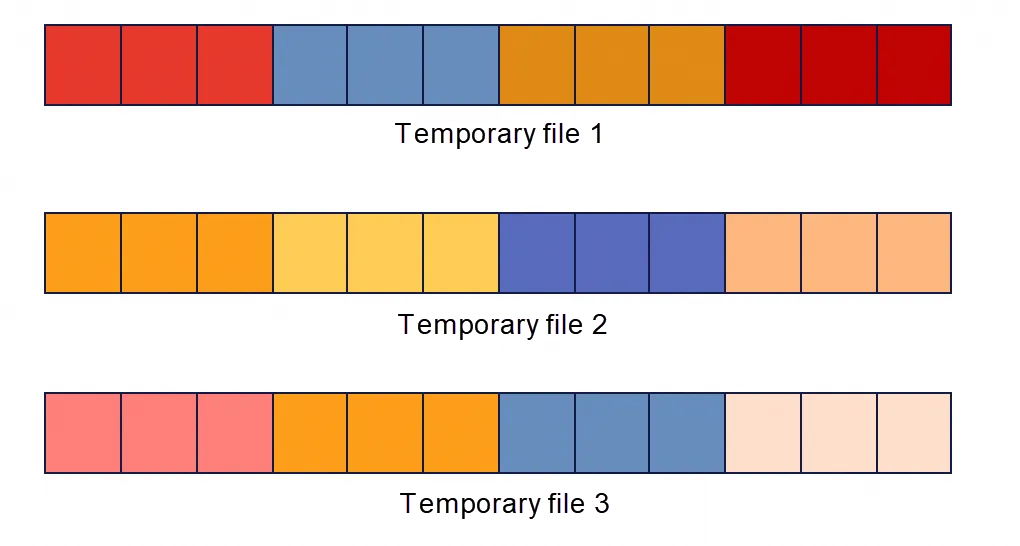

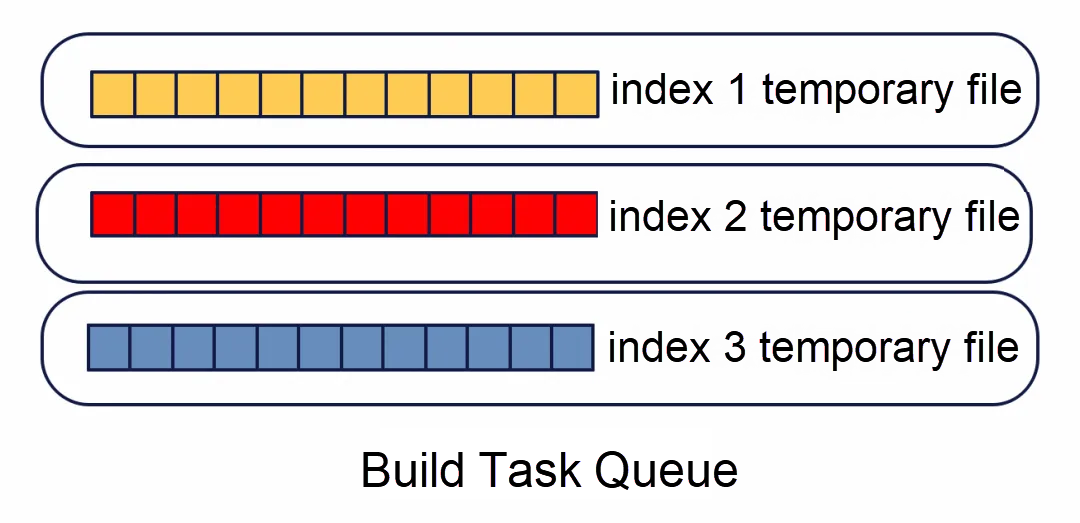

The work thread continuously retrieves shards from the queue for scanning. During the scanning process, ordered lists are written to corresponding temporary files. Assume that the innodb_parallel_read_threads is 3, then there are three parallel scan threads and three temporary files in total. After all shards are scanned in parallel, three partially ordered files will be obtained, each of which consists of multiple ordered lists.

Here is a summary of the parallel scan. The parallel scan work thread executes a callback function every time it scans a row of primary key records. The corresponding callback functions are designed for different upper-level SQL statements to complete the corresponding parallel tasks. The parallel scan execution processes of COUNT(*) full table, CHECK TABLE, and secondary index creation are the same. The parallel scan thread is controlled by the innodb_parallel_read_threads parameter. The main difference between the three lies in the callback function, which is summarized in the following table.

| COUNT(*) Full Table | CHECK TABLE | Secondary Index Creation | |

|---|---|---|---|

| Callback Function | Increment the count by 1. | Check the sequence of the current record and the previous record. | Write records to the sort file and do a small amount of sorting. |

Theoretically, any process that requires scanning a B+ tree can use this set of parallel interfaces to divide the tree into multiple subtrees for parallel scan, such as:

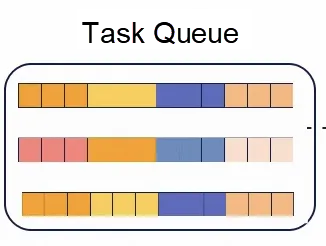

The sort and build of DDL are completed as tasks by the work thread. The work thread takes the tasks from the task queue for execution and determines whether to sort or build based on the task status.

DDL_parallel_work

|-> Loader::Task::operator() // Execute the task.

| |-> Builder::merge_sort // Sort,Builder::State::SORT

| |

| |-> Builder::btree_build // Build,Builder::State::BTREE_BUILD

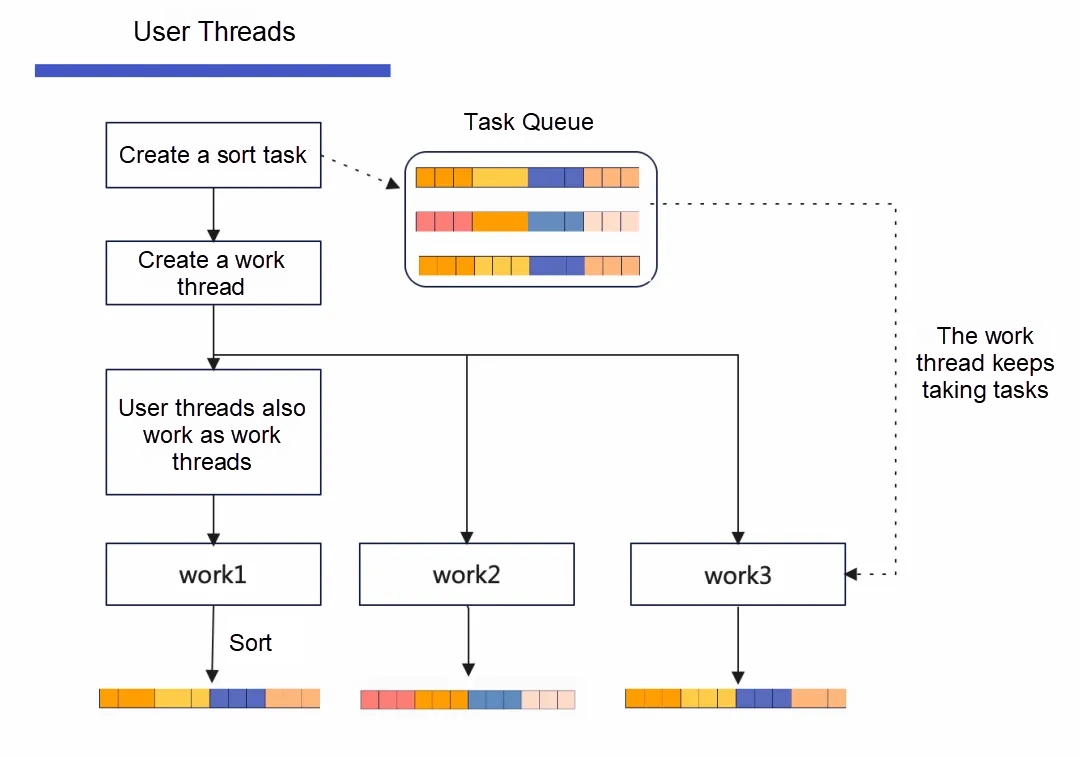

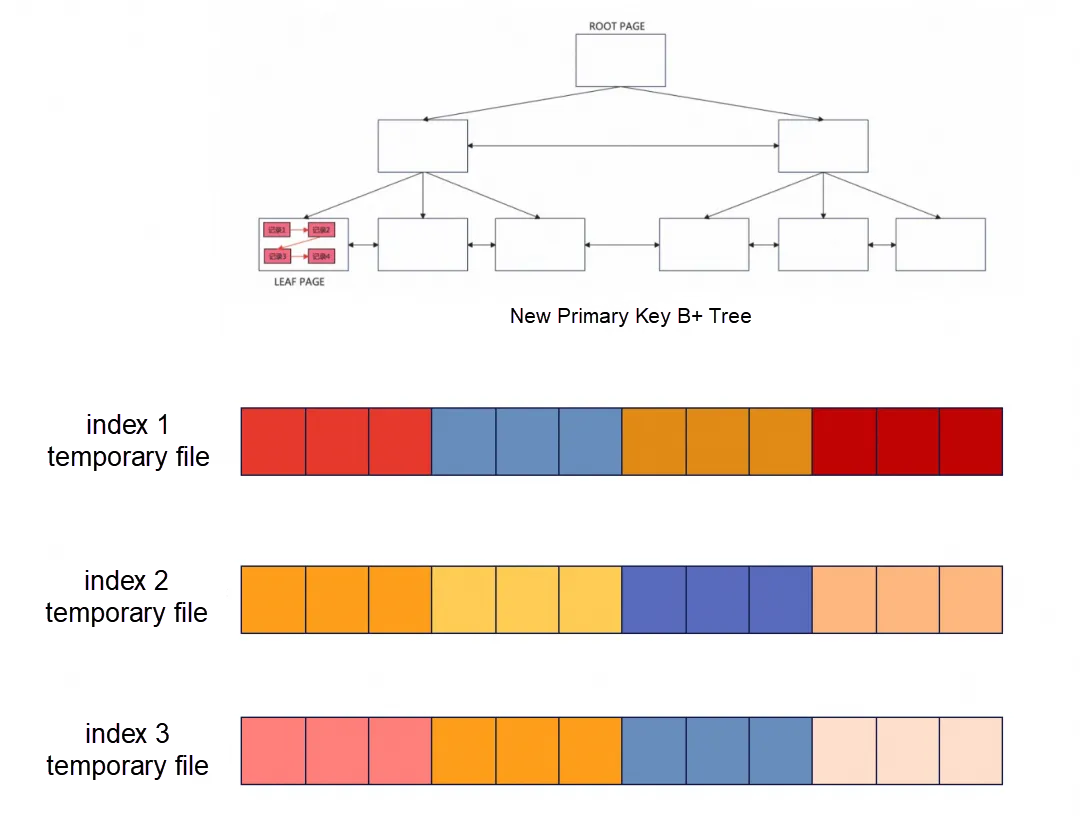

After the parallel scan is completed, three partially ordered temporary files are obtained, and the collection of the three files constitutes all records of a secondary index. The user thread will create three sort tasks (in fact, sort tasks may also be created by the work thread, which is simplified here) and set the task status to Builder::State::SORT.

The parallel granularity is at the temporary file level, and each temporary file corresponds to a sort task. After the user thread creates a work thread, it obtains tasks from the queue for execution. The work thread continuously retrieves tasks from the queue to execute. This is different from the parallel scan, where the user thread is not used as the worker thread.

It can be found that the number of parallel sort tasks is determined by the number of temporary files, while the number of temporary files is determined by the number of parallel scan threads. In the preceding section, it is assumed that the innodb_parallel_read_threads is 3. Here, even if the innodb_ddl_threads is set to 6, there are only three tasks, and the number of DDL sort threads actually executed is also three. If you set the innodb_ddl_threads to 2, then one DDL thread will process two temporary files. Therefore, it is recommended to set innodb_parallel_read_threads and innodb_ddl_threads both to the same value.

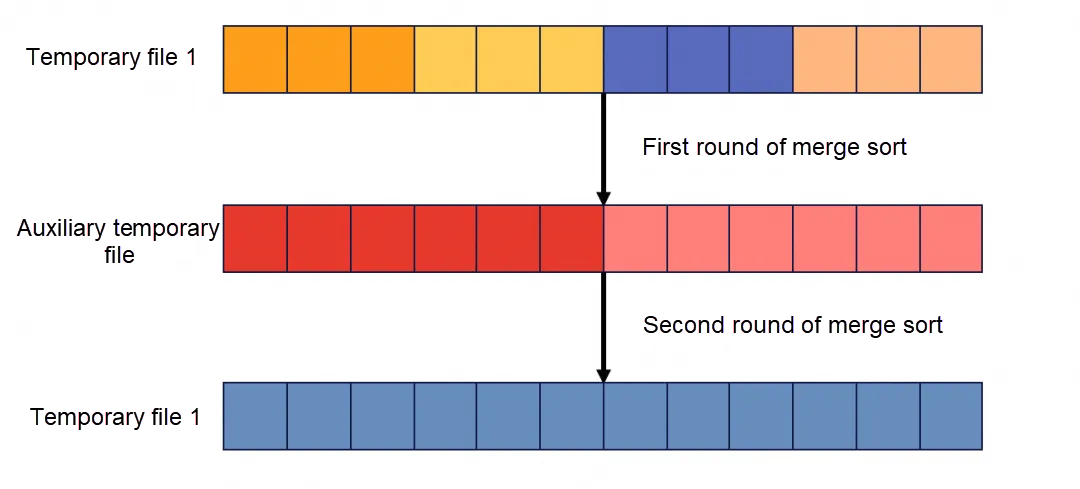

The DDL thread file sort process uses the merge sort algorithm, which creates a new temporary file to assist the sort. Assuming that temporary file 1 contains four ordered lists, a file containing two ordered lists is obtained after the first round of merge sort, and a globally ordered temporary file is obtained after two rounds.

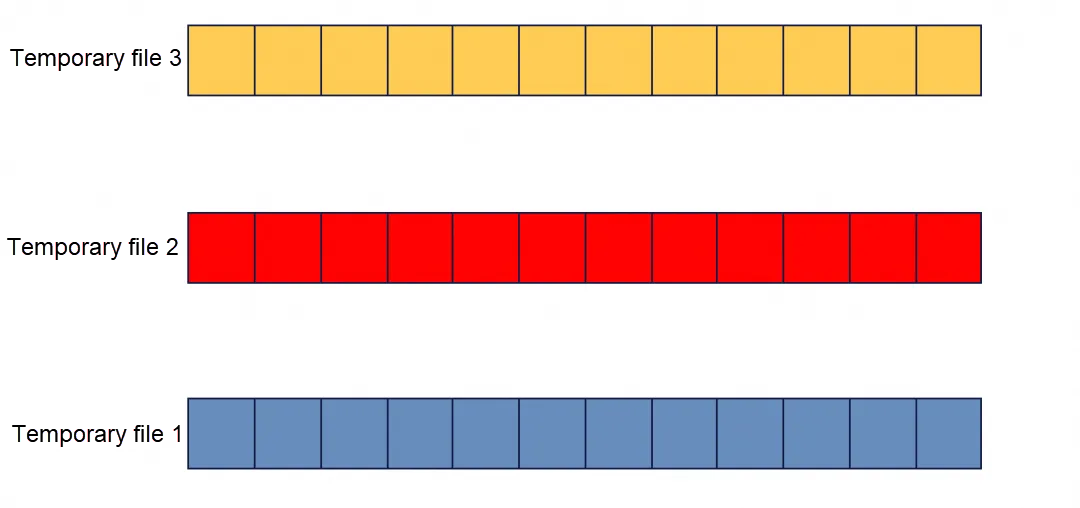

After all parallel sort tasks are completed, three ordered temporary files are obtained. These three files are no longer merged and sorted into one file.

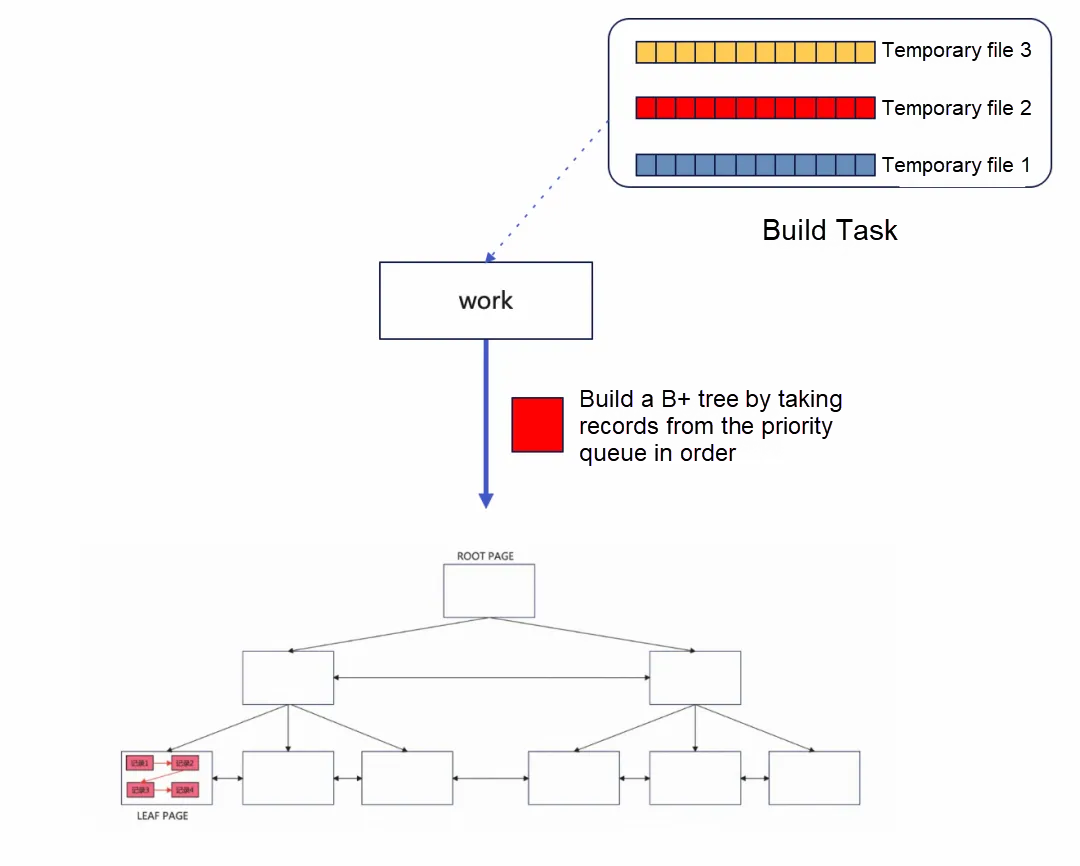

After all DDL work threads complete parallel sort, three ordered temporary files are obtained, and the three files are not merged and sorted into one ordered file. The three temporary files are constructed as a priority queue, adding a build task, and the task status is set to Builder::State::BTREE_BUILD. The build task is then executed by a work thread, which takes records from the priority queue in order and writes them to the B+ tree row by row to complete the build process. The specific build process is not described in this article.

To see the effect of parallel indexing, a Sysbench table containing 40 million rows of records (about 9.5GB in size) was used for testing. The remaining configuration is the same as that in section 3.1. Test SQL to add a secondary index to the pad column of the Sysbench table:

create index idx1 on sbtest1 (pad);To observe the parallel effect of each stage, several control experiments were conducted.

| innodb_parallel_read_threads | innodb_ddl_threads | Time consumed (seconds) | Difference from the previous row |

|---|---|---|---|

| 1 | 1 | 174.3 | NULL |

| 2 | 1 | 114.1 | 60.2 |

| 4 | 1 | 82.8 | 31.3 |

| 8 | 1 | 69.3 | 13.5 |

| 16 | 1 | 64.3 | 5 |

| 32 | 1 | 63.7 | 0.6 |

To analyze the effect of the parallel scan in the first stage, the above table sets innodb_ddl_threads to 1 and innodb_ddl_buffer_size to 4GB. Setting this value as large as possible can make all data sorted in memory, which can minimize the time required for sorting files in the second stage, and reduce the interference with the parallel scan in the first stage. After increasing innodb_parallel_read_threads:

• The time consumed has decreased, indicating that the parallel scan in the first stage is effective, and the time consumed in the second and third stages is not significant.

• The time consumed does not decrease linearly with the increase of innodb_parallel_read_threads, indicating that the second and third stages will consume a portion of the time.

• The time difference between each two experiments basically decreases linearly with the increase of innodb_parallel_read_threads, indicating that the parallel scan effect in the first stage is in line with expectations.

• Unlike COUNT(*) and CHECK TABLE parallel scans, when innodb_parallel_read_threads is 1, a single parallel work thread is used to scan the index, so the efficiency of COUNT(*) in single concurrency is not poor.

| innodb_parallel_read_threads | innodb_ddl_threads | Time consumed (seconds) |

|---|---|---|

| 1 | 1 | 168.3 |

| 1 | 2 | 170.2 |

| 1 | 4 | 173.7 |

| 1 | 8 | 171.8 |

To analyze the effect of the parallel sort in the second stage, the above table sets innodb_parallel_read_threads to 1 and innodb_ddl_buffer_size to 32MB. After increasing innodb_ddl_threads, it can be found that DDL parallel threads do not work. The reason will be explained later.

| innodb_parallel_read_threads | innodb_ddl_threads | Time consumed (seconds) | Difference from the previous row |

|---|---|---|---|

| 16 | 1 | 182 | NULL |

| 16 | 2 | 137.7 | 44.3 |

| 16 | 4 | 117.5 | 20.2 |

| 16 | 8 | 109.1 | 8.4 |

| 16 | 16 | 105.5 | 3.6 |

| 16 | 32 | 108.3 | -2.8 |

To further analyze the effect of the parallel sort in the second stage, the above table sets innodb_parallel_read_threads to 16, so that the parallel scan time in the first stage is as small as possible, reducing the interference to the second stage.

The innodb_ddl_buffer_size is 32MB, and the buffer size allocated to each parallel thread is 32MB/16=2MB. Since the buffer is used for memory sort, setting a relatively small buffer size can reduce the amount of memory sorting, thus increasing the time spent on file sorting tasks. This makes it easier to observe the performance improvement of parallel sort (it can simulate large table scenarios online, where creating a secondary index requires extensive file sorting). Then gradually increase the innodb_ddl_threads.

• The time difference between each two experiments basically decreases linearly as innodb_ddl_threads increases, indicating that parallel sort in the second stage is in line with expectations.

The following section describes the execution process of the InnoDB REBUILD TABLE statement, which can be divided into three stages:

1. Scan: Traverse the B+ tree from the first record of the primary key B+ tree to the last record, skipping empty records. Perform the following operations after scanning each row:

2. Sort: Sort the secondary index temporary files.

3. Build: Insert the sorted secondary index data into the secondary index tree.

Compared with the CREATE INDEX statement, there is an additional operation of 1.a to build a primary key B+ tree, and multiple secondary indexes may be created. If only one secondary index is rebuilt, steps 1.b, 2, and 3 are a CREATE INDEX operation.

When rebuilding a table, whether each stage supports parallel operations and the granularity of parallel operations are quite different from those of creating secondary indexes in parallel.

One of the main purposes of rebuilding a table is to rebuild the primary key index B+ tree, reclaim holes, and shrink the table space. The primary key B+ tree itself is ordered, so there is no need to sort it in parallel. It is only need to scan the primary key index serially in ascending order and then insert the primary key row records into the new B+ tree to obtain a compact primary key B+ tree.

It seems unnecessary to parallelize the process of rebuilding primary key indexes. MySQL itself does the same: primary key indexes cannot be scanned in parallel when rebuilding tables, and the innodb_parallel_read_threads parameter does not take effect on rebuilding tables.

The side effect of this is that for a single secondary index to be rebuilt, multiple temporary files cannot be written in parallel during the scan stage. In addition, you cannot perform parallel sort on a single index during the sort stage.

However, the sort and build stages of secondary indexes still support parallel operations, but the granularity becomes the index level. Assume that there are four secondary indexes to be rebuilt, the sort and build of the four indexes can be processed in parallel by DDL parallel threads.

The scanning process for rebuilding the primary key B+ tree is completed by the user thread in a single thread. Assuming that the original table has one primary key index and three secondary indexes, the scanning process creates a temporary sort file for each secondary index, resulting in a total of three temporary files. Each time a row of primary key records is scanned, the following operations are performed:

After the single-threaded scan is completed, a new compact primary key B+ tree and three partially ordered temporary files are obtained, each of which corresponds to a set of secondary index records:

The parallel sort of REBUILD TABLE is the same as that of CREATE INDEX in implementation except that the parallel granularity is different.

As mentioned earlier, when creating a secondary index, the number of parallel tasks for multi-threaded sorting is determined by the innodb_parallel_read_threads, and the corresponding number of temporary files is created for a single index, which is sorted in parallel within the index. If a single-threaded scan is used to rebuild the table, the innodb_parallel_read_threads is 1. Then, there is only one temporary file for a single secondary index. In this parallel sort architecture, parallel sort cannot be performed inside the secondary index.

However, multiple secondary indexes support parallel operations. In other words, the granularity of parallel operations is at the index level. The temporary files of the three indexes here are added to the task queue as three sort tasks. The work thread takes out the tasks to sort the temporary files. The task queue is as follows: each task corresponds to an index and a partially ordered temporary file.

Assuming that the innodb_ddl_threads is configured to 3, then there will be three threads in parallel, each thread corresponding to an index and a temporary file. Each thread will merge and sort the temporary files. After that, three files are obtained, and each file records all the records of the corresponding secondary index in sequence.

There is no need to create a new work thread here. The work thread created in the previous step will not exit. The thread still retrieves the task from the task queue for execution, but the task is changed from sorting to building. In the previous step, each sort task corresponds to an index. After each sort task is completed, a build task is automatically added and put into the task queue with the task status set to Builder::State::BTREE_BUILD. Three build tasks are added here, each of which corresponds to the build of an index.

Each DDL thread is responsible for scanning the build of an index, reading row records in sequence, and building a new compact secondary index B+ tree row by row. Finally, after the process of rebuilding the table, one new compact primary key B+ tree and three new compact secondary index B+ trees are obtained.

Parallel build and sort of REBUILD TABLE and parallel sort and single-threaded build of CREATE INDEX have similar implementations, the difference lies in the parallel granularity and parallel task. Both parallel build and sort of REBUILD TABLE are performed at the index granularity, and the number of threads is determined by the innodb_ddl_threads. The summary is as follows.

| Number of parallel threads | Parallel granularity | |

|---|---|---|

| Parallel sort stage for creating secondary indexes | min (innodb_parallel_read_threads, innodb_ddl_threads) | The granularity is a single temporary file. The number of temporary files is determined by innodb_parallel_read_threads. |

| Build stage for creating secondary indexes | Single work thread | Single work thread |

| Parallel sort stage for rebuilding the table | min (the number of secondary indexes in the original table, innodb_ddl_threads) | The granularity is INDEX. |

| Parallel build stage for rebuilding the table | min (the number of secondary indexes in the original table, innodb_ddl_threads) | The granularity is INDEX. |

Let's take a look at the effect of the parallel rebuild of the table. A Sysbench table containing 40 million rows of records (about 9.5GB in size) was used for testing. The innodb_ddl_buffer_size is set to 65,536, and the rest of the configuration is the same as that in section 3.1. The test includes two types of tables:

1. Sysbench native table: contains one primary key index and one secondary index.

2. Sysbench table with two additional indexes: contains one primary key index and three secondary indexes. Add two secondary indexes to the pad and c columns based on the original Sysbench table:

create index idx1 on sbtest1 (pad);

create index idx2 on sbtest1 (c);The test SQL statement is a REBUILD TABLE statement:

ALTER TABLE sbtest1 ENGINE = INNODB;|

innodb_parallel_read_threads |

innodb_ddl_threads |

Time consumed (seconds) |

|

|

Sysbench native table: contains one primary key index and one secondary index |

1 |

3 |

186.6 |

|

2 |

3 |

185.8 |

|

|

4 |

3 |

187.3 |

|

|

Sysbench table with two additional indexes: contains one primary key index and three secondary indexes |

1 |

1 |

849.5 |

|

1 |

2 |

602.3 |

|

|

1 |

3 |

534.9 |

|

|

1 |

4 |

533.3 |

• It can be found that the innodb_parallel_read_threads parameter does not result in performance improvement when rebuilding the table.

• The innodb_ddl_threads parameter improves performance by enabling parallel processing for REBUILD TABLE statements that contain multiple secondary indexes. The more secondary indexes there are, the higher the degree of parallelism.

This article describes the basic usage, effects, principles, and restrictions of the parallel acceleration technologies provided by MySQL at the InnoDB layer. Some queries and DDL statements support parallel scan, parallel sort, and parallel construction of the B+ tree. All of these technologies are available in AliSQL. Feel free to use them. MySQL currently supports a limited number of parallel statements. We expect the community to launch more supported parallel statements in the future. The AliSQL team will also add features on parallel queries and DDL, and will support parallel scans of secondary indexes in the future. Below are some practical suggestions.

• We recommend that you set the appropriate innodb_parallel_read_threads, innodb_ddl_threads, and innodb_ddl_buffer_size parameters based on the instance specifications and load.

• Since parallel scan significantly improves the effect of the COUNT(*) full table statement, you can set an appropriate innodb_parallel_read_threads parameter based on the table size.

• As parallel scan has little effect on improving CHECK TABLE statements, it is recommended to set the innodb_parallel_read_threads value to 2 during CHECK TABLE.

• For statements that create secondary indexes, parallel scan and parallel sort can significantly improve the performance. We recommend that you set the innodb_parallel_read_threads and innodb_ddl_threads to the same value. You can set applicable values based on the table size. When you increase innodb_parallel_read_threads, you'd better increase innodb_ddl_buffer_size simultaneously.

• For REBUILD TABLE statements, parallel operations significantly improve the performance. You can sort and rebuild files with indexes as the parallel granularity. You can set the innodb_ddl_threads value based on the number of original table indexes. There is no need to set the innodb_parallel_read_threads parameter and the innodb_ddl_buffer_size parameter can be set based on memory usage.

[01] https://github.com/mysql/mysql-server

[02] http://mysql.taobao.org/monthly/2020/11/03/

[03] http://mysql.taobao.org/monthly/2019/03/05/

[04] http://mysql.taobao.org/monthly/2021/03/03/

[05] http://mysql.taobao.org/monthly/2019/12/05/

[06]https://dev.mysql.com/doc/refman/8.4/en/online-ddl-parallel-thread-configuration.html

[07] https://dev.mysql.com/blog-archive/mysql80-innodb-parallel-threads-ddl/

[08] http://mysql.taobao.org/monthly/2019/12/05/

Connection Skew after Tair (Redis® OSS-Compatible) Proxy RT Rise

Does a Single Table with 5 Million Rows Need Database Sharding and Table Sharding?

ApsaraDB - September 10, 2025

ApsaraDB - August 23, 2024

ApsaraDB - October 26, 2023

Alibaba Cloud Project Hub - April 11, 2023

ApsaraDB - December 12, 2025

ApsaraDB - June 19, 2024

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Time Series Database (TSDB)

Time Series Database (TSDB)

TSDB is a stable, reliable, and cost-effective online high-performance time series database service.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More Security Center

Security Center

A unified security management system that identifies, analyzes, and notifies you of security threats in real time

Learn MoreMore Posts by ApsaraDB