By Jinwu

This article focuses on the offline data import and export scenarios for MySQL and PolarDB-X. Firstly, it compares the performance of the ecological BatchTool and the traditional mysqldump through experiments. Then, it introduces the usage of different parameters of BatchTool combined with specific practices.

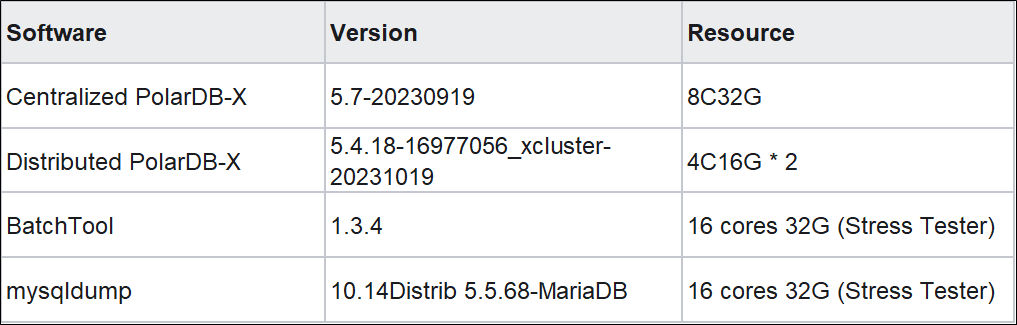

The following table lists the software, versions, and system resources used in the performance experiment.

The test table is a lineitem table of the TPC-H specification, with a total of 59.98 million rows. The size of the exported CSV file is 7.4 GB.

CREATE TABLE `lineitem` (

`l_orderkey` bigint(20) NOT NULL,

`l_partkey` int(11) NOT NULL,

`l_suppkey` int(11) NOT NULL,

`l_linenumber` bigint(20) NOT NULL,

`l_quantity` decimal(15,2) NOT NULL,

`l_extendedprice` decimal(15,2) NOT NULL,

`l_discount` decimal(15,2) NOT NULL,

`l_tax` decimal(15,2) NOT NULL,

`l_returnflag` varchar(1) NOT NULL,

`l_linestatus` varchar(1) NOT NULL,

`l_shipdate` date NOT NULL,

`l_commitdate` date NOT NULL,

`l_receiptdate` date NOT NULL,

`l_shipinstruct` varchar(25) NOT NULL,

`l_shipmode` varchar(10) NOT NULL,

`l_comment` varchar(44) NOT NULL,

PRIMARY KEY (`l_orderkey`,`l_linenumber`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1;

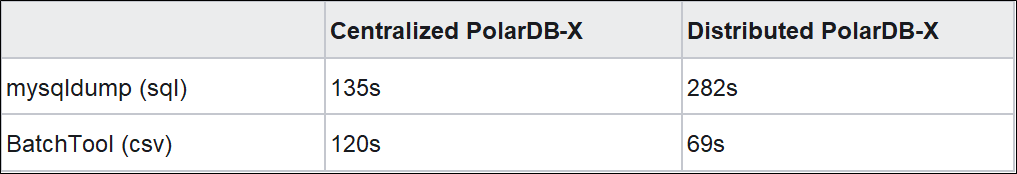

Note: mysqldump supports exporting CSV files, but it relies on the

select ... into outfilecapability of MySQL on the server. This function is not open to the ApsaraDB used in the article. Therefore, the article only tests the efficiency of mysqldump native exporting SQL files.

When exporting data as SQL files, mysqldump automatically combines data from multiple rows into a batch insert statement. The size of this statement is controlled by the net-buffer-length parameter, with a default size of 1MB. Increasing this value appropriately can enhance the performance of subsequent imports.

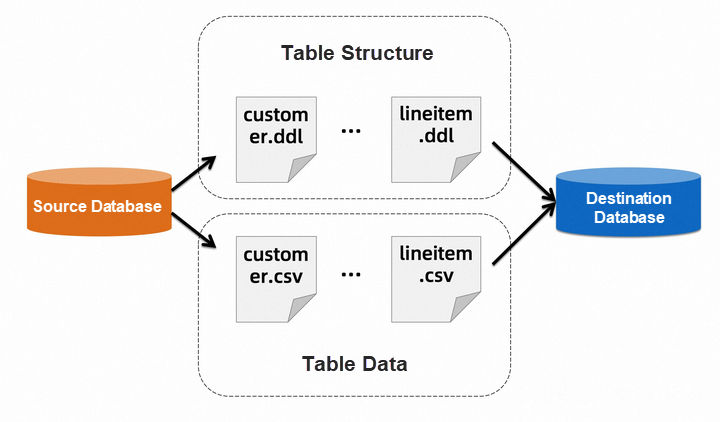

When BatchTool imports the distributed tables of PolarDB-X, its performance is significantly improved compared to mysqldump. This is because BatchTool is adapted to the partitioned tables of PolarDB-X. After obtaining the metadata of a logical table, BatchTool establishes multiple parallel connections to simultaneously export the physical table of the underlying storage, making full use of the network bandwidth.

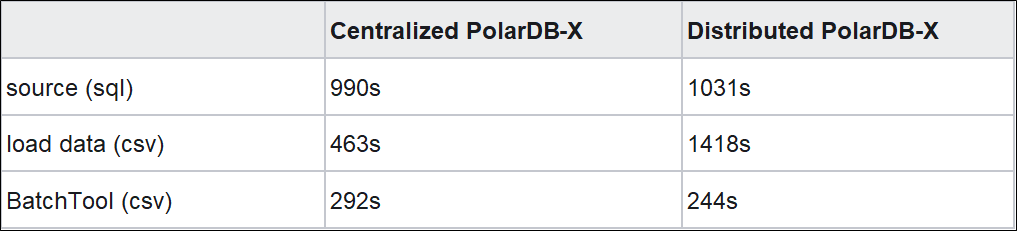

Note: Each round of test creates an empty table for import.

• Importing SQL Files by Using Source:

The whole process of importing SQL files from the source is serial execution. Because mysqldump has spliced batch insert statements before exporting SQL files, the import efficiency is not low.

• Importing CSV Files by Using Load Data:

In MySQL, although load data is also executed in a single thread, the efficiency is higher than that of importing SQL files from the source. The reason is that load data only transmits text files on the network but does not parse or optimize the SQL files when load data executes on MySQL. If we want to further improve the performance, we need to manually split files and open multiple database connections to import databases in parallel.

However, in PolarDB-X, load data is slower and the performance is lower because the text stream calculates routes on CNs. Then, load data splices batch insert statements and sends the statements to the storage node for execution. The process cannot use the high-performance MySQL native load data protocol.

• Importing CSV Files by Using BatchTool:

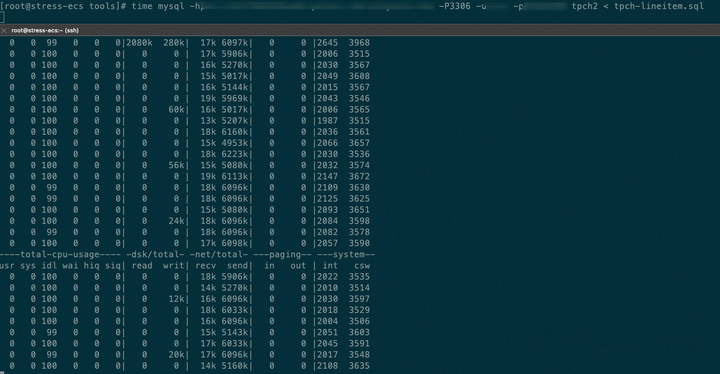

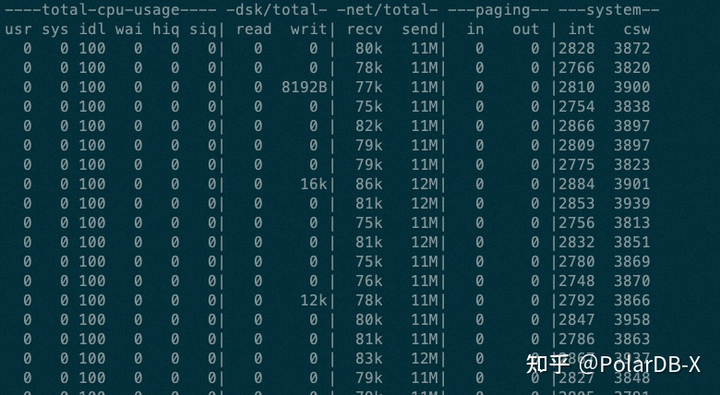

From the system monitoring, the network transmission bandwidth of CSV imported from BatchTool reaches 39 MB/s. That is more than three times that of load data. Based on the producer-consumer model, BatchTool allows you to concurrently read a single file and then concurrently send batch insert statements to the database. It makes full use of the hardware resources of the stress tester and improves the throughput when importing data.

In addition to regular parallel data import and export, BatchTool also supports ecological features for data migration. The following section describes how to use BatchTool and the values of various built-in modes in different scenarios.

BatchTool supports the import and export of various databases that are compatible with the MySQL protocol. The parameters used to connect to databases are -h $(the host of the database) -P $(the port number) -u $(user name) -p $(password) -D $(target database). It also supports connections to the load balance address, for example: -lb true -h "host1:3306,host2:3306" –uroot.

Note: The following introduction omits parameters related to database connection. It only displays parameter settings related to the function.

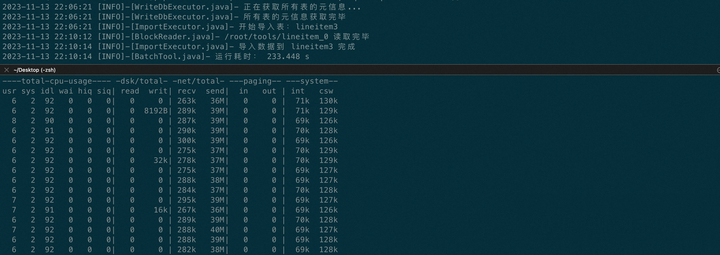

BatchTool supports to import or export the entire database, including all table structures and table data. If a database contains many tables (for example, thousands of tables), it is inefficient to export the table structure based on mysqldump and then execute the source to migrate the table structure, because the process is completely single-threaded.

BatchTool supports executing DDL statements to create tables in parallel while reading SQL files of the table structure to improve efficiency.

If -t $(table name) is specified in the command line parameters, the table is imported or exported. If not, the database is imported or exported.

The command parameter that corresponds to metadata is -DDL $(migration mode). The following migration modes are available:

• NONE: The table structure is not migrated. This is the default value.

• ONLY: Only the table structure is migrated. No data is migrated.

• WITH: Both the table structure and data are migrated.

For example, the table structure of all tables in the tpch will be exported: -D tpch -o export -DDL only.

BatchTool supports specifying the number of exported files or the maximum number of rows in a single file. For a standalone MySQL database, BatchTool exports a table as a file by default. For a distributed database PolarDB-X, BatchTool exports each physical table shard as a file by default, that is, the number of files equals the number of shards. In addition, another two parameters will affect the number of files exported:

• -F $(the number of files): it fixes the number of files to be exported. The file is evenly split based on the total data size of the table.

• -L $(the maximum number of rows): it specifies the maximum number of rows in a single file. When the number of rows in a single file equals the maximum number, a new file is opened to continue writing.

For example, export each table in the tpch as a separate CSV file: -D tpch -o export -s , -F 1.

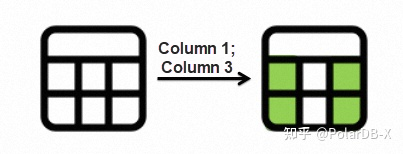

BatchTool supports importing or exporting specific columns of a specific table. The corresponding command line parameter is -col "$(column names separated by semicolon)". For example, we can specify the c_name, c_address, and c_phone columns of the exported table. Use commas (,) to separate them. In the first line of the file, the field name is -o export -t customer -col "c_name;c_address;c_phone" -s , -header true.

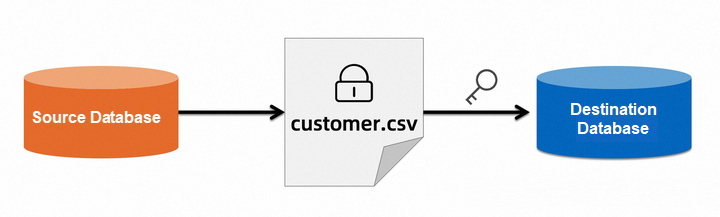

BatchTool supports streaming output of encrypted ciphertext data when a file is exported to avoid manual encryption after the plaintext data is exported. BatchTool also supports direct reading of encrypted files for data import (a correct key is needed) to avoid repeated decryption operations. Currently, two encryption algorithms are supported:

• AES-CBC

• SM4-ECB

The corresponding command line parameter is -enc $(the algorithm to encrypt) -key $(the key). For example, if we use the AES algorithm to encrypt the data of the customer table to be exported as a file, we need to specify the key as "admin123456": -o export -s , -t sbtest1 -enc AES -key admin123456 -F 1.

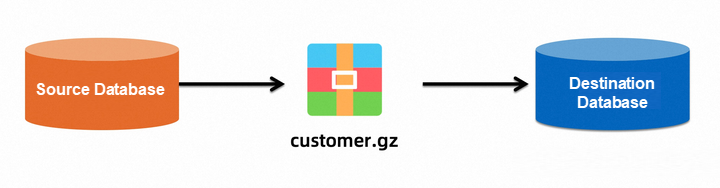

BatchTool can directly output files in a compacted form to reduce space usage. It also supports direct reading of data in compacted files to avoid repeated data decompaction. The corresponding command line parameter is -comp $(the algorithm to compress). For example:

-o export -t customer -s , -comp GZIP

-o import -t customer -s , -comp GZIP -dir data-test

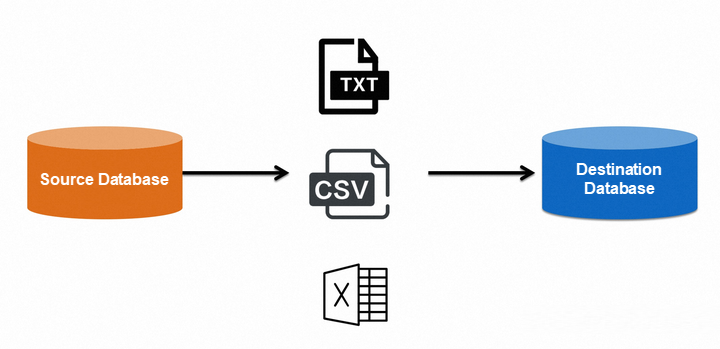

BatchTool supports import and export of the following file formats:

• CSV (a text file delimited by specified characters)

• XLS, XLSX (Excel table, a binary file)

• ET (WPS table, a binary file)

The corresponding command line parameter is -format $(the file format). For example, export the customer table to XLSX format table: -o export -t customer -format XLSX.

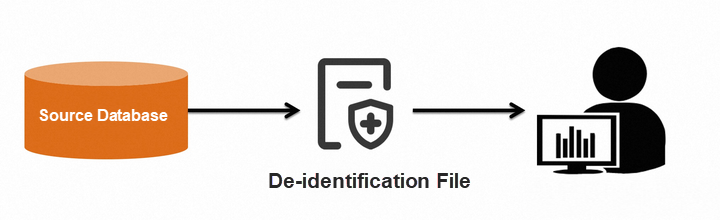

In many cases, the exported table contains sensitive data, such as name, mobile phone, and email. Therefore, the sensitive data needs to be processed and blurred through certain algorithms. By making the data unrecognizable or unrestored, data de-identification ensures data security and prevents data leaks.

This process is also called data desensitization/masking. BatchTool integrates with the simple data de-identification feature. With configuration, we de-identify specified field values while exporting table data, and then output the data to a file. BatchTool supports the following algorithms:

• Mask: It uses special characters such as asterisks (*) to replace true values in string data. It is commonly used in fields such as the mobile phone.

• Encryption: Symmetric encryption is a special reversible de-identification method. It uses a specified encryption algorithm and a key to encrypt sensitive fields. Low-privileged users without a key can only view meaningless ciphertext data. In some special scenarios, the raw data is accessible by using the key to decrypt data.

• Hash/Digest: It uses hash to calculate digest value. The method is commonly used in string data. For example, we can replace the user name string "PolarDB-X" with "d7f19613a15dcf8a088c73e2c7e9b856" to protect user privacy. The algorithm also supports salting to prevent hash value cracking.

• Rounding: This method ensures data security and the accuracy of the data range. For example, the date field is rounded from “2023-11-11 15:23:41” to “2023-11-11 15:00:00” for output.

The corresponding command line parameter is -mask $( the configuration of the de-identification algorithm). Taking the customer table of the TPC-H dataset as an example, the exported table data only shows the first three and the last four digits of the mobile phone c_phone (here, we can use the yaml configuration file instead of the command line parameters):

operation: export

# Use | as the field delimiter. Special characters must be enclosed in quotation marks.

sep: "|"

table: customer

# Sort by primary key c_custkey.

orderby: asc

orderCol: c_custkey

# Output the field name.

header: true

# Specify a data de-identification algorithm. Only display the first three and the last four digits.

mask: >-

{

"c_phone": {

"type": "hiding",

"show_region": "0-2",

"show_end": 4

}

}c_custkey|c_name|c_address|c_nationkey|c_phone

1|Customer#000000001|IVhzIApeRb ot,c,E|15|25-989-741-2988

2|Customer#000000002|XSTf4,NCwDVaWNe6tEgvwfmRchLXak|13|23-768-687-3665

3|Customer#000000003|MG9kdTD2WBHm|1|11-719-748-3364

...c_custkey|c_name|c_address|c_nationkey|c_phone

1|Customer#000000001|IVhzIApeRb ot,c,E|15|25-********2988

2|Customer#000000002|XSTf4,NCwDVaWNe6tEgvwfmRchLXak|13|23-********3665

3|Customer#000000003|MG9kdTD2WBHm|1|11-********3364

...

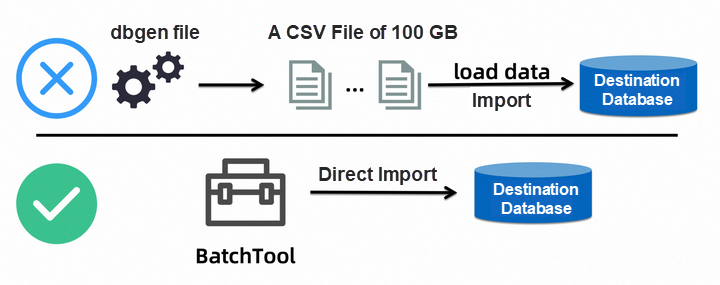

TPC-H is a commonly used benchmark test for the analytical query capabilities of databases in the industry. The traditional method of importing TPC-H datasets involves using the tpck-kit toolset to generate CSV text datasets on disk, followed by writing the datasets into the database using methods such as load data. This approach not only requires reserving corresponding disk storage space on the stress tester (for example, at least 1 TB for a TPC-H 1T test), but also necessitates scripting for parallelism during both data generation and import, resulting in overall inefficiency.

In contrast, BatchTool has a built-in TPC-H dataset generation component that allows for direct streaming import of TPC-H data to the database without the need to generate text data in advance, significantly improving efficiency.

The corresponding command line parameter is -o import -benchmark tpch -scale $(the size of the dataset). For instance, importing a 100GB TPC-H dataset traditionally takes 10 minutes to generate a text file and 42 minutes to import using load data, totaling 52 minutes. However, with BatchTool's online import, it only takes 28 minutes and does not occupy additional disk space, enhancing the efficiency of benchmark test preparation.

To sum up, the database import and export tool BatchTool has the following features:

• Lightweight and cross-platform capabilities

• High performance (optimized execution model that can adapt to distributed scenarios)

• Supporting a wide range of features and suitable for various scenarios

Furthermore, BatchTool is also open-sourced on GitHub. Feel free to give it a try.

Performance Comparison Between Alibaba Cloud DTS and MongoShake

PolarDB-X Open Source | Three Replicas of MySQL Based on Paxos

ApsaraDB - April 10, 2024

ApsaraDB - August 19, 2025

ApsaraDB - November 25, 2022

Alibaba F(x) Team - March 1, 2023

OpenAnolis - February 10, 2025

Alibaba Cloud Community - September 19, 2024

PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More AnalyticDB for MySQL

AnalyticDB for MySQL

AnalyticDB for MySQL is a real-time data warehousing service that can process petabytes of data with high concurrency and low latency.

Learn More ApsaraDB RDS for MySQL

ApsaraDB RDS for MySQL

An on-demand database hosting service for MySQL with automated monitoring, backup and disaster recovery capabilities

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB