By Donggu

An index is an important means to accelerate database queries. In addition to offering high-performance secondary indexes, Lindorm also supports Search Indexes (SearchIndex), which are primarily aimed at complex multidimensional query scenarios. These indexes can cover use cases such as fuzzy queries, aggregation analysis, sorting, and pagination. This article focuses on the technical principles and core capabilities of SearchIndex.

In the context of massive data storage, with the advent of cloud-native, 5G/IoT era, new business models are continuously emerging. Beyond simple primary key queries and range queries, basic business needs now include simple analysis and multi-dimensional retrieval. Common query requirements include:

Such diverse needs for low-cost storage and retrieval of massive data have become basic requirements for increasingly more businesses. The Lindorm system continues to explore how to support diverse query scenarios on top of its own highly scalable, low-cost advantages.

To efficiently meet the demands of complex business queries with limited resources, Lindorm aims to design a new engine that operates with database features and is ready to use. This engine will help businesses address complex query issues over massive datasets. Indexes are typically used to accelerate queries, and introducing a new type of index can solve the problem of complex queries over vast amounts of data. As a multi-model database, Lindorm natively supports search engines and inherently possesses full-text indexing capabilities. By integrating search engines, Lindorm wide tables have added SearchIndex, allowing businesses to resolve complex query problems by applying for a new index, without the need to be aware of multiple underlying engines and data flows, similar to the convenience and speed of using Lindorm secondary indexes. The SearchIndex focuses on building the following capabilities:

When creating an index table using SearchIndex, you only need to enumerate the index column names; there is no need to be aware of the existence of the index table when querying. The following examples illustrate how to use Lindorm CQL to operate SearchIndex.

Note:

CQL is the query language for Cassandra, and Lindorm seamlessly supports the Cassandra API.

|

System Generated |

User Defined Attributes |

||||

|

ID |

Name |

Age |

Sex |

City |

Address |

|

1001 |

Alice Smith |

30 |

F |

New York |

123 Maple Street, Apt 4B |

|

1002 |

Bob Johnson |

32 |

M |

Los Angeles |

456 Oak Drive, Suite 210 |

|

1003 |

Carol White |

33 |

F |

Chicago |

789 Pine Lane, Unit 12 |

|

1004 |

David Brown |

28 |

M |

Houston |

101 Elm Court, Bldg 5 |

|

1005 |

Emma Davis |

41 |

F |

Phoenix |

202 Birch Avenue, House 10 |

|

1006 |

Frank Wilson |

17 |

M |

Philadelphia |

303 Cedar Boulevard, Apt 3C |

CREATE TABLE myTable (

id bigint,

name text,

age int,

sex text,

city text,

address text,

PRIMARY KEY (id)

) WITH compression = {'class': 'ZstdCompressor'};Create a full-text index on name, age, sex, city, and address.

CREATE SEARCH INDEX myIndex ON myTable WITH COLUMNS (name, age, sex, city, address);Note:

The order of the indexed columns does not affect the outcome; that is, an index on columns (c3, c2, c1) will have the same final effect as an index on columns (c1, c2, c3).

● Query

• Standard query

fuzzy query:SELECT * FROM myTable WHERE name LIKE 'A%'

Multi-dimensional query sorting:SELECT * FROM myTable WHERE city='Chicago' AND age>=18 ORDER BY age ASC

Multi-dimensional query pagination:SELECT * FROM myTable WHERE name='Carol White' AND sex=false OFFSET 100 LIMIT 10 ORDER BY age DESC• Advance query

Multi-dimensional query sorting:SELECT * FROM myTable WHERE search_query='+city:Phoenix +age:[18 TO *] ORDER BY age ASC'

Text retrieval:SELECT * FROM myTable WHERE search_query='address:Birch Avenue'SearchIndex has already been successfully applied to various business scenarios within Alibaba internally. This feature is now publicly available on the cloud, supporting the following key functionalities:

* represents any number of characters; ? represents any single character.With these functionalities, Lindorm can be easily applied to diverse business scenarios. Classic use cases include but are not limited to:

Order Details: Such as logistics orders and transaction bills, supporting multidimensional queries and sorting of orders.

Tagging and Profiling: For example, merchants tagging and targeting messages to buyers.

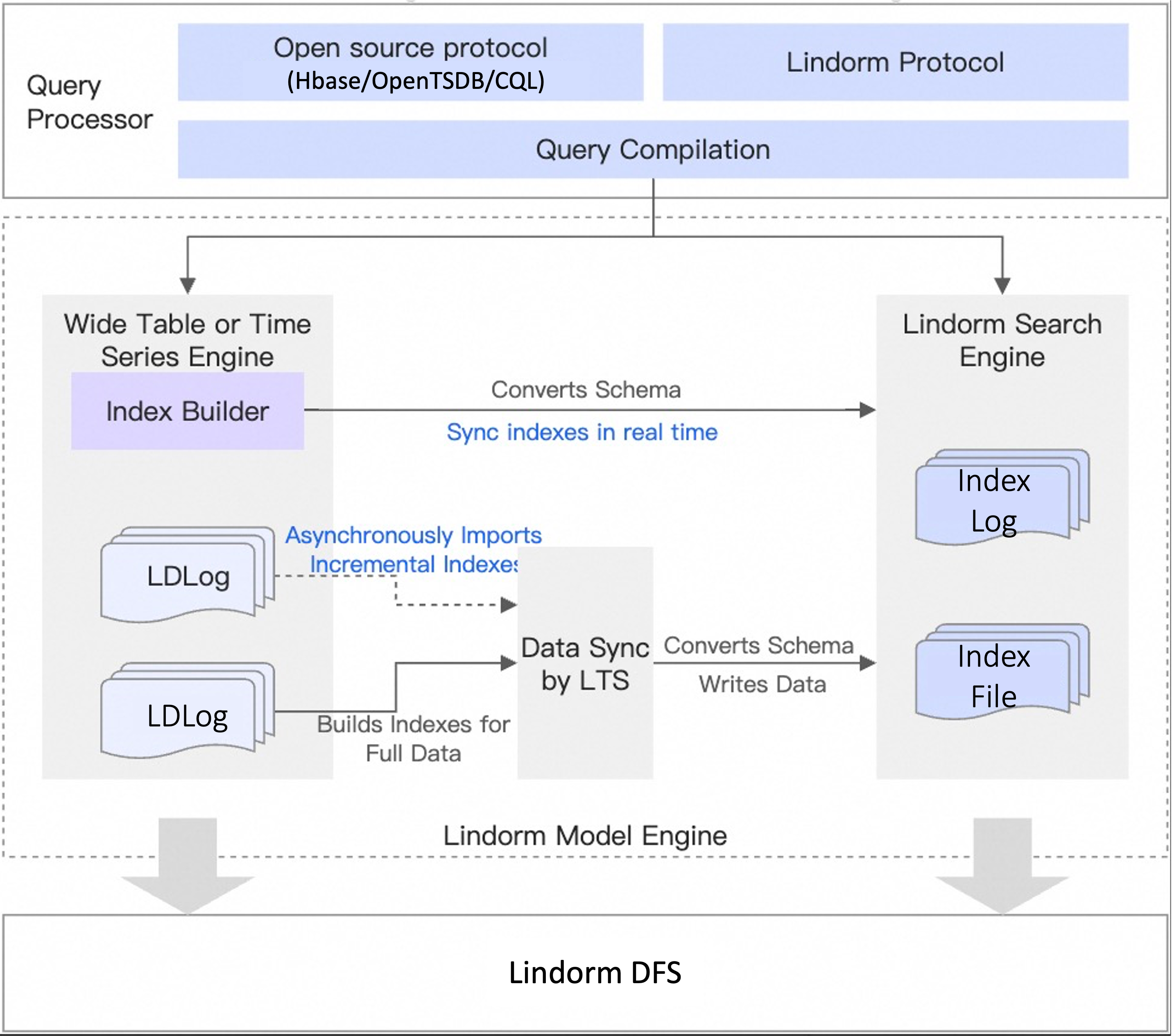

As a multi-model database, Lindorm supports various models and deeply integrates the search engine with the wide table model, offering a simple and user-friendly SearchIndex. The overall layered architecture is as follows:

It is composed of multiple QueryProcessor nodes, primarily responsible for query access, SQL parsing, and automatically selecting the appropriate index based on RBO.

Based on the metadata of index columns, this step transforms newly inserted or updated raw data into index data. For different scenarios, it allows choosing compatible Mutability attributes. For example, in everyday monitoring where data does not need updating after it’s written, the Immutable mode can be selected to generate index data directly. For stateful data that often requires partial updates, the process involves reading historical data to assemble index data and supports business-defined timestamp writing to ensure data is not disordered.

For eventual consistency mode (default), LTS (Lindorm Tunnel Service) serves as the data synchronization service within the Lindorm ecosystem, featuring efficient real-time synchronization and full data migration capabilities. It can monitor changes in the WAL in real-time, convert index data into searchable data, and supports multiple writes from a single read (one WAL can sync to multiple index tables), greatly enhancing sync efficiency. For strong consistency mode, after building the raw index data, it is synchronized to the search engine in real-time, creating full-text indexes immediately available for querying, thus providing a write-to-read strong consistency experience.

A distributed Lucene cluster composed of multiple nodes, where the data is divided into multiple shards based on Hash or Range, offering full-text search capabilities externally.

The index data is stored on the distributed file system Lindorm DFS. The architecture with separate storage and computation ensures excellent scalability. Additionally, transparent compression at the storage layer and smart cold/hot data separation can significantly reduce index storage costs.

As a distributed database, Lindorm can horizontally scale to support processing capabilities of up to hundreds of millions per second. If index DDL operations were to block DML, it would amplify the impact on high-concurrency business applications. Using Lindorm's distributed metadata management, SearchIndex supports online DDL operations through appropriate extensions without compromising data integrity.

In wide table applications, columns might not be fixed, particularly in labeling and portrait scenarios, where adding or deleting index columns is often required. SearchIndex provides Java API/CQL interface for dynamic index column operations.

Each index column supports multiple attributes: indexed (whether to index, default true), stored (whether to store raw value, default false), docvalues (whether to maintain forward index, default true), token type, etc. Initially, if an index column is not set to stored, raw values will not be stored in the index table, and the service will automatically fetch raw data from Lindorm wide table. At any time, these settings can be changed, for example, changing the stored attribute to true via an interface.

Lindorm wide tables support setting compression algorithms (e.g., ZSTD, Snappy) on creation or modifying them dynamically post-creation. SearchIndex, being an independent index table relying on Lucene, initially only supported LZ4 and ZLIB compression. To ensure uniform attributes between the primary and index tables, modifications to Lindorm include support for ZSTD and Snappy compression in Lucene. Thus, any changes in the primary table compression algorithm will trigger linked modification in SearchIndex, effectively reducing index storage size.

To automatically discard historical data, Lindorm supports dynamic table TTL changes, for example, setting TTL=30 days means data older than 30 days from now will be discarded and unavailable for queries. Lindorm implemented row-level TTL capabilities in Lucene, synchronized with the primary table’s TTL to ensure data consistency between the primary and index tables.

Index table statuses include: DISABLED (non-writable, non-readable), BUILDING (writable, non-readable), and ACTIVE (writable, readable). During index creation, deletion, or historical data indexing, dynamic status changes in the index table are often required without affecting ongoing DML requests.

Similar to the Lindorm wide table, when designing SearchIndex, Lindorm provides support for multiple consistency levels suited for various business scenarios.

|

Consistency Levels |

Read-Write Consistency Guarantee |

Availability |

|

Eventual Consistency (EC) |

After data is written, a certain period of time (in seconds) is required before it can be read. |

Read and write operations can avoid any hangs and glitches. Read and write recovery time after a crash is 10 ms. |

|

Strong Consistency (SC) |

After data is written, it can be read immediately with 100% certainty. |

Only the primary replica provides read and write services. Recovery time for the primary replica is generally at seconds level after crash. |

Indexes can accelerate queries, helping businesses further extract value from their data, but they also increase write and storage costs. On one hand, Lindorm significantly reduces the storage size of indexes with various efficient compression algorithms. On the other hand, it offers optional index building methods to minimize the impact on write throughput. The speed ofbuilding the Index WALdirectly affects the write performance of raw data. Lindorm's wide table is a KV database that naturally supports partial column updates, whereas the search engine Lucene can only update entire rows, not individual columns. Therefore, when building indexes, it is necessary to back-read the original table to obtain historical data in order to piece together a complete index WAL. Lindorm categorizes this back-read operation according to business scenarios and supports different options.

| Construction Method | Applicable Scenarios | Characteristics |

|---|---|---|

| IMMUTABLE (Cost: Lowest) |

Data only increases, not deleted (data can be eliminated via TTL). For example, monitoring data/log data. Once written, is not updated or deleted. |

Index construction is highly efficient, as there is no need to refer back to old data, and the index is directly generated based on the current data. |

| MUTABLE_LATEST (Cost: Medium) |

General use cases (excluding UDT). | If the index columns are c1 and c2, and first c1 is written, then c2 is written, you need to read the original c1 value upon writing the value of c2 to be able to assemble the complete index data c1, c2. |

| MUTABLE_ALL (Cost: Highest) |

Custom business-defined timestamp during data writing (User-Defined Timestamp). For example, in scenarios where both full tasks and incremental tasks coexist, timestamps are often required to be carried during full tasks to ensure incremental write data is not overwritten. |

● The storage engine layer has timestamps for each key-value. If the business does not explicitly set it when writing, the server will automatically set it to the system timestamp, following the principle of “larger timestamps take precedence.” ● When writing with a business-defined timestamp, it is necessary to retrieve all historical data (including deleted data) to accurately determine if the current write is valid. If the timestamp is smaller, the data is discarded, and no index is constructed. |

In the eventual consistency model, index data synchronization relies on the LTS service. As the data channel within the Lindorm ecosystem, the LTS service offers efficient real-time synchronization and full data migration capabilities. Data written to the wide table can be detected within milliseconds and quickly synchronized to the search engine.

LTS provides a web interface that allows users to view detailed information regarding index synchronization, such as synchronization duration, index table information, and the volume of synchronized data. Additionally, LTS can export this information as monitoring metrics, integrate with alert systems, and monitor the health of the synchronization pipeline in real-time.

Internally, LTS employs a high-concurrency producer/consumer model that supports rapid digestion of large volumes of data, enabling each WAL (Write-Ahead Log) to be read only once. It also supports horizontal scalability, allowing newly added nodes to quickly join the synchronization pipeline and accelerate the synchronization of index data.

Through a hidden timestamp attribute, data written first into the wide table is guaranteed to be indexed first in the search engine, and later data is indexed afterward. This ensures data consistency between the wide table and the search engine, completely resolving data inconsistency issues that existed with LilyIndexer.

For existing historical data, the full task execution mechanism of LTS enables efficient retrieval of raw data from wide tables to generate indexes. TB-level data volumes can complete index construction within minutes.

Supports comparison and validation of existing data to quickly identify inconsistent index data, helping businesses promptly discover issues.

Index data can be queried immediately after being written successfully, achieving real-time visibility. This is a strongly consistent model. The underlying SearchIndex relies on Lucene, which has a notable "limitation": data cannot be queried immediately after being written; it requires explicit execution of Flush or Commit operations to be searchable. This makes Lucene-based services unsuitable for real-time business scenarios and only applicable to monitoring, logging, and other weak real-time scenarios. In the industry, Lucene-based distributed search engines like Elasticsearch/Solr offer near real-time query (NRT) functionality to mitigate this issue, ensuring that index data is searchable within a certain timeframe (usually seconds), but still failing to meet real-time requirements.

To solve the problem where written data cannot be queried immediately, Lindorm implements a real-time visible indexing scheme based on Lucene. Through fine-grained data structure design and dynamic memory management mechanisms, it ensures that once index data is successfully written, it can be queried immediately, achieving true real-time visibility.

CQL is the official query language of Cassandra, acting as an SQL dialect suitable for NoSQL databases. Given Lindorm's seamless compatibility with Cassandra, it is recommended to use CQL to access SearchIndex by default.

DDL

CREATE SEARCH INDEX index_name [ IF NOT EXISTS ] ON [keyspace_name.]table_name

| [ WITH [ COLUMNS (column1,...,columnn) ]

| [ WITH [ COLUMNS (*) ]DROP SEARCH INDEX [IF EXISTS] ON [keyspace_name.]table_name;REBUILD SEARCH INDEX [IF EXISTS] ON [keyspace_name.]table_name;ALTER SEARCH INDEX SCHEMA [IF EXISTS] ON [keyspace_name.]table_name

( ADD column_name

| DROP column_name) ;DML

SELECT selectors

FROM table

WHERE (indexed_column_expression | search_query = 'search_expression')

[ LIMIT n ]

[ ORDER BY column_name ] When the standard query syntax cannot meet the retrieval requirements, you can consider using search_query to directly retrieve data from the search engine. The syntax used is also Lucene's syntax. For example, in the use case below, it is equivalent to retrieving data where the city is 'hangzhou' and the age is between 1 and 18.

SELECT name,id FROM myTable WHERE search_query = '+city:hangzhou +age:[1 TO 18]';Note:

More detailed CQL Syntax can refer to CREATE INDEX.

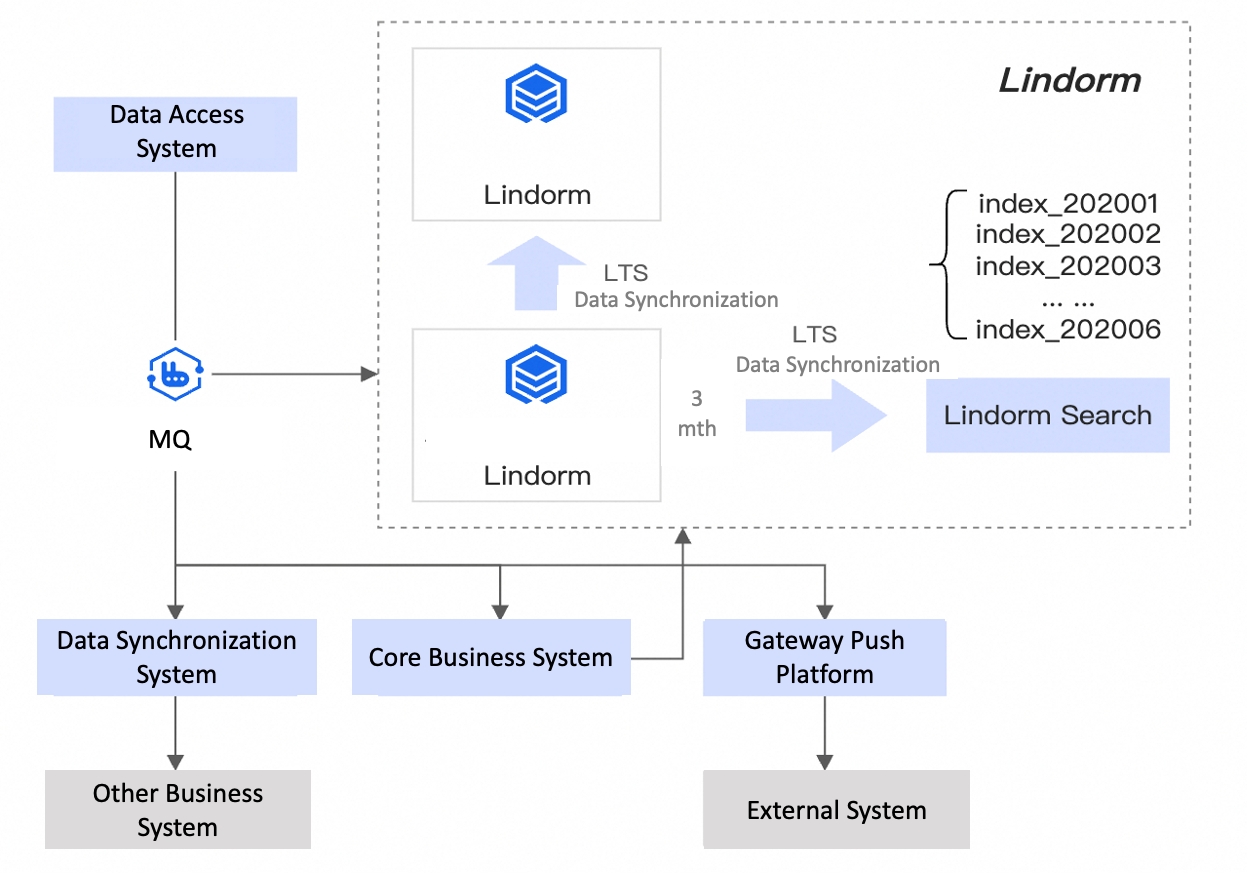

For business scenarios such as logistics, third-party payment, and mobile travel, the storage of order data is a core requirement. Additionally, order data often has its unique inherent characteristics.

Previously, to address these demands, a typical solution was MySQL plus a search engine. The business data would be written to both systems, or real-time synchronization would be achieved using binlog, and queries would retrieve results from different systems. As the data volume grows, the architecture might evolve into MySQL for hot data, Lindorm for cold data, plus the search engine. While this setup can effectively solve business problems, it requires considerable time and human resources to maintain multiple systems.

Now, Lindorm can solve these problems with a one-stop solution, eliminating concerns about data transfer and providing a unified API for access.

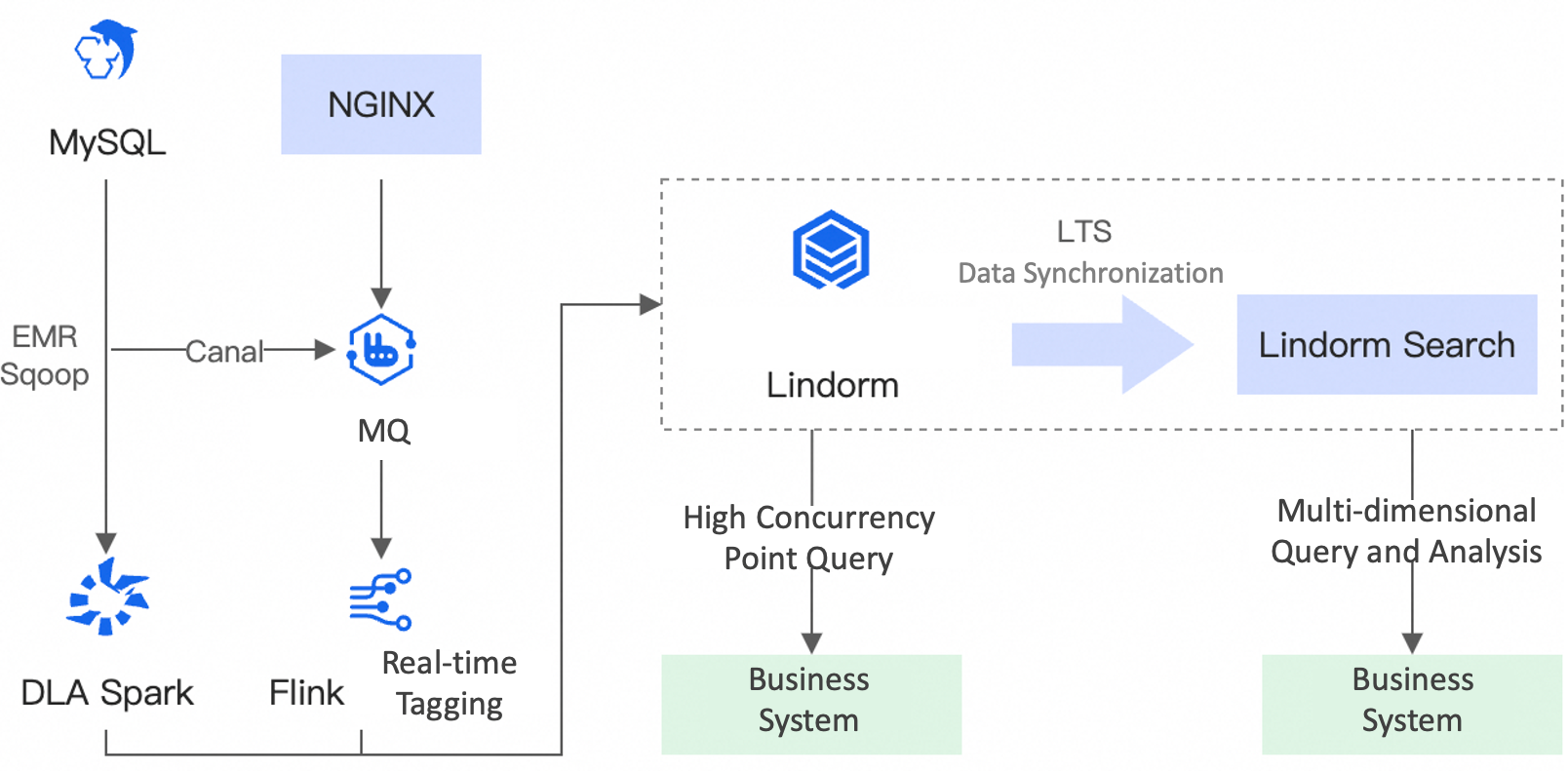

There are generally two types of user profile data: basic data and tagged data obtained through analysis. This data can be applied to marketing, recommendation, and other scenarios, helping businesses achieve rapid revenue growth. The main pain points of profile data are as follows:

Profile scenarios generally do not require strong transactional demands but involve large data volumes and high concurrent read/write operations, making relational databases less suitable. Lindorm, as a NoSQL database, is very suitable for such scenarios.

Features like multiple column families, dynamic columns, and TTL (Time-To-Live) are well-suited for business scenarios with non-fixed table structures and frequent changes. It also offers:

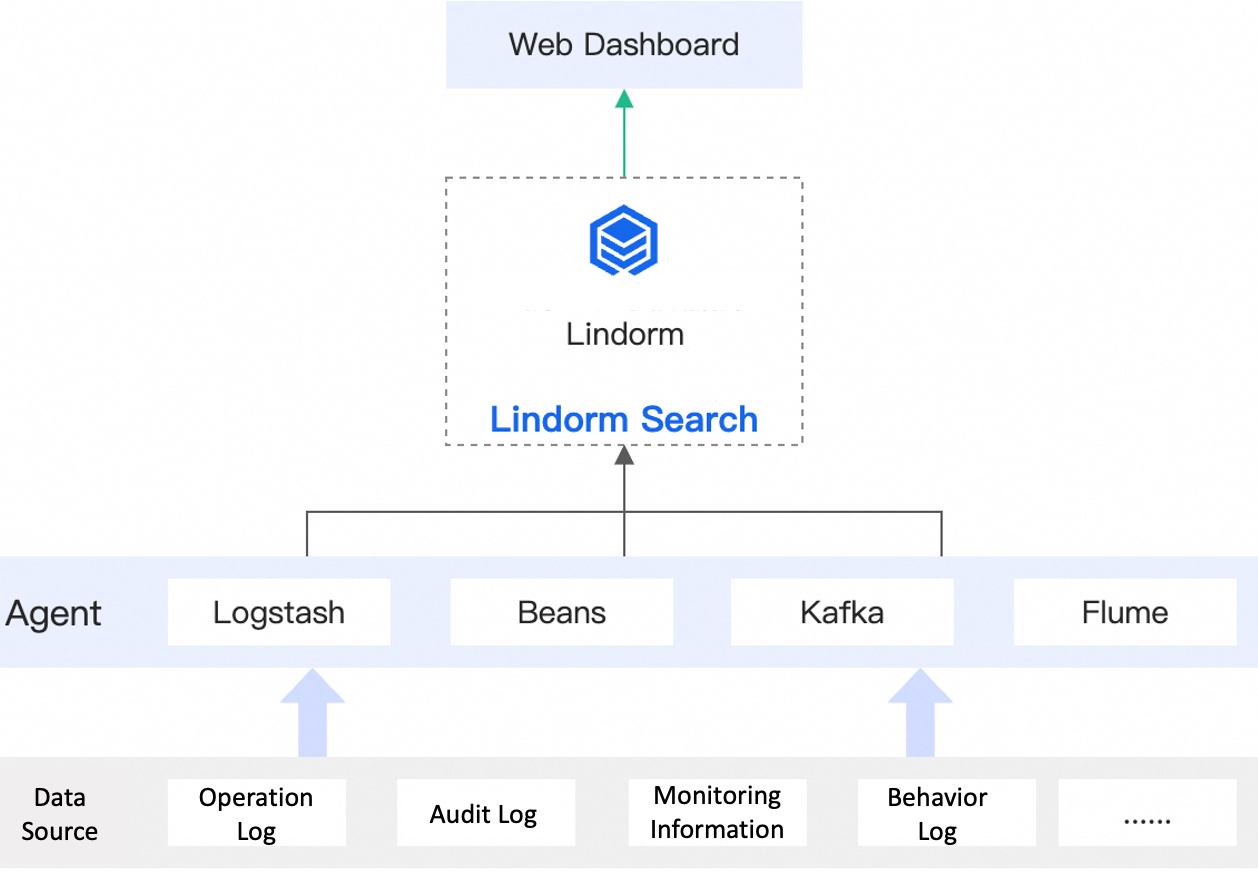

The sources of logs are very diverse, such as system logs, database audit logs, user behavior logs, etc. These data are typically stored in open-source Elasticsearch (ES) in internet companies, constructing a one-stop log platform with the help of the ELK stack. However, the storage cost of ES is very high, and storage and computation often need to be deployed on the same machine. Under massive data volumes, system maintenance faces numerous challenges, and tasks such as data migration and node scaling often require manual intervention. The SearchIndex of the multi-model database Lindorm is a better choice for log retrieval scenarios. It reduces costs by storing massive data through a wide-table engine, accelerates queries by building appropriate indexes with a search engine, and further reduces business development costs through unified API operations.

Alibaba Clouder - February 14, 2020

ApsaraDB - June 4, 2020

ApsaraDB - December 13, 2024

ApsaraDB - December 17, 2024

Hironobu Ohara - June 13, 2023

ApsaraDB - July 28, 2021

Best Practices

Best Practices

Follow our step-by-step best practices guides to build your own business case.

Learn More Lindorm

Lindorm

Lindorm is an elastic cloud-native database service that supports multiple data models. It is capable of processing various types of data and is compatible with multiple database engine, such as Apache HBase®, Apache Cassandra®, and OpenTSDB.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn More Oracle Database Migration Solution

Oracle Database Migration Solution

Migrate your legacy Oracle databases to Alibaba Cloud to save on long-term costs and take advantage of improved scalability, reliability, robust security, high performance, and cloud-native features.

Learn MoreMore Posts by ApsaraDB