By Yang Wenlong, nicknamed Zhengyan at Alibaba.

Since 2019, Alibaba's Lindorm engine has served several core business units of Alibaba Group. These include the Taobao and Tmall e-commerce platforms, Ant Financial, and the Cainiao logistics network, as well as Alimama, Youku, Amap, and the Alibaba Media and Entertainment unit. Lindorm has brought new levels of performance and availability to all of these systems. During last year's Double 11 shopping festival (often called China's Black Friday, and easily the world's largest online shopping event), peak traffic reached a whopping 750 million requests per second, with a daily throughput of 22.9 trillion requests. Throughout the event, the average response time stayed below 3 milliseconds, and total storage volume reached hundreds of petabytes.

These mind-blowing figures reflect a year of hard work and dedication from Alibaba's HBase and Lindorm team. Alibaba Group faced enormous pressure on both scale and cost, and in response the team rebuilt and upgraded HBase into a brand-new product, Lindorm. In doing so, it drew on many years of experience handling hundreds of petabytes of data, hundreds of millions of requests, and thousands of businesses. In many ways, Lindorm is a significant improvement over HBase in performance, functionality, and availability.

In this post, we discuss Lindorm's core capabilities, paying special attention to its advantages in performance, functionality, and availability. Finally, we describe some ongoing projects involving Lindorm.

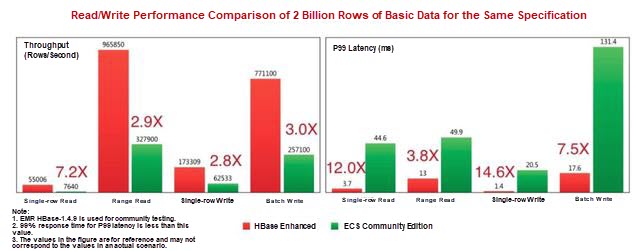

Compared with HBase, Lindorm brings a new level of optimization to remote procedure calls (RPC), memory management, caching, and log writing. It also introduces many new technologies that have significantly improved read and write performance across Alibaba's business systems. On the same hardware, Lindorm's throughput can exceed that of HBase by more than 500%, and its latency spikes (glitches) are only one tenth of those that have plagued HBase. These figures were not produced under special lab conditions. They were measured with the Yahoo Cloud Serving Benchmark (YCSB) using its original, unmodified parameters. We have published the test tools and scenarios in Alibaba Cloud's help documentation, so anyone can reproduce the results by following the guide.

The performance that we've achieved was, of course, backed by some cutting-edge technologies that were developed by and for Lindorm over several years' time. The following sections briefly introduce some of the technologies used in the Lindorm kernel.

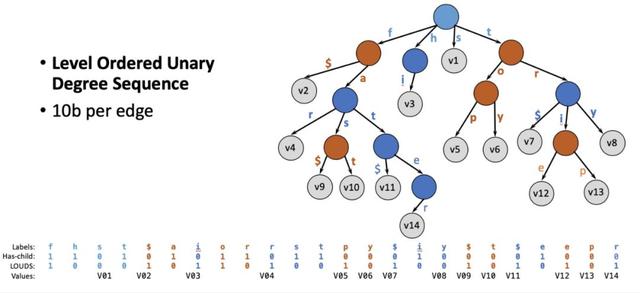

First, consider LDFile, Lindorm's file format (similar to HFile in HBase). It is a read-only B+ tree structure in which file indexes are a crucial data structure. Indexes in the block cache have high priority and should stay resident in memory as much as possible. Shrinking the indexes therefore reduces the block cache memory they need. Alternatively, for the same index memory, you can increase index density and use smaller data blocks to improve performance. In HBase, however, index blocks store every rowkey in full, even though many rowkeys in a sorted file share the same prefix.

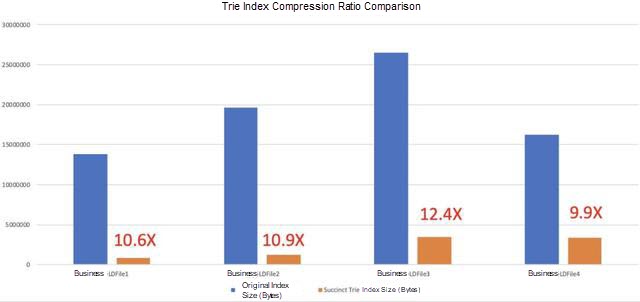

A trie stores a shared prefix only once for all the rowkeys that share it, which avoids the waste of storing that prefix repeatedly. However, in a traditional prefix tree, the pointers from one node to another take up an excessive amount of space. A succinct prefix tree (succinct trie) solves this problem. SuRF, which won the best paper award at SIGMOD 2018, proposed using a succinct trie in place of a Bloom filter to provide range filtering. Inspired by this paper, our team used a succinct trie to build file block indexes.

The trie index is now used in many of our online business units. Across these scenarios, it greatly reduces index size, by a factor of up to 12. The saved space lets the memory cache hold more index and data blocks, which greatly improves request performance.
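The following minimal Java sketch makes the prefix-sharing argument concrete using front coding (incremental prefix compression) on sorted rowkeys. It is a deliberately simpler technique than the succinct trie used in LDFile. It shows only why storing shared prefixes once saves space. The class name `FrontCoding` and the sample keys are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Front coding: store each sorted key as (length of prefix shared with the previous key, suffix). */
final class FrontCoding {
    static final class Entry {
        final int sharedPrefixLen;
        final String suffix;

        Entry(int sharedPrefixLen, String suffix) {
            this.sharedPrefixLen = sharedPrefixLen;
            this.suffix = suffix;
        }
    }

    /** Encodes keys that are already in sorted order. */
    static List<Entry> encode(List<String> sortedKeys) {
        List<Entry> out = new ArrayList<>();
        String prev = "";
        for (String key : sortedKeys) {
            int shared = commonPrefixLength(prev, key);
            out.add(new Entry(shared, key.substring(shared)));
            prev = key;
        }
        return out;
    }

    /** Rebuilds the keys; decoding is sequential because each key depends on the previous one. */
    static List<String> decode(List<Entry> entries) {
        List<String> out = new ArrayList<>();
        String prev = "";
        for (Entry e : entries) {
            String key = prev.substring(0, e.sharedPrefixLen) + e.suffix;
            out.add(key);
            prev = key;
        }
        return out;
    }

    /** Characters actually stored, ignoring the small prefix-length fields. */
    static int storedChars(List<Entry> entries) {
        int total = 0;
        for (Entry e : entries) total += e.suffix.length();
        return total;
    }

    static int commonPrefixLength(String a, String b) {
        int n = Math.min(a.length(), b.length());
        int i = 0;
        while (i < n && a.charAt(i) == b.charAt(i)) i++;
        return i;
    }
}
```

For the keys `user0001#order1`, `user0001#order2`, and `user0002#order1`, only 24 of the 45 characters need to be stored. Front coding must still be decoded sequentially from the first key. A succinct trie goes further: it encodes the tree shape in compact bit vectors so that lookups can navigate the structure directly.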

The Z Garbage Collector (ZGC) keeps pauses at about 5 milliseconds even with a 100 GB heap. It is available in Dragonwell JDK and is a next-generation pauseless garbage collection algorithm. Application threads (mutators) detect pointer changes through read barriers, so most marking and relocation work can run concurrently with the application. This is a huge advancement.

After many improvements and adjustments by the Lindorm team and the AJDK team, ZGC has reached production-level availability in Lindorm. The main achievements include:

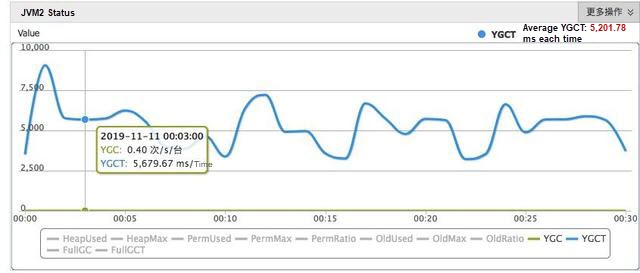

The last point is particularly significant. AJDK's ZGC ran stably on Lindorm for two months and handled the traffic spikes of Double 11 with ease. JVM pauses were about 5 milliseconds and never exceeded 8 milliseconds. ZGC greatly improved response time and latency-spike metrics in online operations: average response time dropped by 15% to 20%, and P999 response time was cut in half. In the Ant risk control cluster, which assesses the risk of Alipay transactions, ZGC reduced the P999 response time from 12 milliseconds to 5 milliseconds during last year's Double 11. The figure below illustrates this.

Note that this figure uses microseconds (μs), whereas the average garbage collection (GC) pause is about 5 milliseconds (ms).

Now let's discuss LindormBlockingQueue. First, though, some context is needed.

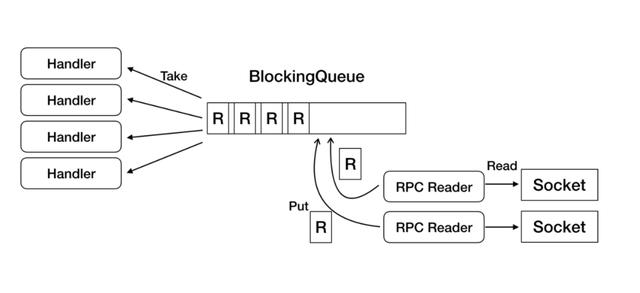

The figure above shows how an HBase RegionServer reads remote procedure call (RPC) requests from the network and distributes them to handlers. RPC readers read requests from the sockets and put them into a BlockingQueue, and handlers then take the requests from this queue and execute them. For this queue, HBase uses LinkedBlockingQueue, which is provided by the JDK. LinkedBlockingQueue ensures thread safety and synchronization between threads with locks and conditions. As throughput increases, however, it becomes a serious performance bottleneck.

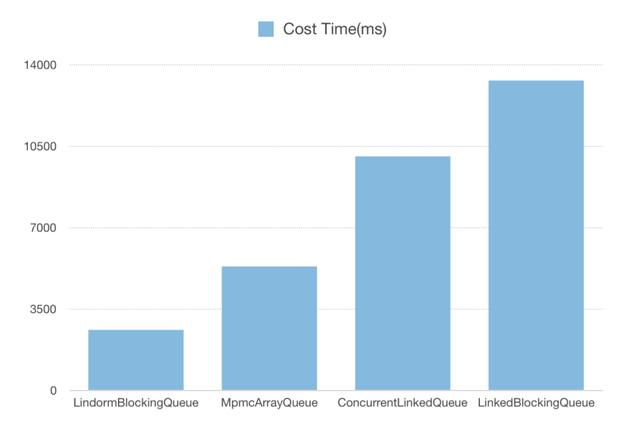

LindormBlockingQueue was designed to address this problem. It stores elements in a slot array and maintains head and tail pointers. It reads and writes the queue with compare-and-swap (CAS) operations, which eliminates unnecessary critical sections. It also uses cache line padding and dirty-read caching for acceleration. Its wait policy is customizable (spin, yield, or block), which avoids frequent thread parking when the queue is empty or full. As a result, LindormBlockingQueue performs more than four times better than LinkedBlockingQueue, as the figure below shows.

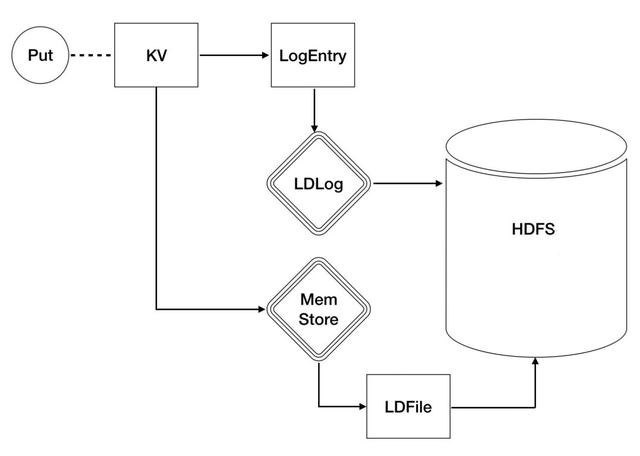
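The slot-array design described above can be sketched with the well-known bounded multi-producer/multi-consumer queue by Dmitry Vyukov. In that design, each slot carries a sequence number, and producers and consumers claim positions with CAS on the tail and head counters. This is not LindormBlockingQueue's source. The class name `SlotRingQueue`, the spin/yield/park thresholds, and the omission of cache line padding are all assumptions of this sketch.

```java
import java.util.Objects;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicLongArray;
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.concurrent.locks.LockSupport;

/** Bounded lock-free MPMC queue over a slot array (Vyukov-style per-slot sequence numbers). */
final class SlotRingQueue<E> {
    private final int mask;
    private final AtomicReferenceArray<E> slots;
    private final AtomicLongArray sequences;           // coordinates producers and consumers per slot
    private final AtomicLong tail = new AtomicLong();  // next position to write
    private final AtomicLong head = new AtomicLong();  // next position to read

    SlotRingQueue(int capacity) {
        if (capacity < 2 || Integer.bitCount(capacity) != 1) {
            throw new IllegalArgumentException("capacity must be a power of two >= 2");
        }
        mask = capacity - 1;
        slots = new AtomicReferenceArray<>(capacity);
        sequences = new AtomicLongArray(capacity);
        for (int i = 0; i < capacity; i++) sequences.set(i, i);
    }

    /** Non-blocking insert; returns false if the queue is full. */
    boolean offer(E e) {
        Objects.requireNonNull(e);                     // null is reserved for "empty" in poll()
        long pos = tail.get();
        while (true) {
            int idx = (int) (pos & mask);
            long diff = sequences.get(idx) - pos;
            if (diff == 0) {
                if (tail.compareAndSet(pos, pos + 1)) { // claim the slot with CAS, no lock
                    slots.set(idx, e);
                    sequences.set(idx, pos + 1);        // publish: slot is now readable
                    return true;
                }
                pos = tail.get();
            } else if (diff < 0) {
                return false;                           // slot not yet consumed: queue is full
            } else {
                pos = tail.get();                       // another producer moved ahead; reload
            }
        }
    }

    /** Non-blocking removal; returns null if the queue is empty. */
    E poll() {
        long pos = head.get();
        while (true) {
            int idx = (int) (pos & mask);
            long diff = sequences.get(idx) - (pos + 1);
            if (diff == 0) {
                if (head.compareAndSet(pos, pos + 1)) {
                    E e = slots.get(idx);
                    slots.set(idx, null);
                    sequences.set(idx, pos + mask + 1); // free the slot for the next lap
                    return e;
                }
                pos = head.get();
            } else if (diff < 0) {
                return null;                            // nothing published here yet: empty
            } else {
                pos = head.get();
            }
        }
    }

    /** Blocking take with a spin, then yield, then timed-park wait policy. */
    E take() {
        int attempts = 0;
        E e;
        while ((e = poll()) == null) {
            if (attempts < 100) {
                Thread.onSpinWait();
            } else if (attempts < 200) {
                Thread.yield();
            } else {
                LockSupport.parkNanos(50_000L);         // back off instead of burning CPU
            }
            if (attempts < 200) attempts++;
        }
        return e;
    }
}
```

Because `offer` and `poll` never take a lock, a full or empty queue is reported immediately. The waiting policy lives only in `take`, so that is where the spin, yield, and block choices would be made configurable.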

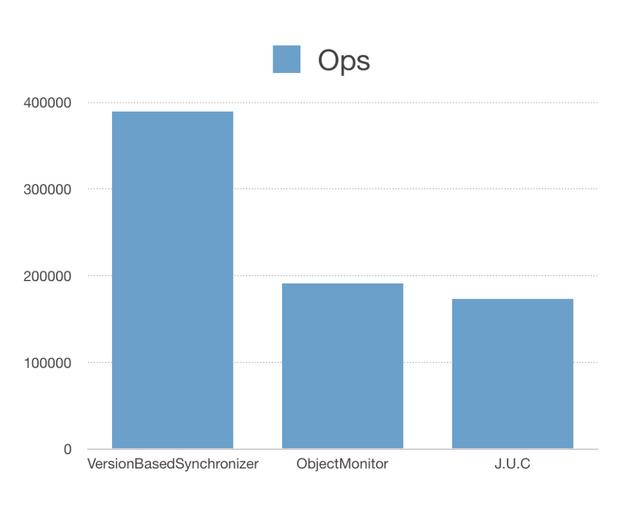

As the figure above shows, LDLog is the log Lindorm uses to recover data on system failover, ensuring data atomicity and reliability. All data must first be written to LDLog, and operations such as writing to MemStore can proceed only after the LDLog write succeeds. Handlers therefore have to be woken up for their next step once the write-ahead log (WAL) write completes. Under heavy load, waking handlers unnecessarily causes a large number of CPU context switches and degrades performance. To address this, the Lindorm team developed VersionBasedSynchronizer, a version-based multi-thread synchronization mechanism that significantly reduces context switching.

VersionBasedSynchronizer lets the Notifier see what each handler is waiting for, which reduces the wake-up pressure on the Notifier. In module tests, VersionBasedSynchronizer was more than twice as efficient as the JDK's ObjectMonitor and its java.util.concurrent (J.U.C) primitives.
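The idea that the notifier knows what each handler is waiting for can be illustrated with a small version gate. Each handler registers the WAL version it needs, and the notifier wakes only the handlers whose version has now been synced, instead of waking every handler. This is a hypothetical sketch: `VersionGate`, `awaitSynced`, and `advanceTo` are invented names, and the code is not VersionBasedSynchronizer's actual implementation.

```java
import java.util.Comparator;
import java.util.concurrent.ConcurrentSkipListSet;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.locks.LockSupport;

/** Handlers wait for a log version; the notifier wakes only handlers whose version is synced. */
final class VersionGate {
    private static final class Waiter {
        final long version;
        final long id;
        final Thread thread;

        Waiter(long version, long id, Thread thread) {
            this.version = version;
            this.id = id;
            this.thread = thread;
        }
    }

    private final AtomicLong synced = new AtomicLong();
    private final AtomicLong nextId = new AtomicLong();
    // Sorted by target version, so the notifier can stop at the first unsatisfied waiter.
    private final ConcurrentSkipListSet<Waiter> waiters = new ConcurrentSkipListSet<>(
            Comparator.<Waiter>comparingLong(w -> w.version).thenComparingLong(w -> w.id));

    /** Handler side: block until the log is synced up to at least targetVersion (interrupts ignored). */
    void awaitSynced(long targetVersion) {
        if (synced.get() >= targetVersion) return;              // fast path: no registration
        Waiter me = new Waiter(targetVersion, nextId.incrementAndGet(), Thread.currentThread());
        waiters.add(me);
        try {
            while (synced.get() < targetVersion) {              // re-check after registering: no lost wake-up
                LockSupport.park(this);
            }
        } finally {
            waiters.remove(me);
        }
    }

    /** Notifier side: publish a new synced version and wake only the satisfied waiters. */
    void advanceTo(long version) {
        long current = synced.accumulateAndGet(version, Math::max); // never move backwards
        for (Waiter w : waiters) {                              // ascending target version
            if (w.version > current) break;                     // everyone after this still waits
            LockSupport.unpark(w.thread);
        }
    }

    long syncedVersion() { return synced.get(); }

    int waiterCount() { return waiters.size(); }
}
```

A waiter registers before its final check of `synced`, so a concurrent `advanceTo` either sees the registration and unparks the waiter, or the waiter sees the new version and never parks.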

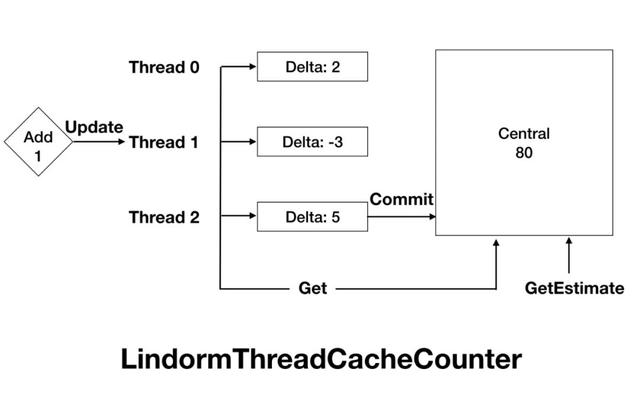

The HBase kernel has many locks on its critical path, and in high-concurrency scenarios these locks cause thread contention and performance degradation. The Lindorm kernel makes these key paths lock-free, removing locks entirely from the multiversion concurrency control (MVCC) and write-ahead logging (WAL) modules. HBase also records various metrics during operation, such as queries per second, response time, and cache hit ratio, and recording these metrics involves a large number of locks as well. To solve this metrics performance problem, Lindorm developed LindormThreadCacheCounter, based on the thread-caching idea behind TCMalloc.
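LindormThreadCacheCounter itself is not public, but the JDK ships a counter built on a similar principle. `java.util.concurrent.atomic.LongAdder` spreads contended updates across several internal cells and adds them up only when the value is read. The following sketch uses it to record the metrics mentioned above without locks. The `RequestMetrics` class is hypothetical and stands in for Lindorm's counter rather than reproducing it.

```java
import java.util.concurrent.atomic.LongAdder;

/** Lock-free request metrics: cheap concurrent updates, slightly more expensive reads. */
final class RequestMetrics {
    private final LongAdder requests = new LongAdder();
    private final LongAdder totalLatencyMicros = new LongAdder();
    private final LongAdder cacheHits = new LongAdder();

    /** Hot path: no lock; each LongAdder spreads contended updates across internal cells. */
    void record(long latencyMicros, boolean cacheHit) {
        requests.increment();
        totalLatencyMicros.add(latencyMicros);
        if (cacheHit) cacheHits.increment();
    }

    long requestCount() { return requests.sum(); }

    /** Reporting path: the sums are not one atomic snapshot, which is usually fine for metrics. */
    double averageLatencyMicros() {
        long n = requests.sum();
        return n == 0 ? 0.0 : (double) totalLatencyMicros.sum() / n;
    }

    double cacheHitRatio() {
        long n = requests.sum();
        return n == 0 ? 0.0 : (double) cacheHits.sum() / n;
    }
}
```

The design shifts cost from writers to readers. That trade-off suits metrics, which are updated on every request but read only when a monitoring system collects them.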

In concurrent applications, a single remote procedure call (RPC) request involves multiple submodules and several I/O operations, and the collaboration between these submodules causes frequent context switches. Reducing context switches is therefore essential in high-concurrency systems. The industry has produced many ideas and real-world implementations for doing this. Here we focus on two of them: coroutines and the Staged Event-Driven Architecture (SEDA).

For reasons of project cost, maintainability, and code readability, Lindorm uses coroutines for asynchronous optimization. We use Wisp2.0, a built-in feature of Dragonwell JDK provided by the Alibaba JVM team, to run HBase handlers as coroutines. Wisp2.0 is simple to adopt and effectively reduces system resource consumption. The following figure shows the measured results of this optimization.

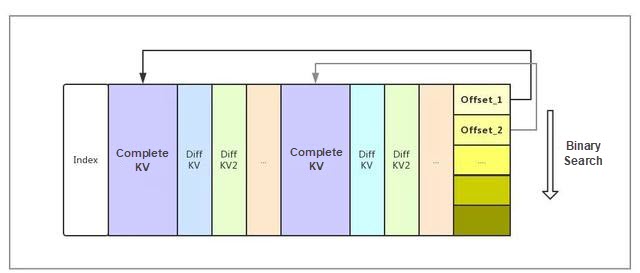

To improve performance, HBase usually needs to load meta information into the block cache. Small blocks produce a large amount of meta information, which cannot all fit in the cache, so performance degrades. With large blocks, sequentially searching within an encoded block becomes a bottleneck for random reads. To address this, Lindorm developed Indexable Delta Encoding, which lets a seek locate data within a block through an index and greatly improves seek performance. The following figure shows how Indexable Delta Encoding works.

Indexable Delta Encoding doubles the random seek performance of HFile. For a 64 KB block, for example, random seek performance is close to that of unencoded data, whereas other encoding algorithms incur a performance loss. In a random Get scenario with a 100% cache hit ratio, response time was 50% lower than with DIFF encoding.
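One common way to make delta-encoded keys searchable is to store a full key at fixed restart points and binary-search those restart keys before decoding anything. The LevelDB block format uses this approach. We do not know the exact layout of Indexable Delta Encoding, so treat this Java sketch (`RestartPointBlock`, with a configurable restart `interval`) only as an illustration of the general idea.

```java
import java.util.List;

/** Delta-encoded (prefix-compressed) keys, with a full key stored at every interval-th entry. */
final class RestartPointBlock {
    private final int interval;
    private final String[] restartKeys;   // full key at each restart point: the in-block index
    private final int[] sharedLens;       // prefix length shared with the previous key (0 at restarts)
    private final String[] suffixes;      // rest of each key after the shared prefix

    RestartPointBlock(List<String> sortedKeys, int interval) {
        if (interval < 1) throw new IllegalArgumentException("interval must be >= 1");
        this.interval = interval;
        int n = sortedKeys.size();
        sharedLens = new int[n];
        suffixes = new String[n];
        restartKeys = new String[(n + interval - 1) / interval];
        String prev = "";
        for (int i = 0; i < n; i++) {
            String key = sortedKeys.get(i);
            boolean restart = i % interval == 0;
            int shared = restart ? 0 : commonPrefixLength(prev, key);
            if (restart) restartKeys[i / interval] = key;
            sharedLens[i] = shared;
            suffixes[i] = key.substring(shared);
            prev = key;
        }
    }

    /** Returns the position of the first key >= target, or -1 if every key is smaller. */
    int seek(String target) {
        // 1. Binary-search the restart keys for the last one <= target.
        int lo = 0, hi = restartKeys.length - 1, segment = 0;
        while (lo <= hi) {
            int mid = (lo + hi) >>> 1;
            if (restartKeys[mid].compareTo(target) <= 0) {
                segment = mid;
                lo = mid + 1;
            } else {
                hi = mid - 1;
            }
        }
        // 2. Decode sequentially, starting only at that restart point.
        String prev = "";
        for (int i = segment * interval; i < suffixes.length; i++) {
            String key = prev.substring(0, sharedLens[i]) + suffixes[i];
            if (key.compareTo(target) >= 0) return i;
            prev = key;
        }
        return -1;
    }

    private static int commonPrefixLength(String a, String b) {
        int n = Math.min(a.length(), b.length());
        int i = 0;
        while (i < n && a.charAt(i) == b.charAt(i)) i++;
        return i;
    }
}
```

A seek decodes at most one segment plus the first key of the next segment. Its cost therefore grows with the restart interval rather than with the block size.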

Building today's Lindorm also involved many other performance optimizations and new technologies. Given the limited space in this post, we list only a few more of the most important ones.

Native HBase supports only key-value (KV) queries. This approach is simple, but it cannot meet the complex needs of many of today's businesses. We therefore built several query models into Lindorm, each tailored to the characteristics of different businesses. Lindorm's scenario-specific APIs and index designs make development easier than before.

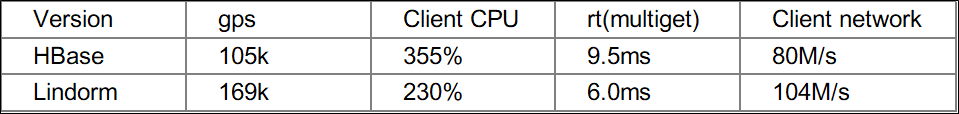

The wide column model uses the same access model and data structure as HBase, so Lindorm is fully compatible with HBase API calls. You can access Lindorm through the wide column API of its high-performance native client. Alternatively, you can use the HBase client and API through the alihbase-connector plug-in, with no code changes required. Lindorm also uses a lightweight client design. Much of the logic, including data routing, batch distribution, timeouts, and retries, runs on the server, and the network transport layer has been heavily optimized. This reduces CPU consumption on the application side. Compared with HBase, Lindorm improves CPU efficiency by 60% and bandwidth efficiency by 25%, as shown in the following table.

Note that "client CPU" in the table refers to the CPU resources consumed by the HBase or Lindorm client. Lower values are better.

Lindorm also offers a unique feature: high-performance secondary indexes accessible through the native HBase API. When you write data through the HBase API, index data is transparently written to the index tables. Queries can then be served from the index tables instead of scanning the full table, which greatly improves query performance.
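Transparent index maintenance of this kind can be sketched with two sorted maps. One stands in for the main table and the other for an index table keyed by `item_id`, mirroring the `shop_item_relation` SQL example in this post. This is a single-process illustration under assumed names (`IndexedTable`, `findByItem`). It ignores the distributed consistency that Lindorm has to guarantee between the two tables.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Main table plus one index table, both modeled as sorted maps (stand-ins for sorted tables). */
final class IndexedTable {
    private static final String SEP = "|";   // assumption: ids never contain this separator
    private final NavigableMap<String, String> main = new TreeMap<>();    // "shopId|itemId" -> status
    private final NavigableMap<String, String> byItem = new TreeMap<>();  // "itemId|shopId" -> main rowkey

    /** A single write updates both the main table and the index table. */
    void upsert(String shopId, String itemId, String status) {
        String rowKey = shopId + SEP + itemId;
        main.put(rowKey, status);
        byItem.put(itemId + SEP + shopId, rowKey);
    }

    /** Query by item_id: a prefix range scan on the index instead of a full main-table scan. */
    List<String> findByItem(String itemId) {
        List<String> rows = new ArrayList<>();
        String prefix = itemId + SEP;
        for (Map.Entry<String, String> e : byItem.tailMap(prefix, true).entrySet()) {
            if (!e.getKey().startsWith(prefix)) break;   // left the key range for this item
            String rowKey = e.getValue();
            rows.add(rowKey + "=" + main.get(rowKey));
        }
        return rows;
    }
}
```

The index key contains only immutable primary-key columns, so an upsert never leaves a stale index entry behind. An index on a mutable column such as `status` would also have to delete the old index entry on every update.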

HBase supports only the rowkey index, which makes multi-field queries inefficient. To meet query requirements in different scenarios, you have to maintain multiple tables. This makes application development harder and complicates data consistency and write efficiency. HBase also provides only a KV API, with simple operations such as PUT, GET, and SCAN and no data types, so you must convert and store all data manually. Developers who are used to SQL cannot easily switch to HBase and are likely to make mistakes.

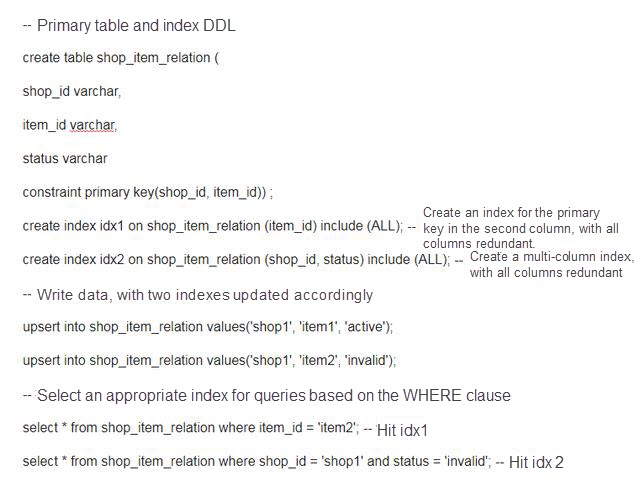

To resolve these difficulties, we added the TableService model to Lindorm. It provides a rich set of data types and a structured query expression API, and it natively supports SQL access and global secondary indexes. This solves many technical challenges and greatly lowers the barrier to entry for ordinary users. With SQL and SQL-like API calls, you can use Lindorm as easily as a relational database. The following is a simple example of Lindorm SQL:

```sql
-- DDL for the main table and its indexes
create table shop_item_relation (
    shop_id varchar,
    item_id varchar,
    status varchar
    constraint primary key(shop_id, item_id)) ;

create index idx1 on shop_item_relation (item_id) include (ALL); -- index on the second primary-key column, covering all columns
create index idx2 on shop_item_relation (shop_id, status) include (ALL); -- multi-column index, covering all columns

-- Write data; both indexes are updated synchronously
upsert into shop_item_relation values('shop1', 'item1', 'active');
upsert into shop_item_relation values('shop1', 'item2', 'invalid');

-- The appropriate index is selected automatically based on the WHERE clause
select * from shop_item_relation where item_id = 'item2'; -- uses idx1
select * from shop_item_relation where shop_id = 'shop1' and status = 'invalid'; -- uses idx2
```

Compared with SQL in relational databases, Lindorm does not support multi-row transactions or complex analysis such as JOIN and GROUP BY. This is the main difference between them.

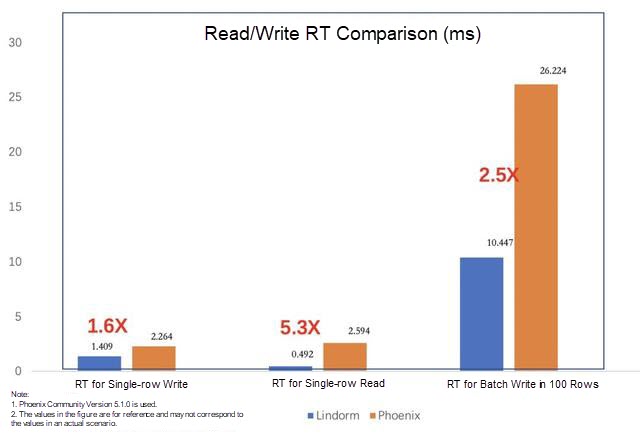

Lindorm's secondary indexes also offer much better functionality, performance, and stability than the secondary indexes that Phoenix provides on HBase. The following figure shows a simple performance comparison.

Note that this model has been tested in Alibaba Cloud HBase Enhanced (Lindorm). You can contact the Alibaba Cloud HBase DingTalk account or submit a ticket for consultation.

In a modern Internet architecture, message queues play an important role in improving the performance and stability of core systems. Typical application scenarios include system decoupling, peak clipping and throttling, log collection, eventual consistency guarantees, and distribution and push.

Common message queue tools include RabbitMQ, Kafka, and RocketMQ. Although they differ somewhat in architecture, usage, and overall performance, their basic application scenarios are similar. However, traditional message queues are not perfect. They have the following problems in scenarios such as message push and feed streaming:

In response to the preceding requirements, Lindorm has launched the FeedStreamService queue model, which can solve problems such as message synchronization, device notification, and auto-increment ID allocation in scenarios with massive numbers of users.

This year, the FeedStream model played an important role in the messaging system of Taobao Mobile, solving problems such as order preservation and idempotence during message push. During last year's Double 11, Lindorm powered the building activity and the big red envelope giveaways on Taobao Mobile. At peak hours, Taobao Mobile pushed more than one million messages per second, and messages reached all users within minutes.
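The order preservation, idempotence, and auto-increment ID allocation that FeedStream provides can be pictured as a per-user inbox. The inbox assigns increasing sequence IDs and ignores repeated message IDs. The `FeedInbox` class below is a hypothetical single-node sketch and says nothing about how FeedStreamService is actually stored or replicated.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Per-user inbox: ordered auto-increment sequence IDs and idempotent pushes. */
final class FeedInbox {
    private long lastSeq = 0;                                    // auto-increment ID allocator
    private final TreeMap<Long, String> messages = new TreeMap<>();
    private final Set<String> seenMessageIds = new HashSet<>();  // dedup keys (never expired in this sketch)

    /** Appends a message and returns its sequence ID, or -1 if messageId was already pushed. */
    synchronized long push(String messageId, String payload) {
        if (!seenMessageIds.add(messageId)) return -1;          // a retried push: ignore it
        long seq = ++lastSeq;
        messages.put(seq, payload);
        return seq;
    }

    /** Returns up to limit messages with sequence IDs greater than afterSeq, in order. */
    synchronized List<String> pullAfter(long afterSeq, int limit) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Long, String> e : messages.tailMap(afterSeq, false).entrySet()) {
            if (out.size() >= limit) break;
            out.add(e.getValue());
        }
        return out;
    }
}
```

Both methods are synchronized so that a sequence ID can never become visible before a smaller one. A device that resumes from its last seen ID therefore cannot skip a message.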

Note that this model has been tested in Alibaba Cloud HBase Enhanced (Lindorm). You can contact the Alibaba Cloud HBase DingTalk account or submit a ticket for consultation.

Although the TableService model in Lindorm provides data types and secondary indexes, it still cannot fully meet the needs of complex-condition queries and full-text search. SOLR and Elasticsearch are excellent full-text search engines. Combining Lindorm with SOLR or Elasticsearch lets each play to its strengths in building complex big data storage and retrieval services. Lindorm has a built-in external index synchronization component that automatically synchronizes data written to Lindorm to an external index component such as SOLR or Elasticsearch. This model suits businesses that store large amounts of data and query it with many different combinations of conditions, while returning only a few fields. Below are three examples.

The full-text search model has been launched on Alibaba Cloud and supports external search engines such as SOLR and Elasticsearch. Currently, you need to query SOLR or Elasticsearch directly first and then query Lindorm. Later, we will wrap the querying of external search engines in the TableService syntax, so you will only need to interact with Lindorm to perform full-text searches.

In addition to the models above, we will develop more easy-to-use models based on business needs and pain points, and we will soon provide time series and graph models.

Thanks to the trust of our customers, Ali-HBase has grown from a humble prototype into a robust product after fighting through numerous difficulties. After nine years of application experience, Alibaba has developed a lot of high-availability technologies, all of which have been applied to Lindorm.

HBase is an open-source implementation of BigTable, based on the well-known paper published by Google. Its core feature is that data is persistently stored in the Hadoop Distributed File System (HDFS), which maintains multiple copies of the data to ensure high reliability. HBase itself therefore does not need to manage replicas or data consistency, which simplifies the overall design. However, this design also introduces a single point of failure (SPOF) into the service. When a node goes down, the service can be restored only after two things happen: the node's in-memory state is rebuilt by replaying logs, and its data is redistributed to new nodes and loaded.

Recovering from an HBase SPOF can take 10 to 20 minutes, and a large-scale cluster may need several hours. In the Lindorm kernel, we made a series of optimizations to reduce the mean time to repair (MTTR). For example, we bring regions online before log replay finishes, replay logs in parallel, and reduce the number of small files generated. These optimizations improve recovery speed by more than 1000%, close to the theoretical limit of HBase's design.

In the original HBase architecture, each region can be online on only one RegionServer. If that RegionServer goes down, the region must go through reassignment, splitting of the WAL by region, WAL replay, and other steps before it can serve reads and writes again. Recovery can therefore take several minutes, which is a problem for businesses with strict availability requirements. In addition, although HBase synchronizes data between primary and secondary clusters, clusters can be switched only manually upon failure, and the two clusters achieve only eventual consistency (EC). Some businesses accept only strong consistency (SC), which HBase could not provide.
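The recovery steps above (splitting the WAL by region and replaying it) parallelize naturally. Entries for different regions are independent, while entries for the same region must be applied in sequence order. The following is a minimal sketch of such a parallel replay. The `WalEntry` and `replay` names are hypothetical, and an in-memory map stands in for each region's MemStore.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;

final class ParallelWalReplay {
    /** One logged write: which region it belongs to, its sequence ID, and the key/value written. */
    static final class WalEntry {
        final String region;
        final long seq;
        final String key;
        final String value;

        WalEntry(String region, long seq, String key, String value) {
            this.region = region;
            this.seq = seq;
            this.key = key;
            this.value = value;
        }
    }

    /** Splits the log by region, then replays each region on its own task; returns region -> state. */
    static Map<String, Map<String, String>> replay(List<WalEntry> wal) {
        Map<String, List<WalEntry>> byRegion = new LinkedHashMap<>();
        for (WalEntry e : wal) {
            byRegion.computeIfAbsent(e.region, r -> new ArrayList<>()).add(e);
        }
        Map<String, Map<String, String>> memstores = new ConcurrentHashMap<>();
        byRegion.entrySet().parallelStream().forEach(group -> {
            List<WalEntry> entries = group.getValue();               // owned by this task only
            entries.sort(Comparator.comparingLong((WalEntry e) -> e.seq));
            Map<String, String> memstore = new TreeMap<>();
            for (WalEntry e : entries) memstore.put(e.key, e.value); // later writes win
            memstores.put(group.getKey(), memstore);
        });
        return memstores;
    }
}
```

Ordering is needed only within a region. The overall replay time is therefore bounded by the largest region's log rather than by the size of the whole WAL.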

Lindorm implements a consistency protocol based on a shared log. Through a multi-replica mechanism for partitions, it recovers service automatically and quickly upon failure, and it fits naturally into an architecture that separates storage from computing. The same system can either provide strong consistency semantics or trade consistency for better performance and availability. This enables capabilities such as multi-active deployment and high availability.

The Lindorm architecture provides the following consistency levels, and you can choose among them based on your business needs. Note, however, that this function is currently unavailable on Lindorm.

Although HBase supports a primary-secondary architecture, no efficient client-side switching solution is currently available on the market. The HBase client can access HBase clusters only at fixed addresses. If the primary cluster fails, you must stop the client, modify the HBase configuration, and restart the client to connect to a secondary cluster. The alternative is to build complex access logic on the business side to access both clusters. Ali-HBase modified the HBase client so that traffic switching happens inside the client. The switching command is sent to the client through a high-availability channel. The client then closes the old connection, opens a connection to the secondary cluster, and resends the request.
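This client-side switch can be sketched as a wrapper that holds the active cluster connection. The wrapper flips the connection when a switch command arrives and resends any request that failed because of the switch. `ClusterConnection`, `SwitchableClient`, and `onSwitchCommand` are hypothetical names, since this post does not describe the Ali-HBase client's actual interfaces.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical cluster connection: sends one request and returns the response. */
interface ClusterConnection {
    String send(String request);
}

/** Client that moves from primary to standby internally, without an application restart. */
final class SwitchableClient {
    private final ClusterConnection standby;
    private final AtomicReference<ClusterConnection> active;

    SwitchableClient(ClusterConnection primary, ClusterConnection standby) {
        this.standby = standby;
        this.active = new AtomicReference<>(primary);
    }

    /** Called when a switch command arrives over the high-availability channel. */
    void onSwitchCommand() {
        active.set(standby);   // a real client would also close the old connection here
    }

    String execute(String request) {
        ClusterConnection target = active.get();
        try {
            return target.send(request);
        } catch (RuntimeException e) {
            ClusterConnection current = active.get();
            if (current != target) {
                return current.send(request);   // switched mid-request: resend to the new cluster
            }
            throw e;                            // no switch happened: surface the failure
        }
    }
}
```

The application always calls `execute` in the same way, and the cluster choice is hidden behind a single atomic reference. This is what removes the stop, reconfigure, and restart cycle.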

Lindorm was designed with migration to the cloud in mind from the start, and various optimizations were made to reuse cloud infrastructure as much as possible. For example, in addition to disks, Lindorm can store data in low-cost services such as Alibaba Cloud Object Storage Service (OSS) to reduce costs. We also optimized deployment on Elastic Compute Service (ECS) to support instance types with small memory and to increase deployment flexibility. Together, these changes make Lindorm cloud native and reduce overall costs.

Currently, Lindorm is deployed on ECS instances with cloud disks, although some customers choose local disks instead. This deployment gives Lindorm extreme elasticity.

At the beginning, the Alibaba Group deployed HBase on physical machines. Before launching each business, we had to plan the number of machines and the disk size. When using physical machines to deploy HBase, you may encounter the following problems:

Migrating to the cloud with Alibaba Cloud ECS resolves many of these issues, because ECS provides an almost infinite resource pool. To quickly scale out a business, you only need to request a new ECS instance from the pool, start it, and add it to the cluster. The whole process takes only a few minutes, which helps businesses handle traffic spikes during peak hours. Because storage and computing are decoupled, we can also flexibly allocate different amounts of disk space to different businesses and scale up online directly when space runs low. O&M personnel no longer need to worry about hardware faults either. When an ECS instance fails, it can be restarted on another host, and cloud disks completely shield the upper layers from damaged physical disks. Extreme elasticity also improves cost efficiency. We do not need to reserve large amounts of extra resources for a business, and we can quickly scale down to reduce costs after a promotion ends.

In massive big data scenarios, some data in a table serves only as an archive or is rarely accessed, and historical data such as orders or monitoring records can be very large. Reducing the storage cost of such data can greatly reduce an enterprise's overall expenses. Lindorm's cold and hot data separation was developed for exactly this purpose. Lindorm provides a new storage medium for cold data that costs only one third as much as ultra disk storage.

Once cold and hot data separation is enabled for a table, the system automatically archives the table's cold data to cold storage, based on a user-defined boundary between cold and hot data. Users access the table almost exactly as they would a normal table. In a query, you only need to specify a Hint or TimeRange, and the system uses these conditions to determine whether to read the hot or the cold data zone. This process is almost completely transparent to users.
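The routing decision can be illustrated with a hypothetical rule: a row becomes cold once its timestamp is older than a configured age. Under that rule, a query's TimeRange determines which zone or zones must be read. `ColdHotRouter` and the age-based boundary are assumptions of this sketch, not Lindorm's configuration format.

```java
import java.util.EnumSet;

/** Decides which storage zones a time-range query must touch, given an age-based cold boundary. */
final class ColdHotRouter {
    enum Zone { HOT, COLD }

    private final long coldAfterMillis;   // hypothetical rule: rows older than this are cold

    ColdHotRouter(long coldAfterMillis) {
        this.coldAfterMillis = coldAfterMillis;
    }

    /** Zones a query over the time range [minTs, maxTs) must read at time nowMillis. */
    EnumSet<Zone> zonesFor(long minTs, long maxTs, long nowMillis) {
        long boundary = nowMillis - coldAfterMillis;   // timestamps < boundary are cold
        EnumSet<Zone> zones = EnumSet.noneOf(Zone.class);
        if (maxTs > boundary) zones.add(Zone.HOT);     // range reaches into recent data
        if (minTs < boundary) zones.add(Zone.COLD);    // range reaches into archived data
        return zones;
    }
}
```

For example, with a 7-day boundary, a query over the last day touches only the hot zone, and a query over data older than 30 days touches only the cold zone. A query spanning both periods has to read both.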

Two years ago, we switched the storage compression algorithm used at Alibaba Group to ZSTD. Compared with the original SNAPPY algorithm, ZSTD improves the compression ratio by an additional 25%. In 2019, we developed a new algorithm, ZSTD-v2. It trains a dictionary on pre-sampled data and then uses that dictionary to accelerate the compression of small pieces of data. Lindorm uses this feature when building LDFiles: it samples the data, builds a dictionary, and then compresses the data. In tests with data from different businesses, the compression ratio was 100% higher than with native ZSTD. This means customer storage costs can be cut by another 50%.
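ZSTD-v2 is not available to try here. However, the principle of compressing small records with a dictionary built from sample data can be demonstrated with the preset-dictionary support in the JDK's `java.util.zip.Deflater` and `Inflater`. This sketch swaps in DEFLATE for ZSTD. Its "training" step is a naive concatenation of samples rather than ZSTD's dictionary trainer, and the sample records are made up.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

/** Small-record compression with a preset dictionary (DEFLATE as a stand-in for ZSTD). */
final class DictionaryCompression {
    /** Naive "training": concatenate sample records so their common substrings are in the dictionary. */
    static byte[] buildDictionary(String... samples) {
        return String.join("", samples).getBytes(StandardCharsets.UTF_8);
    }

    /** Compresses input; pass null as dictionary to compress without one. */
    static byte[] compress(byte[] input, byte[] dictionary) {
        Deflater deflater = new Deflater(Deflater.BEST_COMPRESSION);
        try {
            if (dictionary != null) deflater.setDictionary(dictionary);
            deflater.setInput(input);
            deflater.finish();
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[256];
            while (!deflater.finished()) {
                int n = deflater.deflate(buf);
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } finally {
            deflater.end();
        }
    }

    /** Decompresses; supplies the dictionary when the zlib stream asks for it. */
    static byte[] decompress(byte[] compressed, byte[] dictionary) {
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(compressed);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[256];
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                out.write(buf, 0, n);
                if (n == 0) {
                    if (inflater.needsDictionary()) {
                        inflater.setDictionary(dictionary);   // same dictionary used for compression
                    } else if (!inflater.finished()) {
                        throw new IllegalStateException("truncated or corrupt stream");
                    }
                }
            }
            return out.toByteArray();
        } catch (DataFormatException e) {
            throw new IllegalStateException(e);
        } finally {
            inflater.end();
        }
    }
}
```

Without a dictionary, a record of a few dozen bytes gives the compressor almost nothing to reference. With a dictionary, most of the record can be encoded as back-references into it.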

Alibaba Cloud HBase Serverless is a new HBase service built on the Lindorm kernel with a serverless architecture. It turns HBase into a service: you do not need to plan resources, choose CPU and memory quantities, or purchase clusters in advance. No complex O&M operations such as resizing are needed during peak hours or periods of business growth, and no idle resources are wasted during off-peak hours.

You can purchase storage resources based on your business volume. Because HBase Serverless is highly elastic and scalable, it can feel like using an HBase cluster with unlimited resources, ready for any sudden change in your business traffic. In addition, with Alibaba Cloud's flexible billing, you pay only for the resources you actually use.

The Lindorm engine has a built-in username and password system, which provides permission control at multiple levels and authenticates each request to prevent unauthorized data access and ensure data access security. In addition, Lindorm provides multi-tenant isolation functions, such as group and quota control, to meet the demands of major enterprise customers. This function ensures that businesses in the same HBase cluster share the big data platform securely and efficiently without affecting each other.

The Lindorm kernel provides a simple user authentication and Access Control List (ACL) system. You only need to enter the username and password in the configuration for user authentication. User passwords are stored in ciphertext on the server and are transmitted in ciphertext during authentication. Even if the ciphertext is intercepted during authentication, the communication content used for authentication cannot be reused or forged.

Lindorm has three permission levels: Global, Namespace, and Table. The levels are hierarchical, so a permission granted at a higher level covers everything below it. For example, if user1 is granted global read and write permission, user1 can read and write all tables in all namespaces. If user2 is granted read and write permission on Namespace1, user2 can read and write all tables in Namespace1.
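The hierarchical check can be sketched as follows. A request on a table is allowed if the user holds the permission at the Global level, on the table's namespace, or on the table itself. `AclChecker` and its string-key encoding are hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

/** Three-level permission check: Global covers all namespaces, Namespace covers its tables. */
final class AclChecker {
    enum Action { READ, WRITE }

    private static final String GLOBAL = "*";
    // Entries of the form user|action|scope; assumes names never contain '*', '|' or ':'.
    private final Set<String> grants = new HashSet<>();

    void grantGlobal(String user, Action action) {
        grants.add(key(user, action, GLOBAL));
    }

    void grantNamespace(String user, Action action, String namespace) {
        grants.add(key(user, action, namespace));
    }

    void grantTable(String user, Action action, String namespace, String table) {
        grants.add(key(user, action, namespace + ":" + table));
    }

    /** Allowed if granted at any level that contains the table. */
    boolean isAllowed(String user, Action action, String namespace, String table) {
        return grants.contains(key(user, action, GLOBAL))
                || grants.contains(key(user, action, namespace))
                || grants.contains(key(user, action, namespace + ":" + table));
    }

    private static String key(String user, Action action, String scope) {
        return user + "|" + action + "|" + scope;
    }
}
```

A check needs at most three set lookups, one per level. Adding a table to a namespace therefore requires no new grants for users who already hold namespace-level or global permissions.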

When multiple users or businesses use the same HBase cluster, they may compete for resources. In this case, the batch read and write operations of offline businesses may affect the read and write operations of some important online businesses. The Group function is provided by Lindorm to solve the multi-tenant isolation problem.

RegionServers are divided into different groups and each group hosts different tables. This allows them to isolate resources.

For example, assume we have created Group1, moved RegionServer1 and RegionServer2 to Group1, created Group2, and moved RegionServer3 and RegionServer4 to Group2. In addition, we have moved Table1 and Table2 to Group1. In this way, all regions of Table1 and Table2 are allocated only to RegionServer1 and RegionServer2 in Group1.

Similarly, during balancing, the regions of Table3 and Table4 in Group2 are allocated only to RegionServer3 and RegionServer4. As a result, requests for Table1 and Table2 are served only by RegionServer1 and RegionServer2, while requests for Table3 and Table4 are served only by RegionServer3 and RegionServer4. This is how resource isolation is achieved.
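The following minimal sketch of group-aware assignment reproduces the Group1/Group2 example above. The regions of a table are distributed round-robin, but only across the RegionServers in that table's group. `GroupAssigner` and the round-robin policy are assumptions of this sketch.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Assigns a table's regions only to RegionServers in the group the table was moved to. */
final class GroupAssigner {
    private final Map<String, List<String>> serversByGroup = new HashMap<>();
    private final Map<String, String> groupByTable = new HashMap<>();

    /** Moves a RegionServer into a group; a server belongs to exactly one group. */
    void moveServer(String server, String group) {
        serversByGroup.values().forEach(list -> list.remove(server));
        serversByGroup.computeIfAbsent(group, g -> new ArrayList<>()).add(server);
    }

    void moveTable(String table, String group) {
        groupByTable.put(table, group);
    }

    /** Round-robin over the servers of the table's group only; returns region index -> server. */
    Map<Integer, String> assignRegions(String table, int regionCount) {
        String group = groupByTable.get(table);
        List<String> servers = group == null ? null : serversByGroup.get(group);
        if (servers == null || servers.isEmpty()) {
            throw new IllegalStateException("no servers available for table " + table);
        }
        Map<Integer, String> assignment = new LinkedHashMap<>();
        for (int region = 0; region < regionCount; region++) {
            assignment.put(region, servers.get(region % servers.size()));
        }
        return assignment;
    }
}
```

Isolation comes from restricting the candidate servers, not from the distribution policy itself. Any balancing strategy applied within a group keeps the same guarantee.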

The Lindorm kernel has a complete Quota system to limit the resources used by each user. The Lindorm kernel accurately calculates the number of consumed Capacity Units (CUs) for each request based on consumed resources. For example, due to the filter feature, only a small amount of data is returned for your scan request, but the RegionServer may have already consumed a large amount of CPU and I/O resources to filter the data. All the consumed resources are calculated as consumed CUs. When using Lindorm as a big data platform, enterprise administrators can allocate different users to different businesses and limit the maximum number of CUs read by a user per second or the total number of CUs in the Quota system. This prevents users from occupying too many resources and affecting other users. Quota throttling also supports restrictions at the Namespace and Table levels.
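CU-based throttling fits a token bucket whose cost is settled after the request runs. The true cost of a request, such as a filtered scan, is known only once it finishes. This `CuQuota` class is a hypothetical sketch. The refill rate and burst size stand in for the per-user limits an administrator would configure, and the clock is passed in explicitly so the behavior is deterministic.

```java
/** Token bucket measured in Capacity Units (CUs); the actual cost is charged after the request runs. */
final class CuQuota {
    private final double cusPerSecond;
    private final double burstCus;
    private double available;
    private long lastNanos;

    CuQuota(double cusPerSecond, double burstCus, long nowNanos) {
        this.cusPerSecond = cusPerSecond;
        this.burstCus = burstCus;
        this.available = burstCus;
        this.lastNanos = nowNanos;
    }

    /** Admits a request only while the user is not in debt. */
    synchronized boolean tryAdmit(long nowNanos) {
        refill(nowNanos);
        return available > 0;
    }

    /** Charges the CUs a finished request consumed, including rows a filtered scan read but did not return. */
    synchronized void charge(double consumedCus, long nowNanos) {
        refill(nowNanos);
        available -= consumedCus;   // may go negative: the debt delays the user's later requests
    }

    synchronized double available(long nowNanos) {
        refill(nowNanos);
        return available;
    }

    private void refill(long nowNanos) {
        long elapsed = Math.max(0, nowNanos - lastNanos);
        available = Math.min(burstCus, available + elapsed * cusPerSecond / 1_000_000_000.0);
        lastNanos = Math.max(lastNanos, nowNanos);
    }
}
```

Charging after the fact means an expensive scan can push a user into debt. The user's following requests are then rejected until the debt is repaid at the configured rate, which keeps one user from starving others.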

Lindorm, a next-generation NoSQL database, is the product of technologies developed by Alibaba's HBase and Lindorm team over the past nine years. It provides world-leading high-performance, cross-domain, multi-consistency, and multi-model hybrid storage and processing for massive data scenarios. It is designed to meet three sets of requirements: big data (unlimited scaling and high throughput), online services (low latency and high availability), and versatile queries. On top of these, it provides real-time hybrid data access. Its capabilities include seamless scaling, high throughput, continuous availability, stable millisecond-level response, strong and weak consistency, low storage costs, and rich indexes.

Lindorm is one of the core products in Alibaba's big data system, supporting thousands of businesses across every business unit of Alibaba Group, and it has stood the test of the Double 11 Shopping Festival for years. Former Alibaba CTO Xingdian said that Alibaba's technologies should be delivered through Alibaba Cloud to benefit millions of customers in all industries. In line with this vision, Lindorm is available to customers in Apsara Stack and, on Alibaba Cloud, as "HBase Enhanced", so that cloud customers can enjoy the benefits of Alibaba's technologies.
