This article was written by Peng Jiahao, nicknamed Yixin at Alibaba. Peng is a development engineer in the Alibaba Taobao Technology Department and an avid devotee of cloud computing.

With the rapid development of software technologies, software architecture keeps evolving in ways that let developers build large-scale, complex applications quickly and easily. Container technology was first developed to solve the problem of inconsistent runtime environments. As it has matured, however, it has opened up more and more new possibilities.

In recent years, this development has led to many new software architecture models in the cloud computing field, including cloud native, function compute, serverless, and Service Mesh. This article serves as a brief and easy-to-read guide to Service Mesh.

Before microservices existed, all modules were often included in one application and were compiled, packaged, deployed, and maintained together. As a result, a single application often contained too many modules, and if one module failed or needed to be updated, the entire application had to be redeployed. This was a major headache for developers, and especially for the DevOps personnel in charge. As applications became more complex, they also involved more and more objects.

Developers discovered many shortcomings in the process. To combat these problems, developers started to group services and divide large applications into many small applications with inter-application call relationships. Different small applications were managed, deployed, and maintained by different developers. This is essentially how the concept of microservices emerged.

Applications in a microservice architecture are deployed on different hosts, which means that you need a way for services to communicate and coordinate with each other. This situation is much more complex than with a single application. Calls between different methods in the same application are directly addressable and fast because the methods are linked into the same memory space when the code is compiled and packaged. Calls between different services, however, involve communication between different processes or hosts, which generally needs to be mediated and coordinated through third-party middleware.

For these reasons, a great deal of microservice-oriented middleware was developed, including service governance frameworks. These service governance tools can manage all the applications they are integrated with, simplifying and accelerating communication and coordination between services.
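To make the role of such a governance tool more concrete, here is a minimal in-memory sketch of a service registry with round-robin lookup. The `ServiceRegistry` class, its method names, and the addresses are hypothetical and do not correspond to any particular middleware; a real framework would add networking, health checks, and richer load-balancing policies.

```python
class ServiceRegistry:
    """Hypothetical in-memory registry: maps a service name to its instances."""

    def __init__(self):
        self._instances = {}  # service name -> list of addresses
        self._next = {}       # service name -> round-robin position

    def register(self, service, address):
        """Record that `service` is reachable at `address`."""
        addresses = self._instances.setdefault(service, [])
        if address not in addresses:
            addresses.append(address)

    def deregister(self, service, address):
        """Remove an instance, for example after it shuts down."""
        addresses = self._instances.get(service, [])
        if address in addresses:
            addresses.remove(address)

    def lookup(self, service):
        """Return the next address for `service` in round-robin order."""
        addresses = self._instances.get(service)
        if not addresses:
            raise LookupError(f"no instances registered for {service!r}")
        position = self._next.get(service, 0) % len(addresses)
        self._next[service] = position + 1
        return addresses[position]


registry = ServiceRegistry()
registry.register("orders", "10.0.0.1:8080")
registry.register("orders", "10.0.0.2:8080")
print(registry.lookup("orders"))  # 10.0.0.1:8080
print(registry.lookup("orders"))  # 10.0.0.2:8080
```

In this sketch every application has to call `register` and `lookup` itself, which is the kind of coupling to a governance library that the rest of this article contrasts with Service Mesh.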

Container technology was developed to solve inconsistency problems in application runtime environments, avoiding situations where applications run normally in the local or test environment but crash in the production environment. Programs and their dependencies are packaged into images, and containers can then be started from these images on any host where a container service is installed and running.

Each container is an application runtime instance. These instances generally have the same runtime environment and parameters. Therefore, applications can attain consistent performance on different hosts. This fact facilitates development, testing, and O&M, with no need to build the same runtime environment for different hosts.

Images can be pushed to image repositories, facilitating application migration and deployment. Docker is one of the most widely used container technologies. Currently, many applications are deployed as microservices by using containers, and their number keeps increasing. All of this has greatly energized software development.

As more and more applications are deployed by using containers, the number of containers in a single cluster has also increased significantly. This has made it difficult to manage and maintain containers manually, so many orchestration tools have been developed to manage the relationships between containers and their entire lifecycle. For example, Docker Compose and Docker Swarm, both released by Docker, can start and orchestrate containers in batches. However, they only provide simple functions and cannot support large container clusters.

To remedy this problem, Google developed the Kubernetes project based on its extensive experience in container management. Designed for Google clusters with hundreds of millions of containers per week, Kubernetes has powerful container orchestration capabilities and a wide range of functions. Kubernetes defines many resource types. Resources are created in a declarative manner, and each resource can be represented by a JSON or YAML file. Kubernetes supports multiple kinds of containers, among which Docker containers are the most common. It also provides standards for container access and can orchestrate any container that complies with these standards.
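As a small illustration of what "declarative" means here, the sketch below builds a Deployment resource as a plain Python dictionary and prints it as JSON. The field layout follows the Kubernetes `apps/v1` Deployment schema, while the helper name `deployment_manifest`, the application name, and the image URL are placeholders invented for this example.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a Kubernetes Deployment manifest as a plain dictionary."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: how many pod replicas should exist.
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image, used only for illustration.
manifest = deployment_manifest("demo-app", "example.com/demo-app:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

The manifest only states the desired end state (three replicas of one image). It contains no instructions for how to reach that state, which is left to Kubernetes.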

After all applications of a company have been converted to microservices and deployed by using containers, you can deploy Kubernetes in a cluster, manage the application containers by using the capabilities provided by Kubernetes, and perform O&M operations on Kubernetes. As the most widely used container orchestration tool, Kubernetes has become a standard for container orchestration. However, Alibaba Group also has developed its own containers and container orchestration tools. Moreover, in the industry at large, some new technologies have been derived from the container management methods represented by Kubernetes.

In the past two years, cloud native has been a very hot topic in cloud computing and in IT more generally. The Cloud Native Computing Foundation (CNCF) gives the following definition of cloud native:

Cloud-native technologies empower organizations to build and run scalable applications in modern and dynamic environments, such as public cloud, private cloud, and hybrid cloud. Containers, service meshes, microservices, immutable infrastructure, and declarative APIs exemplify this approach.

These technologies can build loosely coupled systems with good fault tolerance, ease of management, and ease of observation. Combined with robust automation, they allow engineers to make high-impact changes frequently and predictably with minimal effort.

To put things more simply, cloud native essentially refers to the following actions: developing applications as microservices, deploying them by using containers, and managing container clusters by using container orchestration tools like Kubernetes to orient development and O&M toward Kubernetes. Cloud native is useful because it allows you to easily build applications, comprehensively monitor them, and quickly scale them up and down based on traffic.

This is shown in the image below.

Cloud native more or less consists of four parts: microservices, containers, continuous integration and delivery, and DevOps.

So far in this article, we have discussed microservices, containers, container orchestration, and cloud native. All of these provide the backdrop to the main topic of this article, which is Service Mesh. In short, you can broadly define Service Mesh as a cloud-native microservice governance solution.

Once we have deployed applications on Kubernetes as microservices by using containers, Service Mesh can offer us and our applications a new and transparent solution for inter-service calls and governance. This solution can free us from being reliant on the traditional microservice governance framework.

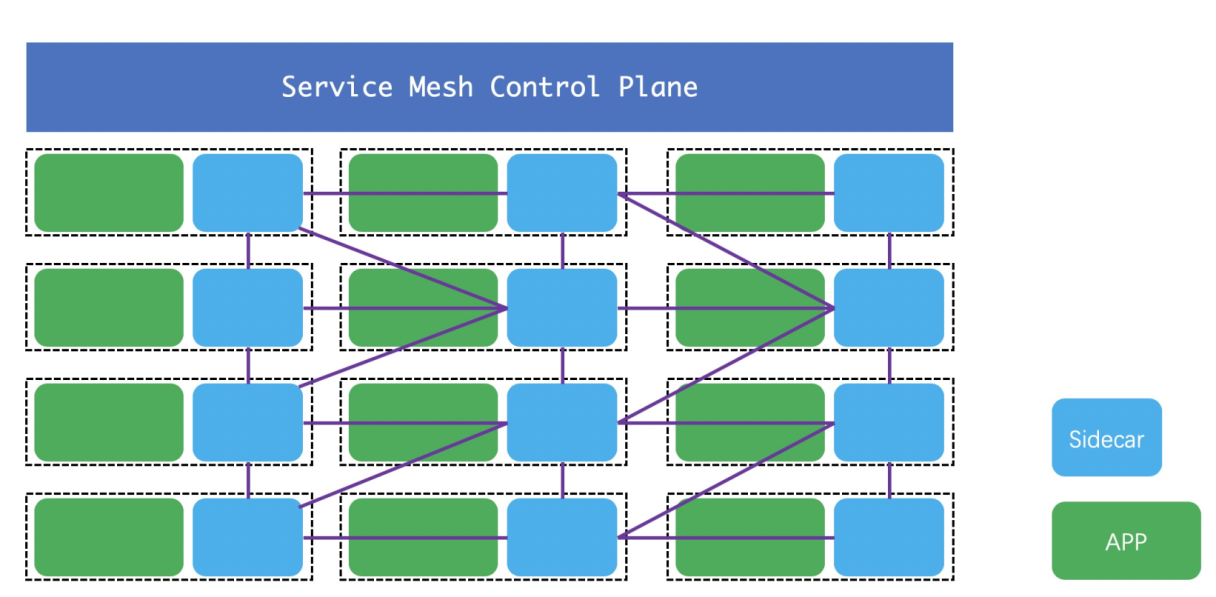

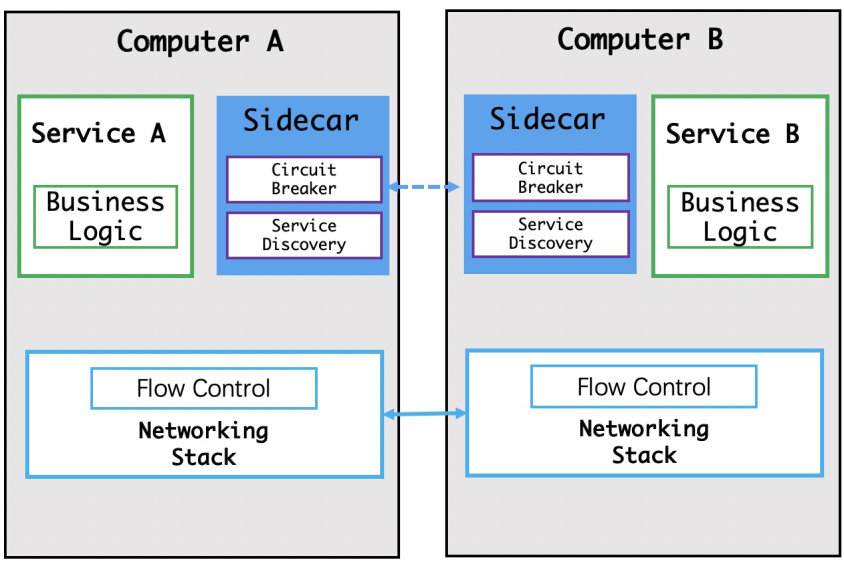

Service Mesh works by starting a Sidecar for each application in its pod to act as a transparent proxy for the application. All of the application's inbound and outbound traffic then goes through its Sidecar. Calls between services become calls between Sidecars, and service governance becomes Sidecar governance.
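The traffic path described above can be simulated in a few lines. The following is a toy, in-memory sketch rather than how any real mesh is implemented: the `Sidecar` class, the `mesh` dictionary, and the two example applications are all hypothetical. In a real mesh the interception happens at the network level, so the application would not receive an explicit `call` parameter as it does here.

```python
class Sidecar:
    """Hypothetical stand-in for a sidecar proxy that fronts one application."""

    def __init__(self, service, app, mesh):
        self.service = service
        self.app = app    # callable(request, call) -> response
        self.mesh = mesh  # shared dict: service name -> Sidecar
        self.log = []     # traffic observed by this sidecar
        mesh[service] = self

    def inbound(self, request):
        """Accept a request on behalf of the application."""
        self.log.append(("in", request))
        return self.app(request, self.outbound)

    def outbound(self, target, request):
        """Forward the application's outgoing call to the target's sidecar."""
        self.log.append(("out", target, request))
        return self.mesh[target].inbound(request)


def inventory_app(request, call):
    # Leaf service: answers without calling anything else.
    return {"sku": request["sku"], "in_stock": True}


def order_app(request, call):
    # Calls the inventory service; the call leaves through the sidecar.
    stock = call("inventory", {"sku": request["sku"]})
    return {"accepted": stock["in_stock"]}


mesh = {}
inventory = Sidecar("inventory", inventory_app, mesh)
orders = Sidecar("orders", order_app, mesh)
print(orders.inbound({"sku": "A-1"}))  # {'accepted': True}
```

Because every request passes through `inbound` and `outbound`, each sidecar's `log` ends up with a complete record of its application's traffic. That single interception point is where a mesh can attach routing, throttling, and monitoring without touching application code.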

In a Service Mesh, the Sidecar is transparent and imperceptible to developers. In the past, we always had to introduce libraries and register services so that an application could be discovered and called. In a Service Mesh, however, none of this is visible to application developers.

This implementation relies on container orchestration tools. During continuous application integration and delivery on Kubernetes, after the pod of an application is started, its services have already been registered with Kubernetes in a YAML file and have declared the relationships between services. Service Mesh obtains all service information in the cluster by communicating with Kubernetes and achieves transparency to developers through Kubernetes.

The image below shows the basic structure of a Service Mesh, including the data plane and control plane.

This mode has many advantages. The language used in this mode does not affect the service governance process. A Service Mesh is only concerned with pods or container instances in pods, not the implementation language of the application in the container. A Sidecar is located in the same pod as the containers it manages.

Service Mesh can therefore support applications written in any language, whereas the lack of cross-language support is a major shortcoming of many traditional service governance frameworks. In addition, traditional service governance introduces many dependencies into applications, which can result in dependency conflicts. Alibaba Group, for example, uses Pandora to isolate the dependencies of applications. Furthermore, traditional service governance is difficult to work with and requires developers to have some knowledge of the entire architecture.

In traditional service governance frameworks, it is hard to troubleshoot problems. These frameworks also result in an unclear boundary between development and O&M. With Service Mesh, however, developers only need to deliver code, and the O&M personnel can maintain the entire container cluster based on Kubernetes.

The term Service Mesh first appeared in 2016 and has become very popular over the past two years. Ant Financial has a complete Service Mesh service framework named SOFAMesh, and many other teams in Alibaba have followed suit.

Despite a lot of changes over the past few years, program development is becoming simpler. For example, here at Alibaba, thanks to our complete technical systems and powerful technical capabilities, we can now easily build a service that supports a high number of queries per second.

So, let's take a look at the application development process.

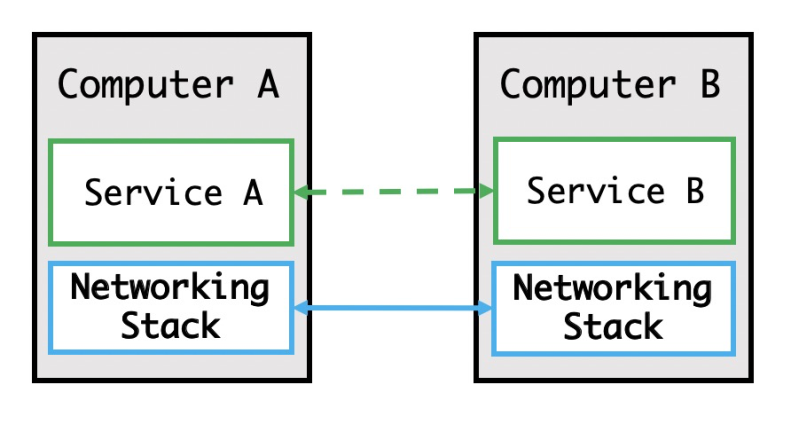

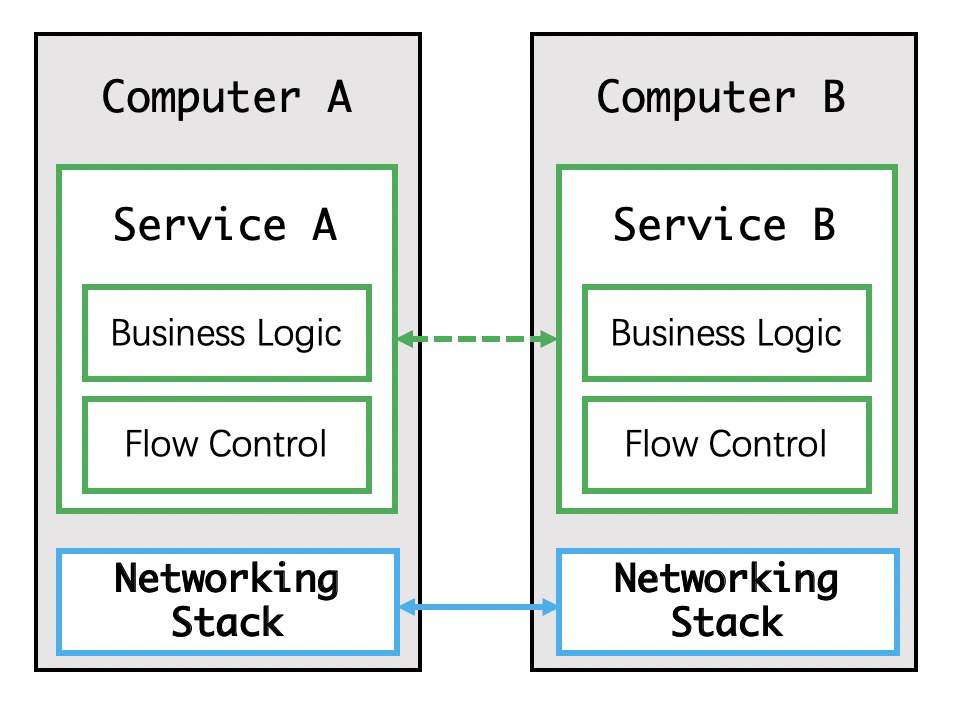

The above figure shows the oldest stage. At this stage, two hosts were directly connected by a network cable, and one application contained all possible functions, including the connection management for the two hosts. At that time, the concept of a network had not yet been formed. After all, only hosts directly connected by network cables could communicate with each other.

At this stage, the network layer emerged with the development of some new technologies. A host could communicate with all other hosts connected to it through the network.

At this stage, the capability to receive traffic varied with the environment and host configuration of each application. When application A sends more traffic than application B can accept, for example, the data packets that cannot be received are discarded. Because of this issue, traffic needed to be controlled. At this stage, throttling was implemented by the applications themselves, and the network layer only received and transmitted data packets from the applications.
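To give a feel for what application-level throttling involves, here is a minimal sketch of a token bucket, one common rate-limiting technique. It is offered as an illustration only, not as a description of what applications of that era actually used. The `TokenBucket` class and its parameters are assumptions made for this example, and the clock is injectable so that the behavior can be checked deterministically.

```python
import time


class TokenBucket:
    """Sender-side throttle: each send consumes one token."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self):
        """Return True if a packet may be sent now, False if it must wait."""
        now = self.clock()
        elapsed = now - self.updated
        # Refill according to elapsed time, never beyond the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Fake clock so the example does not depend on real time.
now = [0.0]
bucket = TokenBucket(rate=1, capacity=2, clock=lambda: now[0])
print(bucket.allow(), bucket.allow(), bucket.allow())  # True True False
now[0] = 1.0  # one second later, one token has been refilled
print(bucket.allow())  # True
```

Every application that sends traffic would need to carry and tune logic like this, which helps explain why the responsibility later moved down into the network layer.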

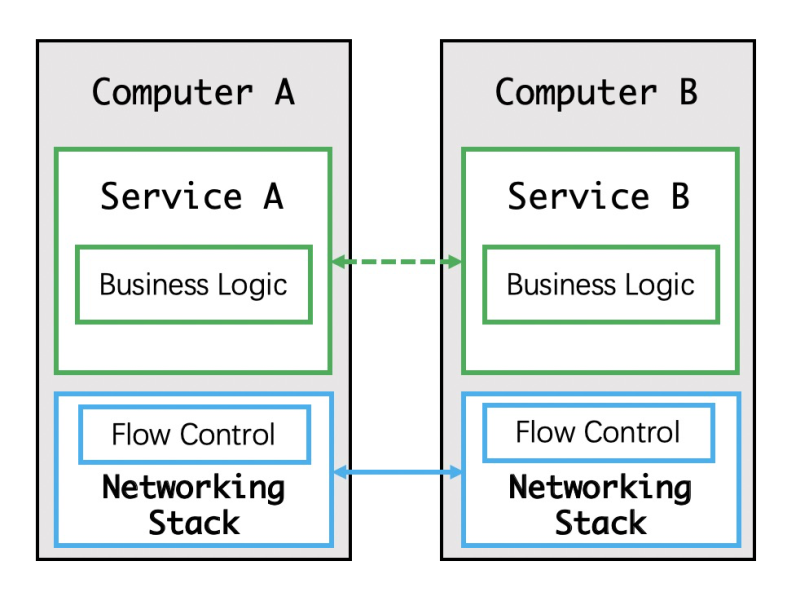

Gradually, developers found that the throttling implemented in applications could instead be handled by the network layer, as shown in the figure above. Throttling at the network layer here refers to TCP throttling, which was introduced to ensure reliable network communication.

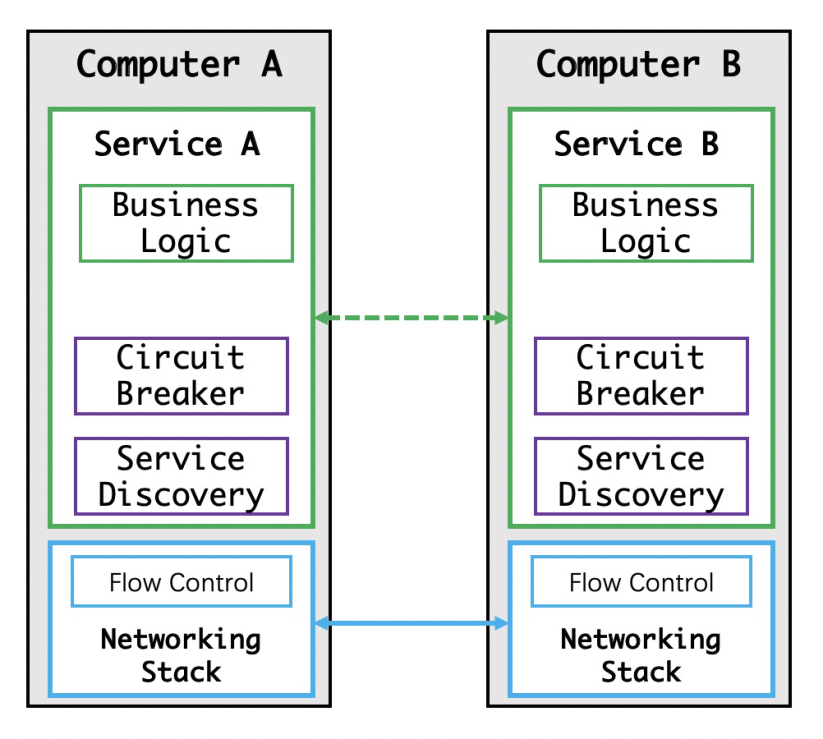

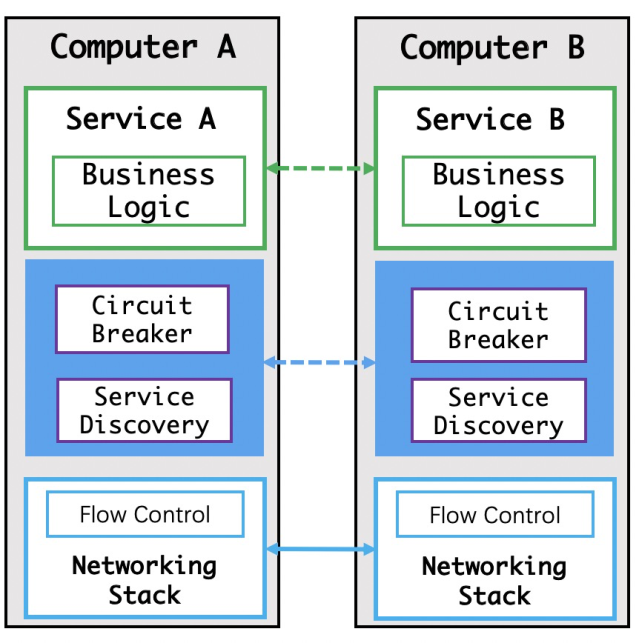

Developers started to implement service discovery and circuit breakers in their own code modules at this stage.
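As a rough idea of what a hand-written circuit breaker in an application's own code might look like, consider the sketch below. The `CircuitBreaker` class, its thresholds, and the `CircuitOpenError` exception are hypothetical names chosen for this example, and the logic is deliberately simplified compared with a production-grade breaker.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    def __init__(self, max_failures, reset_after, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each remote call, for example `breaker.call(fetch_stock, sku)`, where `fetch_stock` stands for any function that talks to another service. After `max_failures` consecutive errors, further calls fail fast with `CircuitOpenError` until the cool-down has elapsed.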

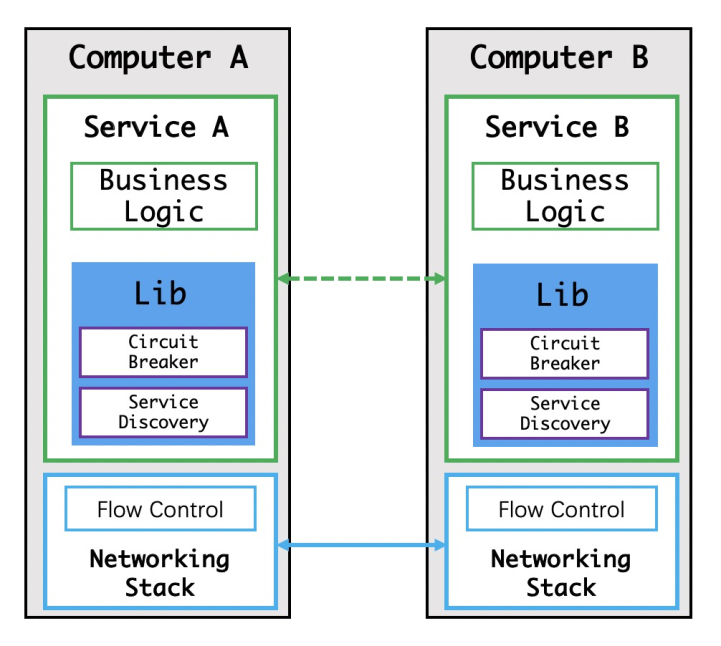

Developers began to be able to implement service discovery and circuit breakers by referencing third-party dependencies at this stage.

At this stage, service discovery and circuit breakers are implemented based on various middleware.

The emergence of Service Mesh has further increased productivity and improved efficiency throughout the software lifecycle.

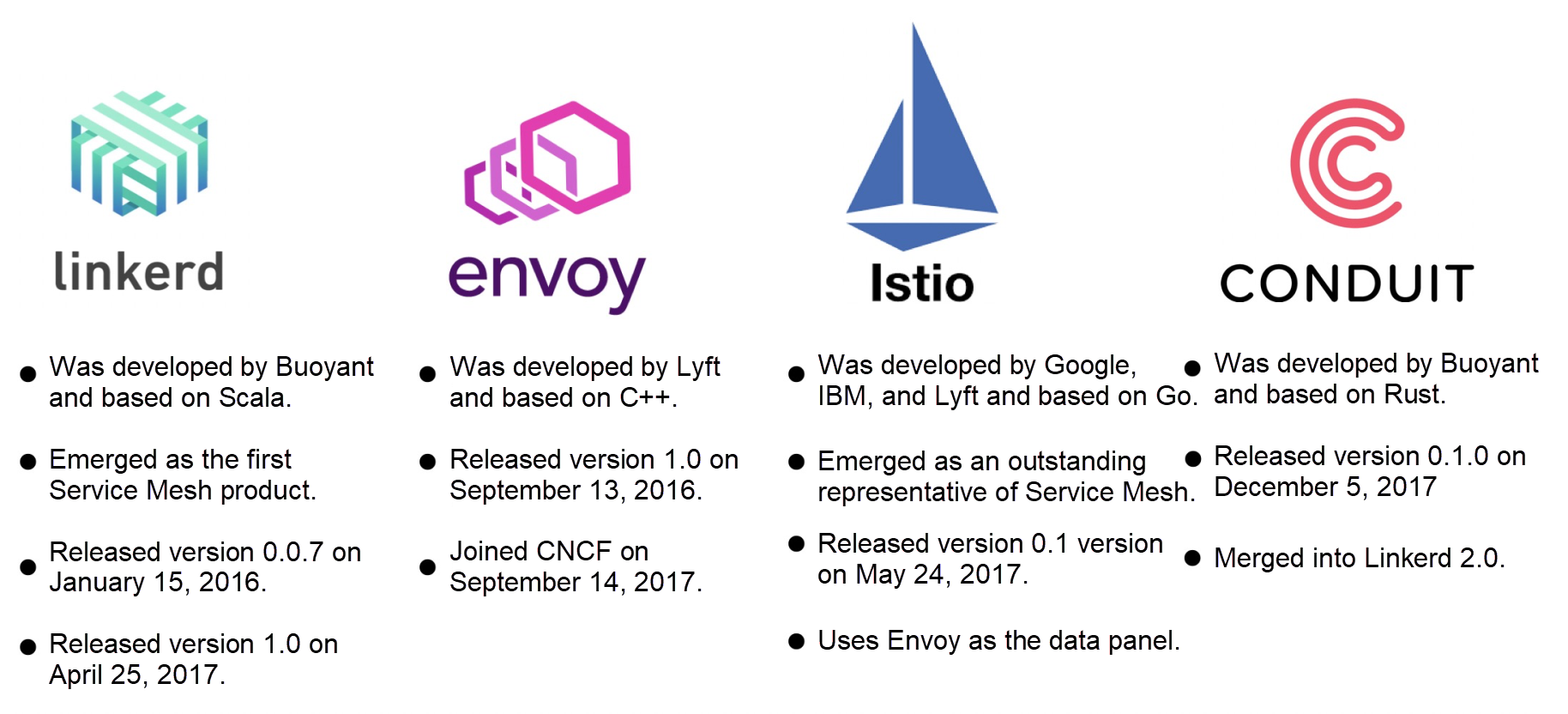

It was not until the end of 2017 that Service Mesh became a popular solution. With this popularity, competition started to heat up in the microservice market. In reality, however, Service Mesh had emerged as early as the beginning of 2016.

In January 2016, infrastructure engineers William Morgan and Oliver Gould released Linkerd 0.0.7 on GitHub after leaving Twitter. This marked the birth of the first Service Mesh project in the industry. Linkerd was based on Twitter's open-source Finagle project and reused Finagle class libraries, but it offered universal capabilities that were not tied to a single application.

Envoy was the second Service Mesh project to emerge. It was developed at nearly the same time as Linkerd, and both of them became CNCF projects in 2017.

On May 24, 2017, Istio 0.1 was released. Google and IBM announced it with high-profile speeches, the community responded enthusiastically, and many companies expressed their support for the project.

Following its release, Istio soon overshadowed Linkerd. As one of the only two production-level Service Mesh implementations in the industry, Linkerd was still able to remain competitive, but only until Istio reached maturity.

However, as Istio steadily advanced and matured, it was only a matter of time before Istio replaced Linkerd.

In contrast to Linkerd, Envoy made the decision to serve as a Sidecar for Istio starting in 2016. Envoy did not require many functions because it worked on the data plane, and most of the work could be completed on the control plane of Istio. This has allowed the team supporting Envoy to focus on detailed improvements to the data plane. Being completely different in nature from Linkerd, Envoy has carved out a niche for itself and is no longer subject to the intense competition in the main Service Mesh market.

Jointly launched by Google and IBM, Istio has been in the limelight ever since its release and has received wide praise from Service Mesh enthusiasts. As a next-generation Service Mesh, Istio has obvious advantages over Linkerd. In addition, its project roadmap calls for a variety of new functions to be released in the future. We are looking forward to them.

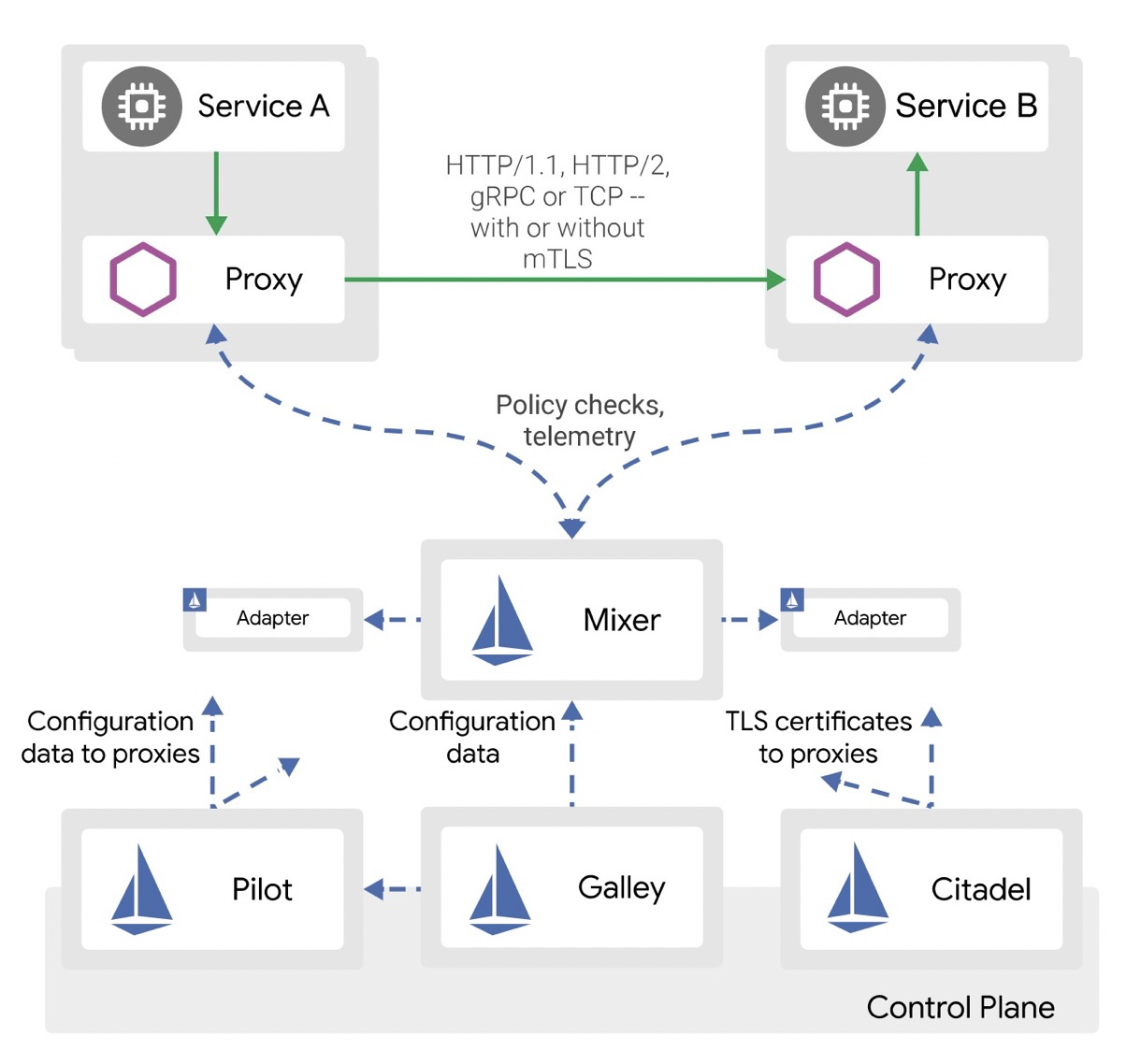

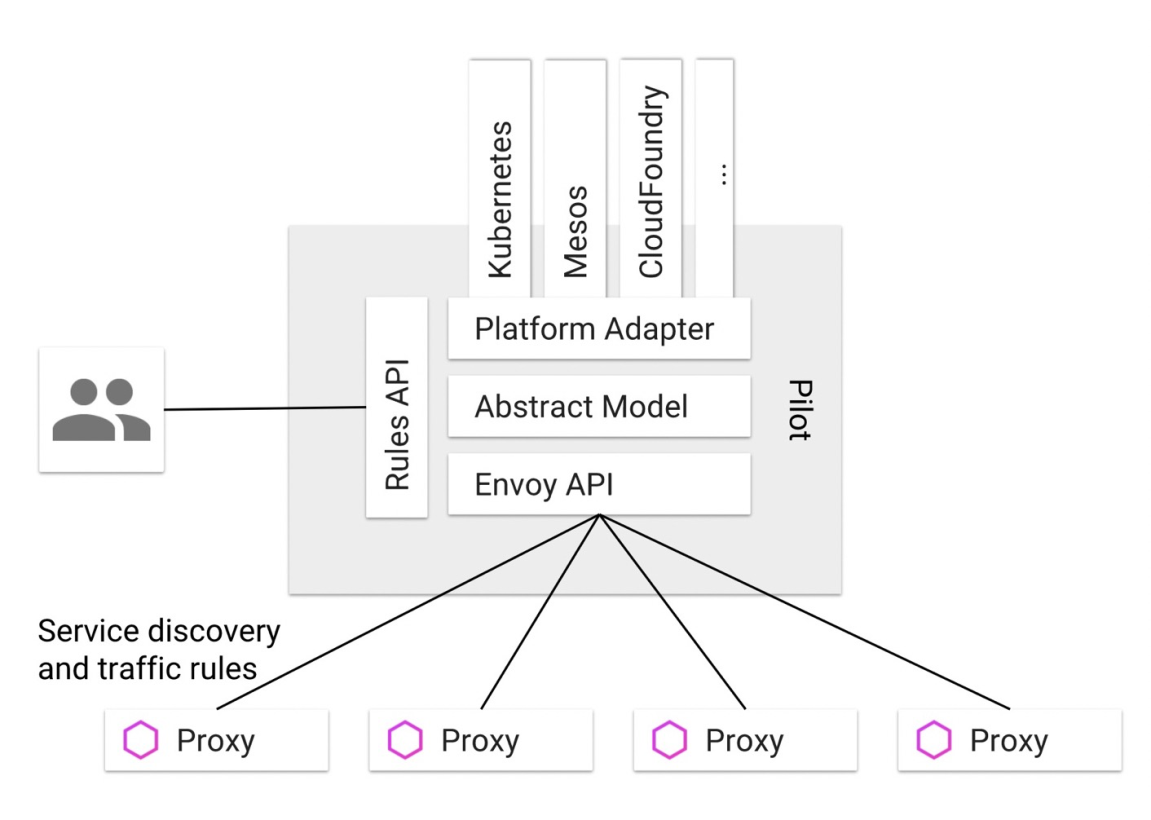

Istio is the most popular open-source Service Mesh project. It is divided into a data plane and a control plane. Istio implements cloud-native microservice governance, including service discovery, throttling, and security monitoring. It provides transparent proxy services by starting an application and a Sidecar in the same pod. Istio is a highly scalable framework that supports Kubernetes as well as other resource schedulers such as Mesos. The figure below shows the architecture of Istio.

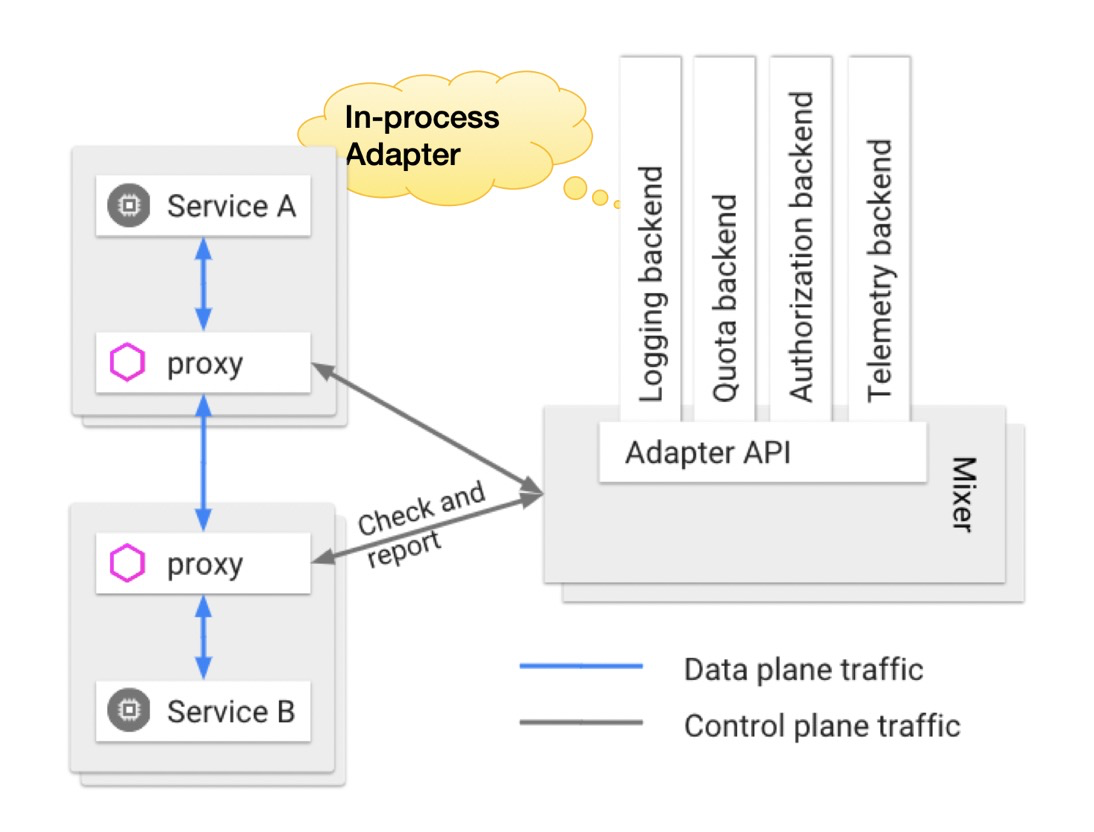

Mixer is also a scalable module in Istio. Sidecar continuously reports its traffic to Mixer, which then summarizes the traffic information and displays it. Sidecars can call some BaaS capabilities provided by Mixer, such as authentication, logon, and logging. Mixer connects to various BaaS modules through adapters.
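The adapter arrangement described above resembles a plug-in pattern, which the following sketch illustrates. None of these classes correspond to Istio's actual API: `Adapter`, `LoggingAdapter`, `RequestCountAdapter`, and this toy `Mixer` are hypothetical names, and the report format is invented for the example.

```python
from abc import ABC, abstractmethod


class Adapter(ABC):
    """Hypothetical adapter interface; not Istio's actual API."""

    @abstractmethod
    def handle(self, report):
        """Process one traffic report sent by a sidecar."""


class LoggingAdapter(Adapter):
    def __init__(self):
        self.lines = []

    def handle(self, report):
        self.lines.append(f"{report['source']} -> {report['destination']}")


class RequestCountAdapter(Adapter):
    def __init__(self):
        self.counts = {}

    def handle(self, report):
        key = report["destination"]
        self.counts[key] = self.counts.get(key, 0) + 1


class Mixer:
    """Toy dispatcher that fans each report out to every registered adapter."""

    def __init__(self):
        self.adapters = []

    def register(self, adapter):
        self.adapters.append(adapter)

    def report(self, report):
        for adapter in self.adapters:
            adapter.handle(report)


mixer = Mixer()
log, counter = LoggingAdapter(), RequestCountAdapter()
mixer.register(log)
mixer.register(counter)
mixer.report({"source": "orders", "destination": "inventory"})
mixer.report({"source": "cart", "destination": "inventory"})
print(counter.counts)  # {'inventory': 2}
```

New backend capabilities can be added by registering another adapter, without changing the sidecars or the dispatcher itself.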

In earlier versions of Istio, BaaS adapters were integrated into Mixer. In this mode, method calls are fast in the same process, but the failure of one BaaS adapter will affect the entire Mixer.

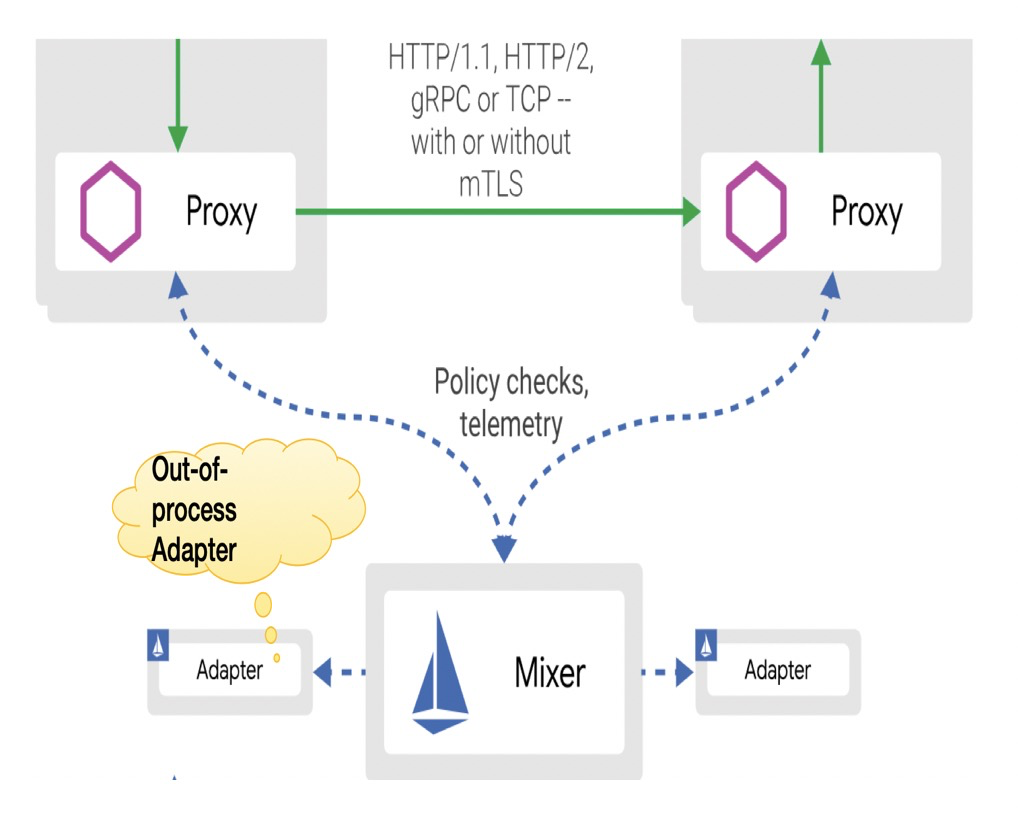

In the latest version of Istio, adapters were moved outside Mixer, which decoupled Mixer from the adapters. As a result, the failure of any adapter does not affect Mixer. One downside is that calls between adapters and Mixer are now implemented through remote procedure calls (RPCs), which are much slower than method calls within the same process, so performance is affected.

Galley is part of the control plane of Istio. Originally, Galley only verified configurations. Starting from Istio version 1.1, however, Galley can serve as the configuration management center of the entire control plane, and can also manage and distribute configurations. Galley uses the Mesh Configuration Protocol to exchange configurations with other components.

Istio has more than 50 Custom Resource Definitions (CRDs). Many say that Kubernetes-oriented programming is similar to YAML-oriented programming. In the early days, Galley only verified configurations during runtime, and each component of the Istio control plane listed or watched corresponding configurations. However, the increasing number and complexity of configurations brought much inconvenience to Istio users:

With the further evolution of Istio, the number of Istio CRDs will continue to increase. The community plans to strengthen Galley to make it the configuration control layer of Istio. In addition to verifying configurations, Galley will also provide a configuration management pipeline, including input, conversion, distribution, and the Mesh Config Protocol (MCP) suitable for the control plane of Istio.

Now let's discuss the security side of Istio, Citadel. Splitting a service into microservices brings various benefits but imposes more security requirements. After all, the method calls between different functional modules have been changed to remote calls between microservices.

Citadel is the security component in Istio. However, Citadel must work with multiple other components.

Istio supports the following security functions:
