
By Fang Keming (Xi Weng), Technical Expert from Alibaba Cloud's Middleware Technology Department.

In 2019, cloud native became the technical infrastructure for Alibaba's e-commerce platforms and for its entire ecosystem. As a major part of modern cloud-native technology, Service Mesh is an integral component of this cloud-native strategy. To explore and realize the benefits of cloud-native technologies more efficiently, we at Alibaba worked hard to implement Service Mesh in our core applications in preparation for Double 11, China's and arguably the world's largest online shopping event. We used the rigorous, complex scenarios created by the huge traffic spikes of the Double 11 shopping festival to test the maturity of our Service Mesh solution. This article discusses the challenges we faced and had to overcome in implementing it.

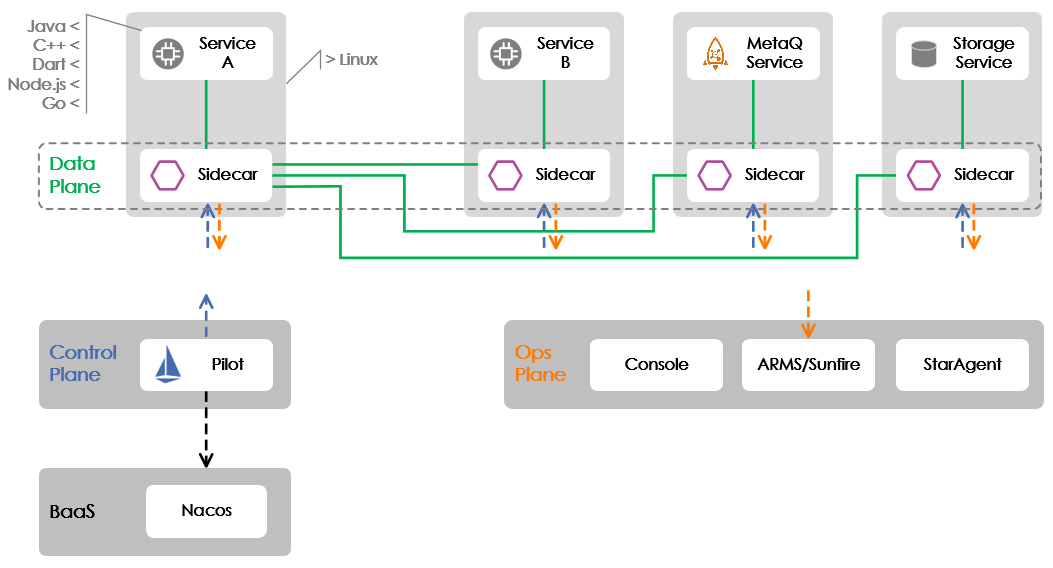

Before getting into the details, let's first look at the deployment architecture of Alibaba's core applications for the annual Double 11 shopping event, shown in the graphic. This article mainly focuses on the Mesh implementation for the remote procedure call (RPC) protocol between Service A and Service B.

The above graphic shows the three major planes of Service Mesh: the data plane, the control plane, and the operations and maintenance (O&M) plane. The data plane uses Envoy, the third-party, open-source edge and service proxy, which is represented as Sidecar in the graphic. (The names Envoy and Sidecar are used interchangeably in this article.) The control plane uses Istio, the open-source service mesh, of which only the Pilot component is currently used, whereas the O&M plane was developed entirely in-house.

Unlike the initial launch six months ago, the Service Mesh implementation in the Double 11 core applications now deploys Pilot in cluster mode. Pilot is no longer deployed alongside Envoy in each business container; instead, it runs as an independent cluster. This change brings the control plane's deployment to the state it should have in a Service Mesh architecture.

Our selected core applications for Double 11, which were all implemented in Java, faced the following challenges:

When we decided to implement Mesh in the core applications for Double 11, the RPC SDK version that our Java applications depended on had already been finalized, leaving no time to develop and upgrade the SDK to make it suitable for Mesh. The team therefore had to work out how to implement Service Mesh in each application without upgrading the SDK.

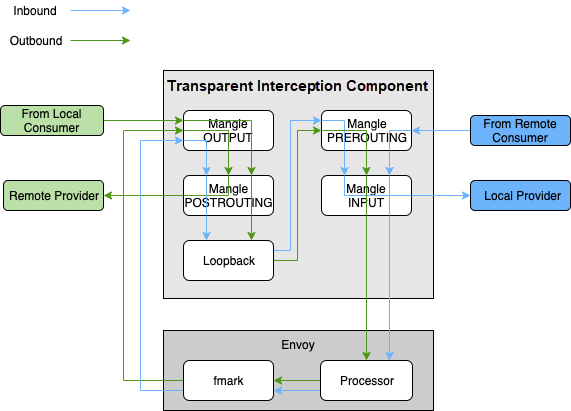

If you are familiar with Istio, you know that it intercepts traffic transparently through the NAT table in iptables. Transparent interception directs traffic to Envoy without the application being aware of it, and our Service Mesh implementation relies on exactly this mechanism. Unfortunately, the nf_conntrack kernel module that the NAT table depends on had been removed from Alibaba's online production servers because of its low efficiency, so the community solution could not be used directly. Shortly after the beginning of the year, we began working with the AliOS team, which was responsible for building the basic capabilities required by Service Mesh, including transparent traffic interception and network acceleration. Through close cooperation between our two teams, the OS team explored a brand-new transparent interception solution that identifies traffic by user ID and packet mark, and implemented a new transparent interception component based on the mangle table in iptables.
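The article does not publish the rules used by this component, so the following is only a minimal Python model of the decision logic a mark-based mangle-table component might apply. It assumes an Istio-style setup: the sidecar runs as UID 1337 and listens on ports 15001 (outbound) and 15006 (inbound), which are Istio's defaults borrowed here for illustration, not Alibaba's settings. The RPC port 20880 (Dubbo's default) is likewise a hypothetical choice. Excluding the sidecar's own UID is what prevents Envoy's upstream traffic from being redirected back into Envoy.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed values for illustration: Istio's defaults, not Alibaba's in-house settings.
SIDECAR_UID = 1337           # UID the Envoy sidecar process runs as
ENVOY_OUTBOUND_PORT = 15001  # Envoy listener for traffic leaving the application
ENVOY_INBOUND_PORT = 15006   # Envoy listener for traffic arriving at the application


@dataclass
class Packet:
    direction: str   # "outbound" (app -> network) or "inbound" (network -> app)
    owner_uid: int   # UID of the local socket owner (only meaningful for outbound)
    dst_port: int


def classify(pkt: Packet, rpc_ports: set[int]) -> tuple[str, int | None]:
    """Return (action, envoy_port), mimicking mark-based mangle-table rules."""
    if pkt.direction == "outbound":
        # Envoy's own upstream connections must bypass interception,
        # otherwise they would be redirected back into Envoy in a loop.
        if pkt.owner_uid == SIDECAR_UID:
            return ("ACCEPT", None)
        if pkt.dst_port in rpc_ports:
            # Mark the packet so policy routing delivers it to the local Envoy.
            return ("MARK", ENVOY_OUTBOUND_PORT)
        return ("ACCEPT", None)
    if pkt.dst_port in rpc_ports:
        # Inbound RPC requests are handed to Envoy before the application sees them.
        return ("MARK", ENVOY_INBOUND_PORT)
    return ("ACCEPT", None)


if __name__ == "__main__":
    rpc_ports = {20880}  # hypothetical RPC port (Dubbo's default)
    print(classify(Packet("outbound", 1000, 20880), rpc_ports))
```

In a real kernel path, the mark would be consumed by a policy route or a TPROXY rule; the model only returns the intended action.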

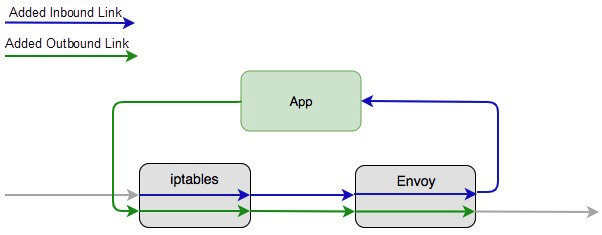

The graphic below shows the paths of RPC service calls when the transparent interception component is used. Inbound traffic is incoming traffic, whose recipient acts as the provider, and outbound traffic is outgoing traffic, whose sender acts as the consumer. An application typically plays both roles, so inbound and outbound traffic coexist.

With the transparent interception component, Mesh can be rolled out to applications without their awareness, which makes implementation much easier. However, because the RPC SDK retained its existing service discovery and routing logic, redirecting traffic to Envoy increased the response time of outbound traffic: service discovery and routing were performed twice. This effect is also visible in the data presented later. In the final state of Service Mesh, the service discovery and routing logic must be removed from the RPC SDK, which will also reduce the corresponding CPU and memory overhead.

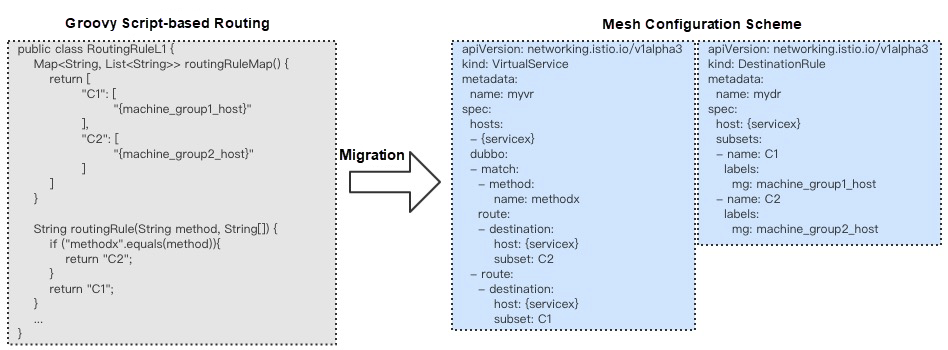

The many computing and networking scenarios in Alibaba's e-commerce business require a wide range of routing features. In addition to routing policies such as unitization and environment isolation, we also need to route services based on the method name, call parameters, and application name of RPC requests. Alibaba's internal Java RPC framework supports these routing policies by running embedded Groovy scripts: users configure a template Groovy routing script in the O&M console, and the SDK executes this script whenever it initiates a call to apply the routing policy.

In the future, Service Mesh will not use Groovy for flexible routing policy customization, because such a high degree of flexibility would put too much pressure on the evolution of Service Mesh. We therefore took the opportunity of the Mesh implementation to remove Groovy scripts. By analyzing the scenarios in which applications used Groovy scripts, we abstracted a set of cloud-native solutions. Specifically, we extended VirtualService and DestinationRule in Istio's native custom resource definitions (CRDs) and added the route configuration sections required by the RPC protocol to express routing policies.
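The extended CRD schema is not published in the article. As a hedged illustration of moving from an embedded script to declarative configuration, the Python sketch below evaluates ordered RPC route rules that match on method name, call arguments, and calling application, and returns a DestinationRule-style subset name. The field names (`method`, `source_app`, `args`, `subset`) are hypothetical; only the first-match-wins ordering follows how Istio evaluates VirtualService HTTP routes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RpcMatch:
    # All fields are hypothetical; None or empty means "match anything".
    method: str | None = None        # RPC method name, exact match
    source_app: str | None = None    # calling application name
    args: dict[int, str] = field(default_factory=dict)  # argument index -> expected value

    def matches(self, method: str, source_app: str, call_args: list[str]) -> bool:
        if self.method is not None and self.method != method:
            return False
        if self.source_app is not None and self.source_app != source_app:
            return False
        for idx, expected in self.args.items():
            if idx >= len(call_args) or call_args[idx] != expected:
                return False
        return True


@dataclass
class RpcRoute:
    match: RpcMatch
    subset: str  # name of a DestinationRule-style subset


def select_subset(routes: list[RpcRoute], method: str, source_app: str,
                  call_args: list[str], default: str = "default") -> str:
    # Rules are evaluated in order and the first match wins.
    for route in routes:
        if route.match.matches(method, source_app, call_args):
            return route.subset
    return default


if __name__ == "__main__":
    routes = [
        RpcRoute(RpcMatch(method="placeOrder", args={0: "VIP"}), "vip-pool"),
        RpcRoute(RpcMatch(source_app="cart"), "cart-pool"),
    ]
    print(select_subset(routes, "placeOrder", "cart", ["VIP"]))
```

Because the rules are plain data rather than code, the control plane can validate and push them, which is harder to do with an arbitrary script executed inside the SDK.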

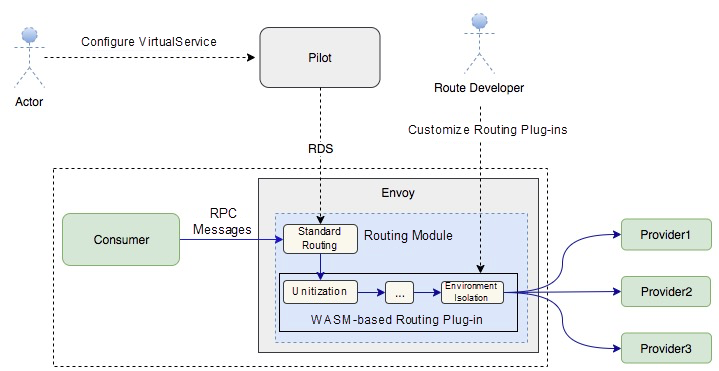

Currently, policies such as unitization and environment isolation in the Alibaba environment are customized within the standard routing modules of Istio and Envoy, so some hacking logic is unavoidable. Alongside Istio's and Envoy's standard routing policies, we plan to design a WASM-based routing plug-in solution that packages these simple routing policies as plug-ins. This will reduce intrusion into the standard routing modules while still meeting business needs for custom service routing. The following figure shows the envisioned architecture:
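As a rough model of that plug-in contract (it does not use the proxy-wasm ABI), each routing plug-in in the Python sketch below takes request metadata and a list of candidate endpoints and returns a narrowed list, and the chain applies the plug-ins in order. The unit and environment plug-ins, their labels, and their fallback behavior are assumptions for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Endpoint:
    ip: str
    unit: str  # hypothetical unitization zone label
    env: str   # hypothetical environment label, e.g. "prod" or "test"


# A routing plug-in narrows the candidate endpoints for one request.
RoutingPlugin = Callable[[dict, list], list]


def unit_plugin(request: dict, endpoints: list[Endpoint]) -> list[Endpoint]:
    # Prefer endpoints in the caller's unit; fall back to all if none are local.
    local = [e for e in endpoints if e.unit == request.get("unit")]
    return local or endpoints


def env_plugin(request: dict, endpoints: list[Endpoint]) -> list[Endpoint]:
    # Strict isolation: only endpoints in the caller's environment are eligible.
    return [e for e in endpoints if e.env == request.get("env", "prod")]


def route(request: dict, endpoints: list[Endpoint],
          plugins: list[RoutingPlugin]) -> list[Endpoint]:
    # Apply each plug-in in order; the standard router would then load-balance
    # across whatever endpoints remain.
    for plugin in plugins:
        endpoints = plugin(request, endpoints)
    return endpoints


if __name__ == "__main__":
    eps = [Endpoint("10.0.0.1", "unit-a", "prod"),
           Endpoint("10.0.0.2", "unit-b", "prod"),
           Endpoint("10.0.0.3", "unit-a", "test")]
    print(route({"unit": "unit-a", "env": "prod"}, eps, [unit_plugin, env_plugin]))
```

Keeping each policy behind the same narrow interface is what lets new policies be added without touching the standard routing module.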

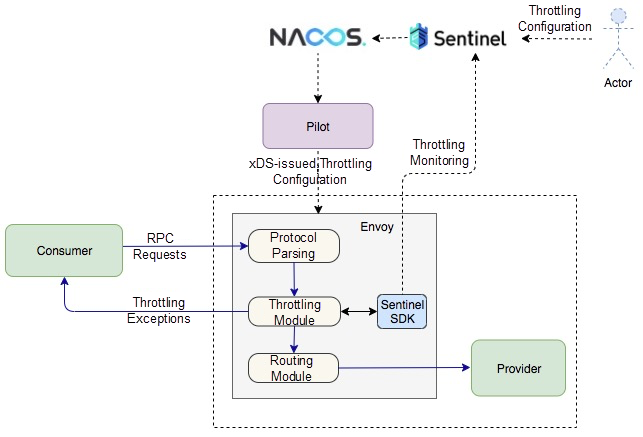

For performance reasons, the Service Mesh solution implemented at Alibaba does not use Istio's Mixer component. Instead, the throttling function is built on Sentinel, which is widely used within Alibaba and by many other companies in China. This approach is compatible with open-source Sentinel and also reduces migration costs for Alibaba's internal users, because it directly supports their existing throttling configurations. To facilitate Mesh integration, multiple teams jointly developed a C++ version of Sentinel, and the entire throttling function is implemented through Envoy's Filter mechanism. In Envoy, a Filter is an independent functional module that processes requests; we built a Sentinel Filter for the Dubbo protocol, and every request is processed by it. The configuration required for throttling is obtained from Nacos through Pilot and delivered to Envoy over the xDS protocol.
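Sentinel's flow control counts requests using sliding-window statistics. The Python sketch below is a simplified model of the kind of QPS check a throttling filter could run on each request; it is not Sentinel's code, and the threshold, bucket count, and caller-supplied millisecond timestamps are assumptions.

```python
class SlidingWindowLimiter:
    """QPS check over a sliding window split into fixed-size buckets.

    A simplified model of sliding-window flow control, not Sentinel's code.
    """

    def __init__(self, qps_threshold: int, buckets: int = 2, window_ms: int = 1000) -> None:
        self.threshold = qps_threshold
        self.window_ms = window_ms
        self.bucket_ms = window_ms // buckets
        # Each slot is [bucket_start_ms, passed_requests]; -1 marks an unused slot.
        self.slots = [[-1, 0] for _ in range(buckets)]

    def _window_total(self, now_ms: int) -> int:
        # Only buckets that started within the last window still count.
        return sum(count for start, count in self.slots
                   if start >= 0 and now_ms - start < self.window_ms)

    def try_pass(self, now_ms: int) -> bool:
        """Return True if a request at now_ms is admitted, False if throttled."""
        start = now_ms - now_ms % self.bucket_ms
        slot = self.slots[(now_ms // self.bucket_ms) % len(self.slots)]
        if slot[0] != start:
            # The slot still holds an expired bucket: recycle it for the current one.
            slot[0], slot[1] = start, 0
        if self._window_total(now_ms) >= self.threshold:
            return False
        slot[1] += 1
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(qps_threshold=3)
    print([limiter.try_pass(0) for _ in range(4)])
```

Timestamps are passed in explicitly so the behavior is deterministic; a real filter would read a monotonic clock and take its threshold from the rule pushed through xDS.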

Observability of services was one of the core problems Envoy was designed to solve, so Envoy has included extensive built-in statistics from the very beginning.

Envoy offers fine-grained statistics, down to the level of individual IP addresses across a cluster. In the Alibaba environment, the consumer and provider services of some e-commerce applications together span hundreds of thousands of IP addresses. Because each IP address carries different metadata under different services, the same IP address is tracked independently under each service, and as a result Envoy consumes a huge amount of memory. To address this issue, we added a stats toggle to Envoy that enables or disables IP-level statistics. Disabling IP-level statistics alone reduces memory usage by 30%. Next, we will adopt the community's stats symbol table solution to eliminate repeated stat name strings, which will further reduce memory overhead.
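The idea behind a stats symbol table is to split stat names on `.` and store each distinct token only once, so that names sharing segments (cluster names, metric names) no longer repeat the same strings. The Python sketch below models that idea in simplified form; Envoy's actual C++ implementation differs in its encoding and memory management. The stat names are illustrative, although `upstream_rq_total` and `upstream_cx_active` are standard Envoy cluster statistics.

```python
from __future__ import annotations


class StatSymbolTable:
    """Stores each dot-separated stat-name token once and represents a full stat
    name as a tuple of small integer symbols (a simplified model of the idea)."""

    def __init__(self) -> None:
        self._ids: dict[str, int] = {}
        self._tokens: list[str] = []

    def encode(self, stat_name: str) -> tuple[int, ...]:
        symbols = []
        for token in stat_name.split("."):
            if token not in self._ids:
                # First time this token is seen: assign it the next symbol.
                self._ids[token] = len(self._tokens)
                self._tokens.append(token)
            symbols.append(self._ids[token])
        return tuple(symbols)

    def decode(self, symbols: tuple[int, ...]) -> str:
        return ".".join(self._tokens[s] for s in symbols)

    def token_count(self) -> int:
        return len(self._tokens)


if __name__ == "__main__":
    table = StatSymbolTable()
    names = [f"cluster.{svc}.{metric}"
             for svc in ("svc_a", "svc_b")
             for metric in ("upstream_rq_total", "upstream_cx_active")]
    encoded = [table.encode(n) for n in names]
    print(table.token_count(), "unique tokens for", len(names), "stat names")
```

With thousands of services and per-service metrics, the shared tokens are stored once instead of once per stat name, which is where the memory saving comes from.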

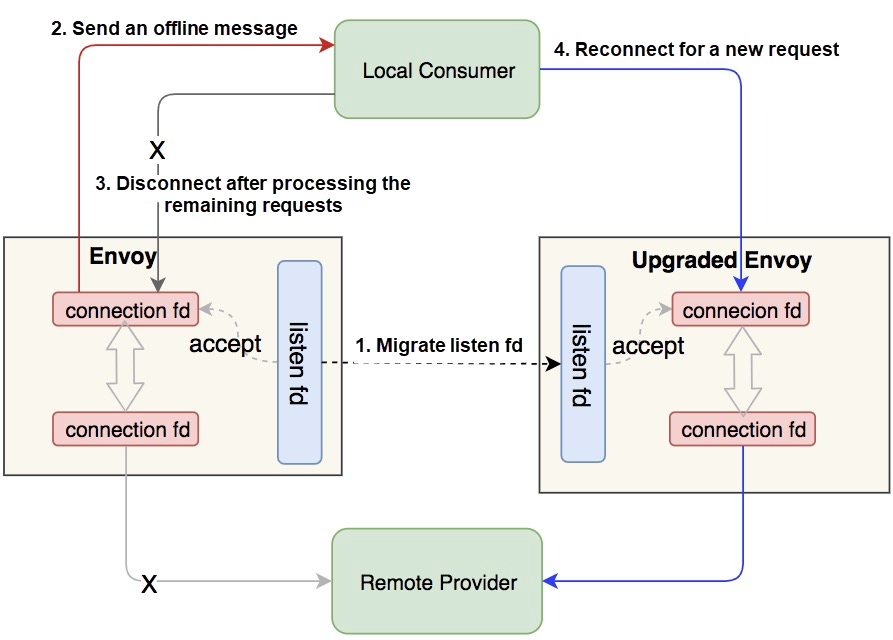

One of the core advantages of Service Mesh is that it completely decouples infrastructure from business logic so that each can evolve independently. To realize this advantage, Sidecar must support hot upgrades so that business traffic is not interrupted during an upgrade. This poses a major challenge for both solution design and technical implementation.

Our hot upgrade feature uses a dual-process approach. First, we start a new Sidecar container, which exchanges runtime data with the old Sidecar. After the new Sidecar is ready to send, receive, and control traffic, the old Sidecar exits after a certain period of time, so no business traffic is lost. The core technologies behind this approach are Unix domain sockets and the graceful offline function of RPC nodes. The following figure shows the key steps of the process.
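A key building block of this kind of hand-over is passing an open listening socket from the old process to the new one over a Unix domain socket (file-descriptor passing via `SCM_RIGHTS`), which is also how Envoy's own hot restart hands over listeners. The Python sketch below (Python 3.9+ on Unix, using `socket.send_fds` and `socket.recv_fds`) shows only that step. Exchanging other runtime data and the graceful offline of RPC nodes are out of scope, and the message tag `b"listener"` is a hypothetical convention.

```python
import socket


def send_listener(channel: socket.socket, listener: socket.socket) -> None:
    """Old sidecar: hand the listening socket's descriptor to the new sidecar."""
    socket.send_fds(channel, [b"listener"], [listener.fileno()])


def receive_listener(channel: socket.socket) -> socket.socket:
    """New sidecar: receive the descriptor and wrap it in a socket object."""
    msg, fds, _flags, _addr = socket.recv_fds(channel, 1024, 1)
    if msg != b"listener" or len(fds) != 1:
        raise RuntimeError("unexpected hand-over message")
    # Family, type, and protocol are detected from the descriptor itself.
    return socket.socket(fileno=fds[0])


if __name__ == "__main__":
    old_end, new_end = socket.socketpair()  # stands in for the Unix domain socket
    listener = socket.create_server(("127.0.0.1", 0))
    send_listener(old_end, listener)
    inherited = receive_listener(new_end)
    listener.close()  # old sidecar exits; the kernel socket stays open via the new fd
    print("new sidecar listening on", inherited.getsockname())
```

Because both descriptors refer to the same kernel socket, connections queued during the hand-over are accepted by the new process rather than refused.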

Publishing performance data carelessly can lead to controversy and misunderstandings, because many variables affect performance in different scenarios. For example, concurrency, queries per second (QPS), and payload size all have a critical impact on the final results. Envoy has never officially published performance data of this kind because its author, Matt Klein, was concerned about causing misunderstandings. It is also worth noting that, due to time constraints, our current Service Mesh implementation is not optimal and does not represent our final solution. For example, routing is currently performed twice on the consumer side. We chose to share this data to show our progress and current status.

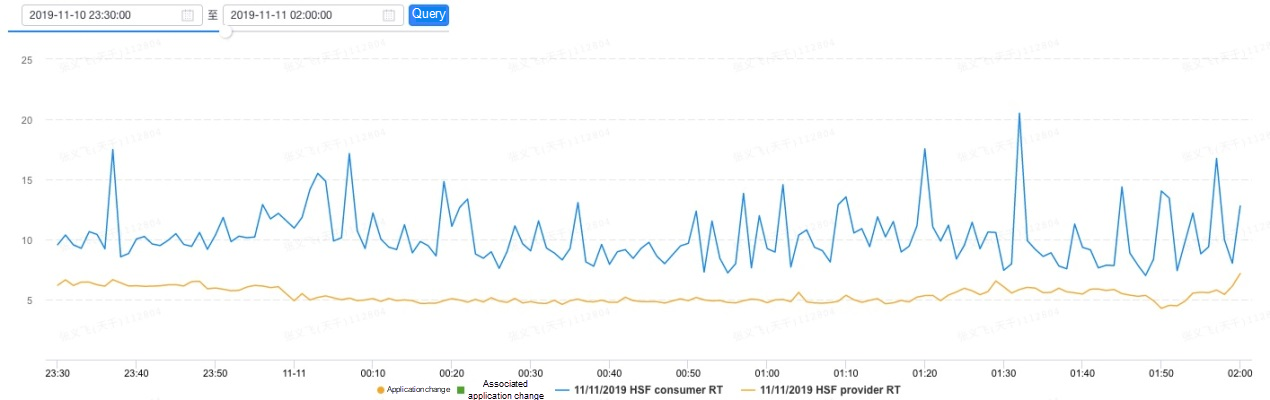

This article lists data for only one of the core applications launched for Double 11. Based on single-host response time sampling, on a server with Service Mesh the average response time is 5.6 milliseconds on the provider side and 10.36 milliseconds on the consumer side. The figure below shows this server's response time around the start of Double 11:

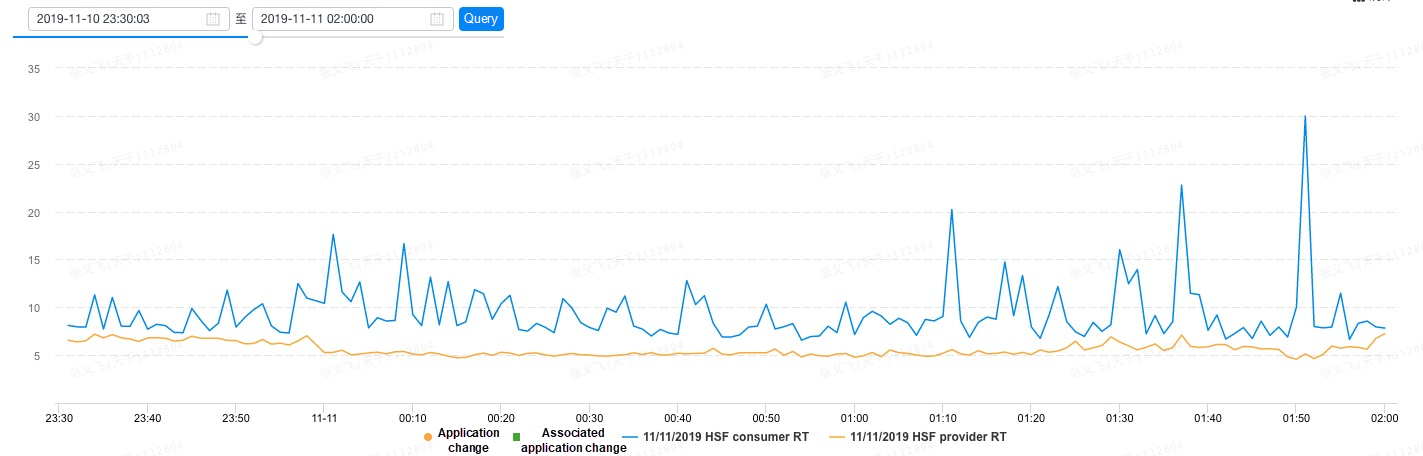

On a server without Service Mesh, the average response time is 5.34 milliseconds on the provider side and 9.31 milliseconds on the consumer side. The figure below shows this server's response time around the start of Double 11.

As you can see, after Mesh implementation the response time increases by 0.26 milliseconds on the provider side and by 1.05 milliseconds on the consumer side. This difference includes both the communication time between the business application and Sidecar and the Sidecar's own processing time. The graphic below shows the segments of the call path that add latency.
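The deltas above can be reproduced, and put in relative terms, with a few lines of arithmetic on the single-host averages reported for this application:

```python
from __future__ import annotations

# Single-host average response times (ms) reported above for one core application.
RT_MS = {
    "provider": {"with_mesh": 5.60, "without_mesh": 5.34},
    "consumer": {"with_mesh": 10.36, "without_mesh": 9.31},
}


def mesh_overhead(side: str) -> tuple[float, float]:
    """Return (absolute increase in ms, relative increase in %) for one side."""
    with_mesh = RT_MS[side]["with_mesh"]
    baseline = RT_MS[side]["without_mesh"]
    delta = with_mesh - baseline
    return round(delta, 2), round(100 * delta / baseline, 1)


if __name__ == "__main__":
    for side in RT_MS:
        ms, pct = mesh_overhead(side)
        print(f"{side}: +{ms} ms ({pct}%)")
```

This works out to roughly +4.9% on the provider side and +11.3% on the consumer side; the larger consumer-side increase is consistent with the duplicated service discovery and routing on the outbound path described earlier.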

We also compared the average response time of all instances launched with Service Mesh and those without Service Mesh over a certain period of time. After implementing Mesh on the provider side, the response time increased by 0.52 milliseconds, while the response time on the consumer side increased by 1.63 milliseconds.

In terms of CPU and memory overhead, after implementing Mesh, Envoy's CPU usage stays at about 0.1 cores across all the core applications, with spikes whenever Pilot pushes data. To avoid these spikes, we need to adopt incremental push between Pilot and Envoy in the future. Memory overhead varies greatly with the application's services and cluster size, and Envoy still has much room for improvement in memory usage.
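Incremental push means sending only what changed instead of the full configuration on every update; Envoy's delta (incremental) xDS variant carries changed resources and the names of removed ones in the `resources` and `removed_resources` fields of `DeltaDiscoveryResponse`. The Python sketch below models only the diff computation, with resources reduced to hypothetical name-to-version maps.

```python
from __future__ import annotations


def delta_push(old: dict[str, str], new: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Compute an incremental update between two config snapshots.

    Returns the resources that are new or whose version changed, and the
    names of resources that no longer exist, so unchanged entries are skipped.
    """
    updated = {name: ver for name, ver in new.items() if old.get(name) != ver}
    removed = sorted(name for name in old if name not in new)
    return updated, removed


if __name__ == "__main__":
    before = {"svc-a": "v1", "svc-b": "v1", "svc-c": "v1"}
    after = {"svc-a": "v1", "svc-b": "v2", "svc-d": "v1"}
    print(delta_push(before, after))
```

Here only `svc-b` and `svc-d` are sent and `svc-c` is removed, while `svc-a` is skipped; a full push would resend the entire snapshot on every change, which is what makes large pushes expensive for Envoy.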

Based on the data for all the core applications launched for Double 11, Service Mesh has a broadly similar impact on response time and CPU overhead across applications, whereas its memory overhead varies greatly with the number of dependent services and the cluster size.

With the emergence of cloud-native technology, Alibaba has been committed to building a future-oriented technical infrastructure based on cloud-native technologies. In building this infrastructure, we started out by working with several open-source products, our focus has been and continues to be on open source, and we have released several open-source solutions of our own. By contributing our solutions to the wider community, we hope to help popularize cloud-native technologies in general.

Moving forward, our goals can be summarized as follows:

We will continue to work with the Istio open-source community to enhance Pilot's data push capability. Ultra-large-scale scenarios such as the Double 11 online shopping promotions at Alibaba place very demanding requirements on Pilot's data push capability. We believe that by continuing to push the envelope and pursue an even higher level of excellence, we can work with several open-source communities to accelerate the creation of the next generation of open standards.

Connected to this, internally at Alibaba, we have established a co-creation partnership with the Nacos team. We will connect to Nacos through the community's Mesh Configuration Protocol (MCP) so that Alibaba's many open-source technical components can work together systematically.

Following this, by integrating Istio and Envoy more closely, we will further optimize their protocols and data management structures and reduce memory overhead through more refined and rational data structures. We will also focus on building O&M capabilities for Sidecars at large scale, so that Sidecar upgrades can be phased, monitored, and rolled back. In this way, we aim to realize the full value of Service Mesh, allowing business and technical infrastructure to evolve independently and more efficiently.
