By Busu

Database recovery methods include backup set recovery and point-in-time recovery (PITR). As the name suggests, backup set recovery directly uses the saved data backup set for recovery and can only restore the database to a fixed database state at a certain time. PITR, on the other hand, uses both data backup and log backup of the database. PITR first restores the data to the database state at a certain time using the data backup. There is a log point in the data backup set, and logs from the log point to the specified time are downloaded for playback, allowing the database to be restored to the specified time.

In a standalone MySQL database, if data backup sets and binlogs exist, you can use the XtraBackup tool to restore the backup sets and then use the mysqlbinlog tool to play back the binlogs. As a distributed database, PolarDB-X stores data through components such as the Global Metadata Service (GMS) and the Data Node (DN). Compute Nodes (CN) are stateless. When PolarDB-X performs recovery at a specified point in time, the general idea is to restore both GMS and DN to a certain point in time. However, in a distributed database, there are distributed transactions, and branch transactions will fall on different data nodes. If each data node is restored independently, the atomicity of distributed transactions cannot be guaranteed.
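The atomicity problem can be illustrated with a small sketch. It is not PolarDB-X code: the `Event` type, the timestamps, and the `committed_before` helper are assumptions made up for illustration. It shows that cutting each data node's log at the same wall-clock time can keep the commit of one branch while dropping the commit of another.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str   # "P" (prepare) or "C" (commit)
    txn: str    # distributed transaction id
    ts: float   # local timestamp on the data node (hypothetical)


def committed_before(binlog, cutoff):
    """Transactions whose commit event on this node is at or before cutoff."""
    return {e.txn for e in binlog if e.kind == "C" and e.ts <= cutoff}


# Two branches of the same distributed transaction T1 on two data nodes.
dn1 = [Event("P", "T1", 1.0), Event("C", "T1", 2.0)]
dn2 = [Event("P", "T1", 1.1), Event("C", "T1", 2.2)]

cutoff = 2.1
# Transactions committed on one node but not the other are torn.
torn = committed_before(dn1, cutoff) ^ committed_before(dn2, cutoff)
print(torn)
```

Here T1 is committed on the first node but only prepared on the second, so restoring each node independently to the same time would break the transaction's atomicity.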

The previous article Safeguarding Your Data with PolarDB-X: Backup and Restoration explains how to perform globally consistent PITR when the transaction policy is a TSO transaction. This article describes how the PolarDB-X Operator achieves globally consistent PITR when the transaction policy is an XA or a TSO transaction and proposes a recovery scheme based on two heartbeat transactions.

The previous two articles, Safeguarding Your Data with PolarDB-X: Backup and Restoration and Interpretation of Global Binlog and Backup and Restoration Capabilities of PolarDB-X 2.0, show that PolarDB-X already has two schemes for PITR, referred to as PITR based on TSO and PITR based on global binlogs. The following table compares them with the scheme based on two heartbeats proposed in this article:

| Scheme | Requires TSO transactions on the business side | Full backup set | Log recovery volume | Relies on the stability of CDC components | Playback efficiency | Requires a heartbeat transaction |
| --- | --- | --- | --- | --- | --- | --- |
| PITR based on TSO | Yes. TSO transactions are based on XA transactions, with a global timestamp added to achieve a repeatable read isolation level. Performance is lower than with XA transactions. | Data node physical backup | Small. Full backup set end point + incremental logs | No | High. You can use SQL threads on the data node to play back binlogs. Each data node is played back concurrently. | No |
| PITR based on global binlogs | No | Data node physical backup or PolarDB-X logical backup | Large. Full backup set start point + incremental logs | Yes | Low. You need a third-party tool to convert the global binlog into SQL and execute it on the compute node. | Yes |
| PITR based on two heartbeats | No | Data node physical backup | Small. Full backup set end point + incremental logs | No | High. You can use SQL threads on the data node to play back binlogs. Each data node is played back concurrently. | Yes |

The PITR scheme based on global binlogs is significantly different from the other two schemes. It is less dependent on the internal implementation of PolarDB-X but consumes a large amount of computing resources. In most cases, it can be used to synchronize data from PolarDB-X to another system.

The PITR scheme based on two heartbeats introduces continuous heartbeat transactions to remove the limitation of the TSO-based scheme, which requires the business side to use TSO transactions.

We propose a scheme based on two continuous heartbeat transactions to achieve globally consistent binlog point pruning, which only requires the PolarDB-X transaction policy to be an XA transaction or a TSO transaction.

This scheme requires a broadcast table. For example, the table creation statements are:

CREATE TABLE `__heartbeat__` (
  `id` bigint(20) NOT NULL AUTO_INCREMENT BY GROUP,
  `sname` varchar(10) DEFAULT NULL,
  `gmt_modified` datetime(3) DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE = InnoDB AUTO_INCREMENT = 2 DEFAULT CHARSET = utf8mb4 broadcast;

By updating a record in this table at regular intervals (the period in the PolarDB-X Operator is 1 second, which ensures that the error of the recovery point in time does not exceed 1 second), distributed heartbeat transactions can be generated, for example:

set drds_transaction_policy='TSO';
replace into binlogcut.`__heartbeat__`(id, sname, gmt_modified) values(1, 'binlogcut', now());

Next, we explain how to use two distributed heartbeat transactions to determine the consistency point. Suppose Tm is a distributed transaction. We denote its Prepare Event in the binlog as Pm and its Commit Event as Cm, and we denote two consecutive heartbeat transactions as Tnh and T(n+1)h, where the h marks a heartbeat transaction.

We can obtain the following known information:

Given the first and second pieces of information above, if transactions T1 and T2 appear in a binlog with the event flow C1 P2 (C1 followed by P2), the Commit of T1 happened before the Prepare of T2. It follows that: Prepare time of transaction T1 < Commit time of transaction T1 < Prepare time of transaction T2 < Commit time of transaction T2, that is:

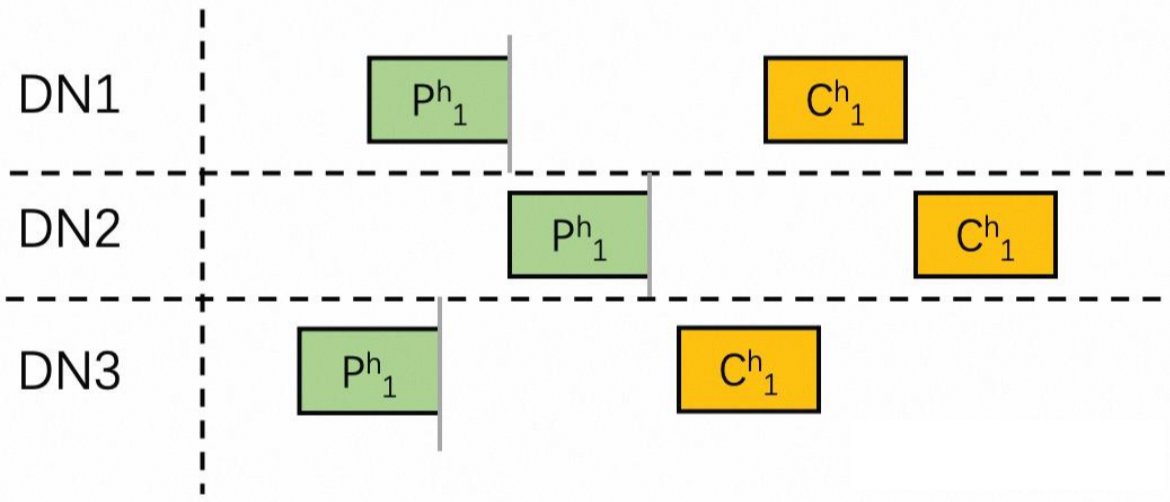
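The ordering inference can be sketched as a scan over one data node's binlog. This is a minimal illustration: the `(kind, txn)` tuple format and the `precedence_pairs` helper are assumptions, not the Operator's actual binlog parser.

```python
def precedence_pairs(events):
    """Return {(a, b)} where the Commit Event of a precedes the Prepare Event of b.

    events: list of (kind, txn) tuples in binlog order,
            kind is "P" (prepare) or "C" (commit).
    """
    committed = []
    pairs = set()
    for kind, txn in events:
        if kind == "C":
            committed.append(txn)
        elif kind == "P":
            # Every transaction already committed in this binlog finished
            # before this transaction prepared.
            pairs.update((done, txn) for done in committed)
    return pairs


sequential = [("P", "T1"), ("C", "T1"), ("P", "T2"), ("C", "T2")]
interleaved = [("P", "T1"), ("P", "T2"), ("C", "T1"), ("C", "T2")]

print(precedence_pairs(sequential))
print(precedence_pairs(interleaved))
```

Only the sequential binlog yields the pair `("T1", "T2")`. In the interleaved binlog, no commit precedes a prepare, so nothing can be concluded about the relative order of T1 and T2.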

Suppose we prune the binlog of each data node at the end position of the Prepare Event of the heartbeat transaction T1h:

After pruning according to the above figure, we can analyze the positions of Pn and Cn of a distributed transaction Tn on the different data nodes:

Tn completes the commit before the heartbeat transaction T1h is initiated, and both Pn and Cn fall before P1h. The current pruning line does not break the atomicity of Tn.

Tn has not decided to commit before the heartbeat transaction is initiated and has not issued a commit. In this case, we only need to roll back the hanging transaction after recovery.

Tn has decided to commit before the heartbeat transaction is initiated and has issued a commit, but has not yet completed the commit of all branches. In the binlog of at least one data node, there is an event flow of ... Pn ... Cn ... P1h ... C1h ... Since all branches of Tn are prepared before any branch commits, and that Cn precedes P1h, we can conclude from the above inference that Pn precedes C1h in the binlog of each data node. If Pn occurs before P1h, we only need to commit the hanging transaction after recovery.

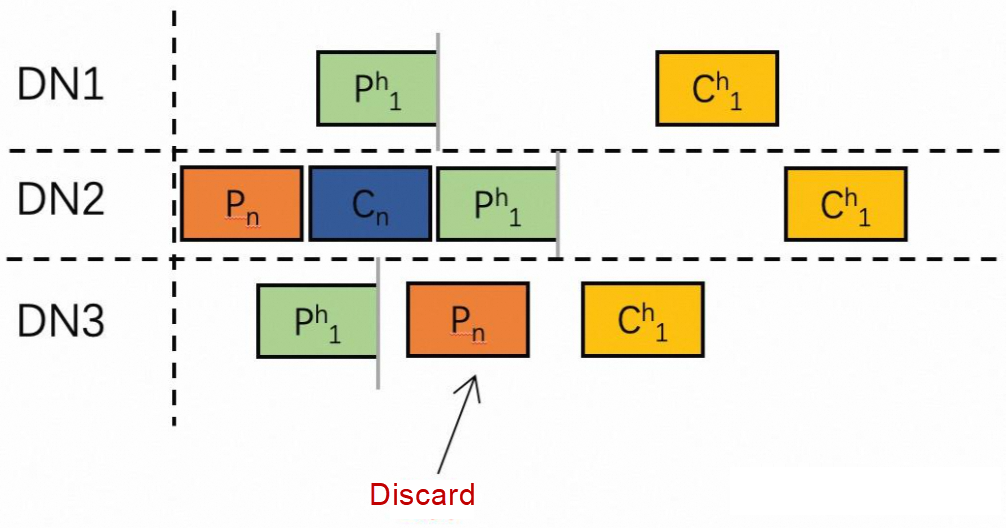
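The case analysis can be sketched as a small classifier over the events kept before the pruning position. This is an illustration under assumptions: the `participants` set (which data nodes hold a branch of the transaction) and the `(kind, txn)` event format are hypothetical inputs, and the "roll back" outcome only reflects what is visible in the kept events.

```python
def classify(txn, participants, pruned):
    """Classify one distributed transaction after pruning every data node.

    participants: set of data node names holding a branch of txn (hypothetical).
    pruned: {dn: [(kind, txn_id), ...]} events kept before the pruning position,
            where kind is "P" (prepare) or "C" (commit).
    """
    prepared = {dn for dn in participants if ("P", txn) in pruned[dn]}
    committed = {dn for dn in participants if ("C", txn) in pruned[dn]}
    if committed == participants:
        return "complete"
    if not committed:
        return "roll back after recovery"
    if prepared == participants:
        return "commit after recovery"
    # A commit is visible somewhere, but some branch has no Prepare Event
    # before the cut: the pruning position must be reconsidered.
    return "prepare missing on some node"


pruned = {
    "DN1": [("P", "Ta"), ("C", "Ta"), ("P", "Tb"), ("P", "Tc"), ("C", "Tc")],
    "DN2": [("P", "Ta"), ("C", "Ta"), ("P", "Tb"), ("P", "Tc")],
}
nodes = {"DN1", "DN2"}
results = {t: classify(t, nodes, pruned) for t in ("Ta", "Tb", "Tc")}
print(results)
```

Ta, Tb, and Tc correspond to the three cases above. The fourth return value covers the situation in which a branch's Prepare Event falls after the pruning position.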

Question 1: Pn occurs after P1h:

Pn may appear in the following locations:

The problem is that the branch of transaction Tn on DN3 cannot be committed, because its Pn event lies after the pruning position. Therefore, the pruning position on DN3 needs to be moved to the end position of the Pn event. However, moving the pruning position may bring in a new committable transaction Tm, in which case we also retain all of its Pm events. Tm meets the following conditions: Tm Prepare time < Tm Commit time < Tn Commit time < T1h Commit time, so Tm Prepare time < T1h Commit time and Pm precedes C1h. This expansion of the pruning point therefore does not continue indefinitely but ends before C1h.
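The expansion of the pruning point can be sketched as a fixed-point iteration. This is a simplified illustration, not the Operator's implementation: the `expand_cuts` helper, the `(kind, txn)` event format, and representing a pruning position as the number of events kept are all assumptions.

```python
def expand_cuts(binlogs, cuts):
    """Extend per-node pruning positions until every committable
    transaction has its Prepare Event kept on every node.

    binlogs: {dn: [(kind, txn), ...]} in binlog order, kind is "P" or "C".
    cuts:    {dn: number of leading events kept on that node}.
    Returns (final cuts, set of committable transaction ids).
    """
    cuts = dict(cuts)
    changed = True
    while changed:
        changed = False
        # A transaction is committable if any kept event is its commit.
        committable = {
            txn
            for dn, events in binlogs.items()
            for kind, txn in events[: cuts[dn]]
            if kind == "C"
        }
        for dn, events in binlogs.items():
            for i, (kind, txn) in enumerate(events):
                if kind == "P" and txn in committable and i >= cuts[dn]:
                    cuts[dn] = i + 1  # keep everything up to the end of this prepare
                    changed = True
    return cuts, committable


binlogs = {
    "DN1": [("P", "Tn"), ("C", "Tn"), ("P", "H1"), ("C", "H1")],
    "DN3": [("P", "H1"), ("P", "Tn"), ("C", "Tn"), ("C", "H1")],
}
initial = {"DN1": 3, "DN3": 1}  # both nodes cut at the end of P(H1)
final_cuts, committable = expand_cuts(binlogs, initial)
print(final_cuts, committable)
```

In this example the cut on DN3 moves from 1 to 2 so that the Prepare Event of Tn is kept, while the cut on DN1 stays at 3. The loop stops once a pass adds no new committable transaction.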

Question 2: How do we determine that Tn issued a commit before the heartbeat transaction T1h?

If we traverse all binlogs of each data node before P1h, we can definitely find this Commit Event. In the production environment, the amount of binlog data generated every day is huge, and binlogs will have a retention period, so it is not feasible to traverse all binlogs.

In the commit phase of the two-phase commit protocol, once the commit has been decided, the commit must be run even if an exception occurs. In practice, for this behavior of deciding to commit, a transaction log needs to be written to record that the distributed transaction has been decided to commit. After the database recovers to a healthy state and finds an uncommitted branch transaction, the transaction log is queried to judge whether the transaction is finally committed or rolled back.

When there is a Cn before the heartbeat transaction T1h on a data node, we know that the time to record the transaction log < the time to commit the branch transaction < the Prepare time of the heartbeat transaction < the time to commit the heartbeat transaction. In other words, the transaction log is recorded before the heartbeat transaction commits. During pruning, we can therefore keep all Pn events of the transaction together with its transaction log, which requires pruning at C1h. After the recovery is completed, we can query the transaction log to determine whether to roll back or commit the transaction.

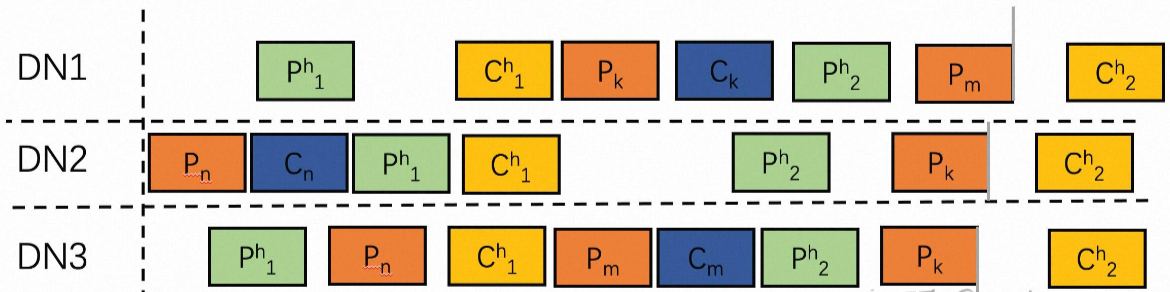

As shown in the figure above, we introduce a second heartbeat transaction T2h to solve Questions 1 and 2 at the same time. We analyze the binlog of each data node in the range [P1h start position, C2h end position] and set P2h as the initial pruning position:

The iteration process records the committable transaction ID, which facilitates the commit of the corresponding hanging transaction after recovery.

After recovery, for hanging transactions that are not in the recorded list of to-be-committed transactions, we query the transaction log to determine whether they need to be committed. The remaining branches are rolled back.
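The post-recovery decision can be sketched as follows. This is a minimal illustration: the `resolve_hanging` helper and its inputs are hypothetical, with the transaction log modeled as a set of transaction IDs that were recorded as decided to commit.

```python
def resolve_hanging(hanging, recorded_committable, decided_in_log):
    """Decide the outcome of each hanging (prepared, unresolved) transaction.

    hanging:              transaction ids still prepared after recovery.
    recorded_committable: ids recorded as committable during pruning.
    decided_in_log:       ids the transaction log marks as decided to commit
                          (a hypothetical in-memory stand-in for the log).
    """
    actions = {}
    for txn in sorted(hanging):
        if txn in recorded_committable or txn in decided_in_log:
            actions[txn] = "COMMIT"
        else:
            actions[txn] = "ROLLBACK"
    return actions


actions = resolve_hanging(
    hanging={"T7", "T8", "T9"},
    recorded_committable={"T7"},
    decided_in_log={"T8"},
)
print(actions)
```

T7 is committed because it was recorded during pruning, T8 because the transaction log says it was decided to commit, and T9 is rolled back.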

After a physical backup is initiated for a PolarDB-X data node, the completion time of the physical backup varies for each data node. Therefore, a globally consistent backup set = physical backup + incremental logs used to restore data to a globally consistent state.

Lock mode: After completing the physical backup of all data nodes, the database is locked to prevent transaction commits, and the binlog point of the data node is recorded.

Lock-free mode: After completing the physical backup of all data nodes, a portion of incremental logs is retained. You can restore data to a globally consistent location by PITR.

With a consistent backup set, users can quickly restore a globally consistent database instance by restoring the physical backups and, if necessary, playing back as few incremental logs as possible.

This scheme ensures that all data nodes are restored to a globally consistent state. However, the metadata on GMS does not participate in distributed transaction collaboration. If the machine clocks are not synchronized by NTP (for example, the time on the GMS machine node is one second behind the time on the data node machine), the restored GMS metadata may contain a column in a table that the restored data node does not have.

In general, during the backup process, operations that collaboratively modify data on the data node and GMS, such as concurrent DDL operations and the addition and deletion of data nodes, may cause inconsistency between the restored metadata and business data. Therefore, we have two optimization schemes for this problem:

This article describes how to use two successive distributed heartbeat transactions and the dependency between the Prepare Event and the Commit Event to obtain a consistent binlog pruning point. During recovery, we restore the logs to the pruning point, commit the hanging transactions recorded during pruning, and commit or roll back the remaining hanging transactions according to the transaction log.

