By Mengshi

In the Interpretation of PolarDB-X Data Distribution (1), we focused on some concepts of data distribution in PolarDB-X but did not describe the reason. Starting from this article, we will talk about some design principles of data distribution in PolarDB-X.

As we mentioned earlier, PolarDB-X supports both hash and range partitioning algorithms, with hash being the default choice.

For other databases, YugabyteDB also defaults to the hash partitioning algorithm, while TiDB and CockroachDB use the range partitioning algorithm.

So, what does hash and range mean for databases? How should we choose as an application? What are the differences between PolarDB-X's hash partitioning and that of other databases?

Let's draw a conclusion first:

First of all, we need to make it clear again that hash in PolarDB-X refers to consistent hashing. In fact, the consistent hashing is very similar to range. The bottom layer is partitioned by range interval. Consistent hashing in both PolarDB-X and YugabyteDB is implemented in this way.

Simply put, the consistent hashing here is to range hash values. For example, for the same value:

• Consistent hashing: Range(Hash(value))

• Range: Range(value)

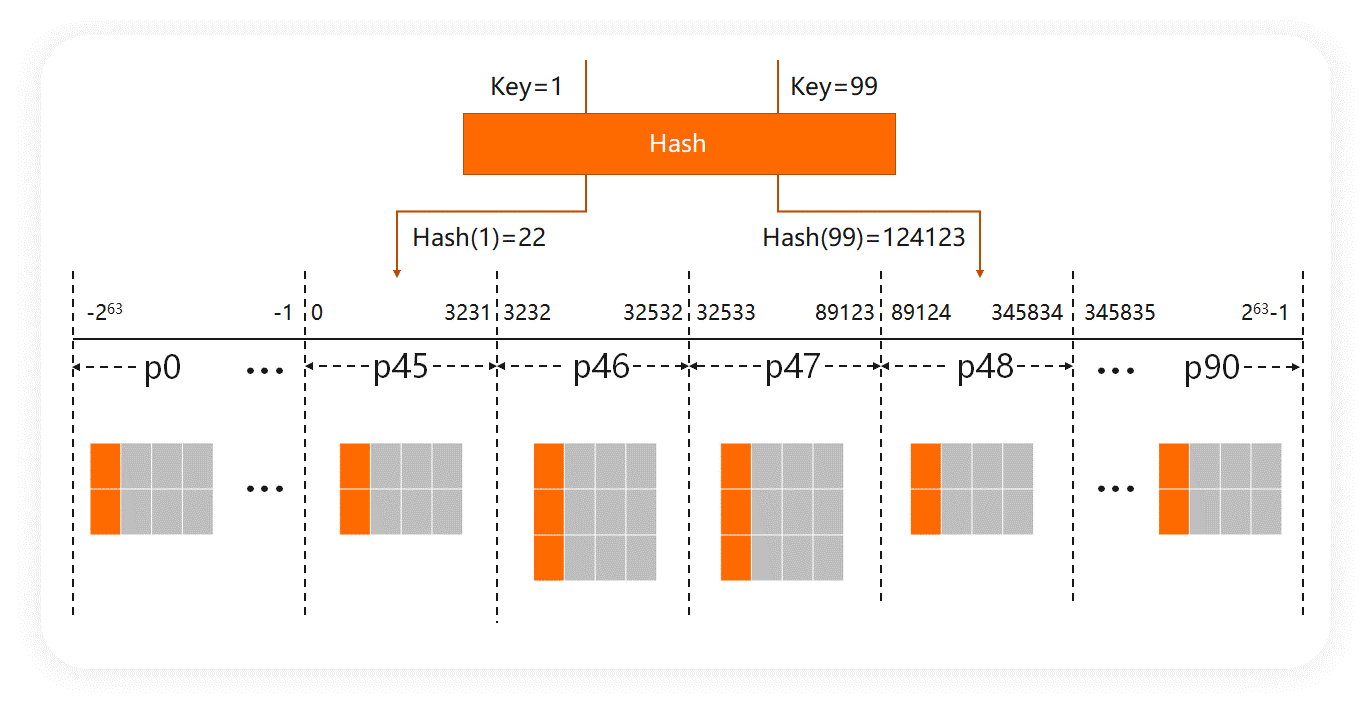

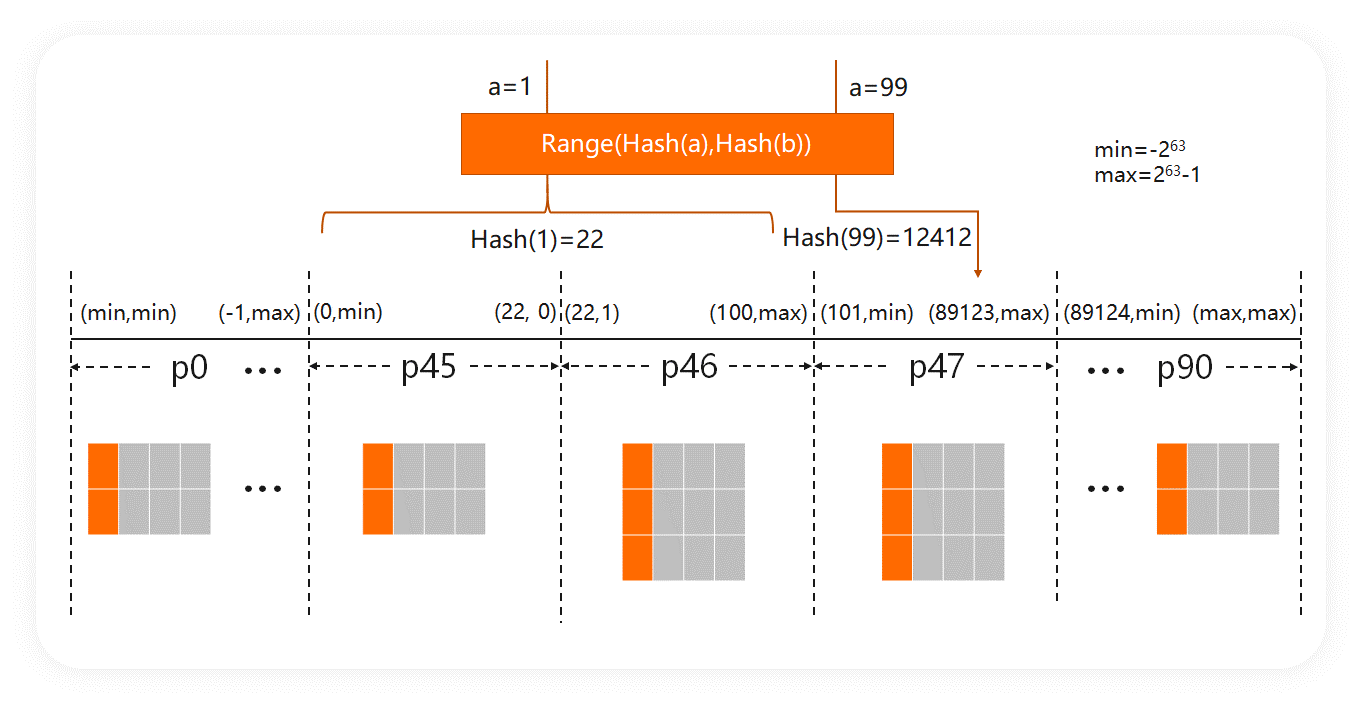

The following figure shows a consistent hashing:

Consistent hashing supports many range operations, such as splitting a partition and merging certain partitions. Therefore, consistent hashing can locally add or remove shards.

In contrast, some hashes are implemented by using a modulo algorithm, such as most database middleware.

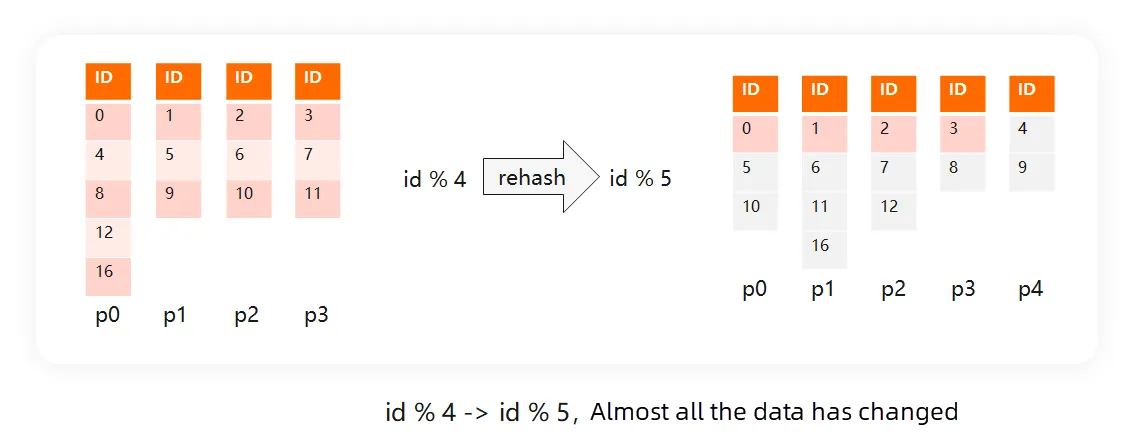

Although the modulo algorithm is simple to implement, you cannot split a single shard locally.

For example, there are currently 4 shards, and its modulo algorithm is ID % 4. When you want to increase to 5 shards, the modulo algorithm is ID % 5. At this time, you will find that more than 80% of the data have to be moved again, and the cost is not much different from that of completely rebuilding the data, as shown in the following figure:

So the hash implemented by the modulo algorithm is a very poor distribution strategy in distributed databases.

Here, we compare the consistent hashing with the range.

Generally, the distribution of hash values is relatively uniform, so it is convenient for hash to perform pre-partitioning. For example, if you want to divide the hash values into 64 partitions in advance, you only need to divide the whole range of hash values into 64 equal parts.

However, for range partitioning, the distribution of data is strongly related to business features, and there is no way to predict the distribution in advance, which makes it difficult to perform pre-partitioning. There are two types of common range partitioning methods:

The first method applies to limited scenarios, while the second method which relies on the scheduler requires the business to run for a long time to reach a relatively stable state, so there are some uncertainties.

For OLTP services with high stability, the database is expected to be determined in terms of performance and stability. Therefore, pre-partitioning is preferred.

The obvious difference between hash and range lies in the range query.

Range is sequential and allows adjacent values to be in the same partition or adjacent partitions. When range queries are performed, partition pruning can also be easily performed.

However, for hash, its hash values are sequential but its values are not sequential. Therefore, in most cases, if a range query involves several values, several partitions are involved.

The query of a partition is evidently more cost-effective than a query of multiple partitions.

Range queries are commonly used in several scenarios:

For most businesses, querying a range of user IDs or querying a range of item IDs is definitely not commonly used. Therefore, in terms of business practices, range queries are mainly used for time types.

For range queries, range must be advantageous, so we also recommend you use range partitioning on some global indexes of time types.

There is also another less obvious range query, which is very useful.

The prefix-match query of the index is a range query.

The composite index is also commonly used and contains multiple index fields. In general, we expect to narrow the scan range even if there is no way to provide the values of all index fields in the case of prefix matching.

For example, for the composite index (a,b), if a can be differentiated, then the condition a=1 can also be filtered.

Range partitioning processes prefix-match queries in a natural manner. As described in the preceding section, the prefix-match query of the index is a range query. For example, a query of a=1 can be expressed as follows:

(1,b.MIN) <= key <= (1,b.MAX)Such a range query can be used for partition pruning in range partitioning. Intuitively speaking, all records with a=1 are in the same partition or adjacent partitions.

Therefore, when range partitioning is used for composite indexes, you do not need to perform multi-level partitioning, but only level-1 partitioning.

For the composite index (a,b), if hash partitioning is used, how should we design the partition key?

Scheme 1: Use a as the partition key. The problem is that if a has a very low discrimination, for example, a represents the province, then hot data will appear. This is the scheme used by YugabyteDB.

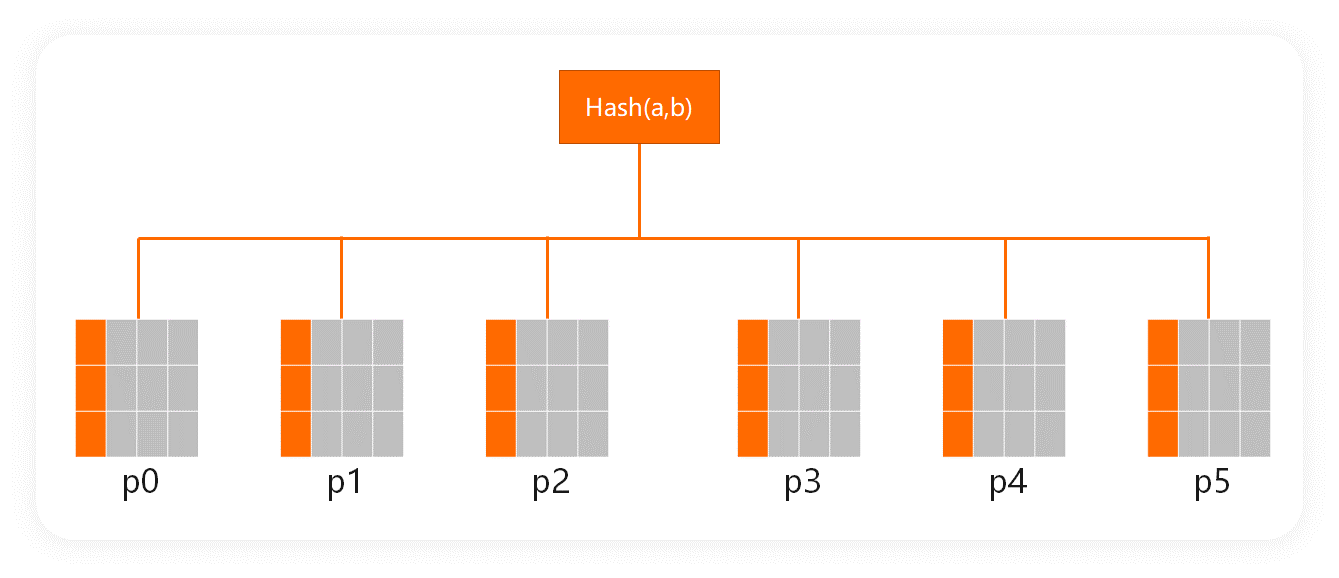

Scheme 2: Use (a,b) as the partition key. That is, Hash(a,b)=Hash(concat(a,b)).

As shown in the following figure:

Under this scheme, as long as (a,b) has relatively high discrimination, hot data will not appear. This idea is the same as that of using a standalone database.

However, if the given query condition is only a, the hash value cannot be calculated and partition pruning cannot be performed.

This is different from the secondary index of a standalone database that generally supports prefix queries.

Note that most databases that provide a partition by hash(a,b) syntax, whether standalone or distributed, cannot perform prefix pruning in this way.

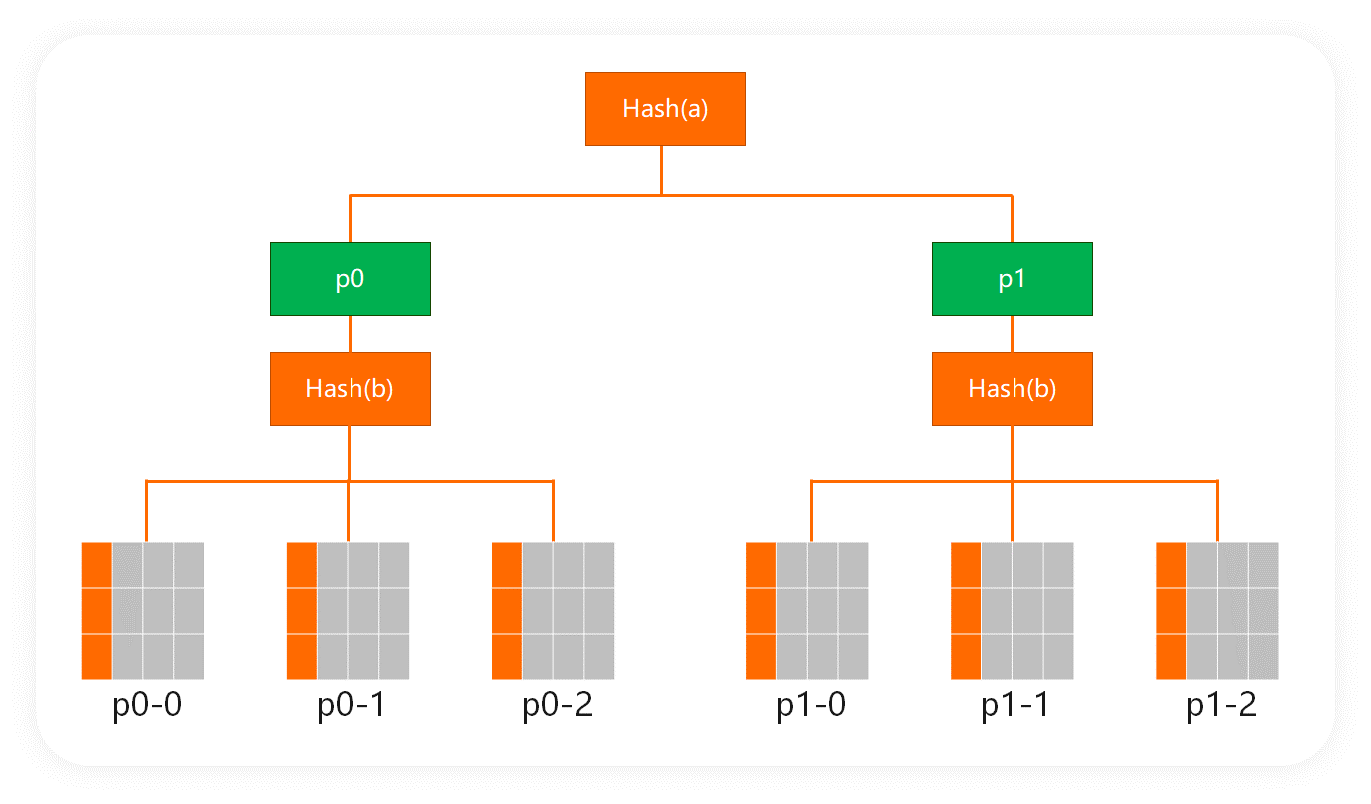

Scheme 3: Use level-2 partitions, and a and b as the partition keys of the level-1 partition and the level-2 partition respectively.

As shown in the following figure:

In this way, partition pruning can still be performed if the prefix is matched.

However, in this case, you need to support unlimited levels of subpartitions (the number of subpartitions that can be created is determined by the number of columns that the index supports). This usage is strange, and there is currently no database that supports unlimited levels of subpartitions.

In fact, this is the method you can use in most distributed databases that support hash partitioning, such as ApsaraDB for OceanBase. You can only use it to solve prefix queries with no more than two columns.

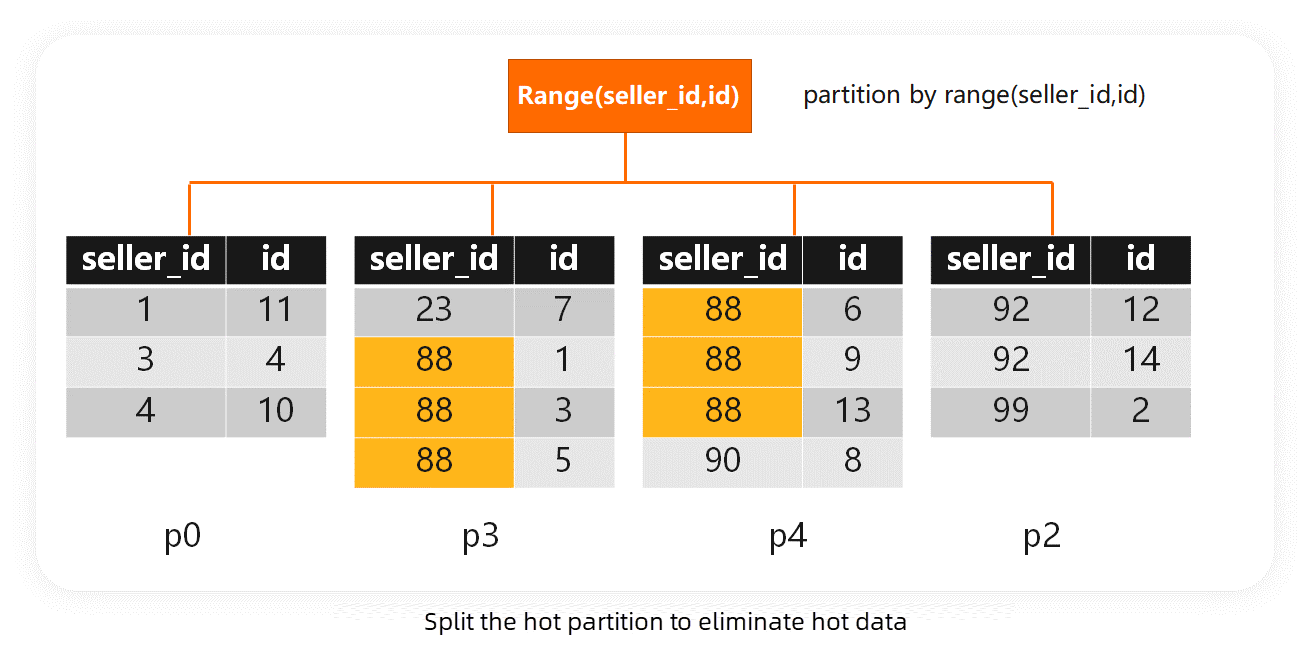

Scheme 4: Use the hash value of a and the hash value of b as the range partition key. That is, define Hash(a,b)=Range(Hash(a),Hash(b)).

In this way, partition pruning can still be performed if the prefix is matched. At the same time, only level-1 partitions are required, without multi-level partitioning.

For example, the query of a=1 can be expressed as:

(hash(1),b.HASH_MIN) <= key <= (hash(1),b.HASH_MAX)As shown in the following figure:

It can be found from the above figure that a=1 contains two adjacent partitions of p45 and p46, and a=99 contains only one partition of p47.

The partition by hash(a,b) in PolarDB-X uses Scheme 4, which supports prefix queries.

This is a very interesting point.

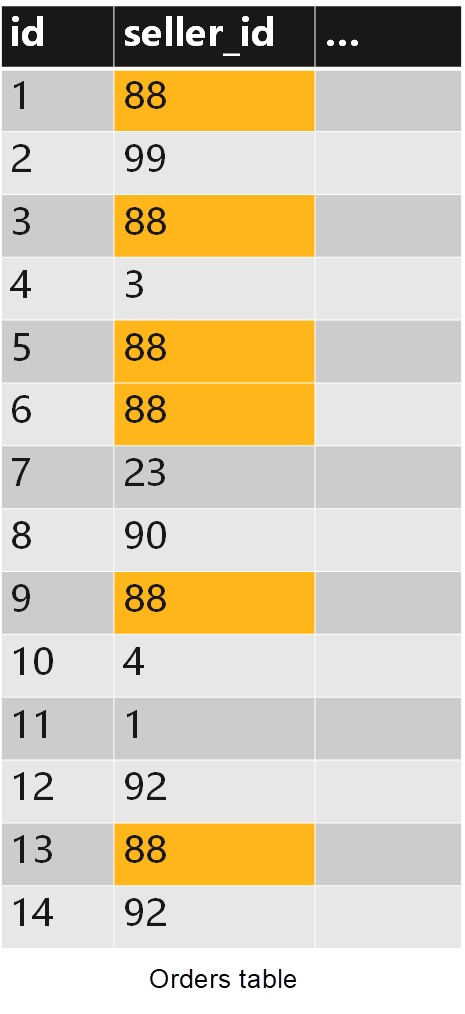

The hot value here refers to a large number of rows or writes of a key value. For example, the following is an orders table of a typical e-commerce system:

create table orders (

id bigint,

buyer_id varchar(128) comment 'buyer',

seller_id varchar(128) comment 'seller',

primary key(id),

index sdx(seller_id),

index bdx(buyer_id)

)Data is as follows:

Let's focus on the global index of sdx.

This field indicates the seller ID. We can imagine that there are generally some big sellers in the e-commerce system, such as Tmall Supermarket which is a big seller in Taobao. This kind of big seller will generate a lot of orders. As shown in the above figure, the global index of sdx has a lot of data with seller_id=88, which is much higher than that of ordinary sellers.

This kind of data is the hot value we discuss here.

Note: The hot value can be easily interpreted as a hotspot of data volume. However, in actual production systems, the amount of data itself often does not become a bottleneck (in this case, no matter how much data a single seller has, it is rare to exceed the magnitude of T). More commonly, the write volume becomes hot data. For example, a single seller generates a write TPS that is much greater than the capacity of a single DN. This is a typical scenario in which the write volume of orders at 00:00 on Double 11 is generated.

Let's take a look at how range and hash in PolarDB-X process hot data.

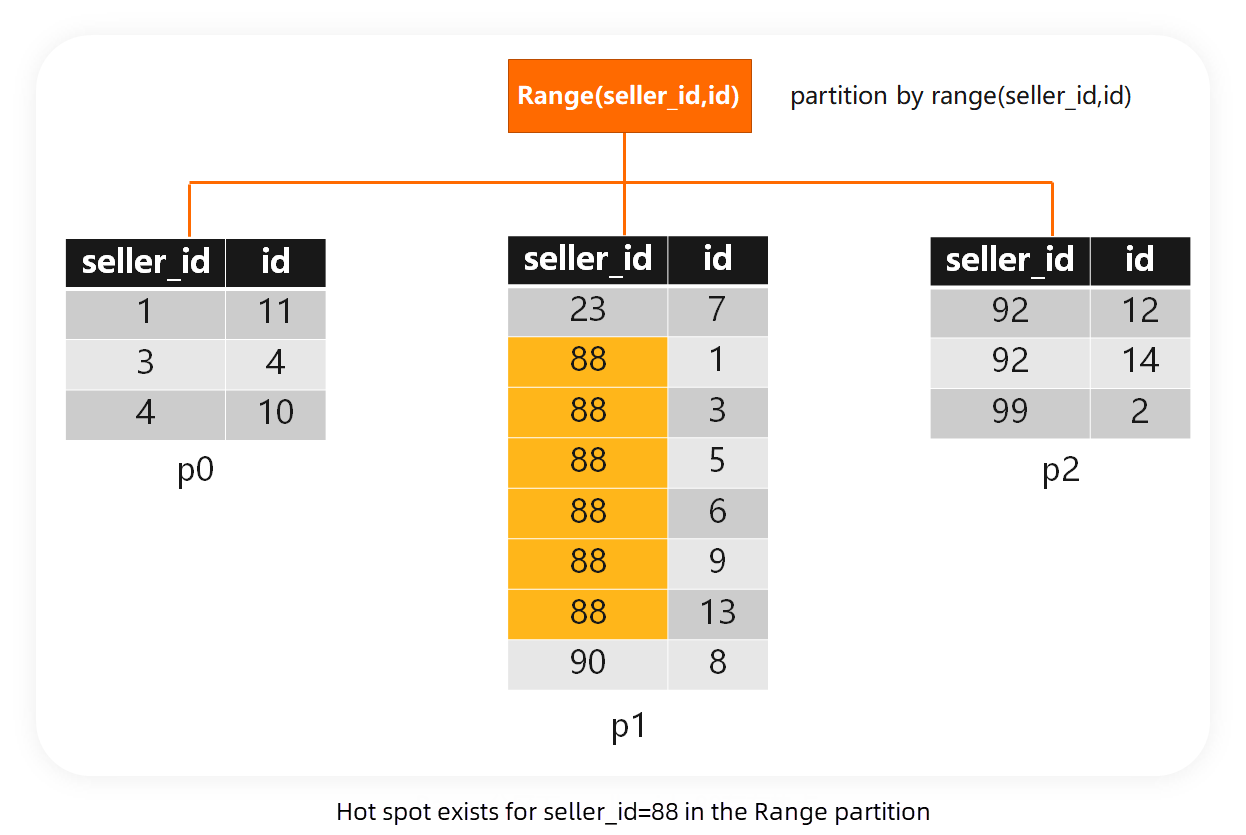

According to regular practice, we use range to partition the index, and the index key together with the primary key as the partition key. For this example, that is partition by range(seller_id,id). We will explain the reason later.

In this example, the data is ordered by (seller_id, id) and distributed as follows:

When p1 is found to have hot data, we can split the p1 partition:

Records with seller_id=88 are distributed in two partitions. The benefit is that the data is scattered, and the two partitions can be scheduled to different DNs to jointly share the write load and storage space. However, for the query of seller_id, you also need to query both shards at the same time. In this way, the write capacity and the upper limit of storage space are expanded, but the cost of reading is increased.

For large sellers like seller_id=88, this cost is acceptable. However, for ordinary small sellers, we still want to distribute their data in one partition as much as possible. It can be guaranteed with the features of range.

Here you can find the features of range to handle hot data:

Additional operations are not required, and the features of range (range queries, prefix matching, and splitting) naturally eliminate the hot data.

For this reason, we will put the primary key together as part of the index partition key at the end (for TiDB, CockroachDB, and other distributed KV-based data, this is also used to implement non-unique keys).

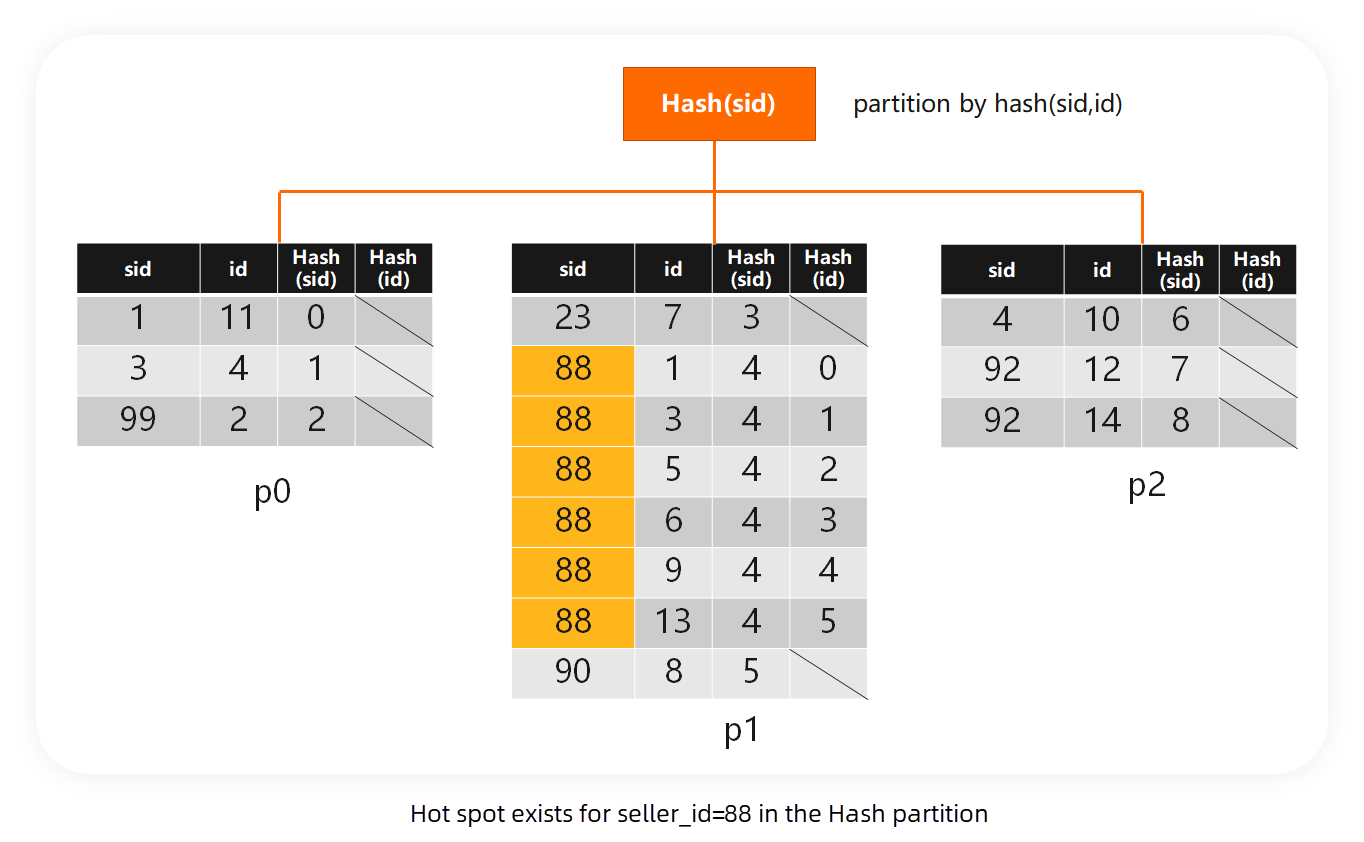

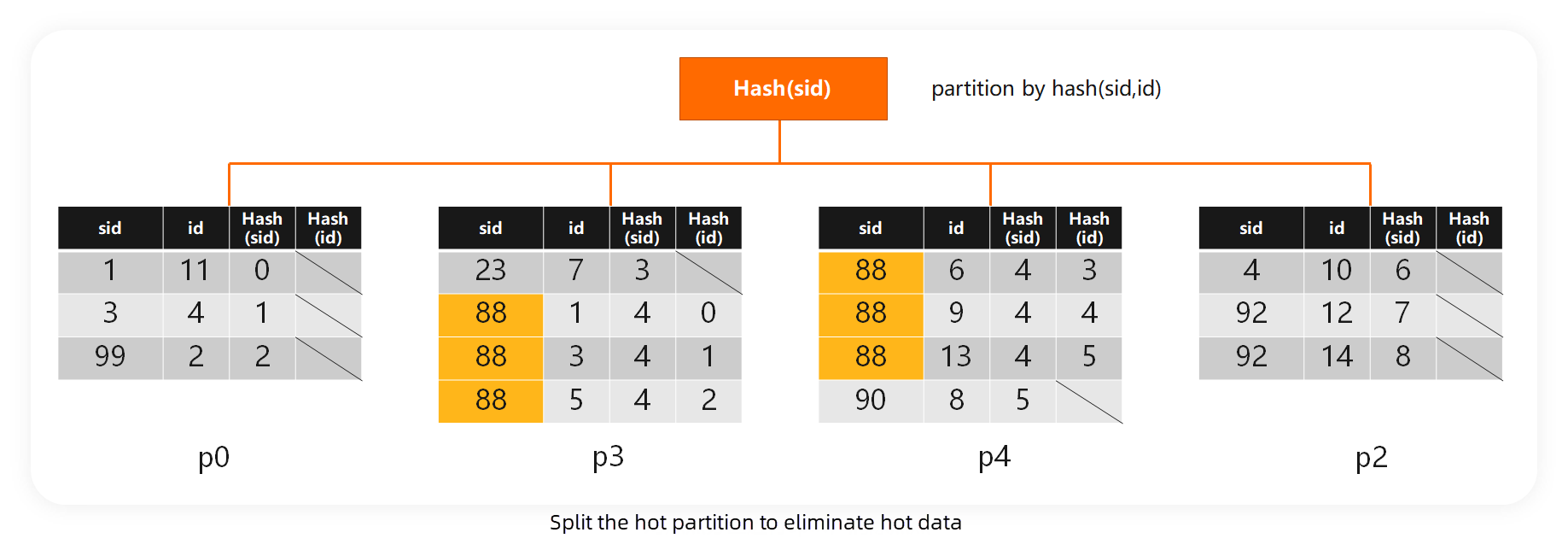

Similar to a range-partitioned index, if we use a hash-partitioned index, the index key and primary key are also used together as the partition key. In this example, that is partition by hash(seller_id,id).

In this example, the data is ordered by (hash(seller_id), hash(id)) and distributed as follows:

In the example, the data with seller_id=88 is concentrated in the partition p1 which is an obvious hot partition compared to other partitions.

Similar to the range partitioning, when p1 is found to have hot data, we can split the p1 partition:

It can be seen that the processing results of hot data in PolarDB-X hash partitioning are similar to those of range partitioning, and it is also possible to divide only the data of big sellers without affecting the data of small sellers.

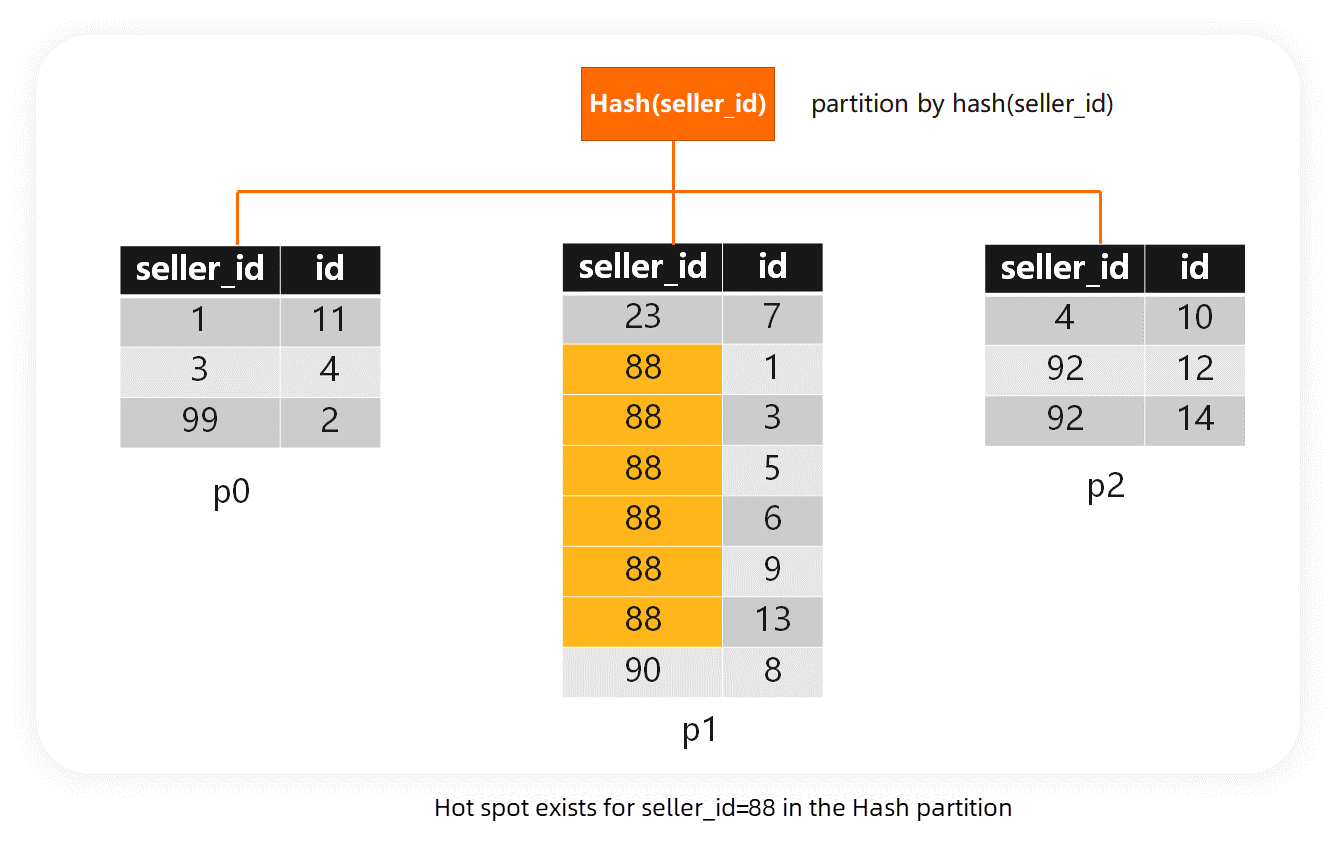

As described in the preceding section, for non-PolarDB-X databases, most hash partitioning with multiple columns does not support prefix queries. Therefore, you cannot split partitions as easily as PolarDB-X to divide hot data. Such databases generally use subpartitions to partially solve such problems.

Different from PolarDB-X using (seller_id, id) as the partition key, because prefix queries are not supported, only seller_id can be selected as the partition key, that is, partition by hash(seller_id). In this example, the data is distributed as follows:

In the example, the data with seller_id=88 is concentrated in the partition p1 which is an obvious hot partition compared to other partitions.

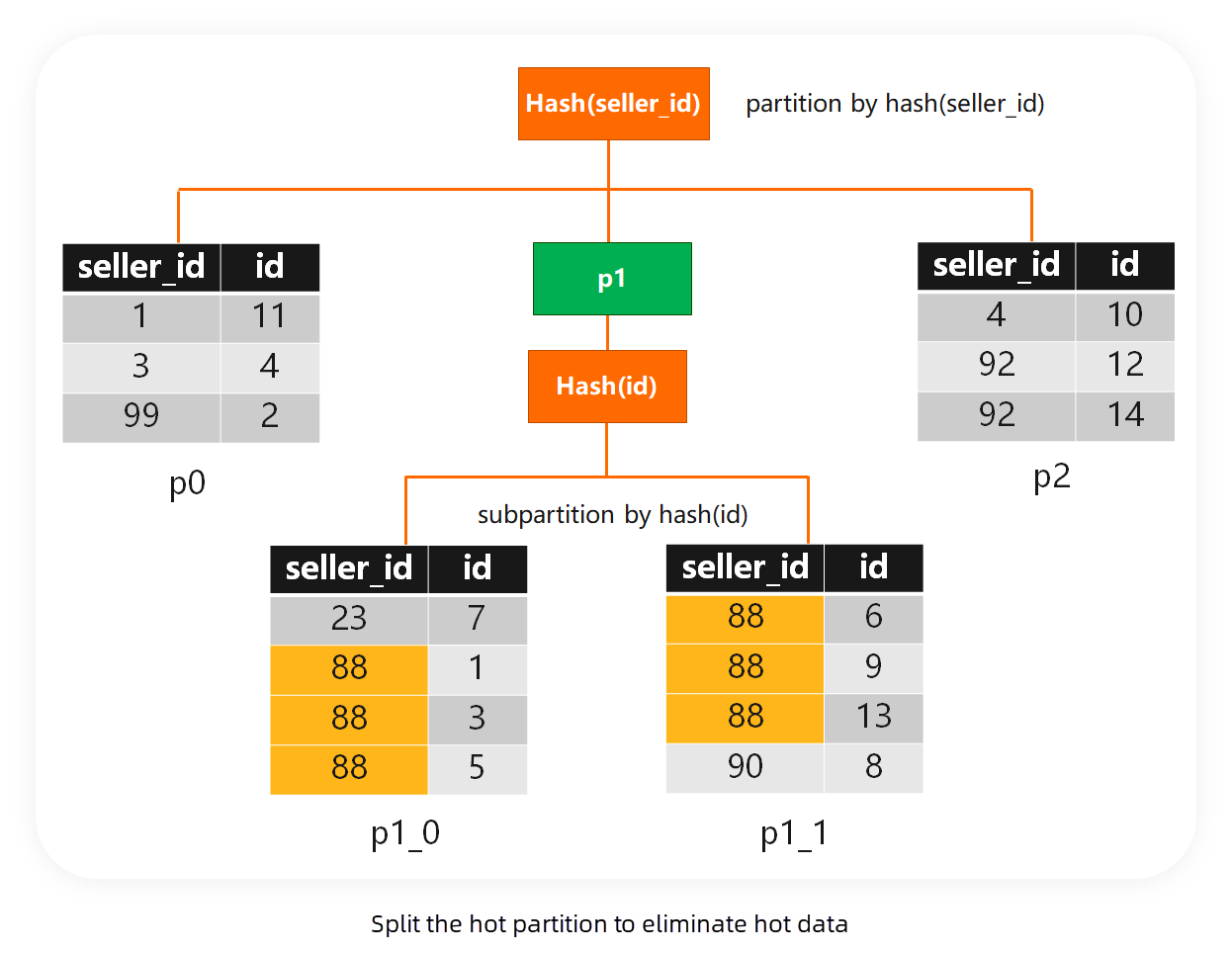

In other databases, the typical method to deal with such problems is to create subpartitions in this partition according to other fields (usually primary key fields), as shown in the figure:

In this way, p1 becomes two partitions, p1_0 and p1_1, and the data volume of each partition reaches a balanced state again, without hot data.

Note: To avoid the read amplification problem that will affect small sellers, we must create subpartitions in specific hot partitions instead of all partitions. This is commonly referred to as a non-templated subpartition.

Therefore, if the hash partitioning of a database does not support prefix queries, whether it can solve some hot issues depends on whether it has the non-template subpartition capability.

This is a common issue unique to range partitioning.

For example, when the generated primary key has a certain level of monotonicity and continuous INSERT operations occur, write requests will be concentrated on the last shard.

This scenario is very common, and a typical solution is to scatter the generated primary keys. For instance, in TiDB, the SHARD_ROW_ID_BITS randomly changes the initial values of the generated sequence, effectively eliminating the monotonous nature of AUTO_INCREMENT.

Another interesting example is with Date and Datetime.

For example, we have a log table that is partitioned by range:

CREATE TABLE log(

id int primary key,

c1 datetime,

c2 date,

index idx1(c1),

index idx2(c2)

)When we keep writing records to this table, we will find that there will be hot data of writes on idx1, but not on idx2. Why?

Since c2 is accurate only to the date, the c2 values of the data written before and after are the same, so it is not monotone. In this case, the records with c2="2021-08-11" become hot data. However, as the data continues to be written, the partition to which the data with c2="2021-08-11" belong will be split as described in the previous section, which means that the data is divided based on ID so the hot data is no longer hot.

However, c1 is accurate to milliseconds or even higher, and the c1 values of the data written before and after are different and increasing. Therefore, hot data will appear on c1, and these hot data will keep hot. The last partition will be hot even if the data is divided.

Range partitioning has a significant advantage over hash partitioning in range queries. However, to achieve this advantage, range partitioning cannot pre-partition data and relies heavily on automatic scheduling, which continuously splits and merges data as it changes. In your database system, range partitioning operations occur dynamically and unpredictably, making it challenging to ensure stability.

Scheduling depends on multiple variables, such as data volume, read/write volume, I/O, CPU, and network. We perform stress tests before Double 11, but these variables are difficult to replicate during the actual event. It's challenging to predict the scheduler's behavior, and you can only trust that it's correct. As a DBA or application developer, you hope that the database's running state during critical moments is the same as during stress tests.

Hash supports pre-partitioning and has a stronger certainty, which ensures that data distribution remains relatively static on systems with heavy stability. At the same time, PolarDB-X's hash partitioning is also suitable for handling hot issues.

PolarDB-X was designed for the core scenarios of Taobao, such as e-commerce and transactions, emphasizing stability and certainty. Therefore, hash is selected as the default partitioning method. We recommend using range partitioning in scenarios involving a large number of range queries.

Try out database products for free:

Interpretation of PolarDB-X Data Distribution (3): TPC-C and Transparent Distribution

ApsaraDB - August 15, 2024

ApsaraDB - August 9, 2024

ApsaraDB - August 13, 2024

ApsaraDB - August 29, 2024

ApsaraDB - November 8, 2024

ApsaraDB - April 20, 2023

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More LedgerDB

LedgerDB

A ledger database that provides powerful data audit capabilities.

Learn MoreMore Posts by ApsaraDB