By Mengshi

Recently, there has been a lot of buzz in the field of distributed databases, with many people paying close attention to the test results of TPC-C.

We first need to understand what kind of evaluation we can derive for this distributed database from the test results of TPC-C.

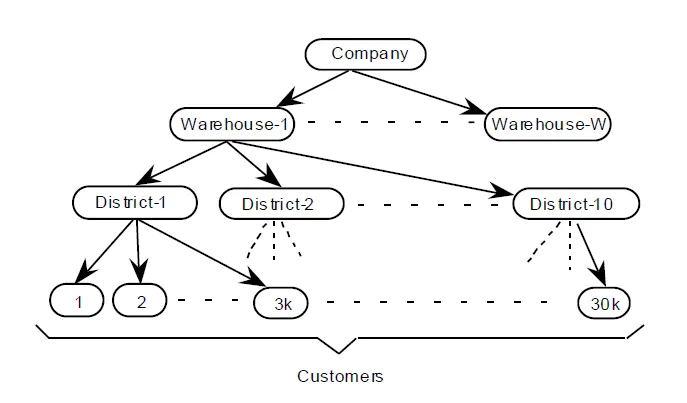

The business scenario of TPC-C is very simple. In this article, we will focus on several important concepts:

Based on such business features, it is easy to think of sharding the database according to the ID of the warehouse (wid) during the design of the database. This sharding ensures that most transactions can be completed within a single shard.

For example, if we have a TPC-C test report like this:

Transaction Mix Percent Number

New-Order 45%

Payment 43%

We can conduct the following interpretation:

It can be seen that in the TPC-C, the standalone transactions contribute the most to the tpmC indicator. However, these standalone transactions do not reflect some problems inherent to distributed systems. For example, data transmission between nodes is not required, and transaction state coordination is not necessary.

The first conclusion is that TPC-C can represent a database's standalone performance, but it cannot reflect a distributed database's distributed transaction capability, including performance, extensibility, and even consistency, which cannot be verified in some non-strict tests.

If distributed transactions are strictly controlled like TPC-C, what does this mean for business?

That is, the partition key must always be considered in the business. Only when the business can draw a uniform partition key can distributed transactions be minimized.

No matter how "advanced" a distributed database claims to be, it is essentially a "pseudo" distributed database as long as partition keys are required. There is no significant difference between using such a database and using database-sharding and table-sharding middleware + standalone database.

In fact, except for the 11% distributed transactions that cannot be implemented by database-sharding and table-sharding middleware + standalone database, other TPC-C indicators such as performance and extensibility can be well performed. As long as enough machines are added, a very high tpmC can be achieved, and the process will be very linear. The scheme of database-sharding and table-sharding middleware + standalone database is not suitable due to the 11% distributed transactions.

The second conclusion is that if your business uses a distributed database as middleware to "carefully and well design the partition key of each table to avoid distributed transactions", then the tpmC carried by each machine (450,000 in the above example) is a good reference, while the total tpmC of the cluster (100,000,000 in the above example) has no practical significance for reference.

If you want to view the tpmC of standalone databases, we can compare it with some traditional standalone databases. Let's look at some data.

• Data 1:

According to an official test on PostgreSQL 11, with a similar machine configuration, 1,030,000 tpmC can be achieved.

• Data 2:

The TPC-C official list contains a lot of data from standalone databases, and the tpmC is generally above the million level (even for CPU more than a decade ago).

It is a huge number (100 million tpmC). However, compared with the size of the machine, it does not seem to have any advantage over the scheme of middleware + standalone database. Let alone, to improve tpmC, stored procedures are usually used to encapsulate TPC-C transactions, which is too intrusive for common business and is rarely used.

You may say that there are 11% distributed transactions, which cannot be solved by the middleware + standalone database sheme.

The problem here is that these 11% distributed transactions must represent strong dependence on partition keys. I think, most businesses are unwilling to always focus on the partition key since it is quite difficult to choose an appropriate partition key.

Admittedly, TPC-C is very, very friendly to choose a partition key, and every primary (ITEMWAREHOUSECUSTOMERORDER) table can be partitioned by WAREHOUSE ID, but in actual business, the situation is much more complicated. For example:

Naturally, we want to know how we can deal with the partition key more efficiently.

The broad concept of partition key is not unique to distributed databases.

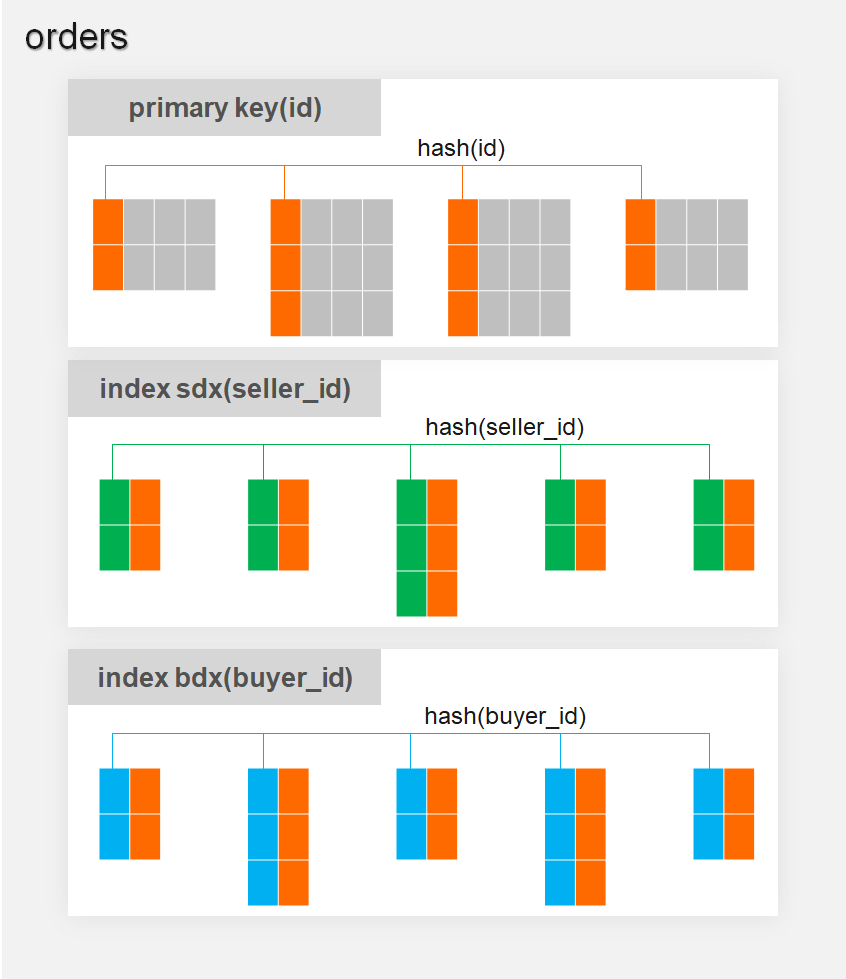

In a standalone database, such as MySQL, data is stored as B-trees. If a table has only one primary key, it will only have one B-tree. For example:

CREATE TABLE orders(

id int,

seller_id int,

buyer_id int,

primary key (id)

);The unique B-tree of this table is sorted by the primary key (id). If our query condition contains an equivalent condition with an ID, such as where id=1, then we can quickly locate the record corresponding to this ID in the tree. Otherwise, a full table scan is required.

The key used for sorting in the B-tree can be located to a leaf node by using a binary search, and the partition key can be located to a partition by using a hash or range binary search. It can be seen that they are all designed to locate data quickly.

If we want to query the above table with where seller_id=1, in MySQL, we don't need to set seller_id as the primary key. A more natural way is to create a secondary index on seller_id:

CREATE INDEX idx_seller_id ON orders (seller_id);Each secondary index is an independent B-tree in MySQL, and its key used for sorting is the column of the secondary index.

In other words, the current t1 table has two B-trees, one primary key and the other idx_seller_id, as follows:

id->seller_id,buyer_id

seller_id->idWhen where seller_id=1 is used for query, the B-tree of idx_seller_id will be accessed first, and the leaf node will be located according to seller_id=1 to obtain the id value. Then, the id value will be used to find the complete record on the B-tree of the primary key.

In fact, the secondary index gains query performance by redundant data, using space and increasing the cost of writing.

At the same time, the cost of maintaining secondary indexes is not very high, so you can create several secondary indexes on a table without worries.

Similarly, in a distributed database, you can use a distributed secondary index, also known as a global index, to prevent partition keys. This global index needs to be efficient, inexpensive, and highly compatible with traditional secondary indexes.

Global Indexes have been described in Interpretation of PolarDB-X Data Distribution (1).

A global index consists of a set of homogeneous partitions. It contains two types of fields: key field and covering field. The key field is an index field, while the covering field includes all the other fields. A global index has two attributes: partition key and partition algorithm. The partition key is the key field of the global index. For queries of the key field, compute nodes can directly locate the corresponding partition.

The global secondary index is also a type of data redundancy. For example, when an SQL statement is executed:

INSERT INTO orders (id,seller_id,buyer_id) VALUES (1,99,1000);If the orders table has the global secondary index seller_id, it can be simply understood that we will execute this insert to the two global indexes the primary key and seller_id respectively, with a total of two write records:

INSERT INTO orders (id,seller_id,buyer_id) VALUES (1,99,1000);

INSERT INTO idx_seller_id (id,seller_id) VALUES (1,99);In the orders table, the partition key of the primary key is id, while the partition key of the idx_seller_id is seller_id.

In addition, since the two records are not likely to be on the same DN, we need to encapsulate the two writes into a distributed transaction to ensure consistency (it is similar to writing the secondary index through a standalone transaction in the standalone database).

When all our DML operations can maintain global indexes through distributed transactions, the secondary indexes and primary key indexes can always be in a consistent state.

It seems that the global index is very simple.

In fact, for a global index that can be widely used, all the following conditions are necessary, otherwise, it can only be useless.

Indexes must be strongly consistent. For example:

• If the writes fail in the index table but succeed in the primary table, it will result in a data shortage in the index table.

• If you read the index table and the primary table at the same time, the records you view should be the same, that is, you cannot read the submitted results in one table while reading the unsubmitted results in the other table.

The consistency requirements for indexes here are actually the requirements for distributed transactions.

Due to the introduction of global indexes, 100% of transactions will be distributed transactions, and the requirements for distributed transactions are completely different from distributed databases that rely heavily on partition keys. The requirements become higher:

In a standalone database, indexes have some seemingly natural behaviors that need to be compatible.

Examples:

• Indexes can be created directly through DDL statements instead of various peripheral tools.

• Prefix queries. In a standalone database, indexes can well support prefix queries. How should global indexes solve such problems?

• Hot issues (Big Key issues). In a standalone database, if an index is not highly selective (for example, an index is created based on gender), there will be no serious problems except a slight waste of resources. However, for a distributed database, the index with low selectivity will become hot, causing some hot nodes in the entire cluster to become the bottleneck of the entire system. Global indexes need to have corresponding methods to solve such problems.

We know that the core working mechanism of the database optimizer is:

For example, three tables are involved in an SQL statement and only the left deep tree is considered:

• When there is no global index, it can be simply understood that the space of the execution plan is mainly reflected in the JSON order of the three tables, and the space size is approximately 3x2x1=6. The space for execution plans is relatively small, and it will be much easier for the optimizer to determine the cost of these six execution plans. (Of course, the optimizer still has a lot of work to do, such as partition pruning. These optimizations should be performed with or without indexes, so they will not be discussed in detail).

• When there is a global index, the situation is much more complicated. Assuming that each table has three global indexes, the size of the execution plan space will roughly become (3x3)x(2x3)x(1x3)=162, and the complexity will rise sharply. Accordingly, the requirements for the optimizer will be much higher. The optimizer needs to consider more statistics to select a better execution plan, and more pruning is required to complete query optimization in a shorter time.

Therefore, we can see that in distributed databases or some middleware products without global indexes, the optimizers are very weak, and most of them are RBOs. They do not need a powerful optimizer at all, and more optimization content is replaced by the standalone optimizer.

The speed of creating an index, the performance of index lookup, the functional limitations of indexes, clustered indexes, and the storage cost of indexes all greatly affect the use experience of global indexes. For space reasons, we will not continue to expand here.

These requirements for global indexes are essentially derived from the number of global indexes.

For databases with good transparency, all indexes will be global indexes, and the number of global indexes will be very large (just like the number of secondary indexes of one table and one database in a standalone database). The requirements will be higher only when the quantity is more.

However, even if there is a global index, you will find that the usage of these incomplete distributed databases is still strongly dependent on the partition key.

They will make it optional and special to create a global index. As a result, it will be careful in businesses using global indexes. Naturally, the number of global indexes becomes very limited.

When the number of global indexes and their usage scenarios are strictly limited, the above disadvantages become less important.

PolarDB-X implements excellent distributed transactions and global indexes. It meets the requirements for global indexes mentioned above and achieves transparent distribution.

In the transparent distribution mode (mode='auto' is specified in CREATE DATABASE), all indexes are global indexes, and the application does not need to care about the partition key.

For example, our table creation statement is completely consistent with that of a standalone MySQL instance, and it does not need to specify a partition key:

create table orders (

id bigint,

buyer_id varchar(128) comment 'buyer',

seller_id varchar(128) comment 'seller',

primary key(id),

index sdx(seller_id),

index bdx(buyer_id)

)

The experience of creating a global index is the same as that of creating a secondary index on a standalone MySQL. The whole process is online:

CREATE INDEX idx_seller_id ON orders (seller_id);The global index of PolarDB-X is strongly consistent, and its data consistency experience is not significantly different from that of standalone MySQL. It provides an isolation level between RC and RR that conforms to MySQL semantics.

At the same time, PolarDB-X has also done a lot of work on index maintenance and optimizers to ensure that the index can be created and maintained efficiently and that the optimizer can correctly generate execution plans that use indexes.

The PolarDB-X partitioning algorithm can also handle issues such as hot data and data skew in indexes. For more information, see Interpretation of PolarDB-X Data Distribution (2): Hash vs. Range.

• The business logic of TPC-C aligns perfectly with the architecture of database sharding and table sharding, with 90% of transactions being standalone transactions. When it comes to TPC-C test results, we recommend focusing more on the average tpmC of each machine.

• Only databases that utilize global indexes can achieve true transparency. Databases without global indexes are no different from database sharding and table sharding, requiring manual design of partition keys.

• Writing global indexes requires distributed transactions, which demand high performance and consistency. In a transparent distributed database, 100% of transactions are distributed transactions.

• The number of global indexes is crucial. In a transparent distributed database, all indexes are global, and the number will be substantial.

Distributed databases that require manual partition key specification were popular five or six years ago. However, in modern times, transparent distribution is a capability that a qualified distributed database must offer.

It can significantly reduce application migration costs. For instance, you don't need to modify the SQL files in the application to include partition keys or design a partition key for each table. At the same time, it can also greatly reduce the learning curve for developers, allowing them to focus on meeting business requirements. For example, they can leverage their SQL optimization experience from standalone databases to improve SQL execution performance by adding indexes.

Lastly, is transparency always the best option? In the next article in this series, we'll explore the trade-offs between transparent and manual distribution, and how PolarDB-X makes its choices. Stay tuned for the updates!

Try out database products for free:

Interpretation of PolarDB-X Data Distribution (2): Hash vs. Range

Interpretation of PolarDB-X Data Distribution (4): Transparent vs. Manual

ApsaraDB - August 15, 2024

ApsaraDB - August 23, 2024

ApsaraDB - September 11, 2024

ApsaraDB - July 7, 2022

ApsaraDB - August 13, 2024

ApsaraDB - August 9, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More LedgerDB

LedgerDB

A ledger database that provides powerful data audit capabilities.

Learn MoreMore Posts by ApsaraDB