We are glad to introduce PolarDB-X kernel V5.4.14. PolarDB-X is an enterprise-level product, and in this latest version we continue to strengthen data manageability so that the product better meets the demands of enterprises.

For distributed databases, managing massive amounts of data is a meticulous task. Beyond providing all the required capabilities, an important factor when customers choose a database service is how fine-grained its data management can be. The depth of manageability also directly affects the cost of using the database. The new version of PolarDB-X also provides more powerful SQL capabilities and better MySQL compatibility.

To serve our users better, PolarDB-X kernel V5.4.14 introduces hot and cold data separation, data locality, data hotspot diagnosis, parallel DML optimization, flashback query, and AUTO_INCREMENT compatibility. These features greatly improve data manageability, SQL processing capability, and compatibility, and they give users more O&M methods for resolving data hotspot issues.

Together, these features give users a more professional experience and move PolarDB-X closer to being a mature cloud-native distributed database. Compared with previous versions, this release also fixes 16 issues and incorporates 24 feature enhancements. We will continue to sync the features of the new version to the open-source community.

Data manageability is an important capability of a database. We have summarized feedback from users and the market, which centers on "controlling the specific storage location of data", "managing data differently according to how often it is accessed", and "intuitive management of data distribution". PolarDB-X V5.4.14 strengthens each of these areas, starting at the kernel level.

Let's start with an example. A database may have large amounts of data written and updated every day, yet usually only recent data, such as data from the past month or even the past week, is frequently updated and accessed. The rest of the data sits idle on disk, wasting storage space and increasing the cost of database maintenance. A common workaround is to keep "hot" data on high-performance storage devices to handle frequent daily writes and updates and meet users' transactional processing needs. "Cold" data is then migrated to low-cost storage devices to relieve the maintenance pressure on hot data, while it still needs to remain queryable and partially modifiable.

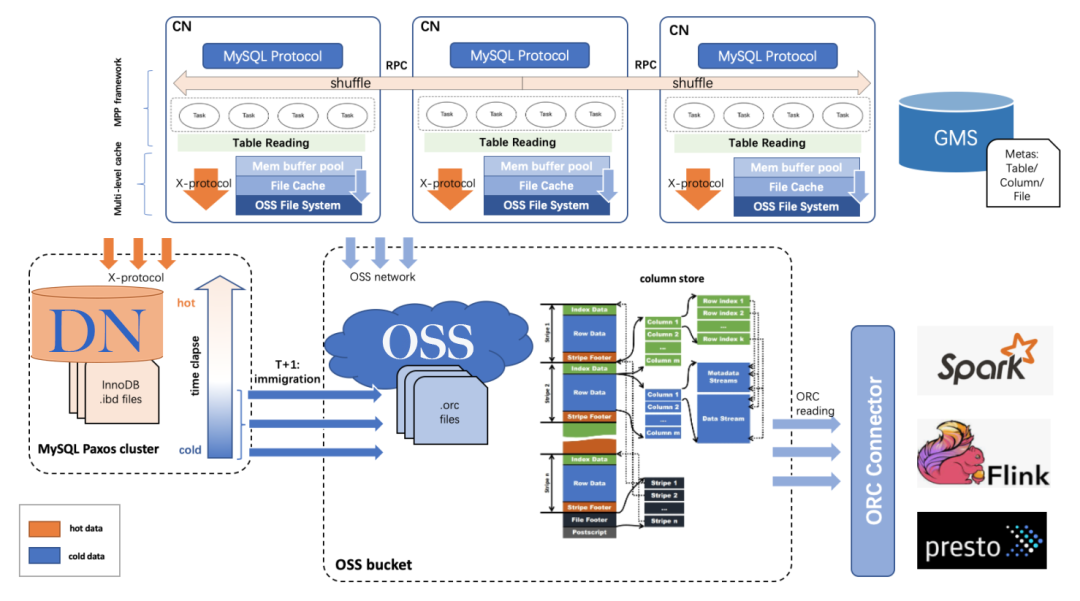

In PolarDB-X V5.4.14, we introduce hot and cold data separation based on Alibaba Cloud Object Storage Service (OSS). With this feature, you can separate cold data from a source table and archive it to OSS at a lower cost, forming an archive table. The archive table supports efficient point queries on primary keys and indexes as well as complex analytical queries, and it meets requirements for high availability, MySQL compatibility, and point-in-time flashback. You can access the archive table in the same way as a MySQL table.
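The idea of splitting a table into an online part and an archive part, while still answering point queries against both, can be pictured with a minimal Python sketch. It assumes a simple age-based rule (rows not updated in the last 30 days count as cold) and uses in-memory dicts as stand-ins for the online table and the OSS archive table. `split_hot_cold`, `point_lookup`, and the 30-day threshold are hypothetical illustrations, not PolarDB-X interfaces.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Row:
    id: int
    updated: date
    payload: str


def split_hot_cold(rows, today, hot_days=30):
    """Rows updated within hot_days stay online; older rows go to the archive."""
    cutoff = today - timedelta(days=hot_days)
    hot = [r for r in rows if r.updated >= cutoff]
    cold = [r for r in rows if r.updated < cutoff]
    return hot, cold


def point_lookup(pk, online, archive):
    """Primary-key point query that checks the online table first, then the archive."""
    if pk in online:
        return online[pk]
    return archive.get(pk)


rows = [
    Row(1, date(2024, 6, 25), "recent order"),
    Row(2, date(2024, 1, 10), "old order"),
]
hot, cold = split_hot_cold(rows, today=date(2024, 6, 30))
online = {r.id: r for r in hot}     # stand-in for the online table
archive = {r.id: r for r in cold}   # stand-in for the OSS archive table
print(point_lookup(2, online, archive).payload)  # old order
```

The caller sees one lookup function regardless of where a row lives, which mirrors the goal of letting the archive table be queried like an ordinary table.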

Hot and cold data separation in PolarDB-X takes full advantage of the low cost and large capacity of OSS. Cold data is quickly and efficiently separated from the online database, which reduces the maintenance pressure on online data, shrinks the size and cost of the online database, and lowers the storage cost of the full data set. At the same time, the archived data remains accessible through MySQL-compatible methods, performs well for both point queries and analytical queries, and can be accessed by big data products.

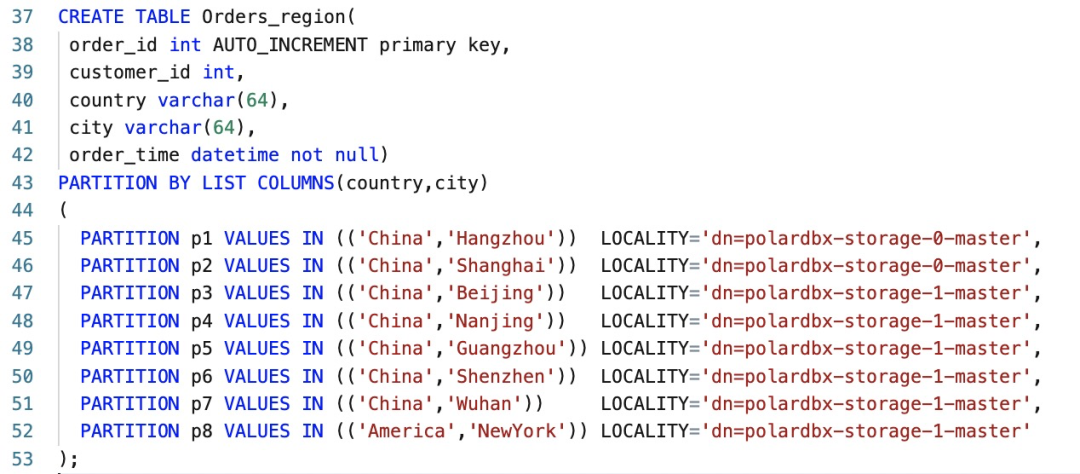

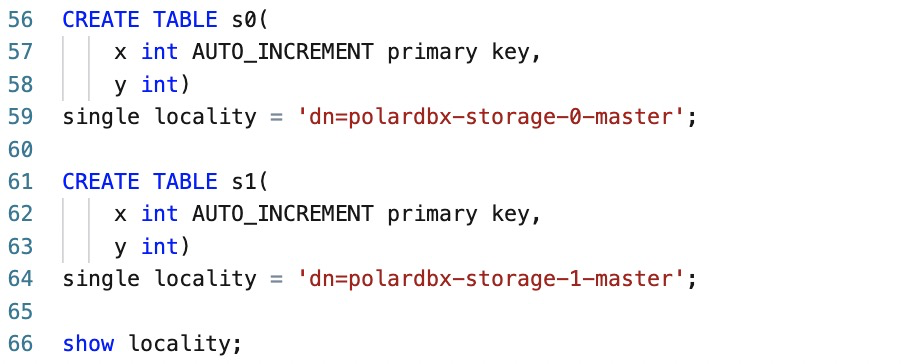

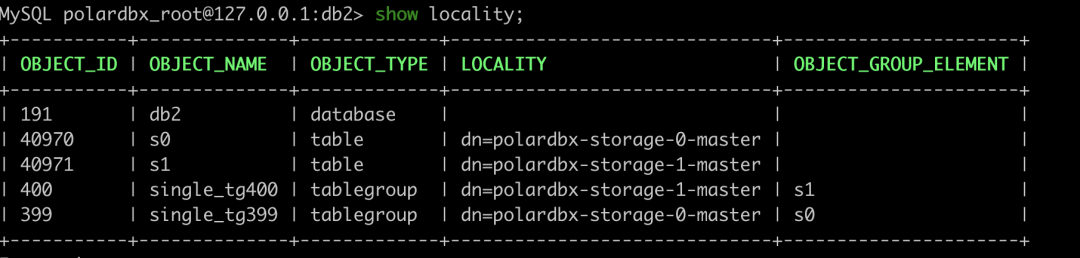

In a distributed data architecture, data is scattered across many nodes. From a business perspective, however, data often needs to be kept together in specific locations. How can you specify where data is stored? PolarDB-X V5.4.14 addresses this problem with data locality. Rather than relying on a multi-tenant mechanism, it defines isolation at the partition level: by specifying partition-level locality, you can place different partitions of the same logical table on different storage nodes. Locality currently supports all three partitioning modes: RANGE, HASH, and LIST.
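To make the interaction between partitioning and locality concrete, here is a minimal Python sketch under stated assumptions. A key is first routed to a partition by one of the three modes, and a per-partition locality map then decides which storage node holds that partition. The node names (`dn-1` and so on), the partition names, and the use of CRC32 for HASH partitioning are all hypothetical; PolarDB-X's actual hash function and metadata layout are not described here.

```python
import bisect
import zlib

# Hypothetical locality map: partition name -> storage node that holds it.
LOCALITY = {"p0": "dn-1", "p1": "dn-2", "p2": "dn-3"}
NAMES = ["p0", "p1", "p2"]


def range_partition(key, upper_bounds, names=NAMES):
    """RANGE: first partition whose exclusive upper bound is greater than key."""
    i = bisect.bisect_right(upper_bounds, key)
    if i >= len(names):
        raise ValueError(f"no RANGE partition accepts {key!r}")
    return names[i]


def hash_partition(key, names=NAMES):
    """HASH: stable hash of the key modulo the partition count (CRC32 is an assumption)."""
    return names[zlib.crc32(str(key).encode()) % len(names)]


def list_partition(key, value_lists):
    """LIST: the partition whose explicit value list contains key."""
    for name, values in value_lists.items():
        if key in values:
            return name
    raise ValueError(f"no LIST partition accepts {key!r}")


def storage_node(partition):
    """Locality lookup: which storage node a partition is pinned to."""
    return LOCALITY[partition]


# Whichever partitioning mode is used, the locality map decides the node.
by_range = storage_node(range_partition(150, [100, 200, 300]))
by_list = storage_node(list_partition("US", {"p0": {"CN"}, "p1": {"EU", "UK"}, "p2": {"US"}}))
by_hash = storage_node(hash_partition(42))
print(by_range, by_list, by_hash)
```

Keeping the partitioning rule and the locality map separate is the point of the design: moving a partition to another node only changes the map, not the routing rule.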

Likewise, by specifying the locality of single (non-partitioned) tables, you can place them on different storage nodes to isolate their physical storage resources.

In a distributed data architecture, the ideal situation is that data and traffic are balanced across partitions, which makes the most of the processing capability of multiple nodes. To achieve this, the database needs to avoid hotspot partitions, both traffic hotspots and data-volume hotspots. Avoiding hotspots in turn requires finding them quickly and easily, so that they can be handled in a targeted way. Quickly and accurately locating hotspot partitions is therefore an important capability for a distributed database.

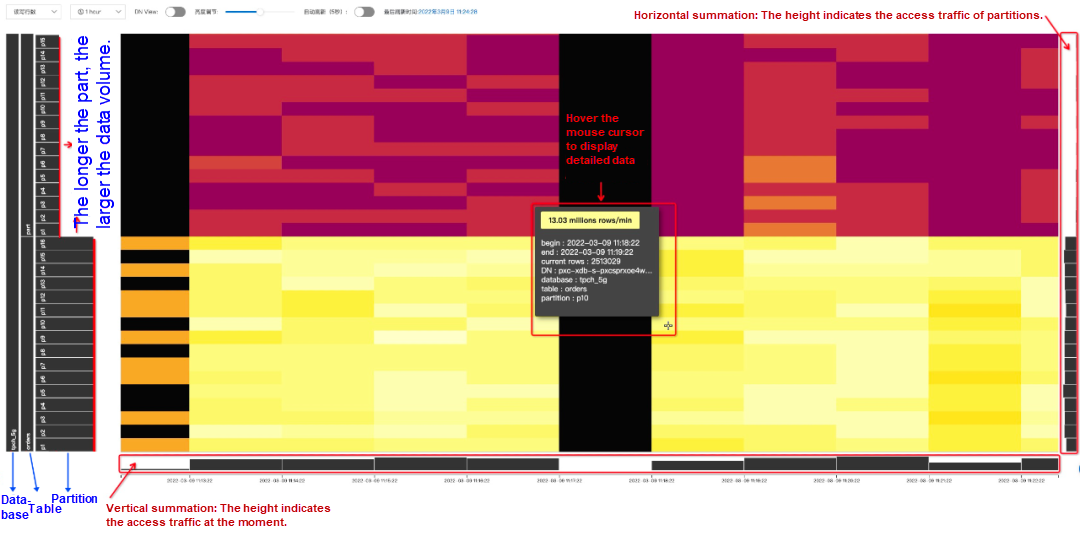

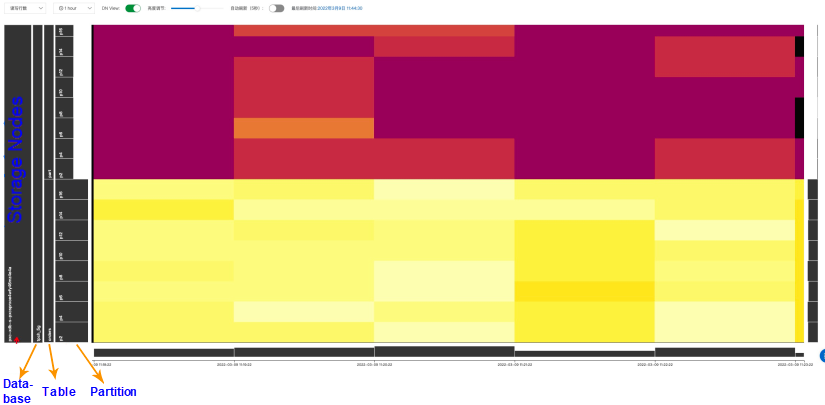

To introduce the feature, we first look at a small range of data, as shown in the following figure. The vertical axis shows the hierarchy of logical databases, logical tables, and logical partitions, with partitions sorted by their logical serial numbers. The horizontal axis represents time. The column charts below and to the right of the heatmap show summary data. The lower column chart is the vertical summation, that is, the total access traffic of all partitions at a given time. The column chart on the right is the horizontal summation, that is, the total access traffic of a given partition across the whole time range.
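The two summary charts are plain row and column sums over a partition-by-time traffic matrix. The following Python sketch shows that arithmetic on made-up numbers. The `hotspots` helper, which flags partitions whose total exceeds 1.5 times the mean, is an illustrative heuristic of our own and not the rule PolarDB-X uses.

```python
# heat[p][t]: access count of partition p during time slot t (made-up numbers).
heat = [
    [5, 3, 0, 2],
    [1, 1, 1, 1],
    [9, 8, 7, 9],
]


def vertical_sums(m):
    """Lower chart: total traffic of all partitions in each time slot."""
    return [sum(col) for col in zip(*m)]


def horizontal_sums(m):
    """Right chart: total traffic of each partition over all time slots."""
    return [sum(row) for row in m]


def hotspots(m, factor=1.5):
    """Indexes of partitions whose total exceeds factor x the mean (assumed threshold)."""
    totals = horizontal_sums(m)
    mean = sum(totals) / len(totals)
    return [i for i, t in enumerate(totals) if t > factor * mean]


print(vertical_sums(heat))    # [15, 12, 8, 12]
print(horizontal_sums(heat))  # [10, 4, 33]
print(hotspots(heat))         # [2]
```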

You can clearly and intuitively see whether data is balanced across physical storage nodes, how data is distributed among partitions, and whether hotspots exist on physical storage nodes.

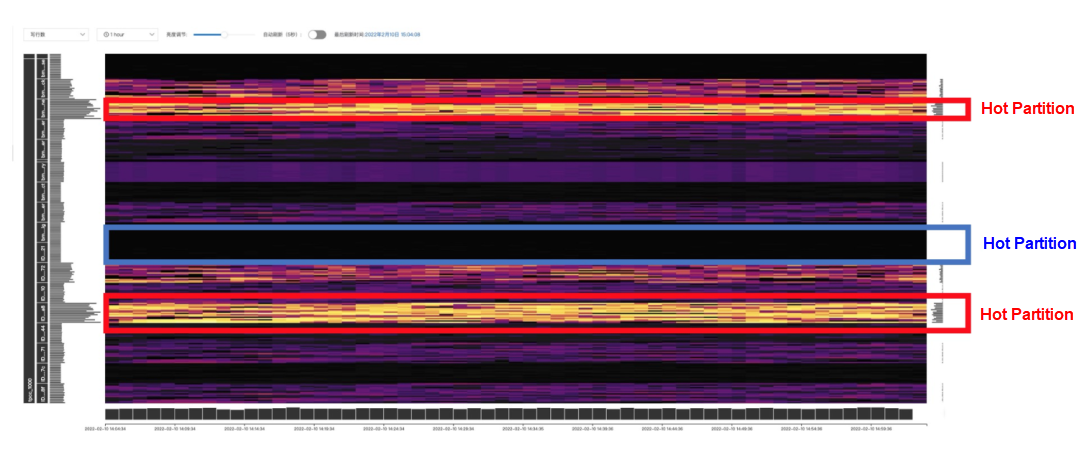

When we test with TPC-C traffic, a complete heat distribution appears. The following figure clearly shows two hotspot areas in the TPC-C traffic, and data-volume hotspots can also be identified by comparing the widths of the partitions along the vertical axis.

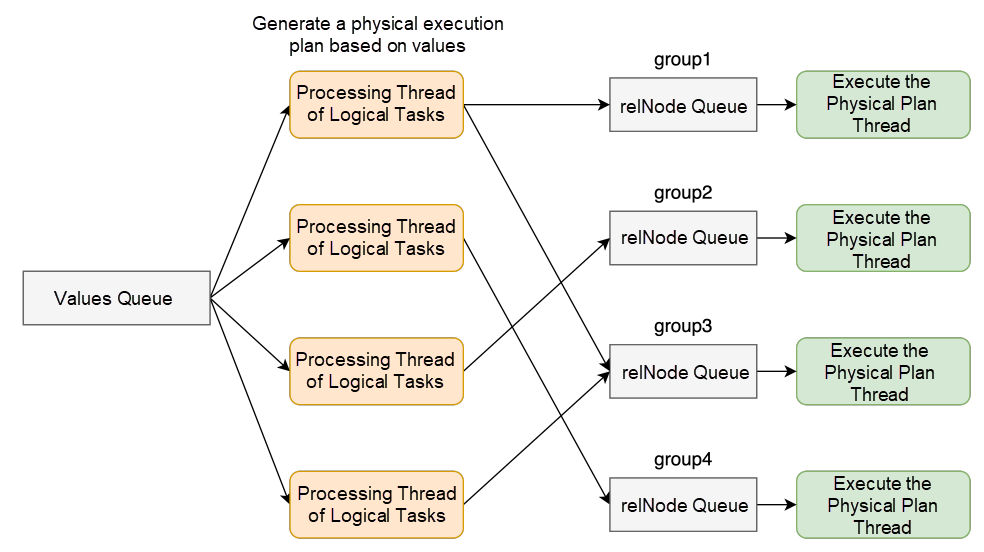

Distributed databases can use parallel computing to execute DML statements and large transactions, which is essential for running batch processing tasks efficiently. In the new version of PolarDB-X, multiple threads parse tasks and execute physical plans concurrently. Scheduling, which previously took place within a single logical task, is now shared across multiple logical tasks, improving execution efficiency.
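The shift from per-task scheduling to shared scheduling can be sketched in Python. In this sketch, each logical task expands into physical plans (one per shard), and the physical plans of all logical tasks are submitted to a single shared thread pool instead of being run one task at a time. `execute_physical_plan` is a hypothetical stand-in that just counts rows; in a real system each call would wait on a storage node, which is the kind of I/O-bound work where threads help. This illustrates the scheduling idea only and is not PolarDB-X's executor.

```python
from concurrent.futures import ThreadPoolExecutor


def execute_physical_plan(shard, rows):
    """Hypothetical stand-in for running one physical DML statement on one shard."""
    return len(rows)  # rows affected


def run_logical_tasks(tasks, workers=4):
    """Submit the physical plans of all logical tasks to one shared pool.

    tasks: {logical_task_name: [(shard, rows), ...]}
    Returns {logical_task_name: total rows affected}.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {
            name: [pool.submit(execute_physical_plan, shard, rows) for shard, rows in plans]
            for name, plans in tasks.items()
        }
        # result() blocks until each physical plan has finished.
        return {name: sum(f.result() for f in fs) for name, fs in futures.items()}


tasks = {
    "insert_orders": [("shard0", [1, 2, 3]), ("shard1", [4, 5])],
    "update_users": [("shard0", [7]), ("shard2", [8, 9])],
}
print(run_logical_tasks(tasks))  # {'insert_orders': 5, 'update_users': 3}
```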

After this optimization, the performance of large-scale data modification triggered by a single SQL statement roughly doubles. This makes the optimization especially suitable for large transactions and for data import and export scenarios.

In the IT industry, "delete the database and run away" has become a running joke among programmers. It reflects how important databases are to enterprises. Although intentional deletion is rare, accidental data deletion happens more often than one might think. A slip of the finger or a bug in released code can cause data to be deleted by mistake, and the effect is often destructive.

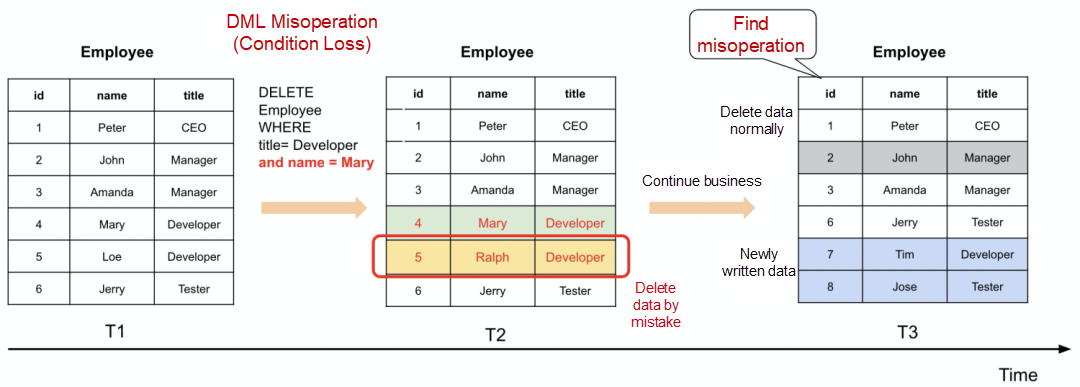

First, let's walk through an accident in which data is deleted by mistake.

The accident in the figure above unfolds as follows:

- T1: Xiaoming maintains a table that records employee information for a company.
- T2: Xiaoming needs to delete the records related to Mary, so he executes a DELETE statement. Unfortunately, he omits an AND condition, and the data of employee Ralph is deleted by accident.
- T3: Business continues. John's data is deleted, and Tim and Jose are inserted into the table. At this point, Xiaoming realizes that data was deleted by mistake and is eager to restore it.

How can PolarDB-X help careless Xiaoming? The new version of PolarDB-X offers the flashback query feature. In scenarios where rows are deleted or modified by mistake, it provides a fast way to recover from incorrect operations made within a recent period of time.

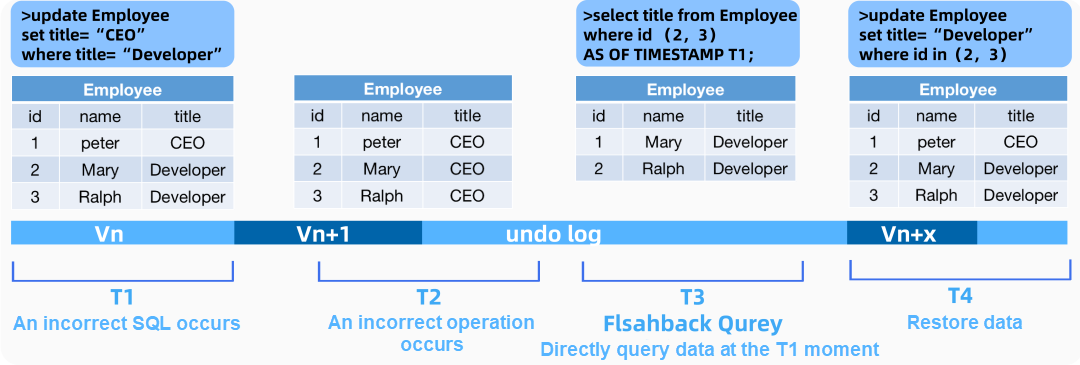

When an incorrect SQL statement runs, its changes are recorded in the undo log as version Vn+1. At T2, the incorrect modification is discovered, and the time of the operation and the range of affected data are determined. Flashback query then retrieves the correct values of the two affected rows as of T1, and the data is repaired using the values it returns.
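The core of a flashback read is finding, for each row, the newest version committed at or before the requested time. The following Python sketch models that lookup over a per-row version chain (oldest to newest) and then repairs the row with the value it returns. The classes, timestamps, and the employee record are made up for illustration; PolarDB-X's undo log format and flashback SQL syntax are not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class Version:
    commit_ts: int  # commit timestamp of this version
    value: object   # row image; None means the row was deleted


@dataclass
class RowHistory:
    versions: list = field(default_factory=list)  # ordered oldest -> newest

    def write(self, ts, value):
        self.versions.append(Version(ts, value))

    def as_of(self, ts):
        """Flashback read: value of the newest version committed at or before ts."""
        result = None
        for v in self.versions:
            if v.commit_ts > ts:
                break
            result = v.value
        return result


ralph = RowHistory()
ralph.write(1, {"name": "Ralph", "dept": "Sales"})  # made-up row, exists before T1
ralph.write(20, None)                               # the mistaken DELETE (version Vn+1)

T1 = 10
before = ralph.as_of(T1)  # flashback query for the correct value at T1
ralph.write(30, before)   # repair the row with the flashback result
print(before)             # {'name': 'Ralph', 'dept': 'Sales'}
```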

In distributed databases, a global sequence generates numbers that are globally unique and increase in order. These numbers are often used as values for columns such as primary key columns and unique index columns.

To stay compatible with MySQL auto-increment columns, the new version of PolarDB-X provides a new type of sequence that is globally unique, continuous, and monotonically increasing. By default, it generates the natural numbers starting from 1. If you define an AUTO_INCREMENT column when creating a table in a database in AUTO mode, a new sequence object is created automatically and associated with the table by default. During INSERT, this sequence automatically fills in the value of the auto-increment column.
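The guarantees described above (unique, continuous, monotonically increasing, starting from 1, filled in automatically on INSERT) can be illustrated with a small single-process Python model. `AutoIncrementSequence` and `insert_row` are hypothetical names. A lock makes each allocation atomic so concurrent inserts never receive the same value. A real distributed database must coordinate the counter across nodes, which this sketch does not attempt to show.

```python
import threading


class AutoIncrementSequence:
    """Hypothetical model of a continuous, monotonically increasing sequence."""

    def __init__(self, start=1):
        self._next = start
        self._lock = threading.Lock()

    def next_value(self):
        # The lock makes "read then increment" atomic, so no value is handed out twice.
        with self._lock:
            value = self._next
            self._next += 1
            return value


def insert_row(table, seq, row):
    """Fill the AUTO_INCREMENT column "id" from the sequence when INSERT omits it."""
    if row.get("id") is None:
        row = {**row, "id": seq.next_value()}
    table.append(row)
    return row["id"]


seq = AutoIncrementSequence()
table = []


def worker():
    for _ in range(100):
        insert_row(table, seq, {"name": "user"})


threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(r["id"] for r in table)[:5])  # [1, 2, 3, 4, 5]
```

Four concurrent writers inserting 100 rows each end up with exactly the ids 1 through 400, with no duplicates and no gaps.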

We continue to advance and improve our cloud-native distributed database. PolarDB-X kernel V5.4.14 is a small milestone and a new starting point. In upcoming versions, we plan to introduce technologies such as flexible decoupling of computing and storage and data table optimization, to give users an even better experience than previous versions.
