Check out the Part 1 here

After the increase in the cost of Internet traffic, the era of spending a lot of money on traffic has become history. Customer advertising and marketing are becoming more sophisticated, relying more on real-time and accurate analysis of existing customer data to improve the conversion rate of advertising. There are the following typical scenarios in terms of user marketing:

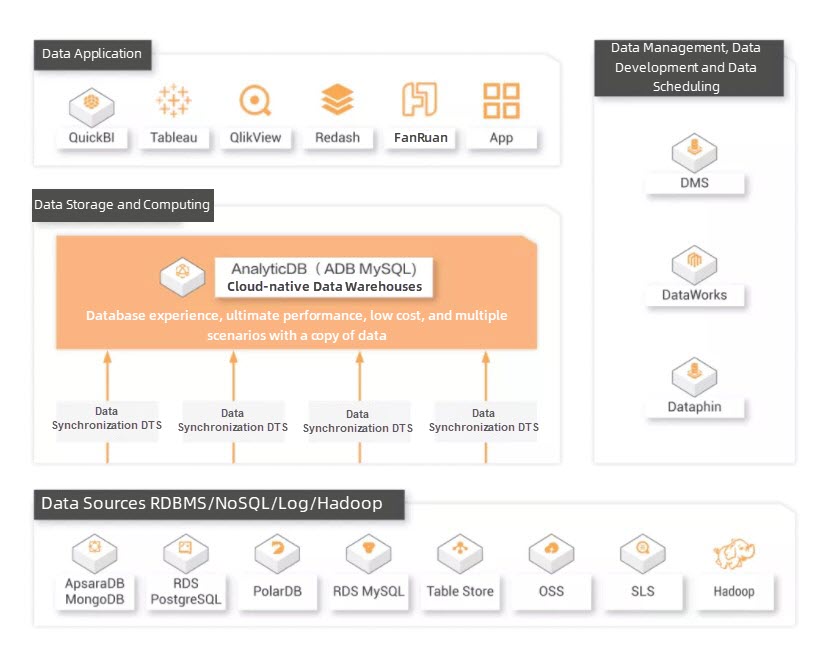

Here is the typical system architecture:

The following statement is used to create a core table:

CREATE TABLE db.order (

order_id,

user_id,

shop_vip,

last_trade_time,

last_cart_time,

member_grade,

seller_zone,

member_credits,

clustered key index_mmsi(`user_id`)

)

DISTRIBUTED BY HASH(order_id)

PARTITION BY VALUE(DATE_FORMAT(last_trade_time, '%Y%m%d')) LIFECYCLE 30

COMMENT 'Order information table';Note: Use order_id as the distribution key to ensure data is evenly distributed without skew. At the same time, since you need to frequently query or associate according to user_id, user_id is used as the clustered index.

User perspective refers to selecting specific groups of people according to various labels of users. Under normal circumstances, the user table or user behavior table is used as the fact table, and the user's various tags or attributes are used as the dimension table. The starlike model is adopted, and the fact table is used to join each dimension table for multi-dimensional analysis. Sometimes, the anti-paradigm method of data redundancy may be used to build the fact table into a wide table at the expense of data storage. The purpose is to omit multi-table association during analysis. It is impossible to use traditional databases to analyze such indefinite dimensions because of the uncertainty of user analysis tags. Indexes of traditional databases cannot be created indefinitely. Therefore, AnalyticDB is the best solution to solve such problems. A typical SQL statement is listed below:

SELECT

t2.buyer_id,

t3.seller_id,

t1.shop_vip,

t1.last_trade_time,

t1.last_cart_time,

t1.member_grade,

t1.seller_zone,

t1.member_credits,

sum(t1.pay_amount)

FROM

db.order t1

JOIN db.dimension_table1 t2 ON t1.user_id= t2.buyer_id

JOIN db.dimension_table2 t3 ON t1.user_id= t3.seller_id

WHERE

t1.is_market_target IN('4')

AND t1.seller_zone = 1019

AND t1.attributes IN('6742081')

AND t3.buyer_id = ‘xxxx’

and t3.tseller_id = ‘yyyy’

group by

t2.buyer_id,

t3.seller_id,

t1.shop_vip,

t1.last_trade_time,

t1.last_cart_time,

t1.member_grade,

t1.seller_zone,

t1.member_credits;The order table may be at the trillion level. The multidimensional and multi-table association online real-time analysis of huge amounts of data requires extremely high capabilities of the underlying analysis system.

User selection is similar to user perspective. More often, it may be used to select the specific number of people rather than specific detailed data. AnalyticDB aggregation computing power is used more in this case. COUNT DISTINCT or GROUP BY operations are performed according to various indefinite dimensions. A typical SQL statement is listed below:

SELECT count(1) AS cnt

FROM(

SELECT DISTINCT t1.buyer_id

FROM(

SELECT buyer_id

FROM db.order

WHERE seller_zone= 11111

AND seller_id= 121211121

AND algorithm_crowd IN('84')) t1

JOIN(

SELECT user_id AS buyer_id

FROM db.dimension_table1) t2

ON t1.buyer_id= t2.buyer_id

JOIN(

SELECT user_id AS seller_id

FROM db.dimension_table2) t3

ON t1.buyer_id= t3.seller_id

) t;User delivery refers to the delivery of marketing information to people selected above according to certain promotion channels, such as text message delivery and advertisement delivery on portal websites. Data from different channels can be stored in different OSS, while AnalyticDB can easily dump data in the database to OSS or other downstream products. Moreover, the dumping is very efficient, improving the delivery efficiency of users. Typical SQL statements in AnalyticDB 2.0 are listed below:

CREATE TABLE output WITH(oss_dump_endpoint= 'xxxxxx.oss-internal.aliyun-inc.com', oss_dump_bucket_name= 'xxxx',

oss_dump_file_name= 'xx_prod/20190710/63218721',

oss_dump_is_overwrite= true,

oss_dump_compatibility_mode= false,

oss_dump_access_key_id= 'xxxxxxxxx',

oss_dump_access_key_secret= 'xxxxxxxxxxxxxxxxxxxx',

oss_dump_row_del= '\r\n',

oss_dump_col_del= '\t', table_type= 'oss_dump', dump_charset_code= 'UTF-8',

oss_dump_table_header= 'false', return_dump_result_count= true) as

SELECT DISTINCT t1.buyer_id

FROM(

SELECT buyer_id

FROM db.order

WHERE last_cart_time>= 20190610

AND last_cart_time< 20190710

AND is_market_target IN('1')

AND seller_zone= 1018

AND seller_id= 3687815378) t1

JOIN(

SELECT user_id AS buyer_id

FROM db.dimension_table) t2

ON t1.buyer_id= t2.buyer_id

LIMIT 1000;AnalyticDB supports real-time writing, and data written in real-time can have complex real-time analysis. Therefore, AnalyticDB is widely used in some scenarios, such as monitoring screens, monitoring dashboards, and real-time billboards.

A typical system architecture is listed below:

The upstream production data is written to AnalyticDB in real-time using tools (such as Flink, DTS, Jingwei, or Dataworks). It is also analyzed online in AnalyticDB in real-time and displayed on the screen in the report presentation tool.

This type of business requires high data timeliness, especially for real-time data writing. It requires a large amount of data to be written in real-time, real-time visibility after writing, and fast analysis. Therefore, it is required to pay attention to designing the table a lot. This article summarizes several considerations for such scenarios:

A typical SQL statement is listed below:

CREATE TABLE tb__record_info

(

a_info_id bigint NOT NULL AUTO_INCREMENT,

domain varchar NOT NULL,

region varchar NOT NULL,

ip varchar NOT NULL,

result_ts varchar NOT NULL,

time_stamp timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

key idx_domain(domain),

key idx_time(time_stamp),

primary key (a_info_id, domain, time_stamp)

)

DISTRIBUTE BY HASH(domain)

PARTITION BY VALUE(DATE_FORMAT(time_stamp,'%Y%m%d')) LIFECYCLE 60;Competition in the gaming sector has become more intense. Traffic costs have continued to rise with the rapid growth of the Internet, and marketing has begun to develop towards refinement. Gaming enterprises need more detailed and accurate data for the evaluation of channels, users, and game performance. They hope to use excellent data analysis tools to help their teams analyze the market and user trends more comprehensively. At the same time, the game behavior and preferences of players are gradually changing. Therefore, how to detect these changes in time and adjust products and game design accordingly is very important. Here are the business requirements for the gaming industry:

AnalyticDB supports the following typical scenarios.

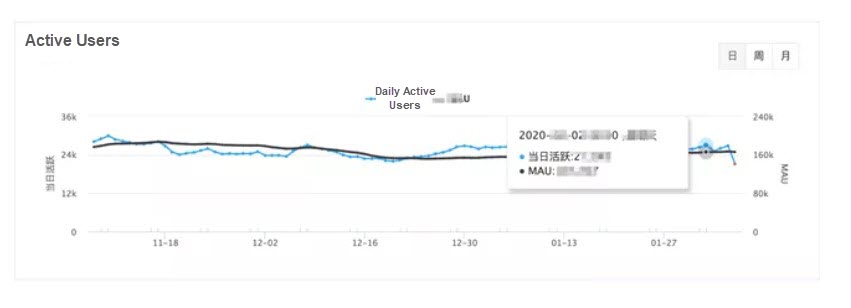

Daily active users (DAU) and monthly active users (MAU) of gaming products are very important metrics to evaluate whether a game is widely accepted by players.

The SQL example for DAU calculation is listed below:

SELECT count(DISTINCT uid) AS count

FROM login_log

WHERE timestamp >= <start_timestamp>

AND timestamp <= <end_timestamp>

AND qita1 = <x>

AND qita2 = <y>;Based on the basic statistics above, more exploration can be made on the player’s active state, such as:

Active Account Analysis

Online Analysis

Player Behavior Analysis

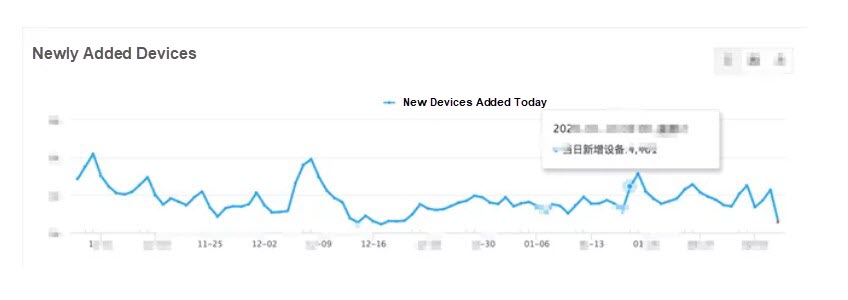

The newly added device analysis in the game player source analysis is used to predict the lifecycle and efficiency of attracting new players in a game. It is also a very important metric to evaluate whether the game is widely accepted by players.

The SQL examples for calculating new devices and players are listed below:

SELECT Count(*) AS count FROM

(

SELECT deviceid

FROM login_log

WHERE channel_id = ‘X’

AND timestamp >= ‘XXX’

AND timestamp <= ‘YYY’

GROUP BY deviceid

) AS d1

LEFT JOIN

(

SELECT deviceid

FROM login_log

WHERE channel_id = ‘X’

AND timestamp < ‘YYY’

) AS d2

ON d1.deviceid = d2.deviceid

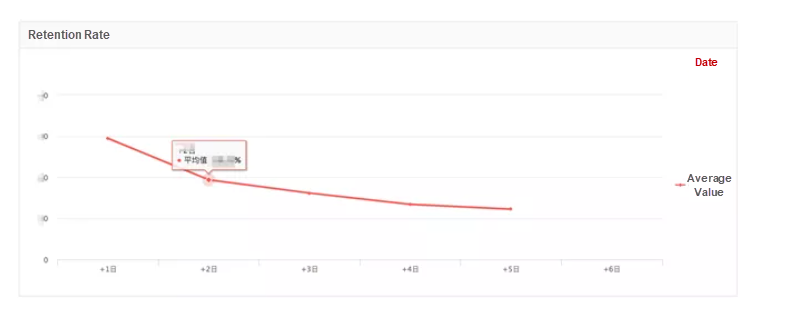

WHERE d1.deviceid IS NULL;The retention metric reflects the quality of the gaming product and the ability to retain players to some extent. On the other hand, it indicates the fitness between the channels, the target users of a game, and the quality of the channels. Therefore, the analysis of the retention metric is even more important.

The SQL example for calculating the player retention rate is listed below:

SELECT

channel_id,

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 0,

user_id,

NULL

)

) AS 'liucun_1',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 1,

user_id,

NULL

)

) AS 'liucun_2',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 2,

user_id,

NULL

)

) AS 'liucun_3',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 3,

user_id,

NULL

)

) AS 'liucun_4',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 4,

user_id,

NULL

)

) AS 'liucun_5',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 5,

user_id,

NULL

)

) AS 'liucun_6',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 6,

user_id,

NULL

)

) AS 'liucun_7',

count(

DISTINCT IF (

datediff(payorder_riqi, login_riqi) = 14,

user_id,

NULL

)

) AS 'liucun_15'

FROM

pay_order p

LEFT JOIN login_log l ON p.uid = l.uid

WHERE

payorder_riqi >= '2019-01-17'

AND payorder_riqi <= '2019-01-24'

GROUP BY

`channel_id`

ORDER BY

`liucun_1` DESC;The disk usage consists of data and indexes. The indexes temporarily occupy a small amount of additional space and may cause slight data expansion during the construction process. You can use the following SQL statement to query the disk usage. (Note: This metric is delayed and is counted every hour):

SELECT (SUM(data_length)+SUM(index_length))/1024/1024/1024 AS 'data space (GB)' FROM information_schema.tables. You can also use the following SQL statement to query the current log usage: show binary logs. The adb-bin .log in the returned result indicates binlog, and adb-system.log indicates system log.

The disk is locked when the disk usage of a single node exceeds 80%. There are two reasons for the high disk usage of a single node. One reason is some data of the nodes are skewed due to the unreasonable selection of the level-1 partition key. The other reason is the data distribution is relatively average, and the overall usage is too large. You can determine whether a table partition is skewed according to the storage level on the console page.

Currently, the disk uses ECS disks and only supports scale-out. The number of nodes can be scaled in and scaled out. The quantity range is related to the initial specifications of the instance. You can see the change range of the number of nodes in the current instance on the change configuration page in the console. The scaling of the number of nodes performs partial data migration between nodes.

We can use the batch write method as much as possible. When using Dataworks for data synchronization, pay attention to whether the number of concurrent tasks and the write batch size are too small. Set the primary key as simple as possible and select partition keys for writing to the table as evenly as possible.

The data is split into several level-1 partitions in the AnalyticDB. Generally, an AnalyticDB instance has about 100 orders of magnitude of level-1 partitions. Different level-1 partitions are concurrently performed when the query is performed. Therefore, the most important point of the level-1 partition key is to ensure the data is as even as possible. Otherwise, long-tail queries will occur, slowing down the overall query progress.

If level-1 partition keys are the same for different tables, the data shuffle can be significantly reduced when the table is executing the JOIN with the level-1 partition key as Join Key. Therefore, if the data is unified, the same level-1 partition keys can accelerate the JOIN.

The level-2 partition is a further split based on the level-1 partition, which is generally performed in the time dimension. However, we do not recommend too many level-2 partitions. The number of level-2 partitions in a single table is expected not to exceed 100. Let’s assume an order form adds about one million pieces of data per day and needs to retain the data for ten years. An AnalyticDB cluster typically has about 100 level-1 partitions. Therefore, if the table is partitioned on a daily basis, the data volume of a single level-2 partition is about 10,000, which is much lower than our recommended value. Therefore, it is appropriate to use month or year as the level-2 partition. AnalyticDB allows you to modify the lifecycle of a level-2 partition. The example ALTER TABLE lineitem PARTITIONS 12 indicates that the number of level-2 partitions of lineitem has changed to 12. Note: The modification of the number of level-2 partitions is performed asynchronously in the background, and the execution of BUILD TABLE lineitem can accelerate the partition modification.

Currently, the expiration policy for level-2 partitions is to sort level-2 partitions by size and then only keep the top N level-2 partitions. The rest of the partitions will be eliminated. The N is the size of the lifecycle. Let’s assume Table A has three level-2 partitions currently (202001, 202002, and 202003), and the number of the lifecycle is 3. Then, when data whose partition value is 202004 is written, the 202001 partition is eliminated. Note: Partition elimination is performed asynchronously. If you need to eliminate expired level-2 partitions as soon as possible, you can trigger the expired level-2 partition elimination mechanism by executing build table table_name. In addition, pay attention to the problem of elimination fault caused by dirty data when using level-2 partitions. If Table A is written with three dirty data with partition values of 300001, 300002, and 300003, the partition elimination strategy is also triggered, and only the three dirty data with the largest partition values will be left in the whole table.

A clustered index is used to sort data according to several fields. Data with the same or similar values are physically stored together through sorting.

This field is required for query. Fields (such as seller ID) can be a clustered index. the locality of data is ensured, and thus performance can be improved by the order of magnitude.

Currently, only one clustered index is supported. However, a clustered index can contain multiple columns. Clustered indexes are of little use unless performing point queries for very scattered data.

The primary key is generally used for data deduplication. The length of the primary key is inversely proportional to the efficiency of deduplication. Therefore, we recommend using 1 to 3 long integer fields as the primary key, instead of a longer string.

In addition, the primary key is expected to contain the level-1 partition key and the level-2 partition key. Currently, it does not support modifying the primary key.

AnalyticDB uses full field indexes by default. Generally, you do not need to maintain indexes. The method for viewing table indexes is SHOW INDEX FROM tablename. If you want to delete an index, you can use the ALTER TABLE tablename DROP KEY keyname. The keyname can be queried by using the preceding statement. (Note: Deleting an index may slow down the query.) If you want to specify your index, use the KEY keyword: KEY key_name (column_name). For example, CREATE TABLE tablename (id bigint,c1 varchar,key id_idx(id)) DISTRIBUTE BY HASH(id);.

Yes, and the specific behavior is listed below:

If the DDL contains a primary key, use the primary key as the distribution key.

If the DDL does not contain a primary key, a field __adb_auto_id__ is created automatically. Then, the __adb_auto_id__ is used as the primary key and partition key.

Yes, and AnalyticDB 3.0 is compatible with the AnalyticDB 2.0 table creation statements.

Senior Technical Experts from Alibaba Discuss Data Warehouse Tuning – Part 1

PolarDB-X Kernel V5.4.14: A New Version with More Refined Data Management Capabilities

ApsaraDB - July 4, 2022

ApsaraDB - December 21, 2023

Alibaba Cloud MaxCompute - October 9, 2023

Alibaba Clouder - June 23, 2020

Alibaba Clouder - June 10, 2020

Alibaba Cloud Community - October 17, 2025

Cloud Database Solutions for Gaming

Cloud Database Solutions for Gaming

Alibaba Cloud’s world-leading database technologies solve all data problems for game companies, bringing you matured and customized architectures with high scalability, reliability, and agility.

Learn More Gaming Solution

Gaming Solution

When demand is unpredictable or testing is required for new features, the ability to spin capacity up or down is made easy with Alibaba Cloud gaming solutions.

Learn More Digital Marketing Solution

Digital Marketing Solution

Transform your business into a customer-centric brand while keeping marketing campaigns cost effective.

Learn More Hologres

Hologres

A real-time data warehouse for serving and analytics which is compatible with PostgreSQL.

Learn MoreMore Posts by ApsaraDB