By Renjie

As the IaaS capabilities of cloud platforms keep improving and user data volumes keep growing, mainstream database products are moving to the cloud and being delivered as services. The term cloud-native is now closely tied to databases, and cloud vendors are committed to building their own portfolios of cloud-native database products.

It has been more than two years since ApsaraDB for ClickHouse was launched. During this period, we have accumulated a large number of customer cases on the cloud and, through long-term customer support, gained a deep understanding of the ApsaraDB for ClickHouse ecosystem. Based on that understanding of the existing product ecosystem and of the pain points customers hit in practice, we upgraded the architecture of the open-source ClickHouse database. We will soon bring users a new cloud-native version of ClickHouse.

The term cloud-native database is familiar, but many users still lack a clear yardstick for deciding whether a database product is cloud-native. With earlier cloud-hosted databases, what users perceive is that the database instance has moved from a local physical machine to an ECS instance on the cloud and that the local disk has become a reliable cloud disk. ApsaraDB products also provide stable, easy-to-use O&M and monitoring.

Cloud hosting freed users from manual deployment and O&M. For users, the core value of a cloud-native database is better resource elasticity and data sharing. At the database engine kernel level, a cloud-native database needs to make better use of the infrastructure capabilities of the cloud platform. Some traditional database architectures were designed around unreliable local storage devices and limited computing resources. Once those premises no longer hold, such architectures are no longer the best choice. The cloud-native ClickHouse database that we will launch brings the following main changes for users (these are also the directions in which its kernel capabilities will continue to evolve):

Products that take the same form for users are already common in the industry, which shows a shared understanding of cloud-native: products are evolving toward extreme elasticity, service-oriented computing, and volume-based storage. However, differences in how elastic the computing resources are lead to different user experiences. When computing resources take minutes to start, most users have to start them in advance and keep them resident. If computing resources start fast enough, that advance step can be skipped: for users, computing becomes fully service-oriented, acquired when needed and released after use.

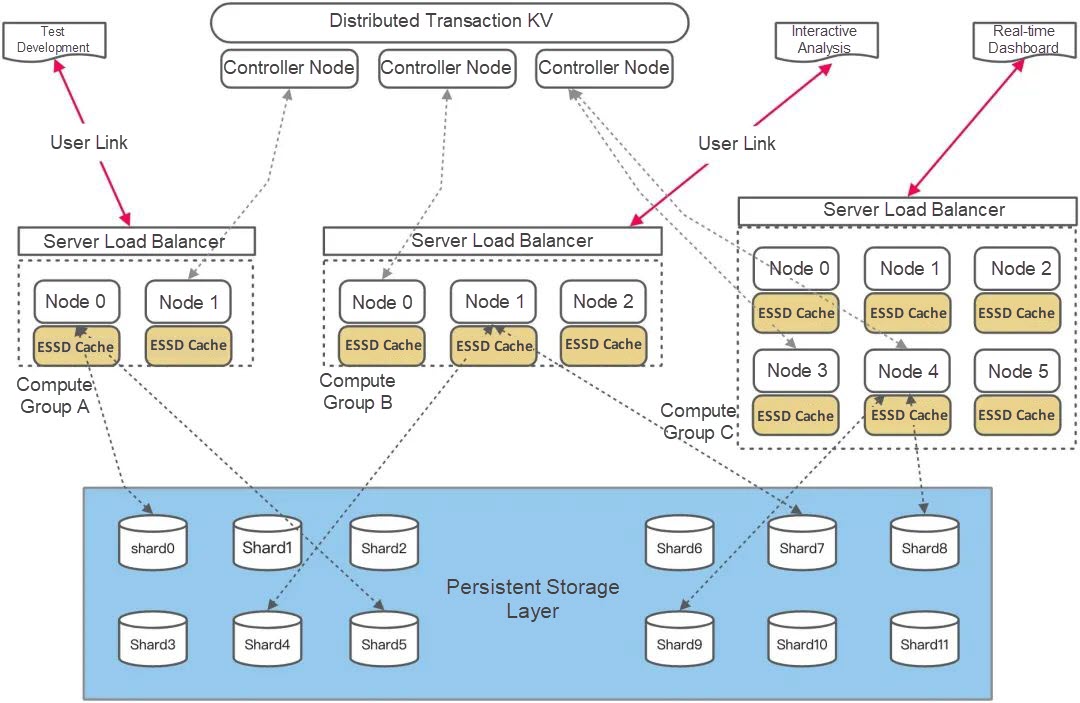

The architecture of the cloud-native ClickHouse database is as follows:

Each compute group exposes an independent connection string, and users log in to a given compute group through its own connection string. From the user's perspective, a compute group is equivalent to a "cluster" in the original cloud hosting mode. Machine resources are completely isolated between compute groups, and a query received by a compute group is executed only on the nodes in that group. The persistent storage layer is separated from compute group resources. It is not attached to any single compute group; instead, multiple compute groups share one persistent storage layer. Cloud-native ApsaraDB for ClickHouse uses distributed Object Storage Service (OSS) as the persistent storage layer. In addition, a distributed KV system stores all cluster metadata, so compute groups can see each other's data.

A compute group consists of multiple nodes. Each node starts a clickhouse-server as its main process to serve queries, and each node mounts an Enhanced SSD as a local cache. This cache currently has two functions: a read cache for hot data and a write staging area for real-time data (real-time writes are not written through to persistent storage). The cache is therefore effectively stateful and must be handled when a node is migrated or destroyed. Finally, as shown in the figure, the data is sharded. However, the number of nodes does not have to equal the number of shards; the only requirement is that shards can be distributed evenly across the nodes. The next section analyzes the architectural choices of cloud-native ClickHouse in more detail.

In traditional MPP databases with a shared-nothing architecture, data is sharded on specific primary key columns (hash partitioning is typical in analytical scenarios), and each compute node is bound to a data shard: a single compute node reads and writes only its own shard. This is the current distributed deployment mode of open-source ClickHouse. The one-to-one, tightly bound mapping between compute nodes and data shards limits horizontal scaling. Whenever nodes are added to or removed from a ClickHouse cluster, the data must be fully re-sharded. Re-sharding is a complete logical data relocation, equivalent to selecting the data from the old cluster and inserting it into the new cluster. clickhouse-copier is the logical data relocation tool that the community provides for this purpose. In all distributed database products, data sharding restricts cluster scalability and elasticity to some degree. Sharding nevertheless matters so much because, without it, writes and changes keyed on the primary key lose their most important source of parallelism.
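To make the cost of re-sharding concrete, here is a minimal sketch that assumes one shard per node and a plain `hash mod N` placement rule. The function names and the choice of CRC32 as the hash are illustrative assumptions, not ClickHouse internals.

```python
import zlib


def shard_of(key: str, num_nodes: int) -> int:
    """Map a primary key to a shard, with one shard per node (assumed rule: hash mod N)."""
    return zlib.crc32(key.encode("utf-8")) % num_nodes


def moved_fraction(keys: list[str], old_nodes: int, new_nodes: int) -> float:
    """Fraction of keys whose shard changes when the node count changes."""
    moved = sum(1 for k in keys if shard_of(k, old_nodes) != shard_of(k, new_nodes))
    return moved / len(keys)


# Hypothetical key set, used only to exercise the functions.
keys = [f"user-{i}" for i in range(10_000)]
fraction = moved_fraction(keys, old_nodes=4, new_nodes=5)
print(f"{fraction:.0%} of rows map to a different shard after growing from 4 to 5 nodes")
```

With a uniform hash, a key keeps its shard only when `h % 4 == h % 5`, which holds for 4 of every 20 hash values, so about four in five rows would have to be rewritten. That is why adding a single node to such a cluster amounts to a full logical data relocation.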

Data sharding is currently unnecessary in the Hive big data ecosystem (which has no primary key semantics) and in some cloud data warehouse products. Although the ClickHouse ecosystem does not currently have real-time primary key capabilities, the cloud-native version of ClickHouse still cannot abandon data sharding. The reason is that ClickHouse's MergeTree storage still carries some primary key semantics through its ORDER BY key, most visibly in variant engines such as ReplacingMergeTree and AggregatingMergeTree. On top of these, ApsaraDB for ClickHouse provides an optimized partition-level eventual consistency feature and the FINAL scan feature.

If the data were not sharded, the performance or cost of these two capabilities would regress by an order of magnitude and break existing usage patterns in some scenarios. Another important reason is that ApsaraDB for ClickHouse does not yet have a fully developed MPP computing engine and relies heavily on data sharding to achieve distributed parallelism. At the computing engine level, we want the cloud-native version of ClickHouse to keep following the community, always offering the same SQL capabilities and the same usage as open-source ClickHouse.

In the end, we retained data sharding in cloud-native ClickHouse but modified the standalone ClickHouse engine so that a single node can serve multiple data shards. With this capability, the number of data shards is decoupled from the number of nodes in the cluster. Setting a large initial number of data shards lets the number of nodes change freely within a certain range. When the number of compute nodes changes, as long as the data shards can still be distributed evenly, the heavy full data re-sharding operation is not needed. It is enough to physically move the local state data shard by shard (the local state data here is mainly the local temporary data written in real time) and then reassign the mounted shards to the compute nodes.
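The decoupling described above can be sketched as a shard-to-node assignment that is rebalanced whenever the node list changes. This is a minimal illustration under assumed names (`rebalance`, `n1` to `n4`), not the product's actual scheduler. Only whole shards change owner, and no row is re-hashed.

```python
from collections import Counter


def rebalance(assignment: dict[int, str], nodes: list[str]) -> dict[int, str]:
    """Spread shards evenly over `nodes`, keeping each shard where it is when possible."""
    if len(assignment) % len(nodes) != 0:
        raise ValueError("shards cannot be distributed evenly over this node count")
    quota = len(assignment) // len(nodes)
    load = {node: 0 for node in nodes}
    result: dict[int, str] = {}
    homeless: list[int] = []
    for shard in sorted(assignment):
        owner = assignment[shard]
        if owner in load and load[owner] < quota:
            result[shard] = owner            # shard stays mounted on its current node
            load[owner] += 1
        else:
            homeless.append(shard)           # owner is gone or over quota
    for shard in homeless:
        target = min(nodes, key=lambda n: load[n])
        result[shard] = target               # only this shard's local state must move
        load[target] += 1
    return result


# 12 shards on 3 nodes, then a fourth node joins the compute group.
initial = {shard: ["n1", "n2", "n3"][shard % 3] for shard in range(12)}
grown = rebalance(initial, ["n1", "n2", "n3", "n4"])
moved = [shard for shard in initial if initial[shard] != grown[shard]]
per_node = Counter(grown.values())
print(f"moved shards: {moved}, shards per node: {dict(per_node)}")
```

In this example three of the twelve shards are remounted on the new node and the other nine stay in place. With twelve initial shards, the node count can be 1, 2, 3, 4, 6, or 12 while keeping the distribution even.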

In the architecture of cloud-native ClickHouse, multiple compute groups are visible to each other and share the data of the cluster. For a given data shard of a single table, the data sharing method between compute groups depends on whether the compute groups operate in one-write multi-read or multi-write multi-read mode. The core of the ClickHouse MergeTree engine is fully capable of multi-write multi-read. It was originally designed as a multi-write, conflict-free mode, although some other non-mainstream table engines may not support it. Another important point is that MergeTree merges data files repeatedly soon after data is written. Such repeated writes and deletes within a short period are very unfriendly to the object storage service in the persistence layer, and the object storage write bandwidth currently available to cloud-native ClickHouse is a scarce resource. Therefore, cloud-native ClickHouse chose the one-write multi-read mode. Only one primary compute group accepts real-time writes for a given table and persists them to the local ESSD cache. After the real-time data has been fully merged in the background, it is moved to object storage.

When other compute groups receive write requests for this table, they can only forward the requests. Under the current settings of cloud-native ClickHouse, the compute group that receives the CREATE TABLE request becomes the write compute group for the table. You can use this to assign different tables to different write compute groups. Note that the one-write multi-read mode of cloud-native ClickHouse is table-level: the write load of different tables can be mapped to different compute groups, rather than a single compute group handling all writes. The benefit of this mode is that it minimizes write pressure on object storage. The cost is additional real-time data state, which requires extra handling for cross-compute-group reads and for the destruction and migration of compute group nodes.
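The table-level routing rule can be sketched as follows. The class and method names are hypothetical; the sketch only models the decision of whether a compute group executes a write itself or forwards it to the table's write compute group.

```python
class WriteRouter:
    """Tracks which compute group owns writes for each table (illustrative only)."""

    def __init__(self) -> None:
        self.owner_of: dict[str, str] = {}  # table name -> write compute group

    def create_table(self, table: str, requesting_group: str) -> None:
        # The group that receives the CREATE TABLE becomes the table's write group.
        self.owner_of[table] = requesting_group

    def route_write(self, table: str, receiving_group: str) -> tuple[str, str]:
        """Return (action, target group) for a write arriving at `receiving_group`."""
        owner = self.owner_of[table]
        action = "execute" if owner == receiving_group else "forward"
        return action, owner

    def route_read(self, table: str, receiving_group: str) -> tuple[str, str]:
        # Reads are served by whichever group receives them.
        return "execute", receiving_group


router = WriteRouter()
router.create_table("orders", requesting_group="group_a")
router.create_table("clicks", requesting_group="group_b")
print(router.route_write("orders", receiving_group="group_b"))
print(router.route_write("clicks", receiving_group="group_b"))
```

Creating `orders` through `group_a` and `clicks` through `group_b` spreads the write load across the two groups, while both tables remain readable from either group.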

In terms of code architecture and user experience, the multi-write multi-read mode (in which real-time data is written through to shared storage) is undoubtedly better, and multi-write multi-read is not difficult for MergeTree storage at all. The one-write multi-read mode was chosen after weighing the capabilities of the products currently available. Once the write bandwidth of the persistent object storage layer improves and Enhanced SSD gains features such as shared mounting, cloud-native ClickHouse can be upgraded to the multi-write multi-read mode.

If you are familiar with ClickHouse, you know that its storage capabilities are exposed as database engines and table engines. This pluggable storage design makes the table engines provided by ClickHouse very diverse, so sharding logic built for the MergeTree table engine alone cannot guarantee instance-level data sharding. Another problem is that, in ClickHouse, data sharding information is not defined in the underlying storage table engine. It is defined in a separate Distributed table engine (a proxy table), and the ordinary storage table engine has no sharding information at all. Sharding rules are applied to read and write routing only when users access data through the proxy table.

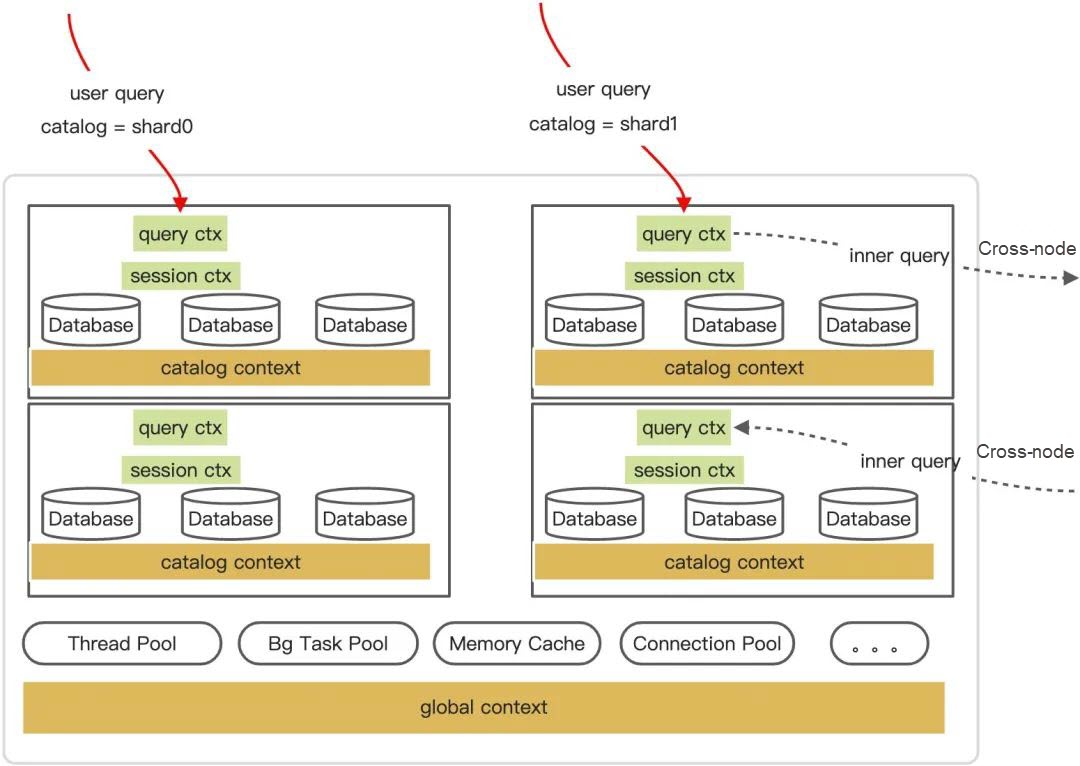

These problems make it very difficult to superimpose the shard concept on the original storage design of standalone ClickHouse without breaking the original usage mode. In the end, we modified the Catalog level so that a single node looks like multiple nodes, which is fully compatible with the MPP computing engine of ClickHouse.

As shown in the following figure, cloud-native ClickHouse inserts a Catalog Context layer into the instance-level state. A Catalog Context manages, in a unified manner, the database and table metadata exposed to users. Database and table metadata is managed completely independently in each Catalog Context. This is equivalent to moving part of the metadata of the original single node into Catalog Contexts. The objects currently managed in a Catalog Context are mainly database and table objects at the storage layer, and the database and table objects in different Catalog Contexts do not affect each other. A single node serves multiple data shards by binding each data shard to its own Catalog Context on the node. Connection requests between nodes can access a specific data shard by setting the catalog parameter.
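The idea can be illustrated with a toy model in which each node holds one catalog per attached shard and every metadata lookup names the catalog explicitly. All class, method, and catalog names below are assumptions made for the sketch, not the actual kernel structures.

```python
class CatalogContext:
    """Database and table metadata for one data shard."""

    def __init__(self, shard_id: int) -> None:
        self.shard_id = shard_id
        self.tables: dict[tuple[str, str], str] = {}  # (database, table) -> DDL text


class Node:
    """A compute node that can serve several shards, one CatalogContext per shard."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.catalogs: dict[str, CatalogContext] = {}

    def attach_shard(self, shard_id: int) -> str:
        catalog_name = f"shard_{shard_id}"  # hypothetical naming scheme
        self.catalogs[catalog_name] = CatalogContext(shard_id)
        return catalog_name

    def detach_shard(self, shard_id: int) -> CatalogContext:
        return self.catalogs.pop(f"shard_{shard_id}")

    def create_table(self, catalog: str, database: str, table: str, ddl: str) -> None:
        self.catalogs[catalog].tables[(database, table)] = ddl

    def describe(self, catalog: str, database: str, table: str) -> str:
        return self.catalogs[catalog].tables[(database, table)]


node = Node("node-1")
first = node.attach_shard(0)
second = node.attach_shard(1)
node.create_table(first, "db", "events", "CREATE TABLE events (id UInt64)")
node.create_table(second, "db", "events", "CREATE TABLE events (id UInt64, ts DateTime)")
print(node.describe(first, "db", "events"))
print(node.describe(second, "db", "events"))
```

The same `db.events` name resolves to different metadata depending on the catalog, which is what lets one process behave like several single-shard nodes.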

All data in an open-source ClickHouse cluster is local to each node, and there is no central coordinator. This does not mean that ClickHouse metadata is decentralized by design. Rather, the metadata has not yet reached the stage of distributed consistency. This leads to odd problems that users often encounter in operations and deployment: inconsistent local configuration files, database and table structures that are out of sync across nodes, and so on. Cloud-native ApsaraDB for ClickHouse introduces a distributed transactional KV system to hold the metadata of the entire cluster, which lets users manage all cluster metadata centrally. Metadata in cloud-native ClickHouse includes the following categories:

For metadata such as parameter configuration, account permission data, and distributed DDL tasks, any single node can send a change request to a controller node, and the controller node then persists the change in the underlying KV system. All other nodes detect the metadata version change through heartbeats with the controller node and continuously align their in-memory metadata state. Basic multi-node write conflicts can occur here, and they are resolved differently for different types of metadata. Reads and writes in the underlying distributed transactional KV system run at the serializable isolation level. On top of that, the controller node applies a policy that depends on the type of metadata being modified: overwrite, rejection of stale commits (the version submitted by the node is behind the version in the KV system), or automatic sequential writes (the DDL task queue).
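The three conflict policies can be sketched with a small in-memory stand-in for the controller. The class, method, and key names are hypothetical, and a real controller would run each operation inside a transaction of the underlying KV system.

```python
class StaleVersionError(Exception):
    """Raised when a commit is based on an out-of-date metadata version."""


class Controller:
    def __init__(self) -> None:
        self._kv: dict[str, tuple[int, object]] = {}  # key -> (version, value)
        self._ddl_queue: list[str] = []

    def get(self, key: str) -> tuple[int, object]:
        return self._kv.get(key, (0, None))

    def overwrite(self, key: str, value: object) -> int:
        """Policy 1: last writer wins, the version is simply bumped."""
        version, _ = self.get(key)
        self._kv[key] = (version + 1, value)
        return version + 1

    def commit_if_current(self, key: str, value: object, base_version: int) -> int:
        """Policy 2: reject the commit unless it is based on the stored version."""
        version, _ = self.get(key)
        if base_version != version:
            raise StaleVersionError(f"{key}: based on v{base_version}, stored v{version}")
        self._kv[key] = (version + 1, value)
        return version + 1

    def enqueue_ddl(self, statement: str) -> int:
        """Policy 3: serialize writers by appending to an ordered task queue."""
        self._ddl_queue.append(statement)
        return len(self._ddl_queue)  # 1-based position in the queue


controller = Controller()
controller.overwrite("config/max_threads", 8)
version, _ = controller.get("users/alice")
controller.commit_if_current("users/alice", {"role": "reader"}, base_version=version)
try:
    # A second node still holds the old version and loses the race.
    controller.commit_if_current("users/alice", {"role": "admin"}, base_version=version)
except StaleVersionError as error:
    print("rejected:", error)
```

Which policy applies to which metadata type is a product decision that the text does not spell out; the mapping used in the example (configuration overwritten, account data version-checked) is only an assumption.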

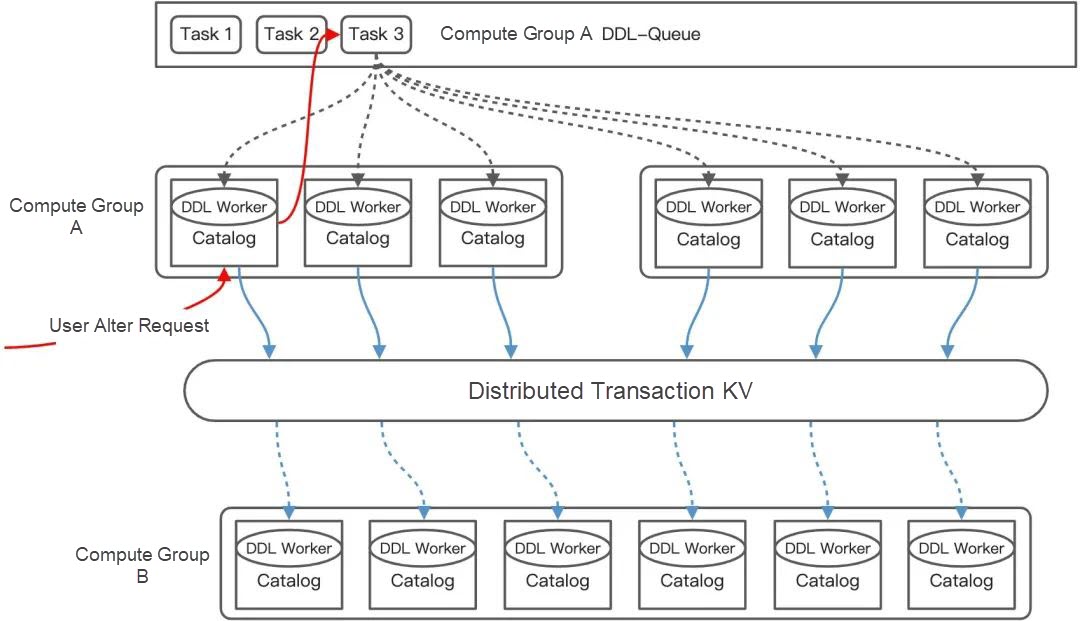

The DDL information of databases and tables is the core metadata in cloud-native ApsaraDB for ClickHouse. In open-source ClickHouse, this metadata is not guaranteed to stay aligned across nodes. Because of its decentralized, single-layer architecture, ClickHouse has no Master node of the kind found in traditional distributed databases. Users see the Worker nodes directly, and each Worker node has its own local metadata version. When you run an ALTER TABLE, a distributed DDL task is generated from the metadata of the current node and published, and you then wait for all nodes to complete the task asynchronously. This causes temporary inconsistency between nodes while ClickHouse executes a distributed ALTER TABLE.

For this reason, the ClickHouse community version has been optimizing the parallel processing of distributed DDL tasks. Processing DDL tasks in parallel improves efficiency, significantly reduces cases where a single task blocks the whole queue, and avoids long-lasting metadata inconsistency between nodes. Now that the cloud-native edition of ClickHouse lets a single node serve multiple shards, the management granularity of database and table metadata has changed from the node level to the shard level. The database and table metadata of all data shards in the instance is stored in the centralized distributed KV system. The metadata of different data shards is independent, so data shards can be dynamically attached to any node. After a data shard is attached to a node, asynchronous DDLWorker threads in its Catalog Context watch for the DDL tasks published by the compute group, execute them, and apply the metadata changes for that shard.

Within a single compute group with multiple nodes, ALTER TABLE statements issued from different nodes may conflict. ClickHouse handles this type of write conflict very simply: all distributed DDL tasks go into the same queue and are executed serially. A task that conflicts with an earlier task fails, which is equivalent to deferring conflict detection to execution time and only then returning the conflict error. The multi-compute-group scenario adds a read/write synchronization problem: after one compute group modifies the metadata of a table, how do the other compute groups perceive the change?

The previous section described the one-write multi-read design of cloud-native ClickHouse. Database and table metadata management works in the same one-write multi-read mode. Each user table has exactly one owner compute group, and ALTER TABLE operations on the table can only be routed to a node in that owner compute group. The owner compute group uses the distributed DDL task queue to change the metadata of all shards. After this metadata is persisted in the distributed KV system, non-owner compute groups detect the change in the table's metadata version through heartbeats with the controller node.

When non-owner compute groups handle read-only tables, they convert the table engine type in order to serve queries. For example, the MySQL external table engine and the Distributed table engine have no local state and can be built and managed as read-only snapshots. Data pipeline engines such as the Kafka table engine do not need to be visible across compute groups. The most complex part is building the cross-compute-group read-only capability for the MergeTree table engine, which the next section covers in detail.

The MergeTree storage engine of ApsaraDB for ClickHouse performs out-of-place updates and keeps no in-memory state, which makes it well suited to one-write multi-read operation on shared storage. Readers familiar with ClickHouse will know that the data state of a MergeTree table can be represented by its collection of DataParts. A DataPart is an immutable object that corresponds to an independent folder, which is not modified after it is committed. The asynchronous change state of MergeTree is represented by its collection of Mutations. The names of DataParts and Mutations contain commit ids, which indicate the range of commits they cover and their visibility to each other.

To adapt MergeTree to one-write multi-read, we first put the DataPart metadata and Mutation records into the distributed KV storage so that all compute groups can see them. Changes to DataParts and the execution of Mutations are still performed only by a single node with write permission. With the DataPart metadata collection, read-only nodes can easily construct a read-only object of the current MergeTree table on top of shared storage. There are three core difficulties here:

The first difficulty is how a read-only compute group obtains and maintains the collection of DataParts in real time. One method is to perform a full synchronization from the controller on a schedule. The other is to perform a full synchronization followed by incremental synchronization in the background. The system chooses between them based on how frequently the read-only table is accessed. To support incremental synchronization, we record both the current snapshot of the DataPart collection and the DataPart change log in the distributed KV system. Read-only nodes complete incremental synchronization by continuously replaying the change log.
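A minimal sketch of full plus incremental synchronization follows, assuming that the KV system stores both the current part set and an append-only change log with increasing sequence numbers. The class names and the log layout are illustrative assumptions.

```python
class PartCatalog:
    """Stand-in for the DataPart metadata kept in the distributed KV system."""

    def __init__(self) -> None:
        self.parts: set[str] = set()
        self.log: list[tuple[int, str, str]] = []  # (sequence, "add" | "remove", part name)

    def _record(self, op: str, name: str) -> None:
        self.log.append((len(self.log) + 1, op, name))

    def add(self, name: str) -> None:
        self.parts.add(name)
        self._record("add", name)

    def remove(self, name: str) -> None:
        self.parts.discard(name)
        self._record("remove", name)

    def snapshot(self) -> tuple[int, frozenset[str]]:
        """Full synchronization: the current part set and the sequence it reflects."""
        return len(self.log), frozenset(self.parts)

    def changes_since(self, sequence: int) -> list[tuple[int, str, str]]:
        return self.log[sequence:]  # the entry with sequence k is stored at index k - 1


class ReadOnlyView:
    """Part set held by a read-only compute group node."""

    def __init__(self, source: PartCatalog) -> None:
        self.source = source
        self.sequence, parts = source.snapshot()
        self.parts = set(parts)

    def sync(self) -> int:
        """Incremental synchronization: replay log entries that have not been seen yet."""
        changes = self.source.changes_since(self.sequence)
        for sequence, op, name in changes:
            if op == "add":
                self.parts.add(name)
            else:
                self.parts.discard(name)
            self.sequence = sequence
        return len(changes)


catalog = PartCatalog()
catalog.add("202401_1_1_0")
catalog.add("202401_2_2_0")
view = ReadOnlyView(catalog)

# The writer merges the two parts into one and retires the inputs.
catalog.add("202401_1_2_1")
catalog.remove("202401_1_1_0")
catalog.remove("202401_2_2_0")
applied = view.sync()
print(applied, sorted(view.parts))
```

A table that is rarely read can skip `sync()` and take a fresh snapshot on a schedule instead, which matches the first method described above.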

The second difficulty is that, in the current one-write multi-read mode, some DataPart data may still reside on the local cache disk of a node in the owner compute group. Read-only nodes cannot reach this data through shared storage and can only read it through internal queries to the owner node. To support this, we also modified the read path of the original MergeTree engine.

The third difficulty is that the original MergeTree table engine eagerly loads index data at startup. In the dynamic multi-compute-group mode, this eager loading is wasted in many scenarios, because users tend to use specific business tables only in specific compute groups. Therefore, we changed DataPart loading to a lazy-load mode, which significantly reduces unnecessary interference between compute groups.
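Lazy loading can be illustrated with a part object that defers reading its index until the first query touches it. The loader callback and class name are hypothetical; the real engine loads primary index data from the part's files.

```python
from typing import Callable, Optional


class LazyDataPart:
    """A DataPart whose index is loaded on first access instead of at startup."""

    def __init__(self, name: str, load_index: Callable[[str], list]) -> None:
        self.name = name
        self._load_index = load_index
        self._index: Optional[list] = None

    @property
    def index(self) -> list:
        if self._index is None:              # first access pays the loading cost
            self._index = self._load_index(self.name)
        return self._index


loaded: list[str] = []


def load_index(part_name: str) -> list:
    """Stand-in for reading index data from storage; records which parts were loaded."""
    loaded.append(part_name)
    return [f"{part_name}:mark-0", f"{part_name}:mark-1"]


parts = [LazyDataPart(f"202401_{i}_{i}_0", load_index) for i in range(1, 4)]
print("loaded at startup:", loaded)

first_mark = parts[0].index[0]   # a query touches only the first part
second_access = parts[0].index   # served from memory, no second load
print("loaded after one query:", loaded)
```

A compute group that never queries a table therefore never pays for loading that table's indexes.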

Products that keep their data in object storage need two types of acceleration. The first is to accelerate metadata reads and writes on object storage. The second is to cache hot data on local disk. The MergeTree storage engine of ClickHouse relies heavily on the performance of the underlying file system interfaces: rename, hardlink, listDir, and rmDir are all common operations in MergeTree. However, object storage does not provide file-system-like APIs, and simulating these operations directly on Object Storage Service is not acceptable either. The S3 object storage feature in open-source ClickHouse uses a local disk file as a reference handle to an S3 object, and reading, writing, or deleting this local handle file in turn operates on the S3 object. In cloud-native ClickHouse, we save these object storage reference handles in the distributed KV system.

On the controller node, we borrowed the POSIX file system abstraction to organize and manage the reference information of Object Storage Service files, and the reference information itself is saved in inodes. This gives the distributed shared storage of cloud-native ClickHouse high directory operation performance and keeps it compatible with advanced usage such as hardlinks. For regular directory operations, the ClickHouse kernel asks the controller node to perform the operation. On the performance-critical read/write path, the ClickHouse kernel contacts the controller only for the necessary reference information and then bypasses the controller node to read and write the actual Object Storage Service files directly.
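The inode idea can be sketched as a path table that points at inodes, each of which holds a reference to one stored object. In this assumed layout, rename and hardlink touch only metadata, and an object becomes deletable when its last link disappears. All names are illustrative.

```python
from typing import Optional


class ObjectNamespace:
    """POSIX-like paths over object storage references (metadata only)."""

    def __init__(self) -> None:
        self._paths: dict[str, int] = {}    # path -> inode number
        self._inodes: dict[int, dict] = {}  # inode number -> {"object_key", "links"}
        self._next_inode = 1

    def create(self, path: str, object_key: str) -> None:
        if path in self._paths:
            raise FileExistsError(path)
        self._inodes[self._next_inode] = {"object_key": object_key, "links": 1}
        self._paths[path] = self._next_inode
        self._next_inode += 1

    def resolve(self, path: str) -> str:
        """Return the object key, after which data can be read from object storage directly."""
        return self._inodes[self._paths[path]]["object_key"]

    def rename(self, src: str, dst: str) -> None:
        if dst in self._paths:
            raise FileExistsError(dst)
        self._paths[dst] = self._paths.pop(src)  # no object is copied or rewritten

    def hardlink(self, src: str, dst: str) -> None:
        if dst in self._paths:
            raise FileExistsError(dst)
        inode_number = self._paths[src]
        self._paths[dst] = inode_number
        self._inodes[inode_number]["links"] += 1

    def unlink(self, path: str) -> Optional[str]:
        """Remove a path; return the object key once no path refers to it any more."""
        inode_number = self._paths.pop(path)
        inode = self._inodes[inode_number]
        inode["links"] -= 1
        if inode["links"] == 0:
            del self._inodes[inode_number]
            return inode["object_key"]
        return None

    def list_dir(self, directory: str) -> list[str]:
        prefix = directory.rstrip("/") + "/"
        return sorted({p[len(prefix):].split("/", 1)[0] for p in self._paths if p.startswith(prefix)})


ns = ObjectNamespace()
ns.create("/t/tmp_insert_1/data.bin", object_key="obj-0001")
ns.rename("/t/tmp_insert_1/data.bin", "/t/202401_1_1_0/data.bin")
ns.hardlink("/t/202401_1_1_0/data.bin", "/t/backup/data.bin")
print(ns.list_dir("/t"), ns.resolve("/t/backup/data.bin"))
```

Removing `/t/202401_1_1_0/data.bin` afterwards returns `None`, because the backup link still refers to the object. Only removing the last link returns `obj-0001` for deletion from object storage.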

When ClickHouse reads Object Storage Service data, it can use local disks to build a data cache, which improves scan throughput on hot data. Cloud-native ClickHouse did not choose a distributed shared file cache similar to Alluxio here. The main reason is that ClickHouse is a long-running MPP architecture with a clear binding between nodes and data, so we focus on maximizing the caching capability of each individual machine. Building the data cache inside the ClickHouse kernel is the most efficient approach, because the kernel has the best view of the system load.
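As a rough illustration of a per-node hot-data cache, the sketch below keeps recently read objects in a size-bounded LRU structure and falls back to a remote fetch on a miss. An in-memory dictionary stands in for the local disk, and LRU is an assumed eviction policy. The text does not say which policy the kernel uses.

```python
from collections import OrderedDict
from typing import Callable


class LocalCache:
    """Size-bounded LRU cache in front of a remote object store (illustrative only)."""

    def __init__(self, capacity_bytes: int, fetch_remote: Callable[[str], bytes]) -> None:
        self.capacity = capacity_bytes
        self.fetch_remote = fetch_remote
        self.entries: OrderedDict = OrderedDict()  # key -> bytes, oldest first
        self.used = 0
        self.hits = 0
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.entries[key]
        self.misses += 1
        data = self.fetch_remote(key)
        self._admit(key, data)
        return data

    def _admit(self, key: str, data: bytes) -> None:
        if len(data) > self.capacity:
            return  # too large to cache at all
        while self.used + len(data) > self.capacity:
            _, evicted = self.entries.popitem(last=False)  # drop least recently used
            self.used -= len(evicted)
        self.entries[key] = data
        self.used += len(data)


# Hypothetical remote objects of 4 bytes each and a cache that fits two of them.
remote = {"a": b"aaaa", "b": b"bbbb", "c": b"cccc"}
cache = LocalCache(capacity_bytes=8, fetch_remote=remote.__getitem__)
for key in ["a", "b", "a", "c"]:
    cache.read(key)
print(f"hits={cache.hits} misses={cache.misses} cached={list(cache.entries)}")
```

Because each node is bound to specific shards, a node-local cache like this sees a stable working set, which is the argument the text gives for not using a shared distributed cache.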
