Many compression algorithms have been added to ClickHouse in recent years to adapt better to time-series scenarios, increase the compression ratio, and save storage space. Thus, this article will give an introduction to the ClickHouse compression algorithms.

The ClickHouse compression and decompression code is relatively clear and well abstracted, which makes it convenient to add or modify a compression algorithm. This article skips the code structure and goes straight to the core algorithms. Currently, the compression algorithms provided by ClickHouse can be divided into three types:

ClickHouse supports storing data uncompressed. Reading such data costs no CPU for decompression, but it takes up far more storage space and incurs high I/O overhead. Therefore, this option is generally not recommended.

LZ4 is a very efficient compression algorithm widely used in SLS. It features strong compression and decompression performance, especially its single-core decompression throughput of about 4 GB/s. Its weakness is a relatively low compression ratio, but it still reaches a ratio of 5 to 15 times in log scenarios.

The compression principle of LZ4 is to scan the data with a four-byte window and check whether the current bytes match bytes that appeared earlier. If so, it extends the match to the maximum length, following a greedy strategy. The compression format is shown below. Because the LZ4 format is simple, decompression can be implemented at high speed with a sequential scan and some memcpy operations. For details, please refer to this link.

raw:abcde_bcdefgh_abcdefghxxxxxxx

compressed:abcde_(5,4)fgh_(14,5)fghxxxxxxx

The compression performance of LZ4 is closely related to the hash function. xxHash performs very well and is used both officially and in much of the hash-related code in SLS. For implementation, please refer to this link.
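To make the "sequential scan plus copy" decompression concrete, here is a minimal sketch that decodes the toy `(offset,length)` notation used in the example above. It is not the real LZ4 block format, and the function name `decode_toy_lz` is hypothetical; it only illustrates that each match is resolved by copying bytes from the already-decoded output.

```python
import re

# Matches a toy back-reference token such as "(5,4)" = (offset, length).
_TOKEN = re.compile(r"\((\d+),(\d+)\)")

def decode_toy_lz(compressed: str) -> str:
    """Decode the illustrative notation: literals mixed with (offset,length) tokens."""
    out = []
    pos = 0
    for match in _TOKEN.finditer(compressed):
        # Everything between two tokens is literal data: copy it as-is.
        out.extend(compressed[pos:match.start()])
        offset, length = int(match.group(1)), int(match.group(2))
        start = len(out) - offset
        if start < 0:
            raise ValueError("offset points before the start of the output")
        # Copy one symbol at a time so that overlapping matches also work.
        for i in range(length):
            out.append(out[start + i])
        pos = match.end()
    out.extend(compressed[pos:])  # trailing literals
    return "".join(out)

print(decode_toy_lz("abcde_(5,4)fgh_(14,5)fghxxxxxxx"))
```

A real decoder works on bytes and replaces the inner loop with memcpy-style block copies, which is where the high decompression speed comes from.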

Although ZSTD is slower than LZ4, it can still reach a single-core compression throughput of about 400 MB/s and a decompression throughput of about 1 GB/s, depending on the specific data characteristics and machine specifications. Compared with LZ4, ZSTD has a better compression ratio. In a test on online data, ZSTD improved the compression ratio by 30% over LZ4, saving 30% of the storage space. Since the current SLS machines have relatively low CPU utilization but high storage usage, part of the data is compressed with ZSTD to save disk space. ZSTD also lets you tune the compression speed through parameters, with the trade-off that a higher speed may come at the cost of a lower compression ratio.

For benchmark tests, please see this link. Behind ZSTD is an entropy encoder (please see this paper and this code), which the author calls Finite State Entropy (FSE).

T64 is an encoding method introduced in 2019 that only supports int and uint types. First, it obtains the data type of the values to be compressed. Then, it calculates the maximum and minimum of the data. After that, it uses the number of valid bits derived from the maximum and minimum to map the data into a 64-bit space. Because 64 is a fixed number, the method is called T64 (transpose 64-bit matrix). It achieves a high compression ratio for data that changes little. However, since it only removes the unused leading bits, a lot of redundancy may remain. Therefore, in most scenarios, T64 is combined with LZ4 or ZSTD. The nested compression method is described later.

T64 has an option that controls the conversion granularity. By default, data is converted by byte: bytes are copied as-is and the bits inside each byte are not transposed. If data is converted by bit, each byte goes through one more conversion, which is costly in performance. However, bit-level conversion achieves a higher compression ratio when T64 is combined with ZSTD rather than LZ4, because the two are implemented differently. The extra round of bit conversion concentrates identical bits together to improve entropy encoding, at the risk of breaking the coherence between bytes. LZ4 searches for repeated byte sequences, and after the conversion the bytes rarely repeat, so LZ4 performs worse.
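The cropping and transposition idea described above can be sketched as follows. This is a simplified illustration, not ClickHouse's actual T64 layout: it assumes a block of exactly 64 non-negative integers, derives the valid bit width from the maximum only, and uses the hypothetical names `t64_like_encode` and `t64_like_decode`.

```python
from typing import List, Tuple

BLOCK = 64  # assumed block size: 64 values form a 64 x 64 bit matrix

def t64_like_encode(values: List[int]) -> Tuple[int, List[int]]:
    """Drop unused high bits, then store one 64-bit word per remaining bit position."""
    if len(values) != BLOCK or min(values) < 0:
        raise ValueError("expected exactly 64 non-negative integers")
    width = max(values).bit_length()  # number of valid (significant) bits
    planes = []
    for bit in range(width):
        word = 0
        for i, v in enumerate(values):
            # Bit `bit` of value i becomes bit i of the output word (transpose).
            word |= ((v >> bit) & 1) << i
        planes.append(word)
    return width, planes

def t64_like_decode(width: int, planes: List[int]) -> List[int]:
    """Inverse transpose: rebuild the 64 values from the bit planes."""
    values = [0] * BLOCK
    for bit in range(width):
        word = planes[bit]
        for i in range(BLOCK):
            values[i] |= ((word >> i) & 1) << bit
    return values

sample = list(range(100, 164))          # 64 values that all fit in 8 bits
width, planes = t64_like_encode(sample)
print(width, len(planes))               # 8 words stored instead of 64
```

In this sketch, 64 values that would occupy 64 machine words shrink to `width` words, and the high-order planes of slowly changing data tend to be identical words, which is what a general-purpose compressor applied afterwards can exploit.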

Delta coding stores a base value and then the difference between each pair of adjacent values. It works best when the differences are constant or change only slightly. Generally, Delta encoding needs to be combined with LZ4 or ZSTD to further compress the resulting differences.
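As a minimal sketch of this idea (the function names are hypothetical, and the real codec operates on fixed-width binary values rather than Python integers):

```python
from typing import List, Tuple

def delta_encode(values: List[int]) -> Tuple[int, List[int]]:
    """Return the base value and the differences between adjacent values."""
    if not values:
        raise ValueError("need at least one value")
    base = values[0]
    deltas = [cur - prev for prev, cur in zip(values, values[1:])]
    return base, deltas

def delta_decode(base: int, deltas: List[int]) -> List[int]:
    """Rebuild the original sequence by accumulating the differences."""
    out = [base]
    for d in deltas:
        out.append(out[-1] + d)
    return out

base, deltas = delta_encode([5, 6, 7, 8, 9, 10, 11, 12, 13])
print(base, deltas)
```

The long run of identical small differences is what makes a follow-up pass with LZ4 or ZSTD effective.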

raw : 5 6 7 8 9 10 11 12 13 ....

Delta : 5(Base) 1 1 1 1 1 1 1 1 ....

First appearing in Facebook's Gorilla, Double Delta stores the Delta of Delta. For a steadily increasing or decreasing value, the Delta is generally a fixed value. This value can be small or large, and a large one takes up a relatively large amount of storage. If the Delta of Delta is calculated, it turns out to be 0 in most cases. This type of data is very common in big data scenarios, especially in monitoring scenarios. For example, the time column usually has points at a fixed interval of N seconds, an incremental column increases by 1 each time, and accumulative values, such as request counts and traffic values, change steadily. There are many more encoding details in the Delta of Delta scheme, mainly aimed at reducing the amount of data to be stored and increasing the information entropy. If you are interested, please read the paper on Gorilla.
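The arithmetic behind Delta of Delta can be sketched as follows. This covers only the transform itself, not the variable-length bit packing from the Gorilla paper, and the function names are hypothetical.

```python
from typing import List, Tuple

def double_delta_encode(values: List[int]) -> Tuple[int, int, List[int]]:
    """Return (base, first delta, list of deltas of deltas)."""
    if len(values) < 2:
        raise ValueError("need at least two values")
    base = values[0]
    first_delta = values[1] - values[0]
    dods = []
    prev_delta = first_delta
    for prev, cur in zip(values[1:], values[2:]):
        delta = cur - prev
        dods.append(delta - prev_delta)  # how much the step itself changed
        prev_delta = delta
    return base, first_delta, dods

def double_delta_decode(base: int, first_delta: int, dods: List[int]) -> List[int]:
    """Rebuild the sequence by accumulating the step, then the value."""
    out = [base, base + first_delta]
    delta = first_delta
    for dd in dods:
        delta += dd
        out.append(out[-1] + delta)
    return out

# Timestamps sampled roughly every 10 seconds, with one point arriving late.
timestamps = [1589636543, 1589636553, 1589636563, 1589636573,
              1589636583, 1589636594, 1589636603]
print(double_delta_encode(timestamps))
```

For regularly sampled data, almost every entry in the last list is 0, and the non-zero entries are small corrections around a late or early sample.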

raw : 1589636543 1589636553 1589636563 1589636573 1589636583 1589636594 1589636603...

Delta of Delta : 1589636543(Base) 10(Delta) 0 0 0 1 -2 ...

Double Delta is mainly suitable for int-type compression. Gorilla also contains a specific compression algorithm for the double (floating-point) type, but it was not named. Gradually, it came to be unofficially called the Gorilla algorithm.

| Sign bit | Exponent bit | Mantissa bit |
| --- | --- | --- |
| 1 bit | 11 bits | 52 bits |

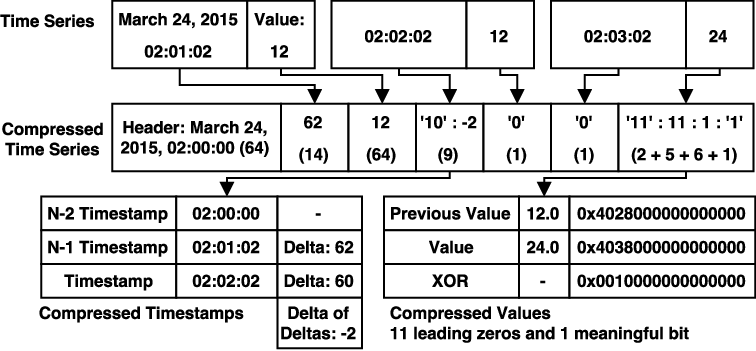

A 64-bit float contains one sign bit, 11 exponent bits, and 52 mantissa bits. Between consecutive values, the individual bits change little. However, a float typically represents a concrete measurement, such as a GPS coordinate, a temperature, or a request success rate, and the result is poor if Double Delta is computed and encoded directly on each part. Instead, the Gorilla algorithm XORs each value with the previous one, counts the leading and trailing zero bits of the XOR result, and stores only those zero counts together with the meaningful bits in between. As a further optimization, it first compares the leading and trailing zero counts with those of the previous XOR result. If they are the same, only the meaningful bits are written.
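The XOR step can be sketched as follows. This shows only how the leading zeros, trailing zeros, and meaningful bits are derived for one pair of values; it does not reproduce the bit-level output format, and the names `xor_window` and `apply_xor_window` are hypothetical.

```python
import struct
from typing import Optional, Tuple

def float_to_bits(x: float) -> int:
    """Reinterpret a 64-bit float as an unsigned 64-bit integer."""
    return struct.unpack(">Q", struct.pack(">d", x))[0]

def xor_window(prev: float, cur: float) -> Optional[Tuple[int, int, int]]:
    """Return (leading zeros, trailing zeros, meaningful bits), or None if equal."""
    x = float_to_bits(prev) ^ float_to_bits(cur)
    if x == 0:
        return None  # identical values need almost no space
    leading = 64 - x.bit_length()
    trailing = (x & -x).bit_length() - 1  # index of the lowest set bit
    meaningful = x >> trailing            # the bits between the zero runs
    return leading, trailing, meaningful

def apply_xor_window(prev: float, window: Optional[Tuple[int, int, int]]) -> float:
    """Rebuild the current value from the previous value and the stored window."""
    if window is None:
        return prev
    _, trailing, meaningful = window
    bits = float_to_bits(prev) ^ (meaningful << trailing)
    return struct.unpack(">d", struct.pack(">Q", bits))[0]

print(xor_window(12.0, 24.0))  # only one bit differs between these two values
print(xor_window(12.0, 12.0))
```

When neighbouring values are close, the XOR result has long zero runs on both sides, so the meaningful part that must be stored is short.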

For the specific structure, please see the diagram below:

Multiple is not a compression algorithm but a combination of several of the previous algorithms. For example, when combining T64 and ZSTD, Multiple calls T64 first and then ZSTD for compression. For decompression, it runs ZSTD first and then T64.

compress : T64 -> ZSTD

uncompress : ZSTD -> T64

It is very common to use multiple compression algorithms in combination. Similar algorithms are used often in SLS. For example, a combination of 2 to 3 compression algorithms is used in many index codes in SLS. There are more combinations of compression algorithms on time-series engines, some of which include 4 to 5 algorithms.
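The ordering rule (apply the codecs in sequence to compress, and in reverse to decompress) can be sketched as follows. This is an illustration under stated assumptions, not ClickHouse code: a byte-wise delta transform stands in for T64, `zlib` from the Python standard library stands in for ZSTD, and all function names are hypothetical.

```python
import zlib

def byte_delta_encode(data: bytes) -> bytes:
    """Stand-in for a specialized codec: store differences between adjacent bytes."""
    out = bytearray()
    prev = 0
    for b in data:
        out.append((b - prev) & 0xFF)
        prev = b
    return bytes(out)

def byte_delta_decode(data: bytes) -> bytes:
    out = bytearray()
    prev = 0
    for d in data:
        prev = (prev + d) & 0xFF
        out.append(prev)
    return bytes(out)

# Each stage is an (encode, decode) pair; zlib stands in for ZSTD here.
PIPELINE = [
    (byte_delta_encode, byte_delta_decode),
    (zlib.compress, zlib.decompress),
]

def compress(data: bytes) -> bytes:
    for encode, _ in PIPELINE:            # specialized codec first, general one last
        data = encode(data)
    return data

def decompress(data: bytes) -> bytes:
    for _, decode in reversed(PIPELINE):  # undo the stages in reverse order
        data = decode(data)
    return data

raw = bytes(range(256)) * 4
print(len(raw), len(zlib.compress(raw)), len(compress(raw)))
```

On this slowly increasing input, the chained pipeline produces a smaller result than the general-purpose compressor alone, which is the motivation for nesting codecs.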

The new T64, Delta, Double Delta, and Gorilla algorithms added to ClickHouse are mainly applied in time-series scenarios. These algorithms are mainly designed for data that does not change much, so they can achieve a very high compression ratio in time-series scenarios.

Because ClickHouse abstracts compression and decompression, even algorithms that could support reading data in compressed form must still decompress it before reading, which costs some efficiency. This is the only drawback.

Prefix encoding and RLE are also commonly used and very useful. They offer extremely high performance and support compressed read. We hope they will be available in ClickHouse in the future.
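To illustrate what compressed read means for RLE, here is a minimal sketch in which a query is answered directly on the encoded runs without expanding them. The function names are hypothetical, and this is not a ClickHouse feature.

```python
from typing import Hashable, List, Tuple

Run = Tuple[Hashable, int]  # (value, number of consecutive repetitions)

def rle_encode(values: List[Hashable]) -> List[Run]:
    """Collapse consecutive repeats into (value, count) runs."""
    runs: List[Run] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs

def count_equal(runs: List[Run], target: Hashable) -> int:
    """Count rows equal to `target` by scanning runs, not individual rows."""
    return sum(count for value, count in runs if value == target)

statuses = ["ok"] * 5 + ["error"] * 2 + ["ok"] * 3
runs = rle_encode(statuses)
print(runs)
print(count_equal(runs, "ok"))
```

The count is computed by visiting three runs instead of ten rows, which is why such encodings can speed up reads as well as save space.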
